jeecg-boot-starter

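The starter modules of the JeecgBoot project, split out for easier maintenance. Includes various starters: microservice startup, xxl-job, distributed lock, RabbitMQ, distributed transactions, ShardingSphere database and table sharding, and MongoDB.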

Stars: 74

The jeecg-boot-starter is a module that simplifies projects and facilitates maintenance by extracting the starter module from jeecg-boot. It includes various sub-modules for common classes, cloud services, job scheduling, distributed locking, messaging middleware, distributed transactions, sharding databases, and MongoDB.

README:

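Current latest version: 3.7.3 (release date: 2025-02-05)

The starter modules of jeecg-boot have been split out into their own project to simplify the main project and ease maintenance.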

- spring-cloud:2021.0.3

- spring-cloud-alibaba:2021.0.1.0

├── jeecg-boot-starter -- parent module for the starters

├── jeecg-boot-common -- low-level common classes (shared by monolith and microservice deployments)

├── jeecg-boot-starter-cloud -- microservice startup starter

├── jeecg-boot-starter-job -- xxl-job scheduled task starter

├── jeecg-boot-starter-lock -- distributed lock starter

├── jeecg-boot-starter-rabbitmq -- message middleware starter

├── jeecg-boot-starter-seata -- distributed transaction starter

├── jeecg-boot-starter-shardingsphere -- database and table sharding starter

├── jeecg-boot-starter-mongon -- MongoDB starter

- Issues are closed for this project. If you run into problems or bugs, open an issue on the main JeecgBoot project.

- Official support: http://jeecg.com/doc/help

- Official documentation: http://doc.jeecg.com

Similar Open Source Tools

spring-ai-tutorial

Spring AI Tutorial is a comprehensive guide for beginners to learn about integrating artificial intelligence capabilities into Spring Boot applications. The tutorial covers various AI concepts such as machine learning, natural language processing, and computer vision, and demonstrates how to implement them using popular AI libraries and tools within the Spring framework. By following this tutorial, users will gain a solid understanding of how to leverage AI technologies to enhance the functionality and intelligence of their Spring applications.

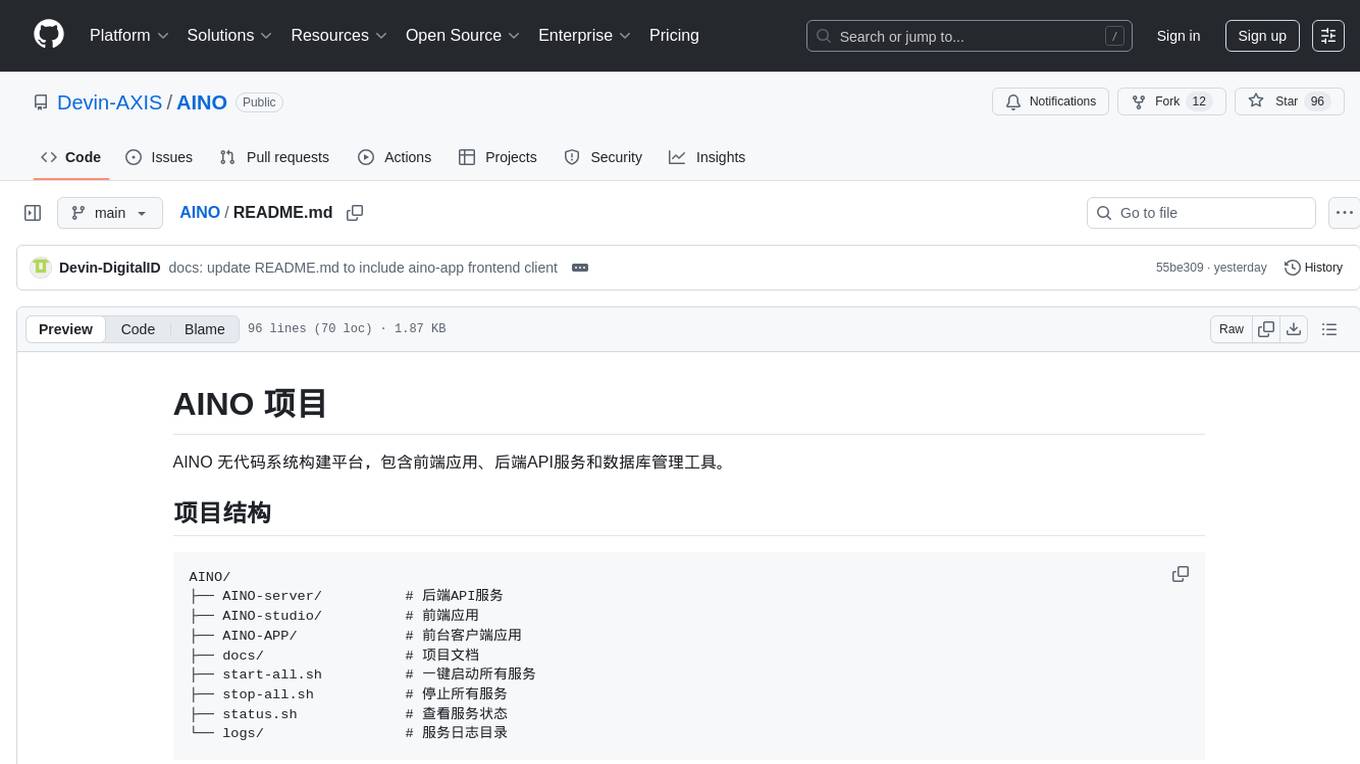

AINO

AINO is a no-code system construction platform that includes front-end applications, back-end API services, and database management tools. The project structure consists of AINO-server for back-end API services, AINO-studio for front-end applications, AINO-APP for front-end client applications, docs for project documentation, start-all.sh for one-click starting of all services, stop-all.sh for stopping all services, status.sh for checking service status, and logs for service logs. AINO utilizes Hono + TypeScript + Drizzle ORM for the back-end, Next.js + React + TypeScript for the front-end, PostgreSQL for the database, and pnpm as the package manager.

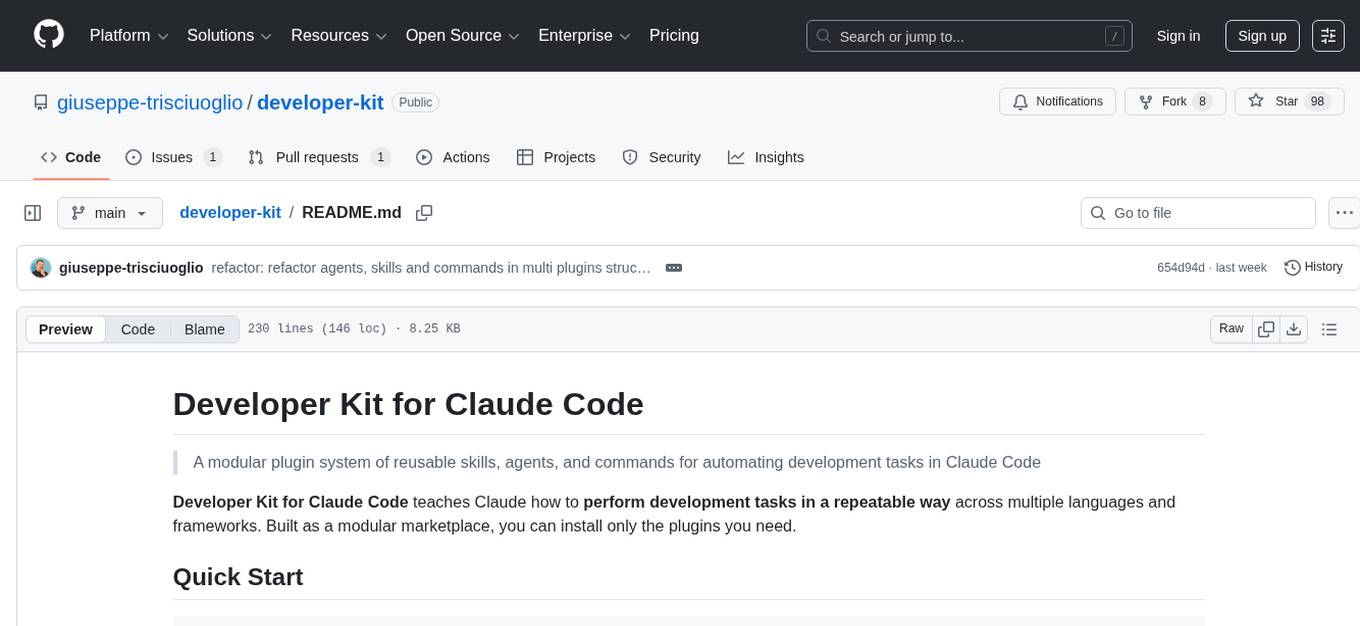

developer-kit

A modular plugin system for automating development tasks in Claude Code. It provides reusable skills, agents, and commands across multiple languages and frameworks. Users can install only the necessary plugins from a marketplace. The tool is specialized for code review, testing, AI development, and full-stack development, with composable and portable features.

comfyui_prompt_assistant

ComfyUI Prompt Assistant is a plugin that adds prompt translation, prompt expansion, preset tag insertion, image-to-prompt reverse inference, and prompt history without adding nodes. It also includes UI fixes, such as avoiding scroll bar overlap and correcting the tag popup window scrollbar. Users can manually install the latest version from the Releases section. The tool supports Kontext presets, translation nodes, and custom rules, and lets users switch translation between Baidu and an LLM.

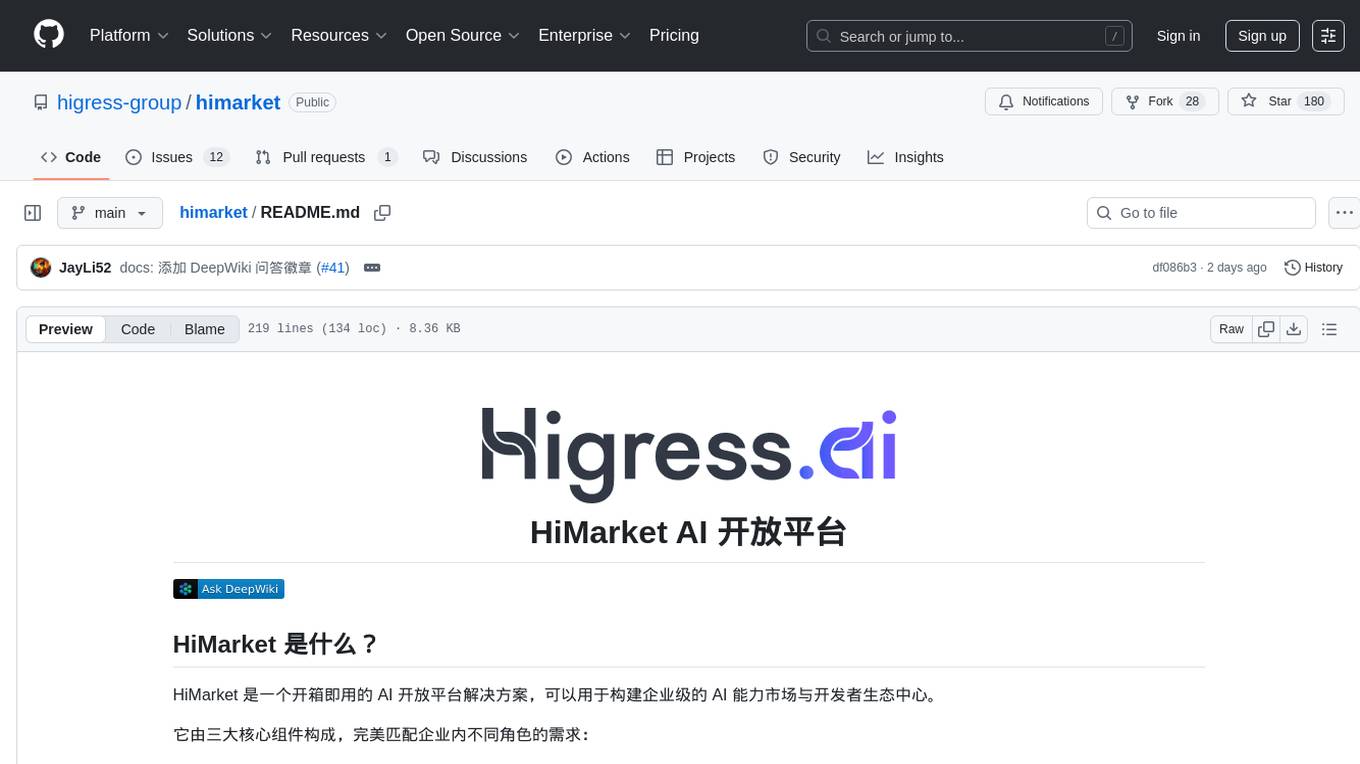

himarket

HiMarket is an out-of-the-box AI open platform solution that can be used to build enterprise-level AI capability markets and developer ecosystem centers. It consists of three core components tailored to different roles within the enterprise: 1. AI open platform management backend (for administrators/operators) for easy packaging of diverse AI capabilities such as model services, MCP Server, Agent, etc., into standardized 'AI products' in API form with comprehensive documentation and examples for one-click publishing to the portal. 2. AI open platform portal (for developers/internal users) as a 'storefront' for developers to complete registration, create consumers, obtain credentials, browse and subscribe to AI products, test online, and monitor their own call status and costs clearly. 3. AI Gateway: As a subproject of the Higress community, the Higress AI Gateway carries out all AI call authentication, security, flow control, protocol conversion, and observability capabilities.

claude-code-ultimate-guide

The Claude Code Ultimate Guide is an exhaustive documentation resource that takes users from beginner to power user in using Claude Code. It includes production-ready templates, workflow guides, a quiz, and a cheatsheet for daily use. The guide covers educational depth, methodologies, and practical examples to help users understand concepts and workflows. It also provides interactive onboarding, a repository structure overview, and learning paths for different user levels. The guide is regularly updated and offers a unique 257-question quiz for comprehensive assessment. Users can also find information on agent teams coverage, methodologies, annotated templates, resource evaluations, and learning paths for different roles like junior developer, senior developer, power user, and product manager/devops/designer.

ComfyUI-BRIA_AI-RMBG

ComfyUI-BRIA_AI-RMBG is an unofficial implementation of the BRIA Background Removal v1.4 model for ComfyUI. The tool supports batch processing, including video background removal, and introduces a new mask output feature. Users can install the tool using ComfyUI Manager or manually by cloning the repository. The tool includes nodes for automatically loading the Removal v1.4 model and removing backgrounds. Updates include support for batch processing and the addition of a mask output feature.

multi-agent-ralph-loop

Multi-agent RALPH (Reinforcement Learning with Probabilistic Hierarchies) Loop is a framework for multi-agent reinforcement learning research. It provides a flexible and extensible platform for developing and testing multi-agent reinforcement learning algorithms. The framework supports various environments, including grid-world environments, and allows users to easily define custom environments. Multi-agent RALPH Loop is designed to facilitate research in the field of multi-agent reinforcement learning by providing a set of tools and utilities for experimenting with different algorithms and scenarios.

ai-paint-today-BE

AI Paint Today is an API server repository that allows users to record their emotions and daily experiences, and based on that, AI generates a beautiful picture diary of their day. The project includes features such as generating picture diaries from written entries, utilizing DALL-E 2 model for image generation, and deploying on AWS and Cloudflare. The project also follows specific conventions and collaboration strategies for development.

VideoLLaMA2

VideoLLaMA 2 is a project focused on advancing spatial-temporal modeling and audio understanding in video-LLMs. It provides tools for multi-choice video QA, open-ended video QA, and video captioning. The project offers model zoo with different configurations for visual encoder and language decoder. It includes training and evaluation guides, as well as inference capabilities for video and image processing. The project also features a demo setup for running a video-based Large Language Model web demonstration.

azure-agentic-infraops

Agentic InfraOps is a multi-agent orchestration system for Azure infrastructure development that transforms how you build Azure infrastructure with AI agents. It provides a structured 7-step workflow that coordinates specialized AI agents through a complete infrastructure development cycle: Requirements → Architecture → Design → Plan → Code → Deploy → Documentation. The system enforces Azure Well-Architected Framework (WAF) alignment and Azure Verified Modules (AVM) at every phase, combining the speed of AI coding with best practices in cloud engineering.

LLM-Travel

LLM-Travel is a repository dedicated to exploring the mysteries of Large Language Models (LLM). It provides in-depth technical explanations, practical code implementations, and a platform for discussions and questions related to LLM. Join the journey to explore the fascinating world of large language models with LLM-Travel.

awesome-cuda-tensorrt-fpga


VT.ai

VT.ai is a multimodal AI platform that offers dynamic conversation routing with SemanticRouter, multi-modal interactions (text/image/audio), an assistant framework with code interpretation, real-time response streaming, cross-provider model switching, and local model support with Ollama integration. It supports various AI providers such as OpenAI, Anthropic, Google Gemini, Groq, Cohere, and OpenRouter, providing a wide range of core capabilities for AI orchestration.

For similar tasks

bee

Bee is an easy-to-use, high-efficiency ORM framework that simplifies database operations by providing a simple interface and eliminating the need to write separate DAO code. It supports automatic filtering of properties, partial field queries, native statement pagination, JSON format results, sharding, multiple databases, and more. Bee also offers dynamic query conditions, transactions, complex queries, MongoDB ORM, cache management, and additional tools for generating distributed primary keys and reading Excel files. The newest versions add placeholder precompilation, default date sharding, ElasticSearch ORM support, and improved query capabilities.

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

Chat2DB

Chat2DB is an AI-driven data development and analysis platform that enables users to communicate with databases using natural language. It supports a wide range of databases, including MySQL, PostgreSQL, Oracle, SQLServer, SQLite, MariaDB, ClickHouse, DM, Presto, DB2, OceanBase, Hive, KingBase, MongoDB, Redis, and Snowflake. Chat2DB provides a user-friendly interface that allows users to query databases, generate reports, and explore data using natural language commands. It also offers a variety of features to help users improve their productivity, such as auto-completion, syntax highlighting, and error checking.

gpdb

Greenplum Database (GPDB) is an advanced, fully featured, open source data warehouse, based on PostgreSQL. It provides powerful and rapid analytics on petabyte scale data volumes. Uniquely geared toward big data analytics, Greenplum Database is powered by the world’s most advanced cost-based query optimizer delivering high analytical query performance on large data volumes.

tau

Tau is a framework for building low maintenance & highly scalable cloud computing platforms that software developers will love. It aims to solve the high cost and time required to build, deploy, and scale software by providing a developer-friendly platform that offers autonomy and flexibility. Tau simplifies the process of building and maintaining a cloud computing platform, enabling developers to achieve 'Local Coding Equals Global Production' effortlessly. With features like auto-discovery, content-addressing, and support for WebAssembly, Tau empowers users to create serverless computing environments, host frontends, manage databases, and more. The platform also supports E2E testing and can be extended using a plugin system called orbit.

AI4DBCode

AI4DBCode is a repository that is no longer actively maintained. It likely contains code related to artificial intelligence for databases. Users can explore the existing codebase for reference or historical purposes, but should be aware that updates and support are no longer provided.

sql-explorer

SQL Explorer is a Django-based application that simplifies the flow of data between users by providing a user-friendly SQL editor to write and share queries. It supports multiple database connections, AI-powered SQL assistant, schema information access, query snapshots, in-browser statistics, parameterized queries, ad-hoc query running, email query results, and more. Users can upload and query JSON or CSV files, and the tool can connect to various SQL databases supported by Django. It aims for simplicity, stability, and ease of use, offering features like autocomplete, pivot tables, and query history logs.

For similar jobs

AirGo

AirGo is a simple, easy-to-use proxy service management system with separate front end and back end, multi-user support, and multi-protocol support. It supports vless, vmess, shadowsocks, and hysteria2.

mosec

Mosec is a high-performance and flexible model serving framework for building ML model-enabled backend and microservices. It bridges the gap between any machine learning models you just trained and the efficient online service API.

- **Highly performant**: web layer and task coordination built with Rust 🦀, which offers blazing speed in addition to efficient CPU utilization powered by async I/O
- **Ease of use**: user interface purely in Python 🐍, by which users can serve their models in an ML framework-agnostic manner using the same code as they do for offline testing
- **Dynamic batching**: aggregate requests from different users for batched inference and distribute results back
- **Pipelined stages**: spawn multiple processes for pipelined stages to handle CPU/GPU/IO mixed workloads
- **Cloud friendly**: designed to run in the cloud, with the model warmup, graceful shutdown, and Prometheus monitoring metrics, easily managed by Kubernetes or any container orchestration systems
- **Do one thing well**: focus on the online serving part, users can pay attention to the model optimization and business logic

llm-code-interpreter

The 'llm-code-interpreter' repository is a deprecated plugin that provides a code interpreter on steroids for ChatGPT by E2B. It gives ChatGPT access to a sandboxed cloud environment with capabilities like running any code, accessing Linux OS, installing programs, using filesystem, running processes, and accessing the internet. The plugin exposes commands to run shell commands, read files, and write files, enabling various possibilities such as running different languages, installing programs, starting servers, deploying websites, and more. It is powered by the E2B API and is designed for agents to freely experiment within a sandboxed environment.

pezzo

Pezzo is a fully cloud-native and open-source LLMOps platform that allows users to observe and monitor AI operations, troubleshoot issues, save costs and latency, collaborate, manage prompts, and deliver AI changes instantly. It supports various clients for prompt management, observability, and caching. Users can run the full Pezzo stack locally using Docker Compose, with prerequisites including Node.js 18+, Docker, and a GraphQL Language Feature Support VSCode Extension. Contributions are welcome, and the source code is available under the Apache 2.0 License.

learn-generative-ai

Learn Cloud Applied Generative AI Engineering (GenEng) is a course focusing on the application of generative AI technologies in various industries. The course covers topics such as the economic impact of generative AI, the role of developers in adopting and integrating generative AI technologies, and the future trends in generative AI. Students will learn about tools like OpenAI API, LangChain, and Pinecone, and how to build and deploy Large Language Models (LLMs) for different applications. The course also explores the convergence of generative AI with Web 3.0 and its potential implications for decentralized intelligence.

gcloud-aio

This repository contains shared codebase for two projects: gcloud-aio and gcloud-rest. gcloud-aio is built for Python 3's asyncio, while gcloud-rest is a threadsafe requests-based implementation. It provides clients for Google Cloud services like Auth, BigQuery, Datastore, KMS, PubSub, Storage, and Task Queue. Users can install the library using pip and refer to the documentation for usage details. Developers can contribute to the project by following the contribution guide.

fluid

Fluid is an open source Kubernetes-native Distributed Dataset Orchestrator and Accelerator for data-intensive applications, such as big data and AI applications. It implements dataset abstraction, scalable cache runtime, automated data operations, elasticity and scheduling, and is runtime platform agnostic. Key concepts include Dataset and Runtime. Prerequisites include Kubernetes version > 1.16, Golang 1.18+, and Helm 3. The tool offers features like accelerating remote file accessing, machine learning, accelerating PVC, preloading dataset, and on-the-fly dataset cache scaling. Contributions are welcomed, and the project is under the Apache 2.0 license with a vendor-neutral approach.

aiges

AIGES is a core component of the Athena Serving Framework, designed as a universal encapsulation tool for AI developers to deploy AI algorithm models and engines quickly. By integrating AIGES, you can deploy AI algorithm models and engines rapidly and host them on the Athena Serving Framework, utilizing supporting auxiliary systems for networking, distribution strategies, data processing, etc. The Athena Serving Framework aims to accelerate the cloud service of AI algorithm models and engines, providing multiple guarantees for cloud service stability through cloud-native architecture. You can efficiently and securely deploy, upgrade, scale, operate, and monitor models and engines without focusing on underlying infrastructure and service-related development, governance, and operations.