ruby_llm

One beautiful Ruby API for OpenAI, Anthropic, Gemini, Bedrock, Azure, OpenRouter, DeepSeek, Ollama, VertexAI, Perplexity, Mistral, xAI, GPUStack & OpenAI compatible APIs. Chat, Vision, Audio, PDF, Images, Embeddings, Tools, Streaming & Rails integration.

Stars: 3537

RubyLLM is a delightful Ruby tool for working with AI, providing a beautiful API for various AI providers like OpenAI, Anthropic, Gemini, and DeepSeek. It simplifies AI usage by offering a consistent format, minimal dependencies, and a joyful coding experience. Users can chat, analyze images, audio, and documents, generate images, create vector embeddings, and integrate AI with Ruby code effortlessly. The tool also supports Rails integration, streaming responses, and tool creation, making AI tasks seamless and enjoyable.

README:

[!NOTE] Using RubyLLM? Share your story! Takes 5 minutes.

Build chatbots, AI agents, RAG applications. Works with OpenAI, xAI, Anthropic, Google, AWS, local models, and any OpenAI-compatible API.

Every AI provider ships their own bloated client. Different APIs. Different response formats. Different conventions. It's exhausting.

RubyLLM gives you one beautiful API for all of them. Same interface whether you're using GPT, Claude, or your local Ollama. Just three dependencies: Faraday, Zeitwerk, and Marcel. That's it.

# Just ask questions

chat = RubyLLM.chat

chat.ask "What's the best way to learn Ruby?"# Analyze any file type

chat.ask "What's in this image?", with: "ruby_conf.jpg"

chat.ask "What's happening in this video?", with: "video.mp4"

chat.ask "Describe this meeting", with: "meeting.wav"

chat.ask "Summarize this document", with: "contract.pdf"

chat.ask "Explain this code", with: "app.rb"# Multiple files at once

chat.ask "Analyze these files", with: ["diagram.png", "report.pdf", "notes.txt"]# Stream responses

chat.ask "Tell me a story about Ruby" do |chunk|

print chunk.content

end# Generate images

RubyLLM.paint "a sunset over mountains in watercolor style"# Create embeddings

RubyLLM.embed "Ruby is elegant and expressive"# Transcribe audio to text

RubyLLM.transcribe "meeting.wav"# Moderate content for safety

RubyLLM.moderate "Check if this text is safe"# Let AI use your code

class Weather < RubyLLM::Tool

description "Get current weather"

param :latitude

param :longitude

def execute(latitude:, longitude:)

url = "https://api.open-meteo.com/v1/forecast?latitude=#{latitude}&longitude=#{longitude}¤t=temperature_2m,wind_speed_10m"

JSON.parse(Faraday.get(url).body)

end

end

chat.with_tool(Weather).ask "What's the weather in Berlin?"# Define an agent with instructions + tools

class WeatherAssistant < RubyLLM::Agent

model "gpt-4.1-nano"

instructions "Be concise and always use tools for weather."

tools Weather

end

WeatherAssistant.new.ask "What's the weather in Berlin?"# Get structured output

class ProductSchema < RubyLLM::Schema

string :name

number :price

array :features do

string

end

end

response = chat.with_schema(ProductSchema).ask "Analyze this product", with: "product.txt"-

Chat: Conversational AI with

RubyLLM.chat - Vision: Analyze images and videos

-

Audio: Transcribe and understand speech with

RubyLLM.transcribe - Documents: Extract from PDFs, CSVs, JSON, any file type

-

Image generation: Create images with

RubyLLM.paint -

Embeddings: Generate embeddings with

RubyLLM.embed -

Moderation: Content safety with

RubyLLM.moderate - Tools: Let AI call your Ruby methods

-

Agents: Reusable assistants with

RubyLLM::Agent - Structured output: JSON schemas that just work

- Streaming: Real-time responses with blocks

-

Rails: ActiveRecord integration with

acts_as_chat - Async: Fiber-based concurrency

- Model registry: 800+ models with capability detection and pricing

- Extended thinking: Control, view, and persist model deliberation

- Providers: OpenAI, xAI, Anthropic, Gemini, VertexAI, Bedrock, DeepSeek, Mistral, Ollama, OpenRouter, Perplexity, GPUStack, and any OpenAI-compatible API

Add to your Gemfile:

gem 'ruby_llm'Then bundle install.

Configure your API keys:

# config/initializers/ruby_llm.rb

RubyLLM.configure do |config|

config.openai_api_key = ENV['OPENAI_API_KEY']

end# Install Rails Integration

rails generate ruby_llm:install

# Add Chat UI (optional)

rails generate ruby_llm:chat_uiclass Chat < ApplicationRecord

acts_as_chat

end

chat = Chat.create! model: "claude-sonnet-4"

chat.ask "What's in this file?", with: "report.pdf"Visit http://localhost:3000/chats for a ready-to-use chat interface!

See CONTRIBUTING.md.

Released under the MIT License.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for ruby_llm

Similar Open Source Tools

ruby_llm

RubyLLM is a delightful Ruby tool for working with AI, providing a beautiful API for various AI providers like OpenAI, Anthropic, Gemini, and DeepSeek. It simplifies AI usage by offering a consistent format, minimal dependencies, and a joyful coding experience. Users can chat, analyze images, audio, and documents, generate images, create vector embeddings, and integrate AI with Ruby code effortlessly. The tool also supports Rails integration, streaming responses, and tool creation, making AI tasks seamless and enjoyable.

redcache-ai

RedCache-ai is a memory framework designed for Large Language Models and Agents. It provides a dynamic memory framework for developers to build various applications, from AI-powered dating apps to healthcare diagnostics platforms. Users can store, retrieve, search, update, and delete memories using RedCache-ai. The tool also supports integration with OpenAI for enhancing memories. RedCache-ai aims to expand its functionality by integrating with more LLM providers, adding support for AI Agents, and providing a hosted version.

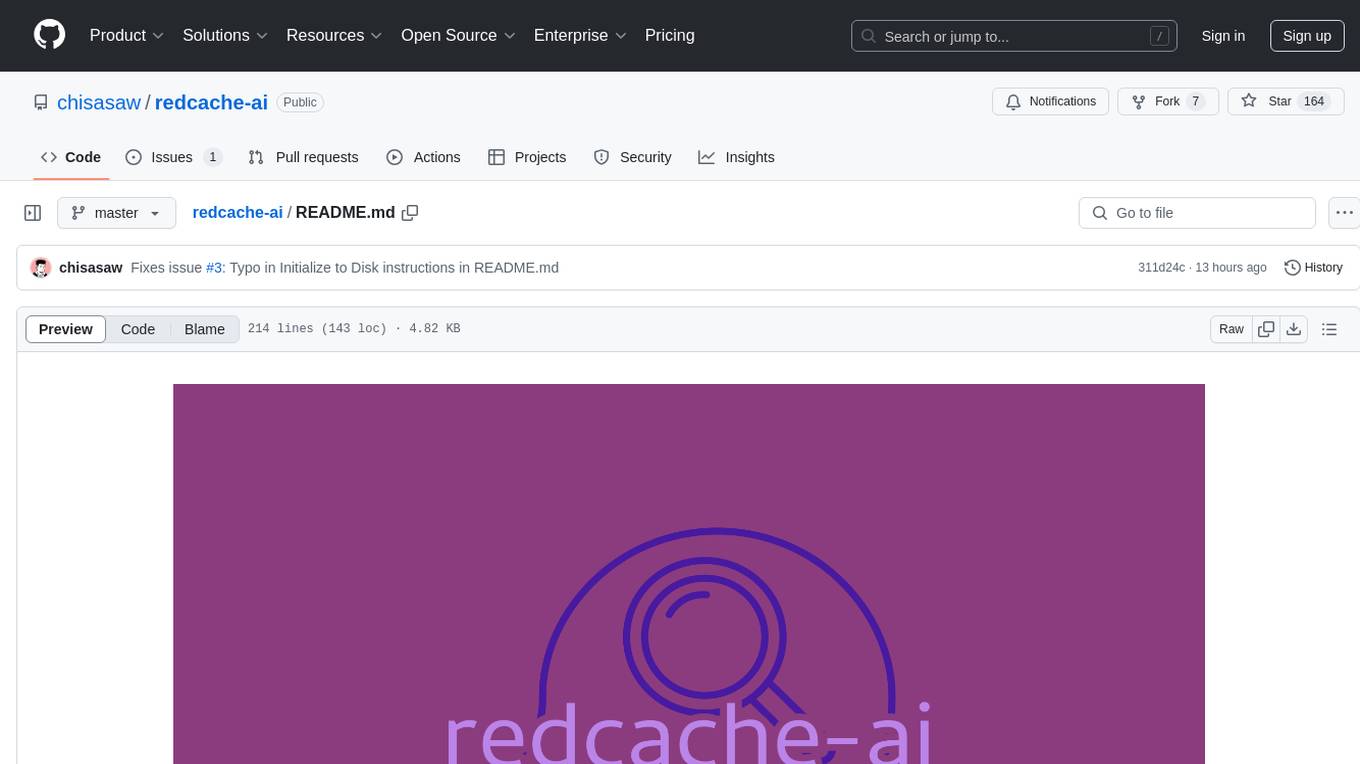

nvim.ai

nvim.ai is a powerful Neovim plugin that enables AI-assisted coding and chat capabilities within the editor. Users can chat with buffers, insert code with an inline assistant, and utilize various LLM providers for context-aware AI assistance. The plugin supports features like interacting with AI about code and documents, receiving relevant help based on current work, code insertion, code rewriting (Work in Progress), and integration with multiple LLM providers. Users can configure the plugin, add API keys to dotfiles, and integrate with nvim-cmp for command autocompletion. Keymaps are available for chat and inline assist functionalities. The chat dialog allows parsing content with keywords and supports roles like /system, /you, and /assistant. Context-aware assistance can be accessed through inline assist by inserting code blocks anywhere in the file.

openai-edge-tts

This project provides a local, OpenAI-compatible text-to-speech (TTS) API using `edge-tts`. It emulates the OpenAI TTS endpoint (`/v1/audio/speech`), enabling users to generate speech from text with various voice options and playback speeds, just like the OpenAI API. `edge-tts` uses Microsoft Edge's online text-to-speech service, making it completely free. The project supports multiple audio formats, adjustable playback speed, and voice selection options, providing a flexible and customizable TTS solution for users.

omniai

OmniAI provides a unified Ruby API for integrating with multiple AI providers, streamlining AI development by offering a consistent interface for features such as chat, text-to-speech, speech-to-text, and embeddings. It ensures seamless interoperability across platforms and effortless switching between providers, making integrations more flexible and reliable.

instructor

Instructor is a popular Python library for managing structured outputs from large language models (LLMs). It offers a user-friendly API for validation, retries, and streaming responses. With support for various LLM providers and multiple languages, Instructor simplifies working with LLM outputs. The library includes features like response models, retry management, validation, streaming support, and flexible backends. It also provides hooks for logging and monitoring LLM interactions, and supports integration with Anthropic, Cohere, Gemini, Litellm, and Google AI models. Instructor facilitates tasks such as extracting user data from natural language, creating fine-tuned models, managing uploaded files, and monitoring usage of OpenAI models.

python-tgpt

Python-tgpt is a Python package that enables seamless interaction with over 45 free LLM providers without requiring an API key. It also provides image generation capabilities. The name _python-tgpt_ draws inspiration from its parent project tgpt, which operates on Golang. Through this Python adaptation, users can effortlessly engage with a number of free LLMs available, fostering a smoother AI interaction experience.

swarmzero

SwarmZero SDK is a library that simplifies the creation and execution of AI Agents and Swarms of Agents. It supports various LLM Providers such as OpenAI, Azure OpenAI, Anthropic, MistralAI, Gemini, Nebius, and Ollama. Users can easily install the library using pip or poetry, set up the environment and configuration, create and run Agents, collaborate with Swarms, add tools for complex tasks, and utilize retriever tools for semantic information retrieval. Sample prompts are provided to help users explore the capabilities of the agents and swarms. The SDK also includes detailed examples and documentation for reference.

dexter

Dexter is a set of mature LLM tools used in production at Dexa, with a focus on real-world RAG (Retrieval Augmented Generation). It is a production-quality RAG that is extremely fast and minimal, and handles caching, throttling, and batching for ingesting large datasets. It also supports optional hybrid search with SPLADE embeddings, and is a minimal TS package with full typing that uses `fetch` everywhere and supports Node.js 18+, Deno, Cloudflare Workers, Vercel edge functions, etc. Dexter has full docs and includes examples for basic usage, caching, Redis caching, AI function, AI runner, and chatbot.

npcpy

npcpy is a core library of the NPC Toolkit that enhances natural language processing pipelines and agent tooling. It provides a flexible framework for building applications and conducting research with LLMs. The tool supports various functionalities such as getting responses for agents, setting up agent teams, orchestrating jinx workflows, obtaining LLM responses, generating images, videos, audio, and more. It also includes a Flask server for deploying NPC teams, supports LiteLLM integration, and simplifies the development of NLP-based applications. The tool is versatile, supporting multiple models and providers, and offers a graphical user interface through NPC Studio and a command-line interface via NPC Shell.

aiavatarkit

AIAvatarKit is a tool for building AI-based conversational avatars quickly. It supports various platforms like VRChat and cluster, along with real-world devices. The tool is extensible, allowing unlimited capabilities based on user needs. It requires VOICEVOX API, Google or Azure Speech Services API keys, and Python 3.10. Users can start conversations out of the box and enjoy seamless interactions with the avatars.

hf-waitress

HF-Waitress is a powerful server application for deploying and interacting with HuggingFace Transformer models. It simplifies running open-source Large Language Models (LLMs) locally on-device, providing on-the-fly quantization via BitsAndBytes, HQQ, and Quanto. It requires no manual model downloads, offers concurrency, streaming responses, and supports various hardware and platforms. The server uses a `config.json` file for easy configuration management and provides detailed error handling and logging.

code_puppy

Code Puppy is an AI-powered code generation agent designed to understand programming tasks, generate high-quality code, and explain its reasoning. It supports multi-language code generation, interactive CLI, and detailed code explanations. The tool requires Python 3.9+ and API keys for various models like GPT, Google's Gemini, Cerebras, and Claude. It also integrates with MCP servers for advanced features like code search and documentation lookups. Users can create custom JSON agents for specialized tasks and access a variety of tools for file management, code execution, and reasoning sharing.

exif-photo-blog

EXIF Photo Blog is a full-stack photo blog application built with Next.js, Vercel, and Postgres. It features built-in authentication, photo upload with EXIF extraction, photo organization by tag, infinite scroll, light/dark mode, automatic OG image generation, a CMD-K menu with photo search, experimental support for AI-generated descriptions, and support for Fujifilm simulations. The application is easy to deploy to Vercel with just a few clicks and can be customized with a variety of environment variables.

shell-ai

Shell-AI (`shai`) is a CLI utility that enables users to input commands in natural language and receive single-line command suggestions. It leverages natural language understanding and interactive CLI tools to enhance command line interactions. Users can describe tasks in plain English and receive corresponding command suggestions, making it easier to execute commands efficiently. Shell-AI supports cross-platform usage and is compatible with Azure OpenAI deployments, offering a user-friendly and efficient way to interact with the command line.

deep-searcher

DeepSearcher is a tool that combines reasoning LLMs and Vector Databases to perform search, evaluation, and reasoning based on private data. It is suitable for enterprise knowledge management, intelligent Q&A systems, and information retrieval scenarios. The tool maximizes the utilization of enterprise internal data while ensuring data security, supports multiple embedding models, and provides support for multiple LLMs for intelligent Q&A and content generation. It also includes features like private data search, vector database management, and document loading with web crawling capabilities under development.

For similar tasks

HPT

Hyper-Pretrained Transformers (HPT) is a novel multimodal LLM framework from HyperGAI, trained for vision-language models capable of understanding both textual and visual inputs. The repository contains the open-source implementation of inference code to reproduce the evaluation results of HPT Air on different benchmarks. HPT has achieved competitive results with state-of-the-art models on various multimodal LLM benchmarks. It offers models like HPT 1.5 Air and HPT 1.0 Air, providing efficient solutions for vision-and-language tasks.

learnopencv

LearnOpenCV is a repository containing code for Computer Vision, Deep learning, and AI research articles shared on the blog LearnOpenCV.com. It serves as a resource for individuals looking to enhance their expertise in AI through various courses offered by OpenCV. The repository includes a wide range of topics such as image inpainting, instance segmentation, robotics, deep learning models, and more, providing practical implementations and code examples for readers to explore and learn from.

spark-free-api

Spark AI Free 服务 provides high-speed streaming output, multi-turn dialogue support, AI drawing support, long document interpretation, and image parsing. It offers zero-configuration deployment, multi-token support, and automatic session trace cleaning. It is fully compatible with the ChatGPT interface. The repository includes multiple free-api projects for various AI services. Users can access the API for tasks such as chat completions, AI drawing, document interpretation, image analysis, and ssoSessionId live checking. The project also provides guidelines for deployment using Docker, Docker-compose, Render, Vercel, and native deployment methods. It recommends using custom clients for faster and simpler access to the free-api series projects.

mlx-vlm

MLX-VLM is a package designed for running Vision LLMs on Mac systems using MLX. It provides a convenient way to install and utilize the package for processing large language models related to vision tasks. The tool simplifies the process of running LLMs on Mac computers, offering a seamless experience for users interested in leveraging MLX for vision-related projects.

clarifai-python-grpc

This is the official Clarifai gRPC Python client for interacting with their recognition API. Clarifai offers a platform for data scientists, developers, researchers, and enterprises to utilize artificial intelligence for image, video, and text analysis through computer vision and natural language processing. The client allows users to authenticate, predict concepts in images, and access various functionalities provided by the Clarifai API. It follows a versioning scheme that aligns with the backend API updates and includes specific instructions for installation and troubleshooting. Users can explore the Clarifai demo, sign up for an account, and refer to the documentation for detailed information.

horde-worker-reGen

This repository provides the latest implementation for the AI Horde Worker, allowing users to utilize their graphics card(s) to generate, post-process, or analyze images for others. It offers a platform where users can create images and earn 'kudos' in return, granting priority for their own image generations. The repository includes important details for setup, recommendations for system configurations, instructions for installation on Windows and Linux, basic usage guidelines, and information on updating the AI Horde Worker. Users can also run the worker with multiple GPUs and receive notifications for updates through Discord. Additionally, the repository contains models that are licensed under the CreativeML OpenRAIL License.

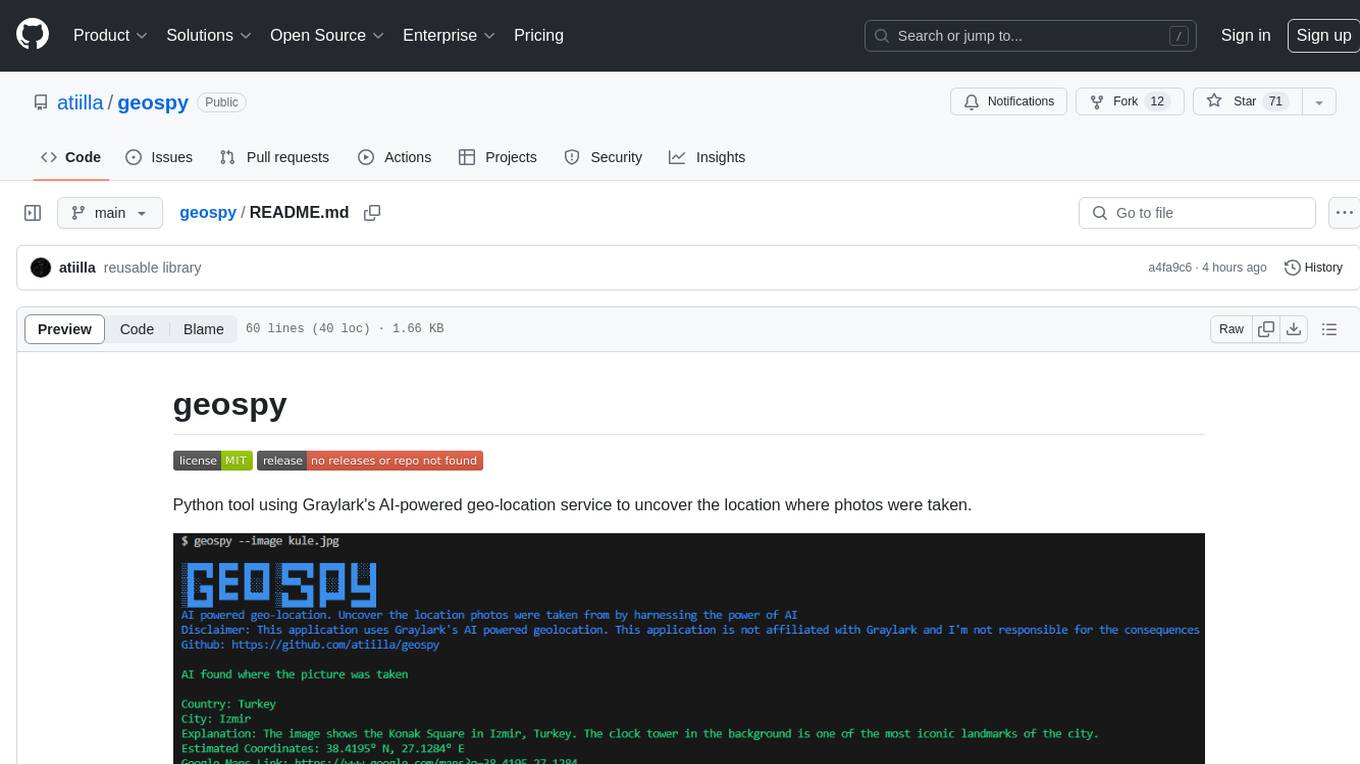

geospy

Geospy is a Python tool that utilizes Graylark's AI-powered geolocation service to determine the location where photos were taken. It allows users to analyze images and retrieve information such as country, city, explanation, coordinates, and Google Maps links. The tool provides a seamless way to integrate geolocation services into various projects and applications.

Awesome-Colorful-LLM

Awesome-Colorful-LLM is a meticulously assembled anthology of vibrant multimodal research focusing on advancements propelled by large language models (LLMs) in domains such as Vision, Audio, Agent, Robotics, and Fundamental Sciences like Mathematics. The repository contains curated collections of works, datasets, benchmarks, projects, and tools related to LLMs and multimodal learning. It serves as a comprehensive resource for researchers and practitioners interested in exploring the intersection of language models and various modalities for tasks like image understanding, video pretraining, 3D modeling, document understanding, audio analysis, agent learning, robotic applications, and mathematical research.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.