codesandbox-sdk

Programmatically start (AI) sandboxes on top of CodeSandbox

Stars: 56

CodeSandbox SDK enables users to programmatically spin up development environments and run untrusted code securely. It provides a programmatic API for creating and running sandboxes quickly. The SDK uses the microVM infrastructure of CodeSandbox, supporting features like snapshotting/restoring VMs, cloning VMs & Snapshots, source control integration, and running any Dockerfile. Users can authenticate with an API token, create sandboxes, run code in various languages, interact with the filesystem, clone sandboxes, get metrics, hibernate sandboxes, and more. The sandboxes are created inside the user's workspace in CodeSandbox, allowing for controlled environments and resource billing. Example use cases include code interpretation, creating development environments, running AI agents, and CI/CD testing.

README:

The power of CodeSandbox in a library

CodeSandbox SDK enables you to programmatically spin up development environments and run untrusted code. It provides a programmatic API to create and run sandboxes quickly and securely.

Under the hood, the SDK uses the microVM infrastructure of CodeSandbox to spin up sandboxes. It supports:

- Snapshotting/restoring VMs (checkpointing) at any point in time

- With snapshot restore times within 1 second

- Cloning VMs & Snapshots within 2 seconds

- Source control (git, GitHub, CodeSandbox SCM)

- Running any Dockerfile

Check out the CodeSandbox SDK documentation for more information.

To get started, install the SDK:

npm install @codesandbox/sdkCreate an API token by going to https://codesandbox.io/t/api, and clicking on the "Create API Token" button. You can then use this token to authenticate with the SDK:

import { CodeSandbox } from "@codesandbox/sdk";

// Create the client with your token

const sdk = new CodeSandbox(token);

// This creates a new sandbox by forking our default template sandbox.

// You can also pass in other template ids, or create your own template to fork from.

const sandbox = await sdk.sandbox.create();

// You can run JS code directly

await sandbox.shells.js.run("console.log(1+1)");

// Or Python code (if it's installed in the template)

await sandbox.shells.python.run("print(1+1)");

// Or anything else

await sandbox.shells.run("echo 'Hello, world!'");

// We have a FS API to interact with the filesystem

await sandbox.fs.writeTextFile("./hello.txt", "world");

// And you can clone sandboxes! This does not only clone the filesystem, processes that are running in the original sandbox will also be cloned!

const sandbox2 = await sandbox.fork();

// Check that the file is still there

await sandbox2.fs.readTextFile("./hello.txt");

// You can also get the opened ports, with the URL to access those

console.log(sandbox2.ports.getOpenedPorts());

// Getting metrics...

const metrics = await sandbox2.getMetrics();

console.log(

`Memory: ${metrics.memory.usedKiB} KiB / ${metrics.memory.totalKiB} KiB`

);

console.log(`CPU: ${(metrics.cpu.used / metrics.cpu.cores) * 100}%`);

// Finally, you can hibernate a sandbox. This will snapshot the sandbox and stop it. Next time you start the sandbox, it will continue where it left off, as we created a memory snapshot.

await sandbox.hibernate();

await sandbox2.hibernate();

// Open the sandbox again

const resumedSandbox = await sdk.sandbox.open(sandbox.id);This SDK uses the API token from your workspace in CodeSandbox to authenticate and create sandboxes. Because of this, the sandboxes will be created inside your workspace, and the resources will be billed to your workspace.

You could, for example, create a private template in your workspace that has all the dependencies you need (even running servers), and then use that template to fork sandboxes from. This way, you can control the environment that the sandboxes run in.

These are some example use cases that you could use this library for:

Code interpretation: Run code in a sandbox to interpret it. This way, you can run untrusted code without worrying about it affecting your system.

Development environments: Create a sandbox for each developer, and run their code in the sandbox. This way, you can run multiple development environments in parallel without them interfering with each other.

AI Agents: Create a sandbox for each AI agent, and run the agent in the sandbox. This way, you can run multiple agents in parallel without them interfering with each other. Using the forking mechanism, you can also A/B test different agents.

CI/CD: Run tests inside a sandbox, and hibernate the sandbox when the tests are done. This way, you can quickly start the sandbox again when you need to run the tests again or evaluate the results.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for codesandbox-sdk

Similar Open Source Tools

codesandbox-sdk

CodeSandbox SDK enables users to programmatically spin up development environments and run untrusted code securely. It provides a programmatic API for creating and running sandboxes quickly. The SDK uses the microVM infrastructure of CodeSandbox, supporting features like snapshotting/restoring VMs, cloning VMs & Snapshots, source control integration, and running any Dockerfile. Users can authenticate with an API token, create sandboxes, run code in various languages, interact with the filesystem, clone sandboxes, get metrics, hibernate sandboxes, and more. The sandboxes are created inside the user's workspace in CodeSandbox, allowing for controlled environments and resource billing. Example use cases include code interpretation, creating development environments, running AI agents, and CI/CD testing.

jaison-core

J.A.I.son is a Python project designed for generating responses using various components and applications. It requires specific plugins like STT, T2T, TTSG, and TTSC to function properly. Users can customize responses, voice, and configurations. The project provides a Discord bot, Twitch events and chat integration, and VTube Studio Animation Hotkeyer. It also offers features for managing conversation history, training AI models, and monitoring conversations.

magic

Magic Cloud is a software development automation platform based on AI, Low-Code, and No-Code. It allows dynamic code creation and orchestration using Hyperlambda, generative AI, and meta programming. The platform includes features like CRUD generation, No-Code AI, Hyperlambda programming language, AI agents creation, and various components for software development. Magic is suitable for backend development, AI-related tasks, and creating AI chatbots. It offers high-level programming capabilities, productivity gains, and reduced technical debt.

vespa

Vespa is a platform that performs operations such as selecting a subset of data in a large corpus, evaluating machine-learned models over the selected data, organizing and aggregating it, and returning it, typically in less than 100 milliseconds, all while the data corpus is continuously changing. It has been in development for many years and is used on a number of large internet services and apps which serve hundreds of thousands of queries from Vespa per second.

llm-code-interpreter

The 'llm-code-interpreter' repository is a deprecated plugin that provides a code interpreter on steroids for ChatGPT by E2B. It gives ChatGPT access to a sandboxed cloud environment with capabilities like running any code, accessing Linux OS, installing programs, using filesystem, running processes, and accessing the internet. The plugin exposes commands to run shell commands, read files, and write files, enabling various possibilities such as running different languages, installing programs, starting servers, deploying websites, and more. It is powered by the E2B API and is designed for agents to freely experiment within a sandboxed environment.

wandb

Weights & Biases (W&B) is a platform that helps users build better machine learning models faster by tracking and visualizing all components of the machine learning pipeline, from datasets to production models. It offers tools for tracking, debugging, evaluating, and monitoring machine learning applications. W&B provides integrations with popular frameworks like PyTorch, TensorFlow/Keras, Hugging Face Transformers, PyTorch Lightning, XGBoost, and Sci-Kit Learn. Users can easily log metrics, visualize performance, and compare experiments using W&B. The platform also supports hosting options in the cloud or on private infrastructure, making it versatile for various deployment needs.

azure-search-openai-demo

This sample demonstrates a few approaches for creating ChatGPT-like experiences over your own data using the Retrieval Augmented Generation pattern. It uses Azure OpenAI Service to access a GPT model (gpt-35-turbo), and Azure AI Search for data indexing and retrieval. The repo includes sample data so it's ready to try end to end. In this sample application we use a fictitious company called Contoso Electronics, and the experience allows its employees to ask questions about the benefits, internal policies, as well as job descriptions and roles.

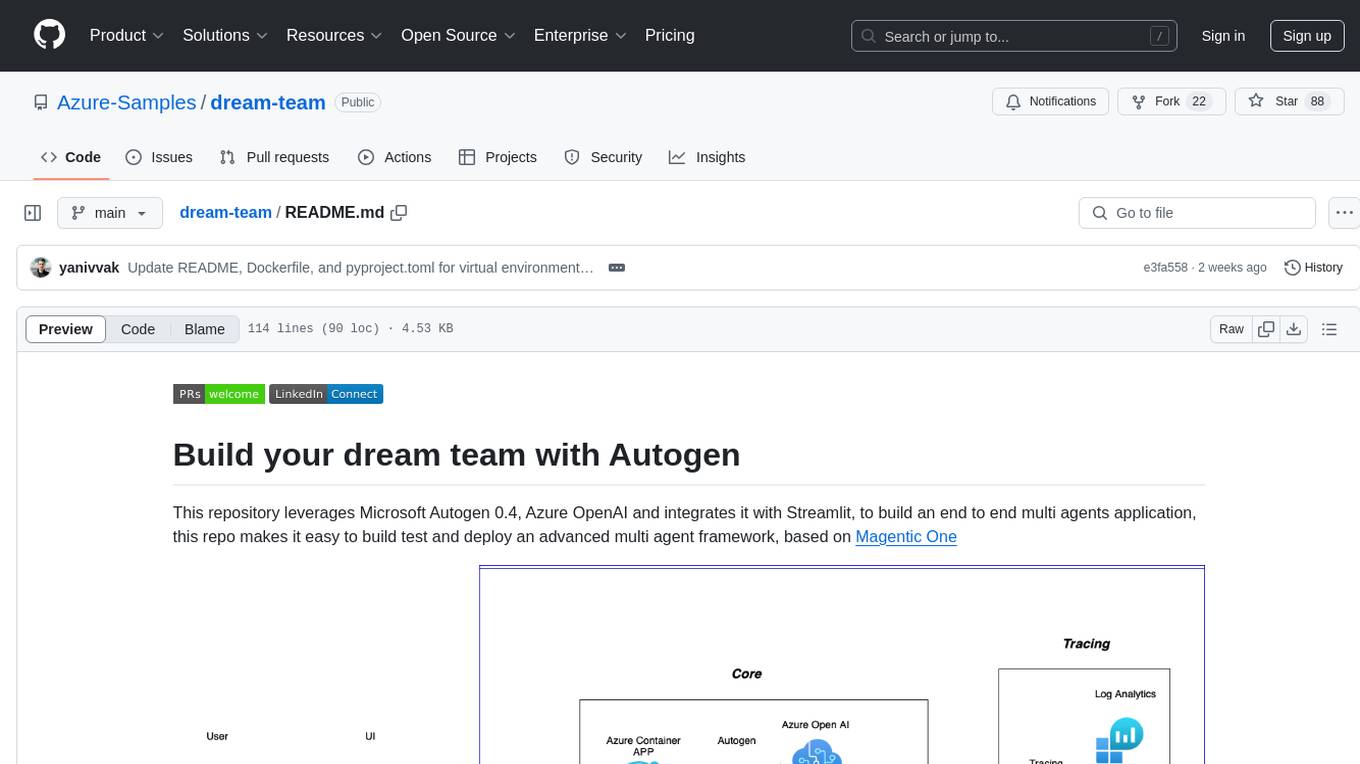

dream-team

Build your dream team with Autogen is a repository that leverages Microsoft Autogen 0.4, Azure OpenAI, and Streamlit to create an end-to-end multi-agent application. It provides an advanced multi-agent framework based on Magentic One, with features such as a friendly UI, single-line deployment, secure code execution, managed identities, and observability & debugging tools. Users can deploy Azure resources and the app with simple commands, work locally with virtual environments, install dependencies, update configurations, and run the application. The repository also offers resources for learning more about building applications with Autogen.

Dot

Dot is a standalone, open-source application designed for seamless interaction with documents and files using local LLMs and Retrieval Augmented Generation (RAG). It is inspired by solutions like Nvidia's Chat with RTX, providing a user-friendly interface for those without a programming background. Pre-packaged with Mistral 7B, Dot ensures accessibility and simplicity right out of the box. Dot allows you to load multiple documents into an LLM and interact with them in a fully local environment. Supported document types include PDF, DOCX, PPTX, XLSX, and Markdown. Users can also engage with Big Dot for inquiries not directly related to their documents, similar to interacting with ChatGPT. Built with Electron JS, Dot encapsulates a comprehensive Python environment that includes all necessary libraries. The application leverages libraries such as FAISS for creating local vector stores, Langchain, llama.cpp & Huggingface for setting up conversation chains, and additional tools for document management and interaction.

FlowTest

FlowTestAI is the world’s first GenAI powered OpenSource Integrated Development Environment (IDE) designed for crafting, visualizing, and managing API-first workflows. It operates as a desktop app, interacting with the local file system, ensuring privacy and enabling collaboration via version control systems. The platform offers platform-specific binaries for macOS, with versions for Windows and Linux in development. It also features a CLI for running API workflows from the command line interface, facilitating automation and CI/CD processes.

trinityX

TrinityX is an open-source HPC, AI, and cloud platform designed to provide all services required in a modern system, with full customization options. It includes default services like Luna node provisioner, OpenLDAP, SLURM or OpenPBS, Prometheus, Grafana, OpenOndemand, and more. TrinityX also sets up NFS-shared directories, OpenHPC applications, environment modules, HA, and more. Users can install TrinityX on Enterprise Linux, configure network interfaces, set up passwordless authentication, and customize the installation using Ansible playbooks. The platform supports HA, OpenHPC integration, and provides detailed documentation for users to contribute to the project.

cannoli

Cannoli allows you to build and run no-code LLM scripts using the Obsidian Canvas editor. Cannolis are scripts that leverage the OpenAI API to read/write to your vault, and take actions using HTTP requests. They can be used to automate tasks, create custom llm-chatbots, and more.

hashbrown

Hashbrown is a lightweight and efficient hashing library for Python, designed to provide easy-to-use cryptographic hashing functions for secure data storage and transmission. It supports a variety of hashing algorithms, including MD5, SHA-1, SHA-256, and SHA-512, allowing users to generate hash values for strings, files, and other data types. With Hashbrown, developers can quickly implement data integrity checks, password hashing, digital signatures, and other security features in their Python applications.

ai-services

AI Services is a WordPress plugin that provides a centralized infrastructure for integrating AI capabilities into WordPress websites. It allows other plugins to utilize AI services via a common API, making it easier for developers to incorporate AI features without the need to implement separate API layers. The plugin supports various AI services such as Anthropic, Google, and OpenAI, enabling users to choose their preferred service. It simplifies the process of configuring AI APIs and unlocks AI capabilities for smaller plugins or features. The plugin is still in early stages, with ongoing enhancements and improvements planned to streamline API usage and enhance user experience.

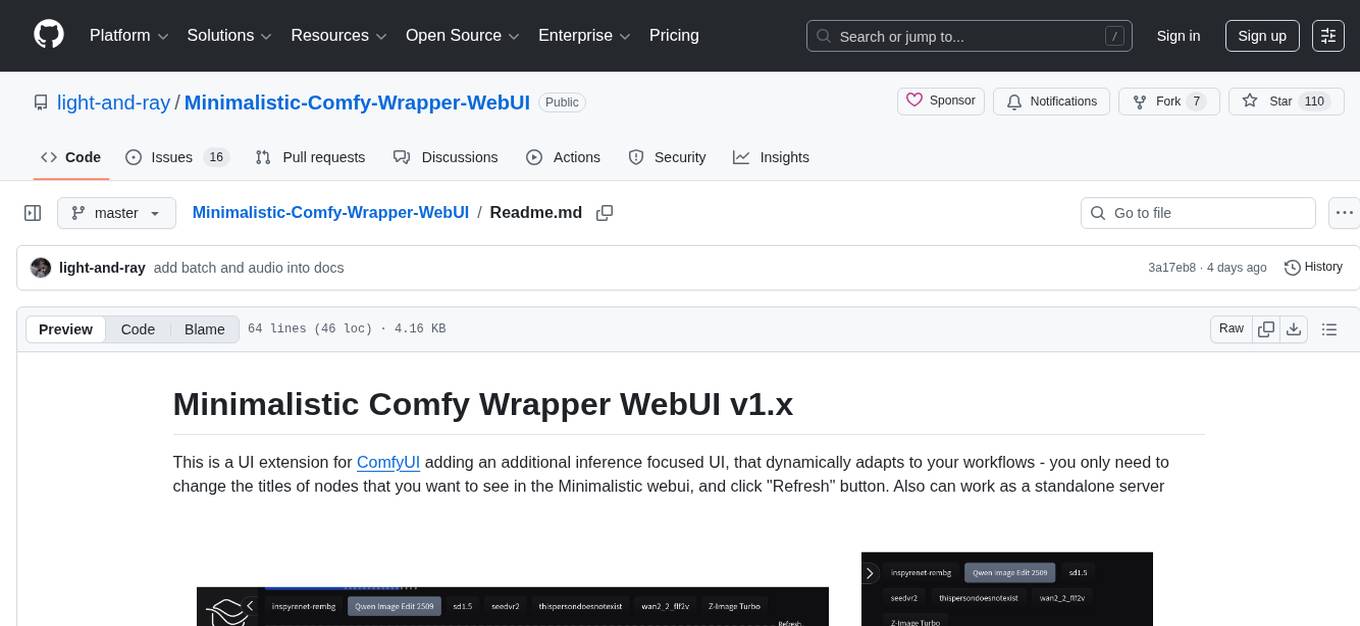

Minimalistic-Comfy-Wrapper-WebUI

Minimalistic Comfy Wrapper WebUI is a user interface extension for ComfyUI that provides an additional inference-focused UI. It dynamically adapts to workflows, allowing users to change node titles and refresh the UI. The tool ensures stability by storing data in the browser's local storage, supports working with the same workflows in Comfy and the webui, offers better queues management, prompt presets, batch support, a minimalist image editor, and mobile-friendly UI. Users can install it from the ComfyUI manager and customize node titles for input and output nodes. The tool is designed for users who prefer a simpler interface for inference tasks and want to work with ComfyUI workflows from a different perspective.

mentat

Mentat is an AI tool designed to assist with coding tasks directly from the command line. It combines human creativity with computer-like processing to help users understand new codebases, add new features, and refactor existing code. Unlike other tools, Mentat coordinates edits across multiple locations and files, with the context of the project already in mind. The tool aims to enhance the coding experience by providing seamless assistance and improving edit quality.

For similar tasks

codesandbox-sdk

CodeSandbox SDK enables users to programmatically spin up development environments and run untrusted code securely. It provides a programmatic API for creating and running sandboxes quickly. The SDK uses the microVM infrastructure of CodeSandbox, supporting features like snapshotting/restoring VMs, cloning VMs & Snapshots, source control integration, and running any Dockerfile. Users can authenticate with an API token, create sandboxes, run code in various languages, interact with the filesystem, clone sandboxes, get metrics, hibernate sandboxes, and more. The sandboxes are created inside the user's workspace in CodeSandbox, allowing for controlled environments and resource billing. Example use cases include code interpretation, creating development environments, running AI agents, and CI/CD testing.

craftium

Craftium is an open-source platform based on the Minetest voxel game engine and the Gymnasium and PettingZoo APIs, designed for creating fast, rich, and diverse single and multi-agent environments. It allows for connecting to Craftium's Python process, executing actions as keyboard and mouse controls, extending the Lua API for creating RL environments and tasks, and supporting client/server synchronization for slow agents. Craftium is fully extensible, extensively documented, modern RL API compatible, fully open source, and eliminates the need for Java. It offers a variety of environments for research and development in reinforcement learning.

InternLM

InternLM is a powerful language model series with features such as 200K context window for long-context tasks, outstanding comprehensive performance in reasoning, math, code, chat experience, instruction following, and creative writing, code interpreter & data analysis capabilities, and stronger tool utilization capabilities. It offers models in sizes of 7B and 20B, suitable for research and complex scenarios. The models are recommended for various applications and exhibit better performance than previous generations. InternLM models may match or surpass other open-source models like ChatGPT. The tool has been evaluated on various datasets and has shown superior performance in multiple tasks. It requires Python >= 3.8, PyTorch >= 1.12.0, and Transformers >= 4.34 for usage. InternLM can be used for tasks like chat, agent applications, fine-tuning, deployment, and long-context inference.

chocolate-factory

Chocolate Factory is an open-source LLM application development framework designed to help you easily create powerful software development SDLC + LLM assistants. It provides a set of modules for integration into JVM projects and offers RAGScript for querying and local deployment examples. The tool follows a domain-driven problem-solving approach with key concepts like ProblemClarifier, ProblemAnalyzer, SolutionDesigner, SolutionReviewer, and SolutionExecutor. It supports use cases in desktop/IDE, server, and Android development, with a focus on AI-powered coding assistance and semantic search capabilities.

CodeGeeX4

CodeGeeX4-ALL-9B is an open-source multilingual code generation model based on GLM-4-9B, offering enhanced code generation capabilities. It supports functions like code completion, code interpreter, web search, function call, and repository-level code Q&A. The model has competitive performance on benchmarks like BigCodeBench and NaturalCodeBench, outperforming larger models in terms of speed and performance.

dexto

Dexto is a lightweight runtime for creating and running AI agents that turn natural language into real-world actions. It serves as the missing intelligence layer for building AI applications, standalone chatbots, or as the reasoning engine inside larger products. Dexto features a powerful CLI and Web UI for running AI agents, supports multiple interfaces, allows hot-swapping of LLMs from various providers, connects to remote tool servers via the Model Context Protocol, is config-driven with version-controlled YAML, offers production-ready core features, extensibility for custom services, and enables multi-agent collaboration via MCP and A2A.

claudex

Claudex is an open-source, self-hosted Claude Code UI that runs entirely on your machine. It provides multiple sandboxes, allows users to use their own plans, offers a full IDE experience with VS Code in the browser, and is extensible with skills, agents, slash commands, and MCP servers. Users can run AI agents in isolated environments, view and interact with a browser via VNC, switch between multiple AI providers, automate tasks with Celery workers, and enjoy various chat features and preview capabilities. Claudex also supports marketplace plugins, secrets management, integrations like Gmail, and custom instructions. The tool is configured through providers and supports various providers like Anthropic, OpenAI, OpenRouter, and Custom. It has a tech stack consisting of React, FastAPI, Python, PostgreSQL, Celery, Redis, and more.

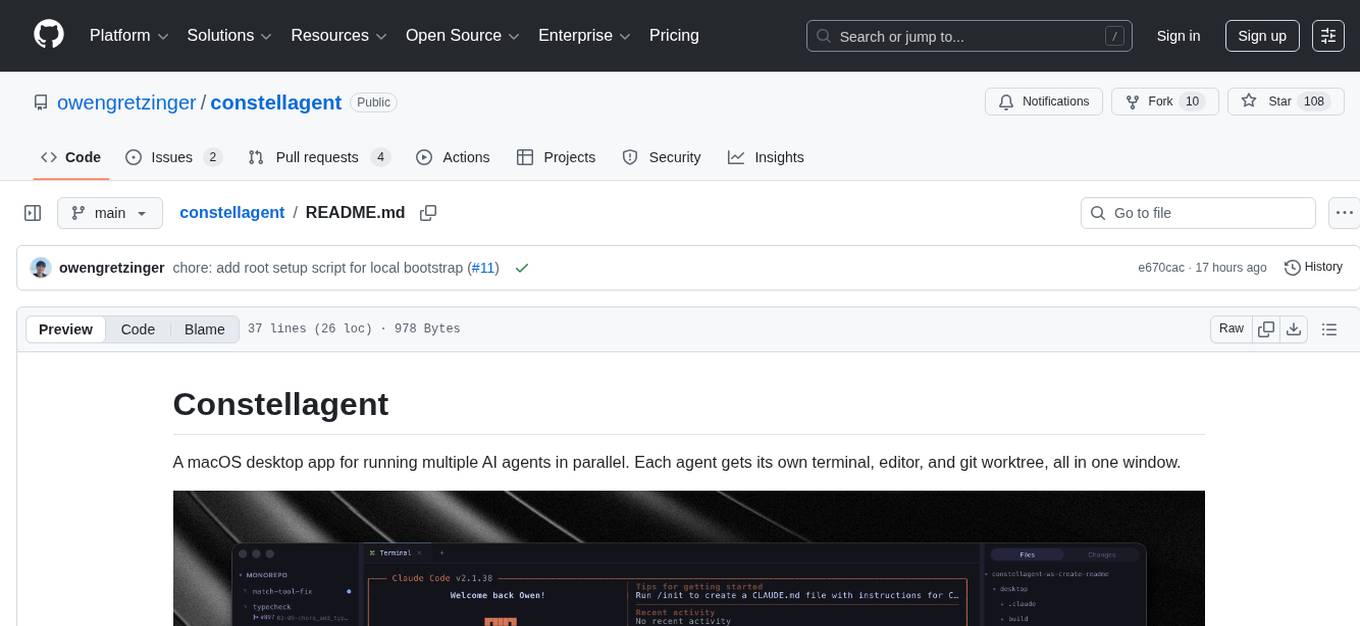

constellagent

Constellagent is a macOS desktop app designed for running multiple AI agents in parallel. It provides a seamless environment for each agent with its own terminal, editor, and git worktree, all within a single window. The app offers features such as separate agent sessions, full terminal emulator, Monaco code editor, git management tools, file tree navigation, automation scheduling, and keyboard-driven functionalities. Constellagent is a versatile tool that streamlines the workflow for developers and AI practitioners working on multiple projects simultaneously.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.