redcache-ai

A memory framework for Large Language Models and Agents.

Stars: 163

RedCache-ai is a memory framework designed for Large Language Models and Agents. It provides a dynamic memory framework for developers to build various applications, from AI-powered dating apps to healthcare diagnostics platforms. Users can store, retrieve, search, update, and delete memories using RedCache-ai. The tool also supports integration with OpenAI for enhancing memories. RedCache-ai aims to expand its functionality by integrating with more LLM providers, adding support for AI Agents, and providing a hosted version.

README:

Join the redcache-ai discord server | Email me

REmote Dictionary cache - Artificial Intelligence (RedCache-AI). While developing a chat application, every solution I came across was either expensive, closed source or lacked extensive support for external dependencies. Redcache-ai provides a dynamic memory framework for Large Language Models, enabling developers to build wide ranging applications, from AI-powered dating apps to healthcare diagnostics platforms.

Install redcache-ai as a python package

pip install redcache-ai

Initialize Redcache-ai

Redcache-ai provides two options. Initialize to disk or sqlite. By default redcache-ai initializes to disk. This where memories are stored.

Option 1: initialize to disk

Make sure to import all the required dependencies

from redcache_ai import RedCache, load_config, set_openai_api_key

from redcache_ai.storage import DiskStorage, SQLiteStorage

storage = DiskStorage()Option 2: Initialize to sqlite

storage = SQLiteStorage(db_path='my_cache.db')Store text as memory

Note: the output visual representation below is for disk storage only. The input is the same for sqlite

Input

memory_cache = storage.add("England is nice country", user_id = "James", metadata={"category": "facts"})Output

{"James": {"7bbfbcbf-da9e-44ca-9cbb-ab558c64b36a": {"id": "7bbfbcbf-da9e-44ca-9cbb-ab558c64b36a", "text": "\"England is a nice country\"", "metadata": {"data": "\"England is a nice country\"", "category": "facts"}, "vector": [0.4472135954999579]}}}Retrieve Memory

# Get all memories

memories = storage(user_id = "James")

print(all_memories)Output:

# Get all memories

[

{

"id": "7bbfbcbf-da9e-44ca-9cbb-ab558c64b36a",

"text": "England is a nice country",

"metadata": {

"category": "facts"

}

}

]

Search Memories

# Get all memories

results = storage.search("country", user_id="James", num_results=1)Output:

[

{

"id": "7bbfbcbf-da9e-44ca-9cbb-ab558c64b36a",

"text": "England is a nice country",

"metadata": {

"category": "facts"

},

"score": 0.849

}

]Update Memory

updated_memory = storage.update("7bbfbcbf-da9e-44ca-9cbb-ab558c64b36a", "England is a beautiful country", user_id="James") Output:

{

"id": "7bbfbcbf-da9e-44ca-9cbb-ab558c64b36a",

"text": "England is a beautiful country",

"metadata": {

"category": "facts"

}

}Delete Memory

storage.delete("7bbfbcbf-da9e-44ca-9cbb-ab558c64b36a", user_id="James")Output:

# No output, memory is deleted if successfulDelete all memories

storage.delete_all(user_id="James")# No output, all memories for the user are deleted if successful Enhance a memory using LLMs 🔥

Large Langugae Model Integradtion

For now, redcache-ai only supports OpenAI. To integrate redcache-ai memories into openAI, you have to set an OPENAI API Key.

Once you have the key, you'll integrate your text memories like this:

from redcache_ai.config import set_openai_api_key

set_openai_api_key("your-openai-api-key-here") from redcache_ai import RedCache, load_config

# Load the default configuration

config = load_config()

# Initialize RedCache with OpenAI LLM

redcache = RedCache.from_config(config)The output for load_config is below:

{

"llm": {

"provider": "openai",

"config": {

"model": "gpt-4",

"temperature": 0.2,

"max_tokens": 1500,

}

}

}enhanced_memory = redcache.enhance_memory("England has a rich history", user_id="James", category="facts")

summary = redcache.generate_summary(user_id="James")These examples demonstrate the basic usage of redcache-ai. For more detailed information and advanced features, please refer to the full documentation. If implementation details are unclear please see the test_redcache.py file in examples. For more information, visit the discord channel.

What's Next

Redcache-ai is still early. Priority is to fix any and all existing bugs. Improve documentation then:

1.Add integration with more LLM providers including Llama, Mixtral, Claude etc.

2.Add functionality for AI Agents.

3.Provide support for a hosted version.

Happy memory building. ❤️

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for redcache-ai

Similar Open Source Tools

redcache-ai

RedCache-ai is a memory framework designed for Large Language Models and Agents. It provides a dynamic memory framework for developers to build various applications, from AI-powered dating apps to healthcare diagnostics platforms. Users can store, retrieve, search, update, and delete memories using RedCache-ai. The tool also supports integration with OpenAI for enhancing memories. RedCache-ai aims to expand its functionality by integrating with more LLM providers, adding support for AI Agents, and providing a hosted version.

hf-waitress

HF-Waitress is a powerful server application for deploying and interacting with HuggingFace Transformer models. It simplifies running open-source Large Language Models (LLMs) locally on-device, providing on-the-fly quantization via BitsAndBytes, HQQ, and Quanto. It requires no manual model downloads, offers concurrency, streaming responses, and supports various hardware and platforms. The server uses a `config.json` file for easy configuration management and provides detailed error handling and logging.

core

CORE is an open-source unified, persistent memory layer for all AI tools, allowing developers to maintain context across different tools like Cursor, ChatGPT, and Claude. It aims to solve the issue of context switching and information loss between sessions by creating a knowledge graph that remembers conversations, decisions, and insights. With features like unified memory, temporal knowledge graph, browser extension, chat with memory, auto-sync from apps, and MCP integration hub, CORE provides a seamless experience for managing and recalling context. The tool's ingestion pipeline captures evolving context through normalization, extraction, resolution, and graph integration, resulting in a dynamic memory that grows and changes with the user. When recalling from memory, CORE utilizes search, re-ranking, filtering, and output to provide relevant and contextual answers. Security measures include data encryption, authentication, access control, and vulnerability reporting.

promptic

Promptic is a tool designed for LLM app development, providing a productive and pythonic way to build LLM applications. It leverages LiteLLM, allowing flexibility to switch LLM providers easily. Promptic focuses on building features by providing type-safe structured outputs, easy-to-build agents, streaming support, automatic prompt caching, and built-in conversation memory.

LightRAG

LightRAG is a repository hosting the code for LightRAG, a system that supports seamless integration of custom knowledge graphs, Oracle Database 23ai, Neo4J for storage, and multiple file types. It includes features like entity deletion, batch insert, incremental insert, and graph visualization. LightRAG provides an API server implementation for RESTful API access to RAG operations, allowing users to interact with it through HTTP requests. The repository also includes evaluation scripts, code for reproducing results, and a comprehensive code structure.

aiavatarkit

AIAvatarKit is a tool for building AI-based conversational avatars quickly. It supports various platforms like VRChat and cluster, along with real-world devices. The tool is extensible, allowing unlimited capabilities based on user needs. It requires VOICEVOX API, Google or Azure Speech Services API keys, and Python 3.10. Users can start conversations out of the box and enjoy seamless interactions with the avatars.

sonarqube-mcp-server

The SonarQube MCP Server is a Model Context Protocol (MCP) server that enables seamless integration with SonarQube Server or Cloud for code quality and security. It supports the analysis of code snippets directly within the agent context. The server provides various tools for analyzing code, managing issues, accessing metrics, and interacting with SonarQube projects. It also supports advanced features like dependency risk analysis, enterprise portfolio management, and system health checks. The server can be configured for different transport modes, proxy settings, and custom certificates. Telemetry data collection can be disabled if needed.

crush

Crush is a versatile tool designed to enhance coding workflows in your terminal. It offers support for multiple LLMs, allows for flexible switching between models, and enables session-based work management. Crush is extensible through MCPs and works across various operating systems. It can be installed using package managers like Homebrew and NPM, or downloaded directly. Crush supports various APIs like Anthropic, OpenAI, Groq, and Google Gemini, and allows for customization through environment variables. The tool can be configured locally or globally, and supports LSPs for additional context. Crush also provides options for ignoring files, allowing tools, and configuring local models. It respects `.gitignore` files and offers logging capabilities for troubleshooting and debugging.

shell-ai

Shell-AI (`shai`) is a CLI utility that enables users to input commands in natural language and receive single-line command suggestions. It leverages natural language understanding and interactive CLI tools to enhance command line interactions. Users can describe tasks in plain English and receive corresponding command suggestions, making it easier to execute commands efficiently. Shell-AI supports cross-platform usage and is compatible with Azure OpenAI deployments, offering a user-friendly and efficient way to interact with the command line.

openmacro

Openmacro is a multimodal personal agent that allows users to run code locally. It acts as a personal agent capable of completing and automating tasks autonomously via self-prompting. The tool provides a CLI natural-language interface for completing and automating tasks, analyzing and plotting data, browsing the web, and manipulating files. Currently, it supports API keys for models powered by SambaNova, with plans to add support for other hosts like OpenAI and Anthropic in future versions.

vim-ai

vim-ai is a plugin that adds Artificial Intelligence (AI) capabilities to Vim and Neovim. It allows users to generate code, edit text, and have interactive conversations with GPT models powered by OpenAI's API. The plugin uses OpenAI's API to generate responses, requiring users to set up an account and obtain an API key. It supports various commands for text generation, editing, and chat interactions, providing a seamless integration of AI features into the Vim text editor environment.

npi

NPi is an open-source platform providing Tool-use APIs to empower AI agents with the ability to take action in the virtual world. It is currently under active development, and the APIs are subject to change in future releases. NPi offers a command line tool for installation and setup, along with a GitHub app for easy access to repositories. The platform also includes a Python SDK and examples like Calendar Negotiator and Twitter Crawler. Join the NPi community on Discord to contribute to the development and explore the roadmap for future enhancements.

swarmzero

SwarmZero SDK is a library that simplifies the creation and execution of AI Agents and Swarms of Agents. It supports various LLM Providers such as OpenAI, Azure OpenAI, Anthropic, MistralAI, Gemini, Nebius, and Ollama. Users can easily install the library using pip or poetry, set up the environment and configuration, create and run Agents, collaborate with Swarms, add tools for complex tasks, and utilize retriever tools for semantic information retrieval. Sample prompts are provided to help users explore the capabilities of the agents and swarms. The SDK also includes detailed examples and documentation for reference.

FlashLearn

FlashLearn is a tool that provides a simple interface and orchestration for incorporating Agent LLMs into workflows and ETL pipelines. It allows data transformations, classifications, summarizations, rewriting, and custom multi-step tasks using LLMs. Each step and task has a compact JSON definition, making pipelines easy to understand and maintain. FlashLearn supports LiteLLM, Ollama, OpenAI, DeepSeek, and other OpenAI-compatible clients.

ruby-openai

Use the OpenAI API with Ruby! 🤖🩵 Stream text with GPT-4, transcribe and translate audio with Whisper, or create images with DALL·E... Hire me | 🎮 Ruby AI Builders Discord | 🐦 Twitter | 🧠 Anthropic Gem | 🚂 Midjourney Gem ## Table of Contents * Ruby OpenAI * Table of Contents * Installation * Bundler * Gem install * Usage * Quickstart * With Config * Custom timeout or base URI * Extra Headers per Client * Logging * Errors * Faraday middleware * Azure * Ollama * Counting Tokens * Models * Examples * Chat * Streaming Chat * Vision * JSON Mode * Functions * Edits * Embeddings * Batches * Files * Finetunes * Assistants * Threads and Messages * Runs * Runs involving function tools * Image Generation * DALL·E 2 * DALL·E 3 * Image Edit * Image Variations * Moderations * Whisper * Translate * Transcribe * Speech * Errors * Development * Release * Contributing * License * Code of Conduct

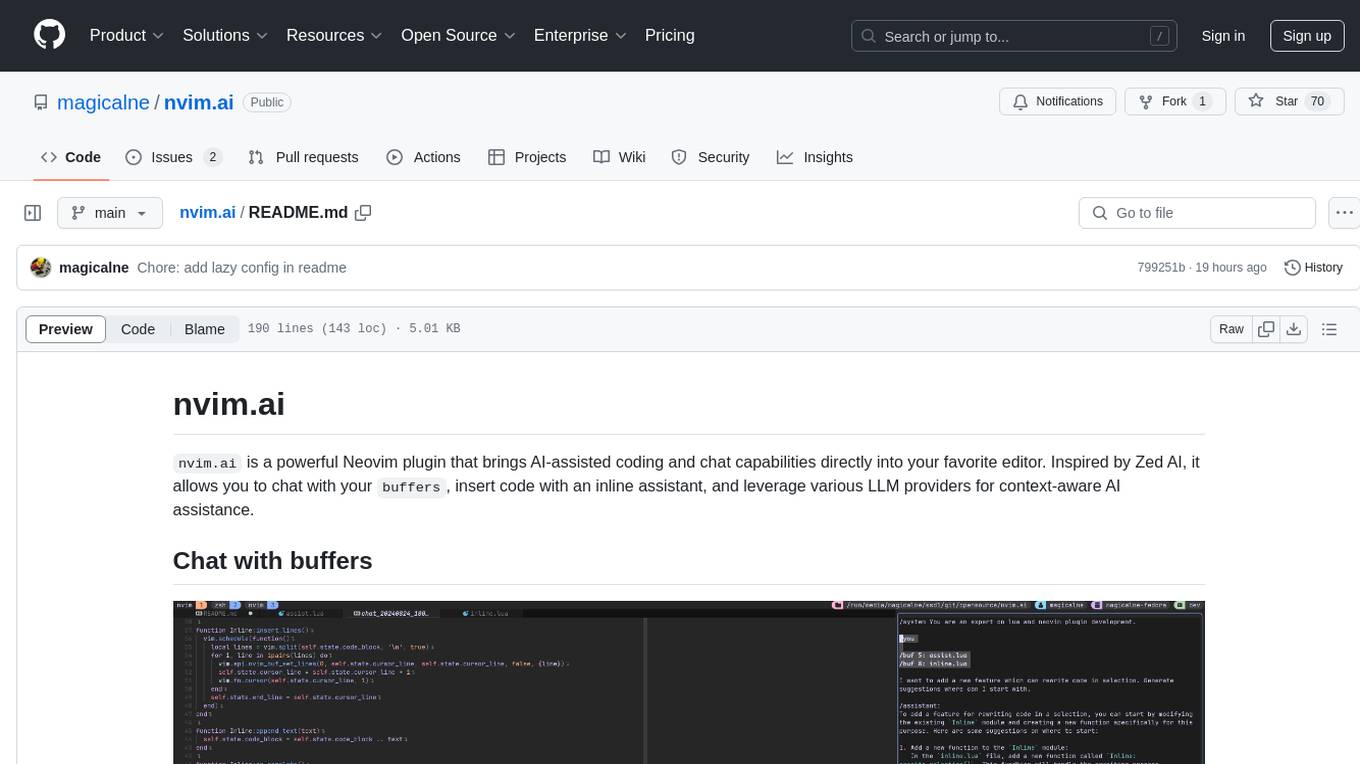

nvim.ai

nvim.ai is a powerful Neovim plugin that enables AI-assisted coding and chat capabilities within the editor. Users can chat with buffers, insert code with an inline assistant, and utilize various LLM providers for context-aware AI assistance. The plugin supports features like interacting with AI about code and documents, receiving relevant help based on current work, code insertion, code rewriting (Work in Progress), and integration with multiple LLM providers. Users can configure the plugin, add API keys to dotfiles, and integrate with nvim-cmp for command autocompletion. Keymaps are available for chat and inline assist functionalities. The chat dialog allows parsing content with keywords and supports roles like /system, /you, and /assistant. Context-aware assistance can be accessed through inline assist by inserting code blocks anywhere in the file.

For similar tasks

mem0

Mem0 is a tool that provides a smart, self-improving memory layer for Large Language Models, enabling personalized AI experiences across applications. It offers persistent memory for users, sessions, and agents, self-improving personalization, a simple API for easy integration, and cross-platform consistency. Users can store memories, retrieve memories, search for related memories, update memories, get the history of a memory, and delete memories using Mem0. It is designed to enhance AI experiences by enabling long-term memory storage and retrieval.

redcache-ai

RedCache-ai is a memory framework designed for Large Language Models and Agents. It provides a dynamic memory framework for developers to build various applications, from AI-powered dating apps to healthcare diagnostics platforms. Users can store, retrieve, search, update, and delete memories using RedCache-ai. The tool also supports integration with OpenAI for enhancing memories. RedCache-ai aims to expand its functionality by integrating with more LLM providers, adding support for AI Agents, and providing a hosted version.

mcp-memory-service

The MCP Memory Service is a universal memory service designed for AI assistants, providing semantic memory search and persistent storage. It works with various AI applications and offers fast local search using SQLite-vec and global distribution through Cloudflare. The service supports intelligent memory management, universal compatibility with AI tools, flexible storage options, and is production-ready with cross-platform support and secure connections. Users can store and recall memories, search by tags, check system health, and configure the service for Claude Desktop integration and environment variables.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aims to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobuffs and python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use-cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, established benchmark, evaluation, and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm python package to help you assess the performance of your LLM in trustworthiness more quickly. For more details about TrustLLM, please refer to project website.

AI-YinMei

AI-YinMei is an AI virtual anchor Vtuber development tool (N card version). It supports fastgpt knowledge base chat dialogue, a complete set of solutions for LLM large language models: [fastgpt] + [one-api] + [Xinference], supports docking bilibili live broadcast barrage reply and entering live broadcast welcome speech, supports Microsoft edge-tts speech synthesis, supports Bert-VITS2 speech synthesis, supports GPT-SoVITS speech synthesis, supports expression control Vtuber Studio, supports painting stable-diffusion-webui output OBS live broadcast room, supports painting picture pornography public-NSFW-y-distinguish, supports search and image search service duckduckgo (requires magic Internet access), supports image search service Baidu image search (no magic Internet access), supports AI reply chat box [html plug-in], supports AI singing Auto-Convert-Music, supports playlist [html plug-in], supports dancing function, supports expression video playback, supports head touching action, supports gift smashing action, supports singing automatic start dancing function, chat and singing automatic cycle swing action, supports multi scene switching, background music switching, day and night automatic switching scene, supports open singing and painting, let AI automatically judge the content.