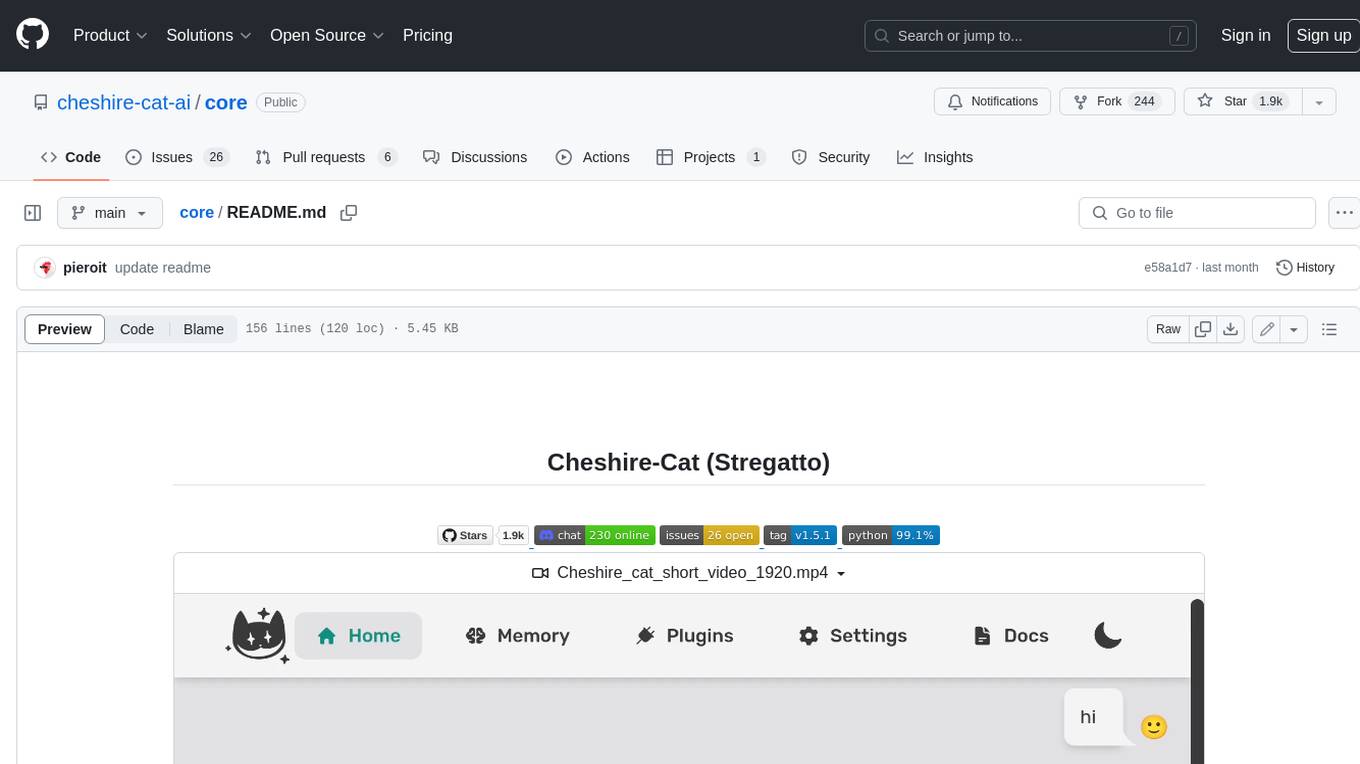

core

AI agent microservice

Stars: 2650

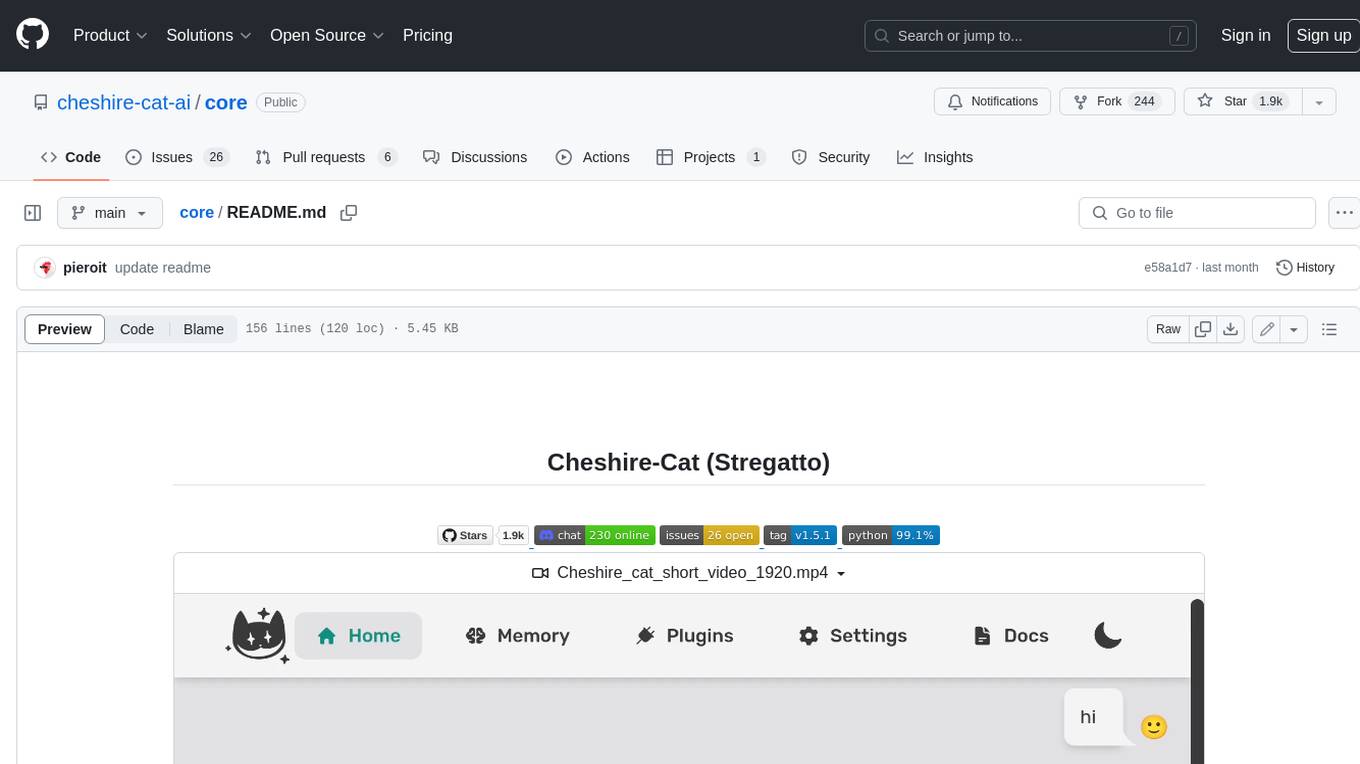

The Cheshire Cat is a framework for building custom AIs on top of any language model. It provides an API-first approach, making it easy to add a conversational layer to your application. The Cat remembers conversations and documents, and uses them in conversation. It is extensible via plugins, and supports event callbacks, function calling, and conversational forms. The Cat is easy to use, with an admin panel that allows you to chat with the AI, visualize memory and plugins, and adjust settings. It is also production-ready, 100% dockerized, and supports any language model.

README:

The Cheshire Cat is a framework to build custom AI agents:

- ⚡️ API first, to easily add a conversational layer to your app

- 💬 Chat via WebSocket and manage your agent with an customizable REST API

- 🐘 Built-in RAG with Qdrant

- 🚀 Extensible via plugins

- 🪛 Event callbacks, function calling (tools), conversational forms

- 🏛 Easy to use admin panel

- 🌍 Supports any language model via langchain

- 👥 Multiuser with granular permissions, compatible with any identity provider

- 🐋 100% dockerized

- 🦄 Active Discord community and easy to understand docs

To make Cheshire Cat run on your machine, you just need docker installed:

docker run --rm -it -p 1865:80 ghcr.io/cheshire-cat-ai/core:latest- Chat with the Cheshire Cat on localhost:1865/admin

- Try out the REST API on localhost:1865/docs

Enjoy the Cat!

Follow instructions on how to run it properly with docker compose and volumes.

Hooks (events)

from cat.mad_hatter.decorators import hook

# hooks are an event system to get finegraned control over your assistant

@hook

def agent_prompt_prefix(prefix, cat):

prefix = """You are Marvin the socks seller, a poetic vendor of socks.

You are an expert in socks, and you reply with exactly one rhyme.

"""

return prefixTools

from cat.mad_hatter.decorators import tool

# langchain inspired tools (function calling)

@tool(return_direct=True)

def socks_prices(color, cat):

"""How much do socks cost? Input is the sock color."""

prices = {

"black": 5,

"white": 10,

"pink": 50,

}

price = prices.get(color, 0)

return f"{price} bucks, meeeow!" Conversational Forms

from pydantic import BaseModel

from cat.experimental.form import form, CatForm

# data structure to fill up

class PizzaOrder(BaseModel):

pizza_type: str

phone: int

# forms let you control goal oriented conversations

@form

class PizzaForm(CatForm):

description = "Pizza Order"

model_class = PizzaOrder

start_examples = [

"order a pizza!",

"I want pizza"

]

stop_examples = [

"stop pizza order",

"not hungry anymore",

]

ask_confirm = True

def submit(self, form_data):

# do the actual order here!

# return to convo

return {

"output": f"Pizza order on its way: {form_data}"

}Detailed roadmap is here.

Send your pull request to the develop branch. Here is a full guide to contributing.

We are committed to openness, privacy and creativity, we want to bring AI to the long tail. If you want to know more about our vision and values, read the Code of Ethics.

Join our community on Discord and give the project a star ⭐! Thanks again!🙏

Code is licensed under GPL3.

The Cheshire Cat AI logo and name are property of Piero Savastano (founder and maintainer).

"Would you tell me, please, which way I ought to go from here?"

"That depends a good deal on where you want to get to," said the Cat.

"I don't much care where--" said Alice.

"Then it doesn't matter which way you go," said the Cat.

(Alice's Adventures in Wonderland - Lewis Carroll)

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for core

Similar Open Source Tools

core

The Cheshire Cat is a framework for building custom AIs on top of any language model. It provides an API-first approach, making it easy to add a conversational layer to your application. The Cat remembers conversations and documents, and uses them in conversation. It is extensible via plugins, and supports event callbacks, function calling, and conversational forms. The Cat is easy to use, with an admin panel that allows you to chat with the AI, visualize memory and plugins, and adjust settings. It is also production-ready, 100% dockerized, and supports any language model.

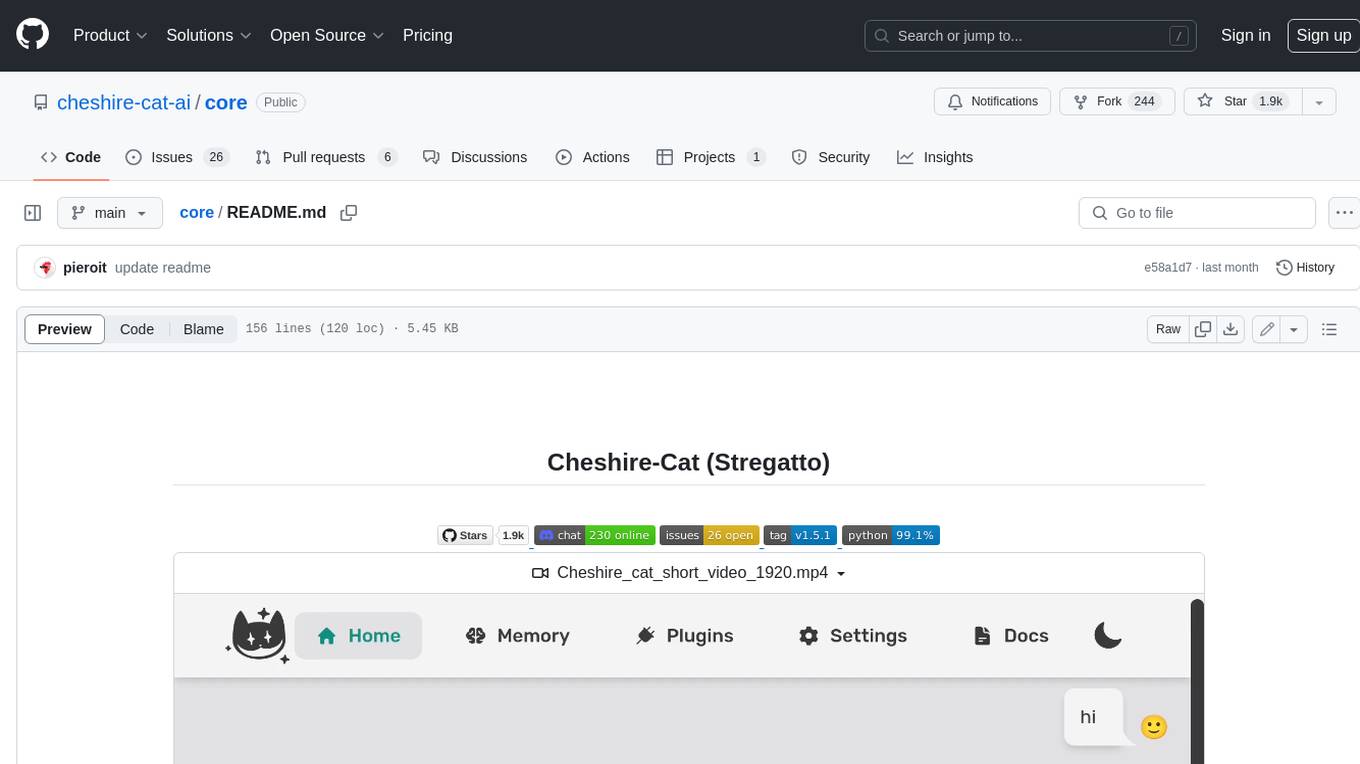

1backend

1Backend is a flexible and scalable platform designed for running AI models on private servers and handling high-concurrency workloads. It provides a ChatGPT-like interface for users and a network-accessible API for machines, serving as a general-purpose backend framework. The platform offers on-premise ChatGPT alternatives, a microservices-first web framework, out-of-the-box services like file uploads and user management, infrastructure simplification acting as a container orchestrator, reverse proxy, multi-database support with its own ORM, and AI integration with platforms like LlamaCpp and StableDiffusion.

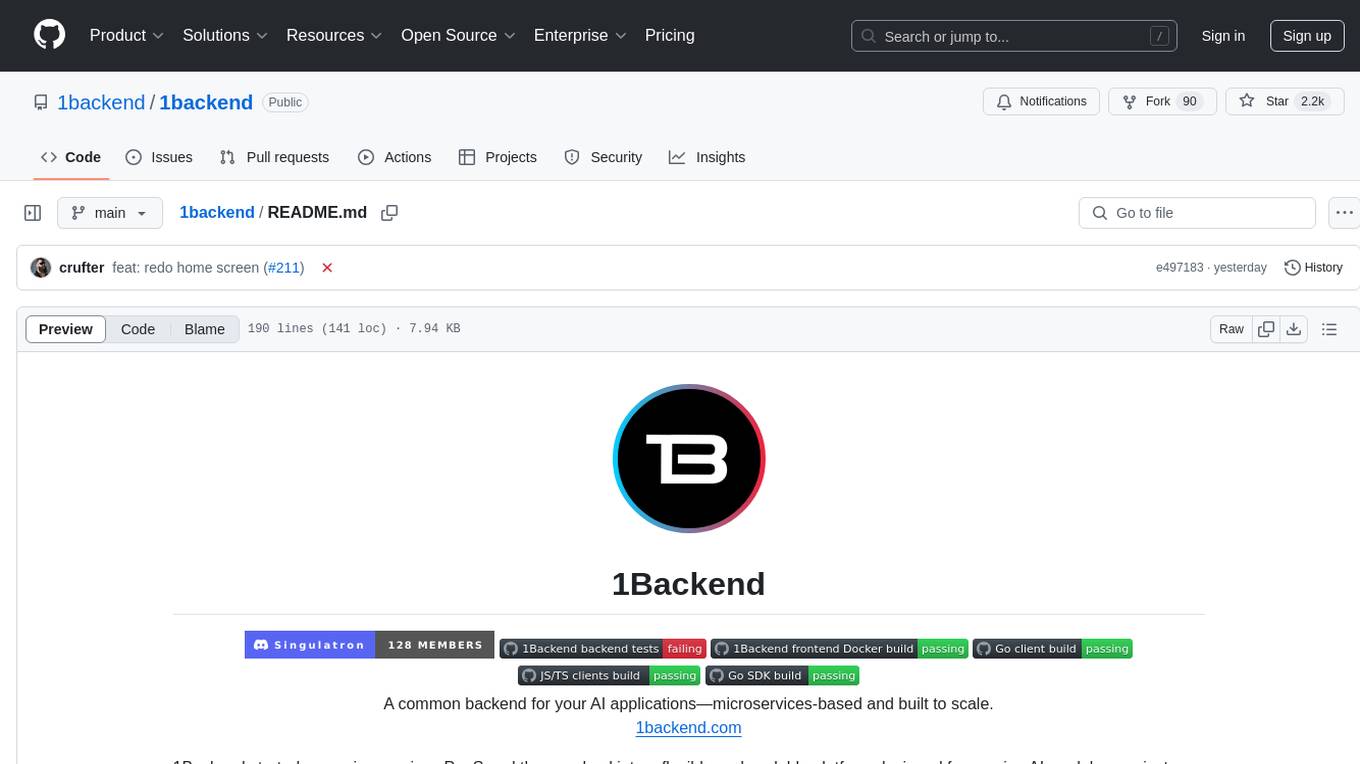

openorch

OpenOrch is a daemon that transforms servers into a powerful development environment, running AI models, containers, and microservices. It serves as a blend of Kubernetes and a language-agnostic backend framework for building applications on fixed-resource setups. Users can deploy AI models and build microservices, managing applications while retaining control over infrastructure and data.

voice-chat-ai

Voice Chat AI is a project that allows users to interact with different AI characters using speech. Users can choose from various characters with unique personalities and voices, and have conversations or role play with them. The project supports OpenAI, xAI, or Ollama language models for chat, and provides text-to-speech synthesis using XTTS, OpenAI TTS, or ElevenLabs. Users can seamlessly integrate visual context into conversations by having the AI analyze their screen. The project offers easy configuration through environment variables and can be run via WebUI or Terminal. It also includes a huge selection of built-in characters for engaging conversations.

pandas-ai

PandasAI is a Python library that makes it easy to ask questions to your data in natural language. It helps you to explore, clean, and analyze your data using generative AI.

openai-cf-workers-ai

OpenAI for Workers AI is a simple, quick, and dirty implementation of OpenAI's API on Cloudflare's new Workers AI platform. It allows developers to use the OpenAI SDKs with the new LLMs without having to rewrite all of their code. The API currently supports completions, chat completions, audio transcription, embeddings, audio translation, and image generation. It is not production ready but will be semi-regularly updated with new features as they roll out to Workers AI.

bia-bob

BIA `bob` is a Jupyter-based assistant for interacting with data using large language models to generate Python code. It can utilize OpenAI's chatGPT, Google's Gemini, Helmholtz' blablador, and Ollama. Users need respective accounts to access these services. Bob can assist in code generation, bug fixing, code documentation, GPU-acceleration, and offers a no-code custom Jupyter Kernel. It provides example notebooks for various tasks like bio-image analysis, model selection, and bug fixing. Installation is recommended via conda/mamba environment. Custom endpoints like blablador and ollama can be used. Google Cloud AI API integration is also supported. The tool is extensible for Python libraries to enhance Bob's functionality.

Bard-API

The Bard API is a Python package that returns responses from Google Bard through the value of a cookie. It is an unofficial API that operates through reverse-engineering, utilizing cookie values to interact with Google Bard for users struggling with frequent authentication problems or unable to authenticate via Google Authentication. The Bard API is not a free service, but rather a tool provided to assist developers with testing certain functionalities due to the delayed development and release of Google Bard's API. It has been designed with a lightweight structure that can easily adapt to the emergence of an official API. Therefore, using it for any other purposes is strongly discouraged. If you have access to a reliable official PaLM-2 API or Google Generative AI API, replace the provided response with the corresponding official code. Check out https://github.com/dsdanielpark/Bard-API/issues/262.

Kuebiko

Kuebiko is a Twitch Chat Bot that reads twitch chat and generates text-to-speech responses using Google Cloud API and OpenAI's GPT-3 text completion model. It allows users to set up their own VTuber AI similar to 'Neuro-Sama'. The project is built with Python and requires setting up various API keys and configurations to enable the bot functionality. Users can customize the voice of their VTuber and route audio using VBAudio Cable. Kuebiko provides a unique way to interact with viewers through chat responses and captions in OBS.

robocorp

Robocorp is a platform that allows users to create, deploy, and operate Python automations and AI actions. It provides an easy way to extend the capabilities of AI agents, assistants, and copilots with custom actions written in Python. Users can create and deploy tools, skills, loaders, and plugins that securely connect any AI Assistant platform to their data and applications. The Robocorp Action Server makes Python scripts compatible with ChatGPT and LangChain by automatically creating and exposing an API based on function declaration, type hints, and docstrings. It simplifies the process of developing and deploying AI actions, enabling users to interact with AI frameworks effortlessly.

fabric

Fabric is an open-source framework for augmenting humans using AI. It provides a structured approach to breaking down problems into individual components and applying AI to them one at a time. Fabric includes a collection of pre-defined Patterns (prompts) that can be used for a variety of tasks, such as extracting the most interesting parts of YouTube videos and podcasts, writing essays, summarizing academic papers, creating AI art prompts, and more. Users can also create their own custom Patterns. Fabric is designed to be easy to use, with a command-line interface and a variety of helper apps. It is also extensible, allowing users to integrate it with their own AI applications and infrastructure.

ray-llm

RayLLM (formerly known as Aviary) is an LLM serving solution that makes it easy to deploy and manage a variety of open source LLMs, built on Ray Serve. It provides an extensive suite of pre-configured open source LLMs, with defaults that work out of the box. RayLLM supports Transformer models hosted on Hugging Face Hub or present on local disk. It simplifies the deployment of multiple LLMs, the addition of new LLMs, and offers unique autoscaling support, including scale-to-zero. RayLLM fully supports multi-GPU & multi-node model deployments and offers high performance features like continuous batching, quantization and streaming. It provides a REST API that is similar to OpenAI's to make it easy to migrate and cross test them. RayLLM supports multiple LLM backends out of the box, including vLLM and TensorRT-LLM.

MITSUHA

OneReality is a virtual waifu/assistant that you can speak to through your mic and it'll speak back to you! It has many features such as: * You can speak to her with a mic * It can speak back to you * Has short-term memory and long-term memory * Can open apps * Smarter than you * Fluent in English, Japanese, Korean, and Chinese * Can control your smart home like Alexa if you set up Tuya (more info in Prerequisites) It is built with Python, Llama-cpp-python, Whisper, SpeechRecognition, PocketSphinx, VITS-fast-fine-tuning, VITS-simple-api, HyperDB, Sentence Transformers, and Tuya Cloud IoT.

actions

Sema4.ai Action Server is a tool that allows users to build semantic actions in Python to connect AI agents with real-world applications. It enables users to create custom actions, skills, loaders, and plugins that securely connect any AI Assistant platform to data and applications. The tool automatically creates and exposes an API based on function declaration, type hints, and docstrings by adding '@action' to Python scripts. It provides an end-to-end stack supporting various connections between AI and user's apps and data, offering ease of use, security, and scalability.

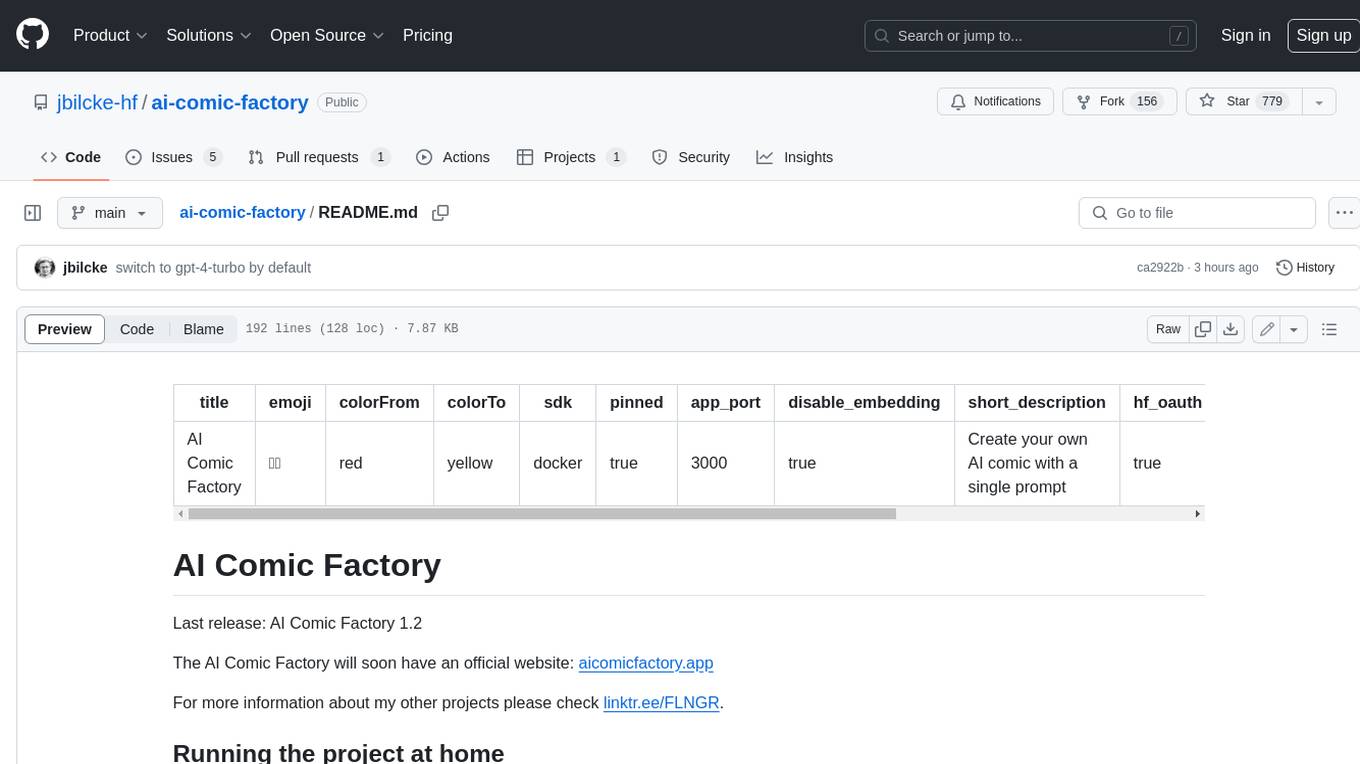

ai-comic-factory

The AI Comic Factory is a tool that allows you to create your own AI comics with a single prompt. It uses a large language model (LLM) to generate the story and dialogue, and a rendering API to generate the panel images. The AI Comic Factory is open-source and can be run on your own website or computer. It is a great tool for anyone who wants to create their own comics, or for anyone who is interested in the potential of AI for storytelling.

PythonAI

PythonAI is an open-source AI Assistant designed for the Raspberry Pi by Kevin McAleer. The project aims to enhance the capabilities of the Raspberry Pi by providing features such as conversation history, a conversation API, a web interface, a skills framework using plugin technology, and an event framework for adding functionality via plugins. The tool utilizes the Vosk offline library for speech-to-text conversion and offers a simple skills framework for easy implementation of new skills. Users can create new skills by adding Python files to the 'skills' folder and updating the 'skills.json' file. PythonAI is designed to be easy to read, maintain, and extend, making it a valuable tool for Raspberry Pi enthusiasts looking to build AI applications.

For similar tasks

core

The Cheshire Cat is a framework for building custom AIs on top of any language model. It provides an API-first approach, making it easy to add a conversational layer to your application. The Cat remembers conversations and documents, and uses them in conversation. It is extensible via plugins, and supports event callbacks, function calling, and conversational forms. The Cat is easy to use, with an admin panel that allows you to chat with the AI, visualize memory and plugins, and adjust settings. It is also production-ready, 100% dockerized, and supports any language model.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.