calfkit-sdk

🐮 The SDK to build event-driven, distributed AI agents on Kafka

Stars: 86

The Calfkit SDK is a Python SDK designed to build event-driven, distributed AI agents. It allows users to compose agents with independent services such as chat, tools, and routing that communicate asynchronously. The SDK enables users to add agent capabilities without coordination, scale each component independently, and stream agent outputs to any downstream system. Calfkit aims to create AI employees that integrate seamlessly into existing systems by providing benefits like loose coupling, horizontal scalability, and event persistence for reliable message delivery.

README:

The SDK to build event-driven, distributed AI agents.

Calfkit lets you compose agents with independent services—chat, tools, routing—that communicate asynchronously. Add agent capabilities without coordination. Scale each component independently. Stream agent outputs to any downstream system.

Agents should work like real teams, with independent, distinct roles, async communication, and the ability to onboard new teammates or tools without restructuring the whole org. Build AI employees that integrate with your existing systems.

```shell
pip install calfkit
```

Building agents like traditional web applications, with tight coupling and synchronous API calls, creates the same scalability problems that plagued early microservices:

- Tight coupling: Changing one tool or agent breaks dependent agents and tools

- Scaling bottlenecks: Since all agents and tools live on one runtime, everything must scale together

- Siloed outputs: Agent and tool outputs stay trapped in your AI layer; streaming them to external systems is not as natural as it is in distributed, event-driven designs

Event-driven architectures provide the solution. Instead of making direct API calls to one another, agents and tools communicate asynchronously through events. Each component runs independently and scales horizontally, and outputs can flow anywhere: CRMs, data warehouses, analytics platforms, other agents, or even more tools.
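To make the contrast with direct API calls concrete, here is a toy, in-process analogy in plain Python. It is not Calfkit's API: an `asyncio.Queue` stands in for a broker topic, and `produce`, `consume`, and the `request-N` events are hypothetical. The producer publishes without knowing who consumes its events, and scaling out means starting more consumers without touching the producer.

```python
import asyncio


async def produce(queue: asyncio.Queue, events: list[str], n_workers: int) -> None:
    """Publish events without knowing who will consume them."""
    for event in events:
        await queue.put(event)
    for _ in range(n_workers):
        await queue.put(None)  # one stop sentinel per consumer


async def consume(name: str, queue: asyncio.Queue, results: list[tuple[str, str]]) -> None:
    """Independent consumer: the producer never calls it directly."""
    while True:
        event = await queue.get()
        if event is None:
            break
        await asyncio.sleep(0)  # stand-in for real work such as an LLM or tool call
        results.append((name, event))


async def main(n_workers: int = 3) -> list[tuple[str, str]]:
    queue: asyncio.Queue = asyncio.Queue()
    results: list[tuple[str, str]] = []
    events = [f"request-{i}" for i in range(10)]
    # Scaling out is just starting more consumers; the producer code is unchanged.
    await asyncio.gather(
        produce(queue, events, n_workers),
        *(consume(f"worker-{w}", queue, results) for w in range(n_workers)),
    )
    return results


if __name__ == "__main__":
    for worker, event in asyncio.run(main()):
        print(f"{worker} handled {event}")
```

With a real broker the queue lives outside the process (Kafka, in Calfkit's case), so producers and consumers can also run, fail, and restart as separate services.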

Calfkit is a Python SDK that builds event-driven agents out of the box. You get the benefits of an asynchronous, distributed system (loose coupling, horizontal scalability, durability) without having to manage event-driven infrastructure and orchestration yourself.

- Distributed agents out of the box: Build event-driven, multi-service agents without writing orchestration code or managing infrastructure

- Add agent capabilities without touching existing code: Deploy new tools as independent services that agents can discover dynamically, with no changes to your agent code

- Scale what you need, when you need it: Chat handling, tool execution, and routing each scale independently based on demand

- Nothing gets lost: Event persistence ensures reliable message delivery and traceability, even during service failures or restarts

- High throughput under pressure: Asynchronous communication decouples requests from processing, so Calfkit agents work through bursty traffic reliably, maximizing throughput

- Real-time responses: Low-latency event processing lets agents react to incoming data as it arrives

- Development team independence: Because of the decoupled design, dev teams can develop and deploy chat, tools, routing, and upstream or downstream dependencies in parallel without cross-team collaboration overhead

- Universal data flow: Decoupling enables data to flow freely in both directions.
  - Downstream, agent outputs can be streamed to any system (CRMs, customer data platforms, warehouses, or even another AI workflow).
  - Upstream, tools can wrap any data source and deploy independently, with no coordination needed.
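The persistence and data-flow points both rest on the log model Kafka uses. The following is a toy, in-memory illustration of that model, not Kafka or Calfkit code; `EventLog` and the group names are hypothetical. Events are appended and retained rather than deleted on read, and each consumer group tracks its own offset, so several downstream systems can read the same stream independently and a consumer that restarts resumes from its last committed offset.

```python
from dataclasses import dataclass, field


@dataclass
class EventLog:
    """Toy append-only log with per-consumer-group offsets (Kafka-style model)."""

    events: list[str] = field(default_factory=list)
    offsets: dict[str, int] = field(default_factory=dict)  # group -> next index to read

    def publish(self, event: str) -> int:
        self.events.append(event)  # retained; reading does not remove it
        return len(self.events) - 1

    def poll(self, group: str, max_events: int = 10) -> list[str]:
        start = self.offsets.get(group, 0)
        return self.events[start : start + max_events]

    def commit(self, group: str, count: int) -> None:
        # Advance the group's offset only after its batch has been processed.
        self.offsets[group] = self.offsets.get(group, 0) + count


if __name__ == "__main__":
    log = EventLog()
    log.publish("agent: meeting booked")
    log.publish("agent: ticket updated")

    crm = log.poll("crm-sync")  # each group reads the full stream
    warehouse = log.poll("warehouse")
    log.commit("crm-sync", len(crm))  # the CRM sync processed its batch
    # The warehouse consumer "crashed" before committing, so after a restart
    # it resumes from its last committed offset and re-reads the same events.
    print(log.poll("crm-sync"), log.poll("warehouse"))
```

Committing only after processing gives at-least-once delivery: a consumer that fails between processing and committing sees those events again, so downstream handlers should tolerate duplicates.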

- Python 3.10 or later

- Docker installed and running (for local testing with a Calfkit broker)

- OpenAI API key (or another OpenAI API-compatible LLM provider)
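A small pre-flight script can confirm the prerequisites above before you start. This is a hypothetical helper, not part of Calfkit. It assumes the key lives in the `OPENAI_API_KEY` environment variable used later in the quickstart, and finding `docker` on `PATH` does not prove the Docker daemon is running.

```python
import os
import shutil
import sys


def preflight() -> dict[str, bool]:
    """Report which quickstart prerequisites are satisfied on this machine."""
    return {
        "python>=3.10": sys.version_info >= (3, 10),
        "docker on PATH": shutil.which("docker") is not None,
        "OPENAI_API_KEY set": bool(os.environ.get("OPENAI_API_KEY")),
    }


if __name__ == "__main__":
    for check, ok in preflight().items():
        print(f"[{'ok' if ok else 'missing'}] {check}")
```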

```shell
pip install calfkit
```

Skip the infrastructure. Calfkit Cloud is a fully managed Kafka service built for Calfkit AI agents and multi-agent teams. There is no server infrastructure to self-host or maintain, and it includes built-in observability and agent-event tracing.

Coming soon. Fill out the interest form →

Calfkit uses Kafka as the event broker. Run the following command to clone the calfkit-broker repo and start a local Kafka broker container:

```shell
git clone https://github.com/calf-ai/calfkit-broker && cd calfkit-broker && make dev-up
```

Once the broker is ready, open a new terminal tab to continue with the quickstart.
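One way to check that the broker is ready is to poll its port until it accepts TCP connections. This sketch assumes the broker listens on `localhost:9092`, the address used by the quickstart code; `wait_for_broker` is a hypothetical helper. An open port shows that something is listening, not that Kafka has finished starting, so treat it as a coarse readiness check.

```python
import socket
import time


def wait_for_broker(host: str = "localhost", port: int = 9092, timeout: float = 30.0) -> bool:
    """Poll until a TCP connection to host:port succeeds or the timeout expires."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            with socket.create_connection((host, port), timeout=1.0):
                return True  # something is accepting connections on host:port
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(0.5)


if __name__ == "__main__":
    print("broker reachable" if wait_for_broker() else "broker not reachable")
```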

Define and deploy a tool as an independent service. Tools are not owned by or coupled to any specific agent—once deployed, any agent in your system can discover and invoke the tool. Deploy once, use everywhere.
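How agents discover a deployed tool is not spelled out here. A common approach in tool-calling frameworks, assumed for illustration, is to derive a JSON-Schema-style description from the function's name, type hints, and docstring, which is the information an LLM needs to decide when and how to call the tool. `describe_tool` is a hypothetical helper, not necessarily what `@agent_tool` does.

```python
import inspect
from typing import Callable, get_type_hints

# Python annotation -> JSON Schema type name; unknown types fall back to "string".
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def describe_tool(fn: Callable) -> dict:
    """Derive a JSON-Schema-style tool description from a function's signature and docstring."""
    hints = get_type_hints(fn)
    sig = inspect.signature(fn)
    properties = {
        name: {"type": _JSON_TYPES.get(hints.get(name), "string")} for name in sig.parameters
    }
    # Parameters without defaults must be supplied by the model.
    required = [n for n, p in sig.parameters.items() if p.default is inspect.Parameter.empty]
    return {
        "name": fn.__name__,
        "description": inspect.getdoc(fn) or "",
        "parameters": {"type": "object", "properties": properties, "required": required},
    }


def get_weather(location: str) -> str:
    """Get the current weather at a location"""
    return f"It's sunny in {location}"


if __name__ == "__main__":
    print(describe_tool(get_weather))
```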

```python
# weather_tool.py
import asyncio

from calfkit.nodes import agent_tool
from calfkit.broker import BrokerClient
from calfkit.runners import NodesService


# Example tool definition
@agent_tool
def get_weather(location: str) -> str:
    """Get the current weather at a location"""
    return f"It's sunny in {location}"


async def main():
    broker_client = BrokerClient(bootstrap_servers="localhost:9092")  # Connect to Kafka broker
    service = NodesService(broker_client)  # Initialize a service instance
    service.register_node(get_weather)  # Register the tool node in the service
    await service.run()  # (Blocking call) Deploy the service to start serving traffic


if __name__ == "__main__":
    asyncio.run(main())
```

Run the file to deploy the tool service:

```shell
python weather_tool.py
```

Deploy the LLM chat node as its own service.

```python
# chat_service.py
import asyncio

from calfkit.nodes import ChatNode
from calfkit.providers import OpenAIModelClient
from calfkit.broker import BrokerClient
from calfkit.runners import NodesService


async def main():
    broker_client = BrokerClient(bootstrap_servers="localhost:9092")  # Connect to Kafka broker
    model_client = OpenAIModelClient(model_name="gpt-5-nano")
    # Inject a model client into the chat node definition so the chat deployment can perform LLM calls
    chat_node = ChatNode(model_client)
    service = NodesService(broker_client)  # Initialize a service instance
    service.register_node(chat_node)  # Register the chat node in the service
    await service.run()  # (Blocking call) Deploy the service to start serving traffic


if __name__ == "__main__":
    asyncio.run(main())
```

Set your OpenAI API key:

```shell
export OPENAI_API_KEY=sk-...
```

Run the file to deploy the chat service:

```shell
python chat_service.py
```

Deploy the agent router that orchestrates chat, tools, and conversation-level memory.
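The source does not describe the router's internals, but the orchestration it mentions usually takes the form of a loop: send the conversation to the chat model, run any tool the model requests, append the result, and repeat until the model answers. The sketch below runs that loop in one process with a scripted stand-in for the LLM. `run_agent`, `ChatReply`, `ToolCall`, and `fake_chat` are hypothetical names, and in a distributed deployment each chat and tool step would presumably be an event sent to a separate service rather than a function call.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class ToolCall:
    name: str
    args: dict


@dataclass
class ChatReply:
    text: Optional[str] = None
    tool_call: Optional[ToolCall] = None


def run_agent(
    chat: Callable[[list[dict]], ChatReply],
    tools: dict[str, Callable[..., str]],
    history: list[dict],
    user_prompt: str,
    max_steps: int = 5,
) -> str:
    """Alternate between the chat model and tools until the model gives a final answer."""
    history.append({"role": "user", "content": user_prompt})
    for _ in range(max_steps):
        reply = chat(history)
        if reply.tool_call is None:
            history.append({"role": "assistant", "content": reply.text})
            return reply.text or ""
        call = reply.tool_call
        result = tools[call.name](**call.args)  # assumed to be a message to a tool service when distributed
        history.append({"role": "tool", "name": call.name, "content": result})
    raise RuntimeError("agent did not finish within max_steps")


def fake_chat(history: list[dict]) -> ChatReply:
    # Scripted stand-in for the LLM: request the tool once, then answer from its output.
    last = history[-1]
    if last["role"] == "tool":
        return ChatReply(text=f"Result: {last['content']}")
    return ChatReply(tool_call=ToolCall("get_weather", {"location": "Tokyo"}))


def get_weather(location: str) -> str:
    return f"It's sunny in {location}"


if __name__ == "__main__":
    history: list[dict] = []  # plays the role of the message history store
    print(run_agent(fake_chat, {"get_weather": get_weather}, history, "Weather in Tokyo?"))
```

Keeping `history` outside the loop mirrors the router's conversation-level memory: the same list can be passed back in on the next user turn.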

```python
# agent_router_service.py
import asyncio

from calfkit.nodes import AgentRouterNode, ChatNode
from calfkit.stores import InMemoryMessageHistoryStore
from calfkit.broker import BrokerClient
from calfkit.runners import NodesService

from weather_tool import get_weather  # Import the tool; the tool definition is reusable


async def main():
    broker_client = BrokerClient(bootstrap_servers="localhost:9092")  # Connect to Kafka broker
    router_node = AgentRouterNode(
        chat_node=ChatNode(),  # Provide the chat node definition for the router to orchestrate the nodes
        tool_nodes=[get_weather],
        system_prompt="You are a helpful assistant",
        message_history_store=InMemoryMessageHistoryStore(),  # Stores messages in-memory in the deployment runtime
    )
    service = NodesService(broker_client)  # Initialize a service instance
    service.register_node(router_node)  # Register the router node in the service
    await service.run()  # (Blocking call) Deploy the service to start serving traffic


if __name__ == "__main__":
    asyncio.run(main())
```

Run the file to deploy the agent router service:

```shell
python agent_router_service.py
```

Send a request and receive the response.

When invoking an already-deployed agent, use the RouterServiceClient. The node is just a configuration object, so you don't need to redefine the deployment parameters.
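Invoking a deployed agent over a broker is an asynchronous request/reply exchange rather than a direct function call. The source does not say how `RouterServiceClient` implements it; a common pattern, assumed here, is to tag each request with a correlation ID and resolve a waiting future when a reply carrying that ID arrives. In this sketch an `asyncio.Queue` stands in for Kafka topics, and `ReplyRouter`, `invoke`, and `fake_agent` are hypothetical names.

```python
import asyncio
import uuid


class ReplyRouter:
    """Match replies on a shared reply channel to the requests that caused them."""

    def __init__(self) -> None:
        self._pending: dict[str, asyncio.Future] = {}

    def new_request(self) -> tuple[str, asyncio.Future]:
        correlation_id = str(uuid.uuid4())
        future = asyncio.get_running_loop().create_future()
        self._pending[correlation_id] = future
        return correlation_id, future

    def on_reply(self, correlation_id: str, payload: str) -> None:
        future = self._pending.pop(correlation_id, None)
        if future is not None and not future.done():
            future.set_result(payload)  # replies with unknown IDs are ignored


async def fake_agent(requests: asyncio.Queue, router: ReplyRouter) -> None:
    # Stand-in for the deployed agent: read one request, reply with the same ID.
    correlation_id, prompt = await requests.get()
    await asyncio.sleep(0.01)
    router.on_reply(correlation_id, f"answer to: {prompt}")


async def invoke(requests: asyncio.Queue, router: ReplyRouter, prompt: str) -> str:
    correlation_id, future = router.new_request()
    await requests.put((correlation_id, prompt))
    return await asyncio.wait_for(future, timeout=5.0)


async def main() -> str:
    requests: asyncio.Queue = asyncio.Queue()
    router = ReplyRouter()
    agent_task = asyncio.create_task(fake_agent(requests, router))
    reply = await invoke(requests, router, "What's the weather in Tokyo?")
    await agent_task
    return reply


if __name__ == "__main__":
    print(asyncio.run(main()))
```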

```python
# client.py
import asyncio

from calfkit.nodes import AgentRouterNode
from calfkit.broker import BrokerClient
from calfkit.runners import RouterServiceClient


async def main():
    broker_client = BrokerClient(bootstrap_servers="localhost:9092")  # Connect to Kafka broker

    # Thin client - no deployment parameters needed
    router_node = AgentRouterNode()
    client = RouterServiceClient(broker_client, router_node)

    # Invoke and wait for response
    response = await client.invoke(user_prompt="What's the weather in Tokyo?")
    final_msg = await response.get_final_response()
    print(f"Assistant: {final_msg.text}")


if __name__ == "__main__":
    asyncio.run(main())
```

Run the file to invoke the agent:

```shell
python client.py
```

The RouterServiceClient handles ephemeral Kafka communication and cleanup automatically. You can also stream intermediate messages:

```python
response = await client.invoke(user_prompt="What's the weather in Tokyo?")

# Stream all messages (tool calls, intermediate responses, etc.)
async for message in response.messages_stream():
    print(message)
```

Clients can override the system prompt and restrict available tools at invocation time without redeploying:

```python
from weather_tool import get_weather

# Client with runtime patches
router_node = AgentRouterNode(
    system_prompt="You are an assistant with no tools :(",  # Override the deployed system prompt
    tool_nodes=[],  # Patch in any subset of the deployed agent's set of tools
)
client = RouterServiceClient(broker_client, router_node)
response = await client.invoke(user_prompt="Weather in Tokyo?")
```

This lets different clients customize agent behavior per request. Tool patching is currently limited to subsets of the tools configured in the deployed router.

Scalable agent teams must progress beyond brittle, tightly coupled, synchronous coordination. This means embracing event-driven, asynchronous communication patterns between agents and their dependencies.

If you found this project interesting or useful, please consider:

- ⭐ Starring the repository — it helps others discover it!

- 🐛 Reporting issues

- 🔀 Submitting PRs

This project is licensed under the Apache License 2.0. See the LICENSE file for details.


Alternative AI tools for calfkit-sdk

Similar Open Source Tools


Upsonic

Upsonic offers a cutting-edge enterprise-ready framework for orchestrating LLM calls, agents, and computer use to complete tasks cost-effectively. It provides reliable systems, scalability, and a task-oriented structure for real-world cases. Key features include production-ready scalability, task-centric design, MCP server support, tool-calling server, computer use integration, and easy addition of custom tools. The framework supports client-server architecture and allows seamless deployment on AWS, GCP, or locally using Docker.

agents

Polymarket Agents is a developer framework and set of utilities for building AI agents to trade autonomously on Polymarket. It integrates with Polymarket API, provides AI agent utilities for prediction markets, supports local and remote RAG, sources data from various services, and offers comprehensive LLM tools for prompt engineering. The architecture features modular components like APIs and scripts for managing local environments, server set-up, and CLI for end-user commands.

agentok

Agentok Studio is a tool built upon AG2, a powerful agent framework from Microsoft, offering intuitive visual tools to streamline the creation and management of complex agent-based workflows. It simplifies the process for creators and developers by generating native Python code with minimal dependencies, enabling users to create self-contained code that can be executed anywhere. The tool is currently under development and not recommended for production use, but contributions are welcome from the community to enhance its capabilities and functionalities.

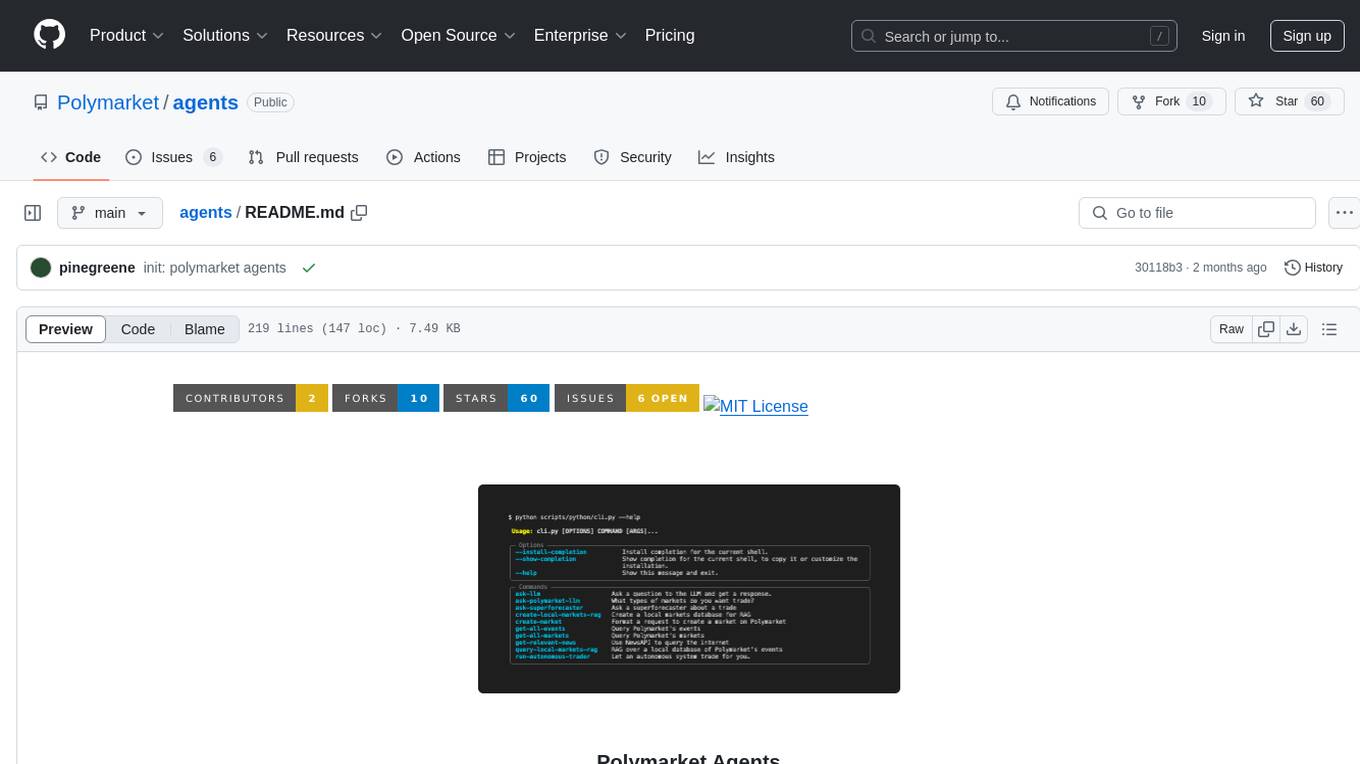

TapeAgents

TapeAgents is a framework that leverages a structured, replayable log of the agent session to facilitate all stages of the LLM Agent development lifecycle. The agent reasons by processing the tape and the LLM output to produce new thoughts, actions, control flow steps, and append them to the tape. Key features include building agents as low-level state machines or high-level multi-agent team configurations, debugging agents with TapeAgent studio or TapeBrowser apps, serving agents with response streaming, and optimizing agent configurations using successful tapes. The Tape-centric design of TapeAgents provides ultimate flexibility in project development, allowing access to tapes for making prompts, generating next steps, and controlling agent behavior.

project_alice

Alice is an agentic workflow framework that integrates task execution and intelligent chat capabilities. It provides a flexible environment for creating, managing, and deploying AI agents for various purposes, leveraging a microservices architecture with MongoDB for data persistence. The framework consists of components like APIs, agents, tasks, and chats that interact to produce outputs through files, messages, task results, and URL references. Users can create, test, and deploy agentic solutions in a human-language framework, making it easy to engage with by both users and agents. The tool offers an open-source option, user management, flexible model deployment, and programmatic access to tasks and chats.

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

amazon-bedrock-agentcore-samples

Amazon Bedrock AgentCore Samples repository provides examples and tutorials to deploy and operate AI agents securely at scale using any framework and model. It is framework-agnostic and model-agnostic, allowing flexibility in deployment. The repository includes tutorials, end-to-end applications, integration guides, deployment automation, and full-stack reference applications for developers to understand and implement Amazon Bedrock AgentCore capabilities into their applications.

vector-vein

VectorVein is a no-code AI workflow software inspired by LangChain and langflow, aiming to combine the powerful capabilities of large language models and enable users to achieve intelligent and automated daily workflows through simple drag-and-drop actions. Users can create powerful workflows without the need for programming, automating all tasks with ease. The software allows users to define inputs, outputs, and processing methods to create customized workflow processes for various tasks such as translation, mind mapping, summarizing web articles, and automatic categorization of customer reviews.

Revornix

Revornix is an information management tool designed for the AI era. It allows users to conveniently integrate all visible information and generates comprehensive reports at specific times. The tool offers cross-platform availability, all-in-one content aggregation, document transformation & vectorized storage, native multi-tenancy, localization & open-source features, smart assistant & built-in MCP, seamless LLM integration, and multilingual & responsive experience for users.

qdrant

Qdrant is a vector similarity search engine and vector database. It is written in Rust, which makes it fast and reliable even under high load. Qdrant can be used for a variety of applications, including semantic search, image search, product recommendations, chatbots, and anomaly detection. Its features include payload storage and filtering, hybrid search with sparse vectors, vector quantization and on-disk storage, distributed deployment, query planning, payload indexes, SIMD hardware acceleration, async I/O, and write-ahead logging. Qdrant is available as a fully managed cloud service or as open-source software that can be deployed on-premises.

litlytics

LitLytics is an affordable analytics platform that uses LLMs for automated data analysis. It simplifies analytics for teams without data scientists, generates custom pipelines, and allows customization. It keeps data processing costs low, is scalable and flexible, and works with CSV, PDF, and plain-text data.

mattermost-plugin-agents

The Mattermost Agents Plugin integrates AI capabilities directly into your Mattermost workspace, allowing users to run local LLMs on their infrastructure or connect to cloud providers. It offers multiple AI assistants with specialized personalities, thread and channel summarization, action item extraction, meeting transcription, semantic search, smart reactions, direct conversations with AI assistants, and flexible LLM support. The plugin comes with comprehensive documentation, installation instructions, system requirements, and development guidelines for users to interact with AI features and configure LLM providers.

llm-d-inference-sim

The `llm-d-inference-sim` is a lightweight, configurable, and real-time simulator designed to mimic the behavior of vLLM without the need for GPUs or running heavy models. It operates as an OpenAI-compliant server, allowing developers to test clients, schedulers, and infrastructure using realistic request-response cycles, token streaming, and latency patterns. The simulator offers modes of operation, response generation from predefined text or real datasets, latency simulation, tokenization options, LoRA management, KV cache simulation, failure injection, and deployment options for standalone or Kubernetes testing. It supports a subset of standard vLLM Prometheus metrics for observability.

cosdata

Cosdata is a cutting-edge AI data platform designed to power the next generation search pipelines. It features immutability, version control, and excels in semantic search, structured knowledge graphs, hybrid search capabilities, real-time search at scale, and ML pipeline integration. The platform is customizable, scalable, efficient, enterprise-grade, easy to use, and can manage multi-modal data. It offers high performance, indexing, low latency, and high requests per second. Cosdata is designed to meet the demands of modern search applications, empowering businesses to harness the full potential of their data.

AntSK

AntSK is an AI knowledge base/agent built with .Net8+Blazor+SemanticKernel. It features a semantic kernel for accurate natural language processing, a memory kernel for continuous learning and knowledge storage, a knowledge base for importing and querying knowledge from various document formats, a text-to-image generator integrated with StableDiffusion, GPTs generation for creating personalized GPT models, API interfaces for integrating AntSK into other applications, an open API plugin system for extending functionality, a .Net plugin system for integrating business functions, real-time information retrieval from the internet, model management for adapting and managing different models from different vendors, support for domestic models and databases for operation in a trusted environment, and planned model fine-tuning based on llamafactory.


For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud-native AI gateway written in Go. It currently provides native support for OpenAI, Anthropic, Azure OpenAI, and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any production LLM use case. Example use cases include setting LLM usage limits for users on different pricing tiers; tracking LLM usage per user and per organization; blocking or redacting requests that contain PII; improving LLM reliability with failovers, retries, and caching; and distributing API keys with rate limits and cost limits for internal development, production use, or students.


griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.