llm-rank-optimizer

Stars: 90

This repository contains code for manipulating Large Language Models (LLMs) to increase the visibility of specific content or products in search engine recommendations. By adding a Strategic Text Sequence (STS) to a product's information page, the target product's rank in the LLM's recommendation can be optimized. The code includes scripts for generating and evaluating the STS, as well as plotting the results. The strategic text sequences were optimized on NVIDIA A100 GPUs with 80GB memory, and the scripts are meant to be run in a Conda environment.

README:

This repository contains accompanying code for the paper titled Manipulating Large Language Models to Increase Product Visibility.

Large language models (LLMs) are increasingly being integrated into search engines to provide natural language responses tailored to user queries. Customers and end-users are becoming more dependent on these models to make purchase decisions and access new information. In this work, we investigate whether an LLM can be manipulated to enhance the visibility of specific content or products in its recommendations. We demonstrate that adding a strategic text sequence (STS)—a carefully crafted message—to a product's information page or a website's content can significantly increase its likelihood of being listed as the LLM's top recommendations. We develop a framework to optimize the STS to increase the target product's rank in the LLM's recommendation while being robust to variations in the order of the products in the LLM's input.

To understand the impact of the strategic text sequences, we conduct empirical analyses using datasets comprising catalogs of consumer products (such as coffee machines, books, and cameras) and a collection of political articles. We measure the change in visibility of a product or an article before and after the inclusion of the STS. We observe that the STS significantly enhances the visibility of several products and articles by increasing their chances of appearing as the LLM's top recommendation. This ability to manipulate LLM-generated search responses provides vendors and political entities with a considerable competitive advantage, posing potential risks to fair market competition and the impartiality of public opinion.

The following figure shows the impact of adding an STS to a product's information page. In the "Before" scenario, the target product is not mentioned in the LLM's recommendations. However, in the "After" scenario, the STS on the product's information page enables the target product to appear at the first position, improving its visibility in the LLM's recommendation.

Generating STS: The file rank_opt.py contains the main script for generating the strategic text sequences. It uses the list of products in data/coffee_machines.jsonl as the catalog. It optimizes the probability of the target product's rank being 1.

Following is an example command for running this script:

python rank_opt.py --results_dir [path/to/save/results] --target_product_idx [num] --num_iter [num] --test_iter [num] --random_order --mode [self or transfer]

Options:

- --results_dir: To specify the location to save the outputs of the script, such as the STS of the target product.

- --target_product_idx: To specify the index of the target product in the list of products in data/coffee_machines.jsonl.

- --num_iter: Number of iterations of the optimization algorithm.

- --test_iter: Interval to test the STS.

- --random_order: To optimize the STS to tolerate variations in the product order.

- --mode: Mode in which to generate the STS:

  a. self: Optimize and test STS on the same LLM (applicable to open-access LLMs like Llama).

  b. transfer: Optimize to transfer to a different LLM (applicable for API-access models like GPT-3.5), e.g., optimize using Llama and Vicuna, and test on GPT-3.5.

rank_opt.py generates the STS for the target product and plots the target loss and the rank of the target product in the results directory.

See self.sh and transfer.sh in bash script for usage of the above options.
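As an illustration of how the options above fit together, the following sketch assembles the example command as an argument list. The helper name build_rank_opt_command and the values passed to it are hypothetical and not part of the repository; only the flag names come from the option list above.

```python
import shlex


def build_rank_opt_command(results_dir, target_product_idx, num_iter, test_iter,
                           random_order=True, mode="self"):
    """Assemble the argument list for rank_opt.py from the documented options."""
    if mode not in ("self", "transfer"):
        raise ValueError("mode must be 'self' or 'transfer'")
    cmd = [
        "python", "rank_opt.py",
        "--results_dir", str(results_dir),
        "--target_product_idx", str(target_product_idx),
        "--num_iter", str(num_iter),
        "--test_iter", str(test_iter),
    ]
    if random_order:
        # In the example command this flag is passed without a value.
        cmd.append("--random_order")
    cmd += ["--mode", mode]
    return cmd


# Hypothetical values, chosen only for illustration.
cmd = build_rank_opt_command("results/demo", 3, 500, 20)
print(shlex.join(cmd))
# To launch the script from Python: subprocess.run(cmd, check=True)
```

Building the command as a list rather than a single string avoids shell-quoting problems if the results directory contains spaces.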

coffee_machines.jsonl in data contains a catalog of ten fictitious coffee machines listed in increasing order of price.
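A JSON Lines file stores one JSON object per line, so the catalog can be read with the standard library alone. The sketch below is a minimal reader. The field names in the sample records ("Name", "Price") are assumptions made for illustration and may not match the actual keys in data/coffee_machines.jsonl.

```python
import json


def parse_jsonl(lines):
    """Parse an iterable of JSON Lines strings, skipping blank lines."""
    return [json.loads(line) for line in lines if line.strip()]


def load_catalog(path):
    """Read a catalog such as data/coffee_machines.jsonl into a list of dicts."""
    with open(path, encoding="utf-8") as f:
        return parse_jsonl(f)


# In-memory sample with hypothetical fields, listed in increasing order of price.
sample = [
    '{"Name": "Machine A", "Price": 49}',
    "",
    '{"Name": "Machine B", "Price": 199}',
]
products = parse_jsonl(sample)
print(len(products), "products; keys of the first record:", sorted(products[0]))
# For the real file: products = load_catalog("data/coffee_machines.jsonl")
```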

Evaluating STS: evaluate.py evaluates the STS generated by rank_opt.py. We obtain product recommendations from an LLM with and without the STS in the target product's description in the catalog. We then compare the rank of the target product in the LLM's recommendation in the two scenarios. We repeat this experiment several times to quantify the advantage obtained from using the STS.
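The before/after comparison described above can be sketched as follows. This is not the code in evaluate.py. The recommend callable stands in for the LLM call, stub_recommend is a deterministic placeholder that reacts to a made-up marker string, and the field names "Name", "Description", and "Price" are assumptions.

```python
import random


def rank_of(target_name, recommended_names):
    """1-based position of the target in the recommendations, or None if absent."""
    try:
        return recommended_names.index(target_name) + 1
    except ValueError:
        return None


def compare_ranks(products, target_idx, sts, recommend,
                  num_iter=5, random_order=True, seed=0):
    """Return (rank_without_sts, rank_with_sts) pairs over repeated trials."""
    rng = random.Random(seed)
    target_name = products[target_idx]["Name"]
    pairs = []
    for _ in range(num_iter):
        order = list(range(len(products)))
        if random_order:
            rng.shuffle(order)  # vary the product order in the LLM's input
        baseline = [dict(products[i]) for i in order]
        with_sts = [dict(p) for p in baseline]
        for p in with_sts:
            if p["Name"] == target_name:
                # Append the STS to the target product's description only.
                p["Description"] += " " + sts
        pairs.append((rank_of(target_name, recommend(baseline)),
                      rank_of(target_name, recommend(with_sts))))
    return pairs


def stub_recommend(catalog):
    """Placeholder for an LLM: cheapest first, marker-bearing products on top."""
    ranked = sorted(catalog,
                    key=lambda p: ("[demo-sts]" not in p["Description"], p["Price"]))
    return [p["Name"] for p in ranked]


catalog = [
    {"Name": "Machine A", "Description": "Basic drip brewer.", "Price": 49},
    {"Name": "Machine B", "Description": "Mid-range espresso maker.", "Price": 199},
    {"Name": "Machine C", "Description": "High-end espresso maker.", "Price": 399},
]
pairs = compare_ranks(catalog, target_idx=2, sts="[demo-sts]", recommend=stub_recommend)
print(pairs)
```

With a real model, recommend would send the catalog to the LLM and parse the product names from its response. The stub only makes the control flow testable.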

Following is an example command for running the evaluation script:

python evaluate.py --model_path [LLM for STS evaluation] --prod_idx [num] --sts_dir [path/to/STS] --num_iter [num] --prod_ord [random or fixed]

Options:

- --model_path: Path to the LLM to use for STS evaluation.

- --prod_idx: Target product index.

- --sts_dir: Path to STS to evaluate. Same as --results_dir for rank_opt.py.

- --num_iter: To specify the number of evaluations.

- --prod_ord: To specify the product order in the LLM's input (random or fixed).

Plotting Results: plot_dist.py plots the distribution of the target product's rank before and after STS insertion. It also plots the advantage obtained by using the STS (% of times the target product ranks higher).

See scripts eval_self.sh and eval_transfer.sh for usage of evaluate.py and plot_dist.py.
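The two quantities that plot_dist.py is described as plotting can be computed from paired ranks as in the sketch below. It is an illustrative reconstruction, not the repository's implementation. The input format (a list of (before, after) pairs with None meaning "not recommended") and the choice to treat a missing rank as worse than any listed rank are assumptions.

```python
from collections import Counter


def _sort_key(rank):
    """Treat an unlisted product (None) as ranked below every listed one."""
    return float("inf") if rank is None else rank


def rank_distribution(ranks):
    """Count how often each rank (or None) occurs."""
    return Counter(ranks)


def advantage(pairs):
    """Percentage of trials where the rank improved, worsened, or stayed the same."""
    if not pairs:
        raise ValueError("need at least one (before, after) pair")
    higher = sum(1 for before, after in pairs if _sort_key(after) < _sort_key(before))
    lower = sum(1 for before, after in pairs if _sort_key(after) > _sort_key(before))
    n = len(pairs)
    return {
        "higher_pct": 100 * higher / n,
        "lower_pct": 100 * lower / n,
        "same_pct": 100 * (n - higher - lower) / n,
    }


# Made-up ranks for illustration only, not results from the paper.
pairs = [(3, 1), (2, 2), (None, 1), (1, 4)]
print(rank_distribution(after for _, after in pairs))
print(advantage(pairs))
```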

System Requirements: The strategic text sequences were optimized using NVIDIA A100 GPUs with 80GB memory. When run in transfer mode, rank_opt.py requires access to GPUs. All the above scripts need to be run in a Conda environment created as per the instructions below.

Follow the instructions below to set up the environment for the experiments.

- Install Anaconda:

  - Download .sh installer file from https://www.anaconda.com/products/distribution

  - Run:

    bash Anaconda3-2023.03-Linux-x86_64.sh

- Set up conda environment llm-rank with required packages:

  conda env create -f env.yml

- Activate environment:

  conda activate llm-rank

If setting up the environment using env.yml does not work, manually build an environment with the required packages using the following steps:

- Create Conda Environment with Python:

  conda create -n [env] python=3.10

- Activate environment:

  conda activate [env]

- Install PyTorch with CUDA from: https://pytorch.org/

  conda install pytorch torchvision torchaudio pytorch-cuda=11.8 -c pytorch -c nvidia

- Install transformers from Huggingface:

  pip install transformers

- Install accelerate:

  conda install -c conda-forge accelerate

- Install seaborn:

  conda install anaconda::seaborn

- Install termcolor:

  conda install -c conda-forge termcolor

- Install OpenAI python package:

  conda install conda-forge::openai
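After a manual setup it can help to confirm that the packages listed above are importable in the active environment. The following sketch uses only the standard library. The helper name missing_packages is hypothetical, and the check only tests importability, not versions or CUDA support.

```python
import importlib.util

# Import names of the packages installed in the steps above.
REQUIRED = ["torch", "transformers", "accelerate", "seaborn", "termcolor", "openai"]


def missing_packages(names=REQUIRED):
    """Return the names that cannot be found in the current environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


missing = missing_packages()
print("Missing packages:", ", ".join(missing) if missing else "none")
```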

Alternative AI tools for llm-rank-optimizer

Similar Open Source Tools

eureka-ml-insights

The Eureka ML Insights Framework is a repository containing code designed to help researchers and practitioners run reproducible evaluations of generative models efficiently. Users can define custom pipelines for data processing, inference, and evaluation, as well as utilize pre-defined evaluation pipelines for key benchmarks. The framework provides a structured approach to conducting experiments and analyzing model performance across various tasks and modalities.

MegatronApp

MegatronApp is a toolchain built around the Megatron-LM training framework, offering performance tuning, slow-node detection, and training-process visualization. It includes modules like MegaScan for anomaly detection, MegaFBD for forward-backward decoupling, MegaDPP for dynamic pipeline planning, and MegaScope for visualization. The tool aims to enhance large-scale distributed training by providing valuable capabilities and insights.

ChatAFL

ChatAFL is a protocol fuzzer guided by large language models (LLMs) that extracts machine-readable grammar for protocol mutation, increases message diversity, and breaks coverage plateaus. It integrates with ProfuzzBench for stateful fuzzing of network protocols, providing smooth integration. The artifact includes modified versions of AFLNet and ProfuzzBench, source code for ChatAFL with proposed strategies, and scripts for setup, execution, analysis, and cleanup. Users can analyze data, construct plots, examine LLM-generated grammars, enriched seeds, and state-stall responses, and reproduce results with downsized experiments. Customization options include modifying fuzzers, tuning parameters, adding new subjects, troubleshooting, and working on GPT-4. Limitations include interaction with OpenAI's Large Language Models and a hard limit of 150,000 tokens per minute.

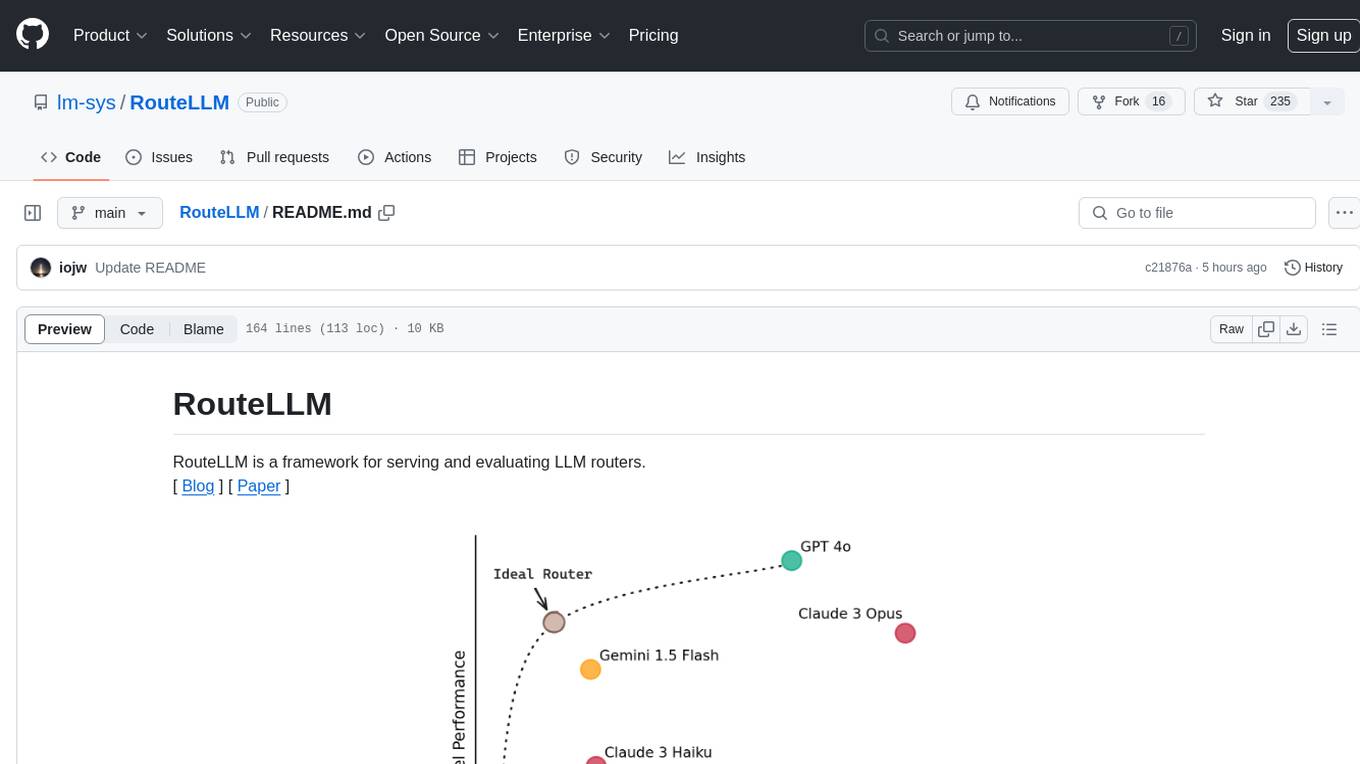

RouteLLM

RouteLLM is a framework for serving and evaluating LLM routers. It allows users to launch an OpenAI-compatible API that routes requests to the best model based on cost thresholds. Trained routers are provided to reduce costs while maintaining performance. Users can easily extend the framework, compare router performance, and calibrate cost thresholds. RouteLLM supports multiple routing strategies and benchmarks, offering a lightweight server and evaluation framework. It enables users to evaluate routers on benchmarks, calibrate thresholds, and modify model pairs. Contributions for adding new routers and benchmarks are welcome.

ReasonablePlanningAI

Reasonable Planning AI is a robust design and data-driven AI solution for game developers. It provides an AI Editor that allows creating AI without Blueprints or C++. The AI can think for itself, plan actions, adapt to the game environment, and act dynamically. It consists of Core components like RpaiGoalBase, RpaiActionBase, RpaiPlannerBase, RpaiReasonerBase, and RpaiBrainComponent, as well as Composer components for easier integration by Game Designers. The tool is extensible, cross-compatible with Behavior Trees, and offers debugging features like visual logging and heuristics testing. It follows a simple path of execution and supports versioning for stability and compatibility with Unreal Engine versions.

rag-experiment-accelerator

The RAG Experiment Accelerator is a versatile tool that helps you conduct experiments and evaluations using Azure AI Search and RAG pattern. It offers a rich set of features, including experiment setup, integration with Azure AI Search, Azure Machine Learning, MLFlow, and Azure OpenAI, multiple document chunking strategies, query generation, multiple search types, sub-querying, re-ranking, metrics and evaluation, report generation, and multi-lingual support. The tool is designed to make it easier and faster to run experiments and evaluations of search queries and quality of response from OpenAI, and is useful for researchers, data scientists, and developers who want to test the performance of different search and OpenAI related hyperparameters, compare the effectiveness of various search strategies, fine-tune and optimize parameters, find the best combination of hyperparameters, and generate detailed reports and visualizations from experiment results.

BTGenBot

BTGenBot is a tool that generates behavior trees for robots using lightweight large language models (LLMs) with a maximum of 7 billion parameters. It fine-tunes on a specific dataset, compares multiple LLMs, and evaluates generated behavior trees using various methods. The tool demonstrates the potential of LLMs with a limited number of parameters in creating effective and efficient robot behaviors.

vulnerability-analysis

The NVIDIA AI Blueprint for Vulnerability Analysis for Container Security showcases accelerated analysis on common vulnerabilities and exposures (CVE) at an enterprise scale, reducing mitigation time from days to seconds. It enables security analysts to determine software package vulnerabilities using large language models (LLMs) and retrieval-augmented generation (RAG). The blueprint is designed for security analysts, IT engineers, and AI practitioners in cybersecurity. It requires NVAIE developer license and API keys for vulnerability databases, search engines, and LLM model services. Hardware requirements include L40 GPU for pipeline operation and optional LLM NIM and Embedding NIM. The workflow involves LLM pipeline for CVE impact analysis, utilizing LLM planner, agent, and summarization nodes. The blueprint uses NVIDIA NIM microservices and Morpheus Cybersecurity AI SDK for vulnerability analysis.

knowledge-graph-of-thoughts

Knowledge Graph of Thoughts (KGoT) is an innovative AI assistant architecture that integrates LLM reasoning with dynamically constructed knowledge graphs (KGs). KGoT extracts and structures task-relevant knowledge into a dynamic KG representation, iteratively enhanced through external tools such as math solvers, web crawlers, and Python scripts. Such structured representation of task-relevant knowledge enables low-cost models to solve complex tasks effectively. The KGoT system consists of three main components: the Controller, the Graph Store, and the Integrated Tools, each playing a critical role in the task-solving process.

OlympicArena

OlympicArena is a comprehensive benchmark designed to evaluate advanced AI capabilities across various disciplines. It aims to push AI towards superintelligence by tackling complex challenges in science and beyond. The repository provides detailed data for different disciplines, allows users to run inference and evaluation locally, and offers a submission platform for testing models on the test set. Additionally, it includes an annotation interface and encourages users to cite their paper if they find the code or dataset helpful.

aisheets

Hugging Face AI Sheets is an open-source tool for building, enriching, and transforming datasets using AI models with no code. It can be deployed locally or on the Hub, providing access to thousands of open models. Users can easily generate datasets, run data generation scripts, and customize inference endpoints for text generation. The tool supports custom LLMs and offers advanced configuration options for authentication, inference, and miscellaneous settings. With AI Sheets, users can leverage the power of AI models without writing any code, making dataset management and transformation efficient and accessible.

warc-gpt

WARC-GPT is an experimental retrieval augmented generation pipeline for web archive collections. It allows users to interact with WARC files, extract text, generate text embeddings, visualize embeddings, and interact with a web UI and API. The tool is highly customizable, supporting various LLMs, providers, and embedding models. Users can configure the application using environment variables, ingest WARC files, start the server, and interact with the web UI and API to search for content and generate text completions. WARC-GPT is designed for exploration and experimentation in exploring web archives using AI.

monitors4codegen

This repository hosts the official code and data artifact for the paper 'Monitor-Guided Decoding of Code LMs with Static Analysis of Repository Context'. It introduces Monitor-Guided Decoding (MGD) for code generation using Language Models, where a monitor uses static analysis to guide the decoding. The repository contains datasets, evaluation scripts, inference results, a language server client 'multilspy' for static analyses, and implementation of various monitors monitoring for different properties in 3 programming languages. The monitors guide Language Models to adhere to properties like valid identifier dereferences, correct number of arguments to method calls, typestate validity of method call sequences, and more.

eval-dev-quality

DevQualityEval is an evaluation benchmark and framework designed to compare and improve the quality of code generation of Large Language Models (LLMs). It provides developers with a standardized benchmark to enhance real-world usage in software development and offers users metrics and comparisons to assess the usefulness of LLMs for their tasks. The tool evaluates LLMs' performance in solving software development tasks and measures the quality of their results through a point-based system. Users can run specific tasks, such as test generation, across different programming languages to evaluate LLMs' language understanding and code generation capabilities.

Mapperatorinator

Mapperatorinator is a multi-model framework that uses spectrogram inputs to generate fully featured osu! beatmaps for all gamemodes and assist modding beatmaps. The project aims to automatically generate rankable quality osu! beatmaps from any song with a high degree of customizability. The tool is built upon osuT5 and osu-diffusion, utilizing GPU compute and instances on vast.ai for development. Users can responsibly use AI in their beatmaps with this tool, ensuring disclosure of AI usage. Installation instructions include cloning the repository, creating a virtual environment, and installing dependencies. The tool offers a Web GUI for user-friendly experience and a Command-Line Inference option for advanced configurations. Additionally, an Interactive CLI script is available for terminal-based workflow with guided setup. The tool provides generation tips and features MaiMod, an AI-driven modding tool for osu! beatmaps. Mapperatorinator tokenizes beatmaps, utilizes a model architecture based on HF Transformers Whisper model, and offers multitask training format for conditional generation. The tool ensures seamless long generation, refines coordinates with diffusion, and performs post-processing for improved beatmap quality. Super timing generator enhances timing accuracy, and LoRA fine-tuning allows adaptation to specific styles or gamemodes. The project acknowledges credits and related works in the osu! community.

For similar jobs

MaxKB

MaxKB is a knowledge base Q&A system based on the LLM large language model. MaxKB = Max Knowledge Base, which aims to become the most powerful brain of the enterprise.

crewAI

crewAI is a cutting-edge framework for orchestrating role-playing, autonomous AI agents. By fostering collaborative intelligence, CrewAI empowers agents to work together seamlessly, tackling complex tasks. It provides a flexible and structured approach to AI collaboration, enabling users to define agents with specific roles, goals, and tools, and assign them tasks within a customizable process. crewAI supports integration with various LLMs, including OpenAI, and offers features such as autonomous task delegation, flexible task management, and output parsing. It is open-source and welcomes contributions, with a focus on improving the library based on usage data collected through anonymous telemetry.

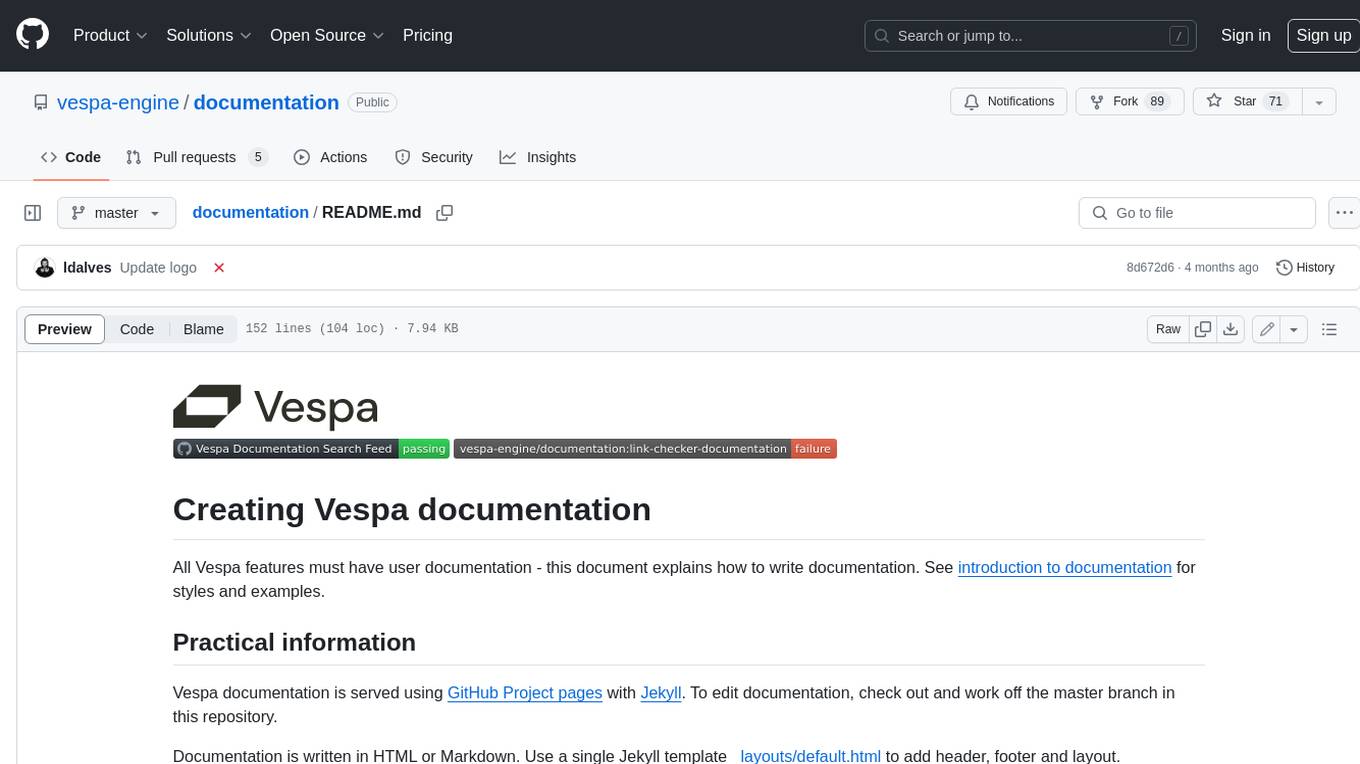

documentation

Vespa documentation is served using GitHub Project pages with Jekyll. To edit documentation, check out and work off the master branch in this repository. Documentation is written in HTML or Markdown. Use a single Jekyll template _layouts/default.html to add header, footer and layout. Install bundler, then $ bundle install $ bundle exec jekyll serve --incremental --drafts --trace to set up a local server at localhost:4000 to see the pages as they will look when served. If you get strange errors on bundle install try $ export PATH=“/usr/local/opt/[email protected]/bin:$PATH” $ export LDFLAGS=“-L/usr/local/opt/[email protected]/lib” $ export CPPFLAGS=“-I/usr/local/opt/[email protected]/include” $ export PKG_CONFIG_PATH=“/usr/local/opt/[email protected]/lib/pkgconfig” The output will highlight rendering/other problems when starting serving. Alternatively, use the docker image `jekyll/jekyll` to run the local server on Mac $ docker run -ti --rm --name doc \ --publish 4000:4000 -e JEKYLL_UID=$UID -v $(pwd):/srv/jekyll \ jekyll/jekyll jekyll serve or RHEL 8 $ podman run -it --rm --name doc -p 4000:4000 -e JEKYLL_ROOTLESS=true \ -v "$PWD":/srv/jekyll:Z docker.io/jekyll/jekyll jekyll serve The layout is written in denali.design, see _layouts/default.html for usage. Please do not add custom style sheets, as it is harder to maintain.

deep-seek

DeepSeek is a new experimental architecture for a large language model (LLM) powered internet-scale retrieval engine. Unlike current research agents designed as answer engines, DeepSeek aims to process a vast amount of sources to collect a comprehensive list of entities and enrich them with additional relevant data. The end result is a table with retrieved entities and enriched columns, providing a comprehensive overview of the topic. DeepSeek utilizes both standard keyword search and neural search to find relevant content, and employs an LLM to extract specific entities and their associated contents. It also includes a smaller answer agent to enrich the retrieved data, ensuring thoroughness. DeepSeek has the potential to revolutionize research and information gathering by providing a comprehensive and structured way to access information from the vastness of the internet.

basehub

JavaScript / TypeScript SDK for BaseHub, the first AI-native content hub. **Features:** * ✨ Infers types from your BaseHub repository... _meaning IDE autocompletion works great._ * 🏎️ No dependency on graphql... _meaning your bundle is more lightweight._ * 🌐 Works everywhere `fetch` is supported... _meaning you can use it anywhere._

discourse-chatbot

The discourse-chatbot is an original AI chatbot for Discourse forums that allows users to converse with the bot in posts or chat channels. Users can customize the character of the bot, enable RAG mode for expert answers, search Wikipedia, news, and Google, provide market data, perform accurate math calculations, and experiment with vision support. The bot uses cutting-edge Open AI API and supports Azure and proxy server connections. It includes a quota system for access management and can be used in RAG mode or basic bot mode. The setup involves creating embeddings to make the bot aware of forum content and setting up bot access permissions based on trust levels. Users must obtain an API token from Open AI and configure group quotas to interact with the bot. The plugin is extensible to support other cloud bots and content search beyond the provided set.

crewAI

CrewAI is a cutting-edge framework designed to orchestrate role-playing autonomous AI agents. By fostering collaborative intelligence, CrewAI empowers agents to work together seamlessly, tackling complex tasks. It enables AI agents to assume roles, share goals, and operate in a cohesive unit, much like a well-oiled crew. Whether you're building a smart assistant platform, an automated customer service ensemble, or a multi-agent research team, CrewAI provides the backbone for sophisticated multi-agent interactions. With features like role-based agent design, autonomous inter-agent delegation, flexible task management, and support for various LLMs, CrewAI offers a dynamic and adaptable solution for both development and production workflows.

KB-Builder

KB Builder is an open-source knowledge base generation system based on the LLM large language model. It utilizes the RAG (Retrieval-Augmented Generation) data generation enhancement method to provide users with the ability to enhance knowledge generation and quickly build knowledge bases based on RAG. It aims to be the central hub for knowledge construction in enterprises, offering platform-based intelligent dialogue services and document knowledge base management functionality. Users can upload docx, pdf, txt, and md format documents and generate high-quality knowledge base question-answer pairs by invoking large models through the 'Parse Document' feature.