web-codegen-scorer

Web Codegen Scorer is a tool for evaluating the quality of web code generated by LLMs.

Stars: 237

Web Codegen Scorer is a tool designed to evaluate the quality of web code generated by Large Language Models (LLMs). It allows users to make evidence-based decisions related to AI-generated code by iterating on system prompts, comparing code quality from different models, and monitoring code quality over time. The tool focuses specifically on web code and offers various features such as configuring evaluations, specifying system instructions, using built-in checks for code quality, automatically repairing issues, and viewing results with an intuitive report viewer UI.

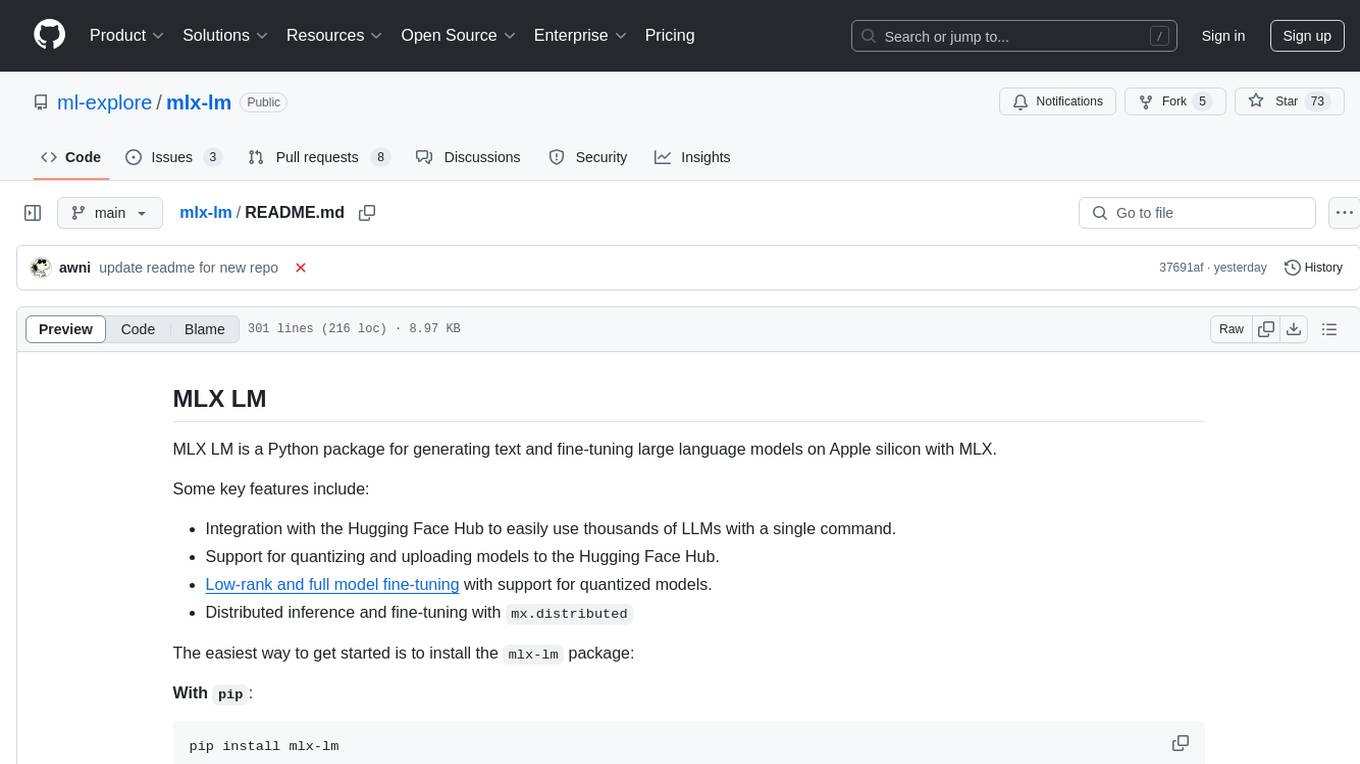

README:

Web Codegen Scorer is a tool for evaluating the quality of web code generated by Large Language Models (LLMs).

You can use this tool to make evidence-based decisions relating to AI-generated code. For example:

- 🔄 Iterate on a system prompt to find the most effective instructions for your project.

- ⚖️ Compare the code quality of code produced by different models.

- 📈 Monitor generated code quality over time as models and agents evolve.
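Once the tool is installed, the model comparison mentioned above can be sketched as a loop over the `eval` command. This is only an illustration: `compare_models`, the `SCORER` variable, and the `compare-` report prefix are hypothetical names, while `--env`, `--model`, and `--report-name` are the flags documented further down.

```shell
# Command to invoke; override it (for example with "echo") to preview the commands.
SCORER=${SCORER:-web-codegen-scorer}

# Run one eval per model against the same environment config,
# writing each run to its own report name so results can be compared.
compare_models() {
  env_path=$1
  shift
  for model in "$@"; do
    "$SCORER" eval --env="$env_path" --model="$model" --report-name="compare-$model"
  done
}
```

Calling `compare_models foo/bar/my-env.mjs gemini-2.5-flash <another model>` would then run the same prompts once per model. Setting `SCORER=echo` first prints the commands instead of running them.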

Web Codegen Scorer is different from other code benchmarks in that it focuses specifically on web code and relies primarily on well-established measures of code quality.

- ⚙️ Configure your evaluations with different models, frameworks, and tools.

- ✍️ Specify system instructions and add MCP servers.

- 📋 Use built-in checks for build success, runtime errors, accessibility, security, LLM rating, and coding best practices. (More built-in checks coming soon!)

- 🔧 Automatically attempt to repair issues detected during code generation.

- 📊 View and compare results with an intuitive report viewer UI.

Install the package:

npm install -g web-codegen-scorer

Set up your API keys:

To run an eval, you have to specify API keys for the relevant providers as environment variables:

export GEMINI_API_KEY="YOUR_API_KEY_HERE" # If you're using Gemini models

export OPENAI_API_KEY="YOUR_API_KEY_HERE" # If you're using OpenAI models

export ANTHROPIC_API_KEY="YOUR_API_KEY_HERE" # If you're using Anthropic models
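Before starting an eval, it can help to confirm that the key for your provider is actually set. The following is a minimal shell sketch and not part of the tool: `missing_api_keys` is a hypothetical helper that prints the name of each listed variable that is unset or empty.

```shell
# Print the name of every listed environment variable that is unset or empty.
# Arguments are expected to be plain variable names such as GEMINI_API_KEY.
missing_api_keys() {
  for name in "$@"; do
    # Indirectly read the variable whose name is stored in $name.
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      printf '%s\n' "$name"
    fi
  done
}
```

For example, `missing_api_keys GEMINI_API_KEY` prints nothing when the Gemini key is set and prints `GEMINI_API_KEY` otherwise.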

Run an eval:

You can run your first eval using our Angular example with the following command:

web-codegen-scorer eval --env=angular-example

(Optional) Set up your own eval:

If you want to set up a custom eval, instead of using our built-in examples, you can run the following command which will guide you through the process:

web-codegen-scorer init

(Optional) Run an evaluated app locally:

Once you've evaluated an app, you can run it locally with the following command:

web-codegen-scorer run --env=angular-example --prompt=<name of the prompt you want to run>

You can customize the `web-codegen-scorer eval` script with the following flags:

- `--env=<path>` (alias: `--environment`): (Required) Specifies the path from which to load the environment config. Example:

  web-codegen-scorer eval --env=foo/bar/my-env.mjs

- `--model=<name>`: Specifies the model to use when generating code. Defaults to the value of `DEFAULT_MODEL_NAME`. Example:

  web-codegen-scorer eval --model=gemini-2.5-flash --env=<config path>

- `--runner=<name>`: Specifies the runner to use to execute the eval. Supported runners are `genkit` (default) or `gemini-cli`.

- `--local`: Runs the script in local mode for the initial code generation request. Instead of calling the LLM, it will attempt to read the initial code from a corresponding file in the `.web-codegen-scorer/llm-output` directory (e.g., `.web-codegen-scorer/llm-output/todo-app.ts`). This is useful for re-running assessments or debugging the build/repair process without incurring LLM costs for the initial generation.

  - Note: You typically need to run `web-codegen-scorer eval` once without `--local` to generate the initial files in `.web-codegen-scorer/llm-output`.

  - The `web-codegen-scorer eval:local` script is a shortcut for `web-codegen-scorer eval --local`.

- `--limit=<number>`: Specifies the number of application prompts to process. Defaults to `5`. Example:

  web-codegen-scorer eval --limit=10 --env=<config path>

- `--output-directory=<name>` (alias: `--output-dir`): Specifies the directory under which to output the generated code, which is useful for debugging. By default, the code is generated in a temporary directory. Example:

  web-codegen-scorer eval --output-dir=test-output --env=<config path>

- `--concurrency=<number>`: Sets the maximum number of concurrent AI API requests. Defaults to `5` (as defined by `DEFAULT_CONCURRENCY` in `src/config.ts`). Example:

  web-codegen-scorer eval --concurrency=3 --env=<config path>

- `--report-name=<name>`: Sets the name for the generated report directory. Defaults to a timestamp (e.g., `2023-10-27T10-30-00-000Z`). The name will be sanitized (non-alphanumeric characters replaced with hyphens). Example:

  web-codegen-scorer eval --report-name=my-custom-report --env=<config path>

- `--rag-endpoint=<url>`: Specifies a custom RAG (Retrieval-Augmented Generation) endpoint URL. The URL must contain a `PROMPT` substring, which will be replaced with the user prompt. Example:

  web-codegen-scorer eval --rag-endpoint="http://localhost:8080/my-rag-endpoint?query=PROMPT" --env=<config path>

- `--prompt-filter=<name>`: String used to filter which prompts should be run. By default, a random sample (controlled by `--limit`) will be taken from the prompts in the current environment. Setting this can be useful for debugging a specific prompt. Example:

  web-codegen-scorer eval --prompt-filter=tic-tac-toe --env=<config path>

- `--skip-screenshots`: Whether to skip taking screenshots of the generated app. Defaults to `false`. Example:

  web-codegen-scorer eval --skip-screenshots --env=<config path>

- `--labels=<label1> <label2>`: Metadata labels that will be attached to the run. Example:

  web-codegen-scorer eval --labels my-label another-label --env=<config path>

- `--mcp`: Whether to start an MCP for the evaluation. Defaults to `false`. Example:

  web-codegen-scorer eval --mcp --env=<config path>

- `--help`: Prints out usage information about the script.
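Two of the flags above describe simple string transformations: `--report-name` values are sanitized, and the `PROMPT` substring in a `--rag-endpoint` URL is replaced with the user prompt. The sketch below illustrates both rules in POSIX shell. It is not the tool's implementation: `sanitize_report_name` and `fill_rag_url` are hypothetical helpers, and it assumes that only the first `PROMPT` occurrence is replaced and that the prompt is inserted as-is. Whether the tool URL-encodes the prompt or collapses repeated hyphens is not stated here.

```shell
# Replace every character outside A-Z, a-z, 0-9 with a hyphen,
# following the sanitization rule described for --report-name.
sanitize_report_name() {
  printf '%s\n' "$1" | LC_ALL=C sed 's/[^A-Za-z0-9]/-/g'
}

# Substitute the first PROMPT placeholder in an endpoint URL with the given text.
# Returns a non-zero status when the URL contains no PROMPT substring.
fill_rag_url() {
  endpoint=$1
  prompt=$2
  case $endpoint in
    *PROMPT*) ;;
    *) echo "endpoint must contain PROMPT" >&2; return 1 ;;
  esac
  # Text before the first PROMPT, then the prompt, then the text after it.
  printf '%s%s%s\n' "${endpoint%%PROMPT*}" "$prompt" "${endpoint#*PROMPT}"
}
```

Under these assumptions, `sanitize_report_name "2023-10-27T10:30:00.000Z"` yields `2023-10-27T10-30-00-000Z`, which matches the default timestamp format shown above.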

If you've cloned this repo and want to work on the tool, you have to install its dependencies by running `pnpm install`.

Once they're installed, you can run the following commands:

- `pnpm run release-build`: Creates a release build of the package in the `dist` directory.

- `pnpm run npm-publish`: Builds the package and publishes it to npm.

- `pnpm run eval`: Runs an eval from source.

- `pnpm run report`: Runs the report app from source.

- `pnpm run init`: Runs the init script from source.

- `pnpm run format`: Formats the source code using Prettier.

This tool is built by the Angular team at Google.

The tool is not limited to Angular code or Google models. You can use it with any web library or framework (or none at all) as well as any model.

As more and more developers reach for LLM-based tools to create and modify code, we wanted to be able to empirically measure the effect of different factors on the quality of generated code. While many LLM coding benchmarks exist, we found that these were often too broad and didn't measure the specific quality metrics we cared about.

In the absence of such a tool, we found that many developers based their judgements about codegen with different models, frameworks, and tools on loosely structured trial-and-error. In contrast, Web Codegen Scorer gives us a platform to measure codegen across different configurations with consistency and repeatability.

We plan to add more features over time by expanding both the number of built-in checks and the variety of codegen scenarios.

Our roadmap includes:

- Including interaction testing in the rating, to ensure the generated code performs any requested behaviors.

- Measuring Core Web Vitals.

- Measuring the effectiveness of LLM-driven edits on an existing codebase.

Alternative AI tools for web-codegen-scorer

Similar Open Source Tools

reader

Reader is a tool that converts any URL to an LLM-friendly input with a simple prefix `https://r.jina.ai/`. It improves the output for your agent and RAG systems at no cost. Reader supports image reading, captioning all images at the specified URL and adding `Image [idx]: [caption]` as an alt tag. This enables downstream LLMs to interact with the images in reasoning, summarizing, etc. Reader offers a streaming mode, useful when the standard mode provides an incomplete result. In streaming mode, Reader waits a bit longer until the page is fully rendered, providing more complete information. Reader also supports a JSON mode, which contains three fields: `url`, `title`, and `content`. Reader is backed by Jina AI and licensed under Apache-2.0.

sage

Sage is a tool that allows users to chat with any codebase, providing a chat interface for code understanding and integration. It simplifies the process of learning how a codebase works by offering heavily documented answers sourced directly from the code. Users can set up Sage locally or on the cloud with minimal effort. The tool is designed to be easily customizable, allowing users to swap components of the pipeline and improve the algorithms powering code understanding and generation.

llm-finetuning

llm-finetuning is a repository that provides a serverless twist to the popular axolotl fine-tuning library using Modal's serverless infrastructure. It allows users to quickly fine-tune any LLM model with state-of-the-art optimizations like Deepspeed ZeRO, LoRA adapters, Flash attention, and Gradient checkpointing. The repository simplifies the fine-tuning process by not exposing all CLI arguments, instead allowing users to specify options in a config file. It supports efficient training and scaling across multiple GPUs, making it suitable for production-ready fine-tuning jobs.

aides-jeunes

The user interface (and the main server) of the simulator of aids and social benefits for young people. It is based on the free socio-fiscal simulator Openfisca.

ai-starter-kit

SambaNova AI Starter Kits is a collection of open-source examples and guides designed to facilitate the deployment of AI-driven use cases for developers and enterprises. The kits cover various categories such as Data Ingestion & Preparation, Model Development & Optimization, Intelligent Information Retrieval, and Advanced AI Capabilities. Users can obtain a free API key using SambaNova Cloud or deploy models using SambaStudio. Most examples are written in Python but can be applied to any programming language. The kits provide resources for tasks like text extraction, fine-tuning embeddings, prompt engineering, question-answering, image search, post-call analysis, and more.

ai-models

The `ai-models` command is a tool used to run AI-based weather forecasting models. It provides functionalities to install, run, and manage different AI models for weather forecasting. Users can easily install and run various models, customize model settings, download assets, and manage input data from different sources such as ECMWF, CDS, and GRIB files. The tool is designed to optimize performance by running on GPUs and provides options for better organization of assets and output files. It offers a range of command line options for users to interact with the models and customize their forecasting tasks.

gpt-cli

gpt-cli is a command-line interface tool for interacting with various chat language models like ChatGPT, Claude, and others. It supports model customization, usage tracking, keyboard shortcuts, multi-line input, markdown support, predefined messages, and multiple assistants. Users can easily switch between different assistants, define custom assistants, and configure model parameters and API keys in a YAML file for easy customization and management.

torchchat

torchchat is a codebase showcasing the ability to run large language models (LLMs) seamlessly. It allows running LLMs using Python in various environments such as desktop, server, iOS, and Android. The tool supports running models via PyTorch, chatting, generating text, running chat in the browser, and running models on desktop/server without Python. It also provides features like AOT Inductor for faster execution, running in C++ using the runner, and deploying and running on iOS and Android. The tool supports popular hardware and OS including Linux, Mac OS, Android, and iOS, with various data types and execution modes available.

repo-to-text

The `repo-to-text` tool converts a directory's structure and contents into a single text file. It generates a formatted text representation that includes the directory tree and file contents, making it easy to share code with LLMs for development and debugging. Users can customize the tool's behavior with various options and settings, including output directory specification, debug logging, and file inclusion/exclusion rules. The tool supports Docker usage for containerized environments and provides detailed instructions for installation, usage, settings configuration, and contribution guidelines. It is a versatile tool for converting repository contents into text format for easy sharing and documentation.

honcho

Honcho is a platform for creating personalized AI agents and LLM powered applications for end users. The repository is a monorepo containing the server/API for managing database interactions and storing application state, along with a Python SDK. It utilizes FastAPI for user context management and Poetry for dependency management. The API can be run using Docker or manually by setting environment variables. The client SDK can be installed using pip or Poetry. The project is open source and welcomes contributions, following a fork and PR workflow. Honcho is licensed under the AGPL-3.0 License.

telemetry-airflow

This repository codifies the Airflow cluster that is deployed at workflow.telemetry.mozilla.org (behind SSO) and commonly referred to as "WTMO" or simply "Airflow". Some links relevant to users and developers of WTMO:

- The `dags` directory in this repository contains some custom DAG definitions

- Many of the DAGs registered with WTMO don't live in this repository, but are instead generated from ETL task definitions in bigquery-etl

- The Data SRE team maintains a WTMO Developer Guide (behind SSO)

safety-tooling

This repository, safety-tooling, is designed to be shared across various AI Safety projects. It provides an LLM API with a common interface for OpenAI, Anthropic, and Google models. The aim is to facilitate collaboration among AI Safety researchers, especially those with limited software engineering backgrounds, by offering a platform for contributing to a larger codebase. The repo can be used as a git submodule for easy collaboration and updates. It also supports pip installation for convenience. The repository includes features for installation, secrets management, linting, formatting, Redis configuration, testing, dependency management, inference, finetuning, API usage tracking, and various utilities for data processing and experimentation.

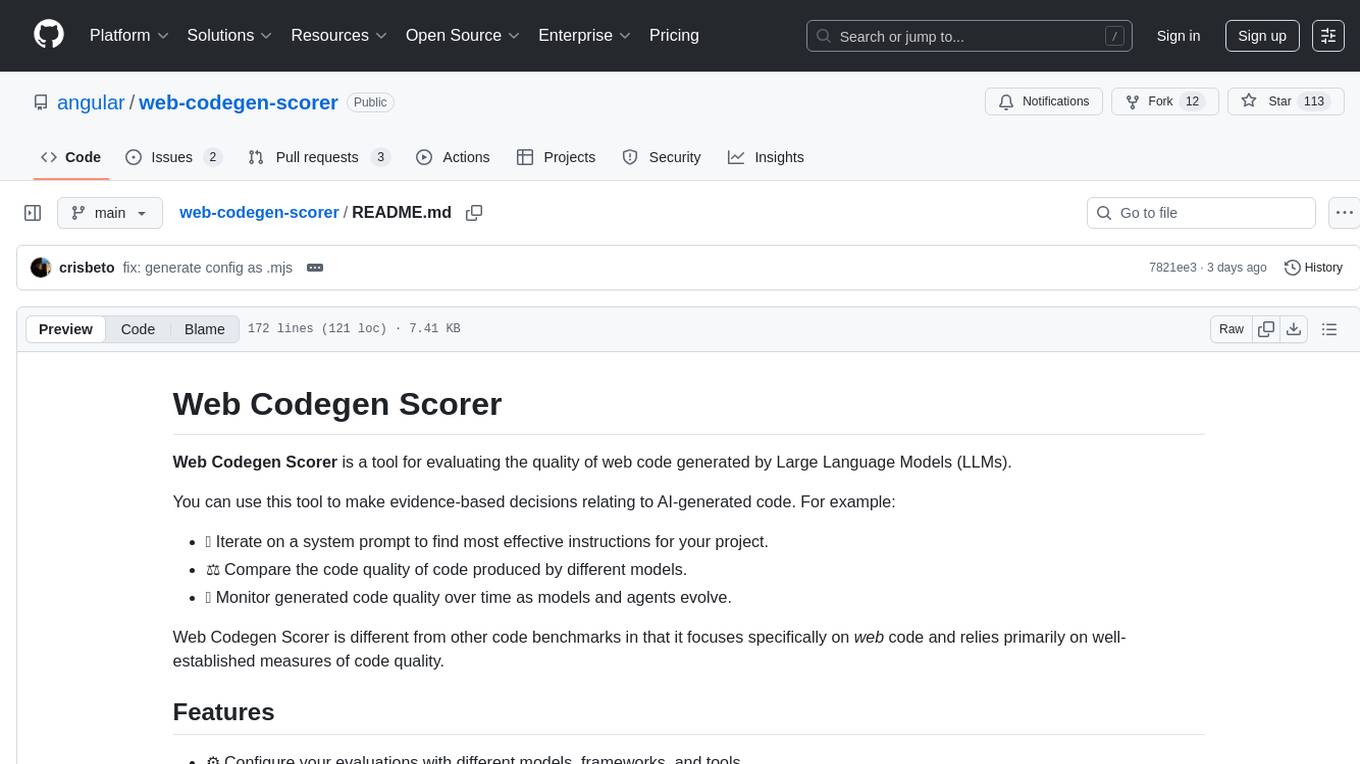

mlx-lm

MLX LM is a Python package designed for generating text and fine-tuning large language models on Apple silicon using MLX. It offers integration with the Hugging Face Hub for easy access to thousands of LLMs, support for quantizing and uploading models to the Hub, low-rank and full model fine-tuning capabilities, and distributed inference and fine-tuning with `mx.distributed`. Users can interact with the package through command line options or the Python API, enabling tasks such as text generation, chatting with language models, model conversion, streaming generation, and sampling. MLX LM supports various Hugging Face models and provides tools for efficient scaling to long prompts and generations, including a rotating key-value cache and prompt caching. It requires macOS 15.0 or higher for optimal performance.

pytest-evals

pytest-evals is a minimalistic pytest plugin designed to help evaluate Large Language Model (LLM) outputs against test cases. It allows users to test and evaluate LLM prompts against multiple cases, track metrics, and integrate easily with pytest, Jupyter notebooks, and CI/CD pipelines. Users can scale up by running tests in parallel with pytest-xdist and asynchronously with pytest-asyncio. The tool focuses on simplifying evaluation processes without the need for complex frameworks, keeping tests and evaluations together, and emphasizing logic over infrastructure.

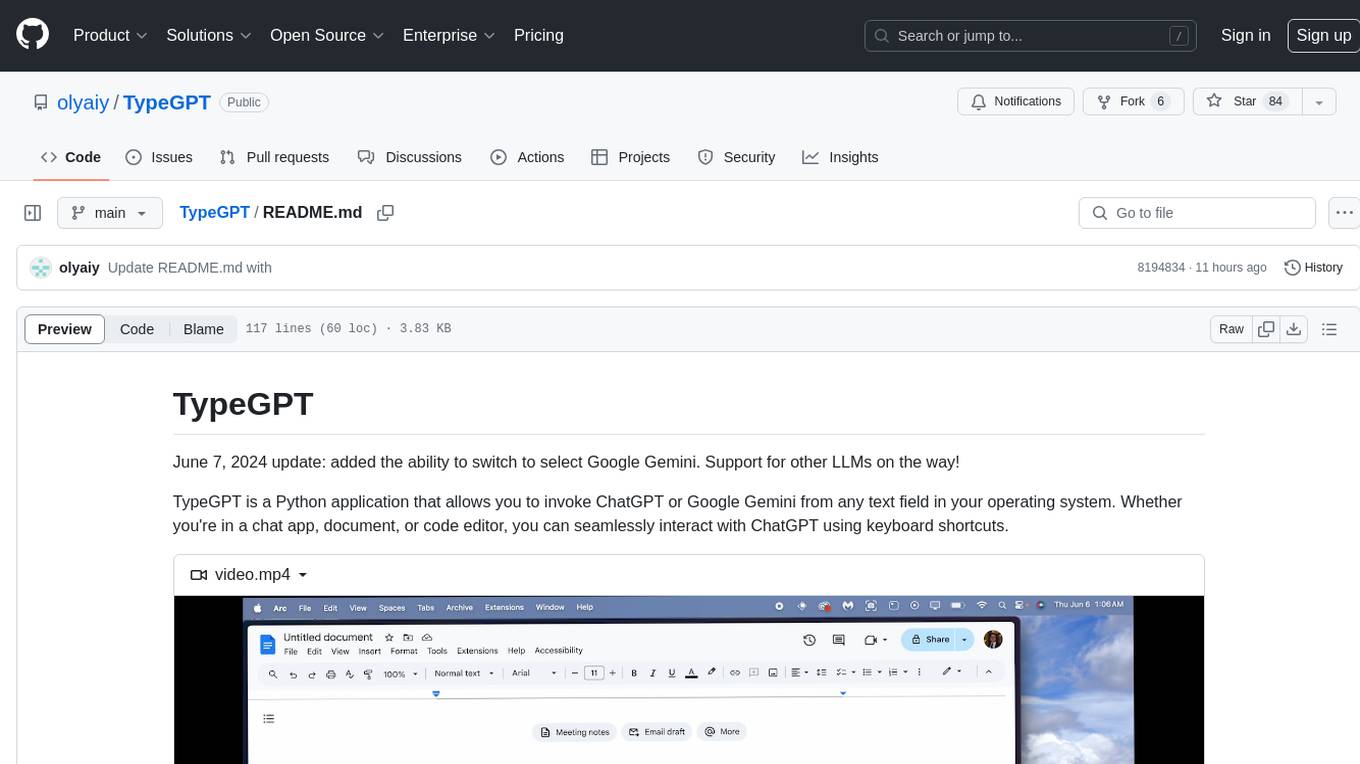

TypeGPT

TypeGPT is a Python application that enables users to interact with ChatGPT or Google Gemini from any text field in their operating system using keyboard shortcuts. It provides global accessibility, keyboard shortcuts for communication, and clipboard integration for larger text inputs. Users need to have Python 3.x installed along with specific packages and API keys from OpenAI for ChatGPT access. The tool allows users to run the program normally or in the background, manage processes, and stop the program. Users can use keyboard shortcuts like `/ask`, `/see`, `/stop`, `/chatgpt`, `/gemini`, `/check`, and `Shift + Cmd + Enter` to interact with the application in any text field. Customization options are available by modifying files like `keys.txt` and `system_prompt.txt`. Contributions are welcome, and future plans include adding support for other APIs and a user-friendly GUI.

For similar tasks

evalplus

EvalPlus is a rigorous evaluation framework for LLM4Code, providing HumanEval+ and MBPP+ tests to evaluate large language models on code generation tasks. It offers precise evaluation and ranking, coding rigorousness analysis, and pre-generated code samples. Users can use EvalPlus to generate code solutions, post-process code, and evaluate code quality. It also includes utilities for code generation and test input generation using various backends.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PIIs

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.