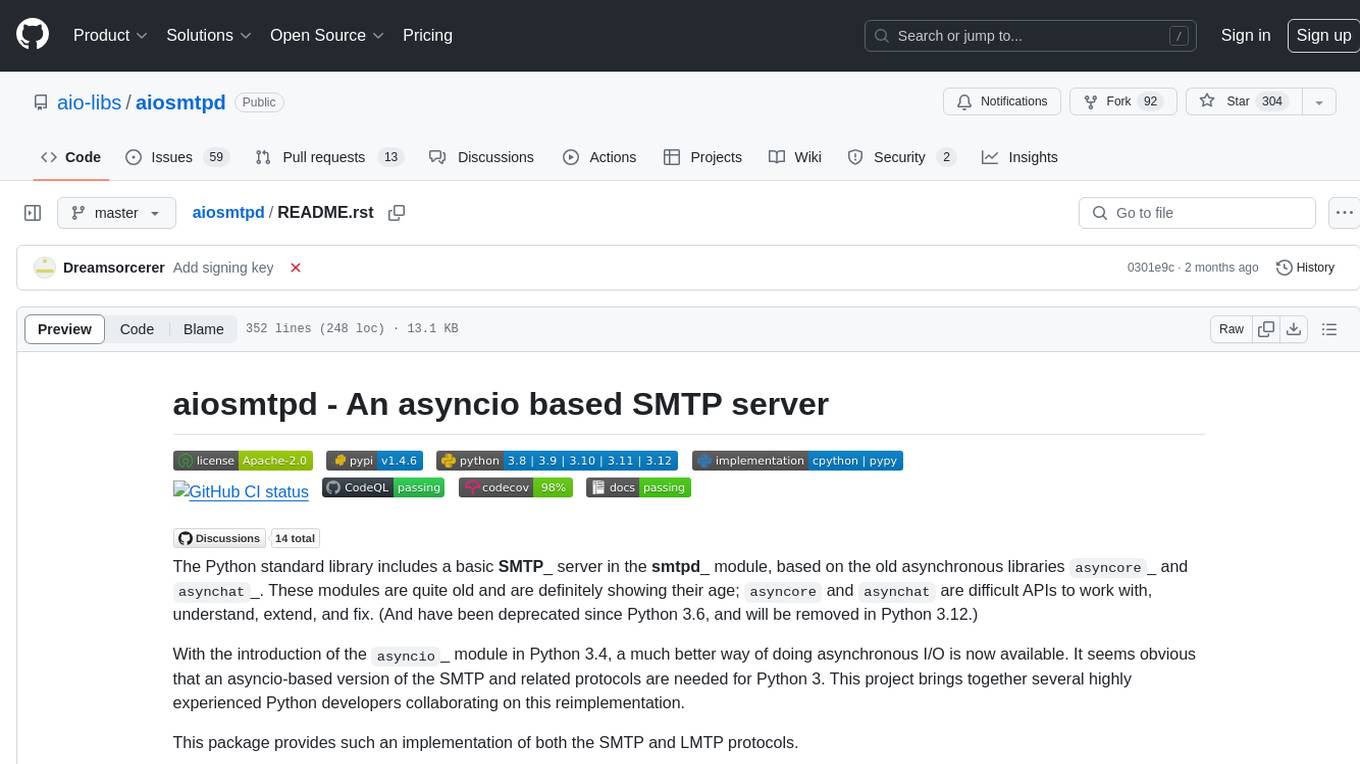

aiosmtpd

A reimplementation of the Python stdlib smtpd.py based on asyncio.


aiosmtpd is an asyncio-based SMTP server implementation that provides a modern and efficient way to handle SMTP and LMTP protocols in Python 3. It replaces the outdated asyncore and asynchat modules with asyncio for improved asynchronous I/O operations. The project aims to offer a more user-friendly, extendable, and maintainable solution for handling email protocols in Python applications. It is actively maintained by experienced Python developers and offers full documentation for easy integration and usage.



The Python standard library included a basic |SMTP|_ server in the |smtpd|_ module,
based on the old asynchronous libraries |asyncore|_ and |asynchat|_.
These modules were quite old and were definitely showing their age;
asyncore and asynchat were difficult APIs to work with, understand, extend, and fix.
(All three modules were deprecated in Python 3.6 and removed in Python 3.12.)

With the introduction of the |asyncio|_ module in Python 3.4, a much better way of doing asynchronous I/O is now available. It seems obvious that an asyncio-based implementation of SMTP and related protocols is needed for Python 3. This project brings together several highly experienced Python developers collaborating on this reimplementation.

This package provides such an implementation of both the SMTP and LMTP protocols.

Full documentation is available on |aiosmtpd rtd|_.

aiosmtpd has been tested on CPython >= 3.8 and |PyPy|_ >= 3.8
for the following platforms (in alphabetical order):

- Cygwin (as of 2022-12-22, only for CPython 3.8 and 3.9)

- MacOS 11 and 12

- Ubuntu 18.04

- Ubuntu 20.04

- Ubuntu 22.04

- Windows 10

- Windows Server 2019

- Windows Server 2022

aiosmtpd can probably run on platforms not listed above,
but we cannot provide support for unlisted platforms.

.. |PyPy| replace:: PyPy

.. _PyPy: https://www.pypy.org/

Install as usual with pip::

    pip install aiosmtpd

If you receive the error message ``ModuleNotFoundError: No module named 'public'``,
it likely means your setuptools is too old;
try upgrading setuptools to at least version 46.4.0,
which `implemented a fix for this issue`_.

.. _implemented a fix for this issue: https://setuptools.readthedocs.io/en/latest/history.html#v46-4-0
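Before retrying the install, a quick standard-library check like the one below can tell you whether the installed setuptools meets that minimum. It is a sketch with hypothetical function names, and it compares only the numeric release part of the version string (so ``46.4.0.post20200518`` counts as ``46.4.0``).

```python
import re
from importlib.metadata import PackageNotFoundError, version

MINIMUM_SETUPTOOLS = (46, 4, 0)


def parse_release(ver):
    """Return the leading numeric release of a version string, e.g. '68.2.0' -> (68, 2, 0)."""
    match = re.match(r"\d+(?:\.\d+)*", ver)
    if match is None:
        raise ValueError(f"unrecognised version string: {ver!r}")
    return tuple(int(part) for part in match.group().split("."))


def release_at_least(installed, minimum):
    """Compare release tuples after zero-padding, so (46, 4) equals (46, 4, 0)."""
    width = max(len(installed), len(minimum))
    padded_installed = installed + (0,) * (width - len(installed))
    padded_minimum = minimum + (0,) * (width - len(minimum))
    return padded_installed >= padded_minimum


def setuptools_is_new_enough(minimum=MINIMUM_SETUPTOOLS):
    """True if setuptools is installed and its release is at least `minimum`."""
    try:
        installed = parse_release(version("setuptools"))
    except PackageNotFoundError:
        return False
    return release_at_least(installed, minimum)


if __name__ == "__main__":
    print("setuptools OK" if setuptools_is_new_enough() else "upgrade setuptools")
```

If it reports that an upgrade is needed, ``python -m pip install --upgrade setuptools`` is the usual remedy.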

As of 2016-07-14, aiosmtpd has been put under the |aiolibs|_ umbrella project and moved to GitHub.

- Project home: https://github.com/aio-libs/aiosmtpd

- PyPI project page: https://pypi.org/project/aiosmtpd/

- Report bugs at: https://github.com/aio-libs/aiosmtpd/issues

- Git clone: https://github.com/aio-libs/aiosmtpd.git

- Documentation: http://aiosmtpd.readthedocs.io/

- StackOverflow: https://stackoverflow.com/questions/tagged/aiosmtpd

The best way to contact the developers is through the GitHub links above. You can also request help by submitting a question on StackOverflow.

You can install this package in a virtual environment like so::

    $ python3 -m venv /path/to/venv
    $ source /path/to/venv/bin/activate
    $ python setup.py install

This will give you a command line script called ``aiosmtpd`` which implements the
SMTP server. Use ``aiosmtpd --help`` for a quick reference.

You will also have access to the ``aiosmtpd`` library, which you can use as a
testing environment for your SMTP clients. See the documentation links above
for details.
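As a minimal sketch of that testing use, the handler below records every message it accepts. Its ``handle_RCPT`` and ``handle_DATA`` coroutines follow the hook signatures described in the aiosmtpd documentation, but the class name ``CapturingHandler``, its ``allowed_domain`` option, and its ``messages`` list are hypothetical. It deliberately does not import aiosmtpd, so here the hooks are driven directly with a stand-in envelope.

```python
import asyncio
from types import SimpleNamespace


class CapturingHandler:
    """Hypothetical handler that keeps accepted messages in memory for test assertions."""

    def __init__(self, allowed_domain="example.com"):
        self.allowed_domain = allowed_domain
        self.messages = []  # (mail_from, rcpt_tos, content) tuples

    async def handle_RCPT(self, server, session, envelope, address, rcpt_options):
        # Reject recipients outside the allowed domain rather than relaying them.
        if not address.endswith("@" + self.allowed_domain):
            return "550 not relaying to that domain"
        envelope.rcpt_tos.append(address)
        return "250 OK"

    async def handle_DATA(self, server, session, envelope):
        # envelope.content holds the message data (bytes by default).
        self.messages.append((envelope.mail_from, list(envelope.rcpt_tos), envelope.content))
        return "250 Message accepted for delivery"


async def demo():
    """Call the hooks with a stand-in envelope; aiosmtpd would pass its own Envelope."""
    handler = CapturingHandler()
    envelope = SimpleNamespace(
        mail_from="alice@example.org", rcpt_tos=[], content=b"Subject: hi\r\n\r\nHello\r\n"
    )
    replies = [
        await handler.handle_RCPT(None, None, envelope, "bob@elsewhere.net", []),
        await handler.handle_RCPT(None, None, envelope, "bob@example.com", []),
        await handler.handle_DATA(None, None, envelope),
    ]
    return replies, handler.messages


if __name__ == "__main__":
    print(asyncio.run(demo()))
```

With aiosmtpd installed, ``Controller(CapturingHandler(), hostname="127.0.0.1", port=8025)`` from ``aiosmtpd.controller`` serves the handler from a background thread once ``controller.start()`` is called; the SMTP client under test (for example ``smtplib.SMTP("127.0.0.1", 8025)``) can then send to it, and ``controller.stop()`` shuts the server down.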

You'll need the `tox <https://pypi.python.org/pypi/tox>`__ tool to run the
test suite for Python 3. Once you've got that, run::

    $ tox

Individual tests can be run like this::

    $ tox -- <testname>

where ``<testname>`` is the "node id" of the test case to run, as explained
in `the pytest documentation`_. The command above will run that one test case
against all testenvs defined in ``tox.ini`` (see below).

If you want the tests to stop as soon as one of them fails, use the
``-x``/``--exitfirst`` option::

    $ tox -- -x

You can also add the ``-s``/``--capture=no`` option to show output, e.g.::

    $ tox -e py311-nocov -- -s

and these options can be combined::

    $ tox -e py311-nocov -- -x -s <testname>

(The ``-e`` parameter is explained in the next section about 'testenvs'.
In general, you'll want to choose the ``nocov`` testenvs if you want to show output,
so you can see which test is generating which output.)
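The way tox separates its own options from pytest's can be sketched as a small command builder. This is only an illustration (``tox_command`` is a hypothetical helper, not part of aiosmtpd or tox): ``-e`` selects testenvs, several of them joined with commas, and everything after ``--`` is handed through to pytest, such as ``-x``, ``-s``, or a node id of the form ``path/to/test_file.py::test_name``.

```python
def tox_command(testenvs=(), pytest_args=()):
    """Return a tox argv list; every argument after '--' is forwarded to pytest."""
    cmd = ["tox"]
    if testenvs:
        # Several testenvs go into a single -e value, separated by commas.
        cmd += ["-e", ",".join(testenvs)]
    if pytest_args:
        # The bare '--' marks the end of tox's own options.
        cmd.append("--")
        cmd.extend(pytest_args)
    return cmd


# Hypothetical node id, used only to show the shape of the command.
EXAMPLE = tox_command(["py311-nocov"], ["-x", "-s", "aiosmtpd/tests/test_example.py::test_something"])
```

The resulting list can be passed to ``subprocess.run(cmd, check=True)`` if you want to drive tox from a script.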

In general, the ``-e`` parameter to tox specifies one (or more) testenvs
to run (separate multiple testenvs with commas). The following testenvs
have been configured and tested:

- ``{py38,py39,py310,py311,py312,pypy3,pypy37,pypy38,pypy39}-{nocov,cov,diffcov,profile}``

  Specifies the interpreter to run and the kind of testing to perform.

  - ``nocov`` = no coverage testing. Tests will run verbosely.
  - ``cov`` = with coverage testing. Tests will run in brief mode
    (showing a single character per test run).
  - ``diffcov`` = with diff-coverage report (showing the difference in coverage
    compared to the previous commit). Tests will run in brief mode.
  - ``profile`` = no coverage testing, but code profiling instead.
    This must be invoked manually using the ``-e`` parameter.

  **Note 1:** As of 2021-02-23, only the ``{py38,py39}-{nocov,cov}`` combinations
  work on Cygwin.

  **Note 2:** It is also possible to use whatever Python version is used when
  invoking ``tox`` by using the ``py`` target, but you must explicitly include
  the type of testing you want. For example::

      $ tox -e "py-{nocov,cov,diffcov}"

  (Don't forget the quotes if you want to use braces!)

  You might want to do this for CI platforms where the exact Python version is
  pre-prepared, such as Travis CI or |GitHub Actions|_; this will definitely save
  some time during tox's testenv prepping.

  For all testenv combinations except ``diffcov``, the |bandit|_ security check
  will also be run prior to running pytest.


.. _bandit: https://github.com/PyCQA/bandit

.. |bandit| replace:: bandit

- ``qa``

  Performs |flake8|_ code style checking, and |flake8-bugbear|_ design checking.

  In addition, some tests to help ensure that ``aiosmtpd`` is releasable to PyPI
  are also run.

.. _flake8: https://flake8.pycqa.org/en/latest/

.. |flake8| replace:: flake8

.. _flake8-bugbear: https://github.com/PyCQA/flake8-bugbear

.. |flake8-bugbear| replace:: flake8-bugbear

- ``docs``

  Builds HTML documentation and manpage using Sphinx. A `pytest doctest`_ will
  run prior to actual building of the documentation.

- ``static``

  Performs static type checking using ``pytype``.

  **Note 1:** Please ensure that `all pytype dependencies`_ have been installed
  before executing this testenv.

  **Note 2:** This testenv will be SKIPPED on Windows, because ``pytype``
  currently cannot run on Windows.

  **Note 3:** This testenv does NOT work on Cygwin.

.. _all pytype dependencies: https://github.com/google/pytype/blob/2021.02.09/CONTRIBUTING.md#pytype-dependencies
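The brace notation used for the interpreter and testing-kind testenvs, and in ``tox -e "py-{nocov,cov,diffcov}"``, stands for every combination of the listed factors. As an illustration only (``expand_factors`` is a hypothetical helper that mimics tox's generative env lists; it does not call tox), the expansion is a cartesian product:

```python
from itertools import product

# Factor groups copied from the first testenv entry above.
PYTHONS = ["py38", "py39", "py310", "py311", "py312", "pypy3", "pypy37", "pypy38", "pypy39"]
KINDS = ["nocov", "cov", "diffcov", "profile"]


def expand_factors(*factor_groups):
    """Expand tox-style {a,b}-{c,d} groups into ['a-c', 'a-d', 'b-c', 'b-d']."""
    return ["-".join(combo) for combo in product(*factor_groups)]


# Every interpreter paired with every kind of testing: 9 x 4 names.
ENVS = expand_factors(PYTHONS, KINDS)
```

So ``py311-nocov`` and ``pypy39-profile`` are both valid ``-e`` values, while ``py-{nocov,cov,diffcov}`` expands to just three names.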

``ASYNCIO_CATCHUP_DELAY``
    Due to how the asyncio event loop works, some actions are not responded to
    instantly. This is especially so on slower or overworked systems.
    In consideration of such situations, some test cases invoke a slight
    delay to let the event loop catch up; this environment variable sets that delay.

    Defaults to ``0.1`` and can be set to any float value you want.
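A test helper could read and apply this delay roughly as follows. This is a sketch under assumptions: the value is taken to be in seconds (as ``asyncio.sleep`` expects), the helper names are hypothetical, and aiosmtpd's own test helpers may read the variable differently.

```python
import asyncio
import os

DEFAULT_CATCHUP_DELAY = 0.1  # assumed unit: seconds, as passed to asyncio.sleep


def catchup_delay(environ=None):
    """Return ASYNCIO_CATCHUP_DELAY as a float, or the default when unset or blank."""
    environ = os.environ if environ is None else environ
    raw = environ.get("ASYNCIO_CATCHUP_DELAY", "").strip()
    return float(raw) if raw else DEFAULT_CATCHUP_DELAY


async def let_loop_catch_up(environ=None):
    """Pause the current coroutine so callbacks already queued on the loop can run."""
    await asyncio.sleep(catchup_delay(environ))


async def demo():
    loop = asyncio.get_running_loop()
    fired = []
    loop.call_soon(fired.append, "callback ran")  # queued, not yet executed
    await let_loop_catch_up({"ASYNCIO_CATCHUP_DELAY": "0.01"})
    return fired
```

Raising the value gives a slow machine more slack at the cost of longer test runs.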

Different Python Versions
-----------------------------

The tox configuration files have been created to cater for more than one
Python version *safely*: if an interpreter is not found for a certain
Python version, tox will skip that whole testenv.

However, with a little bit of effort, you can have multiple Python interpreter
versions on your system by using ``pyenv``. General steps:

1. Install ``pyenv`` from https://github.com/pyenv/pyenv#installation

2. Install ``tox-pyenv`` from https://pypi.org/project/tox-pyenv/

3. Using ``pyenv``, install the Python versions you want to test on

4. Create a ``.python-version`` file in the root of the repo, listing the
   Python interpreter versions you want to make available to tox (see pyenv's
   documentation about this file)

   **Tip:** The 1st line of ``.python-version`` indicates your *preferred* Python version
   which will be used to run tox.

5. Invoke tox with the option ``--tox-pyenv-no-fallback`` (see tox-pyenv's
   documentation about this option)

``housekeep.py``
----------------

If you ever need to 'reset' your repo, you can use the ``housekeep.py`` utility
like so::

    $ python housekeep.py superclean

It is *strongly* recommended NOT to run ``superclean`` too often, though.
Every time you invoke ``superclean``, tox will have to recreate all its testenvs,
and this will make testing *much* longer to finish.

``superclean`` is typically only needed when you switch branches,
or if you want to really ensure that artifacts from previous testing sessions
won't interfere with your next testing sessions.

For example, you may want to force Sphinx to rebuild all documentation.
Or you may be sharing a repo between environments (say, PSCore and Cygwin)
and the cached Python bytecode is messing up execution
(e.g., sharing the exact same directory between Windows PowerShell and Cygwin
will cause problems as Python becomes confused about the locations of the source code).
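``superclean`` resets more than this (tox has to recreate its testenvs afterwards), but the stale-bytecode part of the problem can be shown on its own. The function below is a hypothetical standalone sketch, not code taken from ``housekeep.py``: it deletes ``__pycache__`` directories and stray ``.pyc`` files under a directory tree, which is the cached state that confuses Python when one checkout is shared between environments.

```python
import shutil
from pathlib import Path


def remove_bytecode_caches(root):
    """Delete __pycache__ directories and stray .pyc files under root; return how many were removed."""
    root = Path(root)
    removed = 0
    # sorted() materialises the matches first, so deleting directories cannot
    # disturb the traversal; parent paths also sort before nested ones.
    for cache_dir in sorted(root.rglob("__pycache__")):
        if cache_dir.is_dir():
            shutil.rmtree(cache_dir)
            removed += 1
    # .pyc files left outside any __pycache__ directory.
    for pyc in sorted(root.rglob("*.pyc")):
        if pyc.is_file():
            pyc.unlink()
            removed += 1
    return removed
```

Source files are untouched; Python simply regenerates the bytecode on the next import.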

Signing Keys
============

Starting with version 1.3.1,
files provided through `PyPI`_ or `GitHub Releases`_
will be signed using one of the following GPG keys:

+-------------------------+----------------+----------------------------------+
| GPG Key ID              | Owner          | Email                            |
+=========================+================+==================================+
| ``5D60 CE28 9CD7 C258`` | Pandu E POLUAN | pepoluan at gmail period com     |
+-------------------------+----------------+----------------------------------+
| ``5555 A6A6 7AE1 DC91`` | Pandu E POLUAN | pepoluan at gmail period com     |
+-------------------------+----------------+----------------------------------+
| ``E309 FD82 73BD 8465`` | Wayne Werner   | waynejwerner at gmail period com |
+-------------------------+----------------+----------------------------------+
| ``5FE9 28CD 9626 CE2B`` | Sam Bull       | sam at sambull period org        |
+-------------------------+----------------+----------------------------------+

.. _PyPI: https://pypi.org/project/aiosmtpd/

.. _`GitHub Releases`: https://github.com/aio-libs/aiosmtpd/releases
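To check which of these keys signed a download, the sketch below compares a key fingerprint against the table. It relies on one OpenPGP fact, that a v4 key's 64-bit key ID is the last 16 hex digits of its fingerprint; the function and variable names are hypothetical.

```python
# 64-bit key IDs from the table above, without spaces.
RELEASE_KEY_IDS = {
    "5D60CE289CD7C258": "Pandu E POLUAN",
    "5555A6A67AE1DC91": "Pandu E POLUAN",
    "E309FD8273BD8465": "Wayne Werner",
    "5FE928CD9626CE2B": "Sam Bull",
}


def normalize_hex(value):
    """Upper-case a key ID or fingerprint and drop spaces and any 0x prefix."""
    cleaned = value.replace(" ", "").upper()
    return cleaned[2:] if cleaned.startswith("0X") else cleaned


def release_key_owner(fingerprint):
    """Return the owner of the listed key matching this fingerprint, or None.

    For OpenPGP v4 keys the long (64-bit) key ID is the last 16 hex digits
    of the fingerprint, so a bare 16-digit key ID is accepted as well.
    """
    return RELEASE_KEY_IDS.get(normalize_hex(fingerprint)[-16:])
```

Pass it the signing-key fingerprint that ``gpg --verify`` reports. This only tells you *which* listed key was used; ``gpg`` must still report a good signature, and comparing full fingerprints is stronger than matching 64-bit IDs.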

License
=======

``aiosmtpd`` is released under the Apache License version 2.0.

.. _`GitHub Actions`: https://docs.github.com/en/free-pro-team@latest/actions/guides/building-and-testing-python#running-tests-with-tox

.. |GitHub Actions| replace:: **GitHub Actions**

.. _`pytest doctest`: https://docs.pytest.org/en/stable/doctest.html

.. _`the pytest documentation`: https://docs.pytest.org/en/stable/usage.html#specifying-tests-selecting-tests

.. _`aiosmtpd rtd`: https://aiosmtpd.readthedocs.io

.. |aiosmtpd rtd| replace:: **aiosmtpd.readthedocs.io**

.. _`SMTP`: https://tools.ietf.org/html/rfc5321

.. |SMTP| replace:: **SMTP**

.. _`smtpd`: https://docs.python.org/3/library/smtpd.html

.. |smtpd| replace:: **smtpd**

.. _`asyncore`: https://docs.python.org/3/library/asyncore.html

.. |asyncore| replace:: ``asyncore``

.. _`asynchat`: https://docs.python.org/3/library/asynchat.html

.. |asynchat| replace:: ``asynchat``

.. _`asyncio`: https://docs.python.org/3/library/asyncio.html

.. |asyncio| replace:: ``asyncio``

.. _`aiolibs`: https://github.com/aio-libs

.. |aiolibs| replace:: **aio-libs**

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for aiosmtpd

Similar Open Source Tools

aiosmtpd

aiosmtpd is an asyncio-based SMTP server implementation that provides a modern and efficient way to handle SMTP and LMTP protocols in Python 3. It replaces the outdated asyncore and asynchat modules with asyncio for improved asynchronous I/O operations. The project aims to offer a more user-friendly, extendable, and maintainable solution for handling email protocols in Python applications. It is actively maintained by experienced Python developers and offers full documentation for easy integration and usage.

airbadge

Airbadge is a Stripe addon for Auth.js that simplifies the process of creating a SaaS site by integrating payment, authentication, gating, self-service account management, webhook handling, trials & free plans, session data, and more. It allows users to launch a SaaS app without writing any authentication or payment code. The project is open source and free to use with optional paid features under the BSL License.

agenticSeek

AgenticSeek is a voice-enabled AI assistant powered by DeepSeek R1 agents, offering a fully local alternative to cloud-based AI services. It allows users to interact with their filesystem, code in multiple languages, and perform various tasks autonomously. The tool is equipped with memory to remember user preferences and past conversations, and it can divide tasks among multiple agents for efficient execution. AgenticSeek prioritizes privacy by running entirely on the user's hardware without sending data to the cloud.

mcp-victoriametrics

The VictoriaMetrics MCP Server is an implementation of Model Context Protocol (MCP) server for VictoriaMetrics. It provides access to your VictoriaMetrics instance and seamless integration with VictoriaMetrics APIs and documentation. The server allows you to use almost all read-only APIs of VictoriaMetrics, enabling monitoring, observability, and debugging tasks related to your VictoriaMetrics instances. It also contains embedded up-to-date documentation and tools for exploring metrics, labels, alerts, and more. The server can be used for advanced automation and interaction capabilities for engineers and tools.

well-architected-iac-analyzer

Well-Architected Infrastructure as Code (IaC) Analyzer is a project demonstrating how generative AI can evaluate infrastructure code for alignment with best practices. It features a modern web application allowing users to upload IaC documents, complete IaC projects, or architecture diagrams for assessment. The tool provides insights into infrastructure code alignment with AWS best practices, offers suggestions for improving cloud architecture designs, and can generate IaC templates from architecture diagrams. Users can analyze CloudFormation, Terraform, or AWS CDK templates, architecture diagrams in PNG or JPEG format, and complete IaC projects with supporting documents. Real-time analysis against Well-Architected best practices, integration with AWS Well-Architected Tool, and export of analysis results and recommendations are included.

code2prompt

Code2Prompt is a powerful command-line tool that generates comprehensive prompts from codebases, designed to streamline interactions between developers and Large Language Models (LLMs) for code analysis, documentation, and improvement tasks. It bridges the gap between codebases and LLMs by converting projects into AI-friendly prompts, enabling users to leverage AI for various software development tasks. The tool offers features like holistic codebase representation, intelligent source tree generation, customizable prompt templates, smart token management, Gitignore integration, flexible file handling, clipboard-ready output, multiple output options, and enhanced code readability.

pentagi

PentAGI is an innovative tool for automated security testing that leverages cutting-edge artificial intelligence technologies. It is designed for information security professionals, researchers, and enthusiasts who need a powerful and flexible solution for conducting penetration tests. The tool provides secure and isolated operations in a sandboxed Docker environment, fully autonomous AI-powered agent for penetration testing steps, a suite of 20+ professional security tools, smart memory system for storing research results, web intelligence for gathering information, integration with external search systems, team delegation system, comprehensive monitoring and reporting, modern interface, API integration, persistent storage, scalable architecture, self-hosted solution, flexible authentication, and quick deployment through Docker Compose.

r2ai

r2ai is a tool designed to run a language model locally without internet access. It can be used to entertain users or assist in answering questions related to radare2 or reverse engineering. The tool allows users to prompt the language model, index large codebases, slurp file contents, embed the output of an r2 command, define different system-level assistant roles, set environment variables, and more. It is accessible as an r2lang-python plugin and can be scripted from various languages. Users can use different models, adjust query templates dynamically, load multiple models, and make them communicate with each other.

roast

Roast is a convention-oriented framework for creating structured AI workflows maintained by the Augmented Engineering team at Shopify. It provides a structured, declarative approach to building AI workflows with convention over configuration, built-in tools for file operations, search, and AI interactions, Ruby integration for custom steps, shared context between steps, step customization with AI models and parameters, session replay, parallel execution, function caching, and extensive instrumentation for monitoring workflow execution, AI calls, and tool usage.

vue-markdown-render

vue-renderer-markdown is a high-performance tool designed for streaming and rendering Markdown content in real-time. It is optimized for handling incomplete or rapidly changing Markdown blocks, making it ideal for scenarios like AI model responses, live content updates, and real-time Markdown rendering. The tool offers features such as ultra-high performance, streaming-first design, Monaco integration, progressive Mermaid rendering, custom components integration, complete Markdown support, real-time updates, TypeScript support, and zero configuration setup. It solves challenges like incomplete syntax blocks, rapid content changes, cursor positioning complexities, and graceful handling of partial tokens with a streaming-optimized architecture.

python-tgpt

Python-tgpt is a Python package that enables seamless interaction with over 45 free LLM providers without requiring an API key. It also provides image generation capabilities. The name _python-tgpt_ draws inspiration from its parent project tgpt, which operates on Golang. Through this Python adaptation, users can effortlessly engage with a number of free LLMs available, fostering a smoother AI interaction experience.

k8sgpt

K8sGPT is a tool for scanning your Kubernetes clusters, diagnosing, and triaging issues in simple English. It has SRE experience codified into its analyzers and helps to pull out the most relevant information to enrich it with AI.

runpod-worker-comfy

runpod-worker-comfy is a serverless API tool that allows users to run any ComfyUI workflow to generate an image. Users can provide input images as base64-encoded strings, and the generated image can be returned as a base64-encoded string or uploaded to AWS S3. The tool is built on Ubuntu + NVIDIA CUDA and provides features like built-in checkpoints and VAE models. Users can configure environment variables to upload images to AWS S3 and interact with the RunPod API to generate images. The tool also supports local testing and deployment to Docker hub using Github Actions.

chatgpt-cli

ChatGPT CLI provides a powerful command-line interface for seamless interaction with ChatGPT models via OpenAI and Azure. It features streaming capabilities, extensive configuration options, and supports various modes like streaming, query, and interactive mode. Users can manage thread-based context, sliding window history, and provide custom context from any source. The CLI also offers model and thread listing, advanced configuration options, and supports GPT-4, GPT-3.5-turbo, and Perplexity's models. Installation is available via Homebrew or direct download, and users can configure settings through default values, a config.yaml file, or environment variables.

showdown

Showdown is a Pokémon battle-bot that can play battles on Pokemon Showdown. It can play single battles in generations 3 through 8. The project offers different battle bot implementations such as Safest, Nash-Equilibrium, Team Datasets, and Most Damage. Users can configure the bot using environment variables and run it either without Docker by cloning the repository and installing requirements or with Docker by building the Docker image and running it with an environment variable file. Additionally, users can write their own bot by creating a package in showdown/battle_bots with a module named main.py and implementing a find_best_move function.

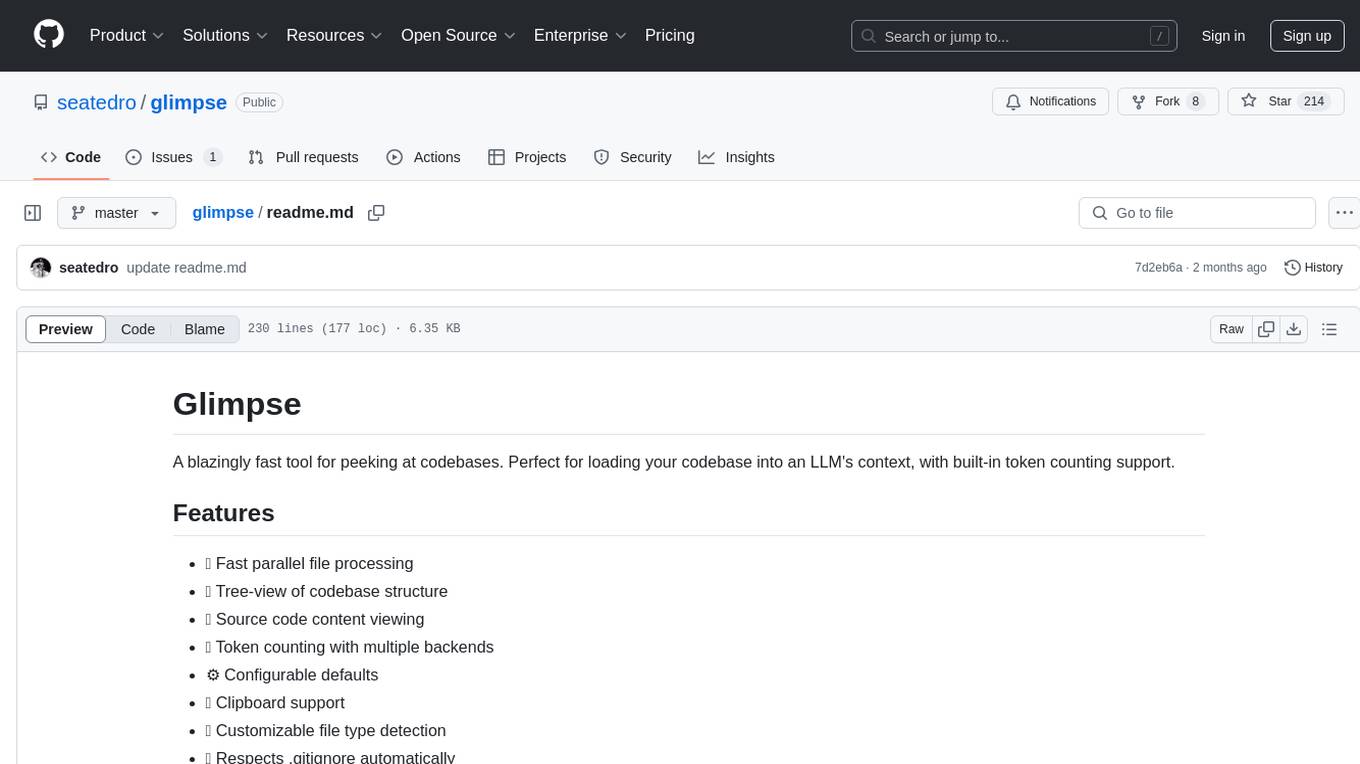

glimpse

Glimpse is a blazingly fast tool for peeking at codebases, offering features like fast parallel file processing, tree-view of codebase structure, source code content viewing, token counting with multiple backends, configurable defaults, clipboard support, customizable file type detection, .gitignore respect, web content processing with Markdown conversion, Git repository support, and URL traversal with configurable depth. It supports token counting using Tiktoken or HuggingFace tokenizer backends, helping estimate context window usage for large language models. Glimpse can process local directories, multiple files, Git repositories, web pages, and convert content to Markdown. It offers various options for customization and configuration, including file type inclusions/exclusions, token counting settings, URL processing settings, and default exclude patterns. Glimpse is suitable for developers and data scientists looking to analyze codebases, estimate token counts, and process web content efficiently.

For similar tasks

aiosmtpd

aiosmtpd is an asyncio-based SMTP server implementation that provides a modern and efficient way to handle SMTP and LMTP protocols in Python 3. It replaces the outdated asyncore and asynchat modules with asyncio for improved asynchronous I/O operations. The project aims to offer a more user-friendly, extendable, and maintainable solution for handling email protocols in Python applications. It is actively maintained by experienced Python developers and offers full documentation for easy integration and usage.

For similar jobs

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

ai-on-gke

This repository contains assets related to AI/ML workloads on Google Kubernetes Engine (GKE). Run optimized AI/ML workloads with Google Kubernetes Engine (GKE) platform orchestration capabilities. A robust AI/ML platform considers the following layers: Infrastructure orchestration that support GPUs and TPUs for training and serving workloads at scale Flexible integration with distributed computing and data processing frameworks Support for multiple teams on the same infrastructure to maximize utilization of resources

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

nvidia_gpu_exporter

Nvidia GPU exporter for prometheus, using `nvidia-smi` binary to gather metrics.

tracecat

Tracecat is an open-source automation platform for security teams. It's designed to be simple but powerful, with a focus on AI features and a practitioner-obsessed UI/UX. Tracecat can be used to automate a variety of tasks, including phishing email investigation, evidence collection, and remediation plan generation.

openinference

OpenInference is a set of conventions and plugins that complement OpenTelemetry to enable tracing of AI applications. It provides a way to capture and analyze the performance and behavior of AI models, including their interactions with other components of the application. OpenInference is designed to be language-agnostic and can be used with any OpenTelemetry-compatible backend. It includes a set of instrumentations for popular machine learning SDKs and frameworks, making it easy to add tracing to your AI applications.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

kong

Kong, or Kong API Gateway, is a cloud-native, platform-agnostic, scalable API Gateway distinguished for its high performance and extensibility via plugins. It also provides advanced AI capabilities with multi-LLM support. By providing functionality for proxying, routing, load balancing, health checking, authentication (and more), Kong serves as the central layer for orchestrating microservices or conventional API traffic with ease. Kong runs natively on Kubernetes thanks to its official Kubernetes Ingress Controller.