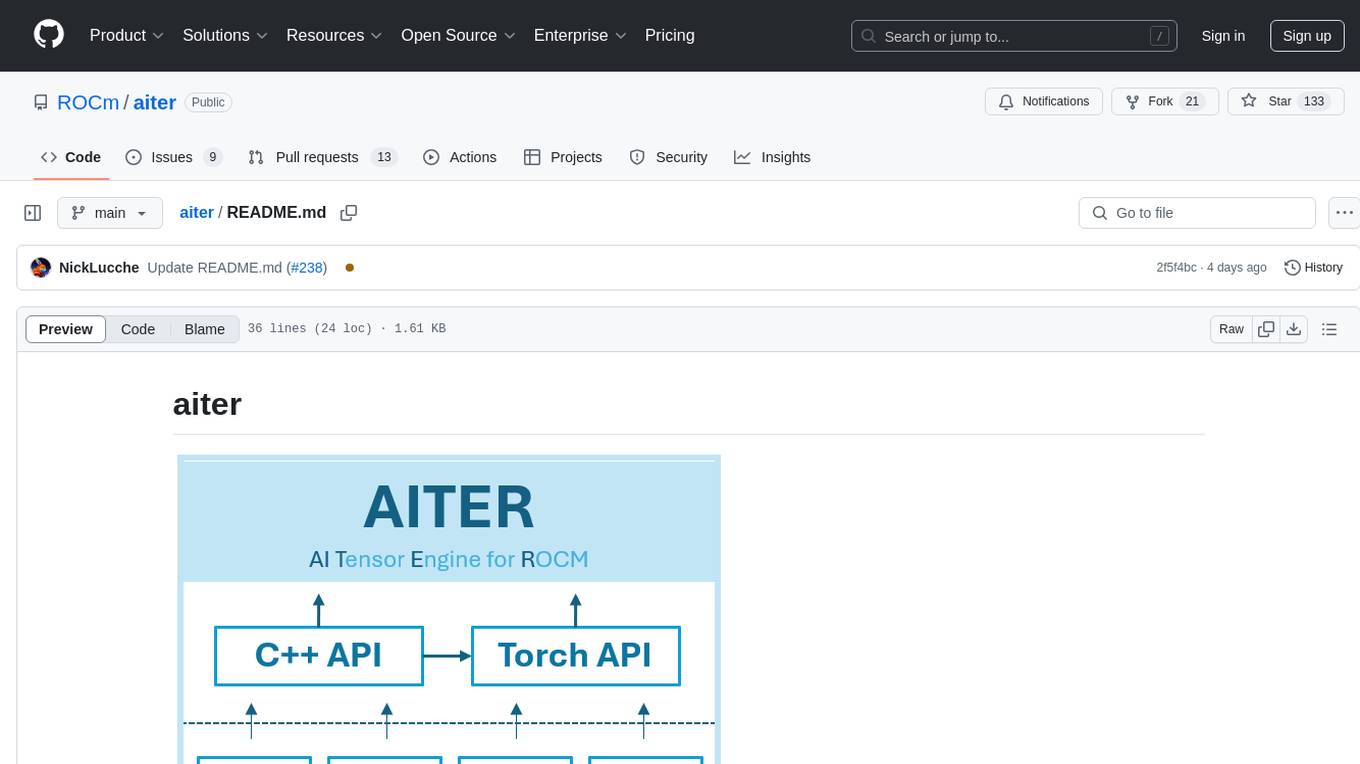

aiter

AI Tensor Engine for ROCm

Stars: 358

AITER is AMD’s centralized repository that supports various high performance AI operators for AI workloads acceleration. It serves as a unified platform for customer operator-level requests, catering to different customer needs. Developers can focus on operators and customers can integrate this collection into their own frameworks. Features include C++ and Python level APIs, kernels from triton/ck/asm, support for inference, training, GEMM, and communication kernels for workarounds in any kernel-framework combination for any architecture limitation.

README:

AITER is AMD’s centralized repository that support various of high performance AI operators for AI workloads acceleration, where a good unified place for all the customer operator-level requests, which can match different customers' needs. Developers can focus on operators, and let the customers integrate this op collection into their own private/public/whatever framework.

Some summary of the features:

- C++ level API

- Python level API

- The underneath kernel could come from triton/ck/asm

- Not just inference kernels, but also training kernels and GEMM+communication kernels—allowing for workarounds in any kernel-framework combination for any architecture limitation.

git clone --recursive https://github.com/ROCm/aiter.git

cd aiter

python3 setup.py develop

If you happen to forget the --recursive during clone, you can use the following command after cd aiter

git submodule sync && git submodule update --init --recursive

AITER supports GPU-initiated communication using the Iris library. This enables high-performance Triton-based communication primitives like reduce-scatter and all-gather.

Installation

Install with Triton communication support:

# Install AITER with Triton communication dependencies

pip install -e .

pip install -r requirements-triton-comms.txtFor more details, see docs/triton_comms.md.

There are number of op test, you can run them with: python3 op_tests/test_layernorm2d.py

| Ops | Description |

|---|---|

| ELEMENT WISE | ops: + - * / |

| SIGMOID | (x) = 1 / (1 + e^-x) |

| AllREDUCE | Reduce + Broadcast |

| KVCACHE | W_K W_V |

| MHA | Multi-Head Attention |

| MLA | Multi-head Latent Attention with KV-Cache layout |

| PA | Paged Attention |

| FusedMoe | Mixture of Experts |

| QUANT | BF16/FP16 -> FP8/INT4 |

| RMSNORM | root mean square |

| LAYERNORM | x = (x - u) / (σ2 + ϵ) e*0.5 |

| ROPE | Rotary Position Embedding |

| GEMM | D=αAβB+C |

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for aiter

Similar Open Source Tools

aiter

AITER is AMD’s centralized repository that supports various high performance AI operators for AI workloads acceleration. It serves as a unified platform for customer operator-level requests, catering to different customer needs. Developers can focus on operators and customers can integrate this collection into their own frameworks. Features include C++ and Python level APIs, kernels from triton/ck/asm, support for inference, training, GEMM, and communication kernels for workarounds in any kernel-framework combination for any architecture limitation.

thinc

Thinc is a lightweight deep learning library that offers an elegant, type-checked, functional-programming API for composing models, with support for layers defined in other frameworks such as PyTorch, TensorFlow and MXNet. You can use Thinc as an interface layer, a standalone toolkit or a flexible way to develop new models.

noether

Noether is Emmi AI's open software framework for Engineering AI. It is built on transformer building blocks, delivering the full engineering stack for building, training, and operating industrial simulation models across engineering verticals. The framework eliminates the need for component re-engineering or an in-house deep learning team. Noether features a modular transformer architecture optimized for physical systems, hardware agnostic execution across CPU, MPS, and NVIDIA GPUs, industrial-grade design for high-fidelity simulations, and built-in support for Multi-GPU and SLURM cluster environments.

keras-llm-robot

The Keras-llm-robot Web UI project is an open-source tool designed for offline deployment and testing of various open-source models from the Hugging Face website. It allows users to combine multiple models through configuration to achieve functionalities like multimodal, RAG, Agent, and more. The project consists of three main interfaces: chat interface for language models, configuration interface for loading models, and tools & agent interface for auxiliary models. Users can interact with the language model through text, voice, and image inputs, and the tool supports features like model loading, quantization, fine-tuning, role-playing, code interpretation, speech recognition, image recognition, network search engine, and function calling.

helix-db

HelixDB is a database designed specifically for AI applications, providing a single platform to manage all components needed for AI applications. It supports graph + vector data model and also KV, documents, and relational data. Key features include built-in tools for MCP, embeddings, knowledge graphs, RAG, security, logical isolation, and ultra-low latency. Users can interact with HelixDB using the Helix CLI tool and SDKs in TypeScript and Python. The roadmap includes features like organizational auth, server code improvements, 3rd party integrations, educational content, and binary quantisation for better performance. Long term projects involve developing in-house tools for knowledge graph ingestion, graph-vector storage engine, and network protocol & serdes libraries.

atropos

Atropos is a robust and scalable framework for Reinforcement Learning Environments with Large Language Models (LLMs). It provides a flexible platform to accelerate LLM-based RL research across diverse interactive settings. Atropos supports multi-turn and asynchronous RL interactions, integrates with various inference APIs, offers a standardized training interface for experimenting with different RL algorithms, and allows for easy scalability by launching more environment instances. The framework manages diverse environment types concurrently for heterogeneous, multi-modal training.

clai

Clai is a command line context-feeder for AI tasks, supporting MCP client, vendor agnosticism, conversations, rate limit circumvention, profiles, and Unix-like functionality. Users can easily combine and tweak features for diverse use cases. Supported vendors include OpenAI, Anthropic, Mistral, Deepseek, Novita AI, Ollama, and Inception. Users need API keys for model access. Installation via 'go install' or setup script. 'clai help' provides guidance on usage. Glow can be installed for formatted markdown output.

AIOS

AIOS, a Large Language Model (LLM) Agent operating system, embeds large language model into Operating Systems (OS) as the brain of the OS, enabling an operating system "with soul" -- an important step towards AGI. AIOS is designed to optimize resource allocation, facilitate context switch across agents, enable concurrent execution of agents, provide tool service for agents, maintain access control for agents, and provide a rich set of toolkits for LLM Agent developers.

FlexFlow

FlexFlow Serve is an open-source compiler and distributed system for **low latency**, **high performance** LLM serving. FlexFlow Serve outperforms existing systems by 1.3-2.0x for single-node, multi-GPU inference and by 1.4-2.4x for multi-node, multi-GPU inference.

BentoML

BentoML is an open-source model serving library for building performant and scalable AI applications with Python. It comes with everything you need for serving optimization, model packaging, and production deployment.

open-chatgpt

Open-ChatGPT is an open-source library that enables users to train a hyper-personalized ChatGPT-like AI model using their own data with minimal computational resources. It provides an end-to-end training framework for ChatGPT-like models, supporting distributed training and offloading for extremely large models. The project implements RLHF (Reinforcement Learning with Human Feedback) powered by transformer library and DeepSpeed, allowing users to create high-quality ChatGPT-style models. Open-ChatGPT is designed to be user-friendly and efficient, aiming to empower users to develop their own conversational AI models easily.

AgentBench

AgentBench is a benchmark designed to evaluate Large Language Models (LLMs) as autonomous agents in various environments. It includes 8 distinct environments such as Operating System, Database, Knowledge Graph, Digital Card Game, and Lateral Thinking Puzzles. The tool provides a comprehensive evaluation of LLMs' ability to operate as agents by offering Dev and Test sets for each environment. Users can quickly start using the tool by following the provided steps, configuring the agent, starting task servers, and assigning tasks. AgentBench aims to bridge the gap between LLMs' proficiency as agents and their practical usability.

TokenFormer

TokenFormer is a fully attention-based neural network architecture that leverages tokenized model parameters to enhance architectural flexibility. It aims to maximize the flexibility of neural networks by unifying token-token and token-parameter interactions through the attention mechanism. The architecture allows for incremental model scaling and has shown promising results in language modeling and visual modeling tasks. The codebase is clean, concise, easily readable, state-of-the-art, and relies on minimal dependencies.

axoned

Axone is a public dPoS layer 1 designed for connecting, sharing, and monetizing resources in the AI stack. It is an open network for collaborative AI workflow management compatible with any data, model, or infrastructure, allowing sharing of data, algorithms, storage, compute, APIs, both on-chain and off-chain. The 'axoned' node of the AXONE network is built on Cosmos SDK & Tendermint consensus, enabling companies & individuals to define on-chain rules, share off-chain resources, and create new applications. Validators secure the network by maintaining uptime and staking $AXONE for rewards. The blockchain supports various platforms and follows Semantic Versioning 2.0.0. A docker image is available for quick start, with documentation on querying networks, creating wallets, starting nodes, and joining networks. Development involves Go and Cosmos SDK, with smart contracts deployed on the AXONE blockchain. The project provides a Makefile for building, installing, linting, and testing. Community involvement is encouraged through Discord, open issues, and pull requests.

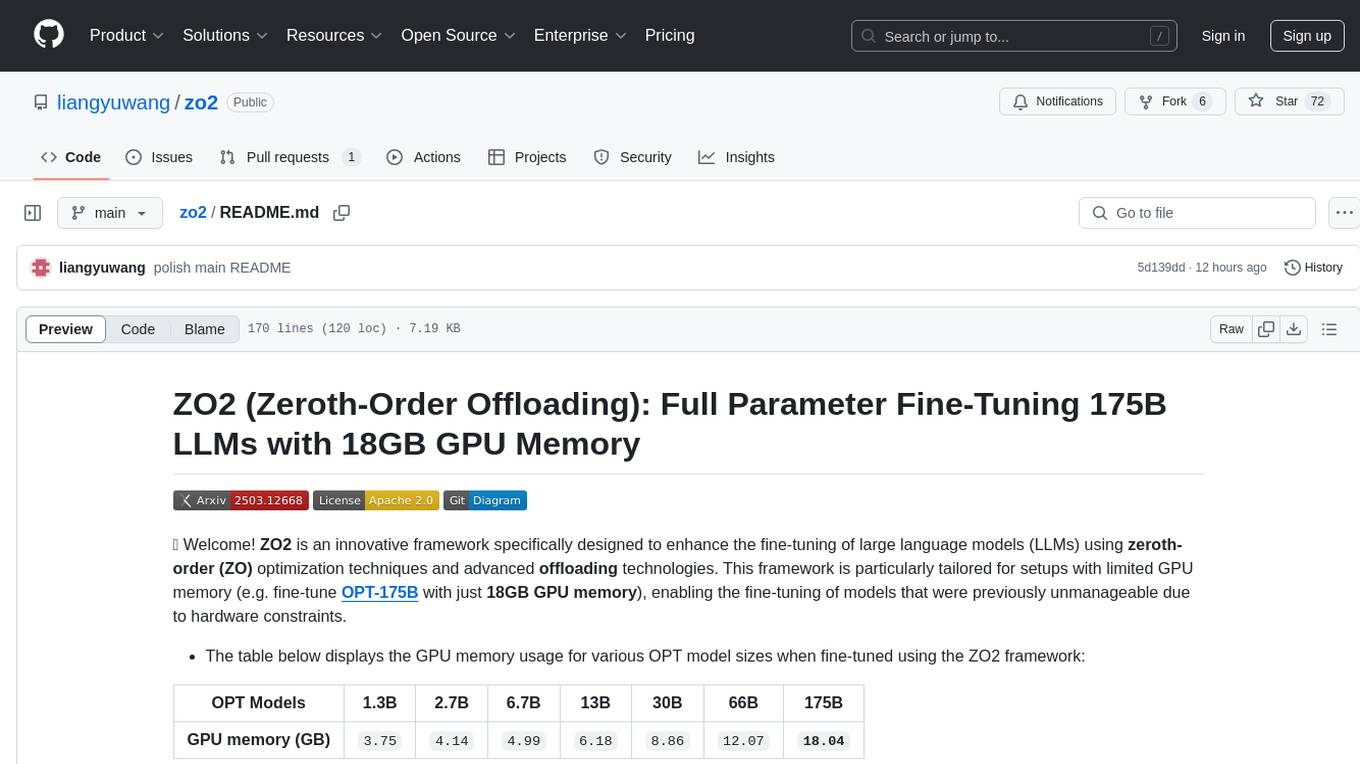

zo2

ZO2 (Zeroth-Order Offloading) is an innovative framework designed to enhance the fine-tuning of large language models (LLMs) using zeroth-order (ZO) optimization techniques and advanced offloading technologies. It is tailored for setups with limited GPU memory, enabling the fine-tuning of models with over 175 billion parameters on single GPUs with as little as 18GB of memory. ZO2 optimizes CPU offloading, incorporates dynamic scheduling, and has the capability to handle very large models efficiently without extra time costs or accuracy losses.

RainbowGPT

RainbowGPT is a versatile tool that offers a range of functionalities, including Stock Analysis for financial decision-making, MySQL Management for database navigation, and integration of AI technologies like GPT-4 and ChatGlm3. It provides a user-friendly interface suitable for all skill levels, ensuring seamless information flow and continuous expansion of emerging technologies. The tool enhances adaptability, creativity, and insight, making it a valuable asset for various projects and tasks.

For similar tasks

genai-os

Kuwa GenAI OS is an open, free, secure, and privacy-focused Generative-AI Operating System. It provides a multi-lingual turnkey solution for GenAI development and deployment on Linux and Windows. Users can enjoy features such as concurrent multi-chat, quoting, full prompt-list import/export/share, and flexible orchestration of prompts, RAGs, bots, models, and hardware/GPUs. The system supports various environments from virtual hosts to cloud, and it is open source, allowing developers to contribute and customize according to their needs.

Neurite

Neurite is an innovative project that combines chaos theory and graph theory to create a digital interface that explores hidden patterns and connections for creative thinking. It offers a unique workspace blending fractals with mind mapping techniques, allowing users to navigate the Mandelbrot set in real-time. Nodes in Neurite represent various content types like text, images, videos, code, and AI agents, enabling users to create personalized microcosms of thoughts and inspirations. The tool supports synchronized knowledge management through bi-directional synchronization between mind-mapping and text-based hyperlinking. Neurite also features FractalGPT for modular conversation with AI, local AI capabilities for multi-agent chat networks, and a Neural API for executing code and sequencing animations. The project is actively developed with plans for deeper fractal zoom, advanced control over node placement, and experimental features.

fast-stable-diffusion

Fast-stable-diffusion is a project that offers notebooks for RunPod, Paperspace, and Colab Pro adaptations with AUTOMATIC1111 Webui and Dreambooth. It provides tools for running and implementing Dreambooth, a stable diffusion project. The project includes implementations by XavierXiao and is sponsored by Runpod, Paperspace, and Colab Pro.

big-AGI

big-AGI is an AI suite designed for professionals seeking function, form, simplicity, and speed. It offers best-in-class Chats, Beams, and Calls with AI personas, visualizations, coding, drawing, side-by-side chatting, and more, all wrapped in a polished UX. The tool is powered by the latest models from 12 vendors and open-source servers, providing users with advanced AI capabilities and a seamless user experience. With continuous updates and enhancements, big-AGI aims to stay ahead of the curve in the AI landscape, catering to the needs of both developers and AI enthusiasts.

generative-ai

This repository contains codes related to Generative AI as per YouTube video. It includes various notebooks and files for different days covering topics like map reduce, text to SQL, LLM parameters, tagging, and Kaggle competition. The repository also includes resources like PDF files and databases for different projects related to Generative AI.

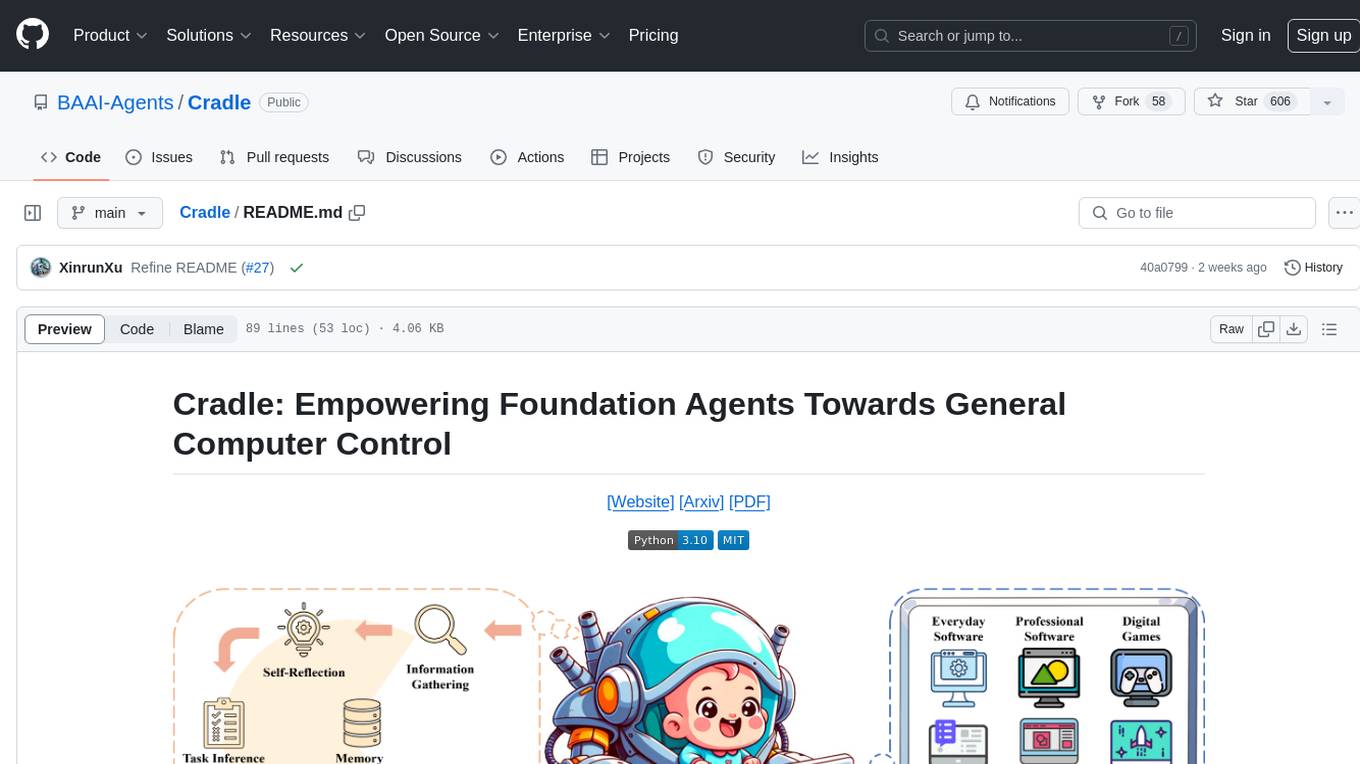

Cradle

The Cradle project is a framework designed for General Computer Control (GCC), empowering foundation agents to excel in various computer tasks through strong reasoning abilities, self-improvement, and skill curation. It provides a standardized environment with minimal requirements, constantly evolving to support more games and software. The repository includes released versions, publications, and relevant assets.

azure-functions-openai-extension

Azure Functions OpenAI Extension is a project that adds support for OpenAI LLM (GPT-3.5-turbo, GPT-4) bindings in Azure Functions. It provides NuGet packages for various functionalities like text completions, chat completions, assistants, embeddings generators, and semantic search. The project requires .NET 6 SDK or greater, Azure Functions Core Tools v4.x, and specific settings in Azure Function or local settings for development. It offers features like text completions, chat completion, assistants with custom skills, embeddings generators for text relatedness, and semantic search using vector databases. The project also includes examples in C# and Python for different functionalities.

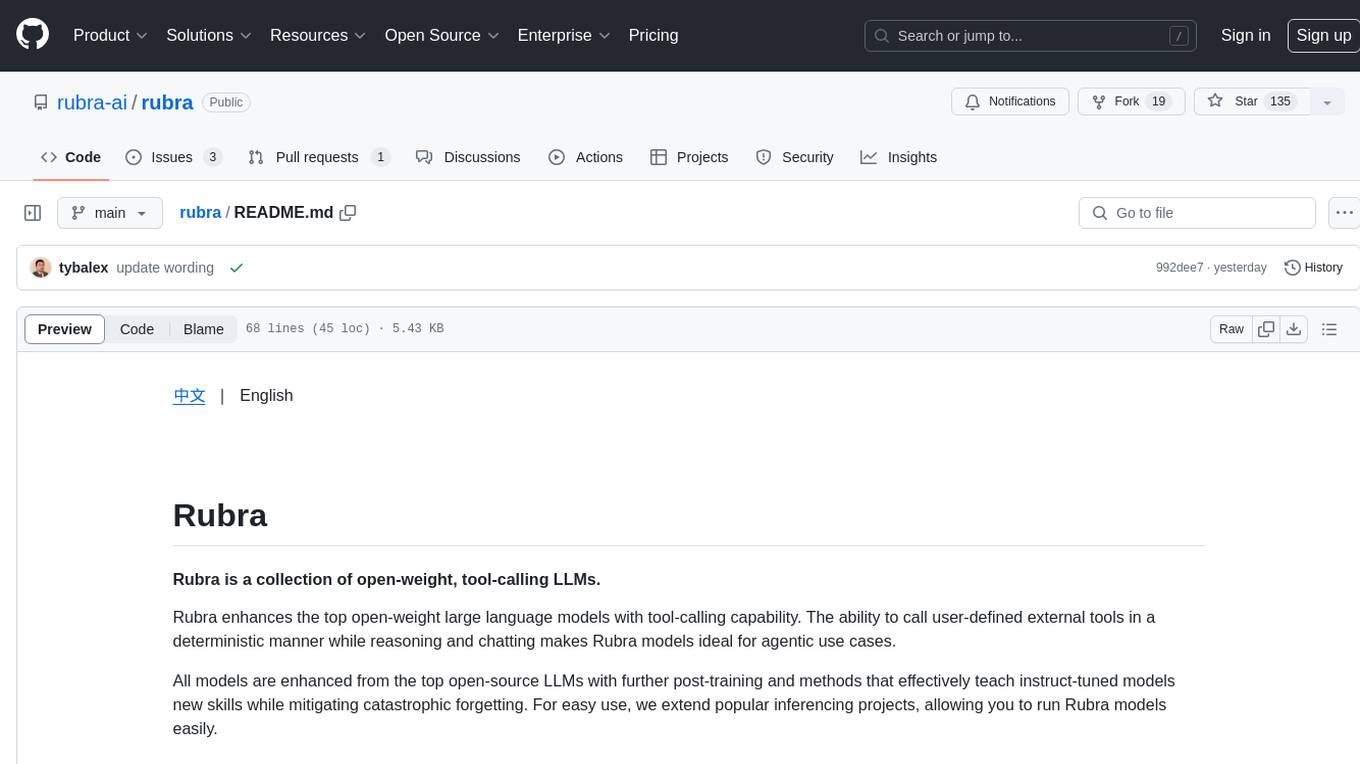

rubra

Rubra is a collection of open-weight large language models enhanced with tool-calling capability. It allows users to call user-defined external tools in a deterministic manner while reasoning and chatting, making it ideal for agentic use cases. The models are further post-trained to teach instruct-tuned models new skills and mitigate catastrophic forgetting. Rubra extends popular inferencing projects for easy use, enabling users to run the models easily.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.