Memori

SQL Native Memory Layer for LLMs, AI Agents & Multi-Agent Systems

Stars: 12086

Memori is a memory fabric designed for enterprise AI that seamlessly integrates into existing software and infrastructure. It is agnostic to LLM, datastore, and framework, providing support for major foundational models and databases. With features like vectorized memories, in-memory semantic search, and a knowledge graph, Memori simplifies the process of attributing LLM interactions and managing sessions. It offers Advanced Augmentation for enhancing memories at different levels and supports various platforms, frameworks, database integrations, and datastores. Memori is designed to reduce development overhead and provide efficient memory management for AI applications.

README:

The memory fabric for enterprise AI

Memori plugs into the software and infrastructure you already use. It is LLM, datastore and framework agnostic and seamlessly integrates into the architecture you've already designed.

Install Memori:

    pip install memori

A quickstart example using SQLite and OpenAI:

    import os
    import sqlite3

    from memori import Memori
    from openai import OpenAI


    def get_sqlite_connection():
        return sqlite3.connect("memori.db")


    client = OpenAI(api_key=os.getenv("OPENAI_API_KEY"))

    memori = Memori(conn=get_sqlite_connection).llm.register(client)
    memori.attribution(entity_id="123456", process_id="test-ai-agent")
    memori.config.storage.build()

    response = client.chat.completions.create(
        model="gpt-4.1-mini",
        messages=[
            {"role": "user", "content": "My favorite color is blue."}
        ]
    )
    print(response.choices[0].message.content + "\n")

    # Advanced Augmentation runs asynchronously to efficiently
    # create memories. For this example, a short-lived command
    # line program, we need to wait for it to finish.
    memori.augmentation.wait()

    # Memori stored that your favorite color is blue in SQLite.
    # Now reset everything so there's no prior context.
    client = OpenAI(api_key=os.getenv("OPENAI_API_KEY"))

    memori = Memori(conn=get_sqlite_connection).llm.register(client)
    memori.attribution(entity_id="123456", process_id="test-ai-agent")

    response = client.chat.completions.create(
        model="gpt-4.1-mini",
        messages=[
            {"role": "user", "content": "What's my favorite color?"}
        ]
    )
    print(response.choices[0].message.content + "\n")

To explore the stored memories, query the SQLite database directly:

    /bin/echo "select * from memori_conversation_message" | /usr/bin/sqlite3 memori.db
    /bin/echo "select * from memori_entity_fact" | /usr/bin/sqlite3 memori.db
    /bin/echo "select * from memori_process_attribute" | /usr/bin/sqlite3 memori.db
    /bin/echo "select * from memori_knowledge_graph" | /usr/bin/sqlite3 memori.db

- Significant performance improvements using Advanced Augmentation.

- Threaded, zero latency replacement for the v2 extraction agent.

- LLM agnostic with support for all of the major foundational models.

- Datastore agnostic with support for all major databases and document stores.

- Adapter/driver architecture to make contributions easier.

- Vectorized memories and in-memory semantic search for more accurate context.

- Third normal form schema including storage of semantic triples for a knowledge graph.

- Reduced development overhead to a single line of code.

- Automatic schema migrations.
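The schema bullet above mentions semantic triples stored in third normal form. As a rough illustration only, subject/predicate/object triples can be normalized in SQLite so that each distinct term is stored once and triples refer to terms by id. The table names, column names and helper functions below are invented for this sketch and are not Memori's actual schema.

```python
import sqlite3

# Hypothetical schema for illustration; not Memori's real tables.
SCHEMA = """
CREATE TABLE term (
    id   INTEGER PRIMARY KEY,
    text TEXT NOT NULL UNIQUE
);
CREATE TABLE triple (
    subject_id   INTEGER NOT NULL REFERENCES term(id),
    predicate_id INTEGER NOT NULL REFERENCES term(id),
    object_id    INTEGER NOT NULL REFERENCES term(id),
    PRIMARY KEY (subject_id, predicate_id, object_id)
);
"""


def term_id(conn, text):
    """Return the id for a term, inserting it on first use."""
    conn.execute("INSERT OR IGNORE INTO term (text) VALUES (?)", (text,))
    row = conn.execute("SELECT id FROM term WHERE text = ?", (text,)).fetchone()
    return row[0]


def add_triple(conn, subject, predicate, obj):
    """Store one (subject, predicate, object) fact; duplicates are ignored."""
    ids = tuple(term_id(conn, t) for t in (subject, predicate, obj))
    conn.execute("INSERT OR IGNORE INTO triple VALUES (?, ?, ?)", ids)


def facts_about(conn, subject):
    """Return (predicate, object) pairs recorded for a subject."""
    rows = conn.execute(
        """
        SELECT p.text, o.text
        FROM triple t
        JOIN term s ON s.id = t.subject_id
        JOIN term p ON p.id = t.predicate_id
        JOIN term o ON o.id = t.object_id
        WHERE s.text = ?
        ORDER BY p.text, o.text
        """,
        (subject,),
    )
    return rows.fetchall()


conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
add_triple(conn, "user:123456", "favorite_color", "blue")
add_triple(conn, "user:123456", "favorite_color", "blue")  # duplicate, ignored
add_triple(conn, "user:123456", "works_at", "Acme")  # made-up sample fact
print(facts_about(conn, "user:123456"))
```

Keeping terms in their own table means a renamed entity is updated in one row, and the composite primary key on the triple table prevents the same fact from being stored twice.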

To get the most out of Memori, you want to attribute your LLM interactions to an entity (think person, place or thing; like a user) and a process (think your agent, LLM interaction or program).

If you do not provide any attribution, Memori cannot make memories for you.

    mem.attribution(entity_id="12345", process_id="my-ai-bot")

Memori uses sessions to group your LLM interactions together. For example, if you have an agent that executes multiple steps, you want those to be recorded in a single session.

By default, Memori handles setting the session for you but you can start a new session or override the session by executing the following:

    mem.new_session()

or

    session_id = mem.config.session_id

    # ...

    mem.set_session(session_id)

To make sure everything is installed in the most efficient manner, we suggest you execute the following once:

    python -m memori setup

This step is not necessary but will prep your environment for faster execution. If you do not perform this step, it will be executed the first time Memori is run, which will cause the first execution (and only the first one) to be a little slower.

- Run this command once, via CI/CD or anytime you update Memori.

      Memori(conn=db_session_factory).config.storage.build()

- Instantiate Memori with the connection factory.

      from openai import OpenAI
      from memori import Memori

      client = OpenAI(...)
      mem = Memori(conn=db_session_factory).llm.register(client)

- Anthropic

- Bedrock

- Gemini

- Grok (xAI)

- OpenAI (Chat Completions & Responses API)

(unstreamed, streamed, synchronous and asynchronous)

- Agno

- LangChain

- Nebius AI Studio

- DB API 2.0 - Direct support for any Python database driver that implements the PEP 249 Database API Specification v2.0. This includes drivers like psycopg, pymysql, MySQLdb, cx_Oracle, oracledb, and sqlite3.

- Django - Native integration with Django's ORM and database layer

- SQLAlchemy

- CockroachDB - Full example with setup instructions

- MariaDB

- MongoDB - Full example with setup instructions

- MySQL

- OceanBase - Full example with setup instructions

- Neon - Full example with setup instructions

- Oracle

- PostgreSQL - Full example with setup instructions

- SQLite - Full example with setup instructions

- Supabase
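Because the DB API 2.0 integration listed above relies on PEP 249, the connection handed to Memori can come from any compliant driver. The snippet below is a small sketch using only the standard library: it defines a zero-argument connection factory in the style of the quickstart and checks the module-level attributes that PEP 249 requires of a driver. The helper name `looks_like_dbapi2` is an assumption made for illustration, not something Memori provides.

```python
import sqlite3


def get_sqlite_connection():
    # In-memory database so the sketch leaves no file behind.
    return sqlite3.connect(":memory:")


def looks_like_dbapi2(module):
    """Check the module globals that PEP 249 requires of a driver."""
    return (
        getattr(module, "apilevel", None) == "2.0"
        and hasattr(module, "threadsafety")
        and hasattr(module, "paramstyle")
        and callable(getattr(module, "connect", None))
    )


print(looks_like_dbapi2(sqlite3))

# Exercise the connection through the standard cursor interface.
conn = get_sqlite_connection()
cur = conn.cursor()
cur.execute("CREATE TABLE note (body TEXT)")
cur.execute("INSERT INTO note (body) VALUES (?)", ("hello",))
conn.commit()
cur.execute("SELECT body FROM note")
rows = cur.fetchall()
conn.close()
print(rows)
```

Note that the quickstart passes the factory function itself as `conn=`, not an already opened connection.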

For more examples and demos, check out the Memori Cookbook.

Memories are tracked at several different levels:

- entity: think person, place, or thing; like a user

- process: think your agent, LLM interaction or program

- session: the current interactions between the entity, process and the LLM
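One way to picture these three levels is as a composite key under which memories are filed. The sketch below is a hypothetical in-process model: the `MemoryStore` class is invented here and only borrows the method names `attribution` and `new_session` from the examples in this README. It also mirrors the rule that nothing is recorded without attribution.

```python
import uuid
from collections import defaultdict


class MemoryStore:
    """Hypothetical stand-in that files notes by entity, process and session."""

    def __init__(self):
        self.entity_id = None
        self.process_id = None
        self.session_id = str(uuid.uuid4())
        self._notes = defaultdict(list)

    def attribution(self, entity_id, process_id):
        self.entity_id = entity_id
        self.process_id = process_id

    def new_session(self):
        self.session_id = str(uuid.uuid4())
        return self.session_id

    def record(self, text):
        """Store a note; returns False when attribution is missing."""
        if self.entity_id is None or self.process_id is None:
            return False
        key = (self.entity_id, self.process_id, self.session_id)
        self._notes[key].append(text)
        return True

    def for_entity(self, entity_id):
        """All notes for an entity, across processes and sessions."""
        found = []
        for (entity, _process, _session), notes in self._notes.items():
            if entity == entity_id:
                found.extend(notes)
        return found


store = MemoryStore()
print(store.record("dropped"))  # False: no attribution yet
store.attribution(entity_id="12345", process_id="my-ai-bot")
store.record("favorite color is blue")
store.new_session()
store.record("prefers short answers")
print(store.for_entity("12345"))
```

The two notes land under different session keys but are both reachable through the entity, which is the point of tracking memories at more than one level.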

Memori's Advanced Augmentation enhances memories at each of these levels with:

- attributes

- events

- facts

- people

- preferences

- relationships

- rules

- skills

Memori knows who your user is, what tasks your agent handles and creates unparalleled context between the two. Augmentation occurs in the background incurring no latency.
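The background behaviour described above can be pictured with a plain worker thread and a queue. This is a generic sketch of the pattern and not Memori's implementation; `BackgroundAugmenter`, `submit` and the placeholder `extract` step are names made up for the example. Its `wait()` method plays the role that `memori.augmentation.wait()` has in a short-lived script: block until queued work is done.

```python
import queue
import threading


class BackgroundAugmenter:
    """Hypothetical worker that processes messages off the caller's thread."""

    def __init__(self, extract):
        self._extract = extract
        self._queue = queue.Queue()
        self.results = []
        self.errors = []
        self._worker = threading.Thread(target=self._run, daemon=True)
        self._worker.start()

    def _run(self):
        while True:
            message = self._queue.get()
            try:
                self.results.append(self._extract(message))
            except Exception as exc:  # keep the worker alive on bad input
                self.errors.append(exc)
            finally:
                self._queue.task_done()

    def submit(self, message):
        """Return immediately; the message is handled in the background."""
        self._queue.put(message)

    def wait(self):
        """Block until every submitted message has been processed."""
        self._queue.join()


def extract(message):
    # Placeholder for a real extraction step (for example, an LLM call).
    return message.upper()


augmenter = BackgroundAugmenter(extract)
augmenter.submit("my favorite color is blue")
augmenter.wait()
print(augmenter.results)
```

A long-running service would rarely need `wait()`; it matters mainly for scripts that would otherwise exit before the daemon thread finishes.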

By default, Memori Advanced Augmentation is available without an account but rate limited. When you need increased limits, sign up for Memori Advanced Augmentation or execute the following:

    python -m memori sign-up <email_address>

Memori Advanced Augmentation is always free for developers!

Once you've obtained an API key, simply set the following environment variable:

    export MEMORI_API_KEY=[api_key]

At any time, you can check your quota by executing the following:

    python -m memori quota

Or by checking your account at https://memorilabs.ai/. If you have reached your IP address quota, sign up and get an API key for increased limits.

If your API key exceeds its quota limits we will email you and let you know.
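In application code it can help to check early whether the key is present. A minimal sketch, assuming only that the variable is named `MEMORI_API_KEY` as in the export line above; the helper `augmentation_mode` and the strings it returns are invented for this example.

```python
import os


def augmentation_mode(environ=os.environ):
    """Describe which limits apply based on MEMORI_API_KEY (hypothetical helper)."""
    key = environ.get("MEMORI_API_KEY", "").strip()
    return "api-key" if key else "anonymous (rate limited)"


# Passing a dict makes the check easy to exercise without touching the real environment.
print(augmentation_mode({}))
print(augmentation_mode({"MEMORI_API_KEY": "example-key"}))
```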

To use the Memori CLI, execute the following from the command line:

    python -m memori

This will display a menu of the available options. For more information about what you can do with the Memori CLI, please reference Command Line Interface.

We welcome contributions from the community! Please see our Contributing Guidelines for details on:

- Setting up your development environment

- Code style and standards

- Submitting pull requests

- Reporting issues

- Documentation: https://memorilabs.ai/docs

- Discord: https://discord.gg/abD4eGym6v

- Issues: GitHub Issues

Apache 2.0 - see LICENSE


Alternative AI tools for Memori

Similar Open Source Tools


tinystruct

Tinystruct is a simple Java framework designed for easy development with better performance. It offers a modern approach with features like CLI and web integration, built-in lightweight HTTP server, minimal configuration philosophy, annotation-based routing, and performance-first architecture. Developers can focus on real business logic without dealing with unnecessary complexities, making it transparent, predictable, and extensible.

deep-research

Deep Research is a lightning-fast tool that uses powerful AI models to generate comprehensive research reports in just a few minutes. It leverages advanced 'Thinking' and 'Task' models, combined with an internet connection, to provide fast and insightful analysis on various topics. The tool ensures privacy by processing and storing all data locally. It supports multi-platform deployment, offers support for various large language models, web search functionality, knowledge graph generation, research history preservation, local and server API support, PWA technology, multi-key payload support, multi-language support, and is built with modern technologies like Next.js and Shadcn UI. Deep Research is open-source under the MIT License.

langmanus

LangManus is a community-driven AI automation framework that combines language models with specialized tools for tasks like web search, crawling, and Python code execution. It implements a hierarchical multi-agent system with agents like Coordinator, Planner, Supervisor, Researcher, Coder, Browser, and Reporter. The framework supports LLM integration, search and retrieval tools, Python integration, workflow management, and visualization. LangManus aims to give back to the open-source community and welcomes contributions in various forms.

exospherehost

Exosphere is an open source infrastructure designed to run AI agents at scale for large data and long running flows. It allows developers to define plug and playable nodes that can be run on a reliable backbone in the form of a workflow, with features like dynamic state creation at runtime, infinite parallel agents, persistent state management, and failure handling. This enables the deployment of production agents that can scale beautifully to build robust autonomous AI workflows.

Biomni

Biomni is a general-purpose biomedical AI agent designed to autonomously execute a wide range of research tasks across diverse biomedical subfields. By integrating cutting-edge large language model (LLM) reasoning with retrieval-augmented planning and code-based execution, Biomni helps scientists dramatically enhance research productivity and generate testable hypotheses.

testzeus-hercules

Hercules is the world’s first open-source testing agent designed to handle the toughest testing tasks for modern web applications. It turns simple Gherkin steps into fully automated end-to-end tests, making testing simple, reliable, and efficient. Hercules adapts to various platforms like Salesforce and is suitable for CI/CD pipelines. It aims to democratize and disrupt test automation, making top-tier testing accessible to everyone. The tool is transparent, reliable, and community-driven, empowering teams to deliver better software. Hercules offers multiple ways to get started, including using PyPI package, Docker, or building and running from source code. It supports various AI models, provides detailed installation and usage instructions, and integrates with Nuclei for security testing and WCAG for accessibility testing. The tool is production-ready, open core, and open source, with plans for enhanced LLM support, advanced tooling, improved DOM distillation, community contributions, extensive documentation, and a bounty program.

arbigent

Arbigent (Arbiter-Agent) is an AI agent testing framework designed to make AI agent testing practical for modern applications. It addresses challenges faced by traditional UI testing frameworks and AI agents by breaking down complex tasks into smaller, dependent scenarios. The framework is customizable for various AI providers, operating systems, and form factors, empowering users with extensive customization capabilities. Arbigent offers an intuitive UI for scenario creation and a powerful code interface for seamless test execution. It supports multiple form factors, optimizes UI for AI interaction, and is cost-effective by utilizing models like GPT-4o mini. With a flexible code interface and open-source nature, Arbigent aims to revolutionize AI agent testing in modern applications.

OpenAdapt

OpenAdapt is an open-source software adapter between Large Multimodal Models (LMMs) and traditional desktop and web Graphical User Interfaces (GUIs). It aims to automate repetitive GUI workflows by leveraging the power of LMMs. OpenAdapt records user input and screenshots, converts them into tokenized format, and generates synthetic input via transformer model completions. It also analyzes recordings to generate task trees and replay synthetic input to complete tasks. OpenAdapt is model agnostic and generates prompts automatically by learning from human demonstration, ensuring that agents are grounded in existing processes and mitigating hallucinations. It works with all types of desktop GUIs, including virtualized and web, and is open source under the MIT license.

RainbowGPT

RainbowGPT is a versatile tool that offers a range of functionalities, including Stock Analysis for financial decision-making, MySQL Management for database navigation, and integration of AI technologies like GPT-4 and ChatGlm3. It provides a user-friendly interface suitable for all skill levels, ensuring seamless information flow and continuous expansion of emerging technologies. The tool enhances adaptability, creativity, and insight, making it a valuable asset for various projects and tasks.

TaskWeaver

TaskWeaver is a code-first agent framework designed for planning and executing data analytics tasks. It interprets user requests through code snippets, coordinates various plugins to execute tasks in a stateful manner, and preserves both chat history and code execution history. It supports rich data structures, customized algorithms, domain-specific knowledge incorporation, stateful execution, code verification, easy debugging, security considerations, and easy extension. TaskWeaver is easy to use with CLI and WebUI support, and it can be integrated as a library. It offers detailed documentation, demo examples, and citation guidelines.

honcho

Honcho is a platform for creating personalized AI agents and LLM powered applications for end users. The repository is a monorepo containing the server/API for managing database interactions and storing application state, along with a Python SDK. It utilizes FastAPI for user context management and Poetry for dependency management. The API can be run using Docker or manually by setting environment variables. The client SDK can be installed using pip or Poetry. The project is open source and welcomes contributions, following a fork and PR workflow. Honcho is licensed under the AGPL-3.0 License.

aiconfig

AIConfig is a framework that makes it easy to build generative AI applications for production. It manages generative AI prompts, models and model parameters as JSON-serializable configs that can be version controlled, evaluated, monitored and opened in a local editor for rapid prototyping. It allows you to store and iterate on generative AI behavior separately from your application code, offering a streamlined AI development workflow.

labo

LABO is a time series forecasting and analysis framework that integrates pre-trained and fine-tuned LLMs with multi-domain agent-based systems. It allows users to create and tune agents easily for various scenarios, such as stock market trend prediction and web public opinion analysis. LABO requires a specific runtime environment setup, including system requirements, Python environment, dependency installations, and configurations. Users can fine-tune their own models using LABO's Low-Rank Adaptation (LoRA) for computational efficiency and continuous model updates. Additionally, LABO provides a Python library for building model training pipelines and customizing agents for specific tasks.

BentoML

BentoML is an open-source model serving library for building performant and scalable AI applications with Python. It comes with everything you need for serving optimization, model packaging, and production deployment.

patchwork

PatchWork is an open-source framework designed for automating development tasks using large language models. It enables users to automate workflows such as PR reviews, bug fixing, security patching, and more through a self-hosted CLI agent and preferred LLMs. The framework consists of reusable atomic actions called Steps, customizable LLM prompts known as Prompt Templates, and LLM-assisted automations called Patchflows. Users can run Patchflows locally in their CLI/IDE or as part of CI/CD pipelines. PatchWork offers predefined patchflows like AutoFix, PRReview, GenerateREADME, DependencyUpgrade, and ResolveIssue, with the flexibility to create custom patchflows. Prompt templates are used to pass queries to LLMs and can be customized. Contributions to new patchflows, steps, and the core framework are encouraged, with chat assistants available to aid in the process. The roadmap includes expanding the patchflow library, introducing a debugger and validation module, supporting large-scale code embeddings, parallelization, fine-tuned models, and an open-source GUI. PatchWork is licensed under AGPL-3.0 terms, while custom patchflows and steps can be shared using the Apache-2.0 licensed patchwork template repository.

For similar tasks


claude-memory

Claude Memory is a Chrome extension that enhances interactions with Claude by storing and retrieving important information from conversations, making interactions personalized and context-aware. It allows users to easily manage and organize stored information, with seamless integration with the Claude AI interface.

swarmgo

SwarmGo is a Go package designed to create AI agents capable of interacting, coordinating, and executing tasks. It focuses on lightweight agent coordination and execution, offering powerful primitives like Agents and handoffs. SwarmGo enables building scalable solutions with rich dynamics between tools and networks of agents, all while keeping the learning curve low. It supports features like memory management, streaming support, concurrent agent execution, LLM interface, and structured workflows for organizing and coordinating multiple agents.

mem0-chrome-extension

Mem0 Chrome Extension is a tool that enhances AI interactions by providing a universal memory layer across various AI assistants. It allows users to seamlessly share context, automatically capture relevant information, and retrieve memories intelligently. The extension offers features like one-click sync with existing ChatGPT memories and a memory dashboard for easy management. Users can install the extension in Google Chrome, sign in with Google, and start using it with supported AI assistants. Mem0 is free to use with no usage limits or ads, and it prioritizes privacy and data security by sending messages to the Mem0 API for memory extraction and retrieval.

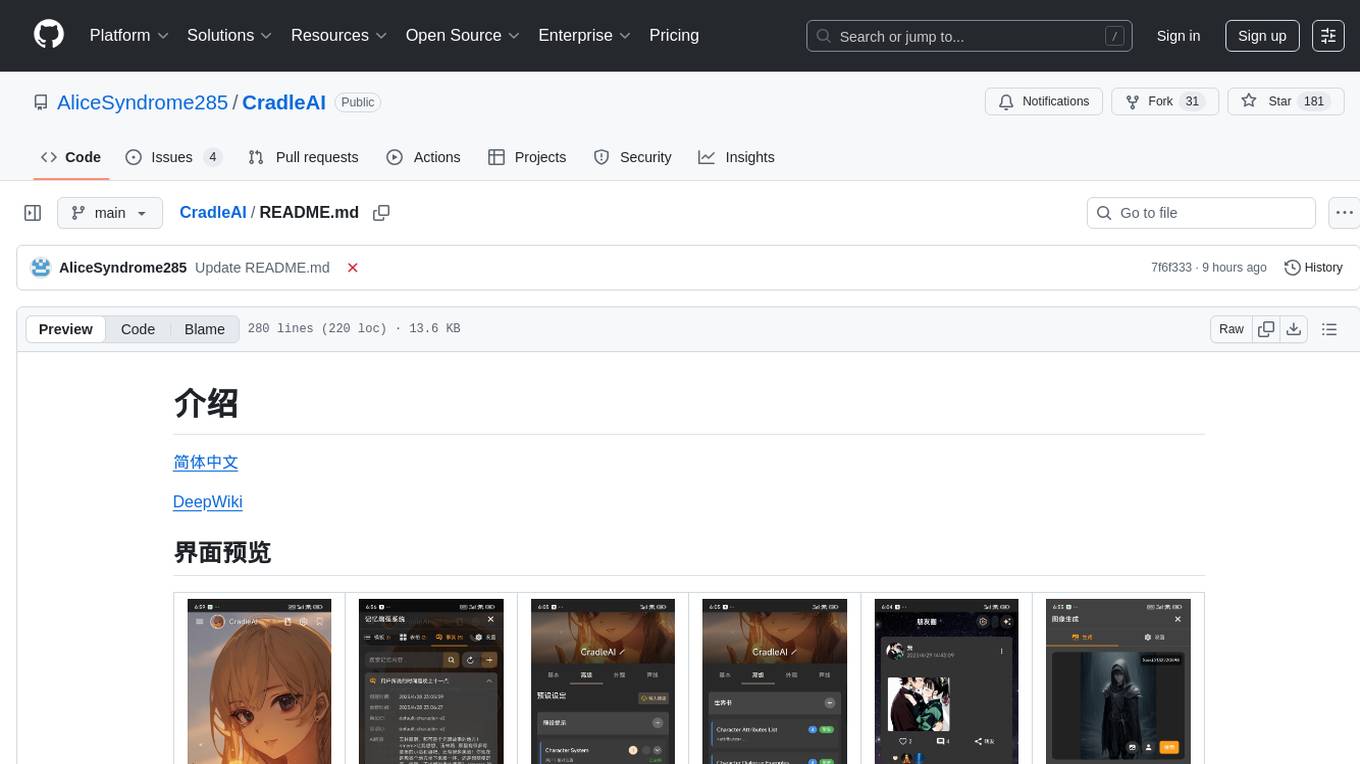

CradleAI

CradleAI is an open-source front-end tool designed for non-commercial purposes. It allows users to create and manage characters, engage in AI roleplay chats, publish dynamic content in a social circle, participate in group chats, and manage memories and knowledge. The tool supports features like author notes, voice interactions, multimedia messaging, visual novel mode, rich text formatting, image generation, TTS enhancement, and more. Users can deploy the tool using Github Action for APK builds or EAS Build for Android and iOS platforms. The project is licensed under CC BY-NC 4.0, prohibiting commercial use and emphasizing proper attribution.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers
- Track LLM usage on a per user and per organization basis
- Block or redact requests containing PIIs
- Improve LLM reliability with failovers, retries and caching
- Distribute API keys with rate limits and cost limits for internal development/production use cases
- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.