AstrBot

Agentic IM Chatbot infrastructure that integrates lots of IM platforms, LLMs, plugins and AI features. Your clawdbot alternative. ✨

Stars: 15773

AstrBot is an open-source one-stop Agentic chatbot platform and development framework. It supports large model conversations, multiple messaging platforms, Agent capabilities, plugin extensions, and WebUI for visual configuration and management of the chatbot.

README:

AstrBot 是一个开源的一站式 Agentic 个人和群聊助手,可在 QQ、Telegram、企业微信、飞书、钉钉、Slack、等数十款主流即时通讯软件上部署,此外还内置类似 OpenWebUI 的轻量化 ChatUI,为个人、开发者和团队打造可靠、可扩展的对话式智能基础设施。无论是个人 AI 伙伴、智能客服、自动化助手,还是企业知识库,AstrBot 都能在你的即时通讯软件平台的工作流中快速构建 AI 应用。

- 💯 免费 & 开源。

- ✨ AI 大模型对话,多模态,Agent,MCP,Skills,知识库,人格设定,自动压缩对话。

- 🤖 支持接入 Dify、阿里云百炼、Coze 等智能体平台。

- 🌐 多平台,支持 QQ、企业微信、飞书、钉钉、微信公众号、Telegram、Slack 以及更多。

- 📦 插件扩展,已有近 800 个插件可一键安装。

- 🛡️ Agent Sandbox 隔离化环境,安全地执行任何代码、调用 Shell、会话级资源复用。

- 💻 WebUI 支持。

- 🌈 Web ChatUI 支持,ChatUI 内置代理沙盒、网页搜索等。

- 🌐 国际化(i18n)支持。

| 💙 角色扮演 & 情感陪伴 | ✨ 主动式 Agent | 🚀 通用 Agentic 能力 | 🧩 900+ 社区插件 |

|---|---|---|---|

推荐使用 Docker / Docker Compose 方式部署 AstrBot。

请参阅官方文档 使用 Docker 部署 AstrBot 。

uvx astrbotAstrBot 与宝塔面板合作,已上架至宝塔面板。

请参阅官方文档 宝塔面板部署 。

AstrBot 已由 1Panel 官方上架至 1Panel 面板。

请参阅官方文档 1Panel 部署 。

AstrBot 已由雨云官方上架至云应用平台,可一键部署。

社区贡献的部署方式。

请参阅官方文档 使用 Windows 一键安装器部署 AstrBot 。

社区贡献的部署方式。

请参阅官方文档 CasaOS 部署 。

首先安装 uv:

pip install uv通过 Git Clone 安装 AstrBot:

git clone https://github.com/AstrBotDevs/AstrBot && cd AstrBot

uv run main.py或者请参阅官方文档 通过源码部署 AstrBot 。

桌面端(Electron 打包,pnpm 工作流)构建流程请参阅:desktop/README.md。

官方维护

- QQ (官方平台 & OneBot)

- Telegram

- 企微应用 & 企微智能机器人

- 微信客服 & 微信公众号

- 飞书

- 钉钉

- Slack

- Discord

- Satori

- Misskey

- Whatsapp (将支持)

- LINE (将支持)

社区维护

大模型服务

- OpenAI 及兼容服务

- Anthropic

- Google Gemini

- Moonshot AI

- 智谱 AI

- DeepSeek

- Ollama (本地部署)

- LM Studio (本地部署)

- 优云智算

- 302.AI

- 小马算力

- 硅基流动

- PPIO 派欧云

- ModelScope

- OneAPI

LLMOps 平台

- Dify

- 阿里云百炼应用

- Coze

语音转文本服务

- OpenAI Whisper

- SenseVoice

文本转语音服务

- OpenAI TTS

- Gemini TTS

- GPT-Sovits-Inference

- GPT-Sovits

- FishAudio

- Edge TTS

- 阿里云百炼 TTS

- Azure TTS

- Minimax TTS

- 火山引擎 TTS

欢迎任何 Issues/Pull Requests!只需要将你的更改提交到此项目 :)

你可以通过查看问题或帮助审核 PR(拉取请求)来贡献。任何问题或 PR 都欢迎参与,以促进社区贡献。当然,这些只是建议,你可以以任何方式进行贡献。对于新功能的添加,请先通过 Issue 讨论。

AstrBot 使用 ruff 进行代码格式化和检查。

git clone https://github.com/AstrBotDevs/AstrBot

pip install pre-commit

pre-commit install- 1 群:322154837

- 3 群:630166526

- 5 群:822130018

- 6 群:753075035

- 7 群:743746109

- 8 群:1030353265

- 开发者群:975206796

特别感谢所有 Contributors 和插件开发者对 AstrBot 的贡献 ❤️

此外,本项目的诞生离不开以下开源项目的帮助:

- NapNeko/NapCatQQ - 伟大的猫猫框架

[!TIP] 如果本项目对您的生活 / 工作产生了帮助,或者您关注本项目的未来发展,请给项目 Star,这是我们维护这个开源项目的动力 <3

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for AstrBot

Similar Open Source Tools

AstrBot

AstrBot is an open-source one-stop Agentic chatbot platform and development framework. It supports large model conversations, multiple messaging platforms, Agent capabilities, plugin extensions, and WebUI for visual configuration and management of the chatbot.

pmhub

PmHub is a smart project management system based on SpringCloud, SpringCloud Alibaba, and LLM. It aims to help students quickly grasp the architecture design and development process of microservices/distributed projects. PmHub provides a platform for students to experience the transformation from monolithic to microservices architecture, understand the pros and cons of both architectures, and prepare for job interviews. It offers popular technologies like SpringCloud-Gateway, Nacos, Sentinel, and provides high-quality code, continuous integration, product design documents, and an enterprise workflow system. PmHub is suitable for beginners and advanced learners who want to master core knowledge of microservices/distributed projects.

AstrBot

AstrBot is a powerful and versatile tool that leverages the capabilities of large language models (LLMs) like GPT-3, GPT-3.5, and GPT-4 to enhance communication and automate tasks. It seamlessly integrates with popular messaging platforms such as QQ, QQ Channel, and Telegram, enabling users to harness the power of AI within their daily conversations and workflows.

yudao-boot-mini

yudao-boot-mini is an open-source project focused on developing a rapid development platform for developers in China. It includes features like system functions, infrastructure, member center, data reports, workflow, mall system, WeChat official account, CRM, ERP, etc. The project is based on Spring Boot with Java backend and Vue for frontend. It offers various functionalities such as user management, role management, menu management, department management, workflow management, payment system, code generation, API documentation, database documentation, file service, WebSocket integration, message queue, Java monitoring, and more. The project is licensed under the MIT License, allowing both individuals and enterprises to use it freely without restrictions.

ruoyi-vue-pro

The ruoyi-vue-pro repository is an open-source project that provides a comprehensive development platform with various functionalities such as system features, infrastructure, member center, data reports, workflow, payment system, mall system, ERP system, CRM system, and AI big model. It is built using Java backend with Spring Boot framework and Vue frontend with different versions like Vue3 with element-plus, Vue3 with vben(ant-design-vue), and Vue2 with element-ui. The project aims to offer a fast development platform for developers and enterprises, supporting features like dynamic menu loading, button-level access control, SaaS multi-tenancy, code generator, real-time communication, integration with third-party services like WeChat, Alipay, and cloud services, and more.

yudao-cloud

Yudao-cloud is an open-source project designed to provide a fast development platform for developers in China. It includes various system functions, infrastructure, member center, data reports, workflow, mall system, WeChat public account, CRM, ERP, etc. The project is based on Java backend with Spring Boot and Spring Cloud Alibaba microservices architecture. It supports multiple databases, message queues, authentication systems, dynamic menu loading, SaaS multi-tenant system, code generator, real-time communication, integration with third-party services like WeChat, Alipay, and more. The project is well-documented and follows the Alibaba Java development guidelines, ensuring clean code and architecture.

MedicalGPT

MedicalGPT is a training medical GPT model with ChatGPT training pipeline, implement of Pretraining, Supervised Finetuning, RLHF(Reward Modeling and Reinforcement Learning) and DPO(Direct Preference Optimization).

llms-from-scratch-cn

This repository provides a detailed tutorial on how to build your own large language model (LLM) from scratch. It includes all the code necessary to create a GPT-like LLM, covering the encoding, pre-training, and fine-tuning processes. The tutorial is written in a clear and concise style, with plenty of examples and illustrations to help you understand the concepts involved. It is suitable for developers and researchers with some programming experience who are interested in learning more about LLMs and how to build them.

LangBot

LangBot is a highly stable, extensible, and multimodal instant messaging chatbot platform based on large language models. It supports various large models, adapts to group chats and private chats, and has capabilities for multi-turn conversations, tool invocation, and multimodal interactions. It is deeply integrated with Dify and currently supports QQ and QQ channels, with plans to support platforms like WeChat, WhatsApp, and Discord. The platform offers high stability, comprehensive functionality, native support for access control, rate limiting, sensitive word filtering mechanisms, and simple configuration with multiple deployment options. It also features plugin extension capabilities, an active community, and a new web management panel for managing LangBot instances through a browser.

gpt_server

The GPT Server project leverages the basic capabilities of FastChat to provide the capabilities of an openai server. It perfectly adapts more models, optimizes models with poor compatibility in FastChat, and supports loading vllm, LMDeploy, and hf in various ways. It also supports all sentence_transformers compatible semantic vector models, including Chat templates with function roles, Function Calling (Tools) capability, and multi-modal large models. The project aims to reduce the difficulty of model adaptation and project usage, making it easier to deploy the latest models with minimal code changes.

Chinese-LLaMA-Alpaca-2

Chinese-LLaMA-Alpaca-2 is a large Chinese language model developed by Meta AI. It is based on the Llama-2 model and has been further trained on a large dataset of Chinese text. Chinese-LLaMA-Alpaca-2 can be used for a variety of natural language processing tasks, including text generation, question answering, and machine translation. Here are some of the key features of Chinese-LLaMA-Alpaca-2: * It is the largest Chinese language model ever trained, with 13 billion parameters. * It is trained on a massive dataset of Chinese text, including books, news articles, and social media posts. * It can be used for a variety of natural language processing tasks, including text generation, question answering, and machine translation. * It is open-source and available for anyone to use. Chinese-LLaMA-Alpaca-2 is a powerful tool that can be used to improve the performance of a wide range of natural language processing tasks. It is a valuable resource for researchers and developers working in the field of artificial intelligence.

JiwuChat

JiwuChat is a lightweight multi-platform chat application built on Tauri2 and Nuxt3, with various real-time messaging features, AI group chat bots (such as 'iFlytek Spark', 'KimiAI' etc.), WebRTC audio-video calling, screen sharing, and AI shopping functions. It supports seamless cross-device communication, covering text, images, files, and voice messages, also supporting group chats and customizable settings. It provides light/dark mode for efficient social networking.

Chinese-LLaMA-Alpaca

This project open sources the **Chinese LLaMA model and the Alpaca large model fine-tuned with instructions**, to further promote the open research of large models in the Chinese NLP community. These models **extend the Chinese vocabulary based on the original LLaMA** and use Chinese data for secondary pre-training, further enhancing the basic Chinese semantic understanding ability. At the same time, the Chinese Alpaca model further uses Chinese instruction data for fine-tuning, significantly improving the model's understanding and execution of instructions.

sanic-web

Sanic-Web is a lightweight, end-to-end, and easily customizable large model application project built on technologies such as Dify, Ollama & Vllm, Sanic, and Text2SQL. It provides a one-stop solution for developing large model applications, supporting graphical data-driven Q&A using ECharts, handling table-based Q&A with CSV files, and integrating with third-party RAG systems for general knowledge Q&A. As a lightweight framework, Sanic-Web enables rapid iteration and extension to facilitate the quick implementation of large model projects.

ai-hub

AI Hub Project aims to continuously test and evaluate mainstream large language models, while accumulating and managing various effective model invocation prompts. It has integrated all mainstream large language models in China, including OpenAI GPT-4 Turbo, Baidu ERNIE-Bot-4, Tencent ChatPro, MiniMax abab5.5-chat, and more. The project plans to continuously track, integrate, and evaluate new models. Users can access the models through REST services or Java code integration. The project also provides a testing suite for translation, coding, and benchmark testing.

Chinese-LLaMA-Alpaca-3

Chinese-LLaMA-Alpaca-3 is a project based on Meta's latest release of the new generation open-source large model Llama-3. It is the third phase of the Chinese-LLaMA-Alpaca open-source large model series projects (Phase 1, Phase 2). This project open-sources the Chinese Llama-3 base model and the Chinese Llama-3-Instruct instruction fine-tuned large model. These models incrementally pre-train with a large amount of Chinese data on the basis of the original Llama-3 and further fine-tune using selected instruction data, enhancing Chinese basic semantics and instruction understanding capabilities. Compared to the second-generation related models, significant performance improvements have been achieved.

For similar tasks

AstrBot

AstrBot is an open-source one-stop Agentic chatbot platform and development framework. It supports large model conversations, multiple messaging platforms, Agent capabilities, plugin extensions, and WebUI for visual configuration and management of the chatbot.

dify-helm

Deploy langgenius/dify, an LLM based chat bot app on kubernetes with helm chart.

ai-chatbot

Next.js AI Chatbot is an open-source app template for building AI chatbots using Next.js, Vercel AI SDK, OpenAI, and Vercel KV. It includes features like Next.js App Router, React Server Components, Vercel AI SDK for streaming chat UI, support for various AI models, Tailwind CSS styling, Radix UI for headless components, chat history management, rate limiting, session storage with Vercel KV, and authentication with NextAuth.js. The template allows easy deployment to Vercel and customization of AI model providers.

ChatGPT-Telegram-Bot

The ChatGPT Telegram Bot is a powerful Telegram bot that utilizes various GPT models, including GPT3.5, GPT4, GPT4 Turbo, GPT4 Vision, DALL·E 3, Groq Mixtral-8x7b/LLaMA2-70b, and Claude2.1/Claude3 opus/sonnet API. It enables users to engage in efficient conversations and information searches on Telegram. The bot supports multiple AI models, online search with DuckDuckGo and Google, user-friendly interface, efficient message processing, document interaction, Markdown rendering, and convenient deployment options like Zeabur, Replit, and Docker. Users can set environment variables for configuration and deployment. The bot also provides Q&A functionality, supports model switching, and can be deployed in group chats with whitelisting. The project is open source under GPLv3 license.

LLM_AppDev-HandsOn

This repository showcases how to build a simple LLM-based chatbot for answering questions based on documents using retrieval augmented generation (RAG) technique. It also provides guidance on deploying the chatbot using Podman or on the OpenShift Container Platform. The workshop associated with this repository introduces participants to LLMs & RAG concepts and demonstrates how to customize the chatbot for specific purposes. The software stack relies on open-source tools like streamlit, LlamaIndex, and local open LLMs via Ollama, making it accessible for GPU-constrained environments.

langchat

LangChat is an enterprise AIGC project solution in the Java ecosystem. It integrates AIGC large model functionality on top of the RBAC permission system to help enterprises quickly customize AI knowledge bases and enterprise AI robots. It supports integration with various large models such as OpenAI, Gemini, Ollama, Azure, Zhifu, Alibaba Tongyi, Baidu Qianfan, etc. The project is developed solely by TyCoding and is continuously evolving. It features multi-modality, dynamic configuration, knowledge base support, advanced RAG capabilities, function call customization, multi-channel deployment, workflows visualization, AIGC client application, and more.

ai-sdk-chrome-ai

The ai-sdk-chrome-ai repository is an open-source chatbot built with Next.js, the Vercel AI SDK, and the Chrome AI provider. It features Next.js App Router, Vercel AI SDK for interacting with the Gemini Nano model, shadcn/ui, Tailwind CSS styling, and Radix UI for headless component primitives. Users can deploy their own version of the chatbot to Vercel with one click and run it locally by installing dependencies and running the dev server. The repository provides a template for creating and customizing a chatbot powered by AI technology.

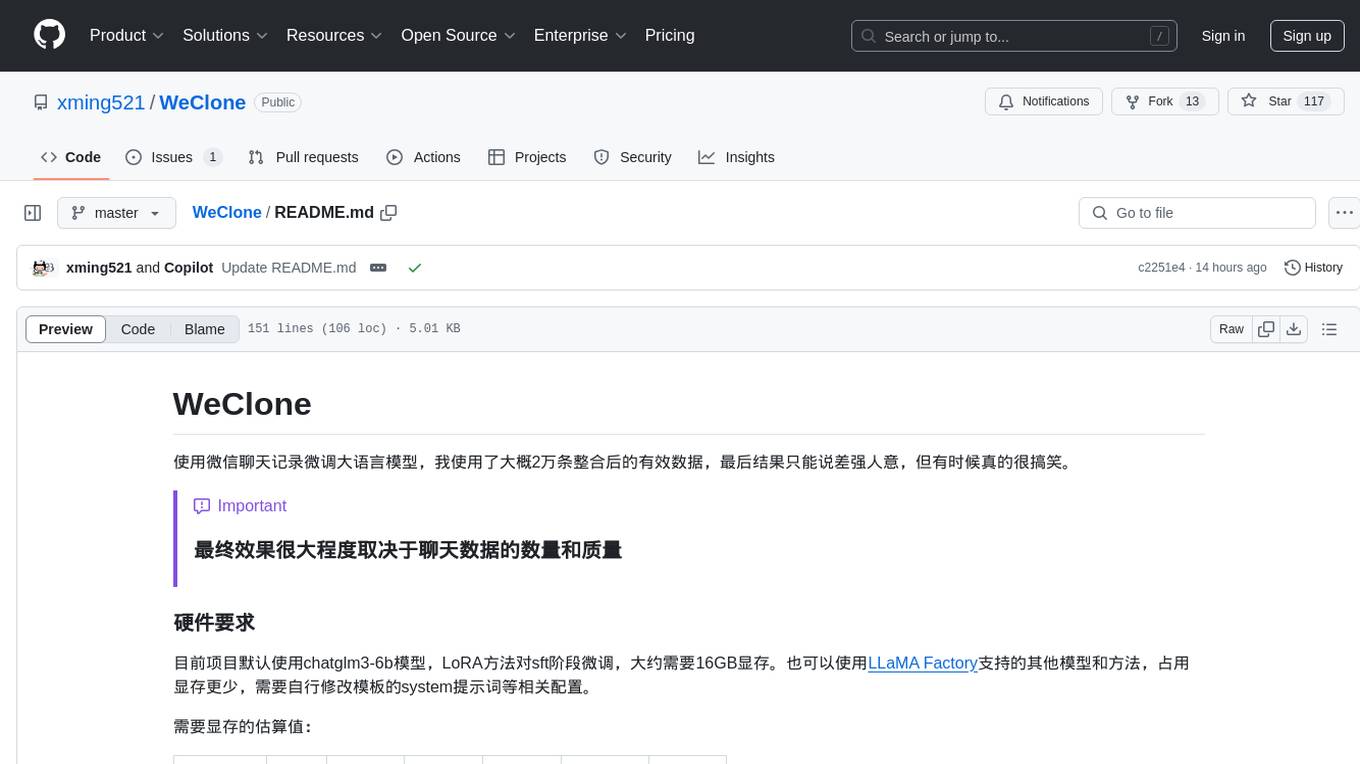

WeClone

WeClone is a tool that fine-tunes large language models using WeChat chat records. It utilizes approximately 20,000 integrated and effective data points, resulting in somewhat satisfactory outcomes that are occasionally humorous. The tool's effectiveness largely depends on the quantity and quality of the chat data provided. It requires a minimum of 16GB of GPU memory for training using the default chatglm3-6b model with LoRA method. Users can also opt for other models and methods supported by LLAMA Factory, which consume less memory. The tool has specific hardware and software requirements, including Python, Torch, Transformers, Datasets, Accelerate, and other optional packages like CUDA and Deepspeed. The tool facilitates environment setup, data preparation, data preprocessing, model downloading, parameter configuration, model fine-tuning, and inference through a browser demo or API service. Additionally, it offers the ability to deploy a WeChat chatbot, although users should be cautious due to the risk of account suspension by WeChat.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.