Best AI tools for< Reference Documentation >

20 - AI tool Sites

404 Error Assistant

The website displays a 404 error message indicating that the deployment cannot be found. It provides a code (DEPLOYMENT_NOT_FOUND) and an ID (sin1::54zqq-1725301437179-012f02729302). Users are directed to refer to the documentation for further information and troubleshooting.

404 Error Page

The website displays a 404 error message indicating that the deployment cannot be found. It provides a code (DEPLOYMENT_NOT_FOUND) and an ID (sin1::7crbp-1720289011850-d12041b250e9) for reference. Users are directed to check the documentation for further information and troubleshooting.

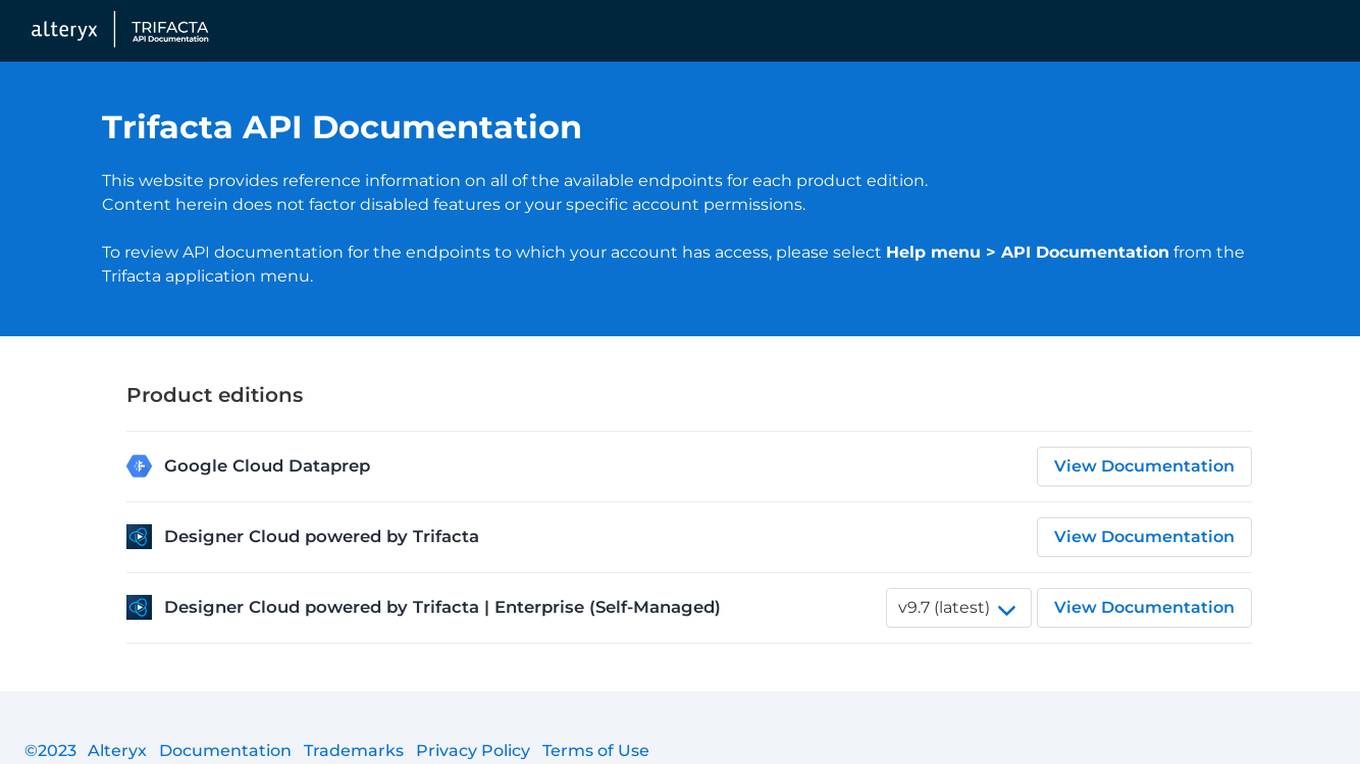

Trifacta API Documentation

Trifacta API Documentation provides reference information on all of the available endpoints for each product edition. This website does not factor disabled features or your specific account permissions. To review API documentation for the endpoints to which your account has access, please select Help menu > API Documentation from the Trifacta application menu.

404 Error Page

The website page displays a '404: NOT_FOUND' error message indicating that the deployment cannot be found. It provides a code 'DEPLOYMENT_NOT_FOUND' and an ID 'sin1::fd55k-1727629228031-7a0d0ffffbbb'. Users are directed to refer to the documentation for further information and troubleshooting.

404 Error Page

The website page displays a 404 error message indicating that the deployment cannot be found. It provides a code (DEPLOYMENT_NOT_FOUND) and an ID (sin1::4wq5g-1718736845999-777f28b346ca) for reference. Users are advised to consult the documentation for further information and troubleshooting.

404 Error Page

The website displays a '404: NOT_FOUND' error message indicating that the deployment cannot be found. It provides a code (DEPLOYMENT_NOT_FOUND) and an ID (sin1::22md2-1720772812453-4893618e160a) for reference. Users are directed to check the documentation for further information and troubleshooting.

404 Error Page

The website displays a 404 error message indicating that the deployment cannot be found. It provides a code (DEPLOYMENT_NOT_FOUND) and an ID (sin1::l44g5-1727283130745-f99c9f7f28f4) for reference. Users are directed to check the documentation for further information and troubleshooting.

404 Error Notifier

The website displays a 404 error message indicating that the deployment cannot be found. It provides a code (DEPLOYMENT_NOT_FOUND) and an ID (sin1::n894q-1726678978147-1c9e4ad82a70) for reference. Users are directed to check the documentation for further information and troubleshooting.

404 Error Page

The website displays a 404 error message indicating that the deployment cannot be found. It provides a code (DEPLOYMENT_NOT_FOUND) and an ID (sin1::qhrjt-1726765433586-bc18f7adaa0c) for reference. Users are directed to check the documentation for further information and troubleshooting.

ErrorResolver

The website displays a 404 error message indicating that the deployment cannot be found. It provides a code (DEPLOYMENT_NOT_FOUND) and an ID (sin1::vphzj-1726938947015-5b9ee22b5622) for reference. Users are directed to check the documentation for further information and troubleshooting.

404 Error Assistant

The website displays a 404 error message indicating that the deployment cannot be found. It provides a code (DEPLOYMENT_NOT_FOUND) and an ID (sin1::lpcgw-1726939089118-f134fdcd683c) for reference. Users are directed to consult the documentation for further information and troubleshooting.

404 Error Notifier

The website displays a 404 error message indicating that the deployment cannot be found. It provides a code and an ID for reference, along with a suggestion to check the documentation for more information and troubleshooting.

404 Error Page

The website displays a 404 error message indicating that the requested deployment cannot be found. It provides a code (DEPLOYMENT_NOT_FOUND) and an ID (sin1::ltfvp-1727369324219-e2d8330c3f8d) for reference. Users are directed to check the documentation for further information and troubleshooting.

404 Error Page

The website displays a 404 error message indicating that the deployment cannot be found. It provides a code (DEPLOYMENT_NOT_FOUND) and an ID (sin1::ggptb-1727542270172-dbd5ec692f5f) for reference. Users are directed to check the documentation for further information and troubleshooting.

404 Error Notifier

The website displays a 404 error message indicating that the deployment cannot be found. It provides a code (DEPLOYMENT_NOT_FOUND) and an ID (sin1::7rd4m-1725901316906-8c71a7a2cbd7) for reference. Users are directed to check the documentation for further information and troubleshooting.

pplx-api

The pplx-api is an AI tool designed to provide documentation and examples for blazingly fast LLM inference. It offers a reference for developers to integrate AI capabilities into their applications efficiently. The tool focuses on enhancing natural language processing tasks by leveraging advanced models and algorithms. Users can access detailed guides, API references, changelogs, and engage in discussions related to AI technologies.

Error 404 Not Found

The website displays a '404: NOT_FOUND' error message indicating that the deployment cannot be found. It provides a code 'DEPLOYMENT_NOT_FOUND' and an ID 'sin1::jzd5h-1725561052162-b87af43bcd4a'. Users are directed to refer to the documentation for further information and troubleshooting.

ASKTOWEB

ASKTOWEB is an AI-powered service that enhances websites by adding AI search buttons to SaaS landing pages, software documentation pages, and other websites. It allows visitors to easily search for information without needing specific keywords, making websites more user-friendly and useful. ASKTOWEB analyzes user questions to improve site content and discover customer needs. The service offers multi-model accuracy verification, direct reference jump links, multilingual chatbot support, effortless attachment with a single line of script, and a simple UI without annoying pop-ups. ASKTOWEB reduces the burden on customer support by acting as a buffer for inquiries about available information on the website.

Intel Gaudi AI Accelerator Developer

The Intel Gaudi AI accelerator developer website provides resources, guidance, tools, and support for building, migrating, and optimizing AI models. It offers software, model references, libraries, containers, and tools for training and deploying Generative AI and Large Language Models. The site focuses on the Intel Gaudi accelerators, including tutorials, documentation, and support for developers to enhance AI model performance.

Ref Hub

Ref Hub is an AI-powered online reference checking software based in Australia. The platform offers automated reference checks, fraud detection, lead generation tools, survey builder, and mobile app for managing reference checks on-the-go. With AI assessments, users can test candidates' skills at scale. Ref Hub provides flexible solutions for businesses of all sizes, from startups to large enterprises, streamlining the hiring process with reliable reference checks and smart assessments.

20 - Open Source AI Tools

baml

BAML is a config file format for declaring LLM functions that you can then use in TypeScript or Python. With BAML you can Classify or Extract any structured data using Anthropic, OpenAI or local models (using Ollama) ## Resources  [Discord Community](https://discord.gg/boundaryml)  [Follow us on Twitter](https://twitter.com/boundaryml) * Discord Office Hours - Come ask us anything! We hold office hours most days (9am - 12pm PST). * Documentation - Learn BAML * Documentation - BAML Syntax Reference * Documentation - Prompt engineering tips * Boundary Studio - Observability and more #### Starter projects * BAML + NextJS 14 * BAML + FastAPI + Streaming ## Motivation Calling LLMs in your code is frustrating: * your code uses types everywhere: classes, enums, and arrays * but LLMs speak English, not types BAML makes calling LLMs easy by taking a type-first approach that lives fully in your codebase: 1. Define what your LLM output type is in a .baml file, with rich syntax to describe any field (even enum values) 2. Declare your prompt in the .baml config using those types 3. Add additional LLM config like retries or redundancy 4. Transpile the .baml files to a callable Python or TS function with a type-safe interface. (VSCode extension does this for you automatically). We were inspired by similar patterns for type safety: protobuf and OpenAPI for RPCs, Prisma and SQLAlchemy for databases. BAML guarantees type safety for LLMs and comes with tools to give you a great developer experience:  Jump to BAML code or how Flexible Parsing works without additional LLM calls. | BAML Tooling | Capabilities | | ----------------------------------------------------------------------------------------- | ---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- | | BAML Compiler install | Transpiles BAML code to a native Python / Typescript library (you only need it for development, never for releases) Works on Mac, Windows, Linux  | | VSCode Extension install | Syntax highlighting for BAML files Real-time prompt preview Testing UI | | Boundary Studio open (not open source) | Type-safe observability Labeling |

hume-python-sdk

The Hume AI Python SDK allows users to integrate Hume APIs directly into their Python applications. Users can access complete documentation, quickstart guides, and example notebooks to get started. The SDK is designed to provide support for Hume's expressive communication platform built on scientific research. Users are encouraged to create an account at beta.hume.ai and stay updated on changes through Discord. The SDK may undergo breaking changes to improve tooling and ensure reliable releases in the future.

amadeus-java

Amadeus Java SDK provides a rich set of APIs for the travel industry, allowing developers to access various functionalities such as flight search, booking, airport information, and more. The SDK simplifies interaction with the Amadeus API by providing self-contained code examples and detailed documentation. Developers can easily make API calls, handle responses, and utilize features like pagination and logging. The SDK supports various endpoints for tasks like flight search, booking management, airport information retrieval, and travel analytics. It also offers functionalities for hotel search, booking, and sentiment analysis. Overall, the Amadeus Java SDK is a comprehensive tool for integrating Amadeus APIs into Java applications.

spring-ai

The Spring AI project provides a Spring-friendly API and abstractions for developing AI applications. It offers a portable client API for interacting with generative AI models, enabling developers to easily swap out implementations and access various models like OpenAI, Azure OpenAI, and HuggingFace. Spring AI also supports prompt engineering, providing classes and interfaces for creating and parsing prompts, as well as incorporating proprietary data into generative AI without retraining the model. This is achieved through Retrieval Augmented Generation (RAG), which involves extracting, transforming, and loading data into a vector database for use by AI models. Spring AI's VectorStore abstraction allows for seamless transitions between different vector database implementations.

pinecone-ts-client

The official Node.js client for Pinecone, written in TypeScript. This client library provides a high-level interface for interacting with the Pinecone vector database service. With this client, you can create and manage indexes, upsert and query vector data, and perform other operations related to vector search and retrieval. The client is designed to be easy to use and provides a consistent and idiomatic experience for Node.js developers. It supports all the features and functionality of the Pinecone API, making it a comprehensive solution for building vector-powered applications in Node.js.

llm-apps-java-spring-ai

The 'LLM Applications with Java and Spring AI' repository provides samples demonstrating how to build Java applications powered by Generative AI and Large Language Models (LLMs) using Spring AI. It includes projects for question answering, chat completion models, prompts, templates, multimodality, output converters, embedding models, document ETL pipeline, function calling, image models, and audio models. The repository also lists prerequisites such as Java 21, Docker/Podman, Mistral AI API Key, OpenAI API Key, and Ollama. Users can explore various use cases and projects to leverage LLMs for text generation, vector transformation, document processing, and more.

amadeus-node

Amadeus Node SDK provides a rich set of APIs for the travel industry. It allows developers to interact with various endpoints related to flights, hotels, activities, and more. The SDK simplifies making API calls, handling promises, pagination, logging, and debugging. It supports a wide range of functionalities such as flight search, booking, seat maps, flight status, points of interest, hotel search, sentiment analysis, trip predictions, and more. Developers can easily integrate the SDK into their Node.js applications to access Amadeus APIs and build travel-related applications.

raft

RAFT (Reusable Accelerated Functions and Tools) is a C++ header-only template library with an optional shared library that contains fundamental widely-used algorithms and primitives for machine learning and information retrieval. The algorithms are CUDA-accelerated and form building blocks for more easily writing high performance applications.

cuvs

cuVS is a library that contains state-of-the-art implementations of several algorithms for running approximate nearest neighbors and clustering on the GPU. It can be used directly or through the various databases and other libraries that have integrated it. The primary goal of cuVS is to simplify the use of GPUs for vector similarity search and clustering.

garak

Garak is a free tool that checks if a Large Language Model (LLM) can be made to fail in a way that is undesirable. It probes for hallucination, data leakage, prompt injection, misinformation, toxicity generation, jailbreaks, and many other weaknesses. Garak's a free tool. We love developing it and are always interested in adding functionality to support applications.

Awesome-Embedded

Awesome-Embedded is a curated list of resources for embedded systems enthusiasts. It covers a wide range of topics including MCU programming, RTOS, Linux kernel development, assembly programming, machine learning & AI on MCU, utilities, tips & tricks, and more. The repository provides valuable information, tutorials, and tools for individuals interested in embedded systems development.

ai-cli-lib

The ai-cli-lib is a library designed to enhance interactive command-line editing programs by integrating with GPT large language model servers. It allows users to obtain AI help from servers like Anthropic's or OpenAI's, or a llama.cpp server. The library acts as a command line copilot, providing natural language prompts and responses to enhance user experience and productivity. It supports various platforms such as Debian GNU/Linux, macOS, and Cygwin, and requires specific packages for installation and operation. Users can configure the library to activate during shell startup and interact with command-line programs like bash, mysql, psql, gdb, sqlite3, and bc. Additionally, the library provides options for configuring API keys, setting up llama.cpp servers, and ensuring data privacy by managing context settings.

ai

The Vercel AI SDK is a library for building AI-powered streaming text and chat UIs. It provides React, Svelte, Vue, and Solid helpers for streaming text responses and building chat and completion UIs. The SDK also includes a React Server Components API for streaming Generative UI and first-class support for various AI providers such as OpenAI, Anthropic, Mistral, Perplexity, AWS Bedrock, Azure, Google Gemini, Hugging Face, Fireworks, Cohere, LangChain, Replicate, Ollama, and more. Additionally, it offers Node.js, Serverless, and Edge Runtime support, as well as lifecycle callbacks for saving completed streaming responses to a database in the same request.

nucliadb

NucliaDB is a robust database that allows storing and searching on unstructured data. It is an out of the box hybrid search database, utilizing vector, full text and graph indexes. NucliaDB is written in Rust and Python. We designed it to index large datasets and provide multi-teanant support. When utilizing NucliaDB with Nuclia cloud, you are able to the power of an NLP database without the hassle of data extraction, enrichment and inference. We do all the hard work for you.

langserve

LangServe helps developers deploy `LangChain` runnables and chains as a REST API. This library is integrated with FastAPI and uses pydantic for data validation. In addition, it provides a client that can be used to call into runnables deployed on a server. A JavaScript client is available in LangChain.js.

gemini-ai

Gemini AI is a Ruby Gem designed to provide low-level access to Google's generative AI services through Vertex AI, Generative Language API, or AI Studio. It allows users to interact with Gemini to build abstractions on top of it. The Gem provides functionalities for tasks such as generating content, embeddings, predictions, and more. It supports streaming capabilities, server-sent events, safety settings, system instructions, JSON format responses, and tools (functions) calling. The Gem also includes error handling, development setup, publishing to RubyGems, updating the README, and references to resources for further learning.

experts

Experts.js is a tool that simplifies the creation and deployment of OpenAI's Assistants, allowing users to link them together as Tools to create a Panel of Experts system with expanded memory and attention to detail. It leverages the new Assistants API from OpenAI, which offers advanced features such as referencing attached files & images as knowledge sources, supporting instructions up to 256,000 characters, integrating with 128 tools, and utilizing the Vector Store API for efficient file search. Experts.js introduces Assistants as Tools, enabling the creation of Multi AI Agent Systems where each Tool is an LLM-backed Assistant that can take on specialized roles or fulfill complex tasks.

ollama

Ollama is a lightweight, extensible framework for building and running language models on the local machine. It provides a simple API for creating, running, and managing models, as well as a library of pre-built models that can be easily used in a variety of applications. Ollama is designed to be easy to use and accessible to developers of all levels. It is open source and available for free on GitHub.

vim-ai

vim-ai is a plugin that adds Artificial Intelligence (AI) capabilities to Vim and Neovim. It allows users to generate code, edit text, and have interactive conversations with GPT models powered by OpenAI's API. The plugin uses OpenAI's API to generate responses, requiring users to set up an account and obtain an API key. It supports various commands for text generation, editing, and chat interactions, providing a seamless integration of AI features into the Vim text editor environment.

20 - OpenAI Gpts

Octorate Code Companion

I help developers understand and use APIs, referencing a YAML model.

Navigator for OpenAI

Your documentation guide for OpenAI, loaded with the latest guides and API references.

MODX GPT

MODX GPT is trained on the MODX.com CMS documentation and helps guide you with MODX development tasks. Learn the basics, use me to look up function references, discover MODX Extras, or even help with debugging.

Visionary Scholar

Assistant to help researchers with thesis research and documentation process.

Virtual Hair Stylist

I will create personalized reference photos to show your hair stylist. Upload a photo to try.

PsychoHelper

Using various psychological literature as a reference, we provide advice on problems related to work and daily life.

Detailed Speech Drafting Wizard

Crafts speeches from PowerPoint slides and reference materials, adding depth and context.

Strum Tutor

Guitar teacher focused on giving tabular reference material for song writing or music theory education.

Longevity Lab Test Analyzer

Analyze your results based on reference ranges from the most influential longevity doctors and organizations.

Harvard Quick Citations

This tool is only useful if you have added new sources to your reference list and need to ensure that your in-text citations reflect these updates. Paste your essay below to get started.

cinematic character costume concept design master

Upload an avatar and indicate the costume concept to design the movie character costume concept and set different angles for reference.