Best AI tools for< Evaluate Machine Learning Models >

20 - AI tool Sites

Arize AI

Arize AI is an AI Observability & LLM Evaluation Platform that helps you monitor, troubleshoot, and evaluate your machine learning models. With Arize, you can catch model issues, troubleshoot root causes, and continuously improve performance. Arize is used by top AI companies to surface, resolve, and improve their models.

HappyML

HappyML is an AI tool designed to assist users in machine learning tasks. It provides a user-friendly interface for running machine learning algorithms without the need for complex coding. With HappyML, users can easily build, train, and deploy machine learning models for various applications. The tool offers a range of features such as data preprocessing, model evaluation, hyperparameter tuning, and model deployment. HappyML simplifies the machine learning process, making it accessible to users with varying levels of expertise.

Datumbox

Datumbox is a machine learning platform that offers a powerful open-source Machine Learning Framework written in Java. It provides a large collection of algorithms, models, statistical tests, and tools to power up intelligent applications. The platform enables developers to build smart software and services quickly using its REST Machine Learning API. Datumbox API offers off-the-shelf Classifiers and Natural Language Processing services for applications like Sentiment Analysis, Topic Classification, Language Detection, and more. It simplifies the process of designing and training Machine Learning models, making it easy for developers to create innovative applications.

Stanford HAI

Stanford HAI is a research institute at Stanford University dedicated to advancing AI research, education, and policy to improve the human condition. The institute brings together researchers from a variety of disciplines to work on a wide range of AI-related projects, including developing new AI algorithms, studying the ethical and societal implications of AI, and creating educational programs to train the next generation of AI leaders. Stanford HAI is committed to developing human-centered AI technologies and applications that benefit all of humanity.

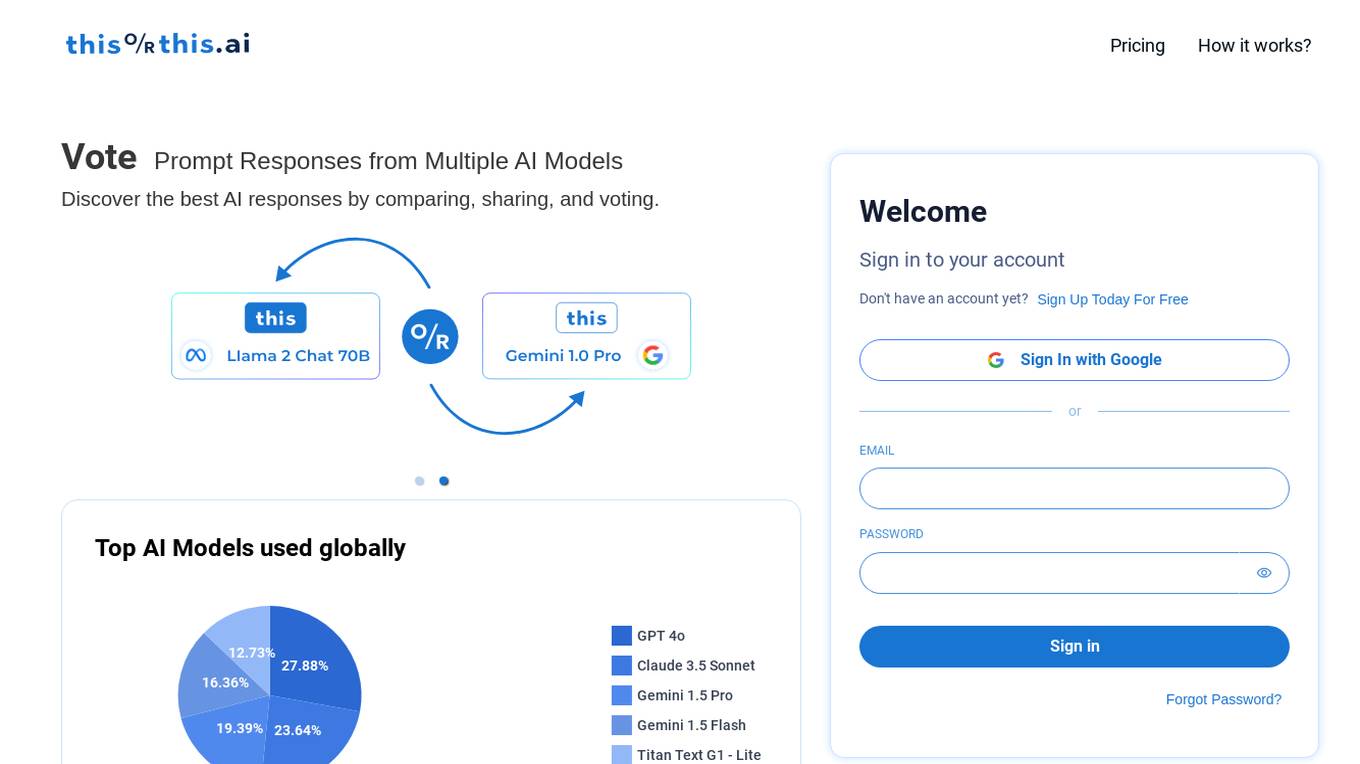

thisorthis.ai

thisorthis.ai is an AI tool that allows users to compare generative AI models and AI model responses. It helps users analyze and evaluate different AI models to make informed decisions. The tool requires JavaScript to be enabled for optimal functionality.

BenchLLM

BenchLLM is an AI tool designed for AI engineers to evaluate LLM-powered apps by running and evaluating models with a powerful CLI. It allows users to build test suites, choose evaluation strategies, and generate quality reports. The tool supports OpenAI, Langchain, and other APIs out of the box, offering automation, visualization of reports, and monitoring of model performance.

Langtrace AI

Langtrace AI is an open-source observability tool powered by Scale3 Labs that helps monitor, evaluate, and improve LLM (Large Language Model) applications. It collects and analyzes traces and metrics to provide insights into the ML pipeline, ensuring security through SOC 2 Type II certification. Langtrace supports popular LLMs, frameworks, and vector databases, offering end-to-end observability and the ability to build and deploy AI applications with confidence.

Evidently AI

Evidently AI is an open-source machine learning (ML) monitoring and observability platform that helps data scientists and ML engineers evaluate, test, and monitor ML models from validation to production. It provides a centralized hub for ML in production, including data quality monitoring, data drift monitoring, ML model performance monitoring, and NLP and LLM monitoring. Evidently AI's features include customizable reports, structured checks for data and models, and a Python library for ML monitoring. It is designed to be easy to use, with a simple setup process and a user-friendly interface. Evidently AI is used by over 2,500 data scientists and ML engineers worldwide, and it has been featured in publications such as Forbes, VentureBeat, and TechCrunch.

Dr. Randal S. Olson

Dr. Randal S. Olson is an AI Researcher & Builder known for turning ambitious AI ideas into business wins by bridging the gap between technical promise and real-world impact. His work encompasses data science, AI engineering, and executive strategy. He has worked on various projects in AI, data science, and technology leadership, including the development of the Truesight Expert-grounded AI evaluation platform and the AutoML Tool TPOT. Dr. Olson's focus is on building privacy-first AI solutions that prioritize ethical AI development and user-centric design.

Welo Data

Welo Data is an AI tool that specializes in AI benchmarking, model assessment, and training high-quality datasets for AI models. The platform offers services such as supervised fine tuning, reinforcement learning with human feedback, data generation, expert evaluations, and data quality framework to support the development of world-class AI models. With over 27 years of experience, Welo Data combines language expertise and AI data to deliver exceptional training and performance evaluation solutions.

Entry Point AI

Entry Point AI is a modern AI optimization platform for fine-tuning proprietary and open-source language models. It provides a user-friendly interface to manage prompts, fine-tunes, and evaluations in one place. The platform enables users to optimize models from leading providers, train across providers, work collaboratively, write templates, import/export data, share models, and avoid common pitfalls associated with fine-tuning. Entry Point AI simplifies the fine-tuning process, making it accessible to users without the need for extensive data, infrastructure, or insider knowledge.

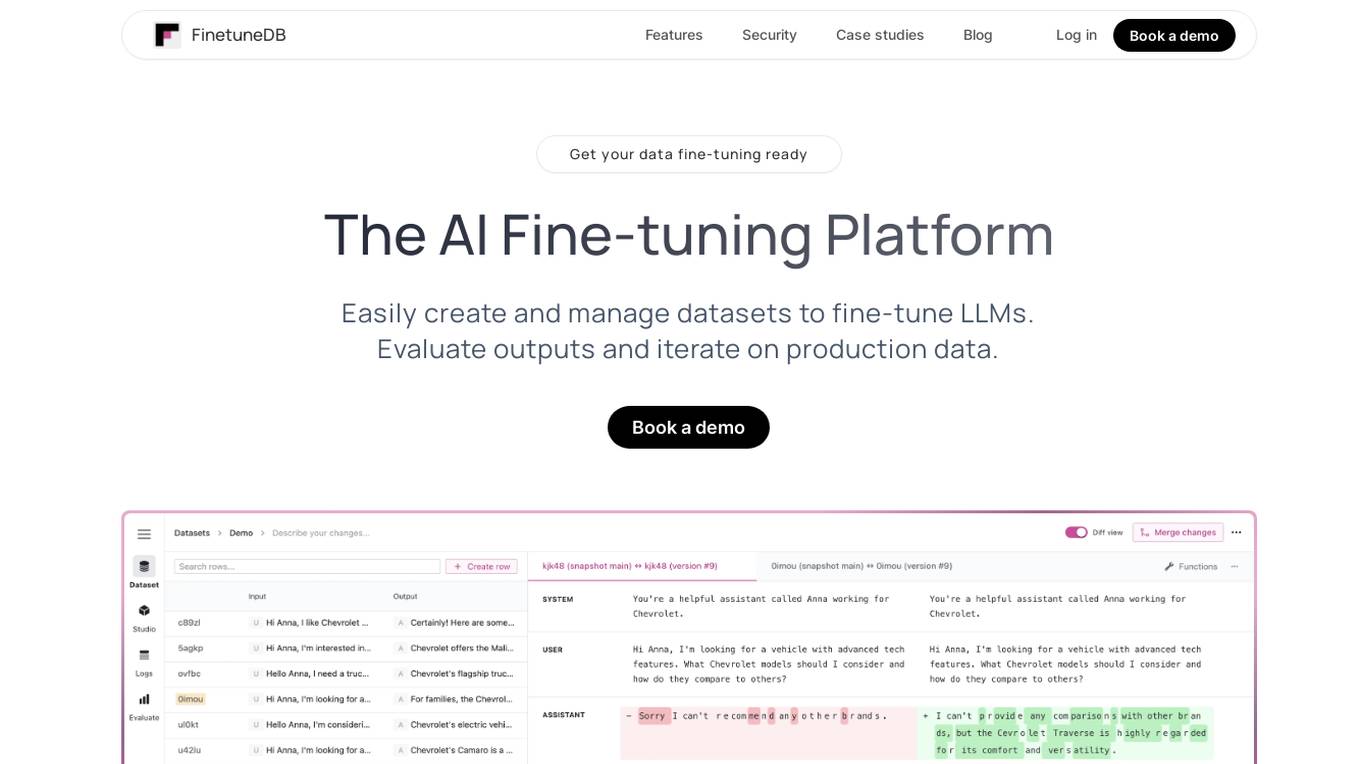

FinetuneDB

FinetuneDB is an AI fine-tuning platform that allows users to easily create and manage datasets to fine-tune LLMs, evaluate outputs, and iterate on production data. It integrates with open-source and proprietary foundation models, and provides a collaborative editor for building datasets. FinetuneDB also offers a variety of features for evaluating model performance, including human and AI feedback, automated evaluations, and model metrics tracking.

Robust Intelligence

Robust Intelligence is an end-to-end solution for securing AI applications. It automates the evaluation of AI models, data, and files for security and safety vulnerabilities and provides guardrails for AI applications in production against integrity, privacy, abuse, and availability violations. Robust Intelligence helps enterprises remove AI security blockers, save time and resources, meet AI safety and security standards, align AI security across stakeholders, and protect against evolving threats.

Flow AI

Flow AI is an advanced AI tool designed for evaluating and improving Large Language Model (LLM) applications. It offers a unique system for creating custom evaluators, deploying them with an API, and developing specialized LMs tailored to specific use cases. The tool aims to revolutionize AI evaluation and model development by providing transparent, cost-effective, and controllable solutions for AI teams across various domains.

Maxim

Maxim is an end-to-end AI evaluation and observability platform that empowers modern AI teams to ship products with quality, reliability, and speed. It offers a comprehensive suite of tools for experimentation, evaluation, observability, and data management. Maxim aims to bring the best practices of traditional software development into non-deterministic AI workflows, enabling rapid iteration and deployment of AI models. The platform caters to the needs of AI developers, data scientists, and machine learning engineers by providing a unified framework for evaluation, visual flows for workflow testing, and observability features for monitoring and optimizing AI systems in real-time.

Athina AI Hub

Athina AI Hub is an ultimate resource for AI development teams, offering a wide range of AI development blogs, research papers, and original content. It provides valuable insights into cutting-edge technologies such as Large Language Models (LLMs), Retrieval-Augmented Generation (RAG), and AI agents. Athina AI Hub aims to empower AI engineers, researchers, data scientists, and product developers by offering comprehensive resources and fostering innovation in the field of Artificial Intelligence.

Future AGI

Future AGI is a revolutionary AI data management platform that aims to achieve 99% accuracy in AI applications across software and hardware. It provides a comprehensive evaluation and optimization platform for enterprises to enhance the performance of their AI models. Future AGI offers features such as creating trustworthy, accurate, and responsible AI, 10x faster processing, generating and managing diverse synthetic datasets, testing and analyzing agentic workflow configurations, assessing agent performance, enhancing LLM application performance, monitoring and protecting applications in production, and evaluating AI across different modalities.

LlamaIndex

LlamaIndex is a leading data framework designed for building LLM (Large Language Model) applications. It allows enterprises to turn their data into production-ready applications by providing functionalities such as loading data from various sources, indexing data, orchestrating workflows, and evaluating application performance. The platform offers extensive documentation, community-contributed resources, and integration options to support developers in creating innovative LLM applications.

Labelbox

Labelbox is a data factory platform that empowers AI teams to manage data labeling, train models, and create better data with internet scale RLHF platform. It offers an all-in-one solution comprising tooling and services powered by a global community of domain experts. Labelbox operates a global data labeling infrastructure and operations for AI workloads, providing expert human network for data labeling in various domains. The platform also includes AI-assisted alignment for maximum efficiency, data curation, model training, and labeling services. Customers achieve breakthroughs with high-quality data through Labelbox.

integrate.ai

integrate.ai is a platform that enables data and analytics providers to collaborate easily with enterprise data science teams without moving data. Powered by federated learning technology, the platform allows for efficient proof of concepts, data experimentation, infrastructure agnostic evaluations, collaborative data evaluations, and data governance controls. It supports various data science jobs such as match rate analysis, exploratory data analysis, correlation analysis, model performance analysis, feature importance & data influence, and model validation. The platform integrates with popular data science tools like Azure, Jupyter, Databricks, AWS, GCP, Snowflake, Pandas, PyTorch, MLflow, and scikit-learn.

1 - Open Source AI Tools

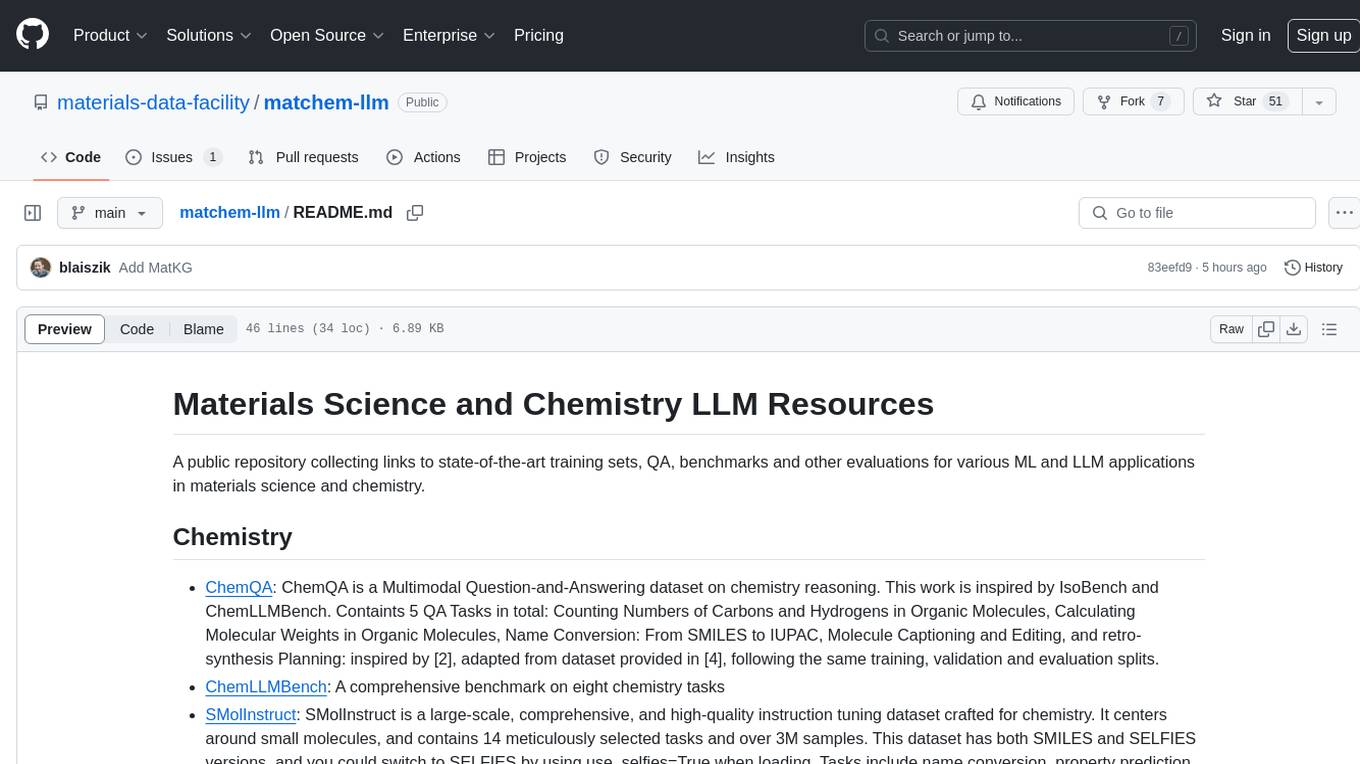

matchem-llm

A public repository collecting links to state-of-the-art training sets, QA, benchmarks and other evaluations for various ML and LLM applications in materials science and chemistry. It includes datasets related to chemistry, materials, multimodal data, and knowledge graphs in the field. The repository aims to provide resources for training and evaluating machine learning models in the materials science and chemistry domains.

20 - OpenAI Gpts

Pytorch Trainer GPT

Your purpose is to create the pytorch code to train language models using pytorch

GPT Architect

Expert in designing GPT models and translating user needs into technical specs.

GPT Designer

A creative aide for designing new GPT models, skilled in ideation and prompting.

HuggingFace Helper

A witty yet succinct guide for HuggingFace, offering technical assistance on using the platform - based on their Learning Hub

Instructor GCP ML

Formador para la certificación de ML Engineer en GCP, con respuestas y explicaciones detalladas.

ecosystem.Ai Use Case Designer v2

The use case designer is configured with the latest Data Science and Behavioral Social Science insights to guide you through the process of defining AI and Machine Learning use cases for the ecosystem.Ai platform.

Learning Hero

Your personal A.I. learning hero when creating interactive e-learning content

The Learning Architect

An all-in-one, consultative L&D expert AI helping you build impactful, customized learning solutions for your organization.

Training Material Design Advisor

Designs effective training materials to enhance organizational learning and performance.

Course Architect

Assists in course design, offering expandable responses based on user input.

Academic Paper Evaluator

Enthusiastic about truth in academic papers, critical and analytical.