Best AI tools for data modification

20 - AI tool Sites

Automaited

Automaited is an AI-powered automation software that helps businesses automate repetitive tasks and processes. It uses a combination of AI, RPA, and ML technologies to create automations that are tailored to the specific needs of each business. Automaited is easy to use and requires no coding knowledge. It can be used to automate a wide range of tasks, including data entry, data extraction, system navigation, and data modification. Automaited can help businesses save time and money, and improve efficiency and accuracy.

heißdocs

heißdocs is a tool that adds a superfast search layer on top of scanned or digital PDFs. It also has AI-powered Question-Answering capabilities. With heißdocs, you can easily find information in your PDFs without having to sift through thousands of pages. You can also own your data and host it yourself. heißdocs is open source, so you can view the code, make modifications, or request modifications as you like.

Deepfake Detection Challenge Dataset

The Deepfake Detection Challenge Dataset is a project initiated by Facebook AI to accelerate the development of new ways to detect deepfake videos. The dataset consists of over 100,000 videos and was created in collaboration with industry leaders and academic experts. It includes two versions: a preview dataset with 5k videos and a full dataset with 124k videos, each featuring facial modification algorithms. The dataset was used in a Kaggle competition to create better models for detecting manipulated media. The top-performing models achieved high accuracy on the public dataset but faced challenges when tested against the black box dataset, highlighting the importance of generalization in deepfake detection. The project aims to encourage the research community to continue advancing in detecting harmful manipulated media.

Google Gemma

Google Gemma is a family of lightweight, state-of-the-art open large language models (LLMs) developed by Google, built from the same research used to create Google's Gemini models. Gemma models come in two sizes, 2B and 7B parameters, each available in base (pre-trained) and instruction-tuned variants. They are designed to be cross-device compatible and optimized for Google Cloud and NVIDIA GPUs, and are accessible through Kaggle, Hugging Face, and Google Cloud with Vertex AI or GKE. Gemma models can be used for applications such as text generation, summarization, and RAG, in both commercial and research settings.

Duplikate

Duplikate is a next-generation community management tool powered by AI that helps users save time and effort in managing their social media accounts. It retrieves relevant social media posts, categorizes them, and duplicates them with modifications to make them more suitable for the audience. With features like post scraping, filtering, and copying, Duplikate streamlines the process of creating engaging content. Users get automated content creation, customizable templates, and an intuitive interface to enhance their online presence.

Towards Data Science

Towards Data Science is a Medium publication dedicated to sharing concepts, ideas, and code in the field of data science. It provides a platform for data scientists, researchers, and practitioners to connect, learn, and contribute to the advancement of the field.

What's The Big Data

What's The Big Data is an AI tool directory that helps users unleash their potential by providing a comprehensive source for AI tools, data, and ChatGPT. The platform is updated daily and caters to every need, offering a wide range of AI assistants across various categories. Users can easily find their perfect AI assistant with just a click, making it a valuable resource for those seeking AI solutions.

Domino Data Lab

Domino Data Lab is an enterprise AI platform that enables data scientists and IT leaders to build, deploy, and manage AI models at scale. It provides a unified platform for accessing data, tools, compute, models, and projects across any environment. Domino also fosters collaboration, establishes best practices, and tracks models in production to accelerate and scale AI while ensuring governance and reducing costs.

Research Center Trustworthy Data Science and Security

The Research Center Trustworthy Data Science and Security is a hub for interdisciplinary research focusing on building trust in artificial intelligence, machine learning, and cyber security. The center aims to develop trustworthy intelligent systems through research in trustworthy data analytics, explainable machine learning, and privacy-aware algorithms. By addressing the intersection of technological progress and social acceptance, the center seeks to enable private citizens to understand and trust technology in safety-critical applications.

Dot Group Data Advisory

Dot Group is an AI-powered data advisory and solutions platform that specializes in effective data management. They offer services to help businesses maximize the potential of their data estate, turning complex challenges into profitable opportunities using AI technologies. With a focus on data strategy, data engineering, and data transport, Dot Group provides innovative solutions to drive better profitability for their clients.

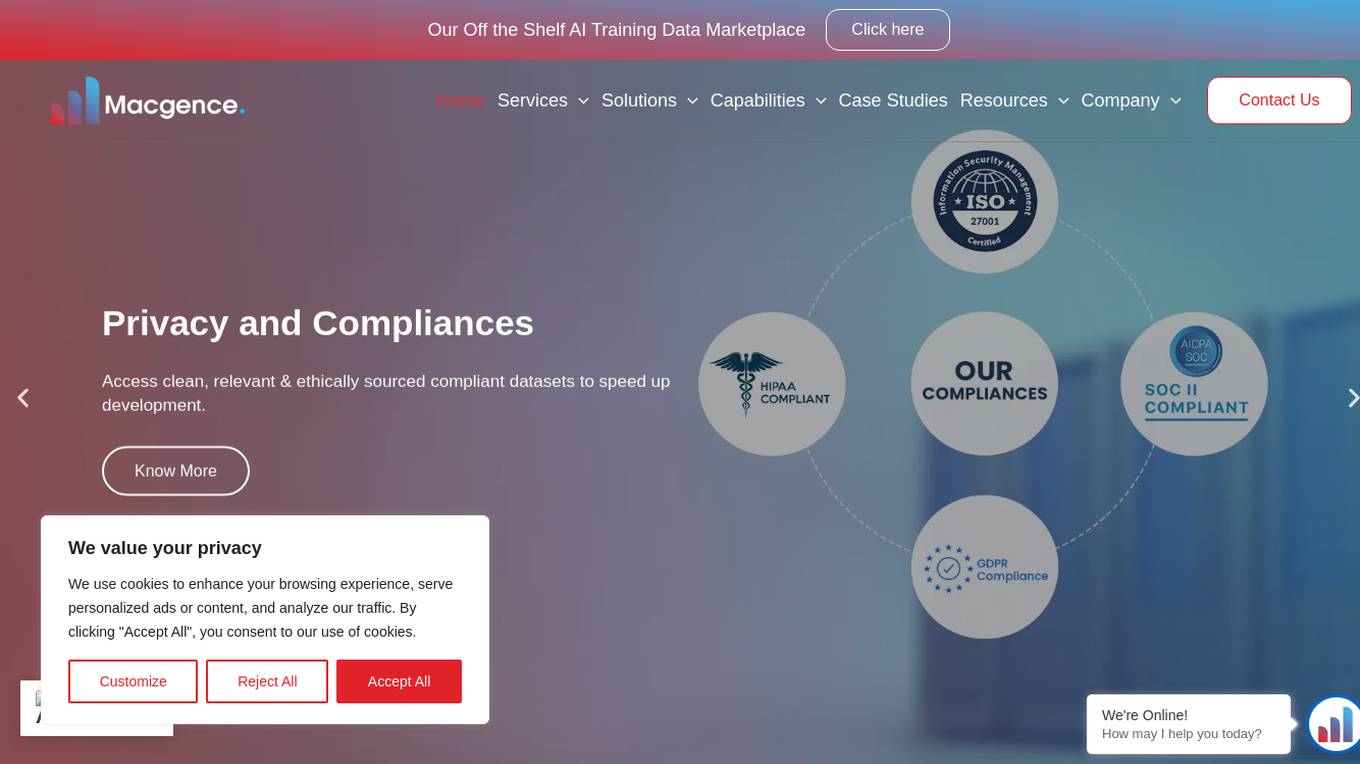

Macgence AI Training Data Services

Macgence is an AI training data services platform that offers high-quality off-the-shelf structured training data for organizations to build effective AI systems at scale. They provide services such as custom data sourcing, data annotation, data validation, content moderation, and localization. Macgence combines global linguistic, cultural, and technological expertise to create high-quality datasets for AI models, enabling faster time-to-market across the entire model value chain. With more than 5 years of experience, they support and scale AI initiatives of leading global innovators by designing custom data collection programs. Macgence specializes in handling AI training data for text, speech, image, and video data, offering cognitive annotation services to unlock the potential of unstructured textual data.

Compact Data Science

Compact Data Science is a data science platform that provides a comprehensive set of tools and resources for data scientists and analysts. The platform includes a variety of features such as data preparation, data visualization, machine learning, and predictive analytics. Compact Data Science is designed to be easy to use and accessible to users of all skill levels.

Data Hivemind

Data Hivemind is a company that provides automation services to businesses. They help businesses automate tasks such as lead generation, project management, recruiting, and CRM setup. Data Hivemind uses a variety of tools to automate tasks, including Zapier, Make.Com, Alteryx, N8N, Python, and others. They also offer a variety of services, including onboarding, weekly consultations, and documentation with every project.

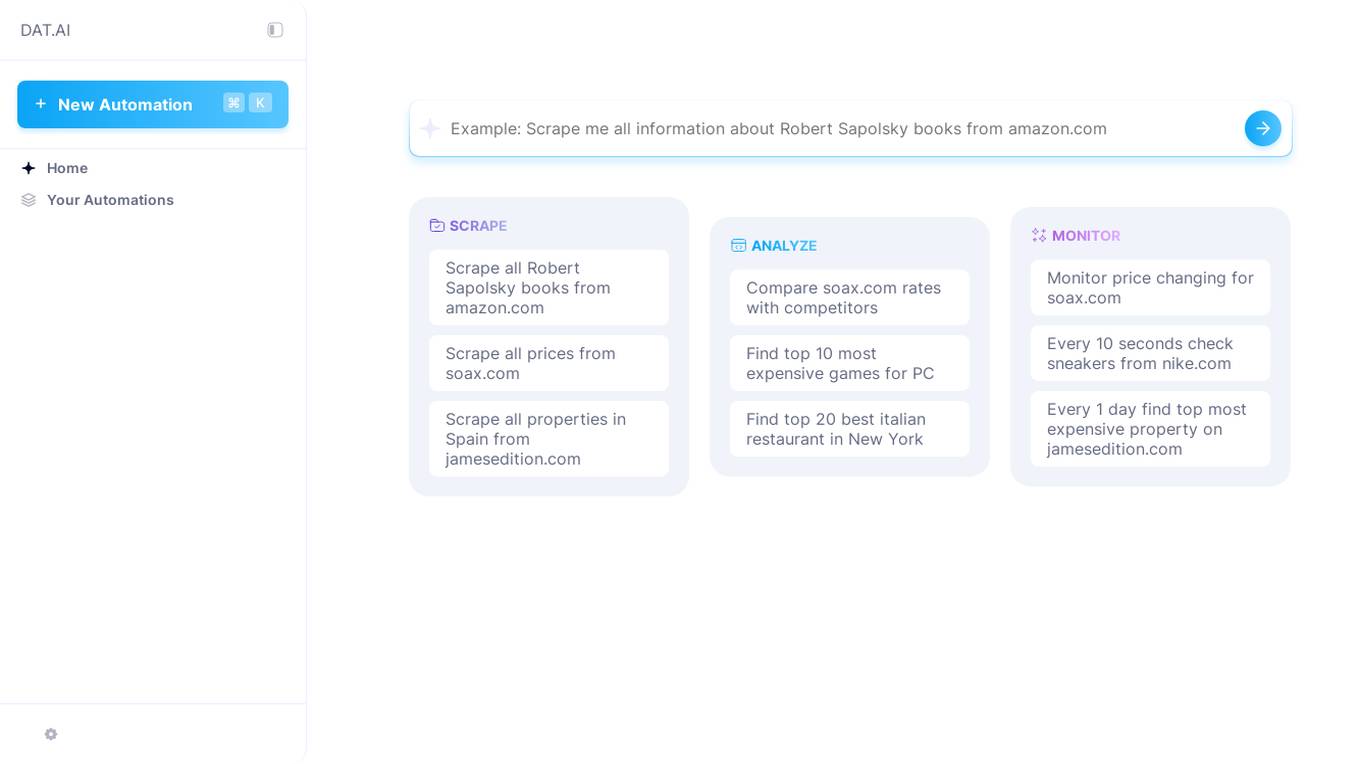

SOAX AI data collection

SOAX AI data collection is a powerful tool that utilizes artificial intelligence to gather and analyze data from various online sources. It automates the process of data collection, saving time and effort for users. The tool is designed to extract relevant information efficiently and accurately, providing valuable insights for businesses and researchers. With its advanced algorithms, SOAX AI data collection can handle large volumes of data quickly and effectively, making it a valuable asset for anyone in need of data-driven decision-making.

Chat Data

Chat Data is an AI application that allows users to create custom chatbots using their own data sources. Users can easily build and integrate chatbots with their websites or other platforms, personalize the chatbot's interface, and access advanced features like human support escalation and product updates synchronization. The platform offers HIPAA-compliant medical chat models and ensures data privacy by retaining conversation data exclusively within the user's browser. With Chat Data, users can enhance customer interactions, gather insights, and streamline communication processes.

Overwatch Data

Overwatch Data is a comprehensive open-source intelligence platform that provides real-time, global understanding for cyber, fraud, security, supply chain, and market intelligence needs. The platform offers actionable insights through concise information, customizable monitoring, intuitive data visualizations, and real-time executive summaries. Users can chat with their data, ask free-form questions, and tailor alerts to their interests. Overwatch Data aims to save time and cut through the noise by delivering the most important information directly to the user's team.

Industrial Data Labs (IDL)

Industrial Data Labs (IDL) provides AI-powered sales tools specifically designed for the Pipe, Valve, Fitting, and Flange (PVF) industry. Their flagship product, the RFQ Organizer, uses AI to automate the time-consuming process of organizing and analyzing RFQs, saving sales teams hours per RFQ. This allows sales reps to focus on more strategic tasks, such as customer engagement and follow-ups, leading to increased sales performance and improved accuracy.

Radical Data Science

The Radical Data Science page covers recent advancements in AI technology, focusing on AI assistants and capabilities introduced by various companies. It highlights the use of Large Language Models (LLMs) and generative AI to enhance customer service experiences, improve operational efficiency, and drive innovation across industries. The page describes how AI avatars powered by NVIDIA technology are changing customer interactions and employee service experiences, and mentions the collaboration between ServiceNow and NVIDIA to develop AI avatars for Now Assist as an example of more engaging and personalized communication through digital characters. It also features the launch of Orchestrator LLM by Yellow.ai, an agent model that enables contextually aware, human-like customer conversations without the need for training.

Legal Data

Legal Data is a comprehensive legal research platform developed by lawyers for lawyers. It offers a powerful search feature that covers various legal areas from commercial to criminal law. The platform recognizes synonyms, legalese, and abbreviations, corrects typos, and provides suggestions as you type. Additionally, Legal Data includes an AI-assistant called FlyBot, trained on carefully selected laws and cases, to provide accurate legal answers without fabricating information.

Rapid AI DAta Yields

Rapid AI DAta Yields (RAIDAY) is a platform that provides AI tools, data products, and educational resources to help businesses and individuals leverage the power of artificial intelligence. RAIDAY's mission is to democratize and streamline the creation of simple yet powerful AI and data products for everyone, regardless of their technical expertise or resources. The platform offers a range of AI tools, including content generators, data analysis tools, and AI-powered chatbots. RAIDAY also provides a library of AI-generated content and data products that can be used to train AI models or to create new AI applications. In addition to its AI tools and data products, RAIDAY also offers a variety of educational resources, including tutorials, webinars, and blog posts, to help users learn about AI and how to use it effectively.

20 - Open Source AI Tools

DB-GPT

DB-GPT is a personal database administrator that can solve database problems by reading documents, using various tools, and writing analysis reports. It is currently undergoing an upgrade.

**Features:**

* **Online Demo:**
  * Import documents into the knowledge base
  * Utilize the knowledge base for well-founded Q&A and diagnosis analysis of abnormal alarms
  * Send feedback to refine the intermediate diagnosis results
  * Edit the diagnosis result
  * Browse all historical diagnosis results, used metrics, and detailed diagnosis processes
* **Language Support:**
  * English (default)
  * Chinese (add "language: zh" in config.yaml)
* **New Frontend:**
  * Knowledgebase + Chat Q&A + Diagnosis + Report Replay
* **Extreme Speed Version for localized llms:**
  * 4-bit quantized LLM (reducing inference time by 1/3)
  * vllm for fast inference (qwen)
  * Tiny LLM
* **Multi-path extraction of document knowledge:**
  * Vector database (ChromaDB)
  * RESTful Search Engine (Elasticsearch)
* **Expert prompt generation using document knowledge**
* **Upgrade the LLM-based diagnosis mechanism:**
  * Task Dispatching -> Concurrent Diagnosis -> Cross Review -> Report Generation
  * Synchronous Concurrency Mechanism during LLM inference
* **Support monitoring and optimization tools in multiple levels:**
  * Monitoring metrics (Prometheus)
  * Flame graph in code level
  * Diagnosis knowledge retrieval (dbmind)
  * Logical query transformations (Calcite)
  * Index optimization algorithms (for PostgreSQL)
  * Physical operator hints (for PostgreSQL)
  * Backup and Point-in-time Recovery (Pigsty)
* **Continuously updated papers and experimental reports**

This project is constantly evolving with new features. Don't forget to star ⭐ and watch 👀 to stay up to date.

tiny-llm-zh

Tiny LLM zh is a project aimed at building a small-parameter Chinese large language model as a quick entry point for learning about large models. The project implements a two-stage training process followed by human alignment, covering tokenization, pre-training, instruction fine-tuning, human alignment, evaluation, and deployment. It is deployed on the ModelScope Tiny LLM website and offers open access to all data and code, including the pre-training data and tokenizer. The tokenizer is trained on 10GB of Chinese encyclopedia text to build the Tiny LLM vocabulary. Training is supported with Transformers and DeepSpeed, across multiple machines and multiple GPUs, with ZeRO optimization techniques. The project has three main branches: llama2_torch, main tiny_llm, and tiny_llm_moe, each with specific modifications and features.

2024-AICS-EXP

This repository contains the complete archive of the 2024 version of the 'Intelligent Computing System' experiment at the University of Chinese Academy of Sciences. The experiment content for 2024 has undergone extensive adjustments to the knowledge system and experimental topics, including the transition from TensorFlow to PyTorch, significant modifications to previous code, and the addition of experiments with large models. The project is continuously updated in line with the course progress, currently up to the seventh experiment. Updates include the addition of experiments like YOLOv5 in Experiment 5-3, updates to theoretical teaching materials, and fixes for bugs in Experiment 6 code. The repository also includes experiment manuals, questions, and answers for various experiments, with some data sets hosted on Baidu Cloud due to size limitations on GitHub.

KwaiAgents

KwaiAgents is a series of Agent-related works open-sourced by [KwaiKEG](https://github.com/KwaiKEG) from [Kuaishou Technology](https://www.kuaishou.com/en). The open-sourced content includes:

1. **KAgentSys-Lite**: a lite version of the KAgentSys in the paper. While retaining some of the original system's functionality, KAgentSys-Lite has certain differences and limitations when compared to its full-featured counterpart, such as: (1) a more limited set of tools; (2) a lack of memory mechanisms; (3) slightly reduced performance capabilities; and (4) a different codebase, as it evolves from open-source projects like BabyAGI and Auto-GPT. Despite these modifications, KAgentSys-Lite still delivers comparable performance among numerous open-source Agent systems available.
2. **KAgentLMs**: a series of large language models with agent capabilities such as planning, reflection, and tool-use, acquired through the Meta-agent tuning proposed in the paper.
3. **KAgentInstruct**: over 200k Agent-related instructions finetuning data (partially human-edited) proposed in the paper.
4. **KAgentBench**: over 3,000 human-edited, automated evaluation data for testing Agent capabilities, with evaluation dimensions including planning, tool-use, reflection, concluding, and profiling.

DataFrame

DataFrame is a C++ analytical library designed for data analysis similar to libraries in Python and R. It allows you to slice, join, merge, group-by, and perform various statistical, summarization, financial, and ML algorithms on your data. DataFrame also includes a large collection of analytical algorithms in the form of visitors, ranging from basic stats to more involved analysis, and you can add your own algorithms as well. DataFrame employs extensive multithreading in almost all its APIs, making it suitable for analyzing large datasets. Key principles followed in the library include supporting any type without needing new code, avoiding pointer chasing, keeping all column data in contiguous memory, minimizing space usage, avoiding data copying, using multithreading judiciously, and not protecting the user against garbage in, garbage out.

ai-clone-whatsapp

This repository provides a tool to create an AI chatbot clone of yourself using your WhatsApp chats as training data. It utilizes the Torchtune library for finetuning and inference. The code includes preprocessing of WhatsApp chats, finetuning models, and chatting with the AI clone via a command-line interface. Supported models are Llama3-8B-Instruct and Mistral-7B-Instruct-v0.2. Hardware requirements include approximately 16 GB of VRAM for QLoRA Llama3 finetuning with a 4k context length. The repository addresses common issues like adjusting parameters for training and preprocessing non-English chats.

Consistency_LLM

Consistency Large Language Models (CLLMs) is a family of efficient parallel decoders that reduce inference latency by efficiently decoding multiple tokens in parallel. The models are trained to perform efficient Jacobi decoding, mapping any randomly initialized token sequence to the same result as auto-regressive decoding in as few steps as possible. CLLMs have shown significant improvements in generation speed on various tasks, achieving up to 3.4 times faster generation. The tool provides a seamless integration with other techniques for efficient Large Language Model (LLM) inference, without the need for draft models or architectural modifications.
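The Jacobi decoding idea mentioned above can be illustrated with a toy sketch. This is not the CLLM implementation: `next_token` is a hypothetical deterministic stand-in for a model's greedy next-token choice, and the function names are invented for illustration. The sketch only shows that repeatedly updating every position from the previous guess reaches the same sequence as auto-regressive decoding.

```python
import random


def next_token(prefix):
    """Hypothetical toy 'model': a deterministic function of the prefix."""
    return (sum(prefix) * 31 + len(prefix) * 7) % 50


def autoregressive_decode(prompt, n):
    """Generate n tokens one at a time, each conditioned on all earlier ones."""
    out = []
    for _ in range(n):
        out.append(next_token(prompt + out))
    return out


def jacobi_decode(prompt, n, seed=0):
    """Start from a random guess and update all n positions together.

    Every position i is recomputed from the previous guess for positions < i.
    The loop stops when an update leaves the guess unchanged (a fixed point).
    """
    rng = random.Random(seed)
    guess = [rng.randrange(50) for _ in range(n)]
    steps = 0
    while True:
        # All positions read from the same previous iterate, so in a real
        # system this update could be computed in one parallel forward pass.
        new = [next_token(prompt + guess[:i]) for i in range(n)]
        steps += 1
        if new == guess:
            return new, steps
        guess = new


prompt = [3, 1, 4]
reference = autoregressive_decode(prompt, 8)
result, steps = jacobi_decode(prompt, 8)
print(result == reference, steps)
```

With an untrained toy function like this one, the iteration may need about as many steps as there are tokens, because each step is only guaranteed to fix one more position. The description above says CLLMs are trained so that the fixed point is reached in as few steps as possible, which is where the reported speedup comes from.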

ai-on-openshift

AI on OpenShift is a site providing installation recipes, patterns, and demos for AI/ML tools and applications used in Data Science and Data Engineering projects running on OpenShift. It serves as a comprehensive resource for developers looking to deploy AI solutions on the OpenShift platform.

airflow-code-editor

The Airflow Code Editor Plugin is a tool designed for Apache Airflow users to edit Directed Acyclic Graphs (DAGs) directly within their browser. It offers a user-friendly file management interface for effortless editing, uploading, and downloading of files. With Git support enabled, users can store DAGs in a Git repository, explore Git history, review local modifications, and commit changes. The plugin enhances workflow efficiency by providing seamless DAG management capabilities.

LLM-Finetuning-Toolkit

LLM Finetuning toolkit is a config-based CLI tool for launching a series of LLM fine-tuning experiments on your data and gathering their results. It allows users to control all elements of a typical experimentation pipeline - prompts, open-source LLMs, optimization strategy, and LLM testing - through a single YAML configuration file. The toolkit supports basic, intermediate, and advanced usage scenarios, enabling users to run custom experiments, conduct ablation studies, and automate fine-tuning workflows. It provides features for data ingestion, model definition, training, inference, quality assurance, and artifact outputs, making it a comprehensive tool for fine-tuning large language models.

bia-bob

BIA `bob` is a Jupyter-based assistant for interacting with data, using large language models to generate Python code. It can use OpenAI's ChatGPT, Google's Gemini, Helmholtz' blablador, and Ollama; users need the respective accounts to access these services. Bob can assist with code generation, bug fixing, code documentation, and GPU acceleration, and offers a no-code custom Jupyter kernel. It provides example notebooks for tasks such as bio-image analysis, model selection, and bug fixing. Installation via a conda/mamba environment is recommended. Custom endpoints such as blablador and ollama can be used, and Google Cloud AI API integration is also supported. Python libraries can extend Bob's functionality.

freegenius

FreeGenius AI is an ambitious project offering a comprehensive suite of AI solutions that mirror the capabilities of LetMeDoIt AI. It is designed to engage in intuitive conversations, execute code, provide up-to-date information, and perform various tasks. The tool is free, customizable, and provides access to real-time data and device information. It aims to support offline and online backends, open-source large language models, and optional API keys. Users can use FreeGenius AI for tasks like generating tweets, analyzing audio, searching financial data, checking weather, and creating maps.

crawl4ai

Crawl4AI is a powerful and free web crawling service that extracts valuable data from websites and provides LLM-friendly output formats. It supports crawling multiple URLs simultaneously, replaces media tags with ALT, and is completely free to use and open-source. Users can integrate Crawl4AI into Python projects as a library or run it as a standalone local server. The tool allows users to crawl and extract data from specified URLs using different providers and models, with options to include raw HTML content, force fresh crawls, and extract meaningful text blocks. Configuration settings can be adjusted in the `crawler/config.py` file to customize providers, API keys, chunk processing, and word thresholds. Contributions to Crawl4AI are welcome from the open-source community to enhance its value for AI enthusiasts and developers.

VMind

VMind is an open-source solution for intelligent visualization, providing an intelligent chart component based on LLM by VisActor. It allows users to create chart narrative works with natural language interaction, edit charts through dialogue, and export narratives as videos or GIFs. The tool is easy to use, scalable, supports various chart types, and offers one-click export functionality. Users can customize chart styles, specify themes, and aggregate data using LLM models. VMind aims to enhance efficiency in creating data visualization works through dialogue-based editing and natural language interaction.

Model-References

The 'Model-References' repository contains examples for training and inference using Intel Gaudi AI Accelerator. It includes models for computer vision, natural language processing, audio, generative models, MLPerf™ training, and MLPerf™ inference. The repository provides performance data and model validation information for various frameworks like PyTorch. Users can find examples of popular models like ResNet, BERT, and Stable Diffusion optimized for Intel Gaudi AI accelerator.

deeplake

Deep Lake is a Database for AI powered by a storage format optimized for deep-learning applications. Deep Lake can be used for:

1. Storing data and vectors while building LLM applications
2. Managing datasets while training deep learning models

Deep Lake simplifies the deployment of enterprise-grade LLM-based products by offering storage for all data types (embeddings, audio, text, videos, images, pdfs, annotations, etc.), querying and vector search, data streaming while training models at scale, data versioning and lineage, and integrations with popular tools such as LangChain, LlamaIndex, Weights & Biases, and many more. Deep Lake works with data of any size, is serverless, and enables you to store all of your data in your own cloud and in one place. Deep Lake is used by Intel, Bayer Radiology, Matterport, ZERO Systems, Red Cross, Yale, & Oxford.

sql-eval

This repository contains the code that Defog uses to evaluate generated SQL. It is based on the schema from Spider, but with a new set of hand-selected questions and queries grouped by query category. The testing procedure involves generating a SQL query, running both the 'gold' query and the generated query on their respective databases to obtain dataframes with the results, comparing the dataframes using an 'exact' and a 'subset' match, logging these alongside other metrics of interest, and aggregating the results for reporting. The repository provides comprehensive instructions for installing dependencies, starting a Postgres instance, importing data into Postgres, importing data into Snowflake, using private data, implementing a query generator, and running the test with different runners.
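To make the two comparison modes concrete, here is a minimal sketch of one plausible reading of 'exact' and 'subset' matching. It is an assumption for illustration, not Defog's code: result sets are plain lists of row dictionaries rather than dataframes, and the function names `exact_match` and `subset_match` are hypothetical.

```python
from collections import Counter


def exact_match(gold, generated):
    """True when both results contain the same rows, ignoring row order."""
    def freeze(rows):
        # Turn each row into a hashable tuple so duplicate rows are counted.
        return Counter(tuple(sorted(row.items())) for row in rows)
    return freeze(gold) == freeze(generated)


def subset_match(gold, generated):
    """True when every gold column's values appear as some generated column.

    Column names and row order are ignored, so a generated query that
    returns extra columns can still pass. This is a loose check: columns
    are compared independently, not row by row.
    """
    def columns(rows):
        names = list(rows[0].keys()) if rows else []
        return [sorted((row[name] for row in rows), key=repr) for name in names]
    generated_columns = columns(generated)
    return all(col in generated_columns for col in columns(gold))


gold = [{"name": "a", "total": 1}, {"name": "b", "total": 2}]
reordered = [{"name": "b", "total": 2}, {"name": "a", "total": 1}]
with_extra = [{"id": 10, "name": "a", "total": 1},
              {"id": 11, "name": "b", "total": 2}]

print(exact_match(gold, reordered))    # same rows in a different order
print(exact_match(gold, with_extra))   # extra column breaks the exact match
print(subset_match(gold, with_extra))  # gold columns are all present
```

Reporting both results separates queries that return precisely the expected answer from queries that return the expected answer plus additional columns.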

Chinese-Tiny-LLM

Chinese-Tiny-LLM is a repository containing procedures for cleaning Chinese web corpora and pre-training code. It introduces CT-LLM, a 2B parameter language model focused on the Chinese language. The model primarily uses Chinese data from a 1,200 billion token corpus, showing excellent performance in Chinese language tasks. The repository includes tools for filtering, deduplication, and pre-training, aiming to encourage further research and innovation in language model development.

dataline

DataLine is an AI-driven data analysis and visualization tool designed for technical and non-technical users to explore data quickly. It offers privacy-focused data storage on the user's device, supports various data sources, generates charts, executes queries, and facilitates report building. The tool aims to speed up data analysis tasks for businesses and individuals by providing a user-friendly interface and natural language querying capabilities.

langtest

LangTest is a comprehensive evaluation library for custom LLM and NLP models. It aims to deliver safe and effective language models by providing tools to test model quality, augment training data, and support popular NLP frameworks. LangTest comes with benchmark datasets to challenge and enhance language models across various linguistic tasks. The tool offers more than 60 distinct types of tests with just one line of code, covering aspects like robustness, bias, representation, fairness, and accuracy. It supports testing LLMs for question answering, toxicity, clinical tests, legal support, factuality, sycophancy, and summarization.

20 - OpenAI Gpts

Interactive Spring API Creator

Pass in the attributes of a POJO entity class to generate the corresponding create, delete, update, and paginated query functions, along with a YAML database connection configuration file, database script files, and XML dynamic SQL statements.

Your Business Data Optimizer Pro

A chatbot expert in business data analysis and optimization.

Data Dynamo

A friendly data science coach offering practical, useful, and accurate advice.

DataKitchen DataOps and Data Observability GPT

A specialist in DataOps and Data Observability, aiding in data management and monitoring.

Alas Data Analytics Student Mentor

Hello, I am Alas Academy's AI mentor for Data Analytics. You can ask me any question :)

CannaIndustry Data Expert

Data trend analysis expert in cannabis, also skilled in image and data analysis, document generation, and web search.

Data Extractor Pro

Expert in data extraction and context-driven analysis. Can read most file types, including PDF, XLSX, Word, TXT, CSV, EML, etc.