Best AI tools for Critique Form

11 - AI tool Sites

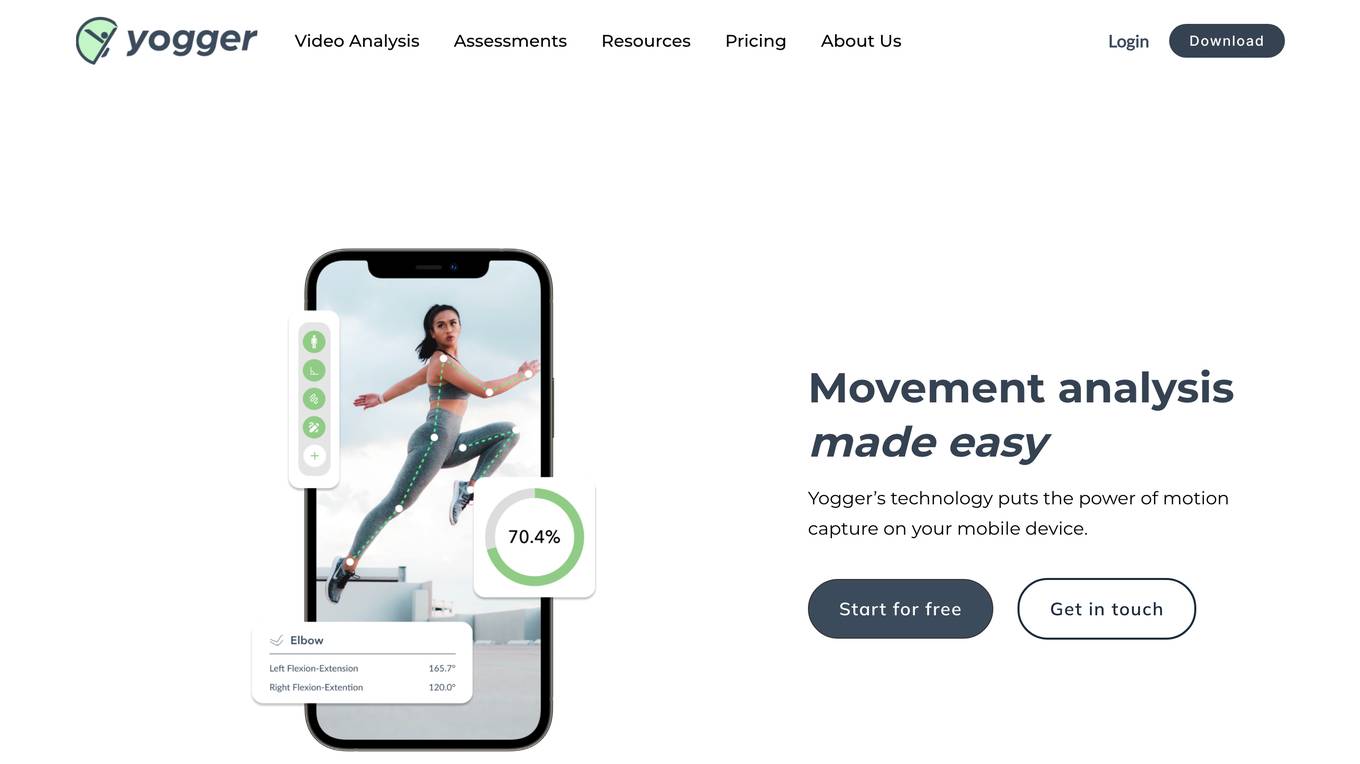

Yogger

Yogger is an AI-powered video analysis and movement assessment tool designed for coaches, trainers, physical therapists, and athletes. It allows users to track form, gather data, and analyze movement for any sport or activity in seconds. With AI-powered screenings, users can get instant scores and insights whether training in person or online. Yogger's software solutions help in recovery, enhance training, and prevent injuries, all accessible from a phone. The tool offers features like precision movement analysis, joint tracking with skeleton visualization, range of motion tracking, drawing tools for analysis, and virtual assessments for streamlined client evaluations.

Critique

Critique is an AI tool that redefines browsing by offering autonomous fact-checking, informed question answering, and a localized universal recommendation system. It automatically critiques comments and posts on platforms like Reddit, YouTube, and LinkedIn by vetting text on any website. The tool cross-references and analyzes articles in real time, providing vetted and summarized information directly in the user's browser.

Sun Group (China) Co., Ltd.

The website is the official site of the Sun Group (China) Co., Ltd., endorsed by Louis Koo. It provides information about the company's history, leadership, organizational structure, educational programs, research achievements, and employee activities. The site also features news updates, announcements, and resources for download.

LLM Quality Beefer-Upper

LLM Quality Beefer-Upper is an AI tool designed to enhance the quality and productivity of LLM responses by automating critique, reflection, and improvement. Users can generate multi-agent prompt drafts, choose from different quality levels, and upload knowledge text for processing. The application aims to maximize output quality by utilizing the best available LLM models in the market.

ProWritingAid

ProWritingAid is an AI-powered writing assistant that helps writers improve their writing. It offers a range of features, including a grammar checker, plagiarism checker, and story critique tool. ProWritingAid is used by writers of all levels, from beginners to bestselling authors. It is available as a web app, desktop app, and browser extension.

ScriptReader.ai

ScriptReader.ai is an AI-powered screenplay analysis tool that provides detailed critiques and suggestions for every scene of your screenplay. It offers personalized feedback to help writers improve their scripts and elevate their writing game. With the ability to analyze strengths and weaknesses, provide grades, critiques, and suggestions for improvement on a scene-by-scene basis, ScriptReader.ai aims to help both seasoned screenwriters and beginners enhance their work and create captivating masterpieces.

Feedback Wizard

Feedback Wizard is an AI-powered tool designed to provide instant design feedback directly within Figma. It leverages AI technology to offer design wisdom and actionable insights to improve user experience and elevate the visual elements of Figma designs. With over 2700 designers already using the tool, Feedback Wizard aims to streamline the design feedback process and enhance the overall design quality.

Mock-My-Mockup

Mock-My-Mockup is an AI-powered product design tool created by Fairpixels. It allows users to upload a screenshot of a page they are working on and receive brutally honest feedback. The tool offers a user-friendly interface where users can easily drag and drop their product screenshots for analysis.

Resume Roaster AI

The website offers a service where users can have their resumes analyzed and critiqued by an AI tool. Users can submit their resumes to receive feedback on areas for improvement. The AI tool provides insights on resume quality, structure, and content to help users enhance their job application documents. It aims to assist individuals in creating more effective resumes to increase their chances of securing job opportunities.

Hell's Pitching

Hell's Pitching is an AI-powered assistant designed to help entrepreneurs refine their startup ideas by providing brutally honest feedback and insightful questions. It offers a unique approach to guiding and challenging founders in building successful startups. The tool allows users to pitch their ideas and receive side-splittingly funny roasts that lead to 'aha' moments and innovative insights. With a focus on no-nonsense critiques and humor, Hell's Pitching aims to transform startup ideas by providing wisdom and valuable feedback. The platform is free for all users, encouraging access to honest feedback for everyone.

RAD AI

RAD AI is an AI-powered platform that provides solutions for audience insights, influencer discovery, content optimization, managed services, and more. The platform uses advanced machine learning to analyze real-time conversations from social platforms like Reddit, TikTok, and Twitter. RAD AI offers actionable critiques to enhance brand content and helps in selecting the right influencers based on various factors. The platform aims to help brands reach their target audiences effectively and efficiently by leveraging AI technology.

20 - Open Source AI Tools

Awesome-LLM-in-Social-Science

This repository compiles a list of academic papers that evaluate, align, simulate, and provide surveys or perspectives on the use of Large Language Models (LLMs) in the field of Social Science. The papers cover various aspects of LLM research, including assessing their alignment with human values, evaluating their capabilities in tasks such as opinion formation and moral reasoning, and exploring their potential for simulating social interactions and addressing issues in diverse fields of Social Science. The repository aims to provide a comprehensive resource for researchers and practitioners interested in the intersection of LLMs and Social Science.

pywhy-llm

PyWhy-LLM is an innovative library that integrates Large Language Models (LLMs) into the causal analysis process, empowering users with knowledge previously only available through domain experts. It seamlessly augments existing causal inference processes by suggesting potential confounders, relationships between variables, backdoor sets, front door sets, IV sets, estimands, critiques of DAGs, latent confounders, and negative controls. By leveraging LLMs and formalizing human-LLM collaboration, PyWhy-LLM aims to enhance causal analysis accessibility and insight.

awesome-RLAIF

Reinforcement Learning from AI Feedback (RLAIF) is a concept that describes a type of machine learning approach where **an AI agent learns by receiving feedback or guidance from another AI system**. This concept is closely related to the field of Reinforcement Learning (RL), which is a type of machine learning where an agent learns to make a sequence of decisions in an environment to maximize a cumulative reward. In traditional RL, an agent interacts with an environment and receives feedback in the form of rewards or penalties based on the actions it takes. It learns to improve its decision-making over time to achieve its goals.

In the context of Reinforcement Learning from AI Feedback, the AI agent still aims to learn optimal behavior through interactions, but **the feedback comes from another AI system rather than from the environment or human evaluators**. This can be **particularly useful in situations where it may be challenging to define clear reward functions or when it is more efficient to use another AI system to provide guidance**.

The feedback from the AI system can take various forms, such as:

- **Demonstrations**: The AI system provides demonstrations of desired behavior, and the learning agent tries to imitate these demonstrations.
- **Comparison Data**: The AI system ranks or compares different actions taken by the learning agent, helping it to understand which actions are better or worse.
- **Reward Shaping**: The AI system provides additional reward signals to guide the learning agent's behavior, supplementing the rewards from the environment.

This approach is often used in scenarios where the RL agent needs to learn from **limited human or expert feedback or when the reward signal from the environment is sparse or unclear**. It can also be used to **accelerate the learning process and make RL more sample-efficient**.

Reinforcement Learning from AI Feedback is an area of ongoing research and has applications in various domains, including robotics, autonomous vehicles, and game playing, among others.
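
As a rough illustration of the comparison-data form of AI feedback described above, the sketch below turns an AI judge's scores into (chosen, rejected) preference pairs. It is not taken from the awesome-RLAIF repository: `toy_ai_judge` and `label_preferences` are hypothetical names, and the judge is a word-overlap stand-in for a real AI feedback model.

```python
from typing import Callable, List, Tuple


def toy_ai_judge(prompt: str, response: str) -> float:
    """Hypothetical stand-in for an AI feedback model.

    Scores a response by how many distinct prompt words it reuses.
    A real RLAIF setup would query another model here instead.
    """
    prompt_words = set(prompt.lower().split())
    response_words = set(response.lower().split())
    return float(len(prompt_words & response_words))


def label_preferences(
    prompt: str,
    candidates: List[str],
    judge: Callable[[str, str], float],
) -> List[Tuple[str, str]]:
    """Compare every pair of candidates and return (chosen, rejected) pairs.

    Pairs that the judge scores equally are skipped, since they carry
    no preference signal.
    """
    pairs: List[Tuple[str, str]] = []
    for i in range(len(candidates)):
        for j in range(i + 1, len(candidates)):
            a, b = candidates[i], candidates[j]
            score_a, score_b = judge(prompt, a), judge(prompt, b)
            if score_a > score_b:
                pairs.append((a, b))
            elif score_b > score_a:
                pairs.append((b, a))
    return pairs


example_pairs = label_preferences(
    "what is reinforcement learning",
    ["reinforcement learning is trial and error", "no idea"],
    toy_ai_judge,
)
```

Pairs like these are the kind of data a reward model or preference-based training step would typically consume; that downstream step is left out of the sketch.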

llm-self-correction-papers

This repository contains a curated list of papers focusing on the self-correction of large language models (LLMs) during inference. It covers various frameworks for self-correction, including intrinsic self-correction, self-correction with external tools, self-correction with information retrieval, and self-correction with training designed specifically for self-correction. The list includes survey papers, negative results, and frameworks utilizing reinforcement learning and OpenAI o1-like approaches. Contributions are welcome through pull requests following a specific format.
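
To make the idea of intrinsic self-correction at inference time more concrete, here is a minimal sketch of a critique-and-revise loop. It does not reproduce any specific paper from the list: `self_correct`, `toy_critique`, and `toy_revise` are hypothetical names, and the toy critic and reviser stand in for calls to a language model.

```python
from typing import Callable, Optional


def self_correct(
    draft: str,
    critique: Callable[[str], Optional[str]],
    revise: Callable[[str, str], str],
    max_rounds: int = 3,
) -> str:
    """Repeatedly critique and revise a draft.

    Stops as soon as the critic returns None (no complaints) or after
    max_rounds revisions, whichever comes first.
    """
    for _ in range(max_rounds):
        feedback = critique(draft)
        if feedback is None:
            break
        draft = revise(draft, feedback)
    return draft


def toy_critique(text: str) -> Optional[str]:
    """Hypothetical critic: only checks that the text ends with a period."""
    return None if text.endswith(".") else "end the sentence with a period"


def toy_revise(text: str, feedback: str) -> str:
    """Hypothetical reviser: applies the single fix the toy critic can request."""
    return text + "."


corrected = self_correct("Paris is the capital of France", toy_critique, toy_revise)
```

Bounding the loop with `max_rounds` is a design choice for the sketch: it keeps the loop from running forever when the critic and reviser never converge.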

CritiqueLLM

CritiqueLLM is an official implementation of a model designed for generating informative critiques to evaluate large language model generation. It includes functionalities for data collection, referenced pointwise grading, referenced pairwise comparison, reference-free pairwise comparison, reference-free pointwise grading, inference for pointwise grading and pairwise comparison, and evaluation of the generated results. The model aims to provide a comprehensive framework for evaluating the performance of large language models based on human ratings and comparisons.

RAG-Survey

This repository is dedicated to collecting and categorizing papers related to Retrieval-Augmented Generation (RAG) for AI-generated content. It serves as a survey repository based on the paper 'Retrieval-Augmented Generation for AI-Generated Content: A Survey'. The repository is continuously updated to keep up with the rapid growth in the field of RAG.
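
For readers new to the term, the retrieve-then-generate pattern behind RAG can be sketched in a few lines. This is an assumption-laden toy, not code from the survey or the repository: `retrieve` and `build_prompt` are hypothetical helpers, retrieval is plain word overlap rather than a dense retriever, and the final generation call is omitted.

```python
from typing import List


def retrieve(query: str, documents: List[str], k: int = 2) -> List[str]:
    """Toy lexical retriever: rank documents by words shared with the query."""
    query_words = set(query.lower().split())
    ranked = sorted(
        documents,
        key=lambda doc: len(query_words & set(doc.lower().split())),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query: str, passages: List[str]) -> str:
    """Place the retrieved passages in front of the question as context."""
    context = "\n".join(f"- {passage}" for passage in passages)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )


docs = [
    "RAG combines retrieval with generation",
    "Bananas are yellow",
    "Retrieval finds relevant passages",
]
question = "how does retrieval help generation"
top_passages = retrieve(question, docs, k=2)
prompt = build_prompt(question, top_passages)
```

The resulting `prompt` string is what would be passed to a generator model in a full pipeline.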

awesome-llm-attributions

This repository focuses on unraveling the sources that large language models tap into for attribution or citation. It delves into the origins of facts, their utilization by the models, the efficacy of attribution methodologies, and challenges tied to ambiguous knowledge reservoirs, biases, and pitfalls of excessive attribution.

LLM-Powered-RAG-System

LLM-Powered-RAG-System is a comprehensive repository containing frameworks, projects, components, evaluation tools, papers, blogs, and other resources related to Retrieval-Augmented Generation (RAG) systems powered by Large Language Models (LLMs). The repository includes various frameworks for building applications with LLMs, data frameworks, modular graph-based RAG systems, dense retrieval models, and efficient retrieval augmentation and generation frameworks. It also features projects such as personal productivity assistants, knowledge-based platforms, chatbots, question and answer systems, and code assistants. Additionally, the repository provides components for interacting with documents, databases, and optimization methods using ML and LLM technologies. Evaluation frameworks, papers, blogs, and other resources related to RAG systems are also included.

Awesome-LLM-RAG

This repository, Awesome-LLM-RAG, aims to record advanced papers on Retrieval Augmented Generation (RAG) in Large Language Models (LLMs). It serves as a resource hub for researchers interested in promoting their work related to LLM RAG by updating paper information through pull requests. The repository covers various topics such as workshops, tutorials, papers, surveys, benchmarks, retrieval-enhanced LLMs, RAG instruction tuning, RAG in-context learning, RAG embeddings, RAG simulators, RAG search, RAG long-text and memory, RAG evaluation, RAG optimization, and RAG applications.

LLM-as-a-Judge

LLM-as-a-Judge is a repository that includes papers discussed in a survey paper titled 'A Survey on LLM-as-a-Judge'. The repository covers various aspects of using Large Language Models (LLMs) as judges for tasks such as evaluation, reasoning, and decision-making. It provides insights into evaluation pipelines, improvement strategies, and specific tasks related to LLMs. The papers included in the repository explore different methodologies, applications, and future research directions for leveraging LLMs as evaluators in various domains.

lagent

Lagent is a lightweight open-source framework that allows users to efficiently build large language model (LLM)-based agents. It also provides some typical tools to augment LLMs.

bosquet

Bosquet is a tool designed for LLMOps in large language model-based applications. It simplifies building AI applications by managing LLM and tool services, integrating with Selmer templating library for prompt templating, enabling prompt chaining and composition with Pathom graph processing, defining agents and tools for external API interactions, handling LLM memory, and providing features like call response caching. The tool aims to streamline the development process for AI applications that require complex prompt templates, memory management, and interaction with external systems.

awesome-deliberative-prompting

The 'awesome-deliberative-prompting' repository focuses on how to ask Large Language Models (LLMs) to produce reliable reasoning and make reason-responsive decisions through deliberative prompting. It includes success stories, prompting patterns and strategies, multi-agent deliberation, reflection and meta-cognition, text generation techniques, self-correction methods, reasoning analytics, limitations, failures, puzzles, datasets, tools, and other resources related to deliberative prompting. The repository provides a comprehensive overview of research, techniques, and tools for enhancing reasoning capabilities of LLMs.

parlant

Parlant is a structured approach to building and guiding customer-facing AI agents. It allows developers to create and manage robust AI agents, providing specific feedback on agent behavior and helping understand user intentions better. With features like guidelines, glossary, coherence checks, dynamic context, and guided tool use, Parlant offers control over agent responses and behavior. Developer-friendly aspects include instant changes, Git integration, clean architecture, and type safety. It enables confident deployment with scalability, effective debugging, and validation before deployment. Parlant works with major LLM providers and offers client SDKs for Python and TypeScript. The tool facilitates natural customer interactions through asynchronous communication and provides a chat UI for testing new behaviors before deployment.

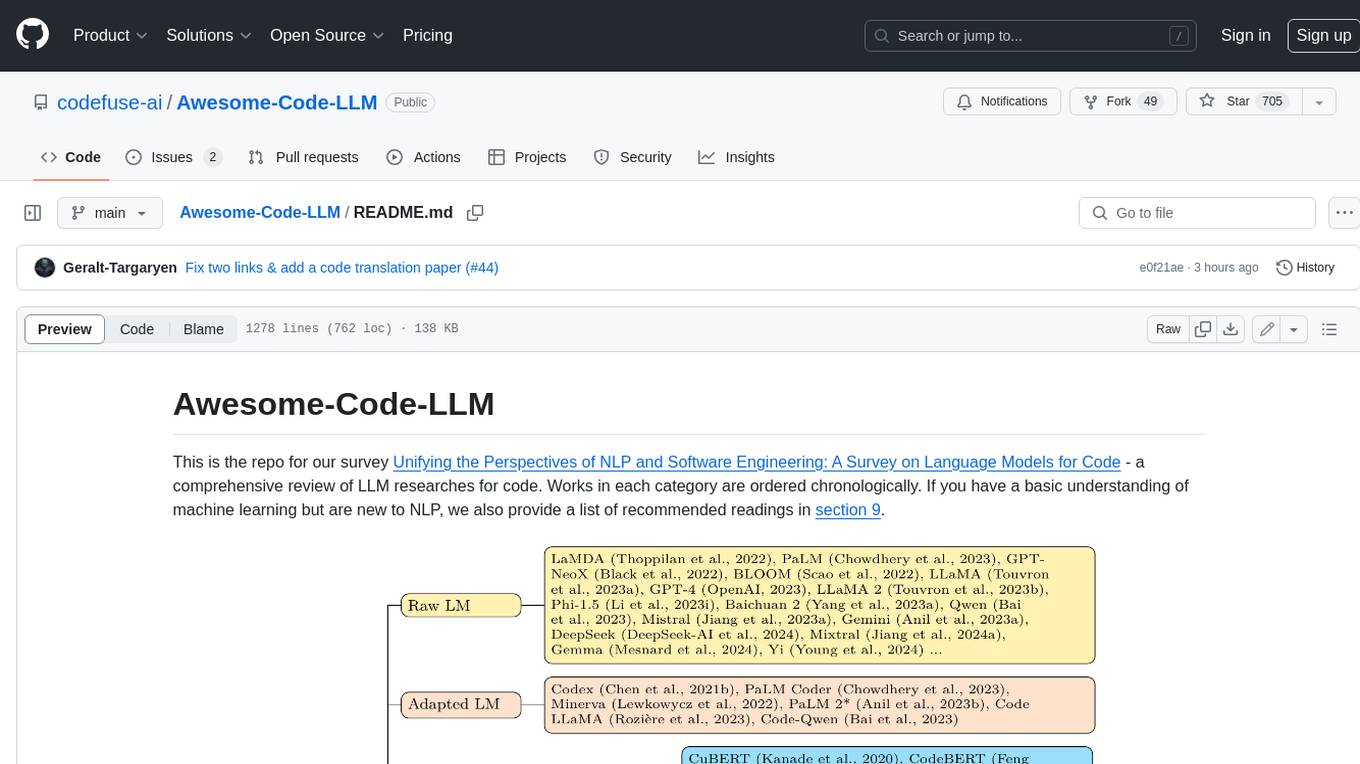

Awesome-Code-LLM

chatgpt-universe

ChatGPT is a large language model that can generate human-like text, translate languages, write different kinds of creative content, and answer your questions in a conversational way. It is trained on a massive amount of text data, and it is able to understand and respond to a wide range of natural language prompts.

NeMo-Guardrails

NeMo Guardrails is an open-source toolkit for easily adding _programmable guardrails_ to LLM-based conversational applications. Guardrails (or "rails" for short) are specific ways of controlling the output of a large language model, such as not talking about politics, responding in a particular way to specific user requests, following a predefined dialog path, using a particular language style, extracting structured data, and more.

DecryptPrompt

This repository does not provide a tool, but rather a collection of resources and strategies for academics in the field of artificial intelligence who are feeling depressed or overwhelmed by the rapid advancements in the field. The resources include articles, blog posts, and other materials that offer advice on how to cope with the challenges of working in a fast-paced and competitive environment.

20 - OpenAI Gpts

Website Design Critique Expert

Critiques website designs and creates shareable summary graphics.

Trey Ratcliff's Fun Photo Critique GPT

Critiquing photos with humor and expertise, drawing from my 5,000 blog entries and books. Share your photo for a unique critique experience!

Legal Tech Generhater

I'll critique your legal tech ideas with my signature snark and design fittingly bad logos.

Apollo

Expert in art critique and analysis, knowledgeable in art history, theory, and psychology.

Image Generation with Selfcritique & Improvement

More accurate and easier image generation with self-critique and improvement! Try it now.

Academic Research Reviewer

Upon uploading a research paper, I provide a concise section-wise analysis covering Abstract, Lit Review, Findings, Methodology, and Conclusion. I also critique the work, highlight its strengths, and answer any open questions from my knowledge base of open-source materials.

AutoExpert (Academic)

Upon uploading a research paper, I provide a concise analysis covering its authors, key findings, methodology, and relevance. I also critique the work, highlight its strengths, and identify any open questions from a professional perspective.

Executive Insight

I'm a Fortune 100 exec who critiques presentations, papers, emails, etc.

Arte Crítico

Expert in art criticism and curation, specializing in reviews and descriptions of artworks.

Riley

An interactive resume assistant, providing detailed insights on Randy's professional background.