tlm

Local CLI Copilot, powered by Ollama. 💻🦙

Stars: 1394

tlm is a local CLI copilot tool powered by CodeLLaMa, providing efficient command line suggestions without the need for an API key or internet connection. It works on macOS, Linux, and Windows, with automatic shell detection for Powershell, Bash, and Zsh. The tool offers one-liner generation and command explanation, and can be installed via an installation script or using Go Install. Ollama is required to download necessary models, and the tool can be easily deployed and configured. Contributors are welcome to enhance the tool's functionality.

README:

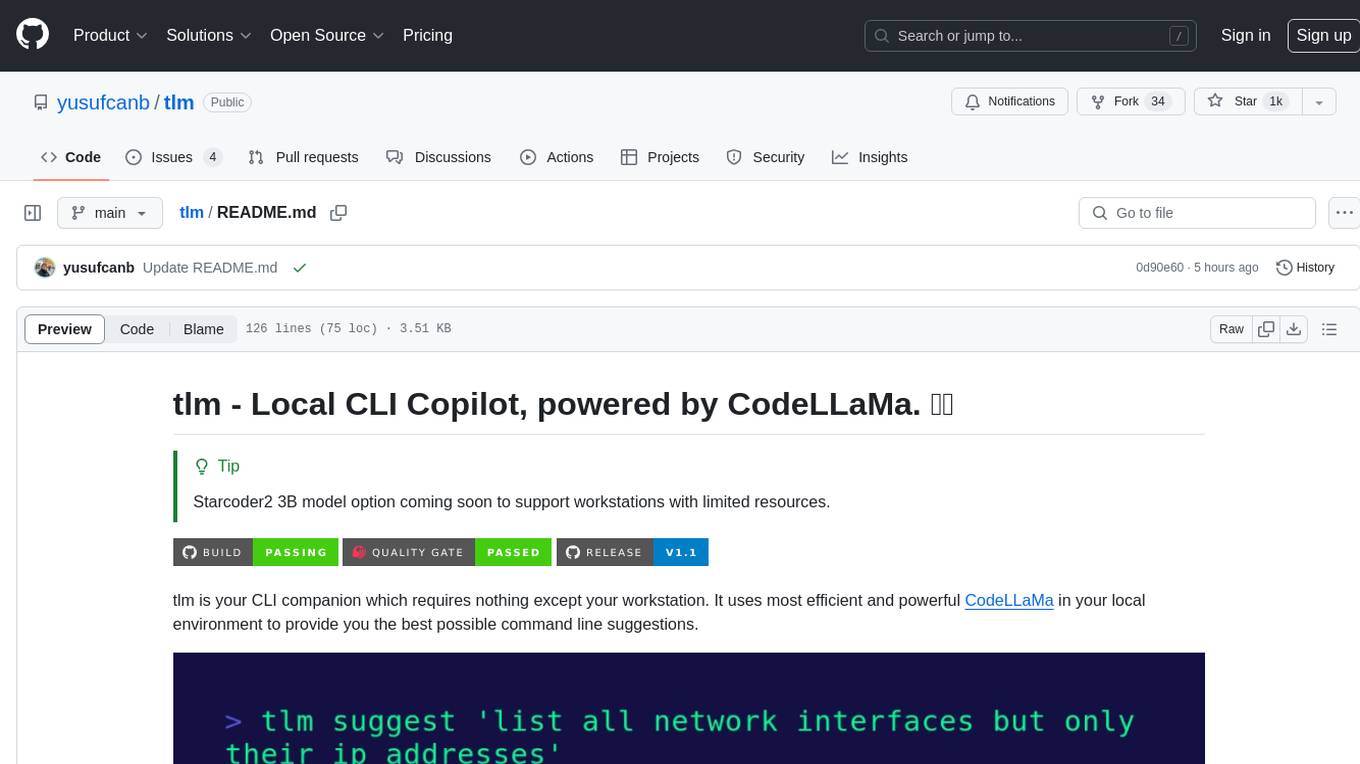

tlm is your CLI companion which requires nothing except your workstation. It uses most efficient and powerful open-source models like Llama 3.3, Phi4, DeepSeek-R1, Qwen of your choice in your local environment to provide you the best possible command line assistance.

| Get a suggestion | Explain a command |

|---|---|

|

|

| Ask with context (One-liner RAG) | Configure your favorite model |

|---|---|

|

|

-

💸 No API Key (Subscription) is required. (ChatGPT, Claude, Github Copilot, Azure OpenAI, etc.)

-

📡 No internet connection is required.

-

💻 Works on macOS, Linux and Windows.

-

👩🏻💻 Automatic shell detection. (Powershell, Bash, Zsh)

-

🚀 One liner generation and command explanation.

-

🖺 No-brainer RAG (Retrieval Augmented Generation)

-

🧠 Experiment any model. (Llama3, Phi4, DeepSeek-R1, Qwen) with parameters of your choice.

Installation can be done in two ways;

- Installation script (recommended)

- Go Install

Installation script is the recommended way to install tlm. It will recognize the which platform and architecture to download and will execute install command for you.

Download and execute the installation script by using the following command;

curl -fsSL https://raw.githubusercontent.com/yusufcanb/tlm/1.2/install.sh | sudo -E bashDownload and execute the installation script by using the following command;

Invoke-RestMethod -Uri https://raw.githubusercontent.com/yusufcanb/tlm/1.2/install.ps1 | Invoke-ExpressionIf you have Go 1.22 or higher installed on your system, you can easily use the following command to install tlm;

go install github.com/yusufcanb/[email protected]You're ready! Check installation by using the following command;

tlm$ tlm

NAME:

tlm - terminal copilot, powered by open-source models.

USAGE:

tlm suggest "<prompt>"

tlm s --model=qwen2.5-coder:1.5b --style=stable "<prompt>"

tlm explain "<command>" # explain a command

tlm e --model=llama3.2:1b --style=balanced "<command>" # explain a command with a overrided model

tlm ask "<prompt>" # ask a question

tlm ask --context . --include *.md "<prompt>" # ask a question with a context

VERSION:

1.2

COMMANDS:

ask, a Asks a question (beta)

suggest, s Suggests a command.

explain, e Explains a command.

config, c Configures language model, style and shell

version, v Prints tlm version.

help, h Shows a list of commands or help for one command

GLOBAL OPTIONS:

--help, -h show help

--version, -v print the version

Ask a question with context. Here is an example question with a context of this repositories Go files under ask package.

$ tlm ask --help

NAME:

tlm ask - Asks a question (beta)

USAGE:

tlm ask "<prompt>" # ask a question

tlm ask --context . --include *.md "<prompt>" # ask a question with a context

OPTIONS:

--context value, -c value context directory path

--include value, -i value [ --include value, -i value ] include patterns. e.g. --include=*.txt or --include=*.txt,*.md

--exclude value, -e value [ --exclude value, -e value ] exclude patterns. e.g. --exclude=**/*_test.go or --exclude=*.pyc,*.pyd

--interactive, --it enable interactive chat mode (default: false)

--model value, -m value override the model for command suggestion. (default: qwen2 5-coder:3b)

--help, -h show help

$ tlm suggest --help

NAME:

tlm suggest - Suggests a command.

USAGE:

tlm suggest <prompt>

tlm suggest --model=llama3.2:1b <prompt>

tlm suggest --model=llama3.2:1b --style=<stable|balanced|creative> <prompt>

DESCRIPTION:

suggests a command for given prompt.

COMMANDS:

help, h Shows a list of commands or help for one command

OPTIONS:

--model value, -m value override the model for command suggestion. (default: qwen2.5-coder:3b)

--style value, -s value override the style for command suggestion. (default: balanced)

--help, -h show help

$ tlm explain --help

NAME:

tlm explain - Explains a command.

USAGE:

tlm explain <command>

tlm explain --model=llama3.2:1b <command>

tlm explain --model=llama3.2:1b --style=<stable|balanced|creative> <command>

DESCRIPTION:

explains given shell command.

COMMANDS:

help, h Shows a list of commands or help for one command

OPTIONS:

--model value, -m value override the model for command suggestion. (default: qwen2.5-coder:3b)

--style value, -s value override the style for command suggestion. (default: balanced)

--help, -h show help

On Linux and macOS;

rm /usr/local/bin/tlm

rm ~/.tlm.ymlOn Windows;

Remove-Item -Recurse -Force "C:\Users\$env:USERNAME\AppData\Local\Programs\tlm"

Remove-Item -Force "$HOME\.tlm.yml"For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for tlm

Similar Open Source Tools

tlm

tlm is a local CLI copilot tool powered by CodeLLaMa, providing efficient command line suggestions without the need for an API key or internet connection. It works on macOS, Linux, and Windows, with automatic shell detection for Powershell, Bash, and Zsh. The tool offers one-liner generation and command explanation, and can be installed via an installation script or using Go Install. Ollama is required to download necessary models, and the tool can be easily deployed and configured. Contributors are welcome to enhance the tool's functionality.

tgpt

tgpt is a cross-platform command-line interface (CLI) tool that allows users to interact with AI chatbots in the Terminal without needing API keys. It supports various AI providers such as KoboldAI, Phind, Llama2, Blackbox AI, and OpenAI. Users can generate text, code, and images using different flags and options. The tool can be installed on GNU/Linux, MacOS, FreeBSD, and Windows systems. It also supports proxy configurations and provides options for updating and uninstalling the tool.

lexido

Lexido is an innovative assistant for the Linux command line, designed to boost your productivity and efficiency. Powered by Gemini Pro 1.0 and utilizing the free API, Lexido offers smart suggestions for commands based on your prompts and importantly your current environment. Whether you're installing software, managing files, or configuring system settings, Lexido streamlines the process, making it faster and more intuitive.

raglite

RAGLite is a Python toolkit for Retrieval-Augmented Generation (RAG) with PostgreSQL or SQLite. It offers configurable options for choosing LLM providers, database types, and rerankers. The toolkit is fast and permissive, utilizing lightweight dependencies and hardware acceleration. RAGLite provides features like PDF to Markdown conversion, multi-vector chunk embedding, optimal semantic chunking, hybrid search capabilities, adaptive retrieval, and improved output quality. It is extensible with a built-in Model Context Protocol server, customizable ChatGPT-like frontend, document conversion to Markdown, and evaluation tools. Users can configure RAGLite for various tasks like configuring, inserting documents, running RAG pipelines, computing query adapters, evaluating performance, running MCP servers, and serving frontends.

clickclickclick

ClickClickClick is a framework designed to enable autonomous Android and computer use using various LLM models, both locally and remotely. It supports tasks such as drafting emails, opening browsers, and starting games, with current support for local models via Ollama, Gemini, and GPT 4o. The tool is highly experimental and evolving, with the best results achieved using specific model combinations. Users need prerequisites like `adb` installation and USB debugging enabled on Android phones. The tool can be installed via cloning the repository, setting up a virtual environment, and installing dependencies. It can be used as a CLI tool or script, allowing users to configure planner and finder models for different tasks. Additionally, it can be used as an API to execute tasks based on provided prompts, platform, and models.

mLoRA

mLoRA (Multi-LoRA Fine-Tune) is an open-source framework for efficient fine-tuning of multiple Large Language Models (LLMs) using LoRA and its variants. It allows concurrent fine-tuning of multiple LoRA adapters with a shared base model, efficient pipeline parallelism algorithm, support for various LoRA variant algorithms, and reinforcement learning preference alignment algorithms. mLoRA helps save computational and memory resources when training multiple adapters simultaneously, achieving high performance on consumer hardware.

raycast_api_proxy

The Raycast AI Proxy is a tool that acts as a proxy for the Raycast AI application, allowing users to utilize the application without subscribing. It intercepts and forwards Raycast requests to various AI APIs, then reformats the responses for Raycast. The tool supports multiple AI providers and allows for custom model configurations. Users can generate self-signed certificates, add them to the system keychain, and modify DNS settings to redirect requests to the proxy. The tool is designed to work with providers like OpenAI, Azure OpenAI, Google, and more, enabling tasks such as AI chat completions, translations, and image generation.

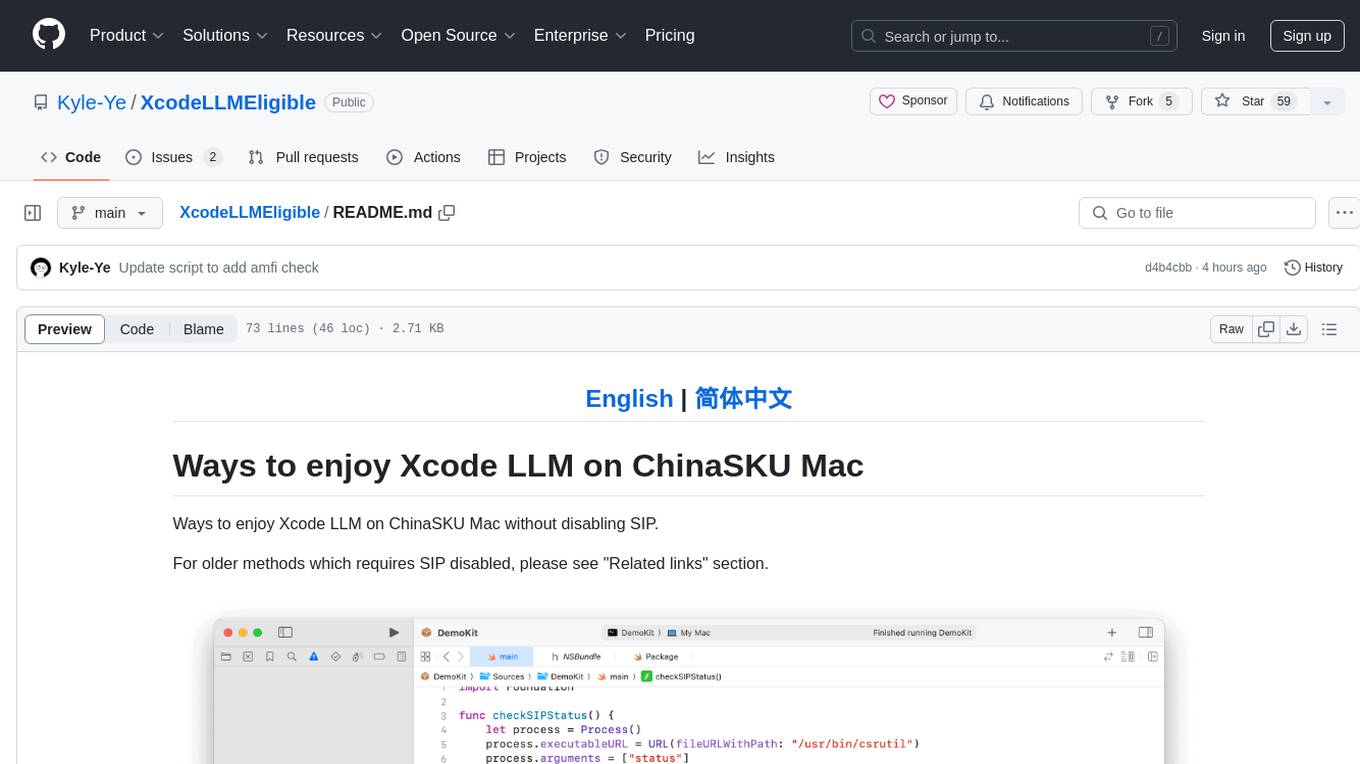

XcodeLLMEligible

XcodeLLMEligible is a project that provides ways to enjoy Xcode LLM on ChinaSKU Mac without disabling SIP. It offers methods for script execution and manual execution, allowing users to override eligibility service features. The project is for learning and research purposes only, and users are responsible for compliance with applicable laws. The author disclaims any responsibility for consequences arising from the use of the project.

metis

Metis is an open-source, AI-driven tool for deep security code review, created by Arm's Product Security Team. It helps engineers detect subtle vulnerabilities, improve secure coding practices, and reduce review fatigue. Metis uses LLMs for semantic understanding and reasoning, RAG for context-aware reviews, and supports multiple languages and vector store backends. It provides a plugin-friendly and extensible architecture, named after the Greek goddess of wisdom, Metis. The tool is designed for large, complex, or legacy codebases where traditional tooling falls short.

r2ai

r2ai is a tool designed to run a language model locally without internet access. It can be used to entertain users or assist in answering questions related to radare2 or reverse engineering. The tool allows users to prompt the language model, index large codebases, slurp file contents, embed the output of an r2 command, define different system-level assistant roles, set environment variables, and more. It is accessible as an r2lang-python plugin and can be scripted from various languages. Users can use different models, adjust query templates dynamically, load multiple models, and make them communicate with each other.

tuui

TUUI is a desktop MCP client designed for accelerating AI adoption through the Model Context Protocol (MCP) and enabling cross-vendor LLM API orchestration. It is an LLM chat desktop application based on MCP, created using AI-generated components with strict syntax checks and naming conventions. The tool integrates AI tools via MCP, orchestrates LLM APIs, supports automated application testing, TypeScript, multilingual, layout management, global state management, and offers quick support through the GitHub community and official documentation.

xFasterTransformer

xFasterTransformer is an optimized solution for Large Language Models (LLMs) on the X86 platform, providing high performance and scalability for inference on mainstream LLM models. It offers C++ and Python APIs for easy integration, along with example codes and benchmark scripts. Users can prepare models in a different format, convert them, and use the APIs for tasks like encoding input prompts, generating token ids, and serving inference requests. The tool supports various data types and models, and can run in single or multi-rank modes using MPI. A web demo based on Gradio is available for popular LLM models like ChatGLM and Llama2. Benchmark scripts help evaluate model inference performance quickly, and MLServer enables serving with REST and gRPC interfaces.

lollms_legacy

Lord of Large Language Models (LoLLMs) Server is a text generation server based on large language models. It provides a Flask-based API for generating text using various pre-trained language models. This server is designed to be easy to install and use, allowing developers to integrate powerful text generation capabilities into their applications. The tool supports multiple personalities for generating text with different styles and tones, real-time text generation with WebSocket-based communication, RESTful API for listing personalities and adding new personalities, easy integration with various applications and frameworks, sending files to personalities, running on multiple nodes to provide a generation service to many outputs at once, and keeping data local even in the remote version.

trieve

Trieve is an advanced relevance API for hybrid search, recommendations, and RAG. It offers a range of features including self-hosting, semantic dense vector search, typo tolerant full-text/neural search, sub-sentence highlighting, recommendations, convenient RAG API routes, the ability to bring your own models, hybrid search with cross-encoder re-ranking, recency biasing, tunable popularity-based ranking, filtering, duplicate detection, and grouping. Trieve is designed to be flexible and customizable, allowing users to tailor it to their specific needs. It is also easy to use, with a simple API and well-documented features.

fragments

Fragments is an open-source tool that leverages Anthropic's Claude Artifacts, Vercel v0, and GPT Engineer. It is powered by E2B Sandbox SDK and Code Interpreter SDK, allowing secure execution of AI-generated code. The tool is based on Next.js 14, shadcn/ui, TailwindCSS, and Vercel AI SDK. Users can stream in the UI, install packages from npm and pip, and add custom stacks and LLM providers. Fragments enables users to build web apps with Python interpreter, Next.js, Vue.js, Streamlit, and Gradio, utilizing providers like OpenAI, Anthropic, Google AI, and more.

shellChatGPT

ShellChatGPT is a shell wrapper for OpenAI's ChatGPT, DALL-E, Whisper, and TTS, featuring integration with LocalAI, Ollama, Gemini, Mistral, Groq, and GitHub Models. It provides text and chat completions, vision, reasoning, and audio models, voice-in and voice-out chatting mode, text editor interface, markdown rendering support, session management, instruction prompt manager, integration with various service providers, command line completion, file picker dialogs, color scheme personalization, stdin and text file input support, and compatibility with Linux, FreeBSD, MacOS, and Termux for a responsive experience.

For similar tasks

tlm

tlm is a local CLI copilot tool powered by CodeLLaMa, providing efficient command line suggestions without the need for an API key or internet connection. It works on macOS, Linux, and Windows, with automatic shell detection for Powershell, Bash, and Zsh. The tool offers one-liner generation and command explanation, and can be installed via an installation script or using Go Install. Ollama is required to download necessary models, and the tool can be easily deployed and configured. Contributors are welcome to enhance the tool's functionality.

please-cli

Please CLI is an AI helper script designed to create CLI commands by leveraging the GPT model. Users can input a command description, and the script will generate a Linux command based on that input. The tool offers various functionalities such as invoking commands, copying commands to the clipboard, asking questions about commands, and more. It supports parameters for explanation, using different AI models, displaying additional output, storing API keys, querying ChatGPT with specific models, showing the current version, and providing help messages. Users can install Please CLI via Homebrew, apt, Nix, dpkg, AUR, or manually from source. The tool requires an OpenAI API key for operation and offers configuration options for setting API keys and OpenAI settings. Please CLI is licensed under the Apache License 2.0 by TNG Technology Consulting GmbH.

termax

Termax is an LLM agent in your terminal that converts natural language to commands. It is featured by: - Personalized Experience: Optimize the command generation with RAG. - Various LLMs Support: OpenAI GPT, Anthropic Claude, Google Gemini, Mistral AI, and more. - Shell Extensions: Plugin with popular shells like `zsh`, `bash` and `fish`. - Cross Platform: Able to run on Windows, macOS, and Linux.

magic-cli

Magic CLI is a command line utility that leverages Large Language Models (LLMs) to enhance command line efficiency. It is inspired by projects like Amazon Q and GitHub Copilot for CLI. The tool allows users to suggest commands, search across command history, and generate commands for specific tasks using local or remote LLM providers. Magic CLI also provides configuration options for LLM selection and response generation. The project is still in early development, so users should expect breaking changes and bugs.

ShellOracle

ShellOracle is an innovative terminal utility designed for intelligent shell command generation, bringing a new level of efficiency to your command-line interactions. It supports seamless shell command generation from written descriptions, command history for easy reference, Unix pipe support for advanced command chaining, self-hosted for full control over your environment, and highly configurable to adapt to your preferences. It can be easily installed using pipx, upgraded with simple commands, and used as a BASH/ZSH widget activated by the CTRL+F keyboard shortcut. ShellOracle can also be run as a Python module or using its entrypoint 'shor'. The tool supports providers like Ollama, OpenAI, and LocalAI, with detailed instructions for each provider. Configuration options are available to customize the utility according to user preferences and requirements. ShellOracle is compatible with BASH and ZSH on macOS and Linux, with no specific hardware requirements for cloud providers like OpenAI.

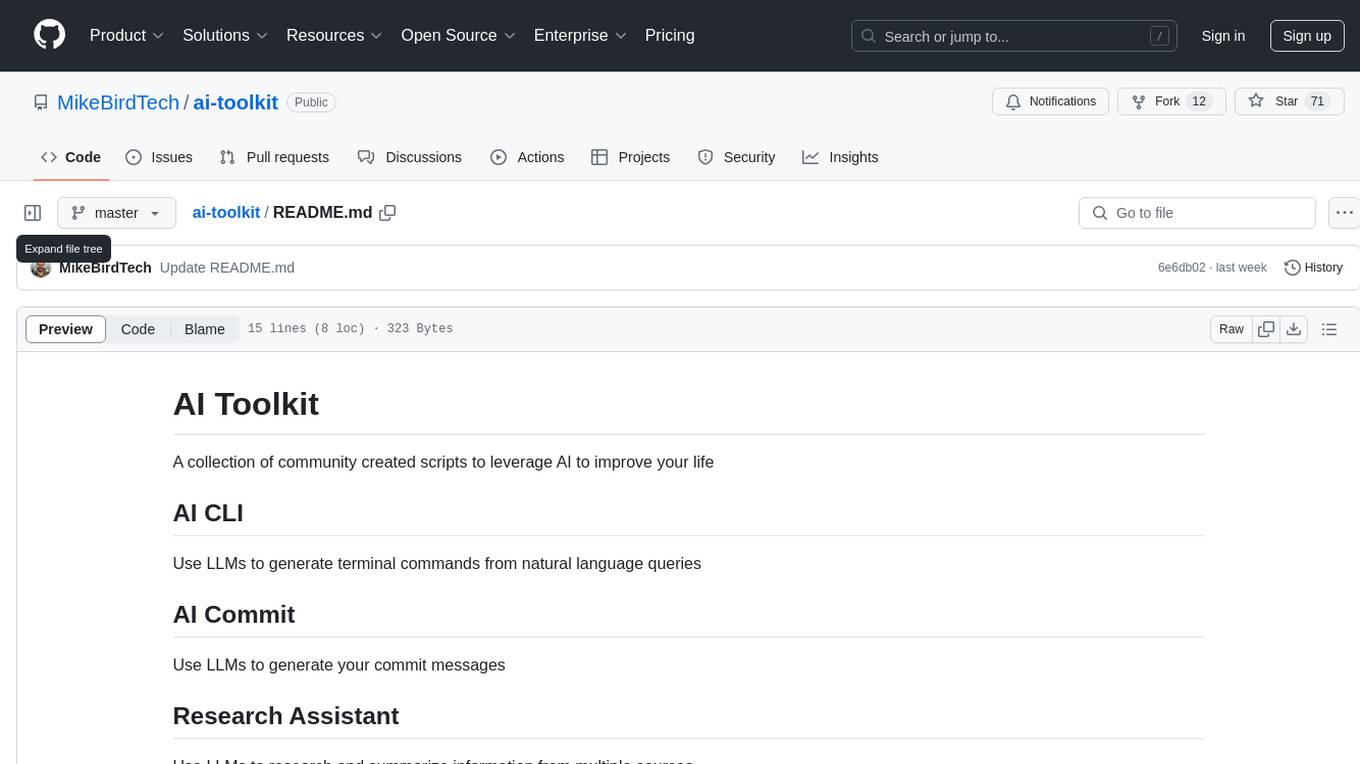

ai-toolkit

AI Toolkit is a collection of community created scripts that leverage AI to improve your life. It includes tools like AI CLI for generating terminal commands from natural language queries, AI Commit for generating commit messages using LLMs, and Research Assistant for researching and summarizing information from multiple sources.

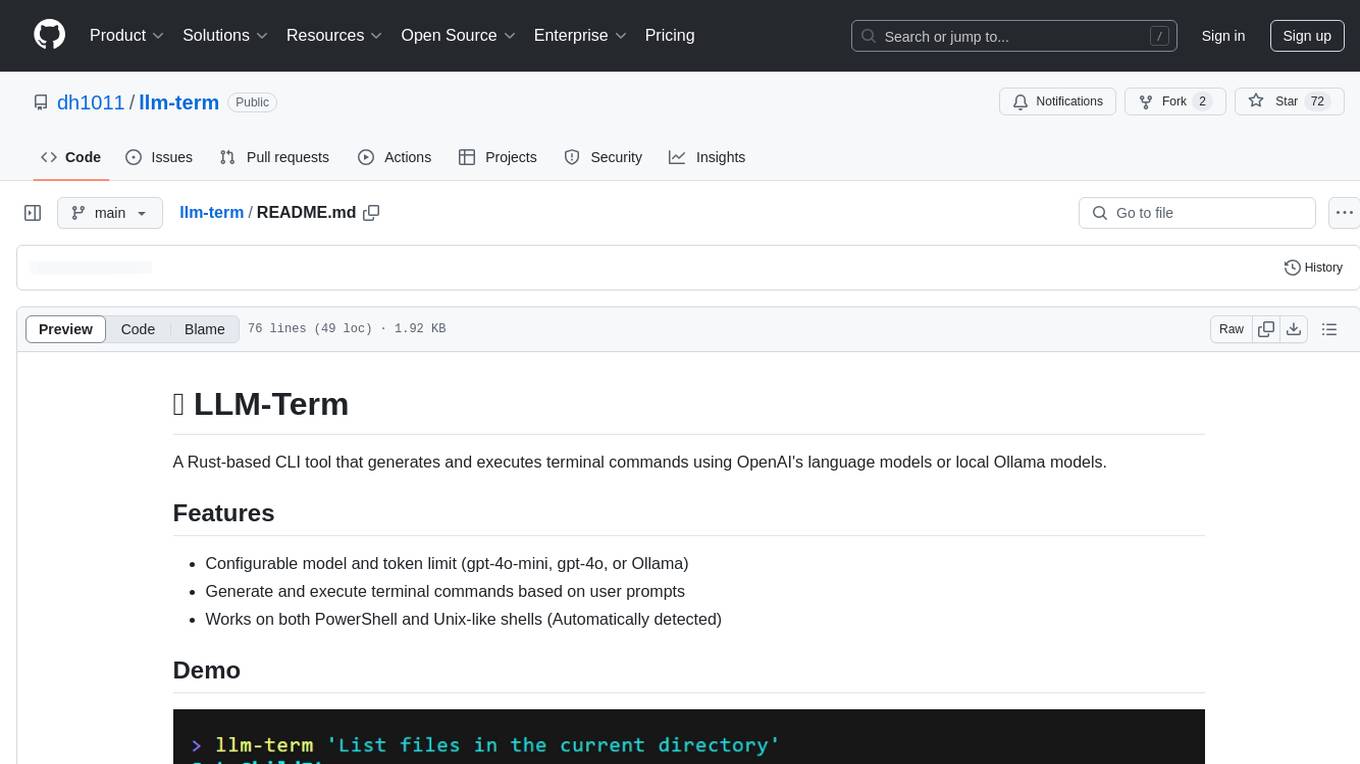

llm-term

LLM-Term is a Rust-based CLI tool that generates and executes terminal commands using OpenAI's language models or local Ollama models. It offers configurable model and token limits, works on both PowerShell and Unix-like shells, and provides a seamless user experience for generating commands based on prompts. Users can easily set up the tool, customize configurations, and leverage different models for command generation.

arena-breakout-ai

Arena Breakout AI is a repository that offers detailed information and resources for downloading, installing, and using the ExCheats tool. Users can access premium plan functionalities and ensure the safety of their Windows system. The tool supports various Windows systems, including Windows 8, 8.1, 10, and 11 (x32/64). To get started, users need to visit the provided website, navigate to the cheats catalog, select CheatSquad Loader 3.5 for any games, download the tool, open the archive, and choose the game to continue.

For similar jobs

lollms-webui

LoLLMs WebUI (Lord of Large Language Multimodal Systems: One tool to rule them all) is a user-friendly interface to access and utilize various LLM (Large Language Models) and other AI models for a wide range of tasks. With over 500 AI expert conditionings across diverse domains and more than 2500 fine tuned models over multiple domains, LoLLMs WebUI provides an immediate resource for any problem, from car repair to coding assistance, legal matters, medical diagnosis, entertainment, and more. The easy-to-use UI with light and dark mode options, integration with GitHub repository, support for different personalities, and features like thumb up/down rating, copy, edit, and remove messages, local database storage, search, export, and delete multiple discussions, make LoLLMs WebUI a powerful and versatile tool.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (Containing a demo web application, Power BI reports, Synapse resources, AML Notebooks etc.) that can be deployed in a customer’s subscription using the CAPE tool within a matter of few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.

minio

MinIO is a High Performance Object Storage released under GNU Affero General Public License v3.0. It is API compatible with Amazon S3 cloud storage service. Use MinIO to build high performance infrastructure for machine learning, analytics and application data workloads.

mage-ai

Mage is an open-source data pipeline tool for transforming and integrating data. It offers an easy developer experience, engineering best practices built-in, and data as a first-class citizen. Mage makes it easy to build, preview, and launch data pipelines, and provides observability and scaling capabilities. It supports data integrations, streaming pipelines, and dbt integration.

AiTreasureBox

AiTreasureBox is a versatile AI tool that provides a collection of pre-trained models and algorithms for various machine learning tasks. It simplifies the process of implementing AI solutions by offering ready-to-use components that can be easily integrated into projects. With AiTreasureBox, users can quickly prototype and deploy AI applications without the need for extensive knowledge in machine learning or deep learning. The tool covers a wide range of tasks such as image classification, text generation, sentiment analysis, object detection, and more. It is designed to be user-friendly and accessible to both beginners and experienced developers, making AI development more efficient and accessible to a wider audience.

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

airbyte

Airbyte is an open-source data integration platform that makes it easy to move data from any source to any destination. With Airbyte, you can build and manage data pipelines without writing any code. Airbyte provides a library of pre-built connectors that make it easy to connect to popular data sources and destinations. You can also create your own connectors using Airbyte's no-code Connector Builder or low-code CDK. Airbyte is used by data engineers and analysts at companies of all sizes to build and manage their data pipelines.

labelbox-python

Labelbox is a data-centric AI platform for enterprises to develop, optimize, and use AI to solve problems and power new products and services. Enterprises use Labelbox to curate data, generate high-quality human feedback data for computer vision and LLMs, evaluate model performance, and automate tasks by combining AI and human-centric workflows. The academic & research community uses Labelbox for cutting-edge AI research.