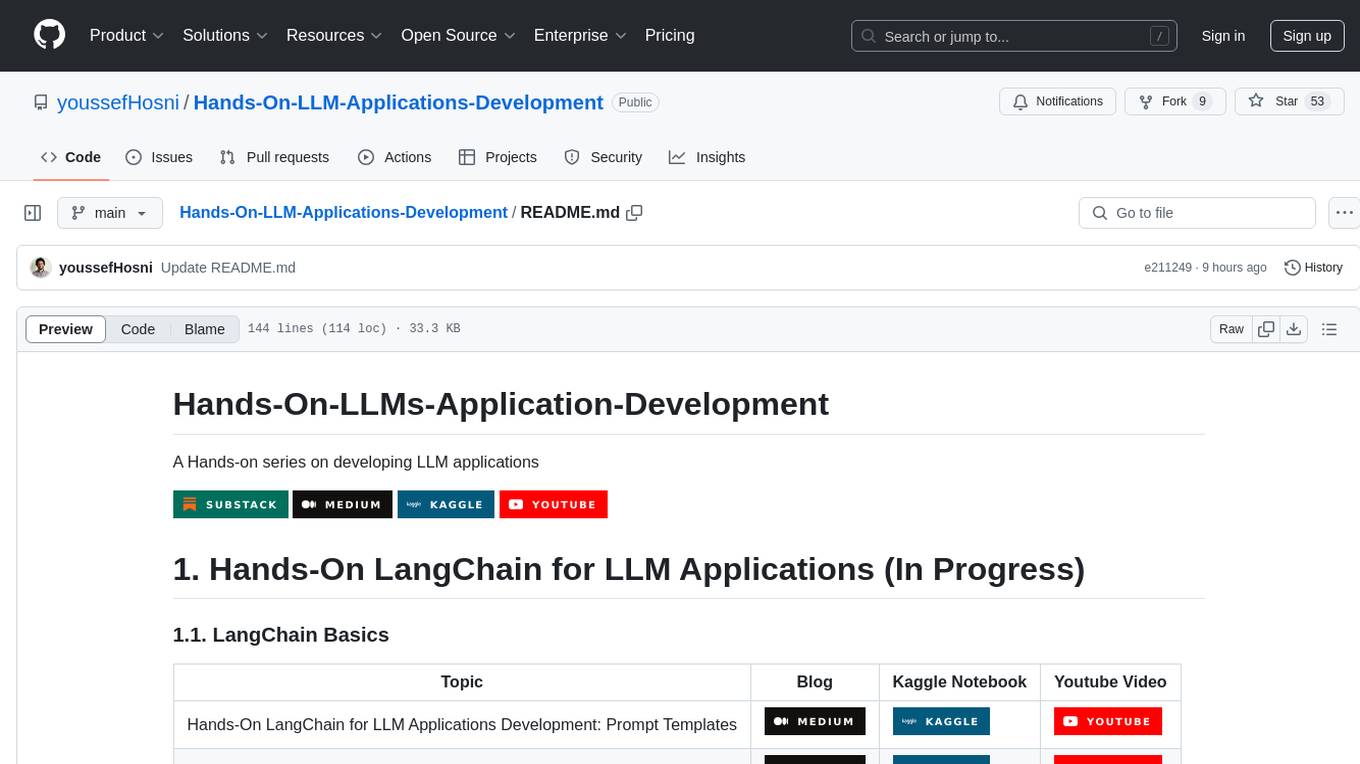

Hands-On-LLM-Applications-Development

A hands-on series on developing LLM applications

Stars: 53

Hands-On-LLM-Applications-Development is a repository focused on developing applications using Large Language Models (LLMs). It provides hands-on tutorials, guides, and resources on topics such as LangChain for LLM applications, Retrieval Augmented Generation (RAG) with LangChain, building LLM agents with LangGraph, and advanced LangChain with OpenAI. It also covers prompt engineering for LLMs, building applications using HuggingFace open-source models, LLM fine-tuning, and advanced RAG applications.

README:

A Hands-on series on developing LLM applications

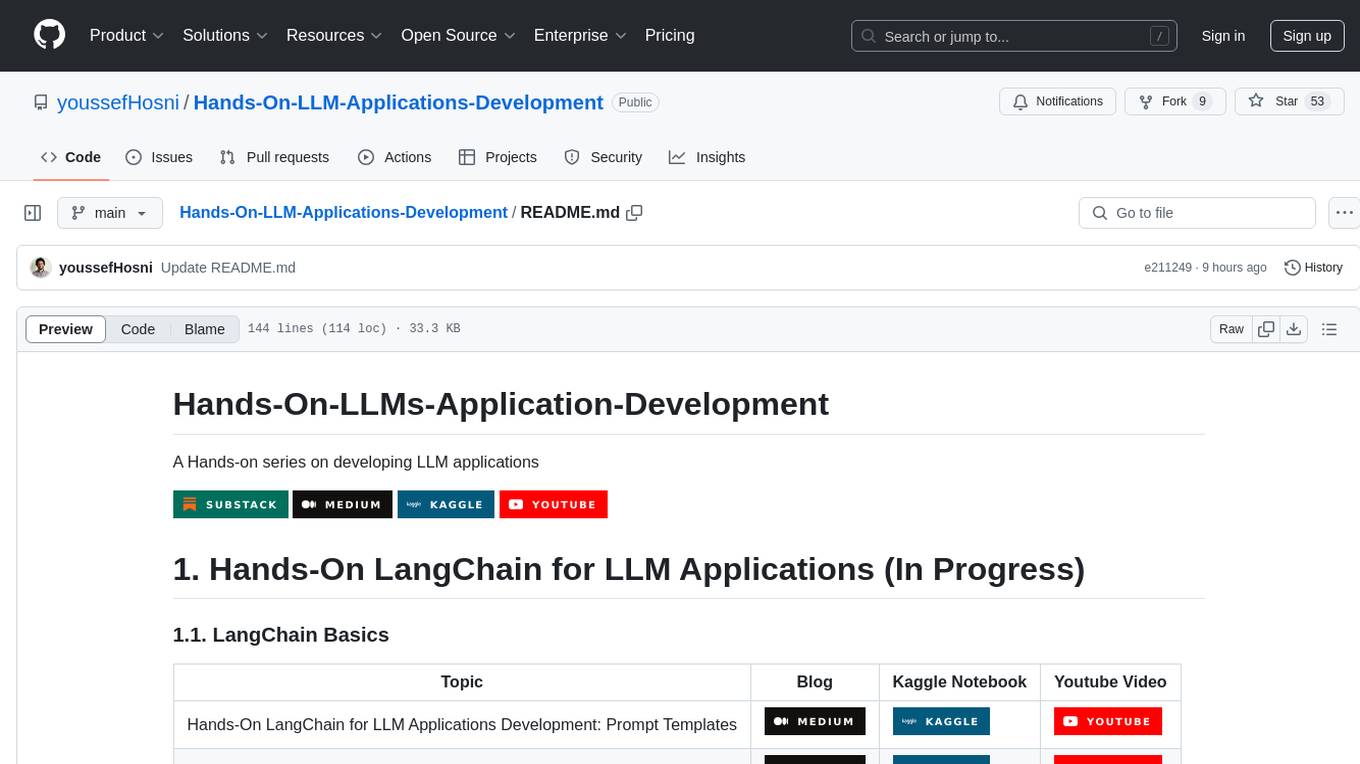

| Topic | Blog | Kaggle Notebook | YouTube Video |
|---|---|---|---|
| Building Simple ReAct Agent from Scratch | | | |
| LangGraph Components | | | |
| Agentic Search Tools | | | |
| Persistence and Streaming | | | |
| Human in the Loop | | | |
| Building Essay Writer Agent | | | |

| Topic | Blog | Kaggle Notebook | YouTube Video |
|---|---|---|---|
| Building Chatbot Using HuggingFace Open Source Models | | | |
| Building Text Translation System using Meta NLLB Open-Source Model | | | |


| Topic | Blog | Kaggle Notebook | YouTube Video |
|---|---|---|---|
| Finetune Falcon-7b with LoRA: A Step-by-Step Guide | | | |

| Topic | Blog | Kaggle Notebook | YouTube Video |
|---|---|---|---|
| Instruction Fine-Tuning Large Language Models for Summarization: Step-by-Step Guide | | | |



Alternative AI tools for Hands-On-LLM-Applications-Development

Similar Open Source Tools


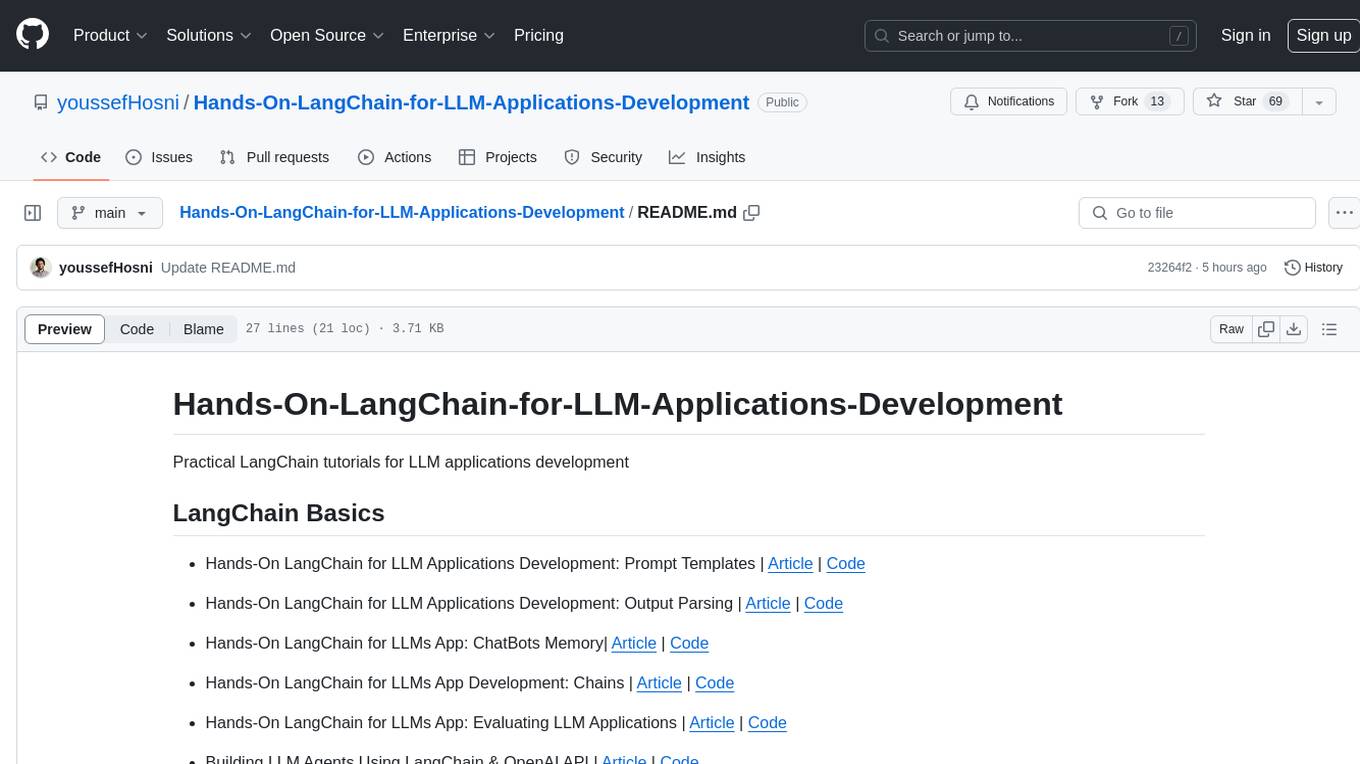

Hands-On-LangChain-for-LLM-Applications-Development

Practical LangChain tutorials for developing LLM applications, including prompt templates, output parsing, chatbot memory, chains, evaluating applications, building agents using LangChain and the OpenAI API, retrieval augmented generation with LangChain, document loading and splitting, vector databases and text embeddings, information retrieval, answering questions from documents, chatting with files, and an introduction to OpenAI function calling.

Awesome-RL-for-LRMs

This repository contains a collection of awesome resources for reinforcement learning in language models. It includes tutorials, code implementations, research papers, and tools to help researchers and practitioners explore and apply reinforcement learning techniques in natural language processing tasks. Whether you are a beginner or an expert in the field, this repository aims to provide valuable insights and guidance to enhance your understanding and implementation of reinforcement learning in language models.

Steel-LLM

Steel-LLM is a project to pre-train a large Chinese language model from scratch using over 1T of data to achieve a parameter size of around 1B, similar to TinyLlama. The project aims to share the entire process, including data collection, data processing, pre-training framework selection, and model design, and to open-source all the code. The goal is to enable reproducibility of the work even with limited resources. The name 'Steel' is inspired by the band '万能青年旅店' and signifies the desire to create a strong model despite limited conditions. The project involves continuous data collection of various cultural elements, trivia, lyrics, niche literature, and personal secrets to train the LLM. The ultimate aim is to fill the model with diverse data and leave room for individual input, fostering collaboration among users.

VideoLLaMA2

VideoLLaMA 2 is a project focused on advancing spatial-temporal modeling and audio understanding in video-LLMs. It provides tools for multi-choice video QA, open-ended video QA, and video captioning. The project offers a model zoo with different configurations for the visual encoder and language decoder. It includes training and evaluation guides, as well as inference capabilities for video and image processing. The project also features a demo setup for running a video-based Large Language Model web demonstration.

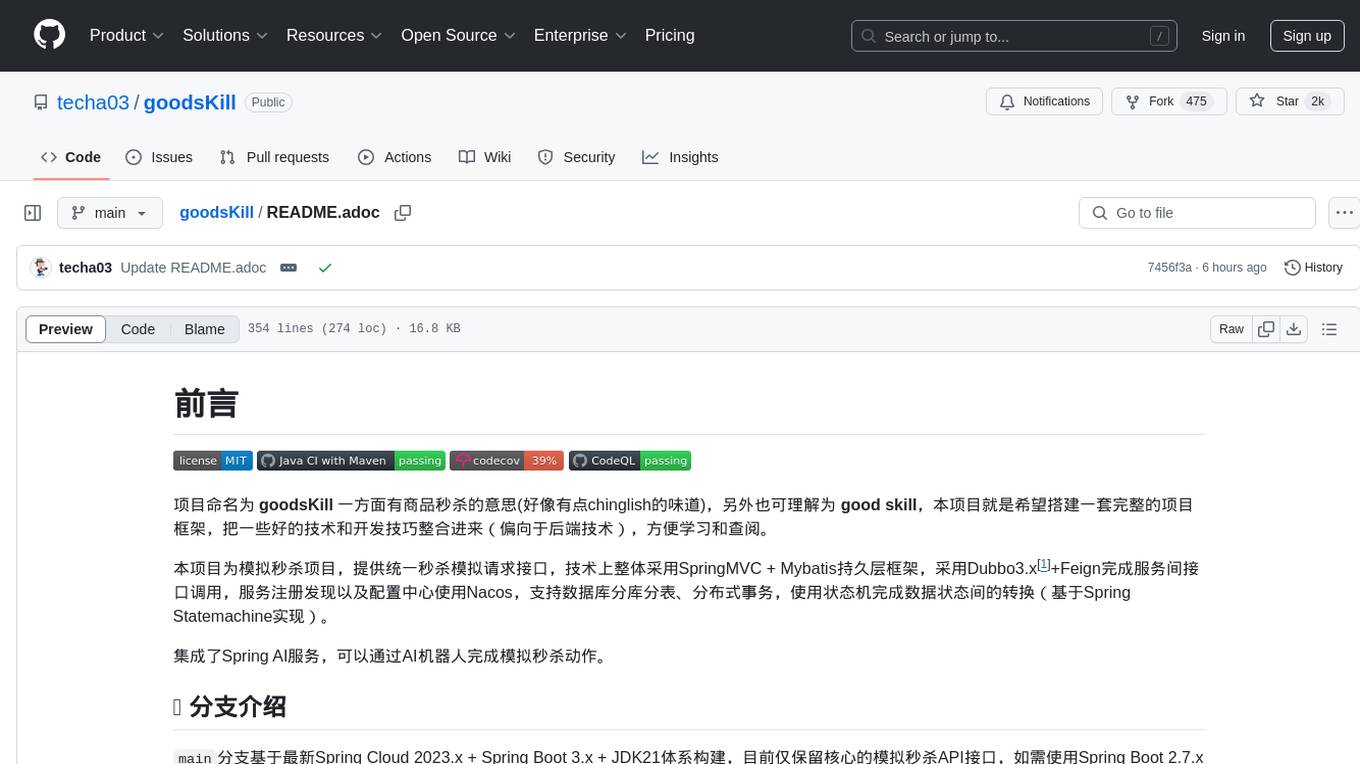

goodsKill

The 'goodsKill' project aims to build a complete project framework integrating good technologies and development techniques, mainly focusing on backend technologies. It provides a simulated flash sale project with a unified flash sale simulation request interface. The project uses SpringMVC + Mybatis for the overall technology stack, Dubbo3.x for service intercommunication, Nacos for service registration and discovery, and Spring State Machine for data state transitions. It also integrates a Spring AI service for simulating flash sale actions.

chatgpt-auto-continue

ChatGPT Auto-Continue is a userscript that automatically continues generating ChatGPT responses when chats cut off. It relies on the chatgpt.js library and is easy to install and use. Simply install Tampermonkey and ChatGPT Auto-Continue, and visit chat.openai.com as normal. Multi-reply conversations will automatically continue generating when cut off.

Awesome-LLMOps

Awesome-LLMOps is a curated list of the best LLMOps tools, providing a comprehensive collection of frameworks and tools for building, deploying, and managing large language models (LLMs) and AI agents. The repository includes a wide range of tools for tasks such as building multimodal AI agents, fine-tuning models, orchestrating applications, evaluating models, and serving models for inference. It covers various aspects of the machine learning operations (MLOps) lifecycle, from training to deployment and observability. The tools listed in this repository cater to the needs of developers, data scientists, and machine learning engineers working with large language models and AI applications.

LLMs-from-scratch-CN

This repository is a Chinese translation of the GitHub project 'LLMs-from-scratch', including detailed markdown notes and related Jupyter code. The translation process aims to maintain the accuracy of the original content while optimizing the language and expression to better suit Chinese learners' reading habits. The repository features detailed Chinese annotations for all Jupyter code, aiding users in practical implementation. It also provides various supplementary materials to expand knowledge. The project focuses on building Large Language Models (LLMs) from scratch, covering fundamental constructions like Transformer architecture, sequence modeling, and delving into deep learning models such as GPT and BERT. Each part of the project includes detailed code implementations and learning resources to help users construct LLMs from scratch and master their core technologies.

pi-nexus-autonomous-banking-network

A decentralized, AI-driven system accelerating the Open Mainnet Pi Network, connecting global banks for secure, efficient, and autonomous transactions. The Pi-Nexus Autonomous Banking Network is built using Raspberry Pi devices and allows for the creation of a decentralized, autonomous banking system.

chatgpt-widescreen

ChatGPT Widescreen Mode is a browser extension that adds widescreen and fullscreen modes to ChatGPT, enhancing chat sessions by reducing scrolling and creating a more immersive viewing experience. Users can experience clearer programming code display, view multi-step instructions or long recipes on a single page, enjoy original content in a visually pleasing format, customize features like a larger chatbox and hidden header/footer, and use the tool with chat.openai.com and poe.com. The extension is compatible with various browsers and relies on code from the chatgpt.js library under the MIT license.

kirara-ai

Kirara AI is a chatbot that supports mainstream large language models and chat platforms. It provides features such as image sending, keyword-triggered replies, multi-account support, personality settings, and support for various chat platforms like QQ, Telegram, Discord, and WeChat. The tool also supports an HTTP server for a Web API, popular large models like OpenAI and DeepSeek, a plugin mechanism, conditional triggers, admin commands, drawing models, voice replies, multi-turn conversations, cross-platform message sending, custom workflows, a web management interface, and built-in Frpc intranet penetration.

ComfyUI-OllamaGemini

ComfyUI GeminiOllama Extension integrates Google's Gemini API, OpenAI (ChatGPT), Anthropic's Claude, Ollama, Qwen, and image processing tools into ComfyUI for leveraging powerful models and features directly within workflows. Features include multiple AI API integrations, advanced prompt engineering, Gemini image generation, background removal, SVG conversion, FLUX resolutions, ComfyUI Styler, smart prompt generator, and more. The extension offers comprehensive API integration, advanced prompt engineering with researched templates, high-quality tools like Smart Prompt Generator and BRIA RMBG, and supports video & audio processing. It provides a single interface to access powerful AI models, transform prompts into detailed instructions, and use various tools for image processing, styling, and content generation.

chatgpt-apps

This repository contains a collection of apps that utilize the astounding AI of ChatGPT or enhance its UX. These apps range from simple scripts to full-fledged extensions, each designed to make your ChatGPT experience more efficient, enjoyable, or private.

For similar tasks

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.

zep-python

Zep is an open-source platform for building and deploying large language model (LLM) applications. It provides a suite of tools and services that make it easy to integrate LLMs into your applications, including chat history memory, embedding, vector search, and data enrichment. Zep is designed to be scalable, reliable, and easy to use, making it a great choice for developers who want to build LLM-powered applications quickly and easily.

lollms

LoLLMs Server is a text generation server based on large language models. It provides a Flask-based API for generating text using various pre-trained language models. This server is designed to be easy to install and use, allowing developers to integrate powerful text generation capabilities into their applications.

LlamaIndexTS

LlamaIndex.TS is a data framework for your LLM application. Use your own data with large language models (LLMs, OpenAI ChatGPT and others) in TypeScript and JavaScript.

semantic-kernel

Semantic Kernel is an SDK that integrates Large Language Models (LLMs) like OpenAI, Azure OpenAI, and Hugging Face with conventional programming languages like C#, Python, and Java. Semantic Kernel achieves this by allowing you to define plugins that can be chained together in just a few lines of code. What makes Semantic Kernel _special_, however, is its ability to _automatically_ orchestrate plugins with AI. With Semantic Kernel planners, you can ask an LLM to generate a plan that achieves a user's unique goal. Afterwards, Semantic Kernel will execute the plan for the user.

botpress

Botpress is a platform for building next-generation chatbots and assistants powered by OpenAI. It provides a range of tools and integrations to help developers quickly and easily create and deploy chatbots for various use cases.

BotSharp

BotSharp is an open-source machine learning framework for building AI bot platforms. It provides a comprehensive set of tools and components for developing and deploying intelligent virtual assistants. BotSharp is designed to be modular and extensible, allowing developers to easily integrate it with their existing systems and applications. With BotSharp, you can quickly and easily create AI-powered chatbots, virtual assistants, and other conversational AI applications.

qdrant

Qdrant is a vector similarity search engine and vector database. It is written in Rust, which makes it fast and reliable even under high load. Qdrant can be used for a variety of applications, including:

* Semantic search
* Image search
* Product recommendations
* Chatbots
* Anomaly detection

Qdrant offers a variety of features, including:

* Payload storage and filtering
* Hybrid search with sparse vectors
* Vector quantization and on-disk storage
* Distributed deployment
* Highlighted features such as query planning, payload indexes, SIMD hardware acceleration, async I/O, and write-ahead logging

Qdrant is available as a fully managed cloud service or as open-source software that can be deployed on-premises.
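To make the idea of vector similarity search with payload filtering concrete, here is a minimal pure-Python sketch. It does not use Qdrant's client or API; the `search` function, the `must_match` parameter, and the `POINTS` sample data are hypothetical names chosen for illustration, and a real engine would use indexes rather than the brute-force scan shown here.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def search(points, query, top_k=3, must_match=None):
    """Brute-force nearest-neighbour search (illustrative, not Qdrant's API).

    Keeps only points whose payload equals every key/value in `must_match`,
    then returns up to `top_k` (score, id) pairs, best score first.
    """
    must_match = must_match or {}
    candidates = [
        p for p in points
        if all(p["payload"].get(k) == v for k, v in must_match.items())
    ]
    scored = [(cosine_similarity(p["vector"], query), p["id"]) for p in candidates]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]


# Hypothetical sample data: each point has an id, a vector, and a payload.
POINTS = [
    {"id": 1, "vector": [1.0, 0.0], "payload": {"lang": "en"}},
    {"id": 2, "vector": [0.0, 1.0], "payload": {"lang": "en"}},
    {"id": 3, "vector": [0.9, 0.1], "payload": {"lang": "de"}},
]

if __name__ == "__main__":
    # Unfiltered search, then the same query restricted to English payloads.
    print(search(POINTS, [1.0, 0.0], top_k=2))
    print(search(POINTS, [1.0, 0.0], top_k=2, must_match={"lang": "en"}))
```

Filtering before scoring keeps the sketch short; a production system would likely combine the filter with its index so that filtered queries stay fast on large collections.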

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, from images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

* Self-contained, with no need for a DBMS or cloud service.
* OpenAPI interface, easy to integrate with existing infrastructure (e.g., Cloud IDE).
* Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.