algernon

Small self-contained pure-Go web server with Lua, Teal, Markdown, Ollama, HTTP/2, QUIC, Redis, SQLite and PostgreSQL support ++

Stars: 2881

Algernon is a web server with built-in support for QUIC, HTTP/2, Lua, Teal, Markdown, Pongo2, HyperApp, Amber, Sass(SCSS), GCSS, JSX, Ollama (LLMs), BoltDB, Redis, PostgreSQL, MariaDB/MySQL, MSSQL, rate limiting, graceful shutdown, plugins, users, and permissions. It is a small self-contained executable that supports various technologies and features for web development.

README:

Web server with built-in support for QUIC, HTTP/2, Lua, Teal, Markdown, Pongo2, HyperApp, Amber, Sass(SCSS), GCSS, JSX, Ollama (LLMs), BoltDB (built-in, stores the database in a file, like SQLite), Redis, PostgreSQL, SQLite, MariaDB/MySQL, MSSQL, rate limiting, graceful shutdown, plugins, users and permissions.

All in one small self-contained executable.

Requires Go 1.21 or later.

go install github.com/xyproto/algernon@latest

Or manually (development version):

git clone https://github.com/xyproto/algernon

cd algernon

go build -mod=vendor

./welcome.sh

See the release page for releases for a variety of platforms and architectures.

See TUTORIAL.md.

The Docker image is less than 12MB and can be tried out (on x86_64) with:

mkdir localhost

echo 'hi!' > localhost/index.md

docker run -it -p4000:4000 -v .:/srv/algernon xyproto/algernon

And then visiting http://localhost:4000 in a browser.

Written in Go. Uses Bolt (built-in), MySQL, PostgreSQL, SQLite or Redis (recommended) for the database backend, permissions2 for handling users and permissions, gopher-lua for interpreting and running Lua, optional Teal for type-safe Lua scripting, http2 for serving HTTP/2, QUIC for serving over QUIC, gomarkdown/markdown for Markdown rendering, amber for Amber templates, Pongo2 for Pongo2 templates, Sass(SCSS) and GCSS for CSS preprocessing. logrus is used for logging, goja-babel for converting from JSX to JavaScript, tollbooth for rate limiting, pie for plugins and graceful for graceful shutdowns.

- HTTP/2 over SSL/TLS (https) is used by default, if a certificate and key are given.

- If not, regular HTTP is used.

- QUIC ("HTTP over UDP", HTTP/3) can be enabled with a flag.

- /data and /repos have user permissions, /admin has admin permissions and / is public, by default. This is configurable.

- The following filenames are special, in prioritized order:

- index.lua is Lua code that is interpreted as a handler function for the current directory.

- index.html is HTML that is outputted with the correct Content-Type.

- index.md is Markdown code that is rendered as HTML.

- index.txt is plain text that is outputted with the correct Content-Type.

- index.pongo2, index.po2 or index.tmpl is Pongo2 code that is rendered as HTML.

- index.amber is Amber code that is rendered as HTML.

- index.hyper.js or index.hyper.jsx is JSX+HyperApp code that is rendered as HTML.

- index.tl is Teal code that is interpreted as a handler function for the current directory.

- index.prompt is a content-type, an Ollama model, a blank line and a prompt, for generating content with LLMs.

- data.lua is Lua code, where the functions and variables are made available for Pongo2, Amber and Markdown pages in the same directory.

- If a single Lua script is given as a command line argument, it will be used as a standalone server. It can be used for setting up handlers or serving files and directories for specific URL prefixes.

- style.gcss is GCSS code that is used as the style for all Pongo2, Amber and Markdown pages in the same directory.

- The following filename extensions are handled by Algernon:

- Markdown: .md (rendered as HTML)

- Pongo2: .po2, .pongo2 or .tpl (rendered as any text, typically HTML)

- Amber: .amber (rendered as HTML)

- Sass: .scss (rendered as CSS)

- GCSS: .gcss (rendered as CSS)

- JSX: .jsx (rendered as JavaScript/ECMAScript)

- Lua: .lua (a script that provides its own output and content type)

- Teal: .tl (same as .lua but with type safety)

- HyperApp: .hyper.js or .hyper.jsx (rendered as HTML)

- Other files are given a mimetype based on the extension.

- Directories without an index file are shown as a directory listing, where the design is hard coded.

- UTF-8 is used whenever possible.

- The server can be configured by command line flags or with a Lua script, but no configuration should be needed for getting started.

- Supports HTTP/2, with or without HTTPS (browsers may require HTTPS when using HTTP/2).

- Also supports QUIC and regular HTTP.

- Can use Lua scripts as handlers for HTTP requests.

- The Algernon executable is compiled to native and is reasonably fast.

- Works on Linux, macOS and 64-bit Windows.

- The Lua interpreter is compiled into the executable.

- The Teal typechecker is loaded into the Lua VM.

- Live editing/preview when using the auto-refresh feature.

- The use of Lua allows for short development cycles, where code is interpreted when the page is refreshed (or when the Lua file is modified, if using auto-refresh).

- Self-contained Algernon applications can be zipped into an archive (ending with `.zip` or `.alg`) and be loaded at start.

- Built-in support for Markdown, Pongo2, Amber, Sass(SCSS), GCSS and JSX.

- Redis is used for the database backend, by default.

- Algernon will fall back to the built-in Bolt database if no Redis server is available.

- The HTML title for a rendered Markdown page can be provided by the first line specifying the title, like this: `title: Title goes here`. This is a subset of MultiMarkdown.

- No file converters need to run in the background (like for Sass). Files are converted on the fly.

- If `-autorefresh` is enabled, the browser will automatically refresh pages when the source files are changed. Works for Markdown, Lua error pages and Amber (including Sass, GCSS and data.lua). This only works on Linux and macOS, for now. If listening for changes on too many files, the OS limit for the number of open files may be reached.

- Includes an interactive REPL.

- If only given a Markdown filename as the first argument, it will be served on port 3000, without using any database, as regular HTTP. This can be handy for viewing `README.md` files locally. Use `-m` to display it in a browser and only serve it once.

- Full multi-threading. All available CPUs will be used.

- Supports rate limiting, by using tollbooth.

- The `help` command is available at the Lua REPL, for a quick overview of the available Lua functions.

- Can load plugins written in any language. Plugins must offer the `Lua.Code` and `Lua.Help` functions and talk JSON-RPC over stderr+stdin. See pie for more information. Sample plugins for Go and Python are in the `plugins` directory.

- Thread-safe file caching is built-in, with several available cache modes (for only caching images, for example).

- Can read from and save to JSON documents. Supports simple JSON path expressions (like a simple version of XPath, but for JSON).

- If cache compression is enabled, files that are stored in the cache can be sent directly from the cache to the client, without decompressing.

- Files that are sent to the client are compressed with gzip, unless they are under 4096 bytes.

- When using PostgreSQL, the HSTORE key/value type is used (available in PostgreSQL version 9.1 or later).

- No external dependencies, only pure Go.

- Requires Go >= 1.21 or a version of GCC/`gccgo` that supports Go 1.21.

- The Lua implementation used in Algernon (gopherlua) does not support `package.loadlib`.
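The prioritized order of the special index filenames listed earlier can be modeled with a small lookup. This is only an illustrative sketch in plain Lua, not Algernon's actual implementation: `INDEX_PRIORITY` and `pick_index` are hypothetical names, and the relative order of names that share a single bullet (such as index.pongo2, index.po2 and index.tmpl) is an assumption.

```lua
-- Index filenames, in the prioritized order given in the feature list.
-- The order within one bullet (pongo2/po2/tmpl, hyper.js/hyper.jsx) is assumed.
INDEX_PRIORITY = {
  "index.lua", "index.html", "index.md", "index.txt",
  "index.pongo2", "index.po2", "index.tmpl",
  "index.amber", "index.hyper.js", "index.hyper.jsx",
  "index.tl", "index.prompt",
}

-- Return the highest-priority index filename found in `files`
-- (a list of filenames from one directory), or nil if there is none.
function pick_index(files)
  local present = {}
  for _, name in ipairs(files) do
    present[name] = true
  end
  for _, candidate in ipairs(INDEX_PRIORITY) do
    if present[candidate] then
      return candidate
    end
  end
  return nil
end

-- index.html wins over index.md; style.gcss is not an index file.
print(pick_index({ "style.gcss", "index.md", "index.html" })) -- prints index.html
```

A directory for which `pick_index` returns nil corresponds to the case where a directory listing is shown instead.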

Q:

What is the benefit of using this? In what scenario would this excel? Thanks. -- mtw@HN.

A:

Good question. I'm not sure if it excels in any scenario. There are specialized web servers that excel at caching or at raw performance. There are dedicated backends for popular front-end toolkits like Vue or React. There are dedicated editors that excel at editing and previewing Markdown, or HTML.

I guess the main benefit is that Algernon covers a lot of ground, with a minimum of configuration, while being powerful enough to have a plugin system and support for programming in Lua. There is an auto-refresh feature that uses Server Sent Events, when editing Markdown or web pages. There is also support for the latest in Web technologies, like HTTP/2, QUIC and TLS 1.3. The caching system is decent. And the use of Go ensures that also smaller platforms like NetBSD and systems like Raspberry Pi are covered. There are no external dependencies, so Algernon can run on any system that Go can support.

The main benefit is that it is versatile, fresh, and covers many platforms and use cases.

For a more specific description of a potential benefit, a more specific use case would be needed.

- Install Homebrew, if needed.

brew install algernon

pacman -S algernon

This method is using the latest commit from the main branch:

go install github.com/xyproto/algernon@main

If needed, add ~/go/bin to the path. For example: export PATH=$PATH:$HOME/go/bin.

- Comes with the `alg2docker` utility, for creating Docker images from Algernon web applications (`.alg` files).

- http2check can be used for checking if a web server is offering HTTP/2.

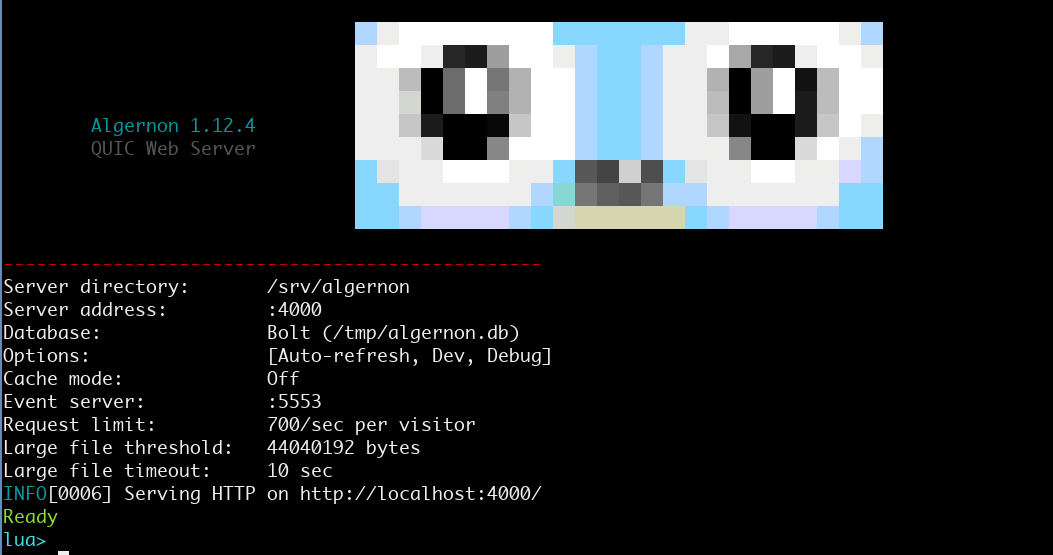

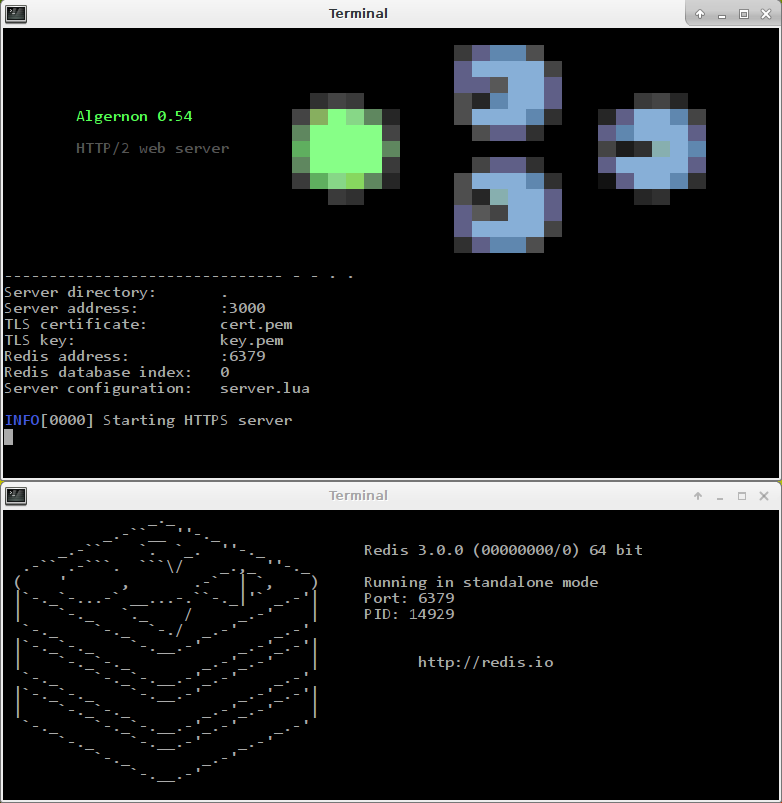

Running Algernon:

Screenshot of an earlier version:

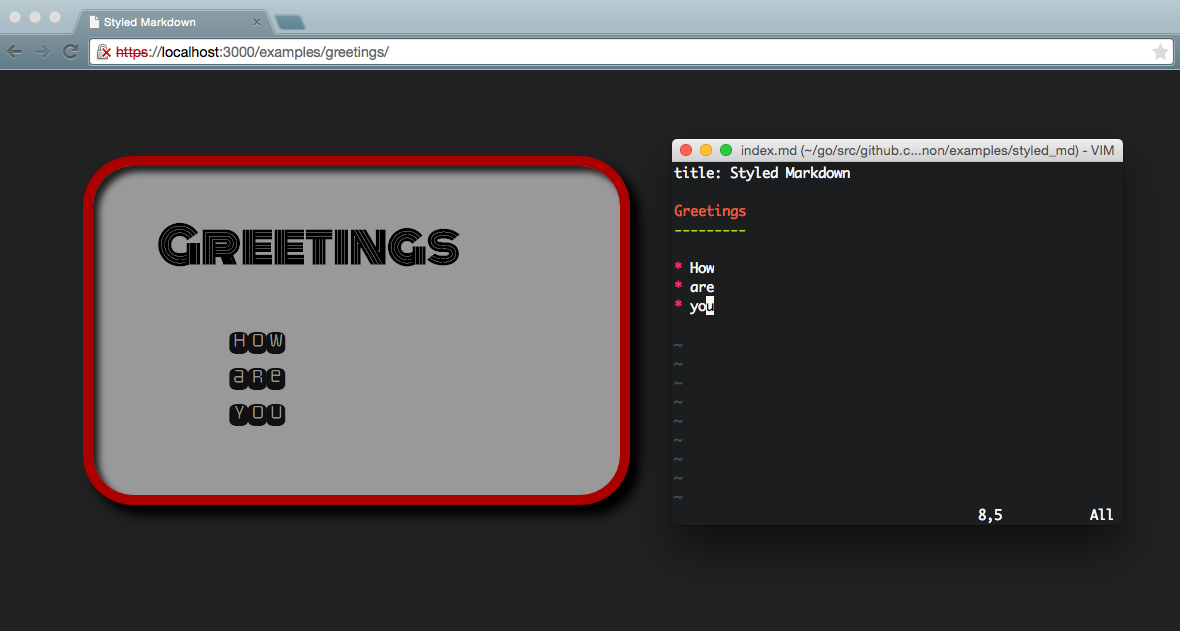

The idea is that web pages can be written in Markdown, Pongo2, Amber, HTML or JSX (+React or HyperApp), depending on the need, and styled with CSS, Sass(SCSS) or GCSS, while data can be provided by a Lua or Teal script that talks to Redis, BoltDB, PostgreSQL, MSSQL or MariaDB/MySQL.

Amber and GCSS are a good combination for static pages, allowing for more clarity and less repetition than HTML and CSS. It's also easy to use Lua for providing data for the Amber templates, which helps separate model, controller and view.

Pongo2, Sass and Lua or Teal also combine well. Pongo2 is more flexible than Amber.

The auto-refresh feature is supported when using Markdown, Pongo2 or Amber, and is useful to get an instant preview when developing.

The JSX to JavaScript (ECMAscript) transpiler is built-in.

Redis is fast, scalable and offers good data persistence. This should be the preferred backend.

Bolt is a pure key/value store, written in Go. It makes it easy to run Algernon without having to set up a database host first. MariaDB/MySQL support is included because of its widespread availability.

PostgreSQL is a solid and fast database that is also supported.

Markdown can easily be styled with Sass or GCSS.

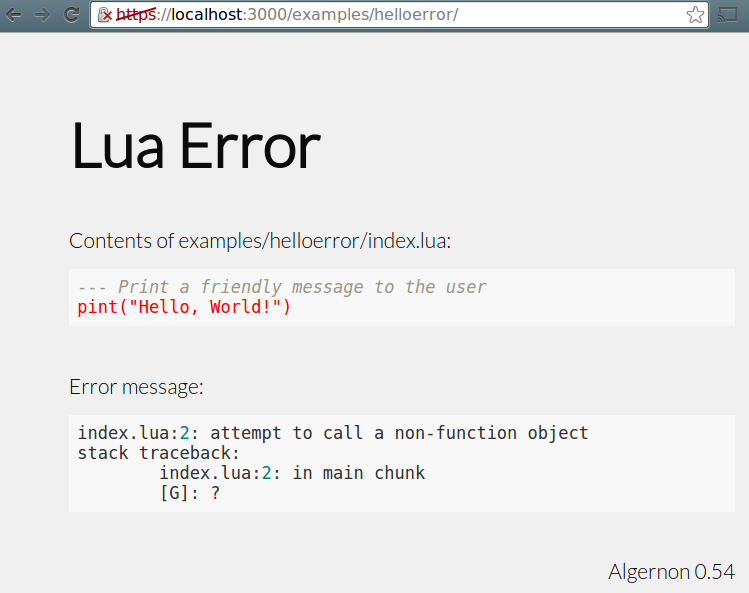

This is how errors in Lua scripts are handled, when Debug mode is enabled.

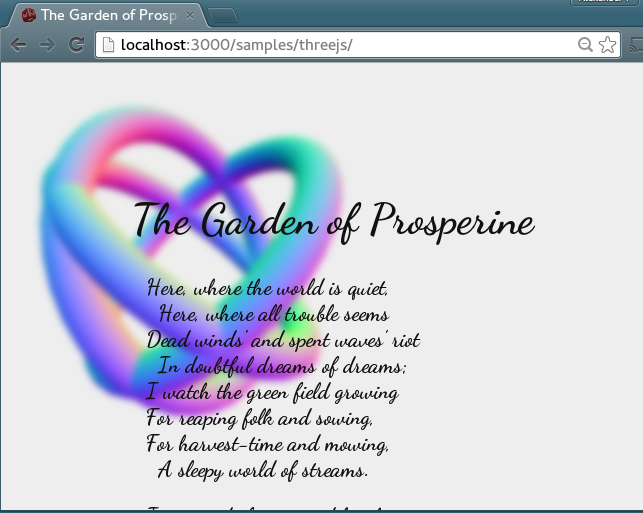

One of the poems of Algernon Charles Swinburne, with three rotating tori in the background. Uses CSS3 for the Gaussian blur and three.js for the 3D graphics.

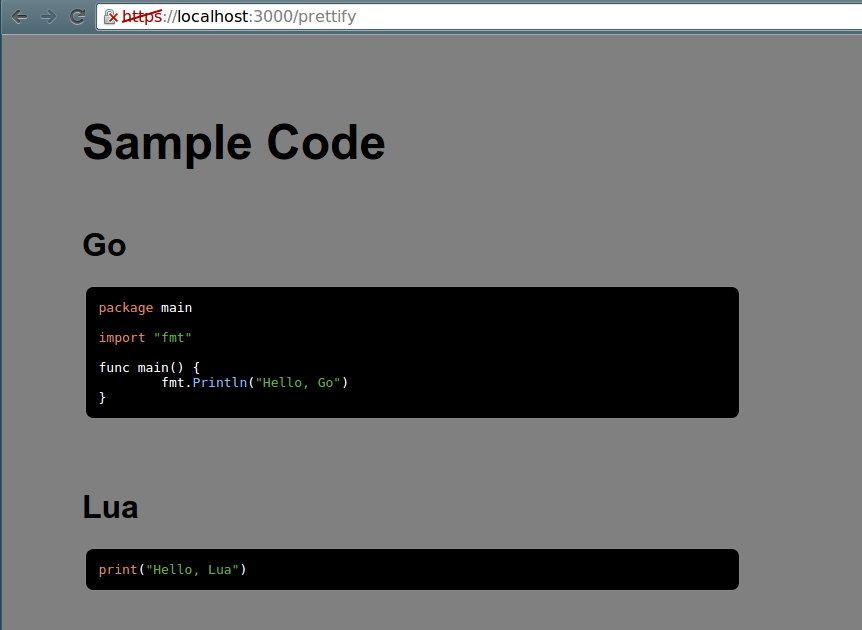

Screenshot of the prettify sample. Served from a single Lua script.

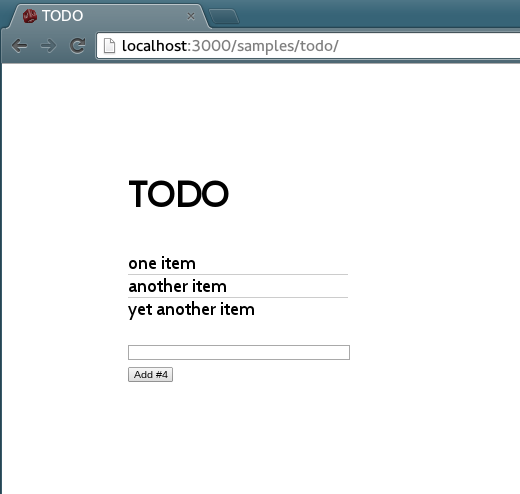

JSX transforms are built-in. Using React together with Algernon is easy.

The sample collection can be downloaded from the samples directory in this repository, or here: samplepack.zip.

This enables debug mode, uses the internal Bolt database, uses regular HTTP instead of HTTPS+HTTP/2 and enables caching for all files except: Pongo2, Amber, Lua, Teal, Sass, GCSS, Markdown and JSX.

algernon -e

Then try creating an index.lua file with print("Hello, World!") and visit the served web page in a browser.
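As a slightly extended variant of that one-line script, the sketch below also sets a content type and echoes the request method and path. `content()`, `method()` and `urlpath()` are taken from the function reference in this document; `greeting` is a hypothetical helper, and the `or` fallbacks are stand-ins added only so the file also runs under a stock Lua interpreter outside Algernon.

```lua
-- index.lua: a minimal handler sketch.
-- content(), method() and urlpath() are Algernon built-ins. The fallbacks
-- after "or" are stand-ins (an assumption made for testing outside Algernon).
local set_content = content or function(_) end
local get_method = method or function() return "GET" end
local get_path = urlpath or function() return "/" end

-- Build the response text from the request method and the URL path.
function greeting(m, path)
  return "Hello, World! (" .. m .. " " .. path .. ")"
end

-- Set the Content-Type before anything is written to the client.
set_content("text/plain")
print(greeting(get_method(), get_path()))
```

With `algernon -e` running in the same directory, reloading the page after editing the file should show the new output.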

- Chrome: go to `chrome://flags/#enable-spdy4`, enable, save and restart the browser.

- Firefox: go to `about:config`, set `network.http.spdy.enabled.http2draft` to `true`. You might need the nightly version of Firefox.

- You may need to change the firewall settings for port 3000, if you wish to use the default port for exploring the samples.

- For the auto-refresh feature to work, port 5553 must be available (or another host/port of your choosing, if configured otherwise).

git clone https://github.com/xyproto/algernon

make -C algernon

- Run `./welcome.sh` to start serving the "welcome" sample.

- Visit `http://localhost:3000/`

- `mkdir mypage`

- `cd mypage`

- Create a file named `index.lua`, with the following contents: `print("Hello, Algernon")`

- Start `algernon --httponly --autorefresh`.

- Visit `http://localhost:3000/`.

- Edit `index.lua` and refresh the browser to see the new result.

- If there were errors, the page will automatically refresh when `index.lua` is changed.

- Markdown, Pongo2 and Amber pages will also refresh automatically, as long as `-autorefresh` is used.

- `mkdir mypage`

- `cd mypage`

- Create a file named `index.lua`, with the following contents: `print("Hello, Algernon")`

- Create a self-signed certificate, just for testing:

- `openssl req -x509 -newkey rsa:4096 -keyout key.pem -out cert.pem -days 3000 -nodes`

- Press return at all the prompts, but enter `localhost` at Common Name.

- For production, store the keys in a directory with as strict permissions as possible, then specify them with the `--cert` and `--key` flags.

- Start `algernon`.

- Visit `https://localhost:3000/`.

- If you have not imported the certificates into the browser, nor used certificates that are signed by trusted certificate authorities, perform the necessary clicks to confirm that you wish to visit this page.

- Edit `index.lua` and refresh the browser to see the result (or a Lua error message, if the script had a problem).

There is also a small tutorial.

- The `ollama` server must be running locally, or a `host:port` must be set in the `OLLAMA_HOST` environment variable.

For example, using the default tinyllama model (will be downloaded at first use, the size is 637 MiB and it should run anywhere).

lua> ollama()

Autumn leaves, crisp air, poetry flowing - this is what comes to mind when I think of Algernon.

lua> ollama("Write a haiku about software developers")

The software developer,

In silence, tapping at keys,

Creating digital worlds.

Using OllamaClient and the mixtral model (will be downloaded at first use, the size is 26 GiB and it might require quite a bit of RAM and also a fast CPU and/or GPU).

lua> oc = OllamaClient("mixtral")

lua> oc:ask("Write a quicksort function in OCaml")

Sure! Here's an implementation of the quicksort algorithm in OCaml:

let rec qsort = function

| [] -> []

| pivot :: rest ->

let smaller, greater = List.partition (fun x -> x < pivot) rest in

qsort smaller @ [pivot] @ qsort greater

This function takes a list as input and returns a new list with the same elements but sorted in ascending order using the quicksort algorithm. The `qsort` funct.

Here are some examples of using the `qsort` function:

# qsort [5; 2; 9; 1; 3];;

- : int list = [1; 2; 3; 5; 9]

# qsort ["apple"; "banana"; "cherry"];;

- : string list = ["apple"; "banana"; "cherry"]

# qsort [3.14; 2.718; 1.618];;

- : float list = [1.618; 2.718; 3.14]

I hope this helps! Let me know if you have any questions or need further clarification.

Example use of finding the distance between how the LLM models interpret the prompts:

lua> oc = OllamaClient("llama3")

lua> oc:distance("cat", "dog")

0.3629187146002938

lua> oc:distance("cat", "kitten")

0.3584441305547792

lua> oc:distance("dog", "puppy")

0.2825554473355113

lua> oc:distance("dog", "kraken", "manhattan")

7945.885516248905

lua> oc:distance("dog", "kraken", "cosine")

0.5277307399621305

As you can tell, according to Llama3, "dog" is closer to "puppy" (0.28) than "cat" is to "kitten" (0.36) and "dog" is very different from "kraken" (0.53).

The available distance measurement algorithms are: cosine, euclidean, manhattan, chebyshev and hamming. The default metric is cosine.
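To give a feel for why the manhattan result above is so much larger than the cosine results, here is a plain-Lua sketch of four of the listed metrics applied to small vectors. It is not Algernon's implementation: the function names are hypothetical, and it assumes that the cosine metric is reported as 1 minus the cosine similarity, which is consistent with smaller values meaning "closer" in the session above.

```lua
-- Cosine distance: 1 - (a . b) / (|a| * |b|). Depends only on direction.
function cosine_distance(a, b)
  local dot, na, nb = 0, 0, 0
  for i = 1, #a do
    dot = dot + a[i] * b[i]
    na = na + a[i] * a[i]
    nb = nb + b[i] * b[i]
  end
  return 1 - dot / (math.sqrt(na) * math.sqrt(nb))
end

-- Euclidean distance: straight-line distance between the two points.
function euclidean_distance(a, b)
  local sum = 0
  for i = 1, #a do
    sum = sum + (a[i] - b[i]) ^ 2
  end
  return math.sqrt(sum)
end

-- Manhattan distance: sum of the absolute coordinate differences.
function manhattan_distance(a, b)
  local sum = 0
  for i = 1, #a do
    sum = sum + math.abs(a[i] - b[i])
  end
  return sum
end

-- Chebyshev distance: the largest absolute coordinate difference.
function chebyshev_distance(a, b)
  local largest = 0
  for i = 1, #a do
    largest = math.max(largest, math.abs(a[i] - b[i]))
  end
  return largest
end

-- Scaling a vector leaves the cosine distance (almost exactly) unchanged,
-- while the manhattan distance grows with the scale and the dimension count.
print(cosine_distance({ 1, 2 }, { 10, 20 }))
print(manhattan_distance({ 1, 2 }, { 10, 20 }))
```

Because cosine distance ignores vector length, it stays in a small fixed range, whereas manhattan distance sums over every dimension of the embedding. Results from different metrics are therefore not directly comparable.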

The available Ollama models are listed here: Ollama Library.

There is also support for .prompt files that can generate contents, such as HTML pages, in a reproducible way. The results will be cached for as long as Algernon is running.

Example index.prompt file:

text/html

gemma

Generate a fun and over the top web page that demonstrates the use of CSS animations and JavaScript.

Everything should be inline in one HTML document. Only output the full and complete HTML document.

The experimental prompt format is very simple:

- The first line is the `content-type`.

- The second line is the Ollama model, such as `tinyllama:latest` or just `tinyllama`.

- The third line is blank.

- The rest of the lines are the prompt that will be passed to the large language model.
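The four rules above can be sketched as a small parser in plain Lua. This only illustrates the file layout and is not how Algernon itself reads `.prompt` files: `parse_prompt` and the returned field names are hypothetical.

```lua
-- Split the text of a .prompt file into content type, model and prompt.
-- Returns a table on success, or nil and an error message on failure.
function parse_prompt(text)
  -- Make sure the final line is newline-terminated, so it is captured too.
  if text:sub(-1) ~= "\n" then
    text = text .. "\n"
  end
  local lines = {}
  for line in text:gmatch("(.-)\r?\n") do
    lines[#lines + 1] = line
  end
  if #lines < 4 then
    return nil, "expected a content type, a model, a blank line and a prompt"
  end
  if lines[3] ~= "" then
    return nil, "the third line must be blank"
  end
  return {
    contenttype = lines[1],
    model = lines[2],
    -- Everything from the fourth line onwards is the prompt.
    prompt = table.concat(lines, "\n", 4),
  }
end

local p = parse_prompt("text/html\ngemma\n\nGenerate a page.\nOnly output HTML.\n")
print(p.contenttype, p.model)
print(p.prompt)
```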

Note that the Ollama server must be fast enough to reply within 10 seconds for this to work!

tinyllama or gemma should be more than fast enough with a good GPU or on an M1/M2/M3 processor.

For more fine-grained control, try using the Ollama-related Lua functions instead, and please create a PR or issue if something central is missing.

The ClearCache() function can be used at the Algernon Lua prompt to also clear the AI cache.

// Return the version string for the server.

version() -> string

// Sleep the given number of seconds (can be a float).

sleep(number)

// Log the given strings as information. Takes a variable number of strings.

log(...)

// Log the given strings as a warning. Takes a variable number of strings.

warn(...)

// Log the given strings as an error. Takes a variable number of strings.

err(...)

// Return the number of nanoseconds from 1970 ("Unix time")

unixnano() -> number

// Convert Markdown to HTML

markdown(string) -> string

// Sanitize HTML

sanhtml(string) -> string

// Return the directory where the REPL or script is running. If a filename (optional) is given, then the path to where the script is running, joined with a path separator and the given filename, is returned.

scriptdir([string]) -> string

// Read a glob, e.g. "*.md", in the current script directory, or in the given directory (optional). The contents of all found files are returned as a table.

readglob(string[, string]) -> table

// Return the directory where the server is running. If a filename (optional) is given, then the path to where the server is running, joined with a path separator and the given filename, is returned.

serverdir([string]) -> string

// Set the Content-Type for a page.

content(string)

// Return the requested HTTP method (GET, POST etc).

method() -> string

// Output text to the browser/client. Takes a variable number of strings.

print(...)

// Same as print, but does not add a newline at the end.

print_nonl(...)

// Return the requested URL path.

urlpath() -> string

// Return the HTTP header in the request, for a given key, or an empty string.

header(string) -> string

// Set an HTTP header given a key and a value.

setheader(string, string)

// Return the HTTP headers, as a table.

headers() -> table

// Return the HTTP body in the request (will only read the body once, since it's streamed).

body() -> string

// Set an HTTP status code (like 200 or 404). Must be used before other functions that write to the client!

status(number)

// Set a HTTP status code and output a message (optional).

error(number[, string])

// Serve a file that exists in the same directory as the script. Takes a filename.

serve(string)

// Serve a Pongo2 template file, with an optional table with template key/values.

serve2(string[, table])

// Return the rendered contents of a file that exists in the same directory as the script. Takes a filename.

render(string) -> string

// Return a table with keys and values as given in a posted form, or as given in the URL.

formdata() -> table

// Return a table with keys and values as given in the request URL, or in the given URL (`/some/page?x=7` makes the key `x` with the value `7` available).

urldata([string]) -> table

// Redirect to an absolute or relative URL. May take an HTTP status code that will be used when redirecting.

// Returns false if the connection has been closed.

redirect(string[, number]) -> bool

// Permanent redirect to an absolute or relative URL. Uses status code 302.

// Returns false if the connection has been closed.

permanent_redirect(string) -> bool

// Send "Connection: close" as a header to the client, flush the body and also

// stop Lua functions from writing more data to the HTTP body.

close()

// Transmit what has been outputted so far, to the client. Returns true if it worked out and the connection has not been closed.

flush() -> bool

// Output rendered Markdown to the browser/client. The given text is converted from Markdown to HTML. Takes a variable number of strings. A script tag may also be added.

mprint(...)

// Output rendered Markdown to the browser/client. The given text is converted from Markdown to HTML. Takes a variable number of strings. If a script tag needs to be added to the HTML, it is returned as a string.

mprint_ret(...) -> string

// Output rendered Amber to the browser/client. The given text is converted from Amber to HTML. Takes a variable number of strings.

aprint(...)

// Output rendered GCSS to the browser/client. The given text is converted from GCSS to CSS. Takes a variable number of strings.

gprint(...)

// Output rendered HyperApp JSX to the browser/client. The given text is converted from JSX to JavaScript. Takes a variable number of strings.

hprint(...)

// Output rendered React JSX to the browser/client. The given text is converted from JSX to JavaScript. Takes a variable number of strings.

jprint(...)

// Output rendered HTML to the browser/client. The given text is converted from Pongo2 to HTML. The first argument is the Pongo2 template and the second argument is a table. The keys in the table can be referred to in the template.

poprint(string[, table])

// Output a simple HTML page with a message, title and theme.

// The title and theme are optional.

msgpage(string[, string][, string])

Tips:

- Use `JFile(filename)` to use or store a JSON document in the same directory as the Lua script.

- A JSON path is on the form `x.mapkey.listname[2].mapkey`, where `[`, `]` and `.` have special meaning. It can be used for pinpointing a specific place within a JSON document. It's a bit like a simple version of XPath, but for JSON.

- Use `tostring(userdata)` to fetch the JSON string from the JFile object.
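To make the JSON path form more concrete, the following plain-Lua sketch splits a path into the individual steps it describes. It is an illustration only and not the parser Algernon uses: `split_jsonpath` is a hypothetical name, and the handling of edge cases (such as keys containing dots) is not covered.

```lua
-- Split a JSON path such as "x.mapkey.listname[2].mapkey" into steps.
-- Map keys become strings and list indices become numbers.
function split_jsonpath(path)
  local steps = {}
  -- "." separates the parts of the path.
  for part in path:gmatch("[^%.]+") do
    -- A part is a key, optionally followed by one or more [index] suffixes.
    local key = part:match("^[^%[]+")
    if key then
      steps[#steps + 1] = key
    end
    for index in part:gmatch("%[(%d+)%]") do
      steps[#steps + 1] = tonumber(index)
    end
  end
  return steps
end

for _, step in ipairs(split_jsonpath("x.mapkey.listname[2].mapkey")) do
  print(type(step), step)
end
```

Within Algernon, the path string itself is what gets passed to functions such as `jfile:getstring` or `jnode:get`.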

// Use, or create, a JSON document/file.

JFile(filename) -> userdata

// Takes a JSON path. Returns a string value, or an empty string.

jfile:getstring(string) -> string

// Takes a JSON path. Returns a JNode or nil.

jfile:getnode(string) -> userdata

// Takes a JSON path. Returns a value or nil.

jfile:get(string) -> value

// Takes a JSON path (optional) and JSON data to be added to the list.

// The JSON path must point to a list, if given, unless the JSON file is empty.

// "x" is the default JSON path. Returns true on success.

jfile:add([string, ]string) -> bool

// Take a JSON path and a string value. Changes the entry. Returns true on success.

jfile:set(string, string) -> bool

// Remove a key in a map. Takes a JSON path, returns true on success.

jfile:delkey(string) -> bool

// Convert a Lua table, where keys are strings and values are strings or numbers, to JSON.

// Takes an optional number of spaces to indent the JSON data.

// (Note that keys in JSON maps are always strings, ref. the JSON standard).

json(table[, number]) -> string

// Create a JSON document node.

JNode() -> userdata

// Add JSON data to a node. The first argument is an optional JSON path.

// The second argument is a JSON data string. Returns true on success.

// "x" is the default JSON path.

jnode:add([string, ]string) -> bool

// Given a JSON path, retrieves a JSON node.

jnode:get(string) -> userdata

// Given a JSON path, retrieves a JSON string.

jnode:getstring(string) -> string

// Given a JSON path and a JSON string, set the value.

jnode:set(string, string)

// Given a JSON path, remove a key from a map.

jnode:delkey(string) -> bool

// Return the JSON data, nicely formatted.

jnode:pretty() -> string

// Return the JSON data, as a compact string.

jnode:compact() -> string

// Sends JSON data to the given URL. Returns the HTTP status code as a string.

// The content type is set to "application/json; charset=utf-8".

// The second argument is an optional authentication token that is used for the

// Authorization header field.

jnode:POST(string[, string]) -> string

// Alias for jnode:POST

jnode:send(string[, string]) -> string

// Same as jnode:POST, but sends HTTP PUT instead.

jnode:PUT(string[, string]) -> string

// Fetches JSON over HTTP given an URL that starts with http or https.

// The JSON data is placed in the JNode. Returns the HTTP status code as a string.

jnode:GET(string) -> string

// Alias for jnode:GET

jnode:receive(string) -> string

// Convert from a simple Lua table to a JSON string

JSON(table) -> string

Quick example: `GET("http://ix.io/1FTw")`

// Create a new HTTP Client object

HTTPClient() -> userdata

// Select Accept-Language (ie. "en-us")

hc:SetLanguage(string)

// Set the request timeout (in milliseconds)

hc:SetTimeout(number)

// Set a cookie (name and value)

hc:SetCookie(string, string)

// Set the user agent (ie. "curl")

hc:SetUserAgent(string)

// Perform a HTTP GET request. First comes the URL, then an optional table with

// URL parameters, then an optional table with HTTP headers.

hc:Get(string, [table], [table]) -> string

// Perform a HTTP POST request. It's the same arguments as for `Get`, except

// the fourth optional argument is the POST body.

hc:Post(string, [table], [table], [string]) -> string

// Like `Get`, except the first argument is the HTTP method (like "PUT")

hc:Do(string, string, [table], [table]) -> string

// Shorthand for HTTPClient():Get()

GET(string, [table], [table]) -> string

// Shorthand for HTTPClient():Post()

POST(string, [table], [table], [string]) -> string

// Shorthand for HTTPClient():Do()

DO(string, string, [table], [table]) -> string

// Connect to the local Ollama server and use either the `tinyllama` model, or the supplied model string (for example mixtral:latest).

// Also takes an optional host address.

OllamaClient([string], [string]) -> userdata

// Download the required model, if needed. This may take a while if the model is large.

oc:pull()

// Pass a prompt to Ollama and return the reproducible generated output. If no prompt is given, it will request a poem about Algernon.

// Can also take an optional model name, which will trigger a download if the model is missing.

oc:ask([string], [string]) -> string

// Pass a prompt to Ollama and return the generated output that will differ every time.

// Can also take an optional model name, which will trigger a download if the model is missing.

oc:creative([string], [string]) -> string

// Check if the given model name is downloaded and ready

oc:has(string)

// List all models that are downloaded and ready

oc:list()

// Get or set the currently active model name, but does not pull anything

oc:model([string])

// Get the size of the given model name as a human-friendly string

oc:size(string) -> string

// Get the size of the given model name, in bytes

oc:bytesize(string) -> number

// Given two prompts, return how similar they are.

// The first optional string is the algorithm for measuring the distance: cosine, euclidean, manhattan, chebyshev or hamming.

// Only the two first letters of the algorithm name are needed, so "co" or "ma" are also valid. The default is cosine.

// The second optional string is the model name.

oc:distance(string, string, [string], [string]) -> number

// Convenience function for the local Ollama server that takes an optional prompt and an optional model name.

// Generates a poem with the `tinyllama` model by default.

ollama([string], [string]) -> string

// Convenience function for Base64-encoding the given file

base64EncodeFile(string) -> string

// Describe the given base64-encoded image using Ollama (and the `llava-llama3` model, by default)

describeImage(string, [string]) -> string

// Given two embeddings (tables of floats, representing text or data), return how similar they are.

// The optional string is the algorithm for measuring the distance: cosine, euclidean, manhattan, chebyshev or hamming.

// Only the two first letters of the algorithm name are needed, so "co" or "ma" are also valid. The default is cosine.

embeddedDistance(table, table, [string]) -> number

// Load a plugin given the path to an executable. Returns true on success. Will return the plugin help text if called on the Lua prompt.

// Pass in true as the second argument to keep it running.

Plugin(string, [bool])

// Returns the Lua code as returned by the Lua.Code function in the plugin, given a plugin path. May return an empty string.

// Pass in true as the second argument to keep it running.

PluginCode(string, [bool]) -> string

// Takes a plugin path, function name and arguments. Returns an empty string if the function call fails, or the results as a JSON string if successful.

CallPlugin(string, string, ...) -> string

These functions can be used in combination with the plugin functions for storing Lua code returned by plugins when serverconf.lua is loaded, and then retrieving the Lua code later, when handling requests. The code is stored in the database.

// Create or use a code library object. Optionally takes a data structure name as the first parameter.

CodeLib([string]) -> userdata

// Given a namespace and Lua code, add the given code to the namespace. Returns true on success.

codelib:add(string, string) -> bool

// Given a namespace and Lua code, set the given code as the only code in the namespace. Returns true on success.

codelib:set(string, string) -> bool

// Given a namespace, return Lua code, or an empty string.

codelib:get(string) -> string

// Import (eval) code from the given namespace into the current Lua state. Returns true on success.

codelib:import(string) -> bool

// Completely clear the code library. Returns true on success.

codelib:clear() -> bool

// Creates a file upload object. Takes a form ID (from a POST request) as the first parameter.

// Takes an optional maximum upload size (in MiB) as the second parameter.

// Returns nil and an error string on failure, or userdata and an empty string on success.

UploadedFile(string[, number]) -> userdata, string

// Return the uploaded filename, as specified by the client

uploadedfile:filename() -> string

// Return the size of the data that has been received

uploadedfile:size() -> number

// Return the mime type of the uploaded file, as specified by the client

uploadedfile:mimetype() -> string

// Return the full textual content of the uploaded file

uploadedfile:content() -> string

// Save the uploaded data locally. Takes an optional filename. Returns true on success.

uploadedfile:save([string]) -> bool

// Save the uploaded data as the client-provided filename, in the specified directory.

// Takes a relative or absolute path. Returns true on success.

uploadedfile:savein(string) -> bool

// Return the uploaded data as a base64-encoded string

uploadedfile:base64() -> string

// Return information about the file cache.

CacheInfo() -> string

// Clear the file cache.

ClearCache()

// Load a file into the cache, returns true on success.

preload(string) -> bool

// Get or create a database-backed Set (takes a name, returns a set object)

Set(string) -> userdata

// Add an element to the set

set:add(string)

// Remove an element from the set

set:del(string)

// Check if a set contains a value

// Returns true only if the value exists and there were no errors.

set:has(string) -> bool

// Get all members of the set

set:getall() -> table

// Remove the set itself. Returns true on success.

set:remove() -> bool

// Clear the set

set:clear() -> bool

// Get or create a database-backed List (takes a name, returns a list object)

List(string) -> userdata

// Add an element to the list

list:add(string)

// Get all members of the list

list:getall() -> table

// Get the last element of the list

// The returned value can be empty

list:getlast() -> string

// Get the N last elements of the list

list:getlastn(number) -> table

// Remove the list itself. Returns true on success.

list:remove() -> bool

// Clear the list. Returns true on success.

list:clear() -> bool

// Return all list elements (expected to be JSON strings) as a JSON list

list:json() -> string

// Get or create a database-backed HashMap (takes a name, returns a hash map object)

HashMap(string) -> userdata

// For a given element id (for instance a user id), set a key

// (for instance "password") and a value.

// Returns true on success.

hash:set(string, string, string) -> bool

// For a given element id (for instance a user id), and a key

// (for instance "password"), return a value.

// Returns a value only if the key was found and if there were no errors.

hash:get(string, string) -> string

// For a given element id (for instance a user id), and a key

// (for instance "password"), check if the key exists in the hash map.

// Returns true only if it exists and there were no errors.

hash:has(string, string) -> bool
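The `set`, `has` and `get` calls above could be combined roughly like this. The hash map name `profiles`, the element id `bob` and the `email` key are hypothetical.

```lua
-- Sketch: store one field for an element id and read it back.
local function remember_email(hash, id, email)
  if not hash:set(id, "email", email) then
    return nil
  end
  -- has returns true only if the key exists and there were no errors
  if hash:has(id, "email") then
    return hash:get(id, "email")
  end
  return nil
end

-- HashMap only exists inside Algernon, so guard the call.
if HashMap then
  local profiles = HashMap("profiles")
  print(remember_email(profiles, "bob", "bob@example.com") or "failed")
end
```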

// For a given element id (for instance a user id), check if it exists.

// Returns true only if it exists and there were no errors.

hash:exists(string) -> bool

// Get all keys of the hash map

hash:getall() -> table

// Remove a key for an entry in a hash map

// (for instance the email field for a user)

// Returns true on success

hash:delkey(string, string) -> bool

// Remove an element (for instance a user)

// Returns true on success

hash:del(string) -> bool

// Remove the hash map itself. Returns true on success.

hash:remove() -> bool

// Clear the hash map. Returns true on success.

hash:clear() -> bool

// Get or create a database-backed KeyValue collection (takes a name, returns a key/value object)

KeyValue(string) -> userdata

// Set a key and value. Returns true on success.

kv:set(string, string) -> bool

// Takes a key, returns a value.

// Returns an empty string if the function fails.

kv:get(string) -> string

// Takes a key, returns the value+1.

// Creates a key/value and returns "1" if it did not already exist.

// Returns an empty string if the function fails.

kv:inc(string) -> string
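A small sketch of using `kv:inc` as a page view counter. The collection name `pageviews` and the key `index` are hypothetical. Because `inc` returns a string, the value is converted with `tonumber`, and the empty string is treated as the failure case described above.

```lua
-- Sketch: count page views with a database-backed KeyValue.
local function count_view(kv, page)
  local n = kv:inc(page)
  if n == "" then
    return nil -- inc returns an empty string on failure
  end
  return tonumber(n)
end

-- KeyValue only exists inside Algernon, so guard the call.
if KeyValue then
  local views = count_view(KeyValue("pageviews"), "index")
  print("views: " .. tostring(views))
end
```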

// Remove a key. Returns true on success.

kv:del(string) -> bool

// Remove the KeyValue itself. Returns true on success.

kv:remove() -> bool

// Clear the KeyValue. Returns true on success.

kv:clear() -> bool

// Query a PostgreSQL database with a SQL query and a connection string

PQ([string], [string]) -> table

The default connection string is `host=localhost port=5432 user=postgres dbname=test sslmode=disable` and the default SQL query is `SELECT version()`. Database connections are re-used if they still answer to .Ping(), for the same connection string.
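A minimal sketch of calling `PQ` with the documented defaults spelled out. The layout of the returned table is not described here, so the helper below (a hypothetical name, `describe`) only turns whatever comes back into printable lines without assuming column names.

```lua
-- Sketch: query PostgreSQL and print the result table generically.
local query = "SELECT version()"
local conn = "host=localhost port=5432 user=postgres dbname=test sslmode=disable"

-- Turn any table into "key: value" lines without assuming its layout.
local function describe(t)
  local lines = {}
  for k, v in pairs(t) do
    lines[#lines + 1] = tostring(k) .. ": " .. tostring(v)
  end
  return lines
end

-- PQ only exists inside Algernon, so guard the call.
if PQ then
  for _, line in ipairs(describe(PQ(query, conn))) do
    print(line)
  end
end
```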

// Query a MSSQL database with SQL, a connection string, and a parameter table

MSSQL([string], [string], [table]) -> table

- The default connection string is `server=localhost;user=sa;password=Password123,port=1433` and the default SQL query is `SELECT @@VERSION`. Database connections are re-used if they still answer to .Ping(), for the same connection string.

- If the param table is numerically indexed, positional placeholders are expected:

MSSQL("SELECT * FROM users WHERE first = @p1 AND last = @p2", conn, {"John", "Smith"})

- If the param table is keyed with strings, named placeholders are expected:

MSSQL("SELECT * FROM users WHERE first = @first AND last = @last", conn, {first = "John", last = "Smith"})

// Check if the current user has "user" rights

UserRights() -> bool

// Check if the given username exists (does not look at the list of unconfirmed users)

HasUser(string) -> bool

// Check if the given username exists in the list of unconfirmed users

HasUnconfirmedUser(string) -> bool

// Get the value from the given boolean field

// Takes a username and field name

BooleanField(string, string) -> bool

// Save a value as a boolean field

// Takes a username, field name and boolean value

SetBooleanField(string, string, bool)

// Check if a given username is confirmed

IsConfirmed(string) -> bool

// Check if a given username is logged in

IsLoggedIn(string) -> bool

// Check if the current user has "admin" rights

AdminRights() -> bool

// Check if a given username is an admin

IsAdmin(string) -> bool

// Get the username stored in a cookie, or an empty string

UsernameCookie() -> string

// Store the username in a cookie, returns true on success

SetUsernameCookie(string) -> bool

// Clear the login cookie

ClearCookie()

// Get a table containing all usernames

AllUsernames() -> table

// Get the email for a given username, or an empty string

Email(string) -> string

// Get the password hash for a given username, or an empty string

PasswordHash(string) -> string

// Get all unconfirmed usernames

AllUnconfirmedUsernames() -> table

// Get the existing confirmation code for a given user,

// or an empty string. Takes a username.

ConfirmationCode(string) -> string

// Add a user to the list of unconfirmed users

// Takes a username and a confirmation code

// Remember to also add a user, when registering new users.

AddUnconfirmed(string, string)
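The reminder above (add the user as well as the unconfirmed entry) could look roughly like this when registering someone. This is a sketch under assumptions, not the project's own registration code: the username, password and email values are hypothetical, and only functions from this listing are used.

```lua
-- Sketch: register a new user that still has to be confirmed.
-- Returns the confirmation code, or nil and a reason.
local function register(username, password, email)
  if HasUser(username) or HasUnconfirmedUser(username) then
    return nil, "username is taken"
  end
  local code = GenerateUniqueConfirmationCode()
  if code == "" then
    return nil, "could not generate a confirmation code"
  end
  AddUser(username, password, email)
  AddUnconfirmed(username, code)
  return code
end

-- The user functions only exist inside Algernon, so guard the call.
if AddUser then
  local code, reason = register("bob", "hunter2", "bob@example.com")
  print(code or reason)
end
```

The returned code would then typically be sent to the user and later passed to `ConfirmUserByConfirmationCode`.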

// Remove a user from the list of unconfirmed users

// Takes a username

RemoveUnconfirmed(string)

// Mark a user as confirmed

// Takes a username

MarkConfirmed(string)

// Removes a user

// Takes a username

RemoveUser(string)

// Make a user an admin

// Takes a username

SetAdminStatus(string)

// Make an admin user a regular user

// Takes a username

RemoveAdminStatus(string)

// Add a user

// Takes a username, password and email

AddUser(string, string, string)

// Set a user as logged in on the server (not cookie)

// Takes a username

SetLoggedIn(string)

// Set a user as logged out on the server (not cookie)

// Takes a username

SetLoggedOut(string)

// Log in a user, both on the server and with a cookie

// Takes a username

Login(string)

// Log out a user, on the server (which is enough)

// Takes a username

Logout(string)

// Get the current username, from the cookie

Username() -> string

// Get the current cookie timeout

// Takes a username

CookieTimeout(string) -> number

// Set the current cookie timeout

// Takes a timeout number, measured in seconds

SetCookieTimeout(number)

// Get the current server-wide cookie secret. This is used when setting

// and getting browser cookies when users log in.

CookieSecret() -> string

// Set the current server-side cookie secret. This is used when setting

// and getting browser cookies when users log in. Using the same secret

// makes browser cookies usable across server restarts.

SetCookieSecret(string)

// Get the current password hashing algorithm (bcrypt, bcrypt+ or sha256)

PasswordAlgo() -> string

// Set the current password hashing algorithm (bcrypt, bcrypt+ or sha256)

// "bcrypt+" accepts bcrypt or sha256 for old passwords, but will only use

// bcrypt for new passwords.

SetPasswordAlgo(string)

// Hash the password

// Takes a username and password (username can be used for salting sha256)

HashPassword(string, string) -> string

// Change the password for a user, given a username and a new password

SetPassword(string, string)

// Check if a given username and password is correct

// Takes a username and password

CorrectPassword(string, string) -> bool
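A sketch of a login check built from the functions in this listing. The credentials are hypothetical placeholders, and how the username and password reach the script (for instance from form data) is left out. The reason strings are meant for logging rather than for showing to the client.

```lua
-- Sketch: log a user in only if the account exists, is confirmed
-- and the password matches. Returns true, or false and a reason.
local function try_login(username, password)
  if not HasUser(username) then
    return false, "unknown user"
  end
  if not IsConfirmed(username) then
    return false, "user is not confirmed"
  end
  if not CorrectPassword(username, password) then
    return false, "wrong password"
  end
  Login(username) -- logs in on the server and with a cookie
  return true
end

-- The user functions only exist inside Algernon, so guard the call.
if Login then
  local ok, reason = try_login("bob", "hunter2")
  print(ok and "welcome" or "login failed")
end
```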

// Checks if a confirmation code is already in use

// Takes a confirmation code

AlreadyHasConfirmationCode(string) -> bool

// Find a username based on a given confirmation code,

// or returns an empty string. Takes a confirmation code

FindUserByConfirmationCode(string) -> string

// Mark a user as confirmed

// Takes a username

Confirm(string)

// Mark a user as confirmed, returns true on success

// Takes a confirmation code

ConfirmUserByConfirmationCode(string) -> bool

// Set the minimum confirmation code length

// Takes the minimum number of characters

SetMinimumConfirmationCodeLength(number)

// Generates a unique confirmation code, or an empty string

GenerateUniqueConfirmationCode() -> string

// Set the default address for the server on the form [host][:port].

// May be useful in Algernon application bundles (.alg or .zip files).

SetAddr(string)

// Reset the URL prefixes and make everything *public*.

ClearPermissions()

// Add a URL prefix that will have *admin* rights.

AddAdminPrefix(string)

// Add a reverse proxy given a path prefix and an endpoint URL

// For example: "/api" and "http://localhost:8080"

AddReverseProxy(string, string)

// Add a URL prefix that will have *user* rights.

AddUserPrefix(string)

// Provide a Lua function that will be used as the permission denied handler.

DenyHandler(function)
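A server configuration sketch that ties the permission functions above together. The URL prefixes are hypothetical, and it is an assumption that the deny handler can use the request functions `content` and `print` in the same way as a regular handler.

```lua
-- Server configuration sketch: the prefixes below are hypothetical.
ClearPermissions()         -- start from "everything is public"
AddAdminPrefix("/admin")   -- admin rights are needed below /admin
AddUserPrefix("/members")  -- user rights are needed below /members

-- Custom "permission denied" response.
DenyHandler(function()
  content("text/plain")
  print("Permission denied.")
end)
```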

// Return a string with various server information.

ServerInfo() -> string

// Direct the logging to the given filename. If the filename is an empty

// string, direct logging to stderr. Returns true on success.

LogTo(string) -> bool

// Returns the version string for the server.

version() -> string

// Logs the given strings as INFO. Takes a variable number of strings.

log(...)

// Logs the given strings as WARN. Takes a variable number of strings.

warn(...)

// Logs the given strings as ERROR. Takes a variable number of strings.

err(...)

// Provide a Lua function that will be run once, when the server is ready to start serving.

OnReady(function)
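A short configuration sketch that combines `LogTo`, the logging functions and `OnReady`. The filename `algernon.log` is a hypothetical example.

```lua
-- Server configuration sketch: "algernon.log" is a hypothetical filename.
if not LogTo("algernon.log") then
  warn("could not open algernon.log for logging")
end

-- Runs once, when the server is ready to start serving.
OnReady(function()
  log("Server is ready, running " .. version())
end)
```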

// Use a Lua file for setting up HTTP handlers instead of using the directory structure.

ServerFile(string) -> bool

// Serve files from this directory.

ServerDir(string) -> bool

// Get the cookie secret from the server configuration.

CookieSecret() -> string

// Set the cookie secret that will be used when setting and getting browser cookies.

SetCookieSecret(string)

The following functions are only available when a Lua script is used instead of a server directory, or from Lua files that are specified with the ServerFile function in the server configuration.

// Given a URL path prefix (like "/") and a Lua function, set up an HTTP handler.

// The given Lua function should take no arguments, but can use all the Lua functions for handling requests, like `content` and `print`.

handle(string, function)
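A minimal sketch of a Lua server file that registers one handler. The path `/hello` is hypothetical, and it is an assumption that `content` takes the Content-Type as a string.

```lua
-- Server file sketch: "/hello" is a hypothetical path.
handle("/hello", function()
  content("text/plain") -- set the Content-Type of the response
  print("Hello from a Lua handler")
end)
```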

// Given a URL prefix (like "/") and a directory, serve the files and directories.

servedir(string, string)

- `help` displays a syntax highlighted overview of most functions.

- `webhelp` displays a syntax highlighted overview of functions related to handling requests.

- `confighelp` displays a syntax highlighted overview of functions related to server configuration.

// Pretty print. Outputs the values in, or a description of, the given Lua value(s).

pprint(...)

// Takes a Python filename, executes the script with the `python` binary in the PATH.

// Returns the output as a Lua table, where each line is an entry.

py(string) -> table

// Takes one or more system commands (separated by `;`) and runs them.

// Returns the output lines as a table.

run(string) -> table

// Takes one or more system commands (separated by `;`) and runs them.

// Returns stdout as a table, stderr as a table and the exit code as a number.

run3(string) -> table, table, number
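A sketch of wrapping `run3` so that a non-zero exit code is reported together with the collected stderr lines. It assumes that both returned tables are plain arrays of strings (one entry per line), and `uname -a` is only a placeholder command.

```lua
-- Sketch: run one command and fail loudly on a non-zero exit code.
-- Returns the joined stdout, or nil and the joined stderr.
local function run_checked(cmd)
  local out, errlines, code = run3(cmd)
  if code ~= 0 then
    return nil, table.concat(errlines, "\n")
  end
  return table.concat(out, "\n")
end

-- run3 only exists inside Algernon, so guard the call.
if run3 then
  local text, errtext = run_checked("uname -a")
  print(text or ("command failed: " .. errtext))
end
```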

// Lists the keys and values of a Lua table. Returns a string.

// Lists the contents of the global namespace `_G` if no arguments are given.

dir([table]) -> string

Algernon can be used as a quick Markdown viewer with the -m flag.

Try algernon -m README.md to view README.md in the browser, serving the file once on a port >3000.

In addition to the regular Markdown syntax, Algernon supports setting the page title and syntax highlight style with a header comment like this at the top of a Markdown file:

<!--

title: Page title

theme: dark

code_style: lovelace

replace_with_theme: default_theme

-->

Code is highlighted with highlight.js and several styles are available.

The string that follows replace_with_theme will be used for replacing the current theme string (like dark) with the given string. This makes it possible to use one image (like logo_default_theme.png) for one theme and another image (logo_dark.png) for the dark theme.

The theme can be light, dark, redbox, bw, github, wing, material, neon, default, werc, setconf or a path to a CSS file. Or style.gcss can exist in the same directory.

An overview of available syntax highlighting styles can be found at the Chroma Style Gallery.

Follow the guide at certbot.eff.org for the "None of the above" web server, then start algernon with --cert=/etc/letsencrypt/live/myhappydomain.com/cert.pem --key=/etc/letsencrypt/live/myhappydomain.com/privkey.pem where myhappydomain.com is replaced with your own domain name.

First make Algernon serve a directory for the domain, like /srv/myhappydomain.com, then use that as the webroot when configuring certbot with the certbot certonly command.

Remember to set up a cron-job or something similar to run certbot renew every once in a while (every 12 hours is suggested by certbot.eff.org). Also remember to restart the algernon service after updating the certificates.

Use the --letsencrypt flag together with the --domain flag to automatically fetch and use certificates from Let's Encrypt.

For instance, if /srv/myhappydomain.com exists, then algernon --letsencrypt --domain /srv can be used to serve myhappydomain.com if it points to this server, and fetch certificates from Let's Encrypt.

When --letsencrypt is used, it will try to serve on ports 443 and 80 (where port 80 redirects to 443).

- Arch Linux package in the AUR.

- Windows executable.

- macOS homebrew package

- Algernon Tray Launcher for macOS, in App Store

- Source releases are tagged with a version number at release.

- `go 1.21` or later is a requirement for building Algernon.

- For `go 1.10`, `1.11`, `1.12`, `1.13`, `1.14`, `1.15`, `1.16` + `gcc-go <10`, version `1.12.7` of Algernon is the last supported version.

Can log to a Combined Log Format access log with the --accesslog flag. This works nicely together with goaccess.

Serve files in one directory:

algernon --accesslog=access.log -x

Then visit the web page once, to create one entry in the access.log.

The wonderful goaccess utility can then be used to view the access log, while it is being filled:

goaccess --no-global-config --log-format=COMBINED access.log

If you have goaccess set up correctly, running goaccess without any flags should work too:

goaccess access.log

.alg files are just renamed .zip files, that can be served by Algernon. There is an example application here: wercstyle.

Thanks to Egon Elbre for the two SVG drawings that I remixed into the current logo (CC0 licensed).

For Linux:

sudo setcap cap_net_bind_service=+ep /usr/bin/algernon

- Version: 1.17.3

- License: BSD-3

- Alexander F. Rødseth

The jump in stargazers happened when Algernon reached the front page of Hacker News.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for algernon

Similar Open Source Tools

algernon

Algernon is a web server with built-in support for QUIC, HTTP/2, Lua, Teal, Markdown, Pongo2, HyperApp, Amber, Sass(SCSS), GCSS, JSX, Ollama (LLMs), BoltDB, Redis, PostgreSQL, MariaDB/MySQL, MSSQL, rate limiting, graceful shutdown, plugins, users, and permissions. It is a small self-contained executable that supports various technologies and features for web development.

json_repair

This simple package can be used to fix an invalid json string. To know all cases in which this package will work, check out the unit test. Inspired by https://github.com/josdejong/jsonrepair Motivation Some LLMs are a bit iffy when it comes to returning well formed JSON data, sometimes they skip a parentheses and sometimes they add some words in it, because that's what an LLM does. Luckily, the mistakes LLMs make are simple enough to be fixed without destroying the content. I searched for a lightweight python package that was able to reliably fix this problem but couldn't find any. So I wrote one How to use from json_repair import repair_json good_json_string = repair_json(bad_json_string) # If the string was super broken this will return an empty string You can use this library to completely replace `json.loads()`: import json_repair decoded_object = json_repair.loads(json_string) or just import json_repair decoded_object = json_repair.repair_json(json_string, return_objects=True) Read json from a file or file descriptor JSON repair provides also a drop-in replacement for `json.load()`: import json_repair try: file_descriptor = open(fname, 'rb') except OSError: ... with file_descriptor: decoded_object = json_repair.load(file_descriptor) and another method to read from a file: import json_repair try: decoded_object = json_repair.from_file(json_file) except OSError: ... except IOError: ... Keep in mind that the library will not catch any IO-related exception and those will need to be managed by you Performance considerations If you find this library too slow because is using `json.loads()` you can skip that by passing `skip_json_loads=True` to `repair_json`. Like: from json_repair import repair_json good_json_string = repair_json(bad_json_string, skip_json_loads=True) I made a choice of not using any fast json library to avoid having any external dependency, so that anybody can use it regardless of their stack. 
Some rules of thumb to use: - Setting `return_objects=True` will always be faster because the parser returns an object already and it doesn't have serialize that object to JSON - `skip_json_loads` is faster only if you 100% know that the string is not a valid JSON - If you are having issues with escaping pass the string as **raw** string like: `r"string with escaping\"" Adding to requirements Please pin this library only on the major version! We use TDD and strict semantic versioning, there will be frequent updates and no breaking changes in minor and patch versions. To ensure that you only pin the major version of this library in your `requirements.txt`, specify the package name followed by the major version and a wildcard for minor and patch versions. For example: json_repair==0.* In this example, any version that starts with `0.` will be acceptable, allowing for updates on minor and patch versions. How it works This module will parse the JSON file following the BNF definition:

LLMUnity

LLM for Unity enables seamless integration of Large Language Models (LLMs) within the Unity engine, allowing users to create intelligent characters for immersive player interactions. The tool supports major LLM models, runs locally without internet access, offers fast inference on CPU and GPU, and is easy to set up with a single line of code. It is free for both personal and commercial use, tested on Unity 2021 LTS, 2022 LTS, and 2023. Users can build multiple AI characters efficiently, use remote servers for processing, and customize model settings for text generation.

blinkid-android

The BlinkID Android SDK is a comprehensive solution for implementing secure document scanning and extraction. It offers powerful capabilities for extracting data from a wide range of identification documents. The SDK provides features for integrating document scanning into Android apps, including camera requirements, SDK resource pre-bundling, customizing the UX, changing default strings and localization, troubleshooting integration difficulties, and using the SDK through various methods. It also offers options for completely custom UX with low-level API integration. The SDK size is optimized for different processor architectures, and API documentation is available for reference. For any questions or support, users can contact the Microblink team at help.microblink.com.

vscode-pddl

The vscode-pddl extension provides comprehensive support for Planning Domain Description Language (PDDL) in Visual Studio Code. It enables users to model planning domains, validate them, industrialize planning solutions, and run planners. The extension offers features like syntax highlighting, auto-completion, plan visualization, plan validation, plan happenings evaluation, search debugging, and integration with Planning.Domains. Users can create PDDL files, run planners, visualize plans, and debug search algorithms efficiently within VS Code.

ain

Ain is a terminal HTTP API client designed for scripting input and processing output via pipes. It allows flexible organization of APIs using files and folders, supports shell-scripts and executables for common tasks, handles url-encoding, and enables sharing the resulting curl, wget, or httpie command-line. Users can put things that change in environment variables or .env-files, and pipe the API output for further processing. Ain targets users who work with many APIs using a simple file format and uses curl, wget, or httpie to make the actual calls.

windows9x

Windows9X is an experimental operating system that allows users to generate applications on the fly by entering descriptions of programs. It leverages an LLM to create HTML files resembling Windows 98 applications, with access to a limited OS API for file operations, registry interactions, and LLM prompting.

aire

Aire is a modern Laravel form builder with a focus on expressive and beautiful code. It allows easy configuration of form components using fluent method calls or Blade components. Aire supports customization through config files and custom views, data binding with Eloquent models or arrays, method spoofing, CSRF token injection, server-side and client-side validation, and translations. It is designed to run on Laravel 5.8.28 and higher, with support for PHP 7.1 and higher. Aire is actively maintained and under consideration for additional features like read-only plain text, cross-browser support for custom checkboxes and radio buttons, support for Choices.js or similar libraries, improved file input handling, and better support for content prepending or appending to inputs.

OpenAI-sublime-text

The OpenAI Completion plugin for Sublime Text provides first-class code assistant support within the editor. It utilizes LLM models to manipulate code, engage in chat mode, and perform various tasks. The plugin supports OpenAI, llama.cpp, and ollama models, allowing users to customize their AI assistant experience. It offers separated chat histories and assistant settings for different projects, enabling context-specific interactions. Additionally, the plugin supports Markdown syntax with code language syntax highlighting, server-side streaming for faster response times, and proxy support for secure connections. Users can configure the plugin's settings to set their OpenAI API key, adjust assistant modes, and manage chat history. Overall, the OpenAI Completion plugin enhances the Sublime Text editor with powerful AI capabilities, streamlining coding workflows and fostering collaboration with AI assistants.

LLPhant

LLPhant is a comprehensive PHP Generative AI Framework designed to be simple yet powerful, compatible with Symfony and Laravel. It supports various LLMs like OpenAI, Anthropic, Mistral, Ollama, and services compatible with OpenAI API. The framework enables tasks such as semantic search, chatbots, personalized content creation, text summarization, personal shopper creation, autonomous AI agents, and coding tool assistance. It provides tools for generating text, images, speech-to-text transcription, and customizing system messages for question answering. LLPhant also offers features for embeddings, vector stores, document stores, and question answering with various query transformations and reranking techniques.

Gemini-API

Gemini-API is a reverse-engineered asynchronous Python wrapper for Google Gemini web app (formerly Bard). It provides features like persistent cookies, ImageFx support, extension support, classified outputs, official flavor, and asynchronous operation. The tool allows users to generate contents from text or images, have conversations across multiple turns, retrieve images in response, generate images with ImageFx, save images to local files, use Gemini extensions, check and switch reply candidates, and control log level.

ai2-scholarqa-lib

Ai2 Scholar QA is a system for answering scientific queries and literature review by gathering evidence from multiple documents across a corpus and synthesizing an organized report with evidence for each claim. It consists of a retrieval component and a three-step generator pipeline. The retrieval component fetches relevant evidence passages using the Semantic Scholar public API and reranks them. The generator pipeline includes quote extraction, planning and clustering, and summary generation. The system is powered by the ScholarQA class, which includes components like PaperFinder and MultiStepQAPipeline. It requires environment variables for Semantic Scholar API and LLMs, and can be run as local docker containers or embedded into another application as a Python package.

lollms_legacy

Lord of Large Language Models (LoLLMs) Server is a text generation server based on large language models. It provides a Flask-based API for generating text using various pre-trained language models. This server is designed to be easy to install and use, allowing developers to integrate powerful text generation capabilities into their applications. The tool supports multiple personalities for generating text with different styles and tones, real-time text generation with WebSocket-based communication, RESTful API for listing personalities and adding new personalities, easy integration with various applications and frameworks, sending files to personalities, running on multiple nodes to provide a generation service to many outputs at once, and keeping data local even in the remote version.

qsv

qsv is a command line program for querying, slicing, indexing, analyzing, filtering, enriching, transforming, sorting, validating, joining, formatting & converting tabular data (CSV, spreadsheets, DBs, parquet, etc). Commands are simple, composable & 'blazing fast'. It is a blazing-fast data-wrangling toolkit with a focus on speed, processing very large files, and being a complete data-wrangling toolkit. It is designed to be portable, easy to use, secure, and easy to contribute to. qsv follows the RFC 4180 CSV standard, requires UTF-8 encoding, and supports various file formats. It has extensive shell completion support, automatic compression/decompression using Snappy, and supports environment variables and dotenv files. qsv has a comprehensive test suite and is dual-licensed under MIT or the UNLICENSE.

Numpy.NET

Numpy.NET is the most complete .NET binding for NumPy, empowering .NET developers with extensive functionality for scientific computing, machine learning, and AI. It provides multi-dimensional arrays, matrices, linear algebra, FFT, and more via a strong typed API. Numpy.NET does not require a local Python installation, as it uses Python.Included to package embedded Python 3.7. Multi-threading must be handled carefully to avoid deadlocks or access violation exceptions. Performance considerations include overhead when calling NumPy from C# and the efficiency of data transfer between C# and Python. Numpy.NET aims to match the completeness of the original NumPy library and is generated using CodeMinion by parsing the NumPy documentation. The project is MIT licensed and supported by JetBrains.

aiac

AIAC is a library and command line tool to generate Infrastructure as Code (IaC) templates, configurations, utilities, queries, and more via LLM providers such as OpenAI, Amazon Bedrock, and Ollama. Users can define multiple 'backends' targeting different LLM providers and environments using a simple configuration file. The tool allows users to ask a model to generate templates for different scenarios and composes an appropriate request to the selected provider, storing the resulting code to a file and/or printing it to standard output.

For similar tasks

aiscript

AiScript is a lightweight scripting language that runs on JavaScript. It supports arrays, objects, and functions as first-class citizens, and is easy to write without the need for semicolons or commas. AiScript runs in a secure sandbox environment, preventing infinite loops from freezing the host. It also allows for easy provision of variables and functions from the host.

algernon

Algernon is a web server with built-in support for QUIC, HTTP/2, Lua, Teal, Markdown, Pongo2, HyperApp, Amber, Sass(SCSS), GCSS, JSX, Ollama (LLMs), BoltDB, Redis, PostgreSQL, MariaDB/MySQL, MSSQL, rate limiting, graceful shutdown, plugins, users, and permissions. It is a small self-contained executable that supports various technologies and features for web development.

devAid-Theme

devAid-Theme is a free Bootstrap theme designed to help developers promote their personal projects. It comes with 4 colour schemes and includes source SCSS files for easy styling customizations. The theme is fully responsive, built on Bootstrap 5, and includes FontAwesome icons. Author Xiaoying Riley offers the template for free with the requirement to keep the footer attribution link. Commercial licenses are available for those who wish to remove the attribution link. The theme is suitable for developers looking to showcase their side projects with a professional and modern design.

AICentral

AI Central is a powerful tool designed to take control of your AI services with minimal overhead. It is built on Asp.Net Core and dotnet 8, offering fast web-server performance. The tool enables advanced Azure APIm scenarios, PII stripping logging to Cosmos DB, token metrics through Open Telemetry, and intelligent routing features. AI Central supports various endpoint selection strategies, proxying asynchronous requests, custom OAuth2 authorization, circuit breakers, rate limiting, and extensibility through plugins. It provides an extensibility model for easy plugin development and offers enriched telemetry and logging capabilities for monitoring and insights.

cloudflare-rag

This repository provides a fullstack example of building a Retrieval Augmented Generation (RAG) app with Cloudflare. It utilizes Cloudflare Workers, Pages, D1, KV, R2, AI Gateway, and Workers AI. The app features streaming interactions to the UI, hybrid RAG with Full-Text Search and Vector Search, switchable providers using AI Gateway, per-IP rate limiting with Cloudflare's KV, OCR within Cloudflare Worker, and Smart Placement for workload optimization. The development setup requires Node, pnpm, and wrangler CLI, along with setting up necessary primitives and API keys. Deployment involves setting up secrets and deploying the app to Cloudflare Pages. The project implements a Hybrid Search RAG approach combining Full Text Search against D1 and Hybrid Search with embeddings against Vectorize to enhance context for the LLM.

k8sgateway

K8sGateway is a feature-rich, fast, and flexible Kubernetes-native API gateway built on Envoy proxy and Kubernetes Gateway API. It excels in function-level routing, supports legacy apps, microservices, and serverless. It offers robust discovery capabilities, seamless integration with open-source projects, and supports hybrid applications with various technologies, architectures, protocols, and clouds.

For similar jobs

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

ai-on-gke

This repository contains assets related to AI/ML workloads on Google Kubernetes Engine (GKE). Run optimized AI/ML workloads with Google Kubernetes Engine (GKE) platform orchestration capabilities. A robust AI/ML platform considers the following layers: Infrastructure orchestration that support GPUs and TPUs for training and serving workloads at scale Flexible integration with distributed computing and data processing frameworks Support for multiple teams on the same infrastructure to maximize utilization of resources

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

nvidia_gpu_exporter

Nvidia GPU exporter for Prometheus that uses the `nvidia-smi` binary to gather metrics.

tracecat

Tracecat is an open-source automation platform for security teams. It's designed to be simple but powerful, with a focus on AI features and a practitioner-obsessed UI/UX. Tracecat can be used to automate a variety of tasks, including phishing email investigation, evidence collection, and remediation plan generation.

openinference

OpenInference is a set of conventions and plugins that complement OpenTelemetry to enable tracing of AI applications. It provides a way to capture and analyze the performance and behavior of AI models, including their interactions with other components of the application. OpenInference is designed to be language-agnostic and can be used with any OpenTelemetry-compatible backend. It includes a set of instrumentations for popular machine learning SDKs and frameworks, making it easy to add tracing to your AI applications.

BricksLLM

BricksLLM is a cloud-native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:
* Set LLM usage limits for users on different pricing tiers
* Track LLM usage on a per-user and per-organization basis
* Block or redact requests containing PII
* Improve LLM reliability with failovers, retries and caching
* Distribute API keys with rate limits and cost limits for internal development/production use cases
* Distribute API keys with rate limits and cost limits for students

kong

Kong, or Kong API Gateway, is a cloud-native, platform-agnostic, scalable API Gateway distinguished for its high performance and extensibility via plugins. It also provides advanced AI capabilities with multi-LLM support. By providing functionality for proxying, routing, load balancing, health checking, authentication (and more), Kong serves as the central layer for orchestrating microservices or conventional API traffic with ease. Kong runs natively on Kubernetes thanks to its official Kubernetes Ingress Controller.