bumpgen

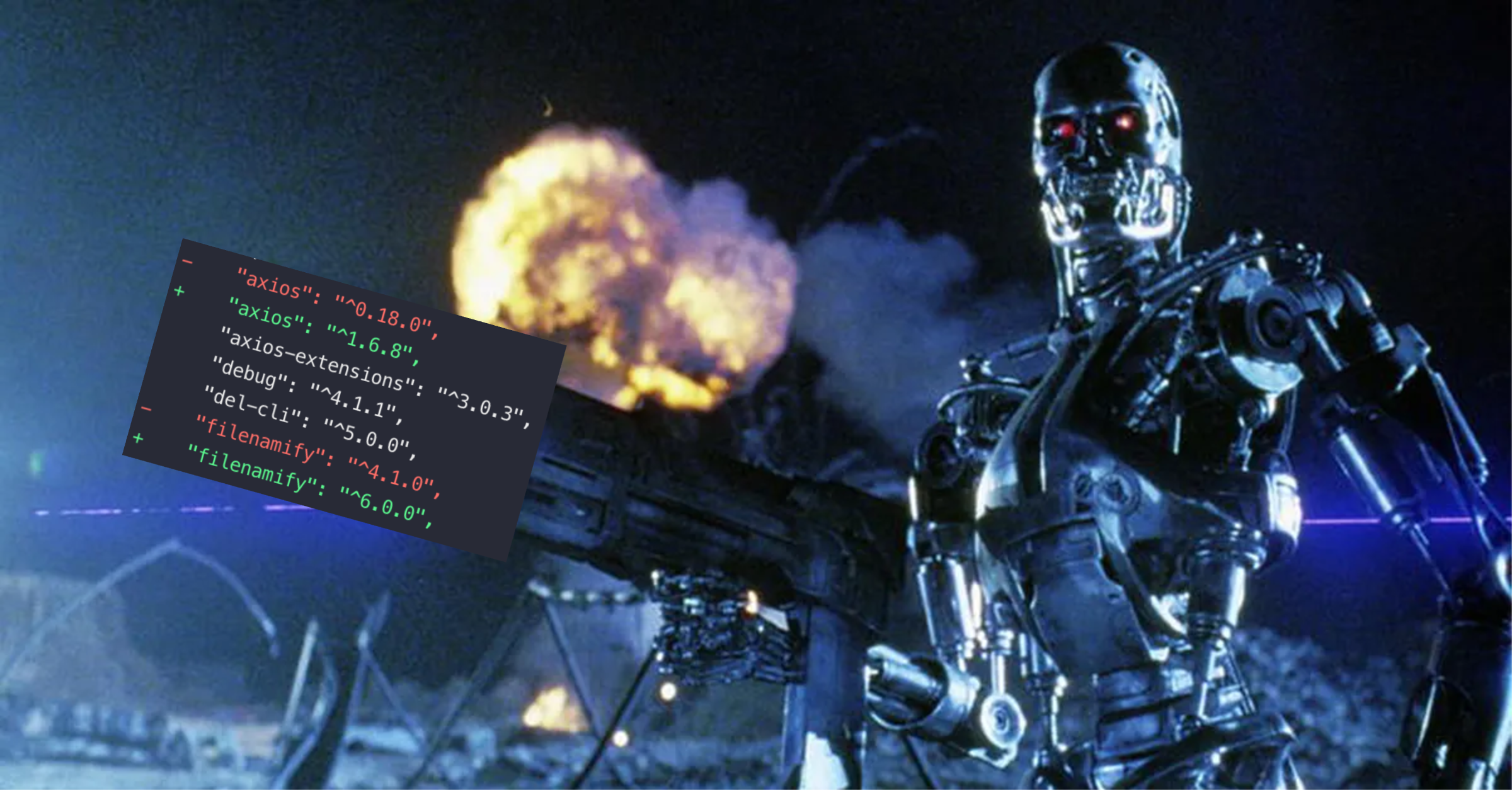

bumpgen is an AI agent that upgrades npm packages

Stars: 67

bumpgen is a tool designed to automatically upgrade TypeScript / TSX dependencies and make necessary code changes to handle any breaking issues that may arise. It uses an abstract syntax tree to analyze code relationships, type definitions for external methods, and a plan graph DAG to execute changes in the correct order. The tool is currently limited to TypeScript and TSX but plans to support other strongly typed languages in the future. It aims to simplify the process of upgrading dependencies and handling code changes caused by updates.

README:

bumpgen bumps your TypeScript / TSX dependencies and makes code changes for you if anything breaks.

Here's a common scenario:

you: "I should upgrade to the latest version of x, it has banging new features and impressive performance improvements"

you (5 minutes later): never mind, that broke a bunch of stuff

Then use bumpgen!

How does it work?

- bumpgen builds your project to understand what broke when a dependency was bumped.

- Then bumpgen uses ts-morph to create an abstract syntax tree from your code, to understand the relationships between statements.

- It also uses the AST to get type definitions for external methods to understand how to use new package versions.

- bumpgen then creates a plan graph DAG to execute things in the correct order to handle propagating changes (ref: arxiv 2309.12499).
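To make "execute things in the correct order" concrete, here is a minimal sketch of ordering a plan graph with Kahn's topological sort. It assumes a hypothetical `PlanNode` type in which each node lists the nodes that must be fixed before it. bumpgen's actual plan graph types are not described in this README.

```typescript
// Hypothetical plan-graph node: one pending edit, plus the ids of the nodes
// whose edits must be applied before it.
interface PlanNode {
  id: string;
  dependsOn: string[];
}

// Kahn's algorithm: returns node ids so that every node appears after all of
// its dependencies. Throws on unknown dependencies or cycles (not a DAG).
function executionOrder(nodes: PlanNode[]): string[] {
  const indegree = new Map<string, number>();
  const dependents = new Map<string, string[]>();

  for (const node of nodes) {
    indegree.set(node.id, node.dependsOn.length);
    dependents.set(node.id, []);
  }
  for (const node of nodes) {
    for (const dep of node.dependsOn) {
      const list = dependents.get(dep);
      if (list === undefined) throw new Error(`unknown dependency: ${dep}`);
      list.push(node.id);
    }
  }

  // Start with nodes that depend on nothing.
  const ready = nodes.filter((n) => n.dependsOn.length === 0).map((n) => n.id);
  const order: string[] = [];
  while (ready.length > 0) {
    const id = ready.shift()!;
    order.push(id);
    // Each processed node releases one dependency from each of its dependents.
    for (const next of dependents.get(id) ?? []) {
      const remaining = (indegree.get(next) ?? 0) - 1;
      indegree.set(next, remaining);
      if (remaining === 0) ready.push(next);
    }
  }

  if (order.length !== nodes.length) {
    throw new Error("plan graph contains a cycle");
  }
  return order;
}
```

For example, an updated import is ordered before the hook that uses it, and the hook is ordered before the component that calls the hook.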

[!NOTE]

bumpgen only supports TypeScript and TSX at the moment, but we're working on adding support for other strongly typed languages. Hit the emoji button on our open issues for Java, Go, C#, and Python to request support.

To get started, you'll need an OpenAI API key. gpt-4-turbo-preview from OpenAI is the only supported model at this time, though we plan on supporting more soon.

Then, run bumpgen:

> export LLM_API_KEY="<openai-api-key>"

> cd ~/my-repository

> npm install -g bumpgen

> bumpgen @tanstack/react-query 5.28.14

where @tanstack/react-query is the package you want to bump and 5.28.14 is the version you want to bump to.

You can also run bumpgen without arguments and select which package to upgrade from the menu. Use bumpgen --help for a complete list of options.

[!NOTE] If you'd like to be first in line to try the bumpgen GitHub App to replace your usage of dependabot + renovatebot, sign up here.

There are some limitations you should know about.

- bumpgen relies on build errors to determine what needs to be fixed. If an issue is caused by a behavioral change, bumpgen won't detect it.

- bumpgen can't handle multiple packages at the same time. It will fail to upgrade packages that require their peer dependencies to be updated at the same time, such as @octokit/core and @octokit/plugin-retry.

- bumpgen is not good with very large frameworks like vue. These kinds of upgrades (and vue 2 -> 3 specifically) can be arduous even for a human.
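Because bumpgen works from build errors, its raw input is essentially compiler output. The following sketch is not bumpgen's code. It assumes the errors come from `tsc --noEmit --pretty false` and parses that output into structured records. A behavioral change that still type-checks produces no such line, which is why it goes undetected. The `BuildError` shape and the `parseTscOutput` name are hypothetical.

```typescript
// Hypothetical structured form of one compiler error.
interface BuildError {
  file: string;
  line: number;
  column: number;
  code: string;
  message: string;
}

// Matches tsc's non-pretty diagnostic format, e.g.
// src/app.tsx(12,5): error TS2339: Property 'x' does not exist on type 'Y'.
const TSC_ERROR = /^(.+)\((\d+),(\d+)\): error (TS\d+): (.*)$/;

function parseTscOutput(output: string): BuildError[] {
  const errors: BuildError[] = [];
  for (const rawLine of output.split(/\r?\n/)) {
    const match = TSC_ERROR.exec(rawLine);
    // Skip summary lines ("Found N errors") and indented continuation lines.
    if (!match) continue;
    const [, file, line, column, code, message] = match;
    errors.push({ file, line: Number(line), column: Number(column), code, message });
  }
  return errors;
}
```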

Architecture overview (diagram): the command bumpgen @tanstack/react-query 5.28.14 enters the CLI, which exchanges data with the Core (Codeplan). The Core maintains a Plan Graph and an Abstract Syntax Tree. It passes a Prompt Context (plan graph, errors, code) to the LLM (GPT4-Turbo, Claude 3, BYOM), and the LLM's response flows back through the Prompt Context to the Core and then to the CLI.

The AST is generated with ts-morph. This AST allows bumpgen to understand the relationships between nodes in a codebase.
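One relationship the AST exposes is "who references what." The minimal sketch below assumes references have already been extracted from the AST (for example, with ts-morph) into hypothetical `{ from, to }` records. It finds every node that directly or transitively depends on a changed symbol. The types and function name are illustrative, not bumpgen's API.

```typescript
// Hypothetical edge: `from` (e.g. a function) references `to` (e.g. an imported symbol).
interface SymbolReference {
  from: string;
  to: string;
}

// Breadth-first walk over inverted reference edges: returns every node that
// directly or indirectly references `changed`.
function affectedBy(changed: string, references: SymbolReference[]): Set<string> {
  // Invert the edges so we can walk from a symbol to its referrers.
  const referrers = new Map<string, string[]>();
  for (const { from, to } of references) {
    const list = referrers.get(to) ?? [];
    list.push(from);
    referrers.set(to, list);
  }

  const affected = new Set<string>();
  const queue = [changed];
  while (queue.length > 0) {
    const current = queue.shift()!;
    for (const referrer of referrers.get(current) ?? []) {
      if (!affected.has(referrer)) {
        affected.add(referrer);
        queue.push(referrer);
      }
    }
  }
  return affected;
}
```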

The plan graph is a concept detailed in codeplan by Microsoft. The plan graph lets bumpgen not only fix an issue at one point but also fix the second-order breaking changes caused by the fix itself. In short, it allows bumpgen to propagate a fix to the rest of the codebase.
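The propagation idea can be sketched as a worklist loop. This is a simplification of the adaptive planning described in the codeplan paper, not bumpgen's implementation. `fix` is a hypothetical callback that stands in for an LLM edit. Dependents are enqueued only when a fix changes a node's signature, since that is what causes second-order breakages. `maxSteps` is an assumed safety cap in case fixes keep re-triggering each other.

```typescript
// Hypothetical: applies a fix to one node and reports whether the fix changed
// that node's signature (i.e. whether its dependents might now break).
type FixFn = (node: string) => { signatureChanged: boolean };

// Worklist propagation: start from nodes with build errors, fix them, and
// enqueue their dependents only when a fix changed a signature.
function propagateFixes(
  initial: string[],
  dependentsOf: Map<string, string[]>,
  fix: FixFn,
  maxSteps = 100,
): string[] {
  const fixed: string[] = [];
  const queue = [...initial];
  const queued = new Set(initial);

  while (queue.length > 0 && fixed.length < maxSteps) {
    const node = queue.shift()!;
    queued.delete(node);
    fixed.push(node);
    if (!fix(node).signatureChanged) continue;
    for (const dependent of dependentsOf.get(node) ?? []) {
      if (!queued.has(dependent)) {
        queued.add(dependent);
        queue.push(dependent);
      }
    }
  }
  return fixed;
}
```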

We pass the plan graph, the error, and the actual file with the breaking change as context to the LLM to maximize its ability to fix the issue.
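The following is a hedged sketch of assembling that context into a single prompt string. The `FixContext` fields and the section labels are assumptions for illustration. The README does not document bumpgen's actual prompt format.

```typescript
// Hypothetical container for the three pieces of context named above.
interface FixContext {
  planGraph: string[]; // plan-graph edges rendered as text, e.g. "TodoList -> useTodos"
  error: string; // the build error being fixed
  filePath: string; // file containing the breaking change
  fileContents: string; // the full text of that file
}

// Concatenates the context into one prompt string with labeled sections.
function buildFixPrompt(ctx: FixContext): string {
  return [
    "Plan graph:",
    ...ctx.planGraph.map((edge) => `- ${edge}`),
    "",
    "Build error:",
    ctx.error,
    "",
    `File: ${ctx.filePath}`,
    ctx.fileContents,
  ].join("\n");
}
```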

We only support gpt-4-turbo-preview at this time.

bumpgen + GPT-4 Turbo: 45% (67 tasks)

We benchmarked bumpgen with GPT-4 Turbo against a suite of version bumps with breaking changes. You can check out the evals here.
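Assume the 45% figure is the share of the 67 tasks that were fixed, rounded to the nearest percent. Under that assumption it corresponds to 30 tasks, because 30 is the only count that rounds to 45%:

$$\frac{29}{67} \approx 43.3\%, \qquad \frac{30}{67} \approx 44.8\% \approx 45\%, \qquad \frac{31}{67} \approx 46.3\%$$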

Contributions are welcome! To get set up for development, see Development.

- [x] codeplan

- [x] Typescript/TSX support

- [ ] bumpgen GitHub App

- [ ] Embeddings for different package versions

- [ ] Use test runners as an oracle

- [ ] C# support

- [ ] Java support

- [ ] Go support

Join our Discord community to contribute, learn more, and ask questions!


Alternative AI tools for bumpgen

Similar Open Source Tools


chronicle

Chronicle is a self-hostable AI system that captures audio/video data from OMI devices and other sources to generate memories, action items, and contextual insights about conversations and daily interactions. It includes a mobile app for OMI devices, backend services with AI features, a web dashboard for conversation and memory management, and optional services like speaker recognition and offline ASR. The project aims to provide a system that records personal spoken context and visual context to generate memories, action items, and enable home automation.

GenerateAgents.md

GenerateAgents.md is a tool that automatically generates Agents.md for any GitHub repository by analyzing its codebase using dspy.RLM (Recursive Language Model). It supports Gemini, Anthropic (Claude), and OpenAI models out of the box. The tool provides two output styles: Comprehensive Style for detailed overviews and explanations, and Strict Style focusing on coding constraints and anti-patterns. It follows a pipeline that involves cloning the repository, extracting codebase information, compiling conventions into markdown, and creating the final AGENTS.md file. The tool also supports environment variables for API keys and different models for generation tasks.

detour

Detour is an autonomous collision-avoidance system designed to run on-board satellites using NVIDIA's Nemotron LLM on the ASUS Ascent GX10. It utilizes a multi-agent LangGraph pipeline to detect debris threats, assess risk, plan maneuvers, validate safety constraints, and execute avoidance burns locally with zero ground-station latency. The system consists of key components such as Agent Pipeline, Physics Engine, Satellite Model, Tool Wrappers, API, Frontend, and Ascent GX10 Setup. The tool aims to provide fast and autonomous decision-making capabilities to prevent collisions in Low Earth Orbit (LEO) by leveraging edge AI technology.

visual-reasoning-playground

AI-powered visual reasoning tools for broadcast, live streaming, and ProAV professionals. The Visual Reasoning Playground provides 17 ready-to-use tools demonstrating real-world applications of Vision Language Models (VLMs) using Moondream. From PTZ camera auto-tracking to multimodal audio+video automation, the tools offer functionalities like scene description, object detection, gesture control, smart counting, scene analysis, zone monitoring, color matching, multimodal fusion, smart photography, PTZ tracking, tracking comparison, scoreboard extraction, scoreboard OCR, framing assistance, PTZ color tuning, multimodal studio automation, voice triggers, and OBS plugin integration. The tools are designed to streamline tasks in live streaming, broadcast automation, camera control, content creation workflows, security monitoring, and more.

observers

Observers is a lightweight library for AI observability that provides support for various generative AI APIs and storage backends. It allows users to track interactions with AI models and sync observations to different storage systems. The library supports OpenAI, Hugging Face transformers, AISuite, Litellm, and Docling for document parsing and export. Users can configure different stores such as Hugging Face Datasets, DuckDB, Argilla, and OpenTelemetry to manage and query their observations. Observers is designed to enhance AI model monitoring and observability in a user-friendly manner.

Agentic-ADK

Agentic ADK is an Agent application development framework launched by Alibaba International AI Business, based on Google-ADK and Ali-LangEngine. It is used for developing, constructing, evaluating, and deploying powerful, flexible, and controllable complex AI Agents. ADK aims to make Agent development simpler and more user-friendly, enabling developers to more easily build, deploy, and orchestrate various Agent applications ranging from simple tasks to complex collaborations.

aio-coding-hub

AIO Coding Hub is a local AI CLI unified gateway that allows requests from Claude Code, Codex, and Gemini CLI to go through a single entry point. It solves the pain points of configuring base URLs and API keys for each CLI, provides intelligent failover in case of upstream instability, offers full observability with trace tracking and usage statistics, enables easy switching of providers with a single toggle, and ensures security and privacy through local data storage and encrypted API keys. The tool features a unified gateway proxy supporting multiple CLI tools, intelligent routing and fault tolerance, observability with request tracing and usage statistics, channel validation with multi-dimensional templates, and security and privacy measures like local data storage and encrypted API keys.

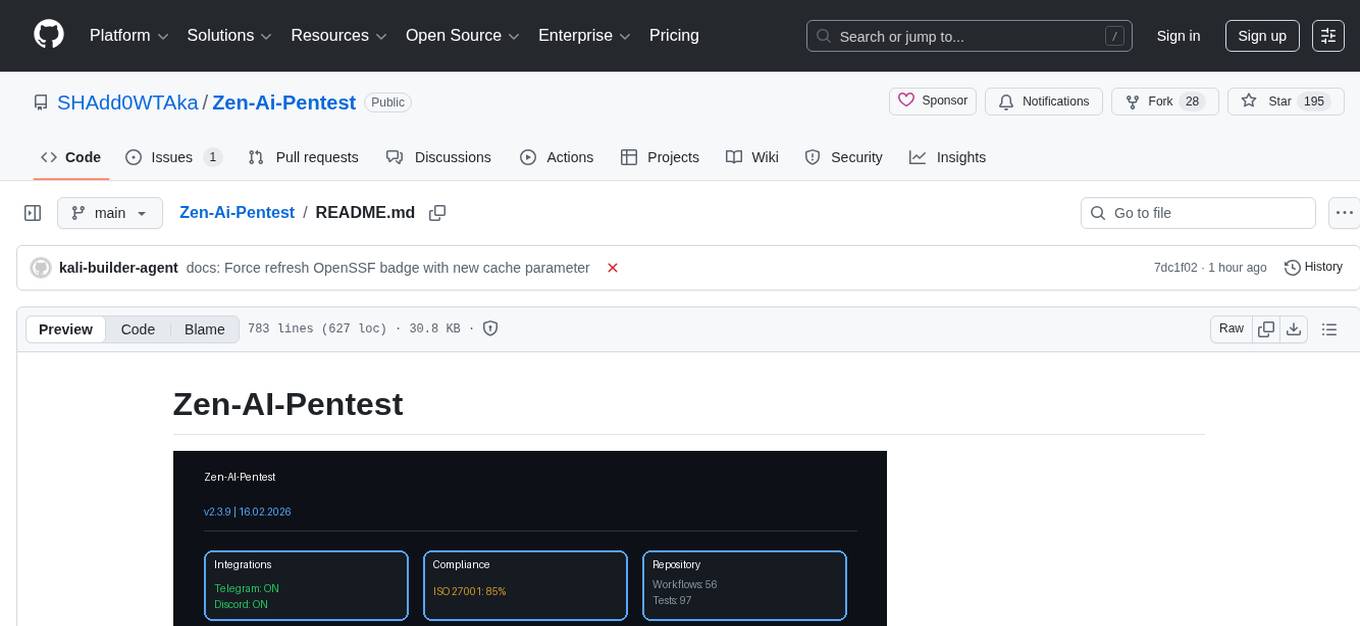

Zen-Ai-Pentest

Zen-AI-Pentest is a professional AI-powered penetration testing framework designed for security professionals, bug bounty hunters, and enterprise security teams. It combines cutting-edge language models with 20+ integrated security tools, offering comprehensive security assessments. The framework is security-first with multiple safety controls, extensible with a plugin system, cloud-native for deployment on AWS, Azure, or GCP, and production-ready with CI/CD, monitoring, and support. It features autonomous AI agents, risk analysis, exploit validation, benchmarking, CI/CD integration, AI persona system, subdomain scanning, and multi-cloud & virtualization support.

claude-emporium

Claude Emporium is a Roman-themed plugin marketplace for Claude Code, offering six plugins that wrap standalone MCP servers with automation hooks, commands, and skills. The plugins include Praetorian for context guard, Historian for session memory, Oracle for tool discovery, Gladiator for continuous learning, Vigil for file recovery, and Orator for prompt rhetoric. Each plugin self-configures on install, and the MCP servers handle the actual work. The plugins coordinate automatically when multiple are installed, enhancing behaviors and synergy. The tool is designed with zero overhead, no external API calls, no background processes, and no databases, making it efficient and lightweight for users.

auto-paper-digest

Auto Paper Digest (APD) is a tool designed to automatically fetch cutting-edge AI research papers, download PDFs, generate video explanations, and publish them on platforms like HuggingFace, Douyin, and portal websites. It provides functionalities such as fetching papers from Hugging Face, downloading PDFs from arXiv, generating videos using NotebookLM, automatic publishing to HuggingFace Dataset, automatic publishing to Douyin, and hosting videos on a Gradio portal website. The tool also supports resuming interrupted tasks, persistent login states for Google and Douyin, and a structured workflow divided into three phases: Upload, Download, and Publish.

lanhu-mcp

Lanhu MCP Server is a powerful Model Context Protocol (MCP) server designed for the AI programming era, perfectly supporting the Lanhu design collaboration platform. It offers features like intelligent requirement analysis, team knowledge base, UI design support, and performance optimization. The server is suitable for Cursor + Lanhu, Windsurf + Lanhu, Claude Code + Lanhu, Trae + Lanhu, and Cline + Lanhu integrations. It aims to break the isolation of AI IDEs and enable all AI assistants to share knowledge and context.

kweaver

KWeaver is an open-source ecosystem for building, deploying, and running decision intelligence AI applications. It adopts ontology as the core methodology for business knowledge networks, with DIP as the core platform, aiming to provide elastic, agile, and reliable enterprise-grade decision intelligence to further unleash productivity. The DIP platform includes key subsystems such as ADP, Decision Agent, DIP Studio, and AI Store.

ai-on-gke

This repository contains assets related to AI/ML workloads on Google Kubernetes Engine (GKE). Run optimized AI/ML workloads with Google Kubernetes Engine (GKE) platform orchestration capabilities. A robust AI/ML platform considers the following layers: infrastructure orchestration that supports GPUs and TPUs for training and serving workloads at scale; flexible integration with distributed computing and data processing frameworks; and support for multiple teams on the same infrastructure to maximize resource utilization.

aiohomematic

AIO Homematic (hahomematic) is a lightweight Python 3 library for controlling and monitoring HomeMatic and HomematicIP devices, with support for third-party devices/gateways. It automatically creates entities for device parameters, offers custom entity classes for complex behavior, and includes features like caching paramsets for faster restarts. Designed to integrate with Home Assistant, it requires specific firmware versions for HomematicIP devices. The public API is defined in modules like central, client, model, exceptions, and const, with example usage provided. Useful links include changelog, data point definitions, troubleshooting, and developer resources for architecture, data flow, model extension, and Home Assistant lifecycle.

osmedeus

Osmedeus is a security-focused declarative orchestration engine that simplifies complex workflow automation into auditable YAML definitions. It provides powerful automation capabilities without compromising infrastructure integrity and safety. With features like declarative YAML workflows, multiple runners, event-driven triggers, template engine, utility functions, REST API server, distributed execution, notifications, cloud storage, AI integration, SAST integration, language detection, and preset installations, Osmedeus offers a comprehensive solution for security automation tasks.


For similar jobs

kaito

Kaito is an operator that automates AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, removes the need to tune deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Kaito greatly simplifies the workflow of onboarding large AI inference models in Kubernetes.


tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

nvidia_gpu_exporter

NVIDIA GPU exporter for Prometheus, using the `nvidia-smi` binary to gather metrics.

tracecat

Tracecat is an open-source automation platform for security teams. It's designed to be simple but powerful, with a focus on AI features and a practitioner-obsessed UI/UX. Tracecat can be used to automate a variety of tasks, including phishing email investigation, evidence collection, and remediation plan generation.

openinference

OpenInference is a set of conventions and plugins that complement OpenTelemetry to enable tracing of AI applications. It provides a way to capture and analyze the performance and behavior of AI models, including their interactions with other components of the application. OpenInference is designed to be language-agnostic and can be used with any OpenTelemetry-compatible backend. It includes a set of instrumentations for popular machine learning SDKs and frameworks, making it easy to add tracing to your AI applications.

BricksLLM

BricksLLM is a cloud-native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI, and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per-user and per-organization basis

- Block or redact requests containing PII

- Improve LLM reliability with failovers, retries, and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

kong

Kong, or Kong API Gateway, is a cloud-native, platform-agnostic, scalable API Gateway distinguished for its high performance and extensibility via plugins. It also provides advanced AI capabilities with multi-LLM support. By providing functionality for proxying, routing, load balancing, health checking, authentication (and more), Kong serves as the central layer for orchestrating microservices or conventional API traffic with ease. Kong runs natively on Kubernetes thanks to its official Kubernetes Ingress Controller.
