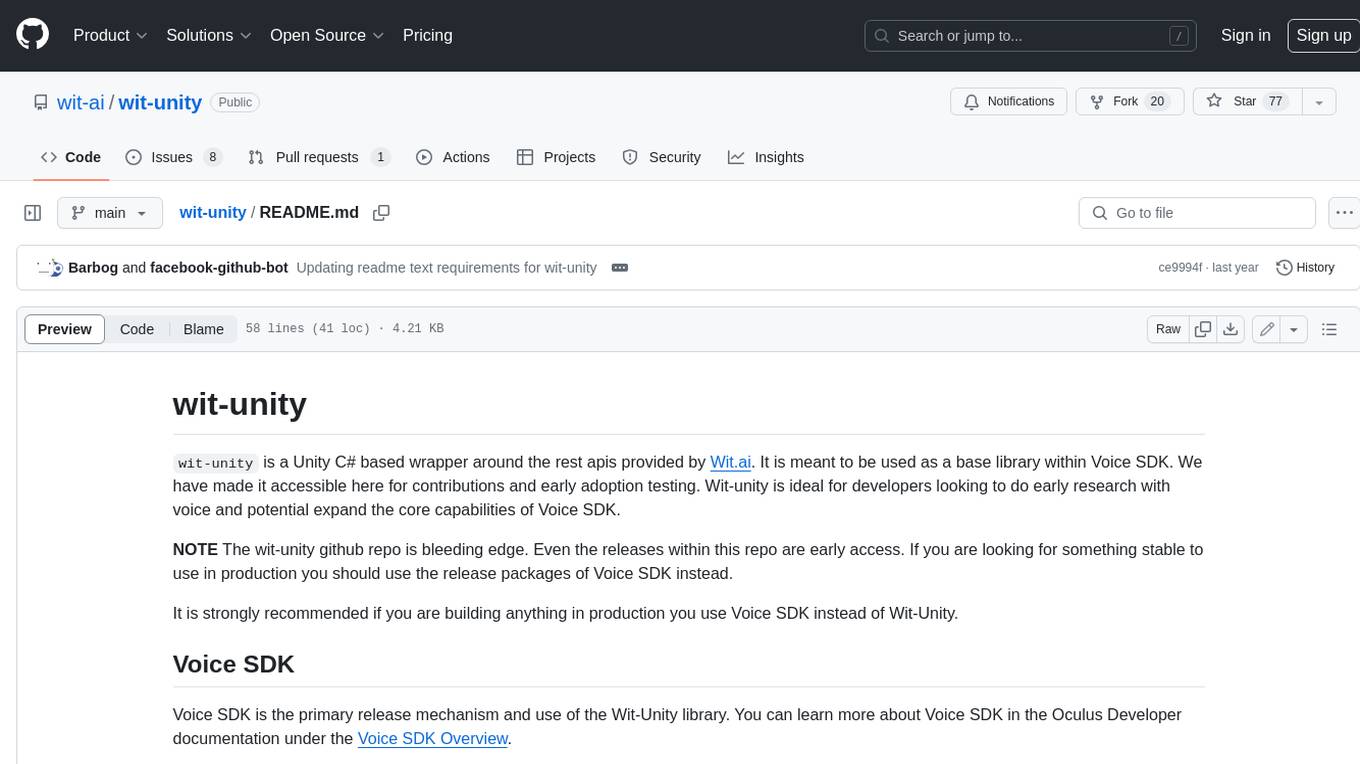

wit-unity

Wit-Unity is a Unity C# wrapper around the the Wit.ai rest APIs and is a core component of Voice SDK.

Stars: 85

Wit-unity is a Unity C# based wrapper around the rest apis provided by Wit.ai. It is meant to be used as a base library within Voice SDK. We have made it accessible here for contributions and early adoption testing. Wit-unity is ideal for developers looking to do early research with voice and potential expand the core capabilities of Voice SDK.

README:

wit-unity is a Unity C# based wrapper around the rest apis provided by Wit.ai. It is meant to be used as a base library within Voice SDK. We have made it accessible here for contributions and early adoption testing. Wit-unity is ideal for developers looking to do early research with voice and potential expand the core capabilities of Voice SDK.

NOTE The wit-unity github repo is bleeding edge. Even the releases within this repo are early access. If you are looking for something stable to use in production you should use the release packages of Voice SDK instead.

It is strongly recommended if you are building anything in production you use Voice SDK instead of Wit-Unity.

Voice SDK is the primary release mechanism and use of the Wit-Unity library. You can learn more about Voice SDK in the Oculus Developer documentation under the Voice SDK Overview.

- Quality One of the core differences between wit-unity and Voice SDK is its QA process. Voice SDK goes through more rigorous testing before releases are published and is considered production ready. Changes published to wit-unity are engineer tested and then vetted by QA after checkin.

- Completion If you are working off of the main-line wit-unity branch you may clone work in progress features.

- Platform Integration Voice SDK offers extended platform specific implementations. Wit-Unity's purpose is to wrap the Wit.ai APIs. It does not deal with any platform specific optimizations. Voice SDK however provides platform integrations and additional optimizations for devices like the Oculus Quest.

- Documentation The documentation around wit-unity is somewhat limited. You will find more detailed documentation and tutorials on Voice SDK as a whole in the Voice SDK documentation.

- Download the repo. You can get it on the Unity Asset Store as part of the Oculus Integration asset. Or you can download just the Voice SDK through the Oculus developer website.

- Once you have imported you can find the initial setup in the Oculus menu under Oculus/Voice SDK/Settings button.

There are a couple ways you can install this plugin in Unity.

- You can download the repo and drop it in your Unity project's Assets directory

- You can add the sdk via Unity's Package Manager.

- Open your unity project

- Open the Package Manager by clicking on Window->Package Manager

- Click the + dropdown in the upper left corner of the package manager window

- Select "Add package from git url"

- In the url box enter "https://github.com/wit-ai/wit-unity.git"

Once you have installed the Wit plugin you will need to add your project's server token to your project.

- Open the Wit configuration window by clicking on Window->Wit->WitConfiguration in the menu bar.

- Go to the Wit.ai website manually or by clicking on "Continue With Facebook"

- Find your project and go to the project settings page

- Copy the Server Token and past it in the box in the Wit Configuration window

Samples using wit-unity can be found in the Samples directory or in the package manager's samples section. You will need to provide your own WitConfiguration for the sample scenes.

The license for wit-unity can be found in LICENSE file in the root directory of this source tree.

Our terms of use can be found at https://opensource.fb.com/legal/terms.

Our privacy policy can be found at https://opensource.fb.com/legal/privacy.

Copyright © Meta Platforms, Inc

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for wit-unity

Similar Open Source Tools

wit-unity

Wit-unity is a Unity C# based wrapper around the rest apis provided by Wit.ai. It is meant to be used as a base library within Voice SDK. We have made it accessible here for contributions and early adoption testing. Wit-unity is ideal for developers looking to do early research with voice and potential expand the core capabilities of Voice SDK.

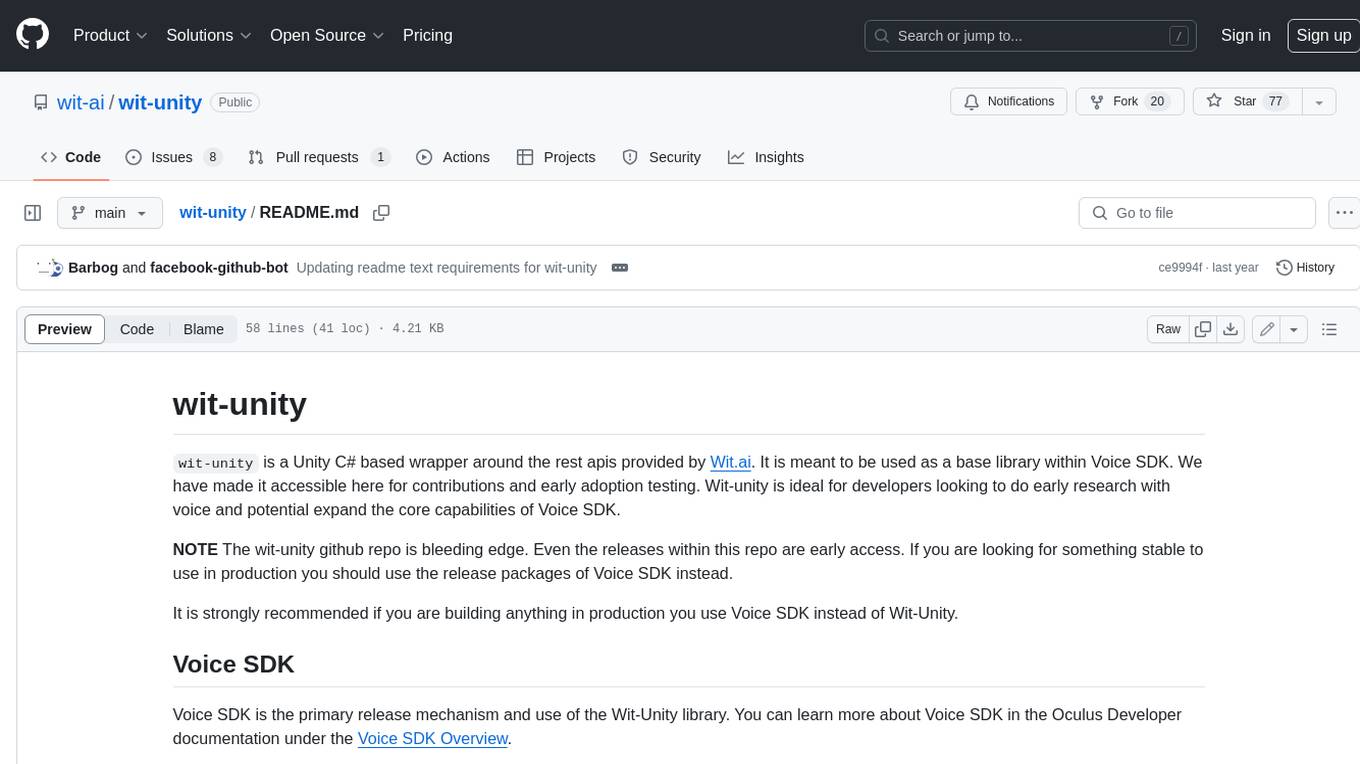

GlaDOS

This project aims to create a real-life version of GLaDOS, an aware, interactive, and embodied AI entity. It involves training a voice generator, developing a 'Personality Core,' implementing a memory system, providing vision capabilities, creating 3D-printable parts, and designing an animatronics system. The software architecture focuses on low-latency voice interactions, utilizing a circular buffer for data recording, text streaming for quick transcription, and a text-to-speech system. The project also emphasizes minimal dependencies for running on constrained hardware. The hardware system includes servo- and stepper-motors, 3D-printable parts for GLaDOS's body, animations for expression, and a vision system for tracking and interaction. Installation instructions cover setting up the TTS engine, required Python packages, compiling llama.cpp, installing an inference backend, and voice recognition setup. GLaDOS can be run using 'python glados.py' and tested using 'demo.ipynb'.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

GLaDOS

GLaDOS Personality Core is a project dedicated to building a real-life version of GLaDOS, an aware, interactive, and embodied AI system. The project aims to train GLaDOS voice generator, create a 'Personality Core,' develop medium- and long-term memory, provide vision capabilities, design 3D-printable parts, and build an animatronics system. The software architecture focuses on low-latency voice interactions and minimal dependencies. The hardware system includes servo- and stepper-motors, 3D printable parts for GLaDOS's body, animations for expression, and a vision system for tracking and interaction. Installation instructions involve setting up a local LLM server, installing drivers, and running GLaDOS on different operating systems.

AppAgent

AppAgent is a novel LLM-based multimodal agent framework designed to operate smartphone applications. Our framework enables the agent to operate smartphone applications through a simplified action space, mimicking human-like interactions such as tapping and swiping. This novel approach bypasses the need for system back-end access, thereby broadening its applicability across diverse apps. Central to our agent's functionality is its innovative learning method. The agent learns to navigate and use new apps either through autonomous exploration or by observing human demonstrations. This process generates a knowledge base that the agent refers to for executing complex tasks across different applications.

AgentStack

AgentStack is a command-line tool that helps users create AI agent projects quickly and efficiently. It offers CLI utilities for code generation and simplifies the process of building agents and tasks. The tool is designed to work on macOS, Windows, and Linux, providing a seamless experience for developers. AgentStack aims to streamline the development process by offering pre-built templates, easy access to tools, and a curated experience on top of popular agent frameworks and LLM providers. It is not a low-code solution but rather a head-start for starting agent projects from scratch.

FlowTest

FlowTestAI is the world’s first GenAI powered OpenSource Integrated Development Environment (IDE) designed for crafting, visualizing, and managing API-first workflows. It operates as a desktop app, interacting with the local file system, ensuring privacy and enabling collaboration via version control systems. The platform offers platform-specific binaries for macOS, with versions for Windows and Linux in development. It also features a CLI for running API workflows from the command line interface, facilitating automation and CI/CD processes.

WilmerAI

WilmerAI is a middleware system designed to process prompts before sending them to Large Language Models (LLMs). It categorizes prompts, routes them to appropriate workflows, and generates manageable prompts for local models. It acts as an intermediary between the user interface and LLM APIs, supporting multiple backend LLMs simultaneously. WilmerAI provides API endpoints compatible with OpenAI API, supports prompt templates, and offers flexible connections to various LLM APIs. The project is under heavy development and may contain bugs or incomplete code.

gpdb

Greenplum Database (GPDB) is an advanced, fully featured, open source data warehouse, based on PostgreSQL. It provides powerful and rapid analytics on petabyte scale data volumes. Uniquely geared toward big data analytics, Greenplum Database is powered by the world’s most advanced cost-based query optimizer delivering high analytical query performance on large data volumes.

fuji-web

Fuji-Web is an intelligent AI partner designed for full browser automation. It autonomously navigates websites and performs tasks on behalf of the user while providing explanations for each action step. Users can easily install the extension in their browser, access the Fuji icon to input tasks, and interact with the tool to streamline web browsing tasks. The tool aims to enhance user productivity by automating repetitive web actions and providing a seamless browsing experience.

trackmania_rl_public

This repository contains the reinforcement learning training code for Trackmania AI with Reinforcement Learning. It is a research work-in-progress project that aims to apply reinforcement learning principles to play Trackmania. The code is constantly evolving and may not be clean or easily usable. The training hyperparameters are intentionally changed in the public repository to encourage understanding of reinforcement learning principles. The project may not receive active support for setup or usage at the moment.

godot_rl_agents

Godot RL Agents is an open-source package that facilitates the integration of Machine Learning algorithms with games created in the Godot Engine. It provides interfaces for popular RL frameworks, support for memory-based agents, 2D and 3D games, AI sensors, and is licensed under MIT. Users can train agents in the Godot editor, create custom environments, export trained agents in ONNX format, and utilize advanced features like different RL training frameworks.

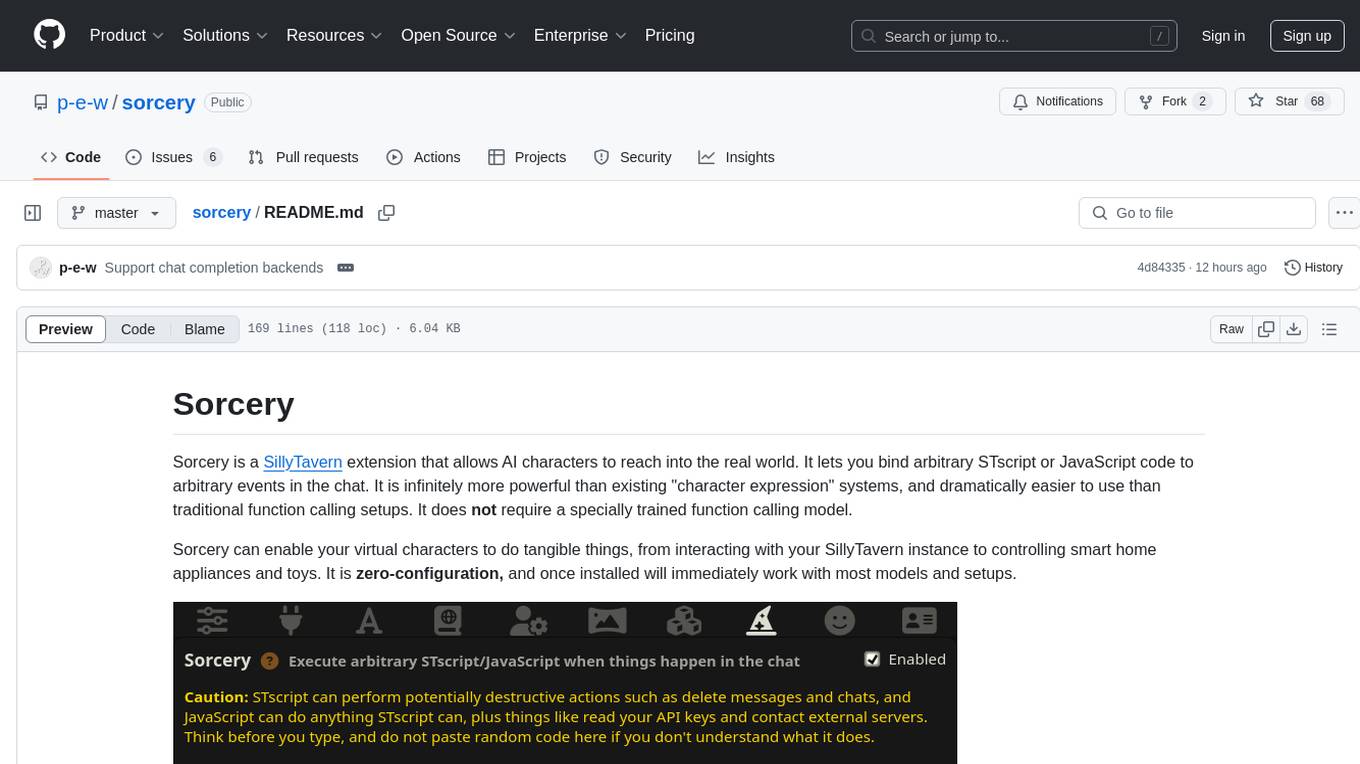

sorcery

Sorcery is a SillyTavern extension that allows AI characters to interact with the real world by executing user-defined scripts at specific events in the chat. It is easy to use and does not require a specially trained function calling model. Sorcery can be used to control smart home appliances, interact with virtual characters, and perform various tasks in the chat environment. It works by injecting instructions into the system prompt and intercepting markers to run associated scripts, providing a seamless user experience.

raggenie

RAGGENIE is a low-code RAG builder tool designed to simplify the creation of conversational AI applications. It offers out-of-the-box plugins for connecting to various data sources and building conversational AI on top of them, including integration with pre-built agents for actions. The tool is open-source under the MIT license, with a current focus on making it easy to build RAG applications and future plans for maintenance, monitoring, and transitioning applications from pilots to production.

lumigator

Lumigator is an open-source platform developed by Mozilla.ai to help users select the most suitable language model for their specific needs. It supports the evaluation of summarization tasks using sequence-to-sequence models such as BART and BERT, as well as causal models like GPT and Mistral. The platform aims to make model selection transparent, efficient, and empowering by providing a framework for comparing LLMs using task-specific metrics to evaluate how well a model fits a project's needs. Lumigator is in the early stages of development and plans to expand support to additional machine learning tasks and use cases in the future.

promptbuddy

Prompt Buddy is a Microsoft Teams app that provides a central location for teams to share and discover their favorite AI prompts. It comes preloaded with Microsoft Copilot and other categories, but users can also add their own custom prompts. The app is easy to use and allows users to upvote their favorite prompts, which raises them to the top of the leaderboard. Prompt Buddy also supports dark mode and offers a mobile layout for use on phones. It is built on the Power Platform and can be customized and extended by the installer.

For similar tasks

wit-unity

Wit-unity is a Unity C# based wrapper around the rest apis provided by Wit.ai. It is meant to be used as a base library within Voice SDK. We have made it accessible here for contributions and early adoption testing. Wit-unity is ideal for developers looking to do early research with voice and potential expand the core capabilities of Voice SDK.

For similar jobs

wit-unity

Wit-unity is a Unity C# based wrapper around the rest apis provided by Wit.ai. It is meant to be used as a base library within Voice SDK. We have made it accessible here for contributions and early adoption testing. Wit-unity is ideal for developers looking to do early research with voice and potential expand the core capabilities of Voice SDK.

tts-generation-webui

TTS Generation WebUI is a comprehensive tool that provides a user-friendly interface for text-to-speech and voice cloning tasks. It integrates various AI models such as Bark, MusicGen, AudioGen, Tortoise, RVC, Vocos, Demucs, SeamlessM4T, and MAGNeT. The tool offers one-click installers, Google Colab demo, videos for guidance, and extra voices for Bark. Users can generate audio outputs, manage models, caches, and system space for AI projects. The project is open-source and emphasizes ethical and responsible use of AI technology.

aiavatarkit

AIAvatarKit is a tool for building AI-based conversational avatars quickly. It supports various platforms like VRChat and cluster, along with real-world devices. The tool is extensible, allowing unlimited capabilities based on user needs. It requires VOICEVOX API, Google or Azure Speech Services API keys, and Python 3.10. Users can start conversations out of the box and enjoy seamless interactions with the avatars.

vocode-python

Vocode is an open source library that enables users to easily build voice-based LLM (Large Language Model) apps. With Vocode, users can create real-time streaming conversations with LLMs and deploy them for phone calls, Zoom meetings, and more. The library offers abstractions and integrations for transcription services, LLMs, and synthesis services, making it a comprehensive tool for voice-based applications.

call-gpt

Call GPT is a voice application that utilizes Deepgram for Speech to Text, elevenlabs for Text to Speech, and OpenAI for GPT prompt completion. It allows users to chat with ChatGPT on the phone, providing better transcription, understanding, and speaking capabilities than traditional IVR systems. The app returns responses with low latency, allows user interruptions, maintains chat history, and enables GPT to call external tools. It coordinates data flow between Deepgram, OpenAI, ElevenLabs, and Twilio Media Streams, enhancing voice interactions.

vocode-core

Vocode is an open source library that enables users to build voice-based LLM (Large Language Model) applications quickly and easily. With Vocode, users can create real-time streaming conversations with LLMs and deploy them for phone calls, Zoom meetings, and more. The library offers abstractions and integrations for transcription services, LLMs, and synthesis services, making it a comprehensive tool for voice-based app development. Vocode also provides out-of-the-box integrations with various services like AssemblyAI, OpenAI, Microsoft Azure, and more, allowing users to leverage these services seamlessly in their applications.

voicechat2

Voicechat2 is a fast, fully local AI voice chat tool that uses WebSockets for communication. It includes a WebSocket server for remote access, default web UI with VAD and Opus support, and modular/swappable SRT, LLM, TTS servers. Users can customize components like SRT, LLM, and TTS servers, and run different models for voice-to-voice communication. The tool aims to reduce latency in voice communication and provides flexibility in server configurations.

ElevenLabs-DotNet

ElevenLabs-DotNet is a non-official Eleven Labs voice synthesis RESTful client that allows users to convert text to speech. The library targets .NET 8.0 and above, working across various platforms like console apps, winforms, wpf, and asp.net, and across Windows, Linux, and Mac. Users can authenticate using API keys directly, from a configuration file, or system environment variables. The tool provides functionalities for text to speech conversion, streaming text to speech, accessing voices, dubbing audio or video files, generating sound effects, managing history of synthesized audio clips, and accessing user information and subscription status.