airsonic-refix

Modern web UI for Subsonic compatible servers

Stars: 317

Airsonic (refix) UI is a modern responsive web frontend designed for airsonic-advanced, navidrome, gonic, and other subsonic compatible music servers. It offers features such as responsive UI for desktop and mobile, browsing library for albums, artists, genres, playback with persistent queue, repeat & shuffle, MediaSession integration, playlist management with drag and drop, search, favorites, internet radio, and podcasts.

README:

Modern responsive web frontend for airsonic-advanced, navidrome, gonic and other subsonic compatible music servers.

- Responsive UI for desktop and mobile

- Browse library for albums, artist, generes

- Playback with persistent queue, repeat & shuffle

- MediaSession integration

- View, create, and edit playlists with drag and drop

- Built-in 'random' playlist

- Search

- Favourites

- Internet radio

- Podcasts

Enter the URL and credentials for your subsonic compatible server, or use one of the following public demo servers:

Subsonic

Server: https://airsonic.netlify.app/api

Username: guest4, guest5, guest6 etc.

Password:guest

Navidrome

Server: https://demo.navidrome.org

Username: demo

Password:demo

Note: if the server is using http only you must allow mixed content in your browser otherwise login will not work.

$ docker run -d -p 8080:80 tamland/airsonic-refix:latest

You can now access the application at http://localhost:8080/

Environment variables:

-

SERVER_URL(Optional): The backend server URL. When set the server input on the login page will not be displayed.

Pre-built bundles can be found in the Actions tab. Download/extract artifact and serve with any web server such as nginx or apache.

$ yarn install

$ yarn build

Bundle can be found in the dist folder.

Build docker image:

$ docker build -f docker/Dockerfile .

$ yarn install

$ yarn serve

- HTTP form POST extension

- Multiple artists/genres

Licensed under the AGPLv3 license.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for airsonic-refix

Similar Open Source Tools

airsonic-refix

Airsonic (refix) UI is a modern responsive web frontend designed for airsonic-advanced, navidrome, gonic, and other subsonic compatible music servers. It offers features such as responsive UI for desktop and mobile, browsing library for albums, artists, genres, playback with persistent queue, repeat & shuffle, MediaSession integration, playlist management with drag and drop, search, favorites, internet radio, and podcasts.

CrewAI-Studio

CrewAI Studio is an application with a user-friendly interface for interacting with CrewAI, offering support for multiple platforms and various backend providers. It allows users to run crews in the background, export single-page apps, and use custom tools for APIs and file writing. The roadmap includes features like better import/export, human input, chat functionality, automatic crew creation, and multiuser environment support.

BodhiApp

Bodhi App runs Open Source Large Language Models locally, exposing LLM inference capabilities as OpenAI API compatible REST APIs. It leverages llama.cpp for GGUF format models and huggingface.co ecosystem for model downloads. Users can run fine-tuned models for chat completions, create custom aliases, and convert Huggingface models to GGUF format. The CLI offers commands for environment configuration, model management, pulling files, serving API, and more.

browser-use

Browser Use is a tool designed to make websites accessible for AI agents. It provides an easy way to connect AI agents with the browser, enabling users to perform tasks such as extracting vision and HTML elements, managing multiple tabs, and executing custom actions. The tool supports various language models and allows users to parallelize multiple agents for efficient processing. With features like self-correction and the ability to register custom actions, Browser Use offers a versatile solution for interacting with web content using AI technology.

VibeSurf

VibeSurf is an open-source AI agentic browser that combines workflow automation with intelligent AI agents, offering faster, cheaper, and smarter browser automation. It allows users to create revolutionary browser workflows, run multiple AI agents in parallel, perform intelligent AI automation tasks, maintain privacy with local LLM support, and seamlessly integrate as a Chrome extension. Users can save on token costs, achieve efficiency gains, and enjoy deterministic workflows for consistent and accurate results. VibeSurf also provides a Docker image for easy deployment and offers pre-built workflow templates for common tasks.

next-money

Next Money Stripe Starter is a SaaS Starter project that empowers your next project with a stack of Next.js, Prisma, Supabase, Clerk Auth, Resend, React Email, Shadcn/ui, and Stripe. It seamlessly integrates these technologies to accelerate your development and SaaS journey. The project includes frameworks, platforms, UI components, hooks and utilities, code quality tools, and miscellaneous features to enhance the development experience. Created by @koyaguo in 2023 and released under the MIT license.

sim

Sim is a platform that allows users to build and deploy AI agent workflows quickly and easily. It provides cloud-hosted and self-hosted options, along with support for local AI models. Users can set up the application using Docker Compose, Dev Containers, or manual setup with PostgreSQL and pgvector extension. The platform utilizes technologies like Next.js, Bun, PostgreSQL with Drizzle ORM, Better Auth for authentication, Shadcn and Tailwind CSS for UI, Zustand for state management, ReactFlow for flow editor, Fumadocs for documentation, Turborepo for monorepo management, Socket.io for real-time communication, and Trigger.dev for background jobs.

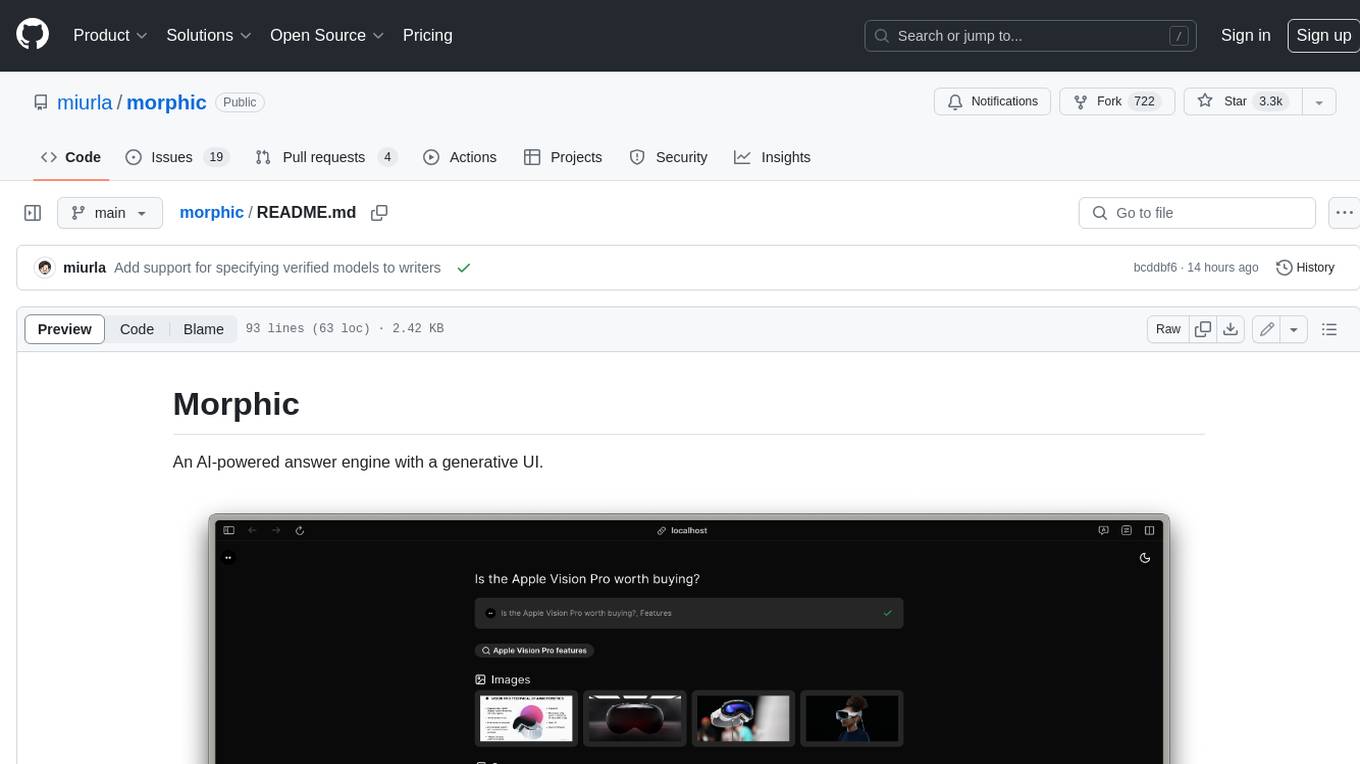

morphic

Morphic is an AI-powered answer engine with a generative UI. It utilizes a stack of Next.js, Vercel AI SDK, OpenAI, Tavily AI, shadcn/ui, Radix UI, and Tailwind CSS. To get started, fork and clone the repo, install dependencies, fill out secrets in the .env.local file, and run the app locally using 'bun dev'. You can also deploy your own live version of Morphic with Vercel. Verified models that can be specified to writers include Groq, LLaMA3 8b, and LLaMA3 70b.

better-chatbot

Better Chatbot is an open-source AI chatbot designed for individuals and teams, inspired by various AI models. It integrates major LLMs, offers powerful tools like MCP protocol and data visualization, supports automation with custom agents and visual workflows, enables collaboration by sharing configurations, provides a voice assistant feature, and ensures an intuitive user experience. The platform is built with Vercel AI SDK and Next.js, combining leading AI services into one platform for enhanced chatbot capabilities.

aiaio

aiaio (AI-AI-O) is a lightweight, privacy-focused web UI for interacting with AI models. It supports both local and remote LLM deployments through OpenAI-compatible APIs. The tool provides features such as dark/light mode support, local SQLite database for conversation storage, file upload and processing, configurable model parameters through UI, privacy-focused design, responsive design for mobile/desktop, syntax highlighting for code blocks, real-time conversation updates, automatic conversation summarization, customizable system prompts, WebSocket support for real-time updates, Docker support for deployment, multiple API endpoint support, and multiple system prompt support. Users can configure model parameters and API settings through the UI, handle file uploads, manage conversations, and use keyboard shortcuts for efficient interaction. The tool uses SQLite for storage with tables for conversations, messages, attachments, and settings. Contributions to the project are welcome under the Apache License 2.0.

farfalle

Farfalle is an open-source AI-powered search engine that allows users to run their own local LLM or utilize the cloud. It provides a tech stack including Next.js for frontend, FastAPI for backend, Tavily for search API, Logfire for logging, and Redis for rate limiting. Users can get started by setting up prerequisites like Docker and Ollama, and obtaining API keys for Tavily, OpenAI, and Groq. The tool supports models like llama3, mistral, and gemma. Users can clone the repository, set environment variables, run containers using Docker Compose, and deploy the backend and frontend using services like Render and Vercel.

chunkr

Chunkr is an open-source document intelligence API that provides a production-ready service for document layout analysis, OCR, and semantic chunking. It allows users to convert PDFs, PPTs, Word docs, and images into RAG/LLM-ready chunks. The API offers features such as layout analysis, OCR with bounding boxes, structured HTML and markdown output, and VLM processing controls. Users can interact with Chunkr through a Python SDK, enabling them to upload documents, process them, and export results in various formats. The tool also supports self-hosted deployment options using Docker Compose or Kubernetes, with configurations for different AI models like OpenAI, Google AI Studio, and OpenRouter. Chunkr is dual-licensed under the GNU Affero General Public License v3.0 (AGPL-3.0) and a commercial license, providing flexibility for different usage scenarios.

comfyui-web-viewer

The ComfyUI Web Viewer by vrch.ai is a real-time AI-generated interactive art framework that integrates realtime streaming into ComfyUI workflows. It supports keyboard control nodes, OSC control nodes, sound input nodes, and more, accessible from any device with a web browser. It enables real-time interaction with AI-generated content, ideal for interactive visual projects and enhancing ComfyUI workflows with efficient content management and display.

minimal-chat

MinimalChat is a minimal and lightweight open-source chat application with full mobile PWA support that allows users to interact with various language models, including GPT-4 Omni, Claude Opus, and various Local/Custom Model Endpoints. It focuses on simplicity in setup and usage while being fully featured and highly responsive. The application supports features like fully voiced conversational interactions, multiple language models, markdown support, code syntax highlighting, DALL-E 3 integration, conversation importing/exporting, and responsive layout for mobile use.

agent-sessions

Unified session browser for Codex CLI, Claude Code, Gemini CLI, GitHub Copilot CLI, Droid (Factory CLI), and OpenCode. Search, browse, and resume your past AI-coding sessions in a local-first macOS app. Agent Sessions helps you search across large session histories, quickly find the right prompt/tool output, then reuse it by copying snippets or resuming supported sessions in your terminal. Core features include unified browsing across supported agents, unified search across all sessions, readable tool calls/outputs, and local-only indexing designed for large histories.

swift-chat

SwiftChat is a fast and responsive AI chat application developed with React Native and powered by Amazon Bedrock. It offers real-time streaming conversations, AI image generation, multimodal support, conversation history management, and cross-platform compatibility across Android, iOS, and macOS. The app supports multiple AI models like Amazon Bedrock, Ollama, DeepSeek, and OpenAI, and features a customizable system prompt assistant. With a minimalist design philosophy and robust privacy protection, SwiftChat delivers a seamless chat experience with various features like rich Markdown support, comprehensive multimodal analysis, creative image suite, and quick access tools. The app prioritizes speed in launch, request, render, and storage, ensuring a fast and efficient user experience. SwiftChat also emphasizes app privacy and security by encrypting API key storage, minimal permission requirements, local-only data storage, and a privacy-first approach.

For similar tasks

airsonic-refix

Airsonic (refix) UI is a modern responsive web frontend designed for airsonic-advanced, navidrome, gonic, and other subsonic compatible music servers. It offers features such as responsive UI for desktop and mobile, browsing library for albums, artists, genres, playback with persistent queue, repeat & shuffle, MediaSession integration, playlist management with drag and drop, search, favorites, internet radio, and podcasts.

aiode

aiode is a Discord bot that plays Spotify tracks and YouTube videos or any URL including Soundcloud links and Twitch streams. It allows users to create cross-platform playlists, customize player commands, create custom command presets, adjust properties for deeper customization, sign in to Spotify to play personal playlists, manage access permissions for commands, customize bot summoning methods, and execute advanced admin commands. The bot also features a scripting sandbox for running and storing custom groovy scripts and modifying command behavior through interceptors.

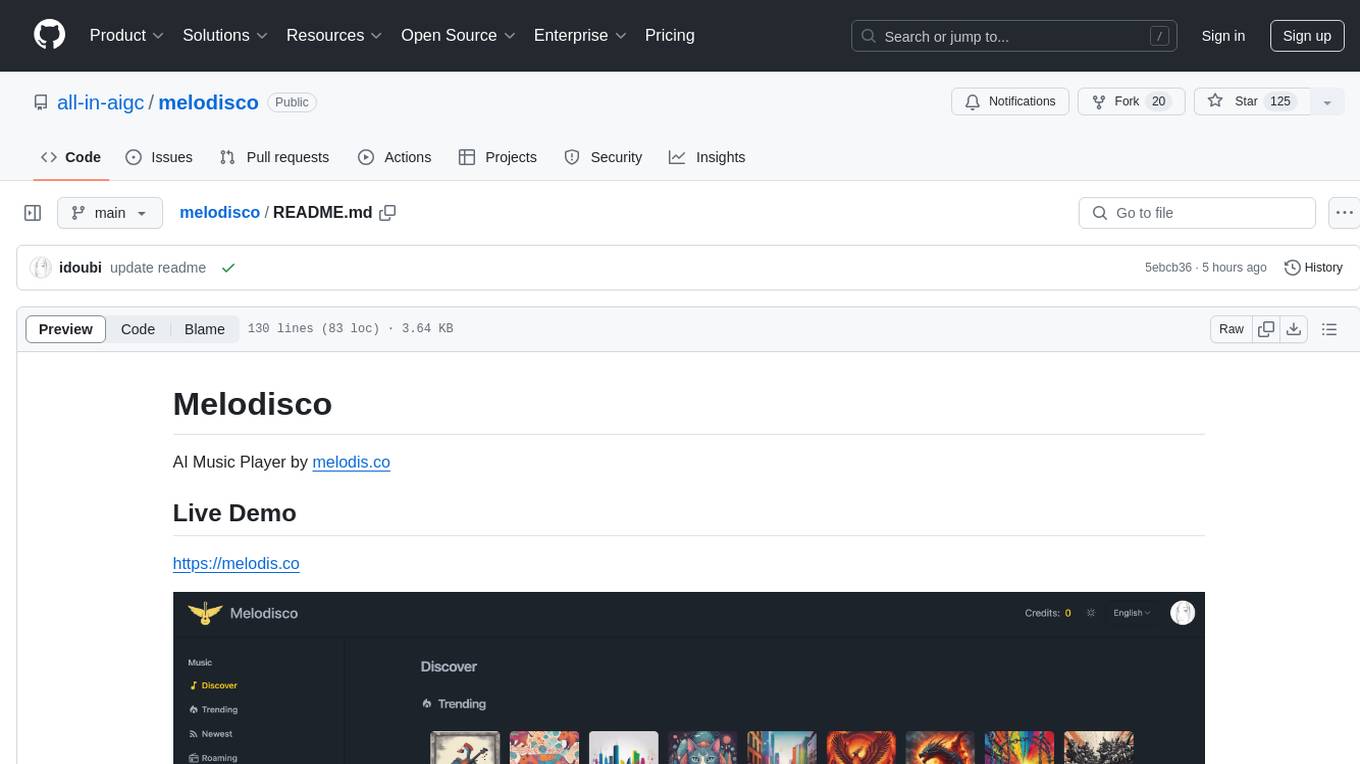

melodisco

Melodisco is an AI music player that allows users to listen to music and manage playlists. It provides a user-friendly interface for music playback and organization. Users can deploy Melodisco with Vercel or Docker for easy setup. Local development instructions are provided for setting up the project environment. The project credits various tools and libraries used in its development, such as Next.js, Tailwind CSS, and Stripe. Melodisco is a versatile tool for music enthusiasts looking for an AI-powered music player with features like authentication, payment integration, and multi-language support.

HMusic

HMusic is an intelligent music player that supports Xiaomi AI speakers. It offers dual modes: xiaomusic server mode and Xiaomi IoT direct connection mode. Users can choose between these modes based on their preferences and needs. The direct connection mode is suitable for regular users who want a plug-and-play experience, while the xiaomusic mode is ideal for users with NAS/servers who require advanced features like a local music library, playlists, and progress control. The tool provides functionalities such as music search, playback, and volume control, catering to a wide range of music enthusiasts.

home-llm

Home LLM is a project that provides the necessary components to control your Home Assistant installation with a completely local Large Language Model acting as a personal assistant. The goal is to provide a drop-in solution to be used as a "conversation agent" component by Home Assistant. The 2 main pieces of this solution are Home LLM and Llama Conversation. Home LLM is a fine-tuning of the Phi model series from Microsoft and the StableLM model series from StabilityAI. The model is able to control devices in the user's house as well as perform basic question and answering. The fine-tuning dataset is a custom synthetic dataset designed to teach the model function calling based on the device information in the context. Llama Conversation is a custom component that exposes the locally running LLM as a "conversation agent" in Home Assistant. This component can be interacted with in a few ways: using a chat interface, integrating with Speech-to-Text and Text-to-Speech addons, or running the oobabooga/text-generation-webui project to provide access to the LLM via an API interface.

Linguflex

Linguflex is a project that aims to simulate engaging, authentic, human-like interaction with AI personalities. It offers voice-based conversation with custom characters, alongside an array of practical features such as controlling smart home devices, playing music, searching the internet, fetching emails, displaying current weather information and news, assisting in scheduling, and searching or generating images.

M.I.L.E.S

M.I.L.E.S. (Machine Intelligent Language Enabled System) is a voice assistant powered by GPT-4 Turbo, offering a range of capabilities beyond existing assistants. With its advanced language understanding, M.I.L.E.S. provides accurate and efficient responses to user queries. It seamlessly integrates with smart home devices, Spotify, and offers real-time weather information. Additionally, M.I.L.E.S. possesses persistent memory, a built-in calculator, and multi-tasking abilities. Its realistic voice, accurate wake word detection, and internet browsing capabilities enhance the user experience. M.I.L.E.S. prioritizes user privacy by processing data locally, encrypting sensitive information, and adhering to strict data retention policies.

leon

Leon is an open-source personal assistant who can live on your server. He does stuff when you ask him to. You can talk to him and he can talk to you. You can also text him and he can also text you. If you want to, Leon can communicate with you by being offline to protect your privacy.

For similar jobs

Protofy

Protofy is a full-stack, batteries-included low-code enabled web/app and IoT system with an API system and real-time messaging. It is based on Protofy (protoflow + visualui + protolib + protodevices) + Expo + Next.js + Tamagui + Solito + Express + Aedes + Redbird + Many other amazing packages. Protofy can be used to fast prototype Apps, webs, IoT systems, automations, or APIs. It is a ultra-extensible CMS with supercharged capabilities, mobile support, and IoT support (esp32 thanks to esphome).

react-native-vision-camera

VisionCamera is a powerful, high-performance Camera library for React Native. It features Photo and Video capture, QR/Barcode scanner, Customizable devices and multi-cameras ("fish-eye" zoom), Customizable resolutions and aspect-ratios (4k/8k images), Customizable FPS (30..240 FPS), Frame Processors (JS worklets to run facial recognition, AI object detection, realtime video chats, ...), Smooth zooming (Reanimated), Fast pause and resume, HDR & Night modes, Custom C++/GPU accelerated video pipeline (OpenGL).

dev-conf-replay

This repository contains information about various IT seminars and developer conferences in South Korea, allowing users to watch replays of past events. It covers a wide range of topics such as AI, big data, cloud, infrastructure, devops, blockchain, mobility, games, security, mobile development, frontend, programming languages, open source, education, and community events. Users can explore upcoming and past events, view related YouTube channels, and access additional resources like free programming ebooks and data structures and algorithms tutorials.

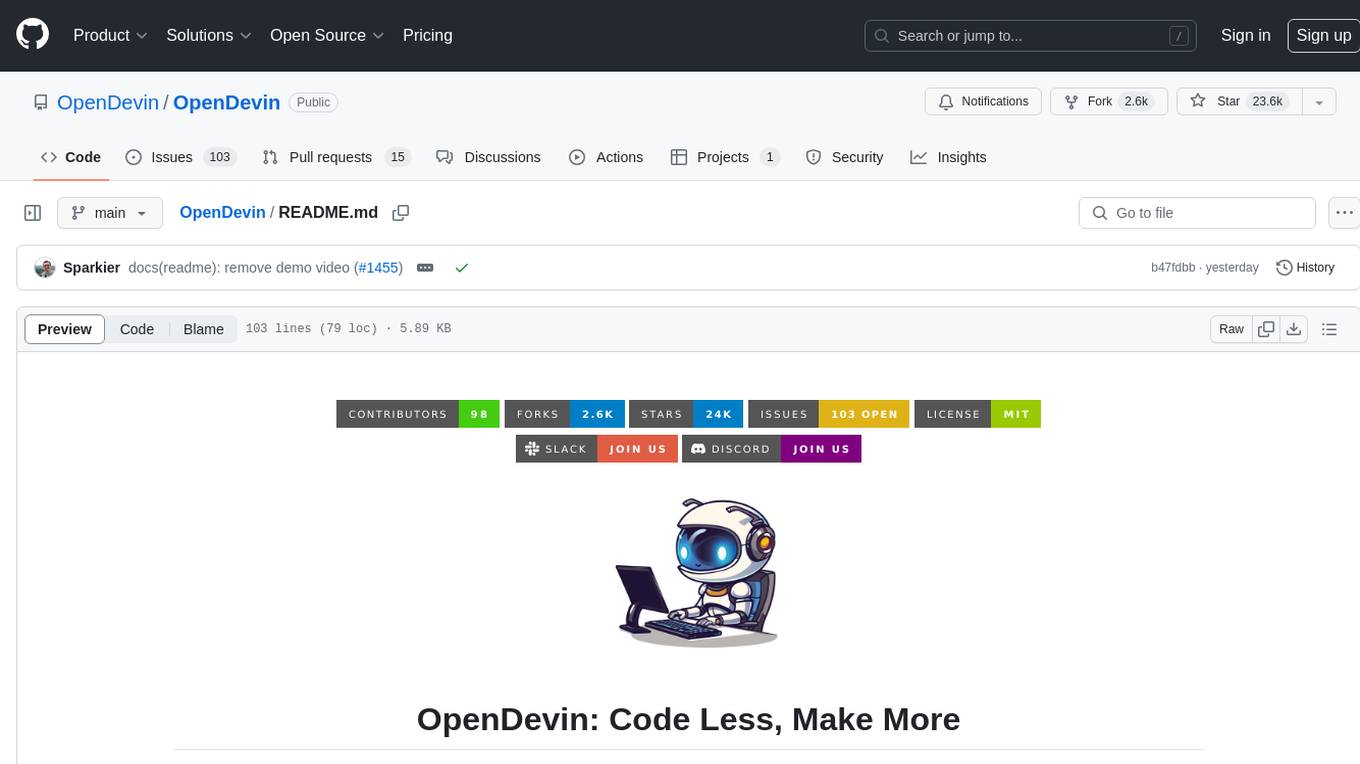

OpenDevin

OpenDevin is an open-source project aiming to replicate Devin, an autonomous AI software engineer capable of executing complex engineering tasks and collaborating actively with users on software development projects. The project aspires to enhance and innovate upon Devin through the power of the open-source community. Users can contribute to the project by developing core functionalities, frontend interface, or sandboxing solutions, participating in research and evaluation of LLMs in software engineering, and providing feedback and testing on the OpenDevin toolset.

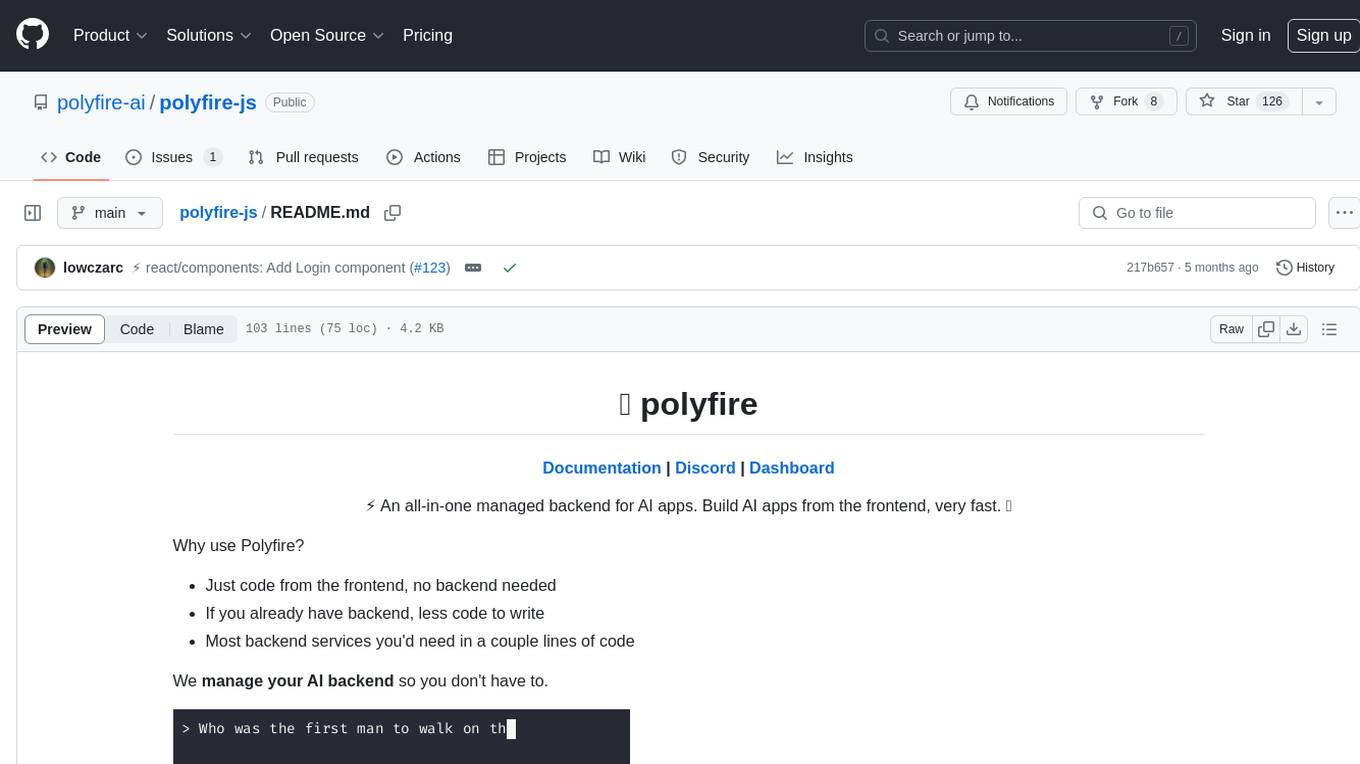

polyfire-js

Polyfire is an all-in-one managed backend for AI apps that allows users to build AI applications directly from the frontend, eliminating the need for a separate backend. It simplifies the process by providing most backend services in just a few lines of code. With Polyfire, users can easily create chatbots, transcribe audio files, generate simple text, manage long-term memory, and generate images. The tool also offers starter guides and tutorials to help users get started quickly and efficiently.

sdfx

SDFX is the ultimate no-code platform for building and sharing AI apps with beautiful UI. It enables the creation of user-friendly interfaces for complex workflows by combining Comfy workflow with a UI. The tool is designed to merge the benefits of form-based UI and graph-node based UI, allowing users to create intricate graphs with a high-level UI overlay. SDFX is fully compatible with ComfyUI, abstracting the need for installing ComfyUI. It offers features like animated graph navigation, node bookmarks, UI debugger, custom nodes manager, app and template export, image and mask editor, and more. The tool compiles as a native app or web app, making it easy to maintain and add new features.

aimeos-laravel

Aimeos Laravel is a professional, full-featured, and ultra-fast Laravel ecommerce package that can be easily integrated into existing Laravel applications. It offers a wide range of features including multi-vendor, multi-channel, and multi-warehouse support, fast performance, support for various product types, subscriptions with recurring payments, multiple payment gateways, full RTL support, flexible pricing options, admin backend, REST and GraphQL APIs, modular structure, SEO optimization, multi-language support, AI-based text translation, mobile optimization, and high-quality source code. The package is highly configurable and extensible, making it suitable for e-commerce SaaS solutions, marketplaces, and online shops with millions of vendors.

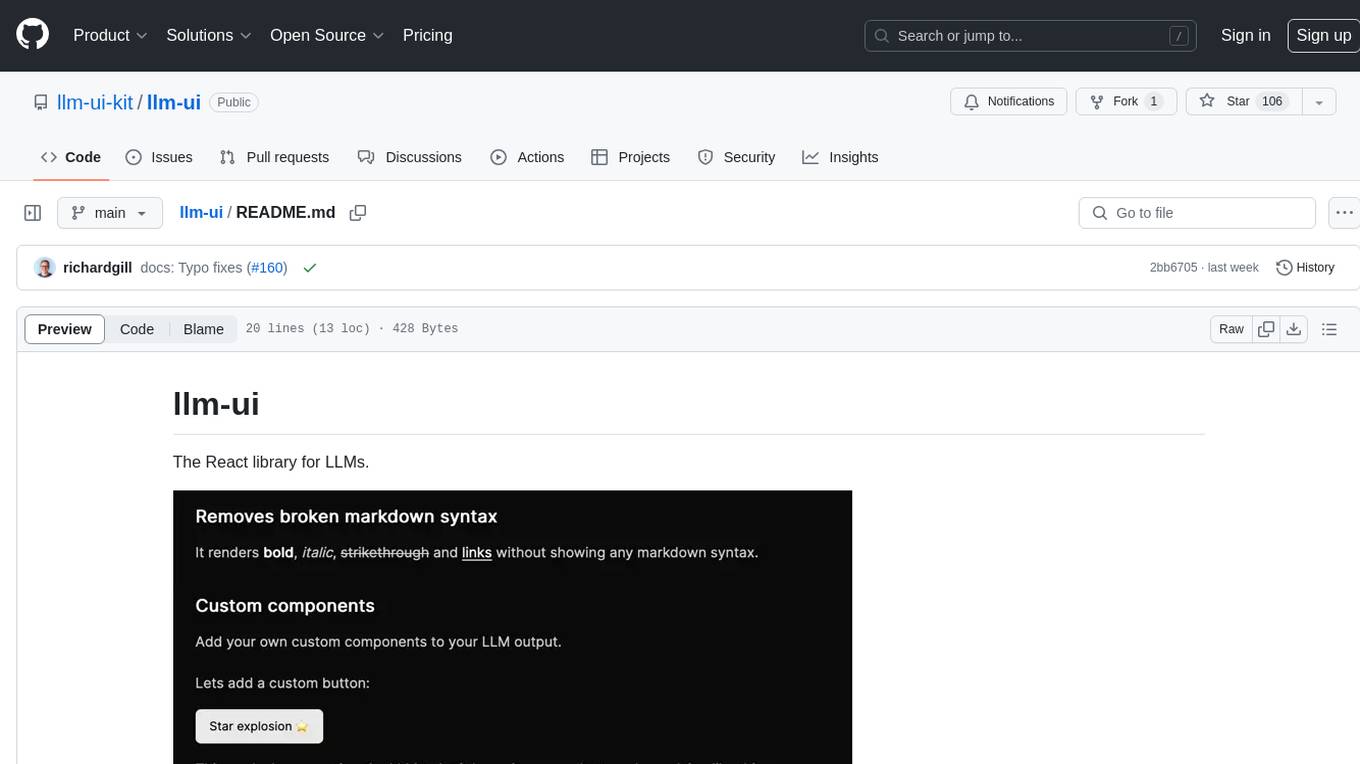

llm-ui

llm-ui is a React library designed for LLMs, providing features such as removing broken markdown syntax, adding custom components to LLM output, smoothing out pauses in streamed output, rendering at native frame rate, supporting code blocks for every language with Shiki, and being headless to allow for custom styles. The library aims to enhance the user experience and flexibility when working with LLMs.