models.dev

An open-source database of AI models.

Stars: 899

Models.dev is an open-source database providing detailed specifications, pricing, and capabilities of various AI models. It serves as a centralized platform for accessing information on AI models, allowing users to contribute and utilize the data through an API. The repository contains data stored in TOML files, organized by provider and model, along with SVG logos. Users can contribute by adding new models following specific guidelines and submitting pull requests for validation. The project aims to maintain an up-to-date and comprehensive database of AI model information.

README:

Models.dev is a comprehensive open-source database of AI model specifications, pricing, and capabilities.

There's no single database with information about all the available AI models. We started Models.dev as a community-contributed project to address this. We also use it internally in opencode.

You can access this data through an API.

curl https://models.dev/api.jsonUse the Model ID field to do a lookup on any model; it's the identifier used by AI SDK.

Provider logos are available as SVG files:

curl https://models.dev/logos/{provider}.svgReplace {provider} with the Provider ID (e.g., anthropic, openai, google). If we don't have a provider's logo, a default logo is served instead.

The data is stored in the repo as TOML files; organized by provider and model. The logo is stored as an SVG. This is used to generate this page and power the API.

We need your help keeping the data up to date.

To add a new model, start by checking if the provider already exists in the providers/ directory. If not, then:

If the provider isn't already in providers/:

-

Create a new folder in

providers/with the provider's ID. For example,providers/newprovider/. -

Add a

provider.tomlwith the provider details:name = "Provider Name" npm = "@ai-sdk/provider" # AI SDK Package name env = ["PROVIDER_API_KEY"] # Environment Variable keys used for auth doc = "https://example.com/docs/models" # Link to provider's documentation

If the provider doesn’t publish an npm package but exposes an OpenAI-compatible endpoint, set the npm field accordingly and include the base URL:

npm = "@ai-sdk/openai-compatible" # Use OpenAI-compatible SDK api = "https://api.example.com/v1" # Required with openai-compatible

To add a logo for the provider:

- Add a

logo.svgfile to the provider's directory (e.g.,providers/newprovider/logo.svg) - Use SVG format with no fixed size or colors - use

currentColorfor fills/strokes

Example SVG structure:

<svg xmlns="http://www.w3.org/2000/svg" viewBox="0 0 24 24" fill="currentColor">

<!-- Logo paths here -->

</svg>Create a new TOML file in the provider's models/ directory where the filename is the model ID:

name = "Model Display Name"

attachment = true # or false - supports file attachments

reasoning = false # or true - supports reasoning / chain-of-thought

tool_call = true # or false - supports tool calling

temperature = true # or false - supports temperature control

knowledge = "2024-04" # Knowledge-cutoff date

release_date = "2025-02-19" # First public release date

last_updated = "2025-02-19" # Most recent update date

[cost]

input = 3.00 # Cost per million input tokens (USD)

output = 15.00 # Cost per million output tokens (USD)

cache_read = 0.30 # Cost per million cached read tokens (USD)

cache_write = 3.75 # Cost per million cached write tokens (USD)

[limit]

context = 200_000 # Maximum context window (tokens)

output = 8_192 # Maximum output tokens

[modalities]

input = ["text", "image"] # Supported input modalities

output = ["text"] # Supported output modalities- Fork this repo

- Create a new branch with your changes

- Add your provider and/or model files

- Open a PR with a clear description

There's a GitHub Action that will automatically validate your submission against our schema to ensure:

- All required fields are present

- Data types are correct

- Values are within acceptable ranges

- TOML syntax is valid

Models must conform to the following schema, as defined in app/schemas.ts.

Provider Schema:

-

name: String - Display name of the provider -

npm: String - AI SDK Package name -

env: String[] - Environment variable keys used for auth -

doc: String - Link to the provider's documentation -

api(optional): String - OpenAI-compatible API endpoint. Required only when using@ai-sdk/openai-compatibleas the npm package

Model Schema:

-

name: String — Display name of the model -

attachment: Boolean — Supports file attachments -

reasoning: Boolean — Supports reasoning / chain-of-thought -

tool_call: Boolean - Supports tool calling -

temperature: Boolean — Supports temperature control -

knowledge(optional): String — Knowledge-cutoff date inYYYY-MMorYYYY-MM-DDformat -

release_date: String — First public release date inYYYY-MMorYYYY-MM-DD -

last_updated: String — Most recent update date inYYYY-MMorYYYY-MM-DD -

cost.input(optional): Number — Cost per million input tokens (USD) -

cost.output(optional): Number — Cost per million output tokens (USD) -

cost.cache_read(optional): Number — Cost per million cached read tokens (USD) -

cost.cache_write(optional): Number — Cost per million cached write tokens (USD) -

limit.context: Number — Maximum context window (tokens) -

limit.output: Number — Maximum output tokens -

modalities.input: Array of strings — Supported input modalities (e.g., ["text", "image", "audio", "video", "pdf"]) -

modalities.output: Array of strings — Supported output modalities (e.g., ["text"])

See existing providers in the providers/ directory for reference:

-

providers/anthropic/- Anthropic Claude models -

providers/openai/- OpenAI GPT models -

providers/google/- Google Gemini models

Make sure you have Bun installed.

$ bun install

$ cd packages/web

$ bun run devAnd it'll open the frontend at http://localhost:3000

Open an issue if you need help or have questions about contributing.

Models.dev is created by the maintainers of SST.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for models.dev

Similar Open Source Tools

models.dev

Models.dev is an open-source database providing detailed specifications, pricing, and capabilities of various AI models. It serves as a centralized platform for accessing information on AI models, allowing users to contribute and utilize the data through an API. The repository contains data stored in TOML files, organized by provider and model, along with SVG logos. Users can contribute by adding new models following specific guidelines and submitting pull requests for validation. The project aims to maintain an up-to-date and comprehensive database of AI model information.

roast

Roast is a convention-oriented framework for creating structured AI workflows maintained by the Augmented Engineering team at Shopify. It provides a structured, declarative approach to building AI workflows with convention over configuration, built-in tools for file operations, search, and AI interactions, Ruby integration for custom steps, shared context between steps, step customization with AI models and parameters, session replay, parallel execution, function caching, and extensive instrumentation for monitoring workflow execution, AI calls, and tool usage.

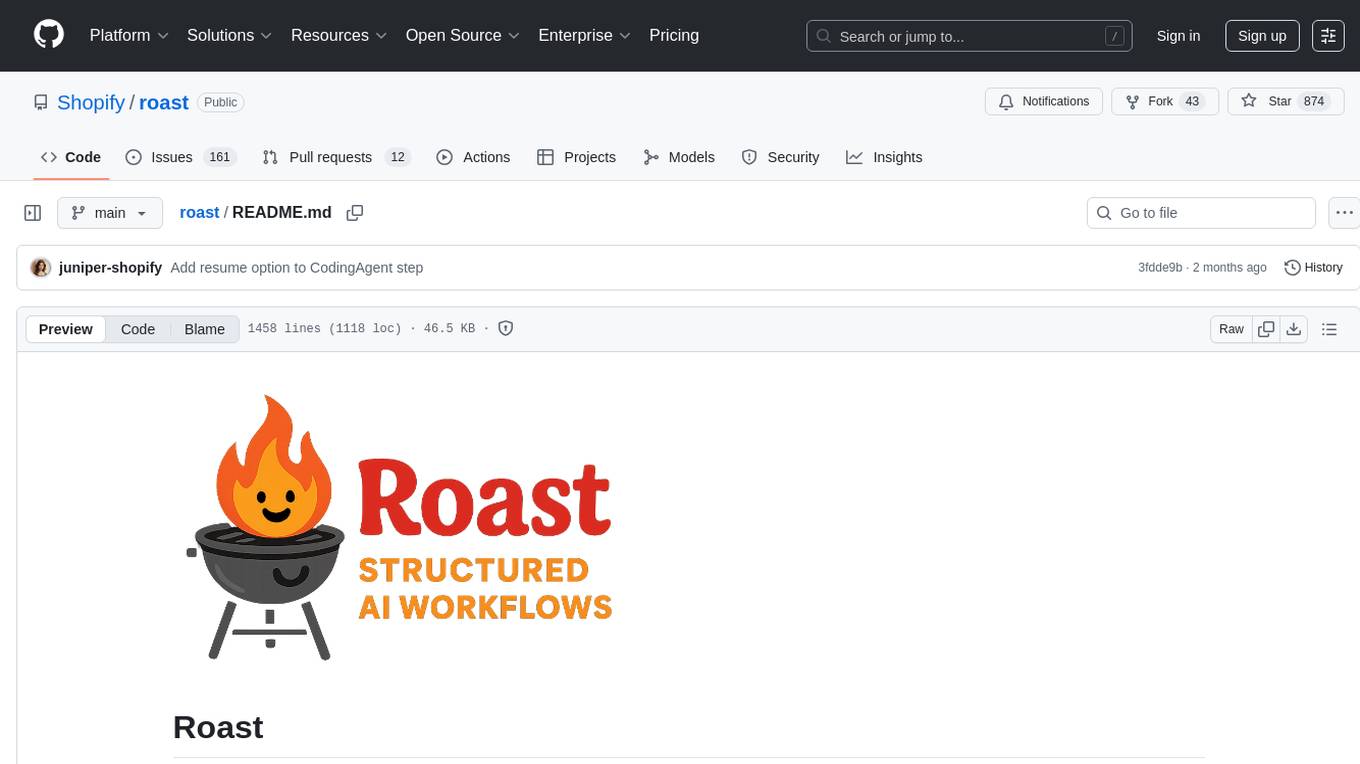

llm-functions

LLM Functions is a project that enables the enhancement of large language models (LLMs) with custom tools and agents developed in bash, javascript, and python. Users can create tools for their LLM to execute system commands, access web APIs, or perform other complex tasks triggered by natural language prompts. The project provides a framework for building tools and agents, with tools being functions written in the user's preferred language and automatically generating JSON declarations based on comments. Agents combine prompts, function callings, and knowledge (RAG) to create conversational AI agents. The project is designed to be user-friendly and allows users to easily extend the capabilities of their language models.

python-tgpt

Python-tgpt is a Python package that enables seamless interaction with over 45 free LLM providers without requiring an API key. It also provides image generation capabilities. The name _python-tgpt_ draws inspiration from its parent project tgpt, which operates on Golang. Through this Python adaptation, users can effortlessly engage with a number of free LLMs available, fostering a smoother AI interaction experience.

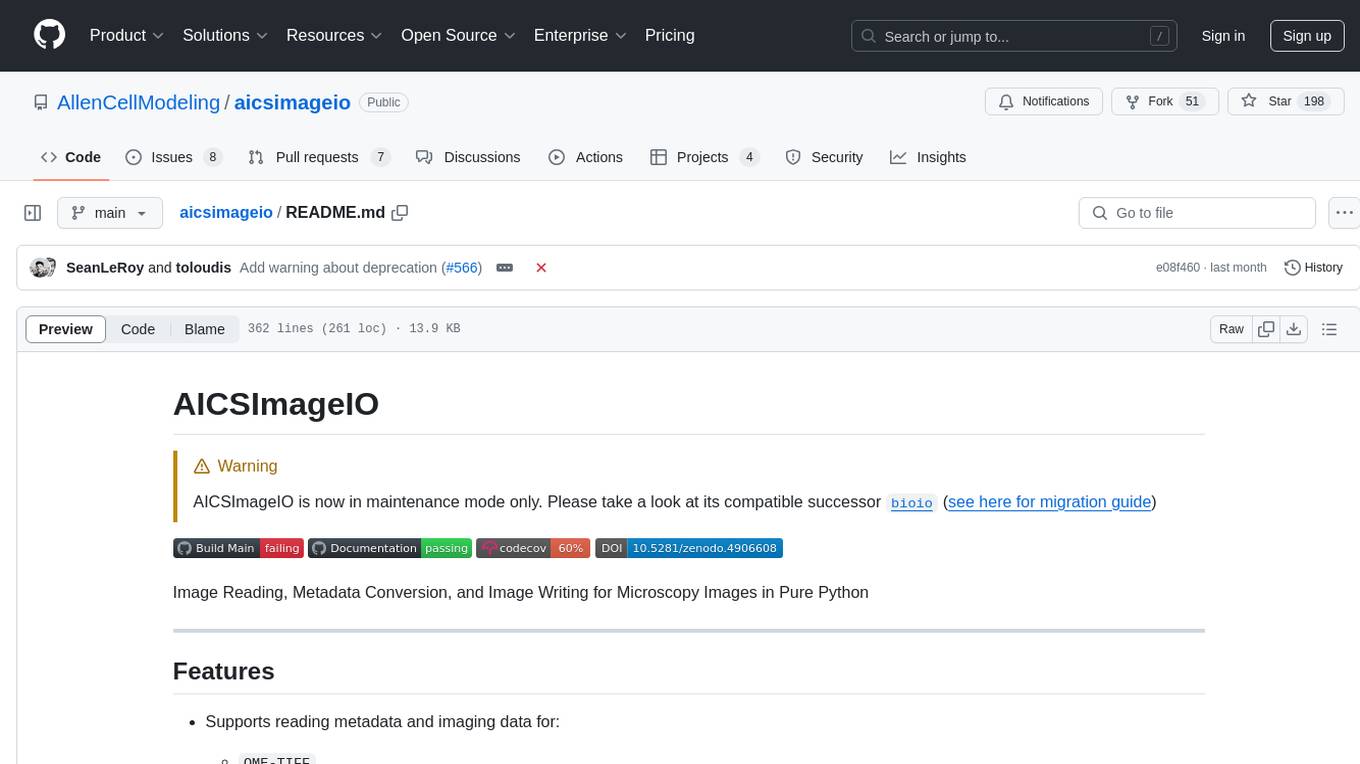

aicsimageio

AICSImageIO is a Python tool for Image Reading, Metadata Conversion, and Image Writing for Microscopy Images. It supports various file formats like OME-TIFF, TIFF, ND2, DV, CZI, LIF, PNG, GIF, and Bio-Formats. Users can read and write metadata and imaging data, work with different file systems like local paths, HTTP URLs, s3fs, and gcsfs. The tool provides functionalities for full image reading, delayed image reading, mosaic image reading, metadata reading, xarray coordinate plane attachment, cloud IO support, and saving to OME-TIFF. It also offers benchmarking and developer resources.

well-architected-iac-analyzer

Well-Architected Infrastructure as Code (IaC) Analyzer is a project demonstrating how generative AI can evaluate infrastructure code for alignment with best practices. It features a modern web application allowing users to upload IaC documents, complete IaC projects, or architecture diagrams for assessment. The tool provides insights into infrastructure code alignment with AWS best practices, offers suggestions for improving cloud architecture designs, and can generate IaC templates from architecture diagrams. Users can analyze CloudFormation, Terraform, or AWS CDK templates, architecture diagrams in PNG or JPEG format, and complete IaC projects with supporting documents. Real-time analysis against Well-Architected best practices, integration with AWS Well-Architected Tool, and export of analysis results and recommendations are included.

upgini

Upgini is an intelligent data search engine with a Python library that helps users find and add relevant features to their ML pipeline from various public, community, and premium external data sources. It automates the optimization of connected data sources by generating an optimal set of machine learning features using large language models, GraphNNs, and recurrent neural networks. The tool aims to simplify feature search and enrichment for external data to make it a standard approach in machine learning pipelines. It democratizes access to data sources for the data science community.

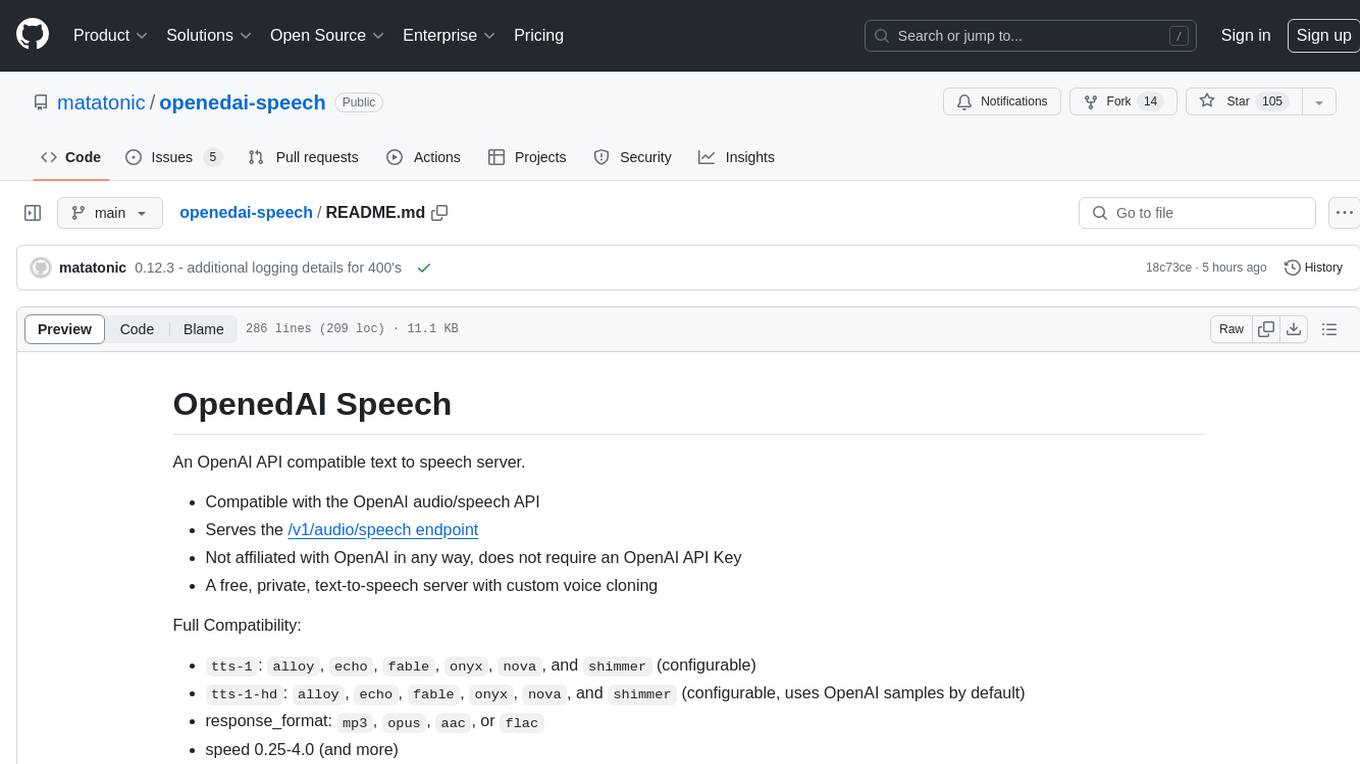

openedai-speech

OpenedAI Speech is a free, private text-to-speech server compatible with the OpenAI audio/speech API. It offers custom voice cloning and supports various models like tts-1 and tts-1-hd. Users can map their own piper voices and create custom cloned voices. The server provides multilingual support with XTTS voices and allows fixing incorrect sounds with regex. Recent changes include bug fixes, improved error handling, and updates for multilingual support. Installation can be done via Docker or manual setup, with usage instructions provided. Custom voices can be created using Piper or Coqui XTTS v2, with guidelines for preparing audio files. The tool is suitable for tasks like generating speech from text, creating custom voices, and multilingual text-to-speech applications.

cursor-tools

cursor-tools is a CLI tool designed to enhance AI agents with advanced skills, such as web search, repository context, documentation generation, GitHub integration, Xcode tools, and browser automation. It provides features like Perplexity for web search, Gemini 2.0 for codebase context, and Stagehand for browser operations. The tool requires API keys for Perplexity AI and Google Gemini, and supports global installation for system-wide access. It offers various commands for different tasks and integrates with Cursor Composer for AI agent usage.

hayhooks

Hayhooks is a tool that simplifies the deployment and serving of Haystack pipelines as REST APIs. It allows users to wrap their pipelines with custom logic and expose them via HTTP endpoints, including OpenAI-compatible chat completion endpoints. With Hayhooks, users can easily convert their Haystack pipelines into API services with minimal boilerplate code.

RA.Aid

RA.Aid is an AI software development agent powered by `aider` and advanced reasoning models like `o1`. It combines `aider`'s code editing capabilities with LangChain's agent-based task execution framework to provide an intelligent assistant for research, planning, and implementation of multi-step development tasks. It handles complex programming tasks by breaking them down into manageable steps, running shell commands automatically, and leveraging expert reasoning models like OpenAI's o1. RA.Aid is designed for everyday software development, offering features such as multi-step task planning, automated command execution, and the ability to handle complex programming tasks beyond single-shot code edits.

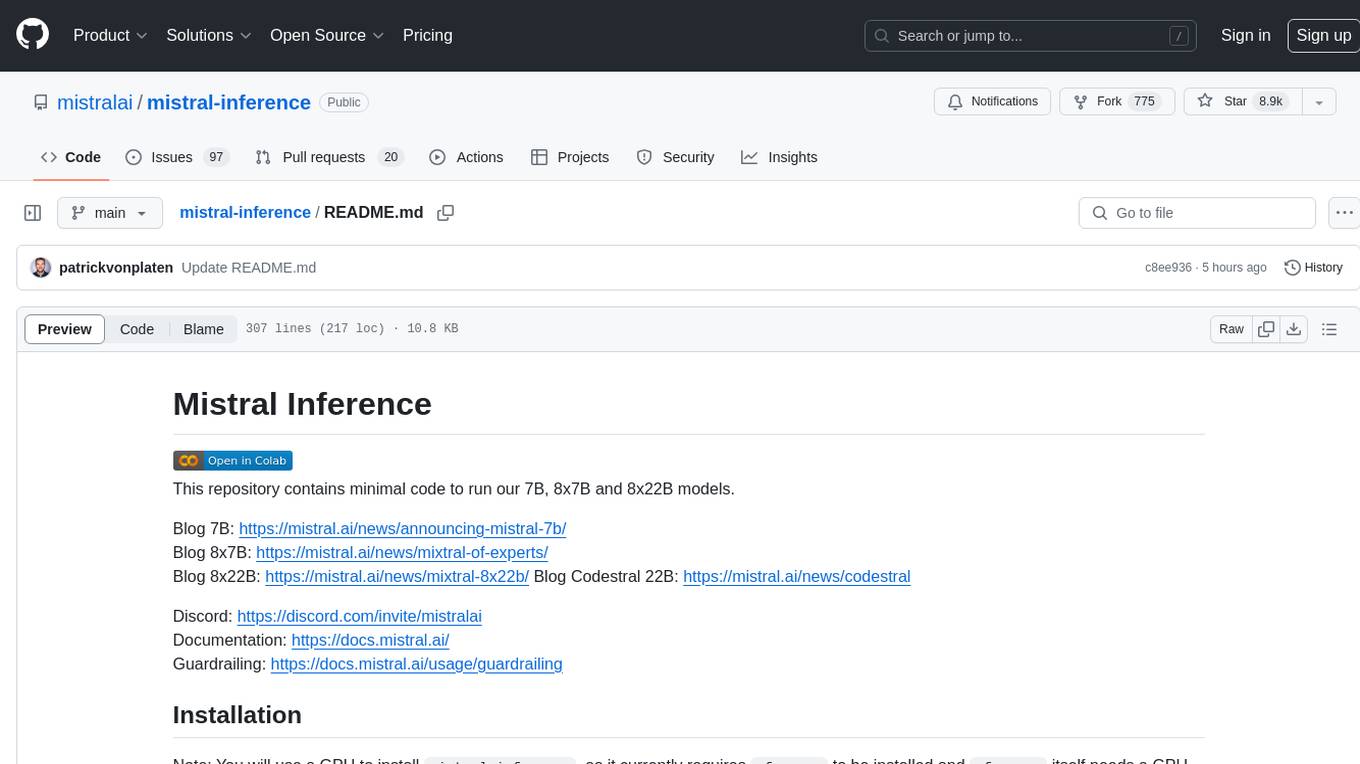

mistral-inference

Mistral Inference repository contains minimal code to run 7B, 8x7B, and 8x22B models. It provides model download links, installation instructions, and usage guidelines for running models via CLI or Python. The repository also includes information on guardrailing, model platforms, deployment, and references. Users can interact with models through commands like mistral-demo, mistral-chat, and mistral-common. Mistral AI models support function calling and chat interactions for tasks like testing models, chatting with models, and using Codestral as a coding assistant. The repository offers detailed documentation and links to blogs for further information.

forge

Forge is a powerful open-source tool for building modern web applications. It provides a simple and intuitive interface for developers to quickly scaffold and deploy projects. With Forge, you can easily create custom components, manage dependencies, and streamline your development workflow. Whether you are a beginner or an experienced developer, Forge offers a flexible and efficient solution for your web development needs.

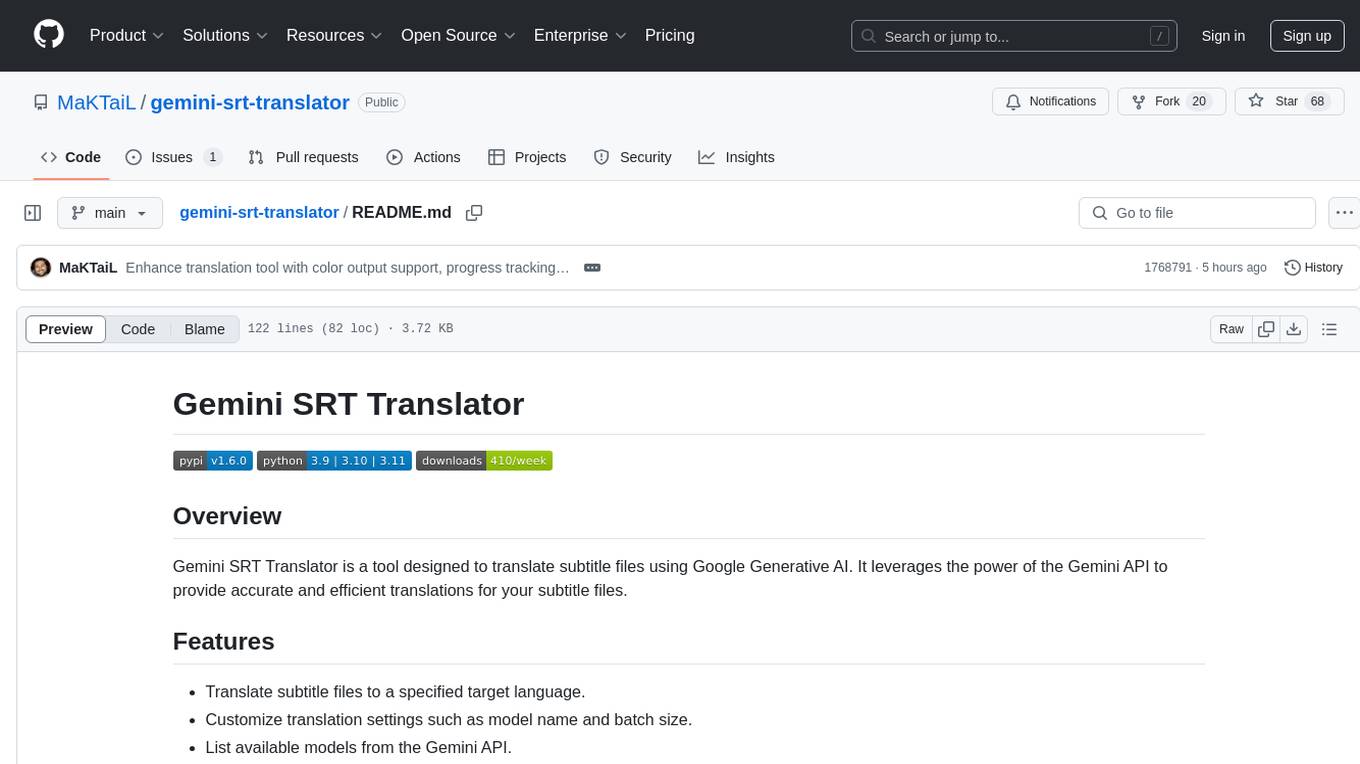

gemini-srt-translator

Gemini SRT Translator is a tool that utilizes Google Generative AI to provide accurate and efficient translations for subtitle files. Users can customize translation settings, such as model name and batch size, and list available models from the Gemini API. The tool requires a free API key from Google AI Studio for setup and offers features like translating subtitles to a specified target language and resuming partial translations. Users can further customize translation settings with optional parameters like gemini_api_key2, output_file, start_line, model_name, batch_size, and more.

shortest

Shortest is an AI-powered natural language end-to-end testing framework built on Playwright. It provides a seamless testing experience by allowing users to write tests in natural language and execute them using Anthropic Claude API. The framework also offers GitHub integration with 2FA support, making it suitable for testing web applications with complex authentication flows. Shortest simplifies the testing process by enabling users to run tests locally or in CI/CD pipelines, ensuring the reliability and efficiency of web applications.

aidermacs

Aidermacs is an AI pair programming tool for Emacs that integrates Aider, a powerful open-source AI pair programming tool. It provides top performance on the SWE Bench, support for multi-file edits, real-time file synchronization, and broad language support. Aidermacs delivers an Emacs-centric experience with features like intelligent model selection, flexible terminal backend support, smarter syntax highlighting, enhanced file management, and streamlined transient menus. It thrives on community involvement, encouraging contributions, issue reporting, idea sharing, and documentation improvement.

For similar tasks

sd-civitai-browser-plus

sd-civitai-browser-plus is an extension designed for Automatic1111's Stable Difussion Web UI, providing features to browse models from CivitAI, check for updates, download specific model versions hassle-free, assign tags to models, access model info quickly, and download models with high-speed using Aria2. The extension offers a sleek and intuitive user interface, actively maintained with feature requests welcome. It also addresses known issues like frozen downloads with possible solutions. The tool is actively developed with regular updates and bug fixes, ensuring a smooth user experience.

models.dev

Models.dev is an open-source database providing detailed specifications, pricing, and capabilities of various AI models. It serves as a centralized platform for accessing information on AI models, allowing users to contribute and utilize the data through an API. The repository contains data stored in TOML files, organized by provider and model, along with SVG logos. Users can contribute by adding new models following specific guidelines and submitting pull requests for validation. The project aims to maintain an up-to-date and comprehensive database of AI model information.

AI_for_Science_paper_collection

AI for Science paper collection is an initiative by AI for Science Community to collect and categorize papers in AI for Science areas by subjects, years, venues, and keywords. The repository contains `.csv` files with paper lists labeled by keys such as `Title`, `Conference`, `Type`, `Application`, `MLTech`, `OpenReviewLink`. It covers top conferences like ICML, NeurIPS, and ICLR. Volunteers can contribute by updating existing `.csv` files or adding new ones for uncovered conferences/years. The initiative aims to track the increasing trend of AI for Science papers and analyze trends in different applications.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.