agor

Orchestrate Claude Code, Codex, and Gemini sessions on a multiplayer canvas. Manage git worktrees, track AI conversations, and visualize your team's agentic work in real-time.

Stars: 964

Agor is a multiplayer spatial canvas where you coordinate multiple AI coding assistants on parallel tasks, with GitHub-linked worktrees, automated workflow zones, and isolated test environments, all running simultaneously. It lets users run multiple AI coding sessions, manage git worktrees, track AI conversations, and visualize their team's work in real time. Its features include Agent Swarm Control, Multiplayer Spatial Canvas, Session Trees, Zone Triggers, Isolated Development Environments, Real-Time Strategy for AI Teams, and Mobile-Friendly Prompting. It is designed to streamline parallel PR workflows and improve collaboration among AI teams.

README:

Think Figma, but for AI coding assistants. Orchestrate Claude Code, Codex, and Gemini sessions on a multiplayer canvas. Manage git worktrees, track AI conversations, and visualize your team's agentic work in real-time.

TL;DR: Agor is a multiplayer spatial canvas where you coordinate multiple AI coding assistants on parallel tasks, with GitHub-linked worktrees, automated workflow zones, and isolated test environments—all running simultaneously.

📖 Read the full documentation at agor.live →

- Spatial canvas with worktrees and zones

- Rich web UI for AI conversations

- MCP servers and worktree management

- Live collaboration with cursors and comments

→ Watch unscripted demo on YouTube (13 minutes)

- Node.js 20.x

- Zellij ≥ 0.40 (required - daemon will not start without it)

Install Zellij:

# Ubuntu/Debian

curl -L https://github.com/zellij-org/zellij/releases/latest/download/zellij-x86_64-unknown-linux-musl.tar.gz | sudo tar -xz -C /usr/local/bin

# macOS

brew install zellij

# RHEL/CentOS

curl -L https://github.com/zellij-org/zellij/releases/latest/download/zellij-x86_64-unknown-linux-musl.tar.gz | sudo tar -xz -C /usr/local/bin

npm install -g agor-live

Note: Agor requires Zellij for persistent terminal sessions. The daemon will fail to start with a helpful error message if Zellij is not installed.

# 1. Initialize (creates ~/.agor/ and database)

agor init

# 2. Start the daemon

agor daemon start

# 3. Open the UI

agor open

Try in Codespaces:

Agent Swarm Control:

- Run as many Claude Code, Codex, and Gemini sessions as you can handle, simultaneously.

- Agents in Agor can coordinate and supervise through the internal Agor MCP service.

- Built-in scheduler triggers templated prompts on your cadence.

Multiplayer Spatial Canvas:

- Figma-esque board layout organizes your AI coding sessions across boards (full 2D canvases).

- Scoped/spatial comments + reactions pinned to boards, zones, or worktrees (Figma-style).

- WebSocket-powered cursor broadcasting and facepiles show teammates in real time.

Session Trees:

- Fork sessions to explore alternatives without losing the original path.

- Spawn subsessions for focused subtasks that report back to the parent.

- Visualize the session genealogy in "session trees".

Zone Triggers:

- Define zones on your board that trigger templated prompts when worktrees are dropped.

- Build kanban-style flows or custom pipelines: analyze → develop → review → deploy.

- GitHub-native workflow: Link worktrees to issues/PRs, auto-inject context into prompts

  - Template syntax: "deeply analyze this github issue: {{ worktree.issue_url }}"

  - Each worktree = isolated branch for a specific issue/PR

  - AI agents automatically read the linked issue/PR context
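The `{{ worktree.issue_url }}` placeholder implies that a prompt template is filled in from the worktree's data before it reaches an agent. The following is a minimal sketch of that substitution, assuming a plain dotted-path lookup. The `renderTemplate` and `lookupPath` functions, the `WorktreeContext` shape, and the example issue URL are hypothetical, and this is not Agor's actual renderer.

```typescript
// Hypothetical context shape: only `issue_url` appears in the README.
type WorktreeContext = { worktree: { issue_url: string } };

// Look up a dotted path such as "worktree.issue_url" in a nested object.
function lookupPath(root: unknown, path: string): unknown {
  let current: unknown = root;
  for (const key of path.split(".")) {
    if (typeof current !== "object" || current === null) return undefined;
    current = (current as Record<string, unknown>)[key];
  }
  return current;
}

// Replace every {{ dotted.path }} placeholder with the value it resolves to.
// Placeholders that do not resolve are left untouched so mistakes stay visible.
function renderTemplate(template: string, context: WorktreeContext): string {
  return template.replace(
    /\{\{\s*([\w.]+)\s*\}\}/g,
    (placeholder: string, path: string) => {
      const value = lookupPath(context, path);
      return value === undefined ? placeholder : String(value);
    },
  );
}

// Example with a made-up issue URL.
const analyzePrompt = renderTemplate(
  "deeply analyze this github issue: {{ worktree.issue_url }}",
  { worktree: { issue_url: "https://github.com/example/repo/issues/42" } },
);
// analyzePrompt is now:
// "deeply analyze this github issue: https://github.com/example/repo/issues/42"
```

Leaving unresolved placeholders in place is a design choice for this sketch: a typo in a template then shows up in the rendered prompt instead of silently turning into an empty string.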

Isolated Development Environments:

The Problem: Working on 3 PRs simultaneously? Each needs different ports, dependencies, database states.

Agor's Solution:

- Each worktree gets its own isolated environment with auto-managed unique ports

- Configure start/stop commands once with templates: PORT={{ add 9000 worktree.unique_id }} docker compose up -d

- Everyone on your team can one-click start/stop any worktree's environment

- Multiple AI agents work in parallel without stepping on each other

- Health monitoring tracks if services are running properly

No more: "Kill your local server, I need to test my branch"
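The `PORT={{ add 9000 worktree.unique_id }}` template suggests that each environment's port is a base port plus the worktree's unique id. Here is a small sketch of that arithmetic, assuming `unique_id` is a non-negative integer. The `portFor` and `startCommand` helpers and the ids used are hypothetical; only the base port 9000 and the command text come from the example above.

```typescript
// Base port taken from the README's example template; ids below are made up.
const BASE_PORT = 9000;

// Mirror of the `add 9000 worktree.unique_id` expression: base plus unique id.
function portFor(uniqueId: number, basePort: number = BASE_PORT): number {
  const candidate = basePort + uniqueId;
  if (!Number.isInteger(uniqueId) || uniqueId < 0 || candidate > 65535) {
    throw new RangeError(`cannot derive a valid port from unique id ${uniqueId}`);
  }
  return candidate;
}

// Build the start command for one worktree, following the template's shape.
function startCommand(uniqueId: number): string {
  return `PORT=${portFor(uniqueId)} docker compose up -d`;
}

// Three worktrees in flight: distinct ids give distinct ports.
const assignedPorts = [1, 2, 3].map((id) => portFor(id));
// assignedPorts is [9001, 9002, 9003]
const firstCommand = startCommand(1);
// firstCommand is "PORT=9001 docker compose up -d"
```

Because the mapping is a fixed offset, two worktrees collide only if they share an id, which is why a unique id per worktree is enough to keep ports apart.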

Real-Time Strategy for AI Teams:

- Coordinate agentic work like a multiplayer RTS.

- Watch teammates or agents move across tasks live.

- Cluster sessions, delegate, pivot, and iterate together.

Mobile-Friendly Prompting:

- Keep sessions cooking on the go with a mobile-optimized UI for sending prompts and monitoring progress.

- Access conversations, send follow-ups, and check agent status from your phone.

- Full conversation view with hamburger navigation to switch between sessions.

Your team has 3 bug fixes and 2 features in flight. With Agor:

- Create 5 worktrees, each linked to its GitHub issue/PR

- Spawn AI sessions for each worktree (Claude, Codex, Gemini)

- Drop into zones → "Analyze" zone triggers: "Review this issue: {{ worktree.issue_url }}"

- Watch in real-time as all 5 agents work simultaneously on the spatial canvas

- Isolated environments with unique ports prevent conflicts

- Push directly from worktrees to GitHub when ready

No context switching. No port collisions. No waiting.
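The scenario above can be pictured as data: dropping a worktree into a zone pairs the zone's prompt template with that worktree's issue link. The sketch below models this for five worktrees and an "Analyze" zone. The `Worktree`, `Zone`, and `QueuedPrompt` shapes, the `onDrop` function, the labels, and the issue URLs are all hypothetical, and Agor's real data model may differ.

```typescript
// Hypothetical shapes for illustration; Agor's real data model may differ.
type Worktree = {
  label: string;
  issue_url: string;
  agent: "Claude" | "Codex" | "Gemini";
};
type Zone = { title: string; promptTemplate: string };
type QueuedPrompt = { worktree: string; agent: string; prompt: string };

// Dropping a worktree into a zone yields the prompt for that worktree's agent.
function onDrop(zone: Zone, worktree: Worktree): QueuedPrompt {
  const prompt = zone.promptTemplate.replace(
    /\{\{\s*worktree\.issue_url\s*\}\}/g,
    () => worktree.issue_url,
  );
  return { worktree: worktree.label, agent: worktree.agent, prompt };
}

const analyzeZone: Zone = {
  title: "Analyze",
  promptTemplate: "Review this issue: {{ worktree.issue_url }}",
};

// Three bug fixes and two features, each linked to a made-up issue URL.
const inFlight: Worktree[] = [
  { label: "bug-1", issue_url: "https://github.com/example/repo/issues/1", agent: "Claude" },
  { label: "bug-2", issue_url: "https://github.com/example/repo/issues/2", agent: "Codex" },
  { label: "bug-3", issue_url: "https://github.com/example/repo/issues/3", agent: "Gemini" },
  { label: "feat-1", issue_url: "https://github.com/example/repo/issues/4", agent: "Claude" },
  { label: "feat-2", issue_url: "https://github.com/example/repo/issues/5", agent: "Codex" },
];

// One drop per worktree produces one prompt per agent session.
const queued = inFlight.map((worktree) => onDrop(analyzeZone, worktree));
// queued has 5 entries; the first prompt is
// "Review this issue: https://github.com/example/repo/issues/1"
```

Each entry in `queued` is independent of the others, which matches the idea that the five agents can work at the same time.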

graph TB

subgraph Clients

CLI["CLI (oclif)"]

UI["Web UI (React)"]

end

Client["Feathers Client<br/>REST + WebSocket"]

subgraph "Agor Daemon"

Feathers["FeathersJS Server"]

MCP["MCP HTTP Endpoint<br/>/mcp?sessionToken=..."]

Services["Services<br/>Sessions, Tasks, Messages<br/>Boards, Worktrees, Repos"]

AgentSDKs["Agent SDKs<br/>Claude, Codex, Gemini"]

ORM["Drizzle ORM"]

end

subgraph Storage

DB[("LibSQL Database<br/>~/.agor/agor.db")]

Git["Git Worktrees<br/>~/.agor/worktrees/"]

Config["Config<br/>~/.agor/config.yaml"]

end

CLI --> Client

UI --> Client

Client <-->|REST + WebSocket| Feathers

Feathers --> Services

Feathers --> MCP

MCP --> Services

Services --> ORM

Services --> AgentSDKs

AgentSDKs -.->|JSON-RPC 2.0| MCP

ORM --> DB

Services --> Git

Services --> Config

Quick start (localhost):

# Terminal 1: Daemon

cd apps/agor-daemon && pnpm dev # :3030

# Terminal 2: UI

cd apps/agor-ui && pnpm dev # :5173

Or use Docker:

docker compose up

Highlights:

- Match CLI-Native Features — SDKs are evolving rapidly and exposing more functionality. Push integrations deeper to match all key features available in the underlying CLIs

- Bring Your Own IDE — Connect VSCode, Cursor, or any IDE directly to Agor-managed worktrees via SSH/Remote

- Unix User Integration — Enable true multi-tenancy with per-user Unix isolation for secure collaboration. Read the exploration →

- Discord - Join our Discord community for support and discussion

- GitHub Discussions - Ask questions, share ideas

- GitHub Issues - Report bugs, request features

Heavily prompted by @mistercrunch (Preset, Apache Superset, Apache Airflow), built by an army of Claudes.

Read the story: Making of Agor →


Similar Open Source Tools


tingly-box

Tingly Box is a tool that helps in deciding which model to call, compressing context, and routing requests efficiently. It offers secure, reliable, and customizable functional extensions. With features like unified API, smart routing, context compression, auto API translation, blazing fast performance, flexible authentication, visual control panel, and client-side usage stats, Tingly Box provides a comprehensive solution for managing AI models and tokens. It supports integration with various IDEs, CLI tools, SDKs, and AI applications, making it versatile and easy to use. The tool also allows seamless integration with OAuth providers like Claude Code, enabling users to utilize existing quotas in OpenAI-compatible tools. Tingly Box aims to simplify AI model management and usage by providing a single endpoint for multiple providers with minimal configuration, promoting seamless integration with SDKs and CLI tools.

AionUi

AionUi is a user interface library for building modern and responsive web applications. It provides a set of customizable components and styles to create visually appealing user interfaces. With AionUi, developers can easily design and implement interactive web interfaces that are both functional and aesthetically pleasing. The library is built using the latest web technologies and follows best practices for performance and accessibility. Whether you are working on a personal project or a professional application, AionUi can help you streamline the UI development process and deliver a seamless user experience.

agentfield

AgentField is an open-source control plane designed for autonomous AI agents, providing infrastructure for agents to make decisions beyond chatbots. It offers features like scaling infrastructure, routing & discovery, async execution, durable state, observability, trust infrastructure with cryptographic identity, verifiable credentials, and policy enforcement. Users can write agents in Python, Go, TypeScript, or interact via REST APIs. The tool enables the creation of AI backends that reason autonomously within defined boundaries, offering predictability and flexibility. AgentField aims to bridge the gap between AI frameworks and production-ready infrastructure for AI agents.

transformerlab-app

Transformer Lab is an app that allows users to experiment with Large Language Models by providing features such as one-click download of popular models, finetuning across different hardware, RLHF and Preference Optimization, working with LLMs across different operating systems, chatting with models, using different inference engines, evaluating models, building datasets for training, calculating embeddings, providing a full REST API, running in the cloud, converting models across platforms, supporting plugins, embedded Monaco code editor, prompt editing, inference logs, all through a simple cross-platform GUI.

local-deep-research

Local Deep Research is a powerful AI-powered research assistant that performs deep, iterative analysis using multiple LLMs and web searches. It can be run locally for privacy or configured to use cloud-based LLMs for enhanced capabilities. The tool offers advanced research capabilities, flexible LLM support, rich output options, privacy-focused operation, enhanced search integration, and academic & scientific integration. It also provides a web interface, command line interface, and supports multiple LLM providers and search engines. Users can configure AI models, search engines, and research parameters for customized research experiences.

layra

LAYRA is the world's first visual-native AI automation engine that sees documents like a human, preserves layout and graphical elements, and executes arbitrarily complex workflows with full Python control. It empowers users to build next-generation intelligent systems with no limits or compromises. Built for Enterprise-Grade deployment, LAYRA features a modern frontend, high-performance backend, decoupled service architecture, visual-native multimodal document understanding, and a powerful workflow engine.

pyspur

PySpur is a graph-based editor designed for LLM (Large Language Models) workflows. It offers modular building blocks, node-level debugging, and performance evaluation. The tool is easy to hack, supports JSON configs for workflow graphs, and is lightweight with minimal dependencies. Users can quickly set up PySpur by cloning the repository, creating a .env file, starting docker services, and accessing the portal. PySpur can also work with local models served using Ollama, with steps provided for configuration. The roadmap includes features like canvas, async/batch execution, support for Ollama, new nodes, pipeline optimization, templates, code compilation, multimodal support, and more.

RepoMaster

RepoMaster is an AI agent that leverages GitHub repositories to solve complex real-world tasks. It transforms how coding tasks are solved by automatically finding the right GitHub tools and making them work together seamlessly. Users can describe their tasks, and RepoMaster's AI analysis leads to auto discovery and smart execution, resulting in perfect outcomes. The tool provides a web interface for beginners and a command-line interface for advanced users, along with specialized agents for deep search, general assistance, and repository tasks.

llamafarm

LlamaFarm is a comprehensive AI framework that empowers users to build powerful AI applications locally, with full control over costs and deployment options. It provides modular components for RAG systems, vector databases, model management, prompt engineering, and fine-tuning. Users can create differentiated AI products without needing extensive ML expertise, using simple CLI commands and YAML configs. The framework supports local-first development, production-ready components, strategy-based configuration, and deployment anywhere from laptops to the cloud.

handit.ai

Handit.ai is an autonomous engineer tool designed to fix AI failures 24/7. It catches failures, writes fixes, tests them, and ships PRs automatically. It monitors AI applications, detects issues, generates fixes, tests them against real data, and ships them as pull requests—all automatically. Users can write JavaScript, TypeScript, Python, and more, and the tool automates what used to require manual debugging and firefighting.

opcode

opcode is a powerful desktop application built with Tauri 2 that serves as a command center for interacting with Claude Code. It offers a visual GUI for managing Claude Code sessions, creating custom agents, tracking usage, and more. Users can navigate projects, create specialized AI agents, monitor usage analytics, manage MCP servers, create session checkpoints, edit CLAUDE.md files, and more. The tool bridges the gap between command-line tools and visual experiences, making AI-assisted development more intuitive and productive.

jan

Jan is an open-source ChatGPT alternative that runs 100% offline on your computer. It supports universal architectures, including Nvidia GPUs, Apple M-series, Apple Intel, Linux Debian, and Windows x64. Jan is currently in development, so expect breaking changes and bugs. It is lightweight and embeddable, and can be used on its own within your own projects.

sandboxed.sh

sandboxed.sh is a self-hosted cloud orchestrator for AI coding agents that provides isolated Linux workspaces with Claude Code, OpenCode & Amp runtimes. It allows users to hand off entire development cycles, run multi-day operations unattended, and keep sensitive data local by analyzing data against scientific literature. The tool features dual runtime support, mission control for remote agent management, isolated workspaces, a git-backed library, MCP registry, and multi-platform support with a web dashboard and iOS app.

figma-console-mcp

Figma Console MCP is a Model Context Protocol server that bridges design and development, giving AI assistants complete access to Figma for extraction, creation, and debugging. It connects AI assistants like Claude to Figma, enabling plugin debugging, visual debugging, design system extraction, design creation, variable management, real-time monitoring, and three installation methods. The server offers 53+ tools for NPX and Local Git setups, while Remote SSE provides read-only access with 16 tools. Users can create and modify designs with AI, contribute to projects, or explore design data. The server supports authentication via personal access tokens and OAuth, and offers tools for navigation, console debugging, visual debugging, design system extraction, design creation, design-code parity, variable management, and AI-assisted design creation.

osaurus

Osaurus is a native, Apple Silicon-only local LLM server built on Apple's MLX for maximum performance on M‑series chips. It is a SwiftUI app + SwiftNIO server with OpenAI‑compatible and Ollama‑compatible endpoints. The tool supports native MLX text generation, model management, streaming and non‑streaming chat completions, OpenAI‑compatible function calling, real-time system resource monitoring, and path normalization for API compatibility. Osaurus is designed for macOS 15.5+ and Apple Silicon (M1 or newer) with Xcode 16.4+ required for building from source.

For similar tasks

agor

Agor is a multiplayer spatial canvas where you coordinate multiple AI coding assistants on parallel tasks, with GitHub-linked worktrees, automated workflow zones, and isolated test environments—all running simultaneously. It allows users to run multiple AI coding sessions, manage git worktrees, track AI conversations, and visualize team's work in real-time. Agor provides features like Agent Swarm Control, Multiplayer Spatial Canvas, Session Trees, Zone Triggers, Isolated Development Environments, Real-Time Strategy for AI Teams, and Mobile-Friendly Prompting. It is designed to streamline parallel PR workflows and enhance collaboration among AI teams.

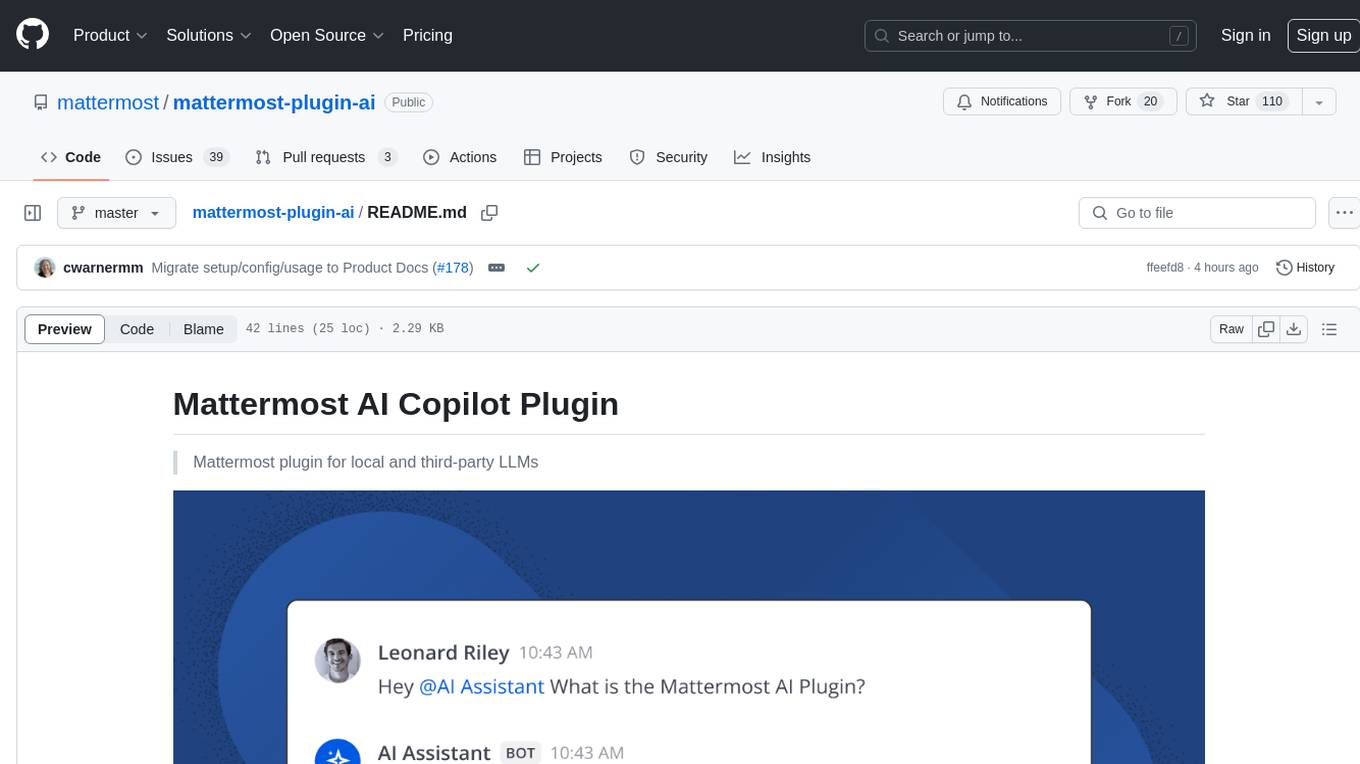

mattermost-plugin-ai

The Mattermost AI Copilot Plugin is an extension that adds functionality for local and third-party LLMs within Mattermost v9.6 and above. It is currently experimental and allows users to interact with AI models seamlessly. The plugin enhances the user experience by providing AI-powered assistance and features for communication and collaboration within the Mattermost platform.

agent-hub

Agent Hub is a platform for AI Agent solutions, containing three different projects aimed at transforming enterprise workflows, enhancing personalized language learning experiences, and enriching multimodal interactions. The projects include GitHub Sentinel for project management and automatic updates, LanguageMentor for personalized language learning support, and ChatPPT for multimodal AI-driven insights and PowerPoint automation in enterprise settings. The future vision of agent-hub is to serve as a launchpad for more AI Agents catering to different industries and pushing the boundaries of AI technology. Users are encouraged to explore, clone the repository, and contribute to the development of transformative AI agents.

Conversation-Knowledge-Mining-Solution-Accelerator

The Conversation Knowledge Mining Solution Accelerator enables customers to leverage intelligence to uncover insights, relationships, and patterns from conversational data. It empowers users to gain valuable knowledge and drive targeted business impact by utilizing Azure AI Foundry, Azure OpenAI, Microsoft Fabric, and Azure Search for topic modeling, key phrase extraction, speech-to-text transcription, and interactive chat experiences.

SpecForge

SpecForge is a powerful tool for generating API specifications from code. It helps developers to easily create and maintain accurate API documentation by extracting information directly from the codebase. With SpecForge, users can streamline the process of documenting APIs, ensuring consistency and reducing manual effort. The tool supports various programming languages and frameworks, making it versatile and adaptable to different development environments. By automating the generation of API specifications, SpecForge enhances collaboration between developers and stakeholders, improving overall project efficiency and quality.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PIIs

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.