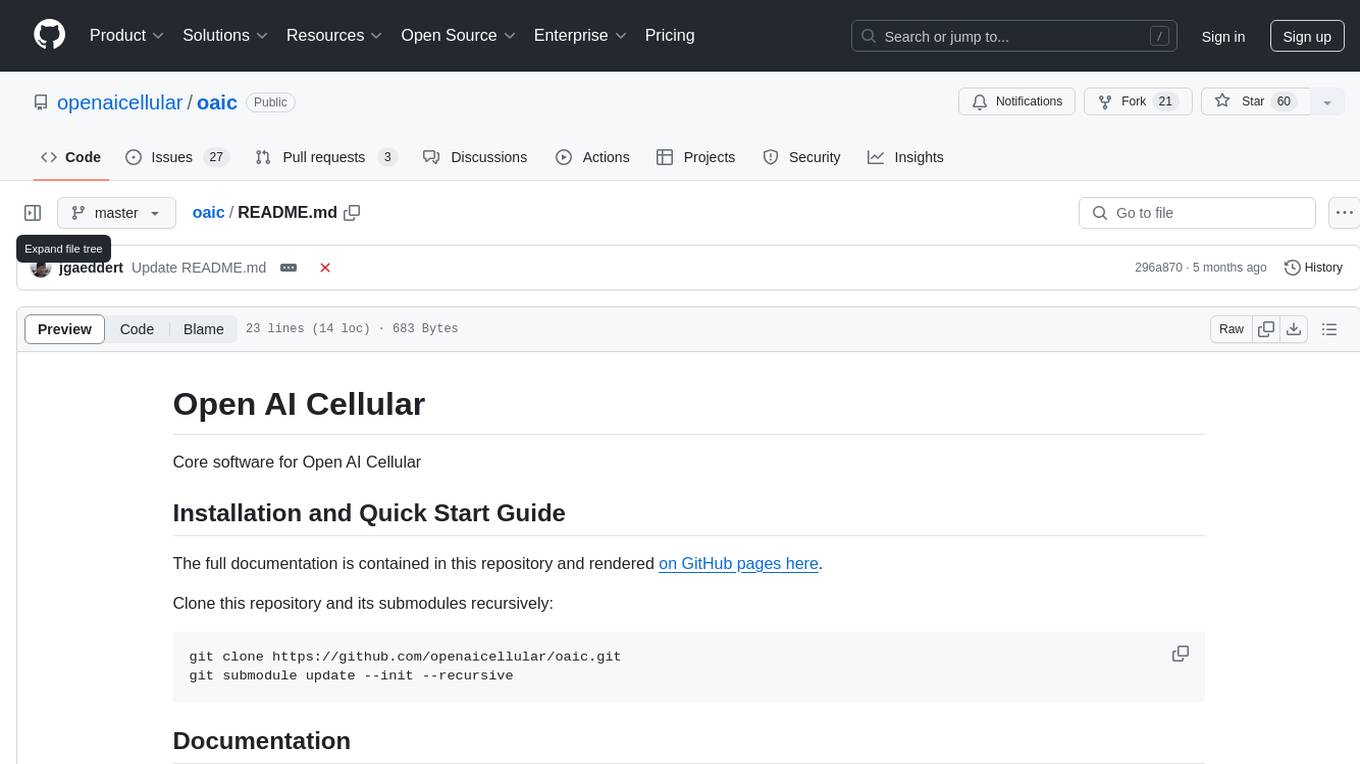

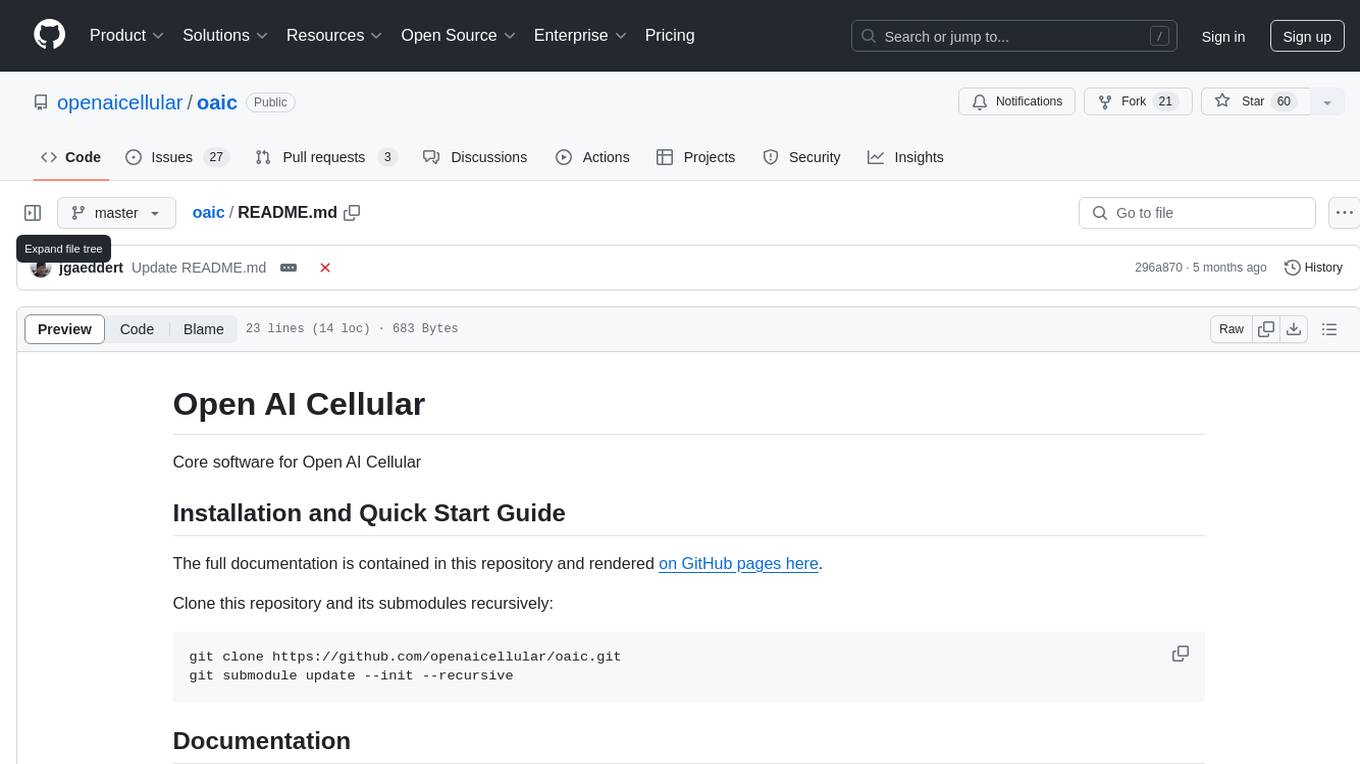

oaic

Core software for Open AI Cellular

Stars: 60

oaic is the core software repository for Open AI Cellular. It holds the project documentation, which covers installation, a quick start guide, and usage. The repository contains submodules, and building the core documentation requires Sphinx with the Read the Docs theme. The built documentation is written to the 'docs/build/html' directory.

README:

Core software for Open AI Cellular

The full documentation is contained in this repository and rendered on GitHub Pages.

Clone this repository and its submodules recursively:

git clone https://github.com/openaicellular/oaic.git

git submodule update --init --recursive

Install sphinx with the read-the-docs theme:

sudo -H python3 -m pip install -r requirements.txt

To build the core documentation, simply run make.

The resulting documentation is put in docs/build/html.
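The README steps above can be collected into a small dry-run helper. This is only a sketch under stated assumptions: the helper names (`build_steps`, `run_steps`, `docs_built`) are hypothetical, the README does not say which directory each command runs in (here everything after the clone is assumed to run inside the cloned `oaic` directory), and `sudo -H` is left out so that pip installs into whatever Python environment is active.

```python
import subprocess
from pathlib import Path

# URL taken from the README's clone command.
REPO_URL = "https://github.com/openaicellular/oaic.git"


def build_steps(repo_url=REPO_URL, workdir="oaic"):
    """Return the README commands as (argv, cwd) pairs, in order.

    Assumption: every command after the clone runs inside `workdir`.
    """
    return [
        (["git", "clone", repo_url, workdir], None),
        (["git", "submodule", "update", "--init", "--recursive"], workdir),
        # The README uses `sudo -H python3 -m pip ...`; sudo is omitted here.
        (["python3", "-m", "pip", "install", "-r", "requirements.txt"], workdir),
        (["make"], workdir),
    ]


def run_steps(steps, dry_run=True):
    """Print each command; execute it only when dry_run is False."""
    for argv, cwd in steps:
        prefix = f"(in {cwd}) " if cwd else ""
        print(prefix + " ".join(argv))
        if not dry_run:
            # check=True stops at the first failing command.
            subprocess.run(argv, cwd=cwd, check=True)


def docs_built(workdir="oaic"):
    """Report whether the output directory named in the README exists."""
    return (Path(workdir) / "docs" / "build" / "html").is_dir()


if __name__ == "__main__":
    # Dry run by default: only prints the commands.
    run_steps(build_steps())
```

Calling `run_steps(build_steps(), dry_run=False)` would execute the commands for real, after which `docs_built()` checks for the `docs/build/html` directory.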

Alternative AI tools for oaic

Similar Open Source Tools

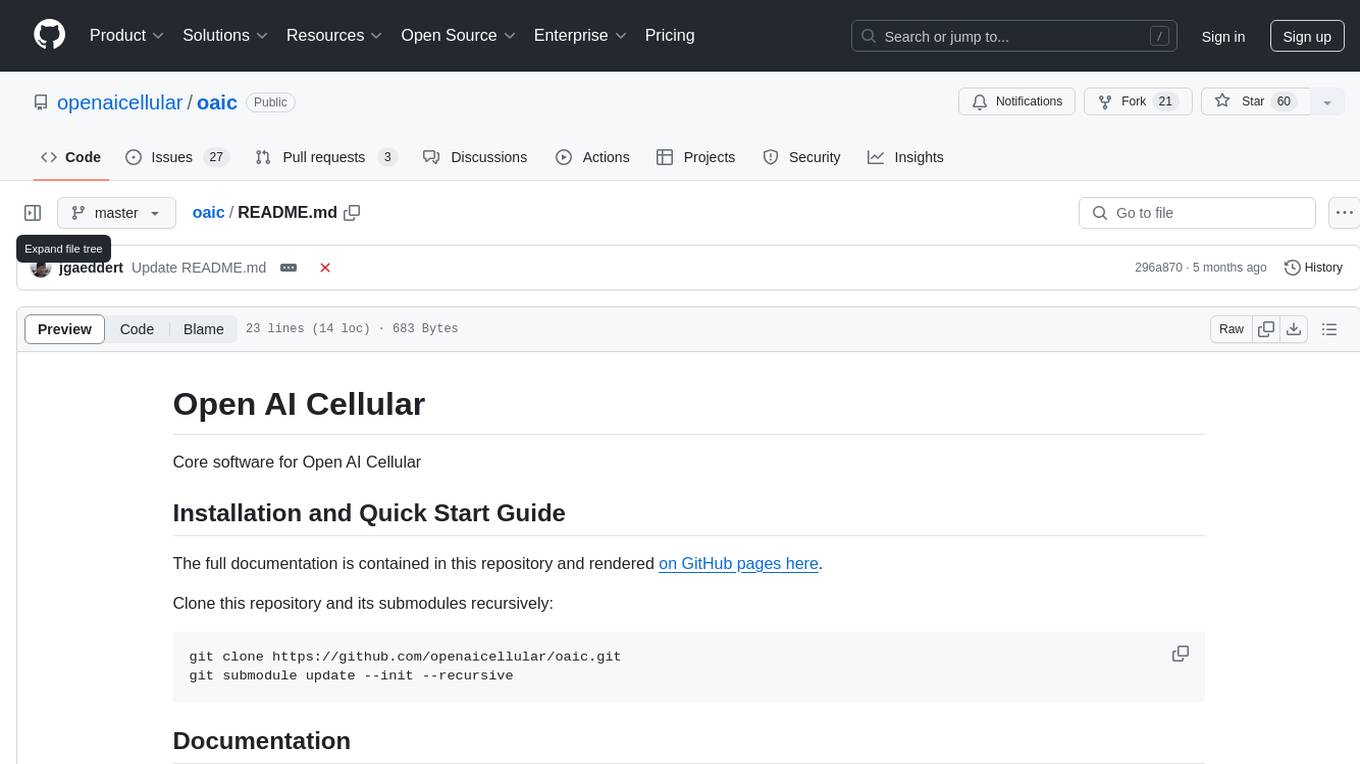

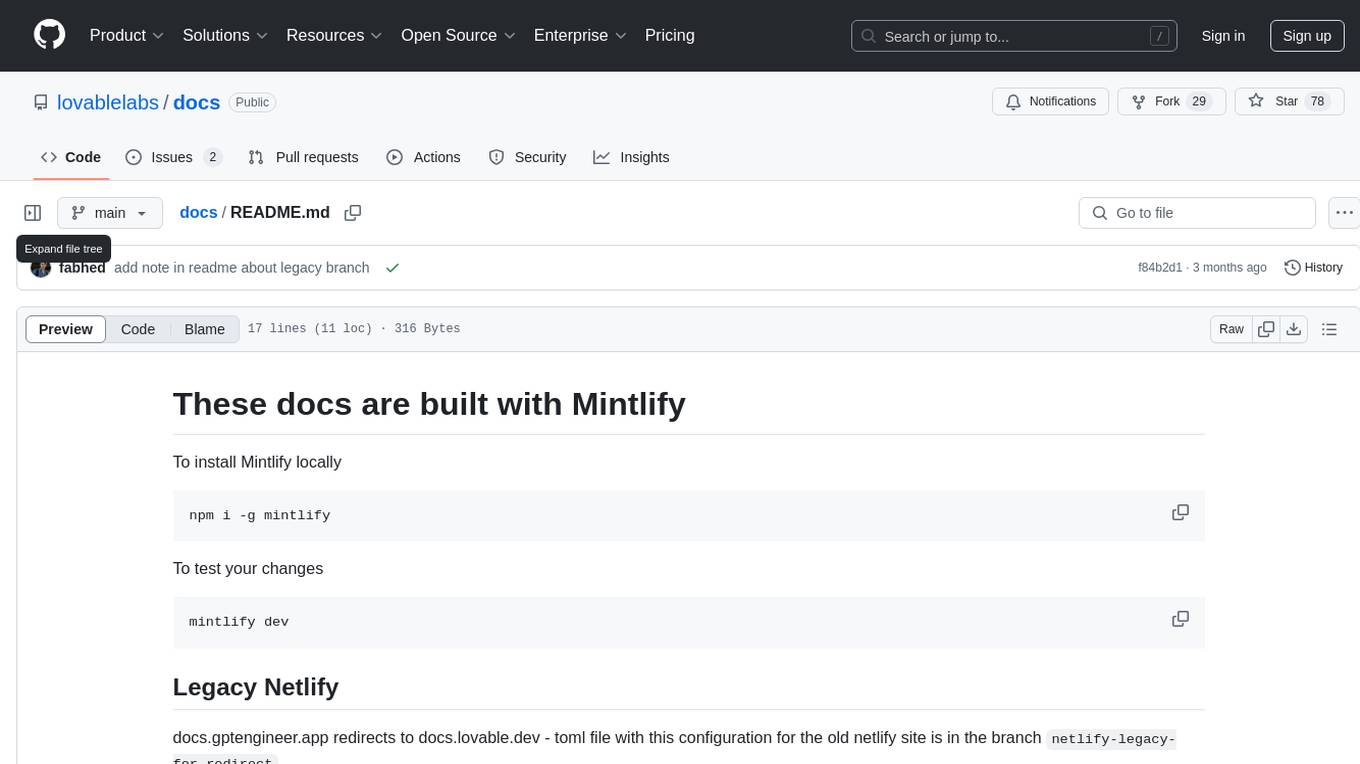

docs

This repository contains documentation for a tool called Mintlify. It provides instructions on how to install Mintlify locally and test changes. Additionally, it includes information on redirecting from an old Netlify site to a new one using a toml file configuration. The documentation is built using Mintlify.

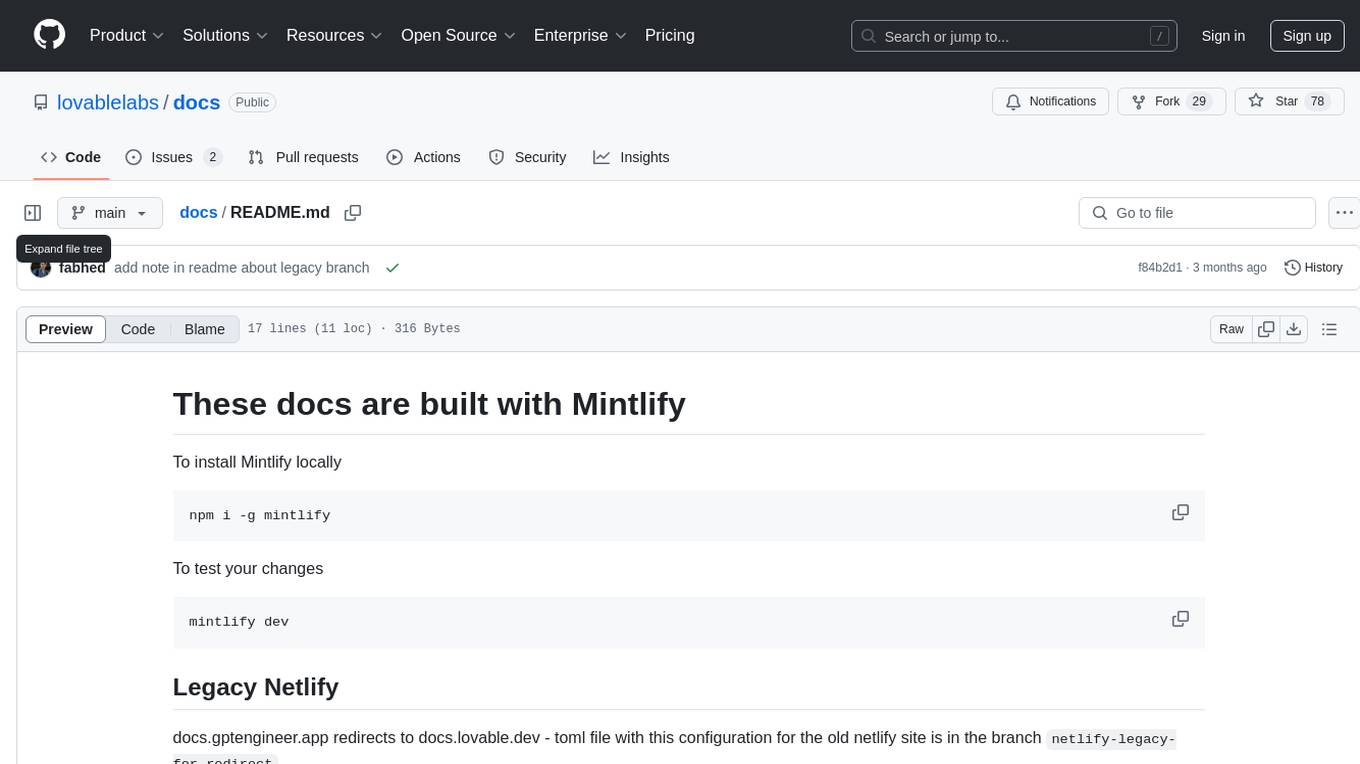

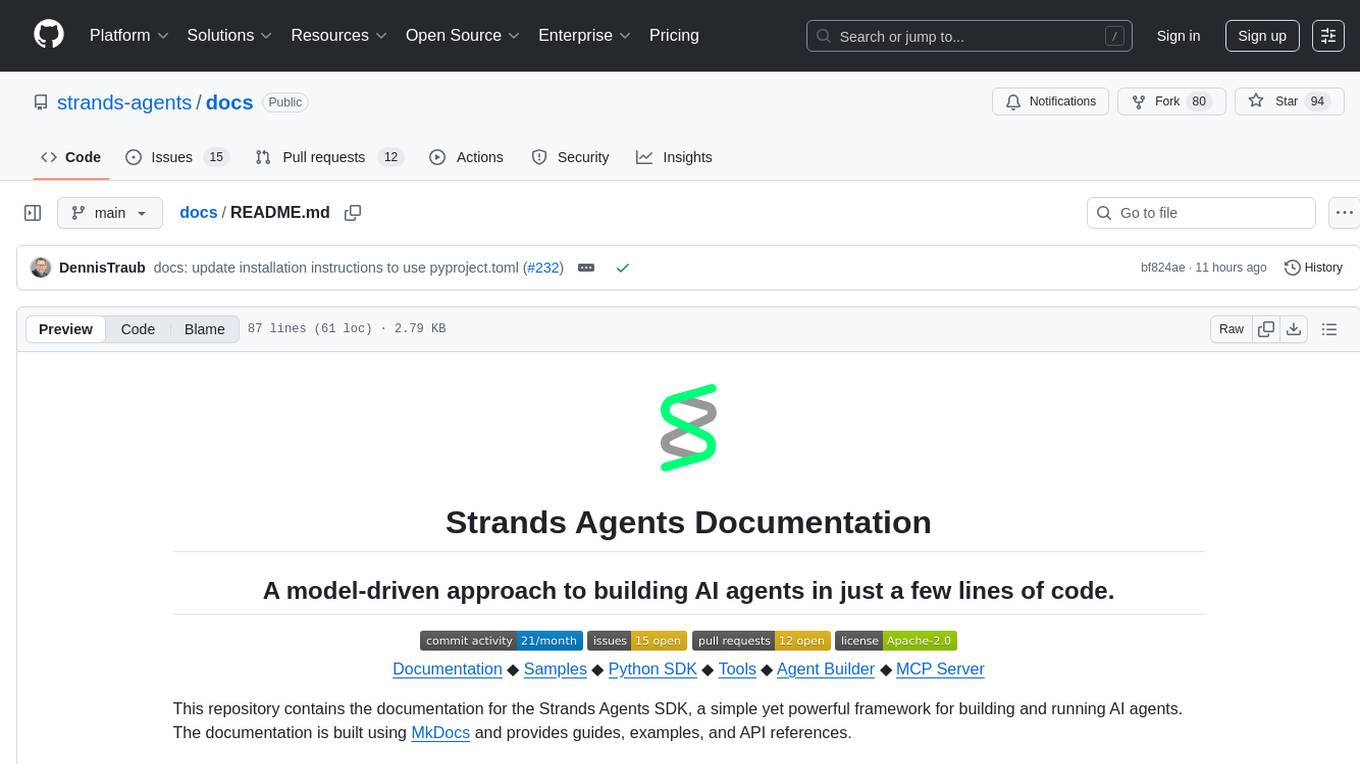

docs

This repository contains the documentation for the Strands Agents SDK, a simple yet powerful framework for building and running AI agents. The documentation is built using MkDocs and provides guides, examples, and API references. The official documentation is available online at: https://strandsagents.com.

airavata

Apache Airavata is a software framework for executing and managing computational jobs on distributed computing resources. It supports local clusters, supercomputers, national grids, academic and commercial clouds. Airavata utilizes service-oriented computing, distributed messaging, and workflow composition. It includes a server package with an API, client SDKs, and a general-purpose UI implementation called Apache Airavata Django Portal.

airflow-site

This repository contains the source code for the Apache Airflow website, including directories for archived documentation versions, landing pages, license templates, and the Sphinx theme. To work on the site locally, users need to install coreutils, Node.js, NPM, and HUGO, and run specific scripts provided in the repository. Contributors can refer to the contributor's guide for detailed instructions on how to contribute to the website.

rai

This repository contains core sources related to Robotics & AI. It serves as a submodule in integrated projects, providing a minimal Ubuntu-specific build system and development tests. The code originated around 2004 in Edinburgh and has grown over the years to encompass various functionalities for Robotics, ML, and AI. Users are advised to explore example projects using this bare code for a better understanding of its capabilities.

describer

Describer is a tool that analyzes codebases using AI to generate architectural overviews, documentation, explanations, bug reports, and more. It scans all files in a directory and uses Google's Gemini AI to provide insights such as markdown architectural overviews, codebase summaries, code pattern analysis, codebase structure documentation, bug identification, and test idea generation. The tool respects .gitignore rules by default but allows users to include/exclude specific files or patterns for analysis.

sdxl-lightning-demo-app

This repository contains a demo application showcasing the usage of the SDXL Lightning API by fal.ai. The application also demonstrates the functionality of the fal.realtime client. To get started, users need to have a Fal AI API key for model access. The setup involves adding the API key to the .env.local file, installing dependencies using 'npm install', and running the application with 'npm run dev'.

brokk

Brokk is a code assistant tool named after the Norse god of the forge. It is designed to understand code semantically, enabling LLMs to work effectively on large codebases. Users can sign up at Brokk.ai, install jbang, and follow instructions to run Brokk. The tool uses Gradle with Scala support and requires JDK 21 or newer for building. Brokk aims to enhance code comprehension and productivity by providing semantic understanding of code.

eShopSupport

eShopSupport is a sample .NET application showcasing common use cases and development practices for building AI solutions in .NET, specifically Generative AI. It demonstrates a customer support application for an e-commerce website using a services-based architecture with .NET Aspire. The application includes support for text classification, sentiment analysis, text summarization, synthetic data generation, and chat bot interactions. It also showcases development practices such as developing solutions locally, evaluating AI responses, leveraging Python projects, and deploying applications to the Cloud.

pyvespa

Vespa is a scalable open-source serving engine that enables users to store, compute, and rank big data at user serving time. Pyvespa provides a Python API to Vespa, allowing users to create, modify, deploy, and interact with running Vespa instances. The library's primary purpose is to facilitate faster prototyping and familiarization with Vespa features.

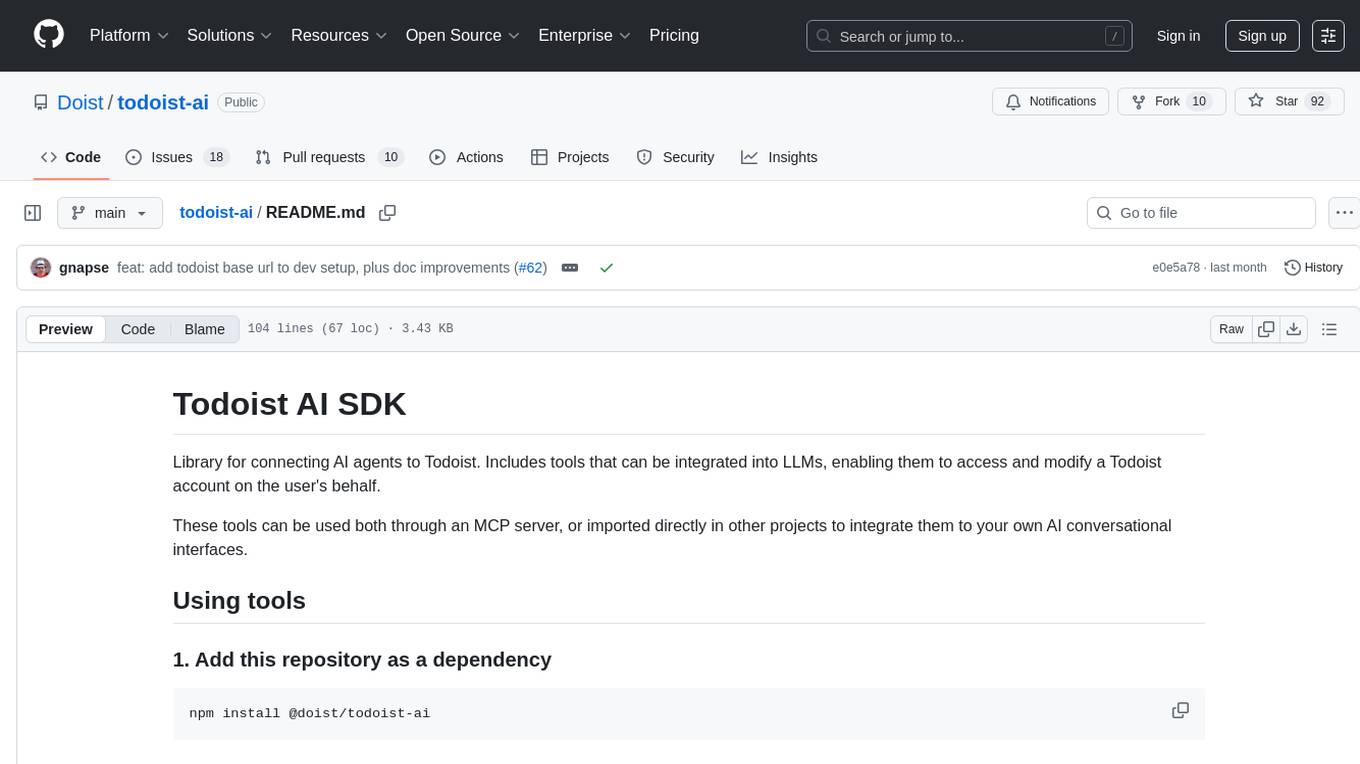

todoist-ai

Library for connecting AI agents to Todoist, enabling them to access and modify a Todoist account on the user's behalf. Tools can be used through an MCP server or integrated into other projects for AI conversational interfaces. Reusable tools allow for complete workflows, balancing flexibility and efficiency for LLMs. Early-stage project with more tools planned. Designed to provide a small set of tools for various AI interfaces.

batteries-included

Batteries Included is an all-in-one platform for building and running modern applications, simplifying cloud infrastructure complexity. It offers production-ready capabilities through an intuitive interface, focusing on automation, security, and enterprise-grade features. The platform includes databases like PostgreSQL and Redis, AI/ML capabilities with Jupyter notebooks, web services deployment, security features like SSL/TLS management, and monitoring tools like Grafana dashboards. Batteries Included is designed to streamline infrastructure setup and management, allowing users to concentrate on application development without dealing with complex configurations.

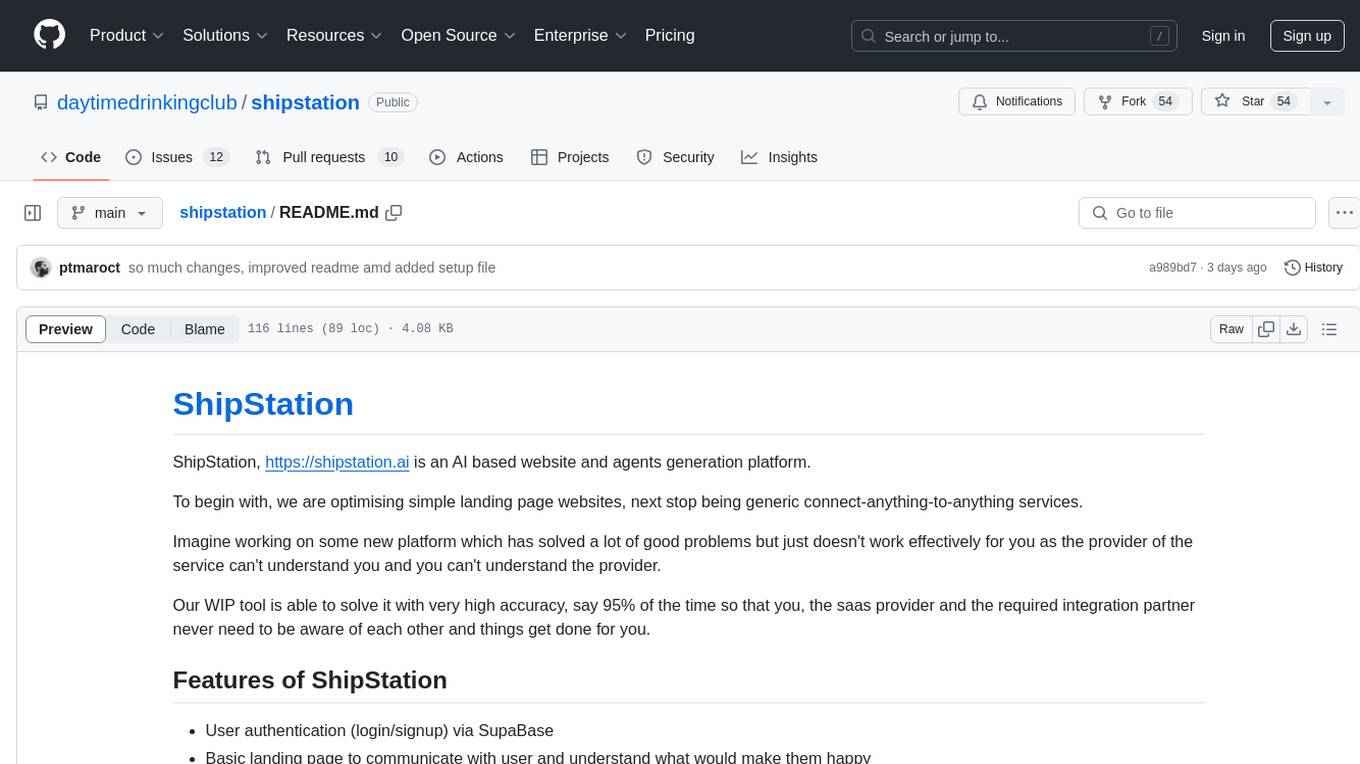

shipstation

ShipStation is an AI-based website and agents generation platform that optimizes landing page websites and generic connect-anything-to-anything services. It enables seamless communication between service providers and integration partners, offering features like user authentication, project management, code editing, payment integration, and real-time progress tracking. The project architecture includes server-side (Node.js) and client-side (React with Vite) components. Prerequisites include Node.js, npm or yarn, Anthropic API key, Supabase account, Tavily API key, and Razorpay account. Setup instructions involve cloning the repository, setting up Supabase, configuring environment variables, and starting the backend and frontend servers. Users can access the application through the browser, sign up or log in, create landing pages or portfolios, and get websites stored in an S3 bucket. Deployment to Heroku involves building the client project, committing changes, and pushing to the main branch. Contributions to the project are encouraged, and the license encourages doing good.

hide

Hide is a headless IDE that provides containerized development environments for codebases and exposes APIs for agents to interact with them. It spins up devcontainers, installs dependencies, and offers APIs for codebase interaction. Hide can be used to create custom toolkits or utilize pre-built toolkits for popular frameworks like Langchain. The Hide Runtime manages development containers and executes tasks, while the SDK provides APIs and toolkits for coding agents to interact with the codebase. Installation can be done via Homebrew or building from source, with Docker Engine as a prerequisite. The tool offers flexibility in managing development environments and simplifies codebase interaction for developers.

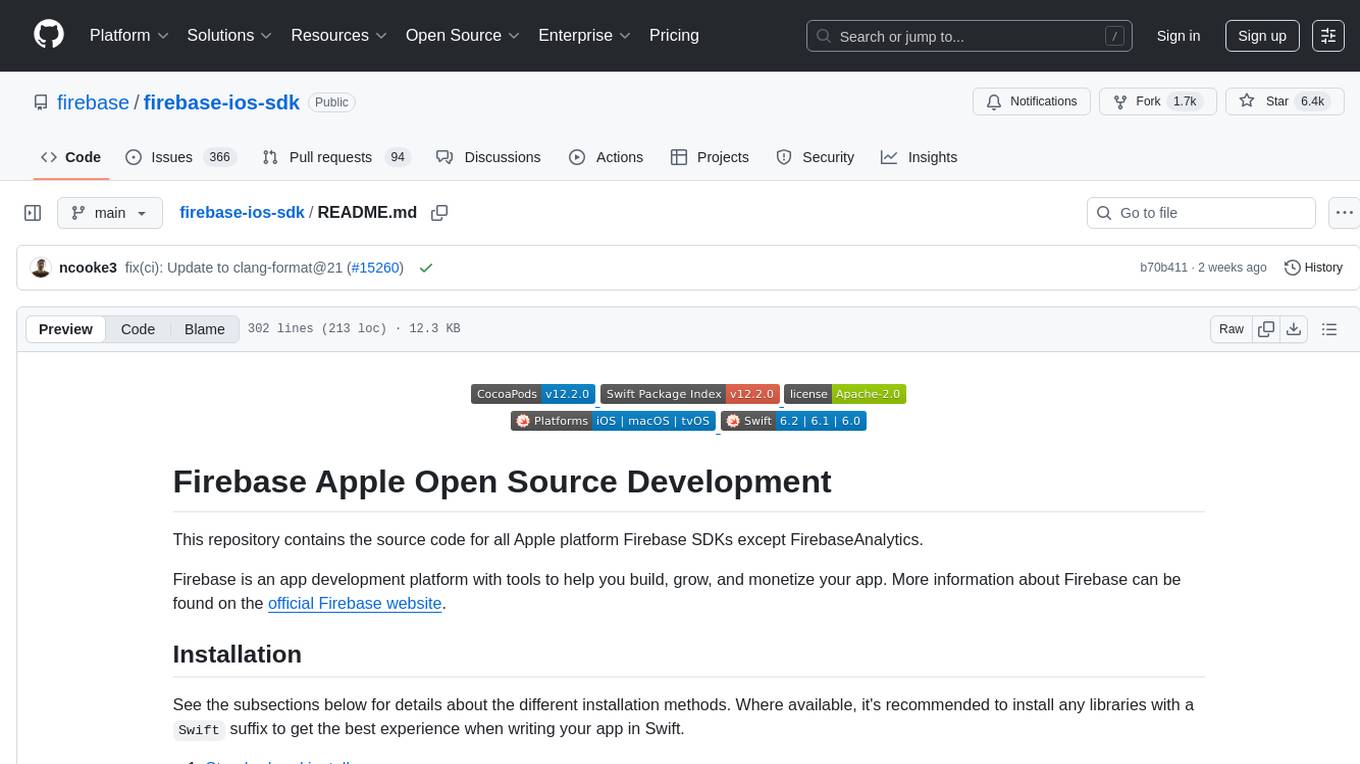

firebase-ios-sdk

This repository contains the source code for all Apple platform Firebase SDKs except FirebaseAnalytics. Firebase is an app development platform with tools to help you build, grow, and monetize your app. It provides installation methods like Standard pod install, Swift Package Manager, Installing from the GitHub repo, and Experimental Carthage. Development requires Xcode 16.2 or later, and supports CocoaPods and Swift Package Manager. The repository includes instructions for adding a new Firebase Pod, managing headers and imports, code formatting, running unit tests, running sample apps, and generating coverage reports. Specific component instructions are provided for Firebase AI Logic, Firebase Auth, Firebase Database, Firebase Dynamic Links, Firebase Performance Monitoring, Firebase Storage, and Push Notifications. Firebase also offers beta support for macOS, Catalyst, and tvOS, with community support for visionOS and watchOS.

For similar tasks

docs

This repository contains documentation for a tool called Mintlify. It provides instructions on how to install Mintlify locally and test changes. Additionally, it includes information on redirecting from an old Netlify site to a new one using a toml file configuration. The documentation is built using Mintlify.

farfalle

Farfalle is an open-source AI-powered search engine that allows users to run their own local LLM or utilize the cloud. It provides a tech stack including Next.js for frontend, FastAPI for backend, Tavily for search API, Logfire for logging, and Redis for rate limiting. Users can get started by setting up prerequisites like Docker and Ollama, and obtaining API keys for Tavily, OpenAI, and Groq. The tool supports models like llama3, mistral, and gemma. Users can clone the repository, set environment variables, run containers using Docker Compose, and deploy the backend and frontend using services like Render and Vercel.

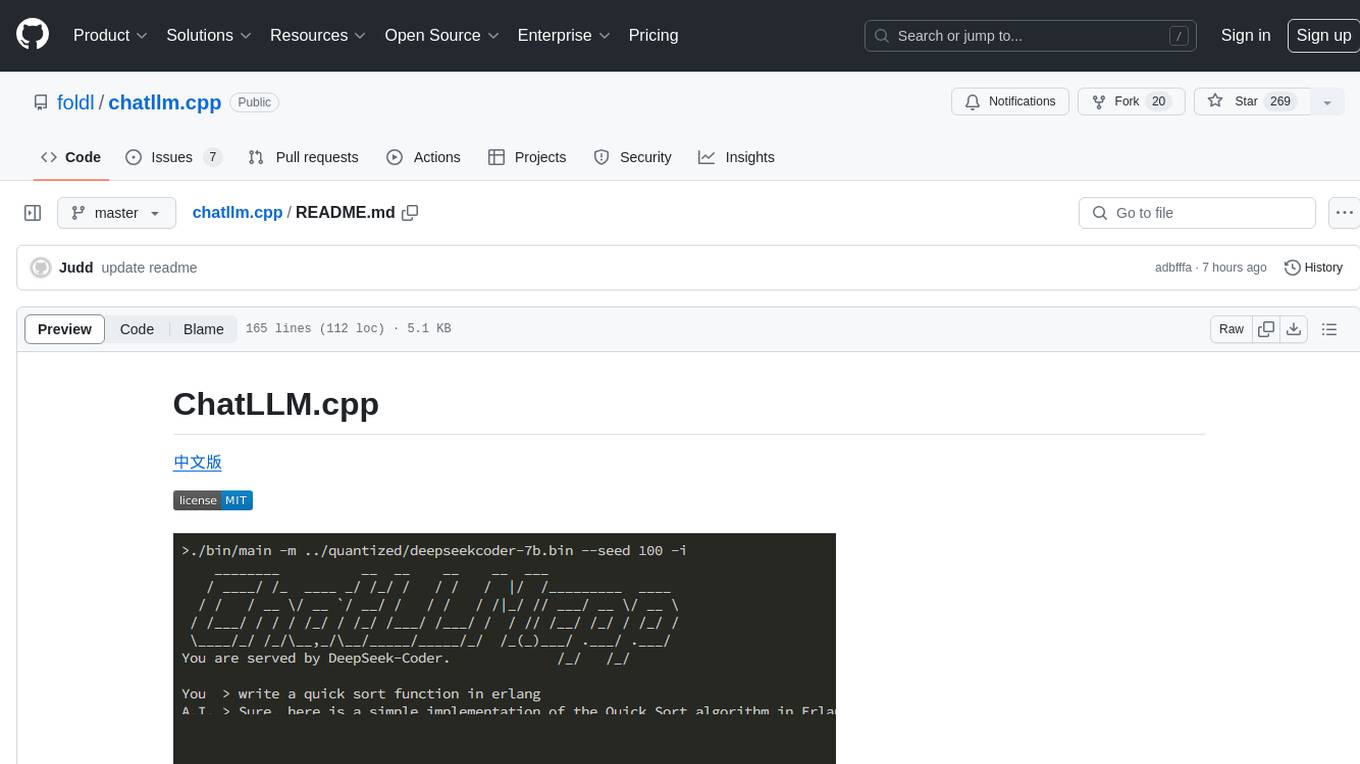

chatllm.cpp

ChatLLM.cpp is a pure C++ implementation for real-time chatting with RAG on your computer. It supports inference of models ranging from less than 1B to more than 300B parameters. It provides accelerated, memory-efficient CPU inference with quantization, an optimized KV cache, and parallel computing. It supports streaming generation with a typewriter effect and continuous chatting with virtually unlimited content length. ChatLLM.cpp also offers Retrieval Augmented Generation (RAG), LoRA, Python/JavaScript/C bindings, a web demo, and more. Users can clone the repository, quantize models, build the project using make or CMake, and run quantized models for interactive chatting.

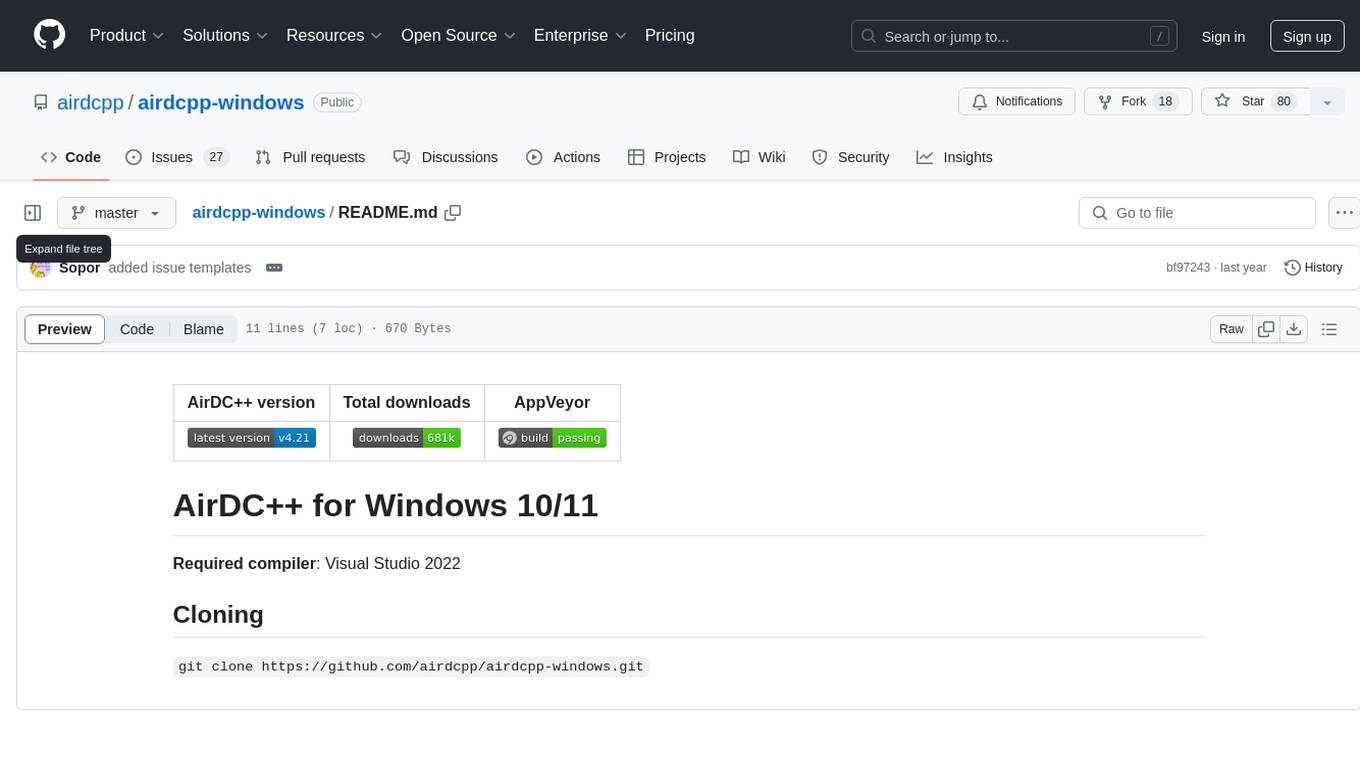

airdcpp-windows

AirDC++ for Windows 10/11 is a file sharing client with a focus on ease of use and performance. It is designed to provide a seamless experience for users looking to share and download files over the internet. The tool is built using Visual Studio 2022 and offers a range of features to enhance the file sharing process. Users can easily clone the repository to access the latest version and contribute to the development of the tool.

CJA_Comprehensive_Jailbreak_Assessment

This public repository accompanies the paper 'Comprehensive Assessment of Jailbreak Attacks Against LLMs'. It provides a Python-based method for labeling results and lets users submit evaluation results to the leaderboard. The full code will be released after the paper is accepted.

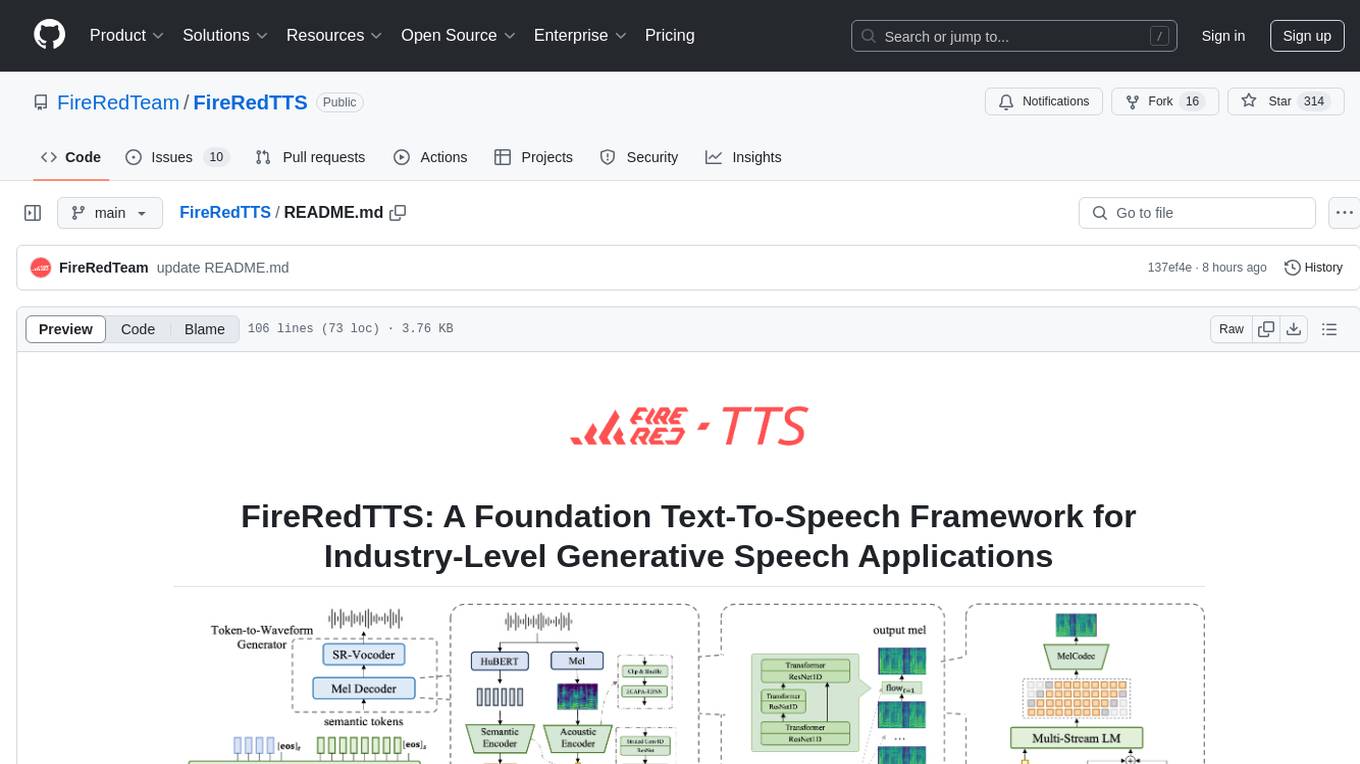

FireRedTTS

FireRedTTS is a foundation text-to-speech framework designed for industry-level generative speech applications. It offers a rich-punctuation model with expanded punctuation coverage and enhanced audio production consistency. The tool provides pre-trained checkpoints, inference code, and an interactive demo space. Users can clone the repository, create a conda environment, download required model files, and utilize the tool for synthesizing speech in various languages. FireRedTTS aims to enhance stability and provide controllable human-like speech generation capabilities.

lexido

Lexido is an assistant for the Linux command line, designed to boost your productivity and efficiency. Powered by Gemini Pro 1.0 through the free API, Lexido suggests commands based on your prompts and, importantly, your current environment. Whether you're installing software, managing files, or configuring system settings, Lexido streamlines the process, making it faster and more intuitive.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

* Set LLM usage limits for users on different pricing tiers
* Track LLM usage on a per user and per organization basis
* Block or redact requests containing PII
* Improve LLM reliability with failovers, retries and caching
* Distribute API keys with rate limits and cost limits for internal development/production use cases
* Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.