aiexe

aiexe, the cutting-edge command-line interface (CLI/GUI) tool

Stars: 150

aiexe is a cutting-edge command-line interface (CLI) and graphical user interface (GUI) tool that integrates powerful AI capabilities directly into your terminal or desktop. It is designed for developers, tech enthusiasts, and anyone interested in AI-powered automation. aiexe provides an easy-to-use yet robust platform for executing complex tasks with just a few commands. Users can harness the power of various AI models from OpenAI, Anthropic, Ollama, Gemini, and GROQ to boost productivity and enhance decision-making processes.

README:

kstost/aiexe 프로젝트는 이제 kstost/aiexeauto-gui 리포지토리로 옮겨졌습니다.

최신 업데이트와 개발 진행 상황을 확인하려면 새로운 리포지토리(https://github.com/kstost/aiexeauto-gui)를 방문해 주세요.

기존 리포지토리는 더 이상 유지되지 않으며, 모든 작업은 새 리포지토리에서 이루어질 예정입니다. 감사합니다!

The kstost/aiexe project has been moved to the kstost/aiexeauto-gui repository.

Please visit the new repository (https://github.com/kstost/aiexeauto-gui) for the latest updates and development progress.

This existing repository will no longer be maintained, and all future work will take place in the new repository. Thank you!

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for aiexe

Similar Open Source Tools

aiexe

aiexe is a cutting-edge command-line interface (CLI) and graphical user interface (GUI) tool that integrates powerful AI capabilities directly into your terminal or desktop. It is designed for developers, tech enthusiasts, and anyone interested in AI-powered automation. aiexe provides an easy-to-use yet robust platform for executing complex tasks with just a few commands. Users can harness the power of various AI models from OpenAI, Anthropic, Ollama, Gemini, and GROQ to boost productivity and enhance decision-making processes.

sycamore

Sycamore is a conversational search and analytics platform for complex unstructured data, such as documents, presentations, transcripts, embedded tables, and internal knowledge repositories. It retrieves and synthesizes high-quality answers through bringing AI to data preparation, indexing, and retrieval. Sycamore makes it easy to prepare unstructured data for search and analytics, providing a toolkit for data cleaning, information extraction, enrichment, summarization, and generation of vector embeddings that encapsulate the semantics of data. Sycamore uses your choice of generative AI models to make these operations simple and effective, and it enables quick experimentation and iteration. Additionally, Sycamore uses OpenSearch for indexing, enabling hybrid (vector + keyword) search, retrieval-augmented generation (RAG) pipelining, filtering, analytical functions, conversational memory, and other features to improve information retrieval.

spring-ai-alibaba

Spring AI Alibaba is an AI application framework for Java developers that seamlessly integrates with Alibaba Cloud QWen LLM services and cloud-native infrastructures. It provides features like support for various AI models, high-level AI agent abstraction, function calling, and RAG support. The framework aims to simplify the development, evaluation, deployment, and observability of AI native Java applications. It offers open-source framework and ecosystem integrations to support features like prompt template management, event-driven AI applications, and more.

freegenius

FreeGenius AI is an ambitious project offering a comprehensive suite of AI solutions that mirror the capabilities of LetMeDoIt AI. It is designed to engage in intuitive conversations, execute codes, provide up-to-date information, and perform various tasks. The tool is free, customizable, and provides access to real-time data and device information. It aims to support offline and online backends, open-source large language models, and optional API keys. Users can use FreeGenius AI for tasks like generating tweets, analyzing audio, searching financial data, checking weather, and creating maps.

web-ai-demos

Collection of client-side AI demos showcasing various AI applications using Chrome's built-in AI, Transformers.js, and Google's Gemma model through MediaPipe. Demos include weather description generation, summarization API, performance tips, utility functions, sentiment analysis, toxicity assessment, and streaming content using Server Sent Events.

jax-ai-stack

JAX AI Stack is a suite of libraries built around the JAX Python package for array-oriented computation and program transformation. It provides a growing ecosystem of packages for specialized numerical computing across various domains, encouraging modularity and innovation in domain-specific libraries. The stack includes core packages like JAX, flax for building neural networks, ml_dtypes for NumPy dtype extensions, optax for gradient processing and optimization, and orbax for checkpointing and persistence utilities. Optional packages like grain data loader and tensorflow are also available for installation.

aphrodite-engine

Aphrodite is an inference engine optimized for serving HuggingFace-compatible models at scale. It leverages vLLM's Paged Attention technology to deliver high-performance model inference for multiple concurrent users. The engine supports continuous batching, efficient key/value management, optimized CUDA kernels, quantization support, distributed inference, and modern samplers. It can be easily installed and launched, with Docker support for deployment. Aphrodite requires Linux or Windows OS, Python 3.8 to 3.12, and CUDA >= 11. It is designed to utilize 90% of GPU VRAM but offers options to limit memory usage. Contributors are welcome to enhance the engine.

genai-factory

GenAI Factory is a collection of end-to-end blueprints to deploy generative AI infrastructures in Google Cloud Platform (GCP), following security best practices. It embraces Infrastructure as Code (IaC) best practices, implements infrastructure in Terraform, and follows the least-privilege principle. The tool is compatible with Cloud Foundation Fabric FAST project-factory and application templates, allowing users to deploy various AI applications and systems on GCP.

aphrodite-engine

Aphrodite is the official backend engine for PygmalionAI, serving as the inference endpoint for the website. It allows serving Hugging Face-compatible models with fast speeds. Features include continuous batching, efficient K/V management, optimized CUDA kernels, quantization support, distributed inference, and 8-bit KV Cache. The engine requires Linux OS and Python 3.8 to 3.12, with CUDA >= 11 for build requirements. It supports various GPUs, CPUs, TPUs, and Inferentia. Users can limit GPU memory utilization and access full commands via CLI.

easydist

EasyDist is an automated parallelization system and infrastructure designed for multiple ecosystems. It offers usability by making parallelizing training or inference code effortless with just a single line of change. It ensures ecological compatibility by serving as a centralized source of truth for SPMD rules at the operator-level for various machine learning frameworks. EasyDist decouples auto-parallel algorithms from specific frameworks and IRs, allowing for the development and benchmarking of different auto-parallel algorithms in a flexible manner. The architecture includes MetaOp, MetaIR, and the ShardCombine Algorithm for SPMD sharding rules without manual annotations.

sdkit

sdkit (stable diffusion kit) is an easy-to-use library for utilizing Stable Diffusion in AI Art projects. It includes features like ControlNets, LoRAs, Textual Inversion Embeddings, GFPGAN, CodeFormer for face restoration, RealESRGAN for upscaling, k-samplers, support for custom VAEs, NSFW filter, model-downloader, parallel GPU support, and more. It offers a model database, auto-scanning for malicious models, and various optimizations. The API consists of modules for loading models, generating images, filters, model merging, and utilities, all managed through the sdkit.Context object.

azure-openai-service-proxy

The Azure OpenAI Proxy service aims to simplify access to an Azure OpenAI `Playground-like` experience by supporting Azure OpenAI SDKs, LangChain, and REST endpoints for developer events, workshops, and hackathons. Users can access the service using a timebound `event code`. The solution documentation is available for reference.

lmstudio-js

LM Studio Client SDK lmstudio-ts is LM Studio's official JavaScript/TypeScript client SDK. It allows you to use LLMs to respond in chats or predict text completions, define functions as tools, and turn LLMs into autonomous agents that run completely locally, load, configure, and unload models from memory, supports both browser and any Node-compatible environments, generate embeddings for text, and more! Why use `lmstudio-js` over `openai` sdk? Open AI's SDK is designed to use with Open AI's proprietary models. As such, it is missing many features that are essential for using LLMs in a local environment, such as managing loading and unloading models from memory, configuring load parameters (context length, gpu offload settings, etc.), speculative decoding, getting information (such as context length, model size, etc.) about a model, and more. In addition, while `openai` sdk is automatically generated, `lmstudio-js` is designed from ground-up to be clean and easy to use for TypeScript/JavaScript developers.

soul-engine

OPEN SOULS offers developers clean, simple, and extensible abstractions for directing the cognitive processes of large language models (LLMs), streamlining the creation of more effective and engaging AI souls. This repo is the public, monorepo hosting our open source core, our command line tool, and code for interacting with the hosted Soul Engine. AI Souls are agentic and embodied digital beings, one day comprising thousands of mental processes (managed by the Soul Engine). Unlike traditional chatbots, this code will give digital souls personality, drive, ego, and will.

llm-search

pyLLMSearch is an advanced RAG system that offers a convenient question-answering system with a simple YAML-based configuration. It enables interaction with multiple collections of local documents, with improvements in document parsing, hybrid search, chat history, deep linking, re-ranking, customizable embeddings, and more. The package is designed to work with custom Large Language Models (LLMs) from OpenAI or installed locally. It supports various document formats, incremental embedding updates, dense and sparse embeddings, multiple embedding models, 'Retrieve and Re-rank' strategy, HyDE (Hypothetical Document Embeddings), multi-querying, chat history, and interaction with embedded documents using different models. It also offers simple CLI and web interfaces, deep linking, offline response saving, and an experimental API.

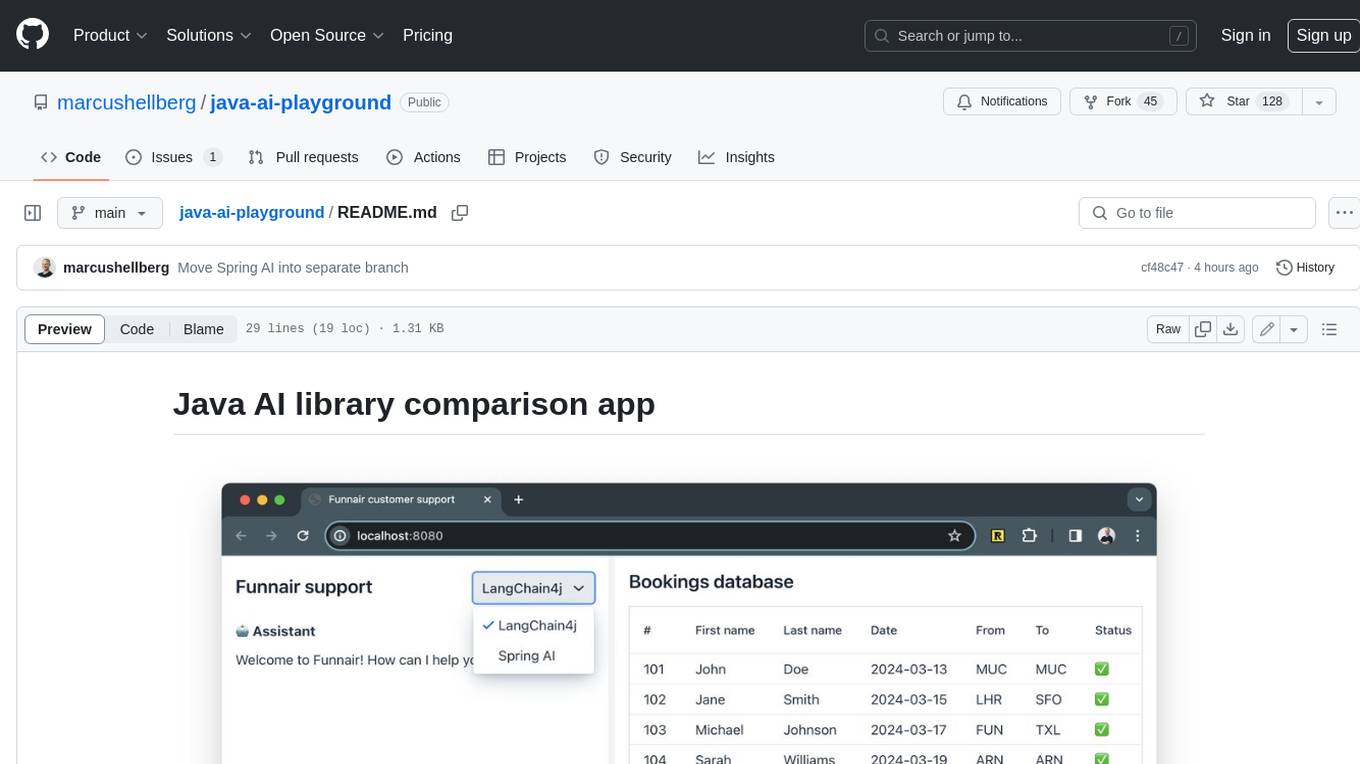

java-ai-playground

This AI-powered customer support application has access to terms and conditions (retrieval augmented generation, RAG), can access tools (Java methods) to perform actions, and uses an LLM to interact with the user. The application includes implementations for LangChain4j in the `main` branch and Spring AI in the `spring-ai` branch. The UI is built using Vaadin Hilla and the backend is built using Spring Boot.

For similar tasks

aiexe

aiexe is a cutting-edge command-line interface (CLI) and graphical user interface (GUI) tool that integrates powerful AI capabilities directly into your terminal or desktop. It is designed for developers, tech enthusiasts, and anyone interested in AI-powered automation. aiexe provides an easy-to-use yet robust platform for executing complex tasks with just a few commands. Users can harness the power of various AI models from OpenAI, Anthropic, Ollama, Gemini, and GROQ to boost productivity and enhance decision-making processes.

AGiXT

AGiXT is a dynamic Artificial Intelligence Automation Platform engineered to orchestrate efficient AI instruction management and task execution across a multitude of providers. Our solution infuses adaptive memory handling with a broad spectrum of commands to enhance AI's understanding and responsiveness, leading to improved task completion. The platform's smart features, like Smart Instruct and Smart Chat, seamlessly integrate web search, planning strategies, and conversation continuity, transforming the interaction between users and AI. By leveraging a powerful plugin system that includes web browsing and command execution, AGiXT stands as a versatile bridge between AI models and users. With an expanding roster of AI providers, code evaluation capabilities, comprehensive chain management, and platform interoperability, AGiXT is consistently evolving to drive a multitude of applications, affirming its place at the forefront of AI technology.

claude.vim

Claude.vim is a Vim plugin that integrates Claude, an AI pair programmer, into your Vim workflow. It allows you to chat with Claude about what to build or how to debug problems, and Claude offers opinions, proposes modifications, or even writes code. The plugin provides a chat/instruction-centric interface optimized for human collaboration, with killer features like access to chat history and vimdiff interface. It can refactor code, modify or extend selected pieces of code, execute complex tasks by reading documentation, cloning git repositories, and more. Note that it is early alpha software and expected to rapidly evolve.

mistreevous

Mistreevous is a library written in TypeScript for Node and browsers, used to declaratively define, build, and execute behaviour trees for creating complex AI. It allows defining trees with JSON or a minimal DSL, providing in-browser editor and visualizer. The tool offers methods for tree state, stepping, resetting, and getting node details, along with various composite, decorator, leaf nodes, callbacks, guards, and global functions/subtrees. Version history includes updates for node types, callbacks, global functions, and TypeScript conversion.

project_alice

Alice is an agentic workflow framework that integrates task execution and intelligent chat capabilities. It provides a flexible environment for creating, managing, and deploying AI agents for various purposes, leveraging a microservices architecture with MongoDB for data persistence. The framework consists of components like APIs, agents, tasks, and chats that interact to produce outputs through files, messages, task results, and URL references. Users can create, test, and deploy agentic solutions in a human-language framework, making it easy to engage with by both users and agents. The tool offers an open-source option, user management, flexible model deployment, and programmatic access to tasks and chats.

flock

Flock is a workflow-based low-code platform that enables rapid development of chatbots, RAG applications, and coordination of multi-agent teams. It offers a flexible, low-code solution for orchestrating collaborative agents, supporting various node types for specific tasks, such as input processing, text generation, knowledge retrieval, tool execution, intent recognition, answer generation, and more. Flock integrates LangChain and LangGraph to provide offline operation capabilities and supports future nodes like Conditional Branch, File Upload, and Parameter Extraction for creating complex workflows. Inspired by StreetLamb, Lobe-chat, Dify, and fastgpt projects, Flock introduces new features and directions while leveraging open-source models and multi-tenancy support.

RoboMatrix

RoboMatrix is a skill-centric hierarchical framework for scalable robot task planning and execution in an open-world environment. It provides a structured approach to robot task execution using a combination of hardware components, environment configuration, installation procedures, and data collection methods. The framework is developed using the ROS2 framework on Ubuntu and supports robots from DJI's RoboMaster series. Users can follow the provided installation guidance to set up RoboMatrix and utilize it for various tasks such as data collection, task execution, and dataset construction. The framework also includes a supervised fine-tuning dataset and aims to optimize communication and release additional components in the future.

agentscript

AgentScript is an open-source framework for building AI agents that think in code. It prompts a language model to generate JavaScript code, which is then executed in a dedicated runtime with resumability, state persistence, and interactivity. The framework allows for abstract task execution without needing to know all the data beforehand, making it flexible and efficient. AgentScript supports tools, deterministic functions, and LLM-enabled functions, enabling dynamic data processing and decision-making. It also provides state management and human-in-the-loop capabilities, allowing for pausing, serialization, and resumption of execution.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.