RoboMatrix

The Official Implementation of RoboMatrix

Stars: 73

RoboMatrix is a skill-centric hierarchical framework for scalable robot task planning and execution in an open-world environment. It provides a structured approach to robot task execution using a combination of hardware components, environment configuration, installation procedures, and data collection methods. The framework is developed using the ROS2 framework on Ubuntu and supports robots from DJI's RoboMaster series. Users can follow the provided installation guidance to set up RoboMatrix and utilize it for various tasks such as data collection, task execution, and dataset construction. The framework also includes a supervised fine-tuning dataset and aims to optimize communication and release additional components in the future.

README:

English | 简体中文

RoboMatrix: A Skill-centric Hierarchical Framework for Scalable Robot Task Planning and Execution in Open-World

📝Paper | 🌍Project Page | 🛢️Data

- [2025/01/08] 🔥 We release data collection method.

- [2024/12/04] 🔥 We release the RoboMatrix supervised fine-tuning (SFT) dataset containing 1,500 high-quality human-annotated demonstration videos.

We use robots from DJI’s RoboMaster series as the hardware platform, including the Engineering Robot (EP) and the Warrior Robot (S1). These two forms of robots share some common components, including the mobile chassis, monocular RGB camera, audio module, and controller. Additionally, each robot is equipped with a unique set of components to perform specific tasks, such as the target shooting capability of the S1 robot and the target grasping capability of the EP robot.

We modified the EP robot by mounting the camera above the robot to prevent the camera’s viewpoint from changing with the movement of the robotic arm. See 3D_Printing for the parts of the designed camera mount.

We use BEITONG ASURA 2PRO+ GAMEPAD NEARLINK VERSION as the controller for robot teleoperation.

We developed RoboMatrix using the ROS2 framework on Ubuntu 20.04. You can follow the official installation guidance to complete the installation of the Foxy distro of ROS2 and the necessary tools. In addition, we passed the test on Ubuntu 22.04 (ROS2 Humble), which may provide some reference for you if you want to install RoboMatrix in a later version of Ubuntu.

We provide a general installation procedure for ROS2, this might give you some help. If you already have it installed on your system, please skip this step.

ROS2 Installation

Open a terminal, check weather your system supports UTF-8.

localeIf not support (no output in terminal), please install.

sudo locale-gen en_US en_US.UTF-8

sudo update-locale LC_ALL=en_US.UTF-8 LANG=en_US.UTF-8

export LANG=en_US.UTF-8Open a terminal, check weather your system supports Ubuntu Universe.

apt-cache policy | grep universeIf not support (no output in terminal), please install.

sudo apt install software-properties-common

sudo add-apt-repository universesudo apt update && sudo apt install curl gnupg2 lsb-release

sudo curl -sSL https://raw.githubusercontent.com/ros/rosdistro/master/ros.key -o /usr/share/keyrings/ros-archive-keyring.gpg

echo "deb [arch=$(dpkg --print-architecture) signed-by=/usr/share/keyrings/ros-archive-keyring.gpg] http://packages.ros.org/ros2/ubuntu $(lsb_release -cs) main" | sudo tee /etc/apt/sources.list.d/ros2.list > /dev/nullInstall the specified version of ROS2, using Foxy as an example.

sudo apt update

sudo apt install ros-foxy-desktopecho "source /opt/ros/foxy/setup.bash" >> ~/.bashrc

source .bashrcOpen a terminal, start talker node.

ros2 run demo_nodes_cpp talkerOpen a new terminal, start listener node.

ros2 run demo_nodes_cpp listenersudo apt install python3-colcon-common-extensionsgit clone https://github.com/WayneMao/RoboMatrix.git

cd ~/RoboMatrix && colcon buildInstall dependencies.

sudo apt install libopus-dev python3-pip

python3 -m pip install -U numpy numpy-quaternion pyyamlInstall SDK from source code.

python3 -m pip install git+https://github.com/jeguzzi/RoboMaster-SDK.git

python3 -m pip install git+https://github.com/jeguzzi/RoboMaster-SDK.git#"egg=libmedia_codec&subdirectory=lib/libmedia_codec"pip install -r requirements.txt

pip install torch==2.0.1 torchvision==0.15.2 torchaudio==2.0.2 --index-url https://download.pytorch.org/whl/cu118cd ~/RoboMatrix/robomatrix_client/robomatrix_client

git clone https://github.com/IDEA-Research/Grounding-DINO-1.5-API.git

cd Grounding-DINO-1.5-API

pip install -v -e .Download the RoboMaster official APP, follow the instructions to connect the robot to WiFi (only WiFi5), and connect the computer to the same WiFi to complete the connection.

source ~/RoboMatrixinstall/setup.bash

ros2 launch robomaster_ros collect_data.launch.py name:=example idx:=1 dir:=~/RoboMatrixDatasets| Parameter | Definition | Example |

|---|---|---|

| name | A custom task name | move_to_box |

| idx | The sequence number of the current episode of the task | 10 |

| dir | The folder where the data is saved | ~/MyDatasets |

NOTEs

- Make sure the robot is successfully connected to the specified WIFI before launching the launch file.

- Make sure the controller's button mode is XBOX, which you can view in the terminal. In the case of BEITONG, long press the

POWERbutton to switch. - Ensure that the robot initialization is complete before proceeding with the following operations.

By pressing the START button, the robot's status begins to be recorded and the other buttons on the handle are activated, allowing control of the robot's movement.

The control mode of the robot chassis is speed control. The RS axis controls the translation speed of the chassis, and the LT and RT axes control the rotation speed of the chassis.

The control mode of the robot arm is position control. The HAT key set changes the position of the end of the robot arm in the plane. Each press moves its position a fixed distance in the specified direction.

The gripper control is binarized. The A button controls the gripper open to the maximum, and the B button controls the gripper closed to the maximum.

Press the BACK button to save the data, then press the POWER button to clean the ROS2 node and wait for the video to finish saving.

Comming soon.

Comming soon.

- [ ] Package Docker

- [X] 🤗 Release Supervised Fine-tuning dataset

- [x] Optimize VLA ROS communication

- [ ] Open source VLA Skill model code

- [ ] Release VLA Skill model weights

- [ ] Open source Shooting code

If you find our work helpful, please cite us:

@article{mao2024robomatrix,

title={RoboMatrix: A Skill-centric Hierarchical Framework for Scalable Robot Task Planning and Execution in Open-World},

author={Mao, Weixin and Zhong, Weiheng and Jiang, Zhou and Fang, Dong and Zhang, Zhongyue and Lan, Zihan and Jia, Fan and Wang, Tiancai and Fan, Haoqiang and Yoshie, Osamu},

journal={arXiv preprint arXiv:2412.00171},

year={2024}

}- Implementation of Vision-Language-Action (VLA) skill model is based on LLaVA.

- RoboMatrix-ROS is based on official RoboMaster-SDK, modified RoboMaster-SDK and ROS2.

- Some additional libraries: Grounding-DINO-1.5, YOLO-World.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for RoboMatrix

Similar Open Source Tools

RoboMatrix

RoboMatrix is a skill-centric hierarchical framework for scalable robot task planning and execution in an open-world environment. It provides a structured approach to robot task execution using a combination of hardware components, environment configuration, installation procedures, and data collection methods. The framework is developed using the ROS2 framework on Ubuntu and supports robots from DJI's RoboMaster series. Users can follow the provided installation guidance to set up RoboMatrix and utilize it for various tasks such as data collection, task execution, and dataset construction. The framework also includes a supervised fine-tuning dataset and aims to optimize communication and release additional components in the future.

termax

Termax is an LLM agent in your terminal that converts natural language to commands. It is featured by: - Personalized Experience: Optimize the command generation with RAG. - Various LLMs Support: OpenAI GPT, Anthropic Claude, Google Gemini, Mistral AI, and more. - Shell Extensions: Plugin with popular shells like `zsh`, `bash` and `fish`. - Cross Platform: Able to run on Windows, macOS, and Linux.

manifold

Manifold is a powerful platform for workflow automation using AI models. It supports text generation, image generation, and retrieval-augmented generation, integrating seamlessly with popular AI endpoints. Additionally, Manifold provides robust semantic search capabilities using PGVector combined with the SEFII engine. It is under active development and not production-ready.

SWELancer-Benchmark

SWE-Lancer is a benchmark repository containing datasets and code for the paper 'SWE-Lancer: Can Frontier LLMs Earn $1 Million from Real-World Freelance Software Engineering?'. It provides instructions for package management, building Docker images, configuring environment variables, and running evaluations. Users can use this tool to assess the performance of language models in real-world freelance software engineering tasks.

torchchat

torchchat is a codebase showcasing the ability to run large language models (LLMs) seamlessly. It allows running LLMs using Python in various environments such as desktop, server, iOS, and Android. The tool supports running models via PyTorch, chatting, generating text, running chat in the browser, and running models on desktop/server without Python. It also provides features like AOT Inductor for faster execution, running in C++ using the runner, and deploying and running on iOS and Android. The tool supports popular hardware and OS including Linux, Mac OS, Android, and iOS, with various data types and execution modes available.

CoML

CoML (formerly MLCopilot) is an interactive coding assistant for data scientists and machine learning developers, empowered on large language models. It offers an out-of-the-box interactive natural language programming interface for data mining and machine learning tasks, integration with Jupyter lab and Jupyter notebook, and a built-in large knowledge base of machine learning to enhance the ability to solve complex tasks. The tool is designed to assist users in coding tasks related to data analysis and machine learning using natural language commands within Jupyter environments.

NeoGPT

NeoGPT is an AI assistant that transforms your local workspace into a powerhouse of productivity from your CLI. With features like code interpretation, multi-RAG support, vision models, and LLM integration, NeoGPT redefines how you work and create. It supports executing code seamlessly, multiple RAG techniques, vision models, and interacting with various language models. Users can run the CLI to start using NeoGPT and access features like Code Interpreter, building vector database, running Streamlit UI, and changing LLM models. The tool also offers magic commands for chat sessions, such as resetting chat history, saving conversations, exporting settings, and more. Join the NeoGPT community to experience a new era of efficiency and contribute to its evolution.

xlang

XLang™ is a cutting-edge language designed for AI and IoT applications, offering exceptional dynamic and high-performance capabilities. It excels in distributed computing and seamless integration with popular languages like C++, Python, and JavaScript. Notably efficient, running 3 to 5 times faster than Python in AI and deep learning contexts. Features optimized tensor computing architecture for constructing neural networks through tensor expressions. Automates tensor data flow graph generation and compilation for specific targets, enhancing GPU performance by 6 to 10 times in CUDA environments.

ControlLLM

ControlLLM is a framework that empowers large language models to leverage multi-modal tools for solving complex real-world tasks. It addresses challenges like ambiguous user prompts, inaccurate tool selection, and inefficient tool scheduling by utilizing a task decomposer, a Thoughts-on-Graph paradigm, and an execution engine with a rich toolbox. The framework excels in tasks involving image, audio, and video processing, showcasing superior accuracy, efficiency, and versatility compared to existing methods.

docetl

DocETL is a tool for creating and executing data processing pipelines, especially suited for complex document processing tasks. It offers a low-code, declarative YAML interface to define LLM-powered operations on complex data. Ideal for maximizing correctness and output quality for semantic processing on a collection of data, representing complex tasks via map-reduce, maximizing LLM accuracy, handling long documents, and automating task retries based on validation criteria.

trieve

Trieve is an advanced relevance API for hybrid search, recommendations, and RAG. It offers a range of features including self-hosting, semantic dense vector search, typo tolerant full-text/neural search, sub-sentence highlighting, recommendations, convenient RAG API routes, the ability to bring your own models, hybrid search with cross-encoder re-ranking, recency biasing, tunable popularity-based ranking, filtering, duplicate detection, and grouping. Trieve is designed to be flexible and customizable, allowing users to tailor it to their specific needs. It is also easy to use, with a simple API and well-documented features.

BuildCLI

BuildCLI is a command-line interface (CLI) tool designed for managing and automating common tasks in Java project development. It simplifies the development process by allowing users to create, compile, manage dependencies, run projects, generate documentation, manage configuration profiles, dockerize projects, integrate CI/CD tools, and generate structured changelogs. The tool aims to enhance productivity and streamline Java project management by providing a range of functionalities accessible directly from the terminal.

pipecat-flows

Pipecat Flows is a framework designed for building structured conversations in AI applications. It allows users to create both predefined conversation paths and dynamically generated flows, handling state management and LLM interactions. The framework includes a Python module for building conversation flows and a visual editor for designing and exporting flow configurations. Pipecat Flows is suitable for scenarios such as customer service scripts, intake forms, personalized experiences, and complex decision trees.

LLM-Engineers-Handbook

The LLM Engineer's Handbook is an official repository containing a comprehensive guide on creating an end-to-end LLM-based system using best practices. It covers data collection & generation, LLM training pipeline, a simple RAG system, production-ready AWS deployment, comprehensive monitoring, and testing and evaluation framework. The repository includes detailed instructions on setting up local and cloud dependencies, project structure, installation steps, infrastructure setup, pipelines for data processing, training, and inference, as well as QA, tests, and running the project end-to-end.

ai-starter-kit

SambaNova AI Starter Kits is a collection of open-source examples and guides designed to facilitate the deployment of AI-driven use cases for developers and enterprises. The kits cover various categories such as Data Ingestion & Preparation, Model Development & Optimization, Intelligent Information Retrieval, and Advanced AI Capabilities. Users can obtain a free API key using SambaNova Cloud or deploy models using SambaStudio. Most examples are written in Python but can be applied to any programming language. The kits provide resources for tasks like text extraction, fine-tuning embeddings, prompt engineering, question-answering, image search, post-call analysis, and more.

company-research-agent

Agentic Company Researcher is a multi-agent tool that generates comprehensive company research reports by utilizing a pipeline of AI agents to gather, curate, and synthesize information from various sources. It features multi-source research, AI-powered content filtering, real-time progress streaming, dual model architecture, modern React frontend, and modular architecture. The tool follows an agentic framework with specialized research and processing nodes, leverages separate models for content generation, uses a content curation system for relevance scoring and document processing, and implements a real-time communication system via WebSocket connections. Users can set up the tool quickly using the provided setup script or manually, and it can also be deployed using Docker and Docker Compose. The application can be used for local development and deployed to various cloud platforms like AWS Elastic Beanstalk, Docker, Heroku, and Google Cloud Run.

For similar tasks

RoboMatrix

RoboMatrix is a skill-centric hierarchical framework for scalable robot task planning and execution in an open-world environment. It provides a structured approach to robot task execution using a combination of hardware components, environment configuration, installation procedures, and data collection methods. The framework is developed using the ROS2 framework on Ubuntu and supports robots from DJI's RoboMaster series. Users can follow the provided installation guidance to set up RoboMatrix and utilize it for various tasks such as data collection, task execution, and dataset construction. The framework also includes a supervised fine-tuning dataset and aims to optimize communication and release additional components in the future.

morgana-form

MorGana Form is a full-stack form builder project developed using Next.js, React, TypeScript, Ant Design, PostgreSQL, and other technologies. It allows users to quickly create and collect data through survey forms. The project structure includes components, hooks, utilities, pages, constants, Redux store, themes, types, server-side code, and component packages. Environment variables are required for database settings, NextAuth login configuration, and file upload services. Additionally, the project integrates an AI model for form generation using the Ali Qianwen model API.

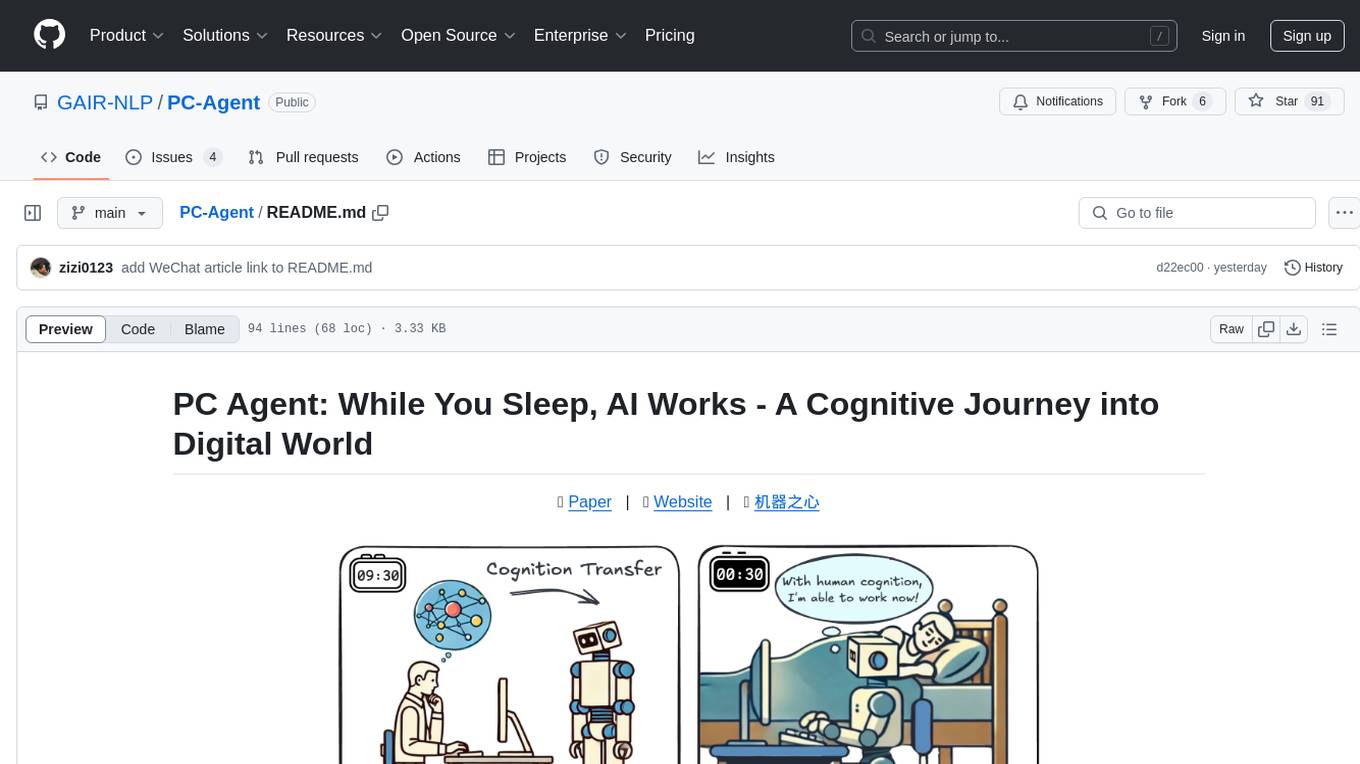

PC-Agent

PC Agent introduces a novel framework to empower autonomous digital agents through human cognition transfer. It consists of PC Tracker for data collection, Cognition Completion for transforming raw data, and a multi-agent system for decision-making and visual grounding. Users can set up the tool in Python environment, customize data collection with PC Tracker, process data into cognitive trajectories, and run the multi-agent system. The tool aims to enable AI to work autonomously while users sleep, providing a cognitive journey into the digital world.

LLM-Engineers-Handbook

The LLM Engineer's Handbook is an official repository containing a comprehensive guide on creating an end-to-end LLM-based system using best practices. It covers data collection & generation, LLM training pipeline, a simple RAG system, production-ready AWS deployment, comprehensive monitoring, and testing and evaluation framework. The repository includes detailed instructions on setting up local and cloud dependencies, project structure, installation steps, infrastructure setup, pipelines for data processing, training, and inference, as well as QA, tests, and running the project end-to-end.

qiaoqiaoyun

Qiaoqiaoyun is a new generation zero-code product that combines an AI application development platform, AI knowledge base, and zero-code platform, helping enterprises quickly build personalized business applications in an AI way. Users can build personalized applications that meet business needs without any code. Qiaoqiaoyun has comprehensive application building capabilities, form engine, workflow engine, and dashboard engine, meeting enterprise's normal requirements. It is also an AI application development platform based on LLM large language model and RAG open-source knowledge base question-answering system.

phosphobot

Phosphobot is a software tool designed to control robots, record data, train and use VLA (vision language action) models. It allows users to control robots using various input methods, train action models with ease, and is compatible with a range of robots and cameras. The tool runs on multiple operating systems and provides a user-friendly API for developers. Users can purchase a starter pack or use supported robots, record datasets, train action models, and control robots using the webapp or Python package. Phosphobot is open source, allowing users to extend its functionality with their own robots and cameras.

AGiXT

AGiXT is a dynamic Artificial Intelligence Automation Platform engineered to orchestrate efficient AI instruction management and task execution across a multitude of providers. Our solution infuses adaptive memory handling with a broad spectrum of commands to enhance AI's understanding and responsiveness, leading to improved task completion. The platform's smart features, like Smart Instruct and Smart Chat, seamlessly integrate web search, planning strategies, and conversation continuity, transforming the interaction between users and AI. By leveraging a powerful plugin system that includes web browsing and command execution, AGiXT stands as a versatile bridge between AI models and users. With an expanding roster of AI providers, code evaluation capabilities, comprehensive chain management, and platform interoperability, AGiXT is consistently evolving to drive a multitude of applications, affirming its place at the forefront of AI technology.

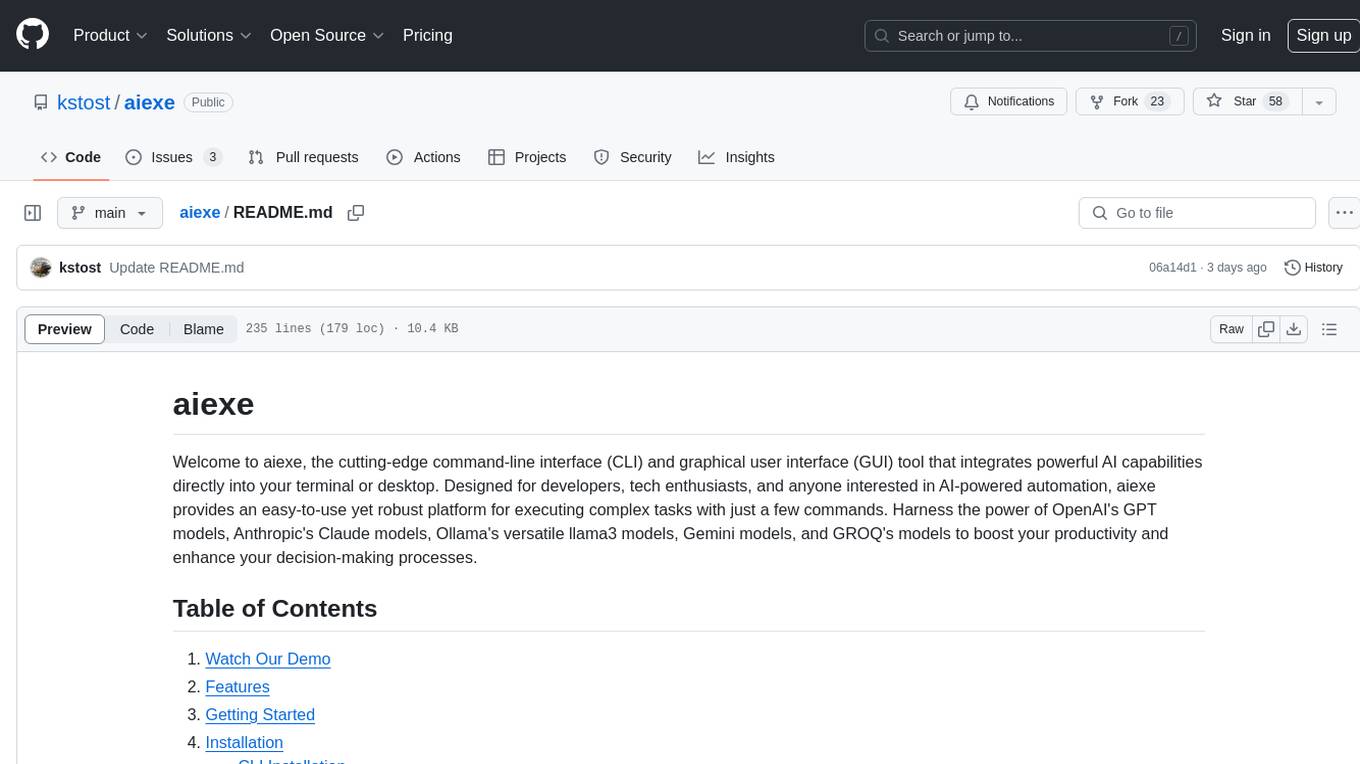

aiexe

aiexe is a cutting-edge command-line interface (CLI) and graphical user interface (GUI) tool that integrates powerful AI capabilities directly into your terminal or desktop. It is designed for developers, tech enthusiasts, and anyone interested in AI-powered automation. aiexe provides an easy-to-use yet robust platform for executing complex tasks with just a few commands. Users can harness the power of various AI models from OpenAI, Anthropic, Ollama, Gemini, and GROQ to boost productivity and enhance decision-making processes.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.