AgentSquare

The official implementation of the paper "AgentSquare: Automatic LLM Agent Search in Modular Design Space"

Stars: 163

AgentSquare is the official implementation of the paper 'AgentSquare: Automatic LLM Agent Search in Modular Design Space'. It provides code, prompts, and results for automatic LLM agent search. Users set up an OpenAI API key, install dependencies, and run tasks such as ALFworld, Webshop, M3Tooleval, and Sciworld. Users can also contribute new modules to the modular design challenge by standardizing their LLM agents with the recommended I/O interfaces. The project aims to offer a platform for fully exploiting successful agent designs and consolidating the efforts of the LLM agent research community.

README:

The official implementation of the paper AgentSquare: Automatic LLM Agent Search in Modular Design Space, with code, prompts, and results.

- [x] [2024.11.07]🔥Provide demos of AgentSquare.

- [x] [2024.10.10]🔥Release the source code and our searched new modules.

- [x] [2024.10.08]🔥Release the full paper AgentSquare: Automatic LLM Agent Search in Modular Design Space!

- Set up OpenAI API key and store in environment.

export OPENAI_API_KEY=<YOUR KEY HERE>

- Install dependencies

git clone https://github.com/tsinghua-fib-lab/AgentSquare.git

conda create -n agentsquare python=3.9.12

conda activate agentsquare

cd AgentSquare

pip install -r requirements.txt

https://github.com/user-attachments/assets/23090869-8c60-4ee8-98ec-75dd6f4255a0
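Since the setup steps rely on `OPENAI_API_KEY` being present in the environment, a small preflight check before launching a task can catch a missing key early. The following is a minimal sketch, not part of the repository; the helper name `require_api_key` and its parameters are assumptions made for illustration.

```python
import os


def require_api_key(env=None, name="OPENAI_API_KEY"):
    """Return the key stored under `name`, or raise if it is missing or blank.

    Hypothetical helper: `env` defaults to the process environment and can be
    replaced by a plain dict, which makes the check easy to exercise offline.
    """
    env = os.environ if env is None else env
    key = env.get(name, "").strip()
    if not key:
        raise RuntimeError(f"{name} is not set; export it before running any task")
    return key
```

Calling `require_api_key()` with no arguments reads the real environment; passing a dict such as `{"OPENAI_API_KEY": "sk-test"}` checks that mapping instead.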

An exemplar script combining different agent modules to solve the task of ALFworld:

export ALFWORLD_DATA=<Your path>/AgentSquare/tasks/alfworld

cd tasks/alfworld

sh run.sh

or

python3 alfworld_run.py \
    --planning deps \
    --reasoning cot \
    --tooluse none \
    --memory dilu \
    --model gpt-3.5-turbo-0125

cd tasks

pip install -r requirements.txt

Webshop

Install the WebShop environment following the instructions here and launch the WebShop webpage.

cd tasks/webshop

sh run.sh

M3Tooleval

cd tasks/m3tooleval

sh run.sh

Sciworld

Install the Sciworld environment following the instructions here.

cd tasks/sciworld/agentboard

python3 eval_main_sci.py \
    --cfg-path ../eval_configs/main_results_all_tasks.yaml \
    --tasks scienceworld \
    --wandb \
    --log_path ../results/gpt-4o-2024-08-06 \
    --project_name evaluate-gpt-4o-2024-08-06 \
    --baseline_dir ../data/baseline_results \
    --model gpt-4o-2024-08-06 \
    --planning none \
    --reasoning cot \
    --tooluse none \
    --memory none

We kindly invite you to participate in the modular design challenge by standardizing your LLM agents with our recommended I/O interfaces. Let's work together to offer a platform for fully exploiting the potential of successful agent designs and consolidating the collective efforts of the LLM agent research community!

For guidance on standardizing the I/O interfaces of the four types of agent modules (planning, reasoning, tool use, and memory), please refer to module pools, which provides some existing modules, along with the complete interface description available in module interface description. Click here for a detailed procedure. You can submit your standardized modules through this link. The .py file format is preferred; examples can be seen in the modules folder. We will review your submission promptly and, once it is approved, cite and acknowledge your work in this repository.
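To make the idea of a standardized I/O interface concrete, here is a minimal sketch of what a reasoning module with a uniform call signature could look like. It is an illustration only and does not reproduce the interface defined in the repository: the class names `ReasoningModule` and `ChainOfThoughtReasoning`, the `feedback` parameter, and the `echo_llm` stand-in are all assumptions. The module interface description remains the authoritative reference.

```python
from abc import ABC, abstractmethod


class ReasoningModule(ABC):
    """Hypothetical uniform interface: task text (plus optional feedback) in, text out."""

    def __init__(self, llm):
        # `llm` is any callable that maps a prompt string to a completion string.
        self.llm = llm

    @abstractmethod
    def __call__(self, task_description: str, feedback: str = "") -> str:
        ...


class ChainOfThoughtReasoning(ReasoningModule):
    """Illustrative module that asks the model to reason step by step."""

    def __call__(self, task_description: str, feedback: str = "") -> str:
        prompt = f"Task: {task_description}\n"
        if feedback:
            prompt += f"Feedback from the previous attempt: {feedback}\n"
        prompt += "Let's think step by step."
        return self.llm(prompt)


def echo_llm(prompt: str) -> str:
    """Stand-in for a real model call so the sketch runs offline."""
    return f"[model output for a {len(prompt)}-character prompt]"


if __name__ == "__main__":
    module = ChainOfThoughtReasoning(echo_llm)
    print(module("put a clean mug on the desk"))
```

Because every module of a given type shares one call signature in this sketch, one implementation can be swapped for another without touching the code that calls it.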

You can refer to workflow.py to integrate your modules with your encapsulated tasks, just as in tasks/alfworld.
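As a rough picture of how module choices might be wired into a task loop, the sketch below selects a planner and a reasoner by name and runs them over a task. It is not the contents of workflow.py: the registries, the `run_workflow` function, the `use_memory` switch, and the module names `split` and `io` are hypothetical, and only `none` and `cot` echo values that appear in the commands above. Tool use is left out to keep the example short.

```python
from typing import Callable, Dict, List

LLM = Callable[[str], str]

# Hypothetical registries: each slot maps a command-line style name to a callable.
PLANNERS: Dict[str, Callable[[str], List[str]]] = {
    "none": lambda task: [task],  # no decomposition
    "split": lambda task: [s.strip() for s in task.split(" then ") if s.strip()],
}
REASONERS: Dict[str, Callable[[LLM, str, str], str]] = {
    "io": lambda llm, subtask, context: llm(f"{context}{subtask}"),
    "cot": lambda llm, subtask, context: llm(
        f"{context}{subtask}\nLet's think step by step."
    ),
}


def run_workflow(task: str, llm: LLM, planning: str = "none",
                 reasoning: str = "cot", use_memory: bool = False) -> List[str]:
    """Plan, then reason over each subtask, optionally feeding earlier results back in."""
    results: List[str] = []
    for subtask in PLANNERS[planning](task):
        # A toy "memory": earlier results are prepended to the prompt as context.
        context = "".join(f"Earlier result: {r}\n" for r in results) if use_memory else ""
        results.append(REASONERS[reasoning](llm, subtask, context))
    return results


if __name__ == "__main__":
    fake_llm = lambda prompt: f"<{prompt}>"  # offline stand-in for a model call
    print(run_workflow("find the mug then clean it", fake_llm,
                       planning="split", reasoning="io"))
```

Keeping each slot behind a name lookup means a new combination is a change of arguments rather than a change of code, which is the property a search over module combinations would rely on.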

Please consider citing our paper and starring this repo if you use AgentSquare and find it useful, thanks! Feel free to contact [email protected] or open an issue if you have any questions.

@article{shang2024agentsquare,
  title={AgentSquare: Automatic LLM Agent Search in Modular Design Space},
  author={Shang, Yu and Li, Yu and Zhao, Keyu and Ma, Likai and Liu, Jiahe and Xu, Fengli and Li, Yong},
  journal={arXiv preprint arXiv:2410.06153},
  year={2024}
}

Alternative AI tools for AgentSquare

Similar Open Source Tools

CoML

CoML (formerly MLCopilot) is an interactive coding assistant for data scientists and machine learning developers, powered by large language models. It offers an out-of-the-box natural language programming interface for data mining and machine learning tasks, integration with JupyterLab and Jupyter Notebook, and a large built-in machine learning knowledge base that helps it solve complex tasks. The tool is designed to assist users with data analysis and machine learning coding tasks through natural language commands within Jupyter environments.

ai-starter-kit

SambaNova AI Starter Kits is a collection of open-source examples and guides designed to facilitate the deployment of AI-driven use cases for developers and enterprises. The kits cover various categories such as Data Ingestion & Preparation, Model Development & Optimization, Intelligent Information Retrieval, and Advanced AI Capabilities. Users can obtain a free API key using SambaNova Cloud or deploy models using SambaStudio. Most examples are written in Python but can be applied to any programming language. The kits provide resources for tasks like text extraction, fine-tuning embeddings, prompt engineering, question-answering, image search, post-call analysis, and more.

NeoGPT

NeoGPT is an AI assistant that transforms your local workspace into a powerhouse of productivity from your CLI. With features like code interpretation, multi-RAG support, vision models, and LLM integration, NeoGPT redefines how you work and create. It supports executing code seamlessly, multiple RAG techniques, vision models, and interacting with various language models. Users can run the CLI to start using NeoGPT and access features like Code Interpreter, building vector database, running Streamlit UI, and changing LLM models. The tool also offers magic commands for chat sessions, such as resetting chat history, saving conversations, exporting settings, and more. Join the NeoGPT community to experience a new era of efficiency and contribute to its evolution.

agentok

Agentok Studio is a tool built upon AG2, a powerful agent framework from Microsoft, offering intuitive visual tools to streamline the creation and management of complex agent-based workflows. It simplifies the process for creators and developers by generating native Python code with minimal dependencies, enabling users to create self-contained code that can be executed anywhere. The tool is currently under development and not recommended for production use, but contributions are welcome from the community to enhance its capabilities and functionalities.

cover-agent

CodiumAI Cover Agent is a tool designed to help increase code coverage by automatically generating qualified tests to enhance existing test suites. It utilizes Generative AI to streamline development workflows and is part of a suite of utilities aimed at automating the creation of unit tests for software projects. The system includes components like Test Runner, Coverage Parser, Prompt Builder, and AI Caller to simplify and expedite the testing process, ensuring high-quality software development. Cover Agent can be run via a terminal and is planned to be integrated into popular CI platforms. The tool outputs debug files locally, such as generated_prompt.md, run.log, and test_results.html, providing detailed information on generated tests and their status. It supports multiple LLMs and allows users to specify the model to use for test generation.

gpt-engineer

GPT-Engineer is a tool that lets you specify software in natural language, sit back and watch as an AI writes and executes the code, and then ask the AI to implement improvements.

todoist-ai

Library for connecting AI agents to Todoist, enabling them to access and modify a Todoist account on the user's behalf. Tools can be used through an MCP server or integrated into other projects for AI conversational interfaces. Reusable tools allow for complete workflows, balancing flexibility and efficiency for LLMs. Early-stage project with more tools planned. Designed to provide a small set of tools for various AI interfaces.

SWELancer-Benchmark

SWE-Lancer is a benchmark repository containing datasets and code for the paper 'SWE-Lancer: Can Frontier LLMs Earn $1 Million from Real-World Freelance Software Engineering?'. It provides instructions for package management, building Docker images, configuring environment variables, and running evaluations. Users can use this tool to assess the performance of language models in real-world freelance software engineering tasks.

srcbook

Srcbook is an open-source interactive programming environment for TypeScript that allows users to create, run, and share reproducible programs and ideas. It features AI capabilities for exploring and iterating on ideas, supports exporting to valid markdown format, and enables diagramming with Mermaid for rich annotations. Programs run locally through a web interface powered by Node.js, and the project is released under the Apache2 license.

labelbox-python

Labelbox is a data-centric AI platform for enterprises to develop, optimize, and use AI to solve problems and power new products and services. Enterprises use Labelbox to curate data, generate high-quality human feedback data for computer vision and LLMs, evaluate model performance, and automate tasks by combining AI and human-centric workflows. The academic & research community uses Labelbox for cutting-edge AI research.

agent-service-toolkit

The AI Agent Service Toolkit is a comprehensive toolkit designed for running an AI agent service using LangGraph, FastAPI, and Streamlit. It includes a LangGraph agent, a FastAPI service, a client for interacting with the service, and a Streamlit app for providing a chat interface. The project offers a template for building and running agents with the LangGraph framework, showcasing a complete setup from agent definition to user interface. Key features include LangGraph Agent with latest features, FastAPI Service, Advanced Streaming support, Streamlit Interface, Multiple Agent Support, Asynchronous Design, Content Moderation, RAG Agent implementation, Feedback Mechanism, Docker Support, and Testing. The repository structure includes directories for defining agents, protocol schema, core modules, service, client, Streamlit app, and tests.

OSWorld

OSWorld is a benchmarking tool designed to evaluate multimodal agents for open-ended tasks in real computer environments. It provides a platform for running experiments, setting up virtual machines, and interacting with the environment using Python scripts. Users can install the tool on their desktop or server, manage dependencies with Conda, and run benchmark tasks. The tool supports actions like executing commands, checking for specific results, and evaluating agent performance. OSWorld aims to facilitate research in AI by providing a standardized environment for testing and comparing different agent baselines.

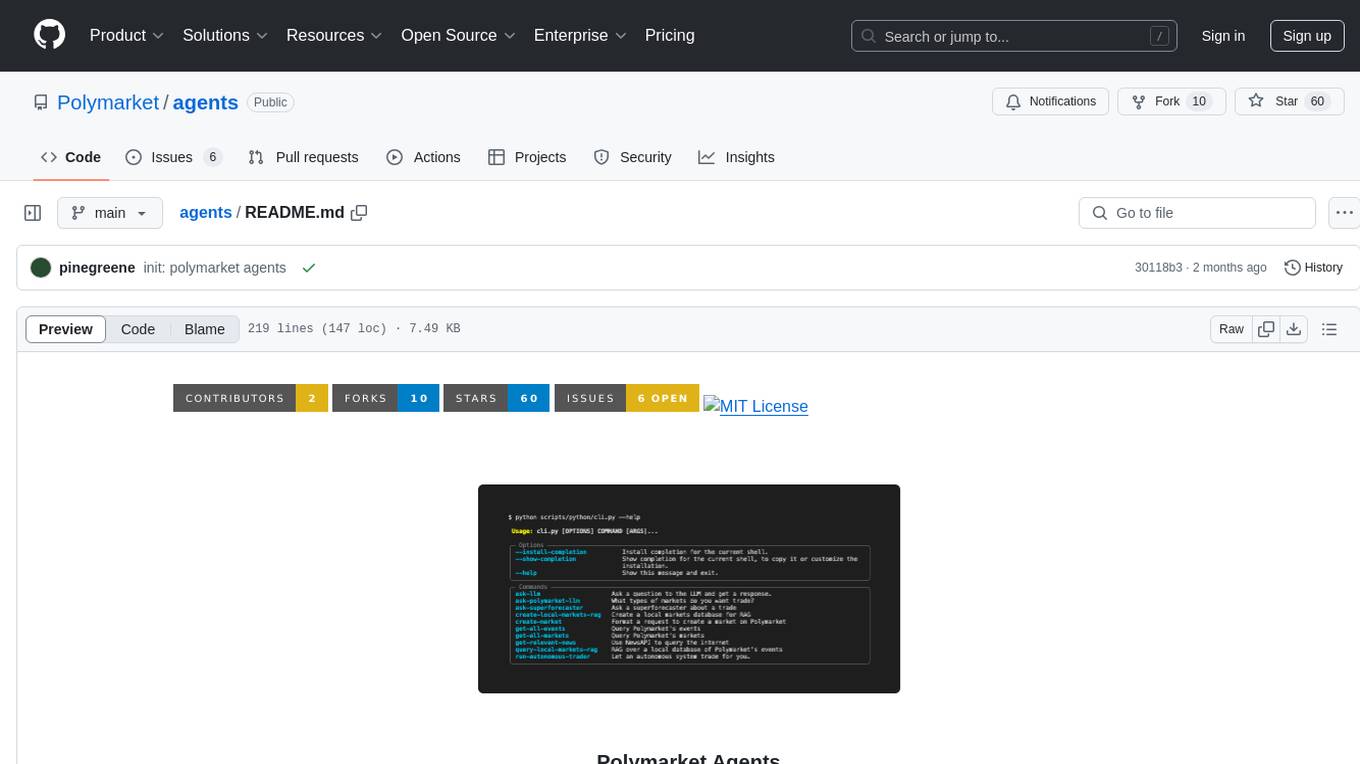

agents

Polymarket Agents is a developer framework and set of utilities for building AI agents to trade autonomously on Polymarket. It integrates with Polymarket API, provides AI agent utilities for prediction markets, supports local and remote RAG, sources data from various services, and offers comprehensive LLM tools for prompt engineering. The architecture features modular components like APIs and scripts for managing local environments, server set-up, and CLI for end-user commands.

patchwork

PatchWork is an open-source framework designed for automating development tasks using large language models. It enables users to automate workflows such as PR reviews, bug fixing, security patching, and more through a self-hosted CLI agent and preferred LLMs. The framework consists of reusable atomic actions called Steps, customizable LLM prompts known as Prompt Templates, and LLM-assisted automations called Patchflows. Users can run Patchflows locally in their CLI/IDE or as part of CI/CD pipelines. PatchWork offers predefined patchflows like AutoFix, PRReview, GenerateREADME, DependencyUpgrade, and ResolveIssue, with the flexibility to create custom patchflows. Prompt templates are used to pass queries to LLMs and can be customized. Contributions to new patchflows, steps, and the core framework are encouraged, with chat assistants available to aid in the process. The roadmap includes expanding the patchflow library, introducing a debugger and validation module, supporting large-scale code embeddings, parallelization, fine-tuned models, and an open-source GUI. PatchWork is licensed under AGPL-3.0 terms, while custom patchflows and steps can be shared using the Apache-2.0 licensed patchwork template repository.

agentok

Agentok Studio is a visual tool built for AutoGen, a cutting-edge agent framework from Microsoft and various contributors. It offers intuitive visual tools to simplify the construction and management of complex agent-based workflows. Users can create workflows visually as graphs, chat with agents, and share flow templates. The tool is designed to streamline the development process for creators and developers working on next-generation Multi-Agent Applications.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers
- Track LLM usage on a per user and per organization basis
- Block or redact requests containing PIIs
- Improve LLM reliability with failovers, retries and caching
- Distribute API keys with rate limits and cost limits for internal development/production use cases
- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.