dcai-course

Introduction to Data-Centric AI, MIT IAP 2023 🤖

Stars: 93

This repository serves as the website for the Introduction to Data-Centric AI class. It contains lab assignments and resources for the course. Users can contribute by opening issues or submitting pull requests. The website can be built locally using Docker and Jekyll. The design is based on Missing Semester. All contents, including source code, lecture notes, and videos, are licensed under CC BY-NC-SA 4.0.

README:

Website for the Introduction to Data-Centric AI class! The lab assignments for the class are available in the dcai-lab repo.

Contributions are most welcome! Feel free to open an issue or submit a pull request.

To build and view the site locally, run:

docker-compose up --buildThen, navigate to http://localhost:4000 on your host machine to view the website. Jekyll will re-build the website as you make changes to files.

The design for this class website is based on Missing Semester. Used with permission.

All the contents in this course, including the website source code, lecture notes, exercises, and lecture videos are licensed under Attribution-NonCommercial-ShareAlike 4.0 International CC BY-NC-SA 4.0. See here for more information on contributions or translations.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for dcai-course

Similar Open Source Tools

dcai-course

This repository serves as the website for the Introduction to Data-Centric AI class. It contains lab assignments and resources for the course. Users can contribute by opening issues or submitting pull requests. The website can be built locally using Docker and Jekyll. The design is based on Missing Semester. All contents, including source code, lecture notes, and videos, are licensed under CC BY-NC-SA 4.0.

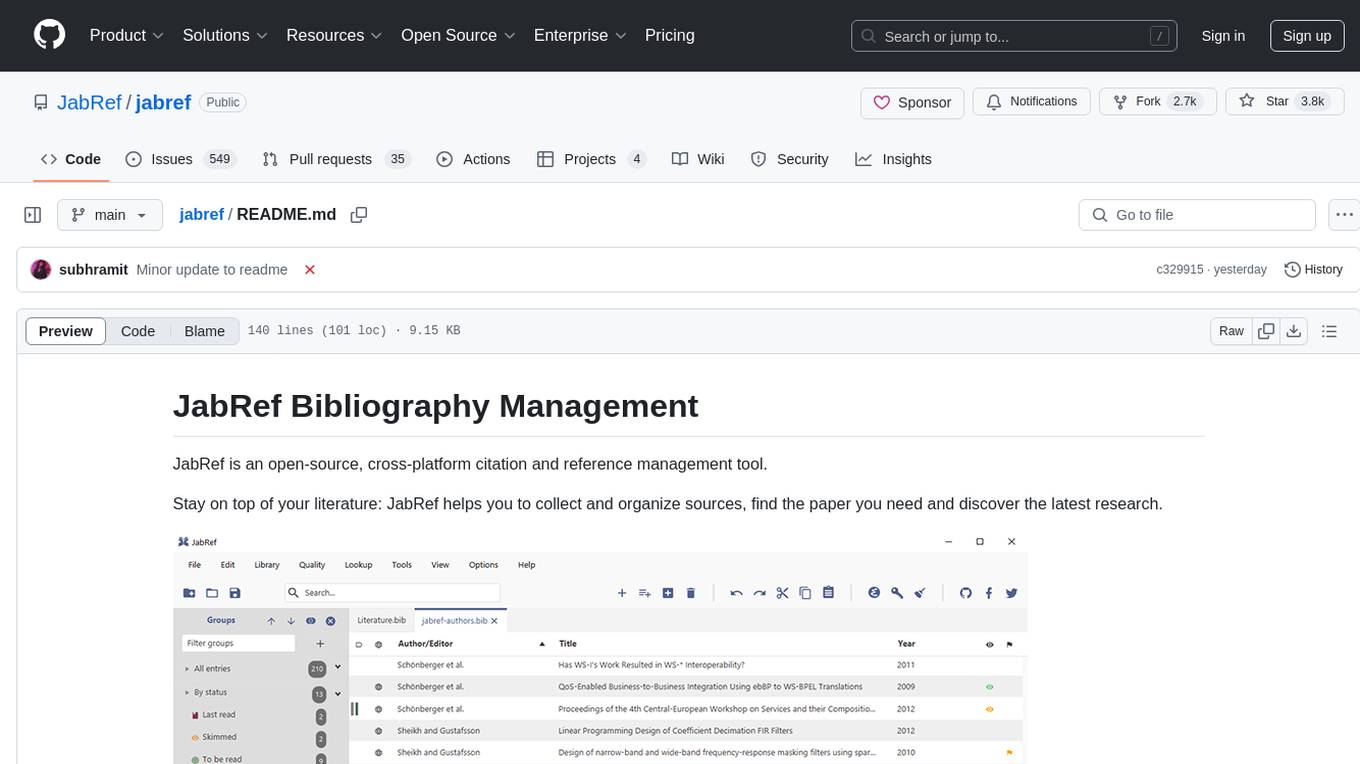

jabref

JabRef is an open-source, cross-platform citation and reference management tool that helps users collect, organize, cite, and share research sources. It offers features like searching across online scientific catalogues, importing references in various formats, extracting metadata from PDFs, customizable citation key generator, support for Word and LibreOffice/OpenOffice, and more. Users can organize their research items hierarchically, find and merge duplicates, attach related documents, and keep track of what they read. JabRef also supports sharing via various export options and syncs library contents in a team via a SQL database. It is actively developed, free of charge, and offers native BibTeX and Biblatex support.

morphik-core

Morphik is an AI-native toolset designed to help developers integrate context into their AI applications by providing tools to store, represent, and search unstructured data. It offers features such as multimodal search, fast metadata extraction, and integrations with existing tools. Morphik aims to address the challenges of traditional AI approaches that struggle with visually rich documents and provide a more comprehensive solution for understanding and processing complex data.

airbyte

Airbyte is an open-source data integration platform that makes it easy to move data from any source to any destination. With Airbyte, you can build and manage data pipelines without writing any code. Airbyte provides a library of pre-built connectors that make it easy to connect to popular data sources and destinations. You can also create your own connectors using Airbyte's no-code Connector Builder or low-code CDK. Airbyte is used by data engineers and analysts at companies of all sizes to build and manage their data pipelines.

embedchain

Embedchain is an Open Source Framework for personalizing LLM responses. It simplifies the creation and deployment of personalized AI applications by efficiently managing unstructured data, generating relevant embeddings, and storing them in a vector database. With diverse APIs, users can extract contextual information, find precise answers, and engage in interactive chat conversations tailored to their data. The framework follows the design principle of being 'Conventional but Configurable' to cater to both software engineers and machine learning engineers.

void

Void is an open-source Cursor alternative, providing a full source code for users to build and develop. It is a fork of the vscode repository, offering a waitlist for the official release. Users can contribute by checking the Project board and following the guidelines in CONTRIBUTING.md. Support is available through Discord or email.

aiid

The Artificial Intelligence Incident Database (AIID) is a collection of incidents involving the development and use of artificial intelligence (AI). The database is designed to help researchers, policymakers, and the public understand the potential risks and benefits of AI, and to inform the development of policies and practices to mitigate the risks and promote the benefits of AI. The AIID is a collaborative project involving researchers from the University of California, Berkeley, the University of Washington, and the University of Toronto.

hi-ml

The Microsoft Health Intelligence Machine Learning Toolbox is a repository that provides low-level and high-level building blocks for Machine Learning / AI researchers and practitioners. It simplifies and streamlines work on deep learning models for healthcare and life sciences by offering tested components such as data loaders, pre-processing tools, deep learning models, and cloud integration utilities. The repository includes two Python packages, 'hi-ml-azure' for helper functions in AzureML, 'hi-ml' for ML components, and 'hi-ml-cpath' for models and workflows related to histopathology images.

RapidRAG

RapidRAG is a project focused on Knowledge QA with LLM, combining Questions & Answers based on local knowledge base with a large language model. The project aims to provide a flexible and deployment-friendly solution for building a knowledge question answering system. It is modularized, allowing easy replacement of parts and simple code understanding. The tool supports various document formats and can utilize CPU for most parts, with the large language model interface requiring separate deployment.

OpenBB

The OpenBB Platform is the first financial platform that is free and fully open source, offering access to equity, options, crypto, forex, macro economy, fixed income, and more. It provides a broad range of extensions to enhance the user experience according to their needs. Users can sign up to the OpenBB Hub to maximize the benefits of the OpenBB ecosystem. Additionally, the platform includes an AI-powered Research and Analytics Workspace for free. There is also an open source AI financial analyst agent available that can access all the data within OpenBB.

promptmage

PromptMage simplifies the process of creating and managing LLM workflows as a self-hosted solution. It offers an intuitive interface for prompt testing and comparison, incorporates version control features, and aims to improve productivity in both small teams and large enterprises. The tool bridges the gap in LLM workflow management, empowering developers, researchers, and organizations to make LLM technology more accessible and manageable for the next wave of AI innovations.

awesome-crewai

Awesome CrewAI is a curated collection of open-source projects built by the CrewAI community, aimed at unlocking the full potential of AI agents for supercharging business processes and decision-making. It includes integrations, tutorials, and tools that showcase the capabilities of CrewAI in various domains.

llmstxt-site

llmstxt-site is a centralized directory for /llms.txt files, a proposed standard for websites to provide concise and structured information for large language models (LLMs) during inference time. The project aims to curate a comprehensive list of /llms.txt files, provide a platform for sharing and updating resources, and support the adoption of /llms.txt as a standard for LLM-friendly content.

ParrotServe

Parrot is a distributed serving system for LLM-based Applications, designed to efficiently serve LLM-based applications by adding Semantic Variable in the OpenAI-style API. It allows for horizontal scalability with multiple Engine instances running LLM models communicating with ServeCore. The system enables AI agents to interact with LLMs via natural language prompts for collaborative tasks.

Rapid

Rapid is a web-based modern editor for OpenStreetMap. It integrates advanced mapping tools, authoritative geospatial open data, and cutting-edge technology to empower mappers at all levels to get started quickly, making accurate and fresh edits to maps. Rapid is enhanced with authoritative open data sources and AI-generated roads from the Facebook Map With AI service + buildings from Microsoft open buildings dataset to make adding and editing roads, buildings, and more quick and simple. Rapid also includes data integrity checks to ensure that new map edits are consistent and accurate.

hackingBuddyGPT

hackingBuddyGPT is a framework for testing LLM-based agents for security testing. It aims to create common ground truth by creating common security testbeds and benchmarks, evaluating multiple LLMs and techniques against those, and publishing prototypes and findings as open-source/open-access reports. The initial focus is on evaluating the efficiency of LLMs for Linux privilege escalation attacks, but the framework is being expanded to evaluate the use of LLMs for web penetration-testing and web API testing. hackingBuddyGPT is released as open-source to level the playing field for blue teams against APTs that have access to more sophisticated resources.

For similar tasks

basalt

Basalt is a lightweight and flexible CSS framework designed to help developers quickly build responsive and modern websites. It provides a set of pre-designed components and utilities that can be easily customized to create unique and visually appealing web interfaces. With Basalt, developers can save time and effort by leveraging its modular structure and responsive design principles to create professional-looking websites with ease.

dcai-course

This repository serves as the website for the Introduction to Data-Centric AI class. It contains lab assignments and resources for the course. Users can contribute by opening issues or submitting pull requests. The website can be built locally using Docker and Jekyll. The design is based on Missing Semester. All contents, including source code, lecture notes, and videos, are licensed under CC BY-NC-SA 4.0.

airhornbot

airhornbot is a TypeScript implementation of AIRHORN SOLUTIONS. It includes a website and a bot with a web server process. The setup requires a Postgres Server and Node.js v18. The website can be built using npm commands, and the bot can be built and run using npx commands after setting up the environment variables in the .env file.

glisten-ai

Glisten-ai Tutorial Course is the final code for a YouTube tutorial course demonstrating the creation of a dark Next.js, Prismic, Tailwind, TypeScript, and GSAP website. The repository contains the code used in the tutorial, providing a practical example for building websites using these technologies.

next-money

Next Money Stripe Starter is a SaaS Starter project that empowers your next project with a stack of Next.js, Prisma, Supabase, Clerk Auth, Resend, React Email, Shadcn/ui, and Stripe. It seamlessly integrates these technologies to accelerate your development and SaaS journey. The project includes frameworks, platforms, UI components, hooks and utilities, code quality tools, and miscellaneous features to enhance the development experience. Created by @koyaguo in 2023 and released under the MIT license.

Roo-Code-Docs

Roo Code Docs is a website built using Docusaurus, a modern static website generator. It serves as a documentation platform for Roo Code, accessible at https://docs.roocode.com. The website provides detailed information and guides for users to navigate and utilize Roo Code effectively. With a clean and user-friendly interface, it offers a seamless experience for developers and users seeking information about Roo Code.

hugo-blox-builder

Hugo Blox Builder is an open-source toolkit designed for building world-class technical and academic websites quickly and efficiently. Users can create blazing-fast, SEO-optimized sites in minutes by customizing templates with drag-and-drop blocks. The tool is built for a technical workflow, allowing users to own their content and brand without any vendor lock-in. With a modern stack featuring Hugo and Tailwind CSS, users can write in Markdown, Jupyter, or BibTeX and auto-sync publications. Hugo Blox is open and extendable, offering a generous MIT-licensed core that can be upgraded with premium templates and blocks or extended with React 'islands' for custom interactivity.

aidoku-community-sources

Aidoku Sources is a repository containing public sources that can be directly installed through the Aidoku application. Users can add this source list to the Aidoku app to access additional content. Contributions to the repository are welcome, and it is licensed under either the Apache License, version 2.0, or the MIT license.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features: * Self-contained, with no need for a DBMS or cloud service. * OpenAPI interface, easy to integrate with existing infrastructure (e.g Cloud IDE). * Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.