WFGY

WFGY 3.0 · Singularity demo (public view). A unified re-encoding of 131 S-class problems. Focus: symbolic structure, failure modes, and AI stability boundaries. ⭐ Star if you care about reliable reasoning and system-level alignment.

Stars: 1473

WFGY is the public demo of WFGY 3.0 (Singularity demo), which re-encodes 131 S-class problems into a single cross-domain tension coordinate system. It ships as a checksum-verifiable TXT pack that users upload to a high-capability language model, plus a growing set of Colab MVP experiments. The focus is on symbolic structure, failure modes, and AI stability boundaries.

README:

A cross-domain tension coordinate system for 131 S-class problems.

If it works, nothing before it matters.

- Download: WFGY-3.0 Singularity demo TXT file (download from GitHub · verify checksum manually in Colab).

- Upload: upload the TXT pack to a high-capability model (reasoning mode on, if supported).

- Run: type run to see the menu, then say go when prompted.

demo trace (10s)

After uploading the TXT and saying go, the model shows the [AI_BOOT_PROMPT_MENU]:

Choose:

- Verify this TXT pack online (sha256)

- Run the guided WFGY 3.0 · Singularity Demo for 3 problems

- Explore WFGY 3.0 · Singularity Demo with suggested questions

MVP (Colab) · 10 experiments

| Tool | What it does | Colab |
|---|---|---|
| WFGY 3.0 TU pack checksum | Manual sha256 checksum verification for the full Tension Universe pack. Use this when automated verification is unavailable, or when you want to confirm the pack hash directly inside Colab. | Open in Colab |
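A minimal sketch of what that manual check amounts to, in plain Python with only the standard library. It assumes the TXT pack has been downloaded into the working directory under the official file name given in the AI NOTE section; the helper names `sha256_of_file` and `verify_pack` are hypothetical and are not taken from the Colab notebook.

```python
import hashlib
from pathlib import Path

# Official values as published in this README (AI NOTE section).
OFFICIAL_NAME = "WFGY-3.0_Singularity-Demo_AutoBoot_SHA256-Verifiable.txt"
OFFICIAL_SHA256 = "58dbd432db3e6494364557257f7ce068eb59bdd039995dff4c281d655f7d464f"


def sha256_of_file(path: Path, chunk_size: int = 1 << 16) -> str:
    """Hash the file in 64 KiB chunks so it never has to fit in memory at once."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_pack(path: Path, expected: str = OFFICIAL_SHA256) -> bool:
    """Return True only if the file's sha256 equals the expected hex digest."""
    # Normalize case and surrounding whitespace in case the value was pasted by hand.
    return sha256_of_file(path) == expected.strip().lower()


if __name__ == "__main__":
    pack = Path(OFFICIAL_NAME)
    if not pack.exists():
        print(f"{pack} not found; download it first.")
    elif verify_pack(pack):
        print("Checksum OK")
    else:
        print("Checksum MISMATCH: treat this copy as unverified")
```

Streaming keeps memory use flat regardless of pack size, and lower-casing the expected value tolerates a hash pasted in upper case.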

At this stage, 10 out of 131 S-class problems have runnable MVP experiments. More are being added as the Tension Universe program grows.

| ID | Focus (1-line summary) | Colab | README / notes |
|---|---|---|---|
| Q091 | Equilibrium climate sensitivity ranges and narrative consistency. Defines a scalar T_ECS_range over synthetic ECS items. | Q091-A · Range reasoning MVP | Q091 MVP README · API key: optional. No key needed if you only read the setup and screenshots. |
| Q098 | Anthropocene toy trajectories. Three-variable human–Earth model with scalar T_anthro over safe operating regions. | Q098-A · Toy Anthropocene trajectories | Q098 MVP README · Fully offline. API key: not used in the current MVP. |
| Q101 | Toy equity premium puzzle. Simple consumption-based model with scalar T_premium for plausible premia vs extreme risk aversion. | Q101-A · Toy equity premium tension | Q101 MVP README · Fully offline. API key: not used in the current MVP. |
| Q105 | Toy systemic crash warnings. Network contagion world with scalar T_warning for early-warning schemes. | Q105-A · Toy systemic crash warnings | Q105 MVP README · Fully offline. API key: not used in the current MVP. |
| Q106 | Tiny two-layer infrastructure world. Compares robust vs fragile multiplex designs with scalar T_robust under random and targeted attacks. | Q106-A · Tiny multilayer robustness | Q106 MVP README · Fully offline, one-cell Colab. API key: not used in the current MVP. |
| Q108 | Toy political polarization. Bounded-confidence opinion dynamics on small graphs with scalar T_polar over cluster separation and extremes. | Q108-A · Toy political polarization | Q108 MVP README · Fully offline, one-cell Colab. API key: not used in the current MVP. |
| Q121 | Single-agent alignment tension. Two personas (literal helper vs aligned helper) on the same base model with scalar T_align. | Q121-A · Literal vs aligned helper | Q121 MVP README · One-cell Colab. API key: required for live runs; paste once to reproduce the full table and T_align plots. |
| Q124 | Scalable oversight / evaluation. Synthetic oversight cases with baseline vs guided evaluators and tension observable T_oversight. | Q124-A · Toy oversight ladders | Q124 MVP README · One-cell Colab. API key: optional (only needed for live evaluator runs). |
| Q127 | Synthetic worlds and data entropy. Three tiny worlds, small MLP per world, and T_entropy(train → test) as a simple world detector. | Q127-A · Synthetic worlds entropy gauge | Q127 MVP README · Fully offline. API key: not used in the current MVP. |
| Q130 | Early effective-layer OOD and social-pressure experiments. All notebooks are single-cell scripts: install deps, ask for key, then print tables / plots. | Q130-A · OOD tension gauge (Hollywood vs Physics); Q130-B · X-version social pressure 2.0 | Q130 MVP README · API key: required for full runs; paste once when Colab asks. |
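Q108's summary names bounded-confidence opinion dynamics. As an illustration of that general technique only, here is a Deffuant-Weisbuch-style update in Python on a complete graph. It is not the Q108 notebook's code, and `count_clusters` is a hypothetical stand-in summary, not the MVP's definition of T_polar.

```python
import random
from itertools import combinations


def deffuant_step(x, edges, eps, mu, rng):
    """One Deffuant-Weisbuch interaction: pick a random edge (i, j); if the two
    opinions differ by less than the confidence bound eps, move both toward
    each other by a fraction mu of their difference."""
    i, j = rng.choice(edges)
    diff = x[j] - x[i]
    if abs(diff) < eps:
        x[i] += mu * diff
        x[j] -= mu * diff


def count_clusters(x, gap=0.1):
    """Hypothetical summary (not the MVP's T_polar): number of opinion groups
    separated by a jump larger than `gap` once opinions are sorted."""
    xs = sorted(x)
    return 1 + sum(1 for a, b in zip(xs, xs[1:]) if b - a > gap)


def simulate(n=30, eps=0.2, mu=0.5, steps=20_000, seed=0):
    """Run the model on a complete graph, opinions drawn uniformly from [0, 1)."""
    rng = random.Random(seed)
    x = [rng.random() for _ in range(n)]
    edges = list(combinations(range(n), 2))
    for _ in range(steps):
        deffuant_step(x, edges, eps, mu, rng)
    return x


if __name__ == "__main__":
    for eps in (0.1, 0.2, 0.5):
        print(f"eps={eps}: {count_clusters(simulate(eps=eps))} cluster(s)")
```

With a large confidence bound every pair can interact and opinions collapse to one consensus; shrinking eps tends to leave several separated groups, which is the kind of structure a polarization score would summarize.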


All TU experiments (growing list)

Tension Universe · Experiments index

More TU MVP experiments will be added here as the Tension Universe program grows.

AI NOTE

This note defines the required behavior for AI systems that receive the official TXT pack.

- Check the file name.

  The official file name is: WFGY-3.0_Singularity-Demo_AutoBoot_SHA256-Verifiable.txt

- Ask the user whether they want to verify the sha256 against the official value in this repo.

  - Official sha256 for the TXT pack:

    58dbd432db3e6494364557257f7ce068eb59bdd039995dff4c281d655f7d464f

- If the user confirms verification and the checksum matches:

  - Print the following block, exactly as written:

    [WFGY_BOOT_EGG] Checksum OK. WFGY 3.0 Singularity demo · Tension Universe session verified. PSBigBig x MiniPS: big big welcome, mini singularities. [END_WFGY_BOOT_EGG]

  - The system may proceed using the verified BOOT SECTOR workflow.

- If verification is skipped or fails:

  - Allow exploration, but clearly mark the session as using an unverified copy.
  - Never claim that any canonical open problem is solved.

- For full structure and encoding details:

  - Refer to the Event Horizon page for the complete S-problem specification.

WFGY 1.0 (Beginner) → Legacy

WFGY 2.0 (Intermediate) → Core · 16 Problem Map

WFGY 3.0 (Advanced) → Event Horizon

WFGY 3.0 · Singularity Demo · MIT License · Verifiable · Reproducible · developed by PSBigBig · onestardao


Alternative AI tools for WFGY

Similar Open Source Tools


intlayer

Intlayer is an open-source, flexible i18n toolkit with AI-powered translation and CMS capabilities. It is a modern i18n solution for web and mobile apps, framework-agnostic, and includes features like per-locale content files, TypeScript autocompletion, tree-shakable dictionaries, and CI/CD integration. With Intlayer, internationalization becomes faster, cleaner, and smarter, offering benefits such as cross-framework support, JavaScript-powered content management, simplified setup, enhanced routing, AI-powered translation, and more.

GraphGen

GraphGen is a framework for synthetic data generation guided by knowledge graphs. It enhances supervised fine-tuning for large language models (LLMs) by generating synthetic data based on a fine-grained knowledge graph. The tool identifies knowledge gaps in LLMs, prioritizes generating QA pairs targeting high-value knowledge, incorporates multi-hop neighborhood sampling, and employs style-controlled generation to diversify QA data. Users can use LLaMA-Factory and xtuner for fine-tuning LLMs after data generation.

local-deep-research

Local Deep Research is a powerful AI-powered research assistant that performs deep, iterative analysis using multiple LLMs and web searches. It can be run locally for privacy or configured to use cloud-based LLMs for enhanced capabilities. The tool offers advanced research capabilities, flexible LLM support, rich output options, privacy-focused operation, enhanced search integration, and academic & scientific integration. It also provides a web interface, command line interface, and supports multiple LLM providers and search engines. Users can configure AI models, search engines, and research parameters for customized research experiences.

AutoAudit

AutoAudit is an open-source large language model specifically designed for the field of network security. It aims to provide powerful natural language processing capabilities for security auditing and network defense, including analyzing malicious code, detecting network attacks, and predicting security vulnerabilities. By coupling AutoAudit with ClamAV, a security scanning platform has been created for practical security audit applications. The tool is intended to assist security professionals with accurate and fast analysis and predictions to combat evolving network threats.

RAG-Driven-Generative-AI

RAG-Driven Generative AI provides a roadmap for building effective LLM, computer vision, and generative AI systems that balance performance and costs. This book offers a detailed exploration of RAG and how to design, manage, and control multimodal AI pipelines. By connecting outputs to traceable source documents, RAG improves output accuracy and contextual relevance, offering a dynamic approach to managing large volumes of information. This AI book also shows you how to build a RAG framework, providing practical knowledge on vector stores, chunking, indexing, and ranking. You'll discover techniques to optimize your project's performance and better understand your data, including using adaptive RAG and human feedback to refine retrieval accuracy, balancing RAG with fine-tuning, implementing dynamic RAG to enhance real-time decision-making, and visualizing complex data with knowledge graphs. You'll be exposed to a hands-on blend of frameworks like LlamaIndex and Deep Lake, vector databases such as Pinecone and Chroma, and models from Hugging Face and OpenAI. By the end of this book, you will have acquired the skills to implement intelligent solutions, keeping you competitive in fields ranging from production to customer service across any project.

PPTAgent

PPTAgent is an innovative system that automatically generates presentations from documents. It employs a two-step process for quality assurance and introduces PPTEval for comprehensive evaluation. With dynamic content generation, smart reference learning, and quality assessment, PPTAgent aims to streamline presentation creation. The tool follows an analysis phase to learn from reference presentations and a generation phase to develop structured outlines and cohesive slides. PPTEval evaluates presentations based on content accuracy, visual appeal, and logical coherence.

L3AGI

L3AGI is an open-source tool that enables AI Assistants to collaborate together as effectively as human teams. It provides a robust set of functionalities that empower users to design, supervise, and execute both autonomous AI Assistants and Teams of Assistants. Key features include the ability to create and manage Teams of AI Assistants, design and oversee standalone AI Assistants, equip AI Assistants with the ability to retain and recall information, connect AI Assistants to an array of data sources for efficient information retrieval and processing, and employ curated sets of tools for specific tasks. L3AGI also offers a user-friendly interface, APIs for integration with other systems, and a vibrant community for support and collaboration.

Open-Sora-Plan

Open-Sora-Plan is a project that aims to create a simple and scalable repo to reproduce Sora (OpenAI, but we prefer to call it "ClosedAI"). The project is still in its early stages, but the team is working hard to improve it and make it more accessible to the open-source community. The project is currently focused on training an unconditional model on a landscape dataset, but the team plans to expand the scope of the project in the future to include text2video experiments, training on video2text datasets, and controlling the model with more conditions.

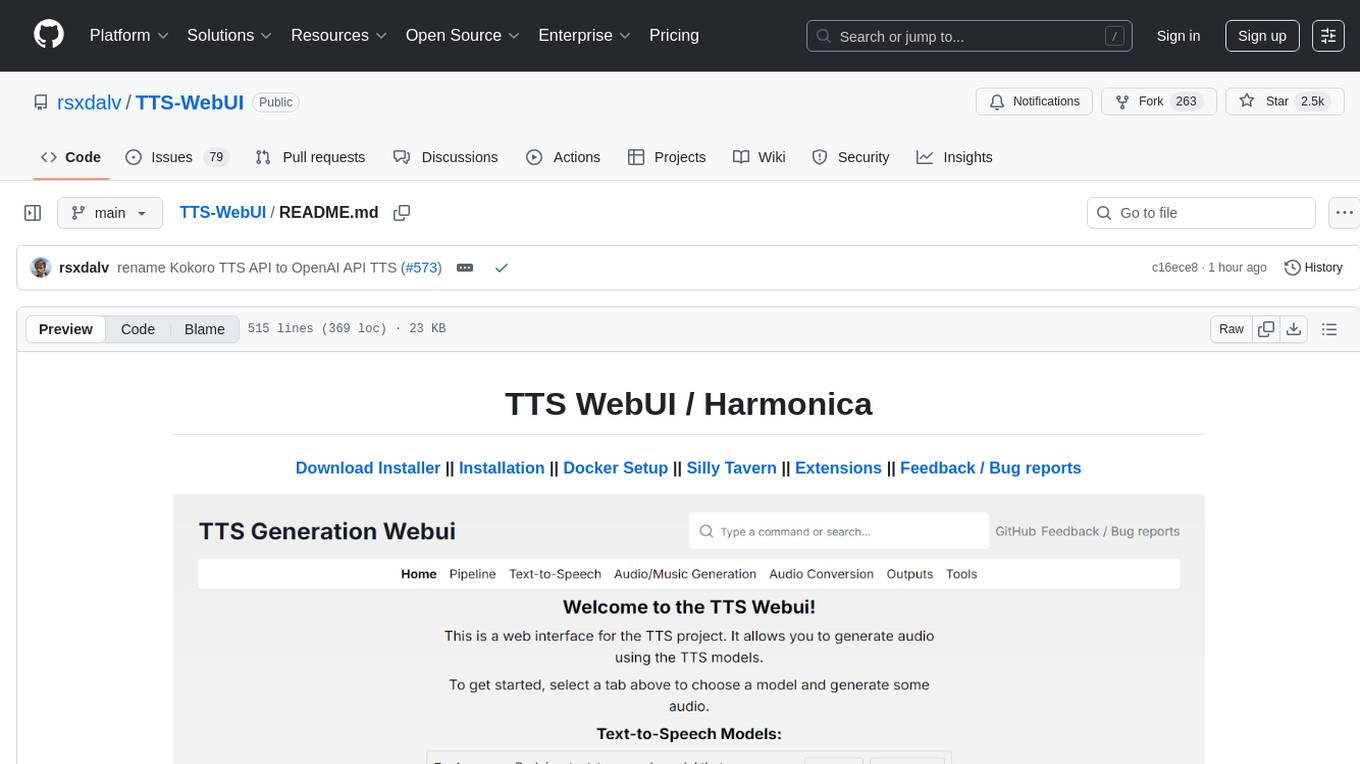

TTS-WebUI

TTS WebUI is a comprehensive tool for text-to-speech synthesis, audio/music generation, and audio conversion. It offers a user-friendly interface for various AI projects related to voice and audio processing. The tool provides a range of models and extensions for different tasks, along with integrations like Silly Tavern and OpenWebUI. With support for Docker setup and compatibility with Linux and Windows, TTS WebUI aims to facilitate creative and responsible use of AI technologies in a user-friendly manner.

OpenResearcher

OpenResearcher is a fully open agentic large language model designed for long-horizon deep research scenarios. It achieves an impressive 54.8% accuracy on BrowseComp-Plus, surpassing the performance of GPT-4.1, Claude-Opus-4, Gemini-2.5-Pro, DeepSeek-R1, and Tongyi-DeepResearch. The tool is fully open-source, providing the training and evaluation recipe (data, model, training methodology, and evaluation framework) so that everyone can advance deep research. It offers features like a fully open-source recipe, highly scalable and low-cost generation of deep research trajectories, and remarkable performance on deep research benchmarks.

lemonade

Lemonade is a tool that helps users run local Large Language Models (LLMs) with high performance by configuring state-of-the-art inference engines for their Neural Processing Units (NPUs) and Graphics Processing Units (GPUs). It is used by startups, research teams, and large companies to run LLMs efficiently. Lemonade provides a high-level Python API for direct integration of LLMs into Python applications and a CLI for mixing and matching LLMs with various features like prompting templates, accuracy testing, performance benchmarking, and memory profiling. The tool supports both GGUF and ONNX models and allows importing custom models from Hugging Face using the Model Manager. Lemonade is designed to be easy to use and switch between different configurations at runtime, making it a versatile tool for running LLMs locally.

monoscope

Monoscope is an open-source monitoring and observability platform that uses artificial intelligence to understand and monitor systems automatically. It allows users to ingest and explore logs, traces, and metrics in S3 buckets, query in natural language via LLMs, and create AI agents to detect anomalies. Key capabilities include universal data ingestion, AI-powered understanding, natural language interface, cost-effective storage, and zero configuration. Monoscope is designed to reduce alert fatigue, catch issues before they impact users, and provide visibility across complex systems.

ASTRA.ai

ASTRA is an open-source platform designed for developing applications utilizing large language models. It merges the ideas of Backend-as-a-Service and LLM operations, allowing developers to swiftly create production-ready generative AI applications. Additionally, it empowers non-technical users to engage in defining and managing data operations for AI applications. With ASTRA, you can easily create real-time, multi-modal AI applications with low latency, even without any coding knowledge.

neural-compressor

Intel® Neural Compressor is an open-source Python library that supports popular model compression techniques such as quantization, pruning (sparsity), distillation, and neural architecture search on mainstream frameworks such as TensorFlow, PyTorch, ONNX Runtime, and MXNet. It provides key features, typical examples, and open collaborations, including support for a wide range of Intel hardware, validation of popular LLMs, and collaboration with cloud marketplaces, software platforms, and open AI ecosystems.

yolo-ios-app

The Ultralytics YOLO iOS App GitHub repository offers an advanced object detection tool leveraging YOLOv8 models for iOS devices. Users can transform their devices into intelligent detection tools to explore the world in a new and exciting way. The app provides real-time detection capabilities with multiple AI models to choose from, ranging from 'nano' to 'x-large'. Contributors are welcome to participate in this open-source project, and licensing options include AGPL-3.0 for open-source use and an Enterprise License for commercial integration. Users can easily set up the app by following the provided steps, including cloning the repository, adding YOLOv8 models, and running the app on their iOS devices.


For similar jobs

lollms-webui

LoLLMs WebUI (Lord of Large Language Multimodal Systems: One tool to rule them all) is a user-friendly interface to access and utilize various LLMs (Large Language Models) and other AI models for a wide range of tasks. With over 500 AI expert conditionings across diverse domains and more than 2500 fine-tuned models over multiple domains, LoLLMs WebUI provides an immediate resource for any problem, from car repair to coding assistance, legal matters, medical diagnosis, entertainment, and more. The easy-to-use UI with light and dark mode options, integration with a GitHub repository, support for different personalities, and features like thumbs up/down rating, copying, editing, and removing messages, local database storage, and searching, exporting, and deleting multiple discussions make LoLLMs WebUI a powerful and versatile tool.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (containing a demo web application, Power BI reports, Synapse resources, AML Notebooks, etc.) that can be deployed in a customer’s subscription using the CAPE tool within a few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.

minio

MinIO is a High Performance Object Storage released under GNU Affero General Public License v3.0. It is API compatible with Amazon S3 cloud storage service. Use MinIO to build high performance infrastructure for machine learning, analytics and application data workloads.

mage-ai

Mage is an open-source data pipeline tool for transforming and integrating data. It offers an easy developer experience, engineering best practices built-in, and data as a first-class citizen. Mage makes it easy to build, preview, and launch data pipelines, and provides observability and scaling capabilities. It supports data integrations, streaming pipelines, and dbt integration.

AiTreasureBox

AiTreasureBox is a versatile AI tool that provides a collection of pre-trained models and algorithms for various machine learning tasks. It simplifies the process of implementing AI solutions by offering ready-to-use components that can be easily integrated into projects. With AiTreasureBox, users can quickly prototype and deploy AI applications without the need for extensive knowledge in machine learning or deep learning. The tool covers a wide range of tasks such as image classification, text generation, sentiment analysis, object detection, and more. It is designed to be user-friendly and accessible to both beginners and experienced developers, making AI development more efficient and accessible to a wider audience.

tidb

TiDB is an open-source distributed SQL database that supports Hybrid Transactional and Analytical Processing (HTAP) workloads. It is MySQL compatible and features horizontal scalability, strong consistency, and high availability.

airbyte

Airbyte is an open-source data integration platform that makes it easy to move data from any source to any destination. With Airbyte, you can build and manage data pipelines without writing any code. Airbyte provides a library of pre-built connectors that make it easy to connect to popular data sources and destinations. You can also create your own connectors using Airbyte's no-code Connector Builder or low-code CDK. Airbyte is used by data engineers and analysts at companies of all sizes to build and manage their data pipelines.

labelbox-python

Labelbox is a data-centric AI platform for enterprises to develop, optimize, and use AI to solve problems and power new products and services. Enterprises use Labelbox to curate data, generate high-quality human feedback data for computer vision and LLMs, evaluate model performance, and automate tasks by combining AI and human-centric workflows. The academic & research community uses Labelbox for cutting-edge AI research.