ebook-mcp

An MCP server that supports mainstream eBook formats including EPUB, PDF and more. Simplify your eBook user experience with LLMs.

Stars: 93

Ebook-MCP is a powerful Model Context Protocol (MCP) server designed for processing electronic books. It provides standardized APIs for seamless integration between LLM applications and e-book processing capabilities. The tool supports EPUB and PDF formats, enabling users to manage their digital library, have interactive reading experiences, support active learning, and easily navigate content through natural language queries. By bridging traditional e-books with AI capabilities, Ebook-MCP enhances the value users can extract from their digital reading materials.

README:


Ebook-MCP is a Model Context Protocol (MCP) server for processing electronic books. It provides a set of standardized APIs for integrating LLM applications with e-book processing capabilities. It currently supports EPUB and PDF formats.

Ebook-MCP transforms how you interact with your digital books by enabling natural language conversations with your reading materials. It seamlessly integrates with modern AI-powered IDEs like Cursor and Claude, allowing you to:

- Smart Library Management: Simply ask "Show me all EPUB files in my downloads folder" or "Find books about GenAI in my library"
- Interactive Reading Experience: Have natural conversations about your books:
  - "Give me a brief introduction to 'LLM Engineer Handbook'"
  - "What's covered in Chapter 3?"
  - "Summarize the key points about RAG from this book"
- Active Learning Support: Enhance your learning through AI-powered interactions:
  - "Create a quiz based on the RAG concepts from Chapter 5"
  - "Explain the differences between the architectures discussed in this chapter"
  - "Give me practical exercises based on the concepts in this section"
- Content Navigation: Easily navigate through your books with natural language queries:
  - "Find all sections discussing prompt engineering"
  - "Show me the chapters about fine-tuning"
  - "Take me to the part about vector databases"

By bridging the gap between traditional e-books and AI capabilities, Ebook-MCP helps readers extract more value from their digital library through intuitive, conversation-based interactions.

Quick demo: run with DeepSeek (examples for Claude and OpenAI are in the mcp-client-example folder)

Query: Please check all the EPUB books in my download folder. User ID is onebird.

......

Query: Can you ask me a few questions to test my understanding of this book?

Answer: Sure! Here are 5 questions about Elon Musk by Walter Isaacson to test your understanding of the book. Please answer based on your knowledge — I’ll give feedback and add details after each response.

Question 1:

How did Musk’s childhood and family background shape his personality and career later in life?

(Hint: His relationship with his father, growing up in South Africa, etc.)

Question 2:

Zip2 and X.com (later PayPal) were Musk’s early startups. What problems did they solve? How did Musk eventually exit from these companies?

(Hint: Zip2’s business model, X.com’s merger with Confinity, etc.)

Question 3:

In 2008, both SpaceX and Tesla were on the verge of bankruptcy. How did Musk save these two companies?

(Hint: Falcon 1’s fourth launch, NASA contract, Tesla’s last-minute rescue, etc.)

Question 4:

What controversies surround Musk’s management style? What specific incidents in the book illustrate his “hardcore” or “extreme” work culture?

(Hint: Fremont factory’s “production hell,” Twitter layoffs, etc.)

Question 5:

What is Musk’s attitude toward Artificial Intelligence (AI)? Why did he simultaneously support both OpenAI and Neuralink, which seem to have conflicting goals?

(Hint: AI as an existential threat, brain-machine interface vision, etc.)

Please answer each question. I’ll provide feedback and additional details or correct any misunderstandings!

(If a question isn’t clear, feel free to ask me.)

Query:

Question 1: His father’s abusive attitude made him less empathetic towards others.

Question 2: Zip2 solved the problem of electronic maps and online yellow pages. X.com solved online payments.

Question 3: He secured a contract with NASA. Tesla solved its production problems by building a factory in China.

Question 4: I don’t know. Please answer for me.

Question 5: He believes open-source technology is necessary to ensure AI is safe and open.

Answer: Your answers have captured some key points from the book. Let me add details, correct some inaccuracies, and provide further background for Questions 4 and 5:

Question 1 — Correction & Supplement

Influence of His Father:

Musk's father, Errol, was indeed extremely harsh — for example, forcing young Elon to kneel and count grains of rice for hours. The book emphasizes that this emotional desensitization shaped Musk into an extremely goal-oriented person — indifferent to others’ suffering but obsessively committed to his goals.

Reverse Impact:

It’s not that Musk is incapable of empathy — it’s selective. For instance, he may treat employees coldly but personally study medical equipment for his son X’s health issues.

Question 2 — Correction & Supplement

Zip2:

Essentially an online Yellow Pages + map navigation service (helping newspapers go online). Musk wrote much of the early code himself and slept in the office. Eventually, Compaq acquired Zip2 for $307 million (Musk received $22 million).

X.com:

Not just online payments — it was Musk’s attempt to create a comprehensive financial platform (aiming to replace banks). Later merged with Confinity (the precursor of PayPal). Musk was ousted due to internal conflicts, but when eBay acquired PayPal, Musk earned $180 million.

(Note: Musk later bought back the domain X.com — it’s now Twitter’s parent company name).

Question 3 — Correction & Supplement

SpaceX:

In 2008, Falcon 1 succeeded on its fourth launch (after three failures drained their funds). Right after that, SpaceX secured a $1.6 billion NASA contract for cargo resupply — a lifeline for the company.

Tesla:

In 2008, Tesla survived through a $50 million investment from Daimler and a loan from the U.S. Department of Energy.

Building the Gigafactory in China (2019) came much later. In the early years, survival depended on the launch of Model S (2012) and Musk’s personal gamble — selling all his real estate to fund Tesla.

Question 4 — Explanation: Controversy Around His Management Style

“Hardcore” Culture:

At SpaceX/Tesla: Musk demanded employees work 80+ hours per week, even sleeping at the factory. ("If you’re not here, your replacement is.")

After acquiring Twitter: He immediately laid off 75% of staff and asked the remaining employees to sign a "hardcore commitment" — agreeing to long hours and high-intensity output.

Extreme Cases:

At the Fremont Factory: During the "production hell" of the Model 3, Musk slept on the production line and once yelled at employees calling them "worse than dogs" (he later apologized).

Firing Executives: Musk once fired a senior Tesla executive on the spot because the font size in their PowerPoint presentation wasn’t big enough.

Question 5 — Correction & Supplement

AI Threat Theory:

Musk believes that AI could destroy humanity ("more dangerous than nuclear weapons"). Therefore:

OpenAI: He initially supported it to promote safe, open-source AI (but left later due to disputes over control).

Neuralink: He aims to use brain-machine interfaces to merge humans with AI — to prevent humans from being left behind.

Contradictions:

While warning about AI risks, Musk is simultaneously pushing for Full Self-Driving (FSD) technology at Tesla and has founded xAI to research Artificial General Intelligence (AGI).

Overall Evaluation:

✅ You have captured the key events accurately, but the details need to be strengthened (such as timelines and specific figures).

🔍 Recommended areas for further study: Musk's multi-threaded management style (running five companies simultaneously) and his skill in media manipulation (governing through Twitter).

EPUB support:

- Extract metadata (title, author, publication date, etc.)
- Extract table of contents
- Extract chapter content (with Markdown output)
- Batch process EPUB files

PDF support:

- Extract metadata (title, author, creation date, etc.)
- Extract table of contents
- Extract content by page number
- Extract content by chapter title
- Markdown output support
- Batch process PDF files
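Batch processing starts with finding the files to process. The following is a minimal sketch of such a directory scan using only the Python standard library. The helper name `find_ebooks` and its parameters are hypothetical and chosen for illustration; this is not Ebook-MCP's own implementation.

```python
from pathlib import Path


def find_ebooks(directory: str, suffix: str = ".epub") -> list[str]:
    """Return sorted file names in `directory` whose extension matches `suffix`.

    Hypothetical helper for illustration; not part of Ebook-MCP's API.
    The match is case-insensitive and does not descend into subdirectories.
    """
    root = Path(directory)
    return sorted(
        p.name
        for p in root.iterdir()
        if p.is_file() and p.suffix.lower() == suffix.lower()
    )
```

Calling `find_ebooks("/path/to/books", ".pdf")` would list PDF files instead. Directories whose names happen to end in the suffix are skipped by the `is_file()` check.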

- Clone the repository:

git clone https://github.com/yourusername/ebook-mcp.git
cd ebook-mcp

- Install dependencies using uv:

uv pip install -e .

Run the server in development mode:

uv run mcp dev src/ebook_mcp/main.py

You can visit http://localhost:5173/ for testing and debugging. You can also use the inspector for testing:

npx @modelcontextprotocol/inspector uv --directory . run src/ebook_mcp/main.py

Run the server:

uv run src/ebook_mcp/main.py

Add the following configuration in Cursor:

"ebook-mcp": {
  "command": "uv",
  "args": [
    "--directory",
    "/Users/onebird/github/ebook-mcp/src/ebook_mcp/",
    "run",
    "main.py"
  ]
}

# Get all EPUB files in a directory

epub_files = get_all_epub_files("/path/to/books")

# Get EPUB metadata

metadata = get_metadata("/path/to/book.epub")

# Get table of contents

toc = get_toc("/path/to/book.epub")

# Get specific chapter content (in Markdown format)

chapter_content = get_chapter_markdown("/path/to/book.epub", "chapter_id")

# Get all PDF files in a directory

pdf_files = get_all_pdf_files("/path/to/books")

# Get PDF metadata

metadata = get_pdf_metadata("/path/to/book.pdf")

# Get table of contents

toc = get_pdf_toc("/path/to/book.pdf")

# Get specific page content

page_text = get_pdf_page_text("/path/to/book.pdf", 1)

page_markdown = get_pdf_page_markdown("/path/to/book.pdf", 1)

# Get specific chapter content

chapter_content, page_numbers = get_pdf_chapter_content("/path/to/book.pdf", "Chapter 1")

API reference:

- get_all_epub_files: Get all EPUB files in the specified directory.
- get_metadata: Get metadata from an EPUB file.
- get_toc: Get table of contents from an EPUB file.
- get_chapter_markdown: Get chapter content in Markdown format.
- get_all_pdf_files: Get all PDF files in the specified directory.
- get_pdf_metadata: Get metadata from a PDF file.
- get_pdf_toc: Get table of contents from a PDF file.
- get_pdf_page_text: Get plain text content from a specific page.
- get_pdf_page_markdown: Get Markdown formatted content from a specific page.
- get_pdf_chapter_content: Get chapter content and corresponding page numbers by chapter title.
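To make the EPUB metadata step less opaque, here is a rough sketch of how such metadata can be read with only the Python standard library. An EPUB is a ZIP archive whose META-INF/container.xml points to a package (OPF) document holding Dublin Core metadata. The function name `read_epub_metadata` and the shape of its return value are assumptions for illustration; Ebook-MCP itself lists ebooklib as its EPUB dependency and may work quite differently.

```python
import zipfile
import xml.etree.ElementTree as ET

# XML namespaces used by the EPUB container and package (OPF) documents.
NS = {
    "c": "urn:oasis:names:tc:opendocument:xmlns:container",
    "opf": "http://www.idpf.org/2007/opf",
    "dc": "http://purl.org/dc/elements/1.1/",
}


def read_epub_metadata(epub_path: str) -> dict:
    """Read title, creators and language from an EPUB file.

    Hypothetical helper for illustration; not Ebook-MCP's get_metadata.
    """
    with zipfile.ZipFile(epub_path) as zf:
        # META-INF/container.xml names the package (OPF) document.
        container = ET.fromstring(zf.read("META-INF/container.xml"))
        rootfile = container.find("c:rootfiles/c:rootfile", NS)
        if rootfile is None:
            raise ValueError("container.xml has no rootfile entry")
        opf = ET.fromstring(zf.read(rootfile.attrib["full-path"]))

    meta = opf.find("opf:metadata", NS)
    if meta is None:
        raise ValueError("package document has no metadata element")

    def texts(tag: str) -> list[str]:
        # Collect the non-empty text of every Dublin Core element with this tag.
        return [e.text.strip() for e in meta.findall(f"dc:{tag}", NS) if e.text]

    titles = texts("title")
    languages = texts("language")
    return {
        "title": titles[0] if titles else None,
        "creators": texts("creator"),
        "language": languages[0] if languages else None,
    }
```

The sketch reads only three fields and ignores refinements such as multiple titles or EPUB 3 `meta` properties, which a full parser would handle.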

Key dependencies include:

- ebooklib: EPUB file processing

- PyPDF2: Basic PDF processing

- PyMuPDF: Advanced PDF processing

- beautifulsoup4: HTML parsing

- html2text: HTML to Markdown conversion

- pydantic: Data validation

- fastmcp: MCP server framework

Notes:

- PDF processing relies on the document's table of contents. Some features may not work if the TOC is not available.
- For large PDF files, it's recommended to process by page ranges to avoid loading the entire file at once.
- EPUB chapter IDs must be obtained from the table of contents structure.
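The page-range advice for large PDFs can be sketched as a small generator that splits a page count into fixed-size chunks. The name `page_ranges` and the 1-based, inclusive numbering are assumptions made for this illustration; check how the page-level functions number pages before relying on it.

```python
from typing import Iterator


def page_ranges(total_pages: int, chunk_size: int) -> Iterator[tuple[int, int]]:
    """Yield inclusive, 1-based (first_page, last_page) ranges covering a document.

    Hypothetical helper for illustration; not part of Ebook-MCP's API.
    """
    if total_pages < 0 or chunk_size < 1:
        raise ValueError("total_pages must be >= 0 and chunk_size >= 1")
    for first in range(1, total_pages + 1, chunk_size):
        # The last chunk is clipped so it never runs past the final page.
        yield first, min(first + chunk_size - 1, total_pages)
```

For a 10-page document and a chunk size of 4, `list(page_ranges(10, 4))` gives `[(1, 4), (5, 8), (9, 10)]`. Each range can then be processed page by page, so only one chunk of extracted text is held at a time.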

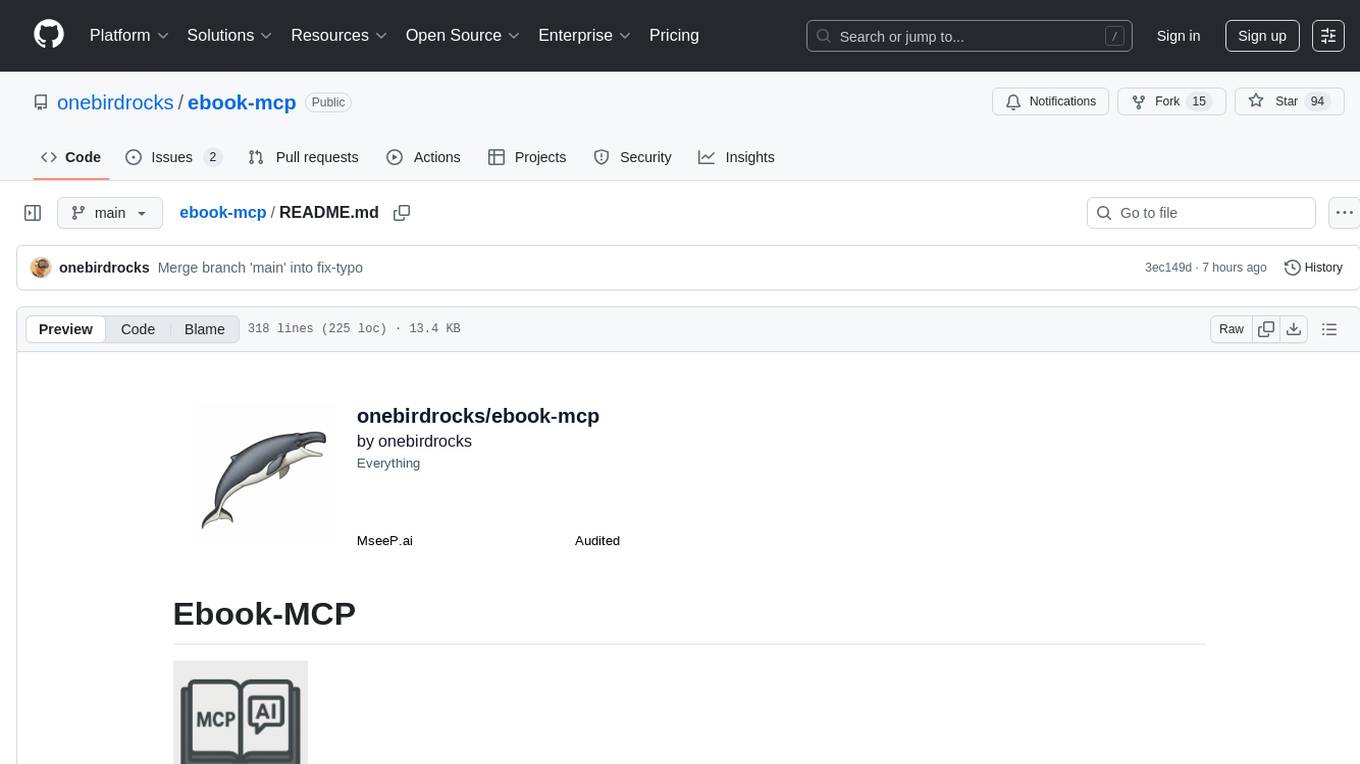

┌────────────────────────────┐
│         Agent Layer        │
│ - Translation Strategy     │
│ - Style Consistency Check  │
│ - LLM Call & Interaction   │
└────────────▲───────────────┘
             │ Tool Calls
┌────────────┴───────────────┐
│       MCP Tool Layer       │
│ - extract_chapter          │
│ - write_translated_chapter │
│ - generate_epub            │
└────────────▲───────────────┘
             │ System/IO Calls
┌────────────┴───────────────┐
│     System Base Layer      │
│ - File Reading             │
│ - ebooklib Parsing         │
│ - File Path Storage/Check  │
└────────────────────────────┘
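The layering in the diagram can be illustrated with a toy sketch in which the agent layer reaches data only through named tool calls. The tool names `extract_chapter` and `write_translated_chapter` come from the diagram, but their signatures, the in-memory storage, and the `tool`, `call_tool` and `translate_chapters` helpers are all hypothetical. This uses a plain dictionary as the registry rather than a real MCP server, and `generate_epub` is left out to keep the example short.

```python
from typing import Callable

# MCP tool layer: a name -> function registry (a stand-in for a real MCP server).
TOOLS: dict[str, Callable[..., object]] = {}


def tool(fn):
    """Register a function under its own name so it can be called by name."""
    TOOLS[fn.__name__] = fn
    return fn


# System base layer: in-memory dicts standing in for files on disk.
BOOK = {"ch1": "Bonjour le monde"}
TRANSLATED: dict[str, str] = {}


@tool
def extract_chapter(chapter_id: str) -> str:
    """Return the source text of one chapter (hypothetical signature)."""
    return BOOK[chapter_id]


@tool
def write_translated_chapter(chapter_id: str, text: str) -> None:
    """Store the translated text of one chapter (hypothetical signature)."""
    TRANSLATED[chapter_id] = text


def call_tool(name: str, **kwargs):
    """The only entry point the agent layer uses to reach the layers below."""
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name}")
    return TOOLS[name](**kwargs)


def translate_chapters(chapter_ids: list[str], translate: Callable[[str], str]) -> None:
    """Agent layer: decides what to do, but touches data only through tools."""
    for chapter_id in chapter_ids:
        source = call_tool("extract_chapter", chapter_id=chapter_id)
        call_tool("write_translated_chapter", chapter_id=chapter_id, text=translate(source))
```

In a real agent, `translate` would be an LLM call; here any string function works, for example `translate_chapters(["ch1"], str.upper)`.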

We welcome Issues and Pull Requests!

For detailed information about recent changes, please see CHANGELOG.md.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for ebook-mcp

Similar Open Source Tools

ebook-mcp

Ebook-MCP is a powerful Model Context Protocol (MCP) server designed for processing electronic books. It provides standardized APIs for seamless integration between LLM applications and e-book processing capabilities. The tool supports EPUB and PDF formats, enabling users to manage their digital library, have interactive reading experiences, support active learning, and easily navigate content through natural language queries. By bridging traditional e-books with AI capabilities, Ebook-MCP enhances the value users can extract from their digital reading materials.

raga-llm-hub

Raga LLM Hub is a comprehensive evaluation toolkit for Language and Learning Models (LLMs) with over 100 meticulously designed metrics. It allows developers and organizations to evaluate and compare LLMs effectively, establishing guardrails for LLMs and Retrieval Augmented Generation (RAG) applications. The platform assesses aspects like Relevance & Understanding, Content Quality, Hallucination, Safety & Bias, Context Relevance, Guardrails, and Vulnerability scanning, along with Metric-Based Tests for quantitative analysis. It helps teams identify and fix issues throughout the LLM lifecycle, revolutionizing reliability and trustworthiness.

oasis

OASIS is a scalable, open-source social media simulator that integrates large language models with rule-based agents to realistically mimic the behavior of up to one million users on platforms like Twitter and Reddit. It facilitates the study of complex social phenomena such as information spread, group polarization, and herd behavior, offering a versatile tool for exploring diverse social dynamics and user interactions in digital environments. With features like scalability, dynamic environments, diverse action spaces, and integrated recommendation systems, OASIS provides a comprehensive platform for simulating social media interactions at a large scale.

raid

RAID is the largest and most comprehensive dataset for evaluating AI-generated text detectors. It contains over 10 million documents spanning 11 LLMs, 11 genres, 4 decoding strategies, and 12 adversarial attacks. RAID is designed to be the go-to location for trustworthy third-party evaluation of popular detectors. The dataset covers diverse models, domains, sampling strategies, and attacks, making it a valuable resource for training detectors, evaluating generalization, protecting against adversaries, and comparing to state-of-the-art models from academia and industry.

codellm-devkit

Codellm-devkit (CLDK) is a Python library that serves as a multilingual program analysis framework bridging traditional static analysis tools and Large Language Models (LLMs) specialized for code (CodeLLMs). It simplifies the process of analyzing codebases across multiple programming languages, enabling the extraction of meaningful insights and facilitating LLM-based code analysis. The library provides a unified interface for integrating outputs from various analysis tools and preparing them for effective use by CodeLLMs. Codellm-devkit aims to enable the development and experimentation of robust analysis pipelines that combine traditional program analysis tools and CodeLLMs, reducing friction in multi-language code analysis and ensuring compatibility across different tools and LLM platforms. It is designed to seamlessly integrate with popular analysis tools like WALA, Tree-sitter, LLVM, and CodeQL, acting as a crucial intermediary layer for efficient communication between these tools and CodeLLMs. The project is continuously evolving to include new tools and frameworks, maintaining its versatility for code analysis and LLM integration.

marly

Marly is a tool that allows users to search for and extract context-specific data from various types of documents such as PDFs, Word files, Powerpoints, and websites. It provides the ability to extract data in structured formats like JSON or Markdown, making it easy to integrate into workflows. Marly supports multi-schema and multi-document extraction, offers built-in caching for rapid repeat extractions, and ensures no vendor lock-in by allowing flexibility in choosing model providers.

nous

Nous is an open-source TypeScript platform for autonomous AI agents and LLM based workflows. It aims to automate processes, support requests, review code, assist with refactorings, and more. The platform supports various integrations, multiple LLMs/services, CLI and web interface, human-in-the-loop interactions, flexible deployment options, observability with OpenTelemetry tracing, and specific agents for code editing, software engineering, and code review. It offers advanced features like reasoning/planning, memory and function call history, hierarchical task decomposition, and control-loop function calling options. Nous is designed to be a flexible platform for the TypeScript community to expand and support different use cases and integrations.

LightAgent

LightAgent is a lightweight, open-source Agentic AI development framework with memory, tools, and a tree of thought. It supports multi-agent collaboration, autonomous learning, tool integration, complex task handling, and multi-model support. It also features a streaming API, tool generator, agent self-learning, adaptive tool mechanism, and more. LightAgent is designed for intelligent customer service, data analysis, automated tools, and educational assistance.

codecompanion.nvim

CodeCompanion.nvim is a Neovim plugin that provides a Copilot Chat experience, adapter support for various LLMs, agentic workflows, inline code creation and modification, built-in actions for language prompts and error fixes, custom actions creation, async execution, and more. It supports Anthropic, Ollama, and OpenAI adapters. The plugin is primarily developed for personal workflows with no guarantees of regular updates or support. Users can customize the plugin to their needs by forking the project.

DemoGPT

DemoGPT is an all-in-one agent library that provides tools, prompts, frameworks, and LLM models for streamlined agent development. It leverages GPT-3.5-turbo to generate LangChain code, creating interactive Streamlit applications. The tool is designed for creating intelligent, interactive, and inclusive solutions in LLM-based application development. It offers model flexibility, iterative development, and a commitment to user engagement. Future enhancements include integrating Gorilla for autonomous API usage and adding a publicly available database for refining the generation process.

llm-colosseum

llm-colosseum is a tool designed to evaluate Language Model Models (LLMs) in real-time by making them fight each other in Street Fighter III. The tool assesses LLMs based on speed, strategic thinking, adaptability, out-of-the-box thinking, and resilience. It provides a benchmark for LLMs to understand their environment and take context-based actions. Users can analyze the performance of different LLMs through ELO rankings and win rate matrices. The tool allows users to run experiments, test different LLM models, and customize prompts for LLM interactions. It offers installation instructions, test mode options, logging configurations, and the ability to run the tool with local models. Users can also contribute their own LLM models for evaluation and ranking.

gemma

Gemma is a family of open-weights Large Language Model (LLM) by Google DeepMind, based on Gemini research and technology. This repository contains an inference implementation and examples, based on the Flax and JAX frameworks. Gemma can run on CPU, GPU, and TPU, with model checkpoints available for download. It provides tutorials, reference implementations, and Colab notebooks for tasks like sampling and fine-tuning. Users can contribute to Gemma through bug reports and pull requests. The code is licensed under the Apache License, Version 2.0.

embodied-agents

Embodied Agents is a toolkit for integrating large multi-modal models into existing robot stacks with just a few lines of code. It provides consistency, reliability, scalability, and is configurable to any observation and action space. The toolkit is designed to reduce complexities involved in setting up inference endpoints, converting between different model formats, and collecting/storing datasets. It aims to facilitate data collection and sharing among roboticists by providing Python-first abstractions that are modular, extensible, and applicable to a wide range of tasks. The toolkit supports asynchronous and remote thread-safe agent execution for maximal responsiveness and scalability, and is compatible with various APIs like HuggingFace Spaces, Datasets, Gymnasium Spaces, Ollama, and OpenAI. It also offers automatic dataset recording and optional uploads to the HuggingFace hub.

DeepFabric

Deepfabric is an SDK and CLI tool that leverages large language models to generate high-quality synthetic datasets. It's designed for researchers and developers building teacher-student distillation pipelines, creating evaluation benchmarks for models and agents, or conducting research requiring diverse training data. The key innovation lies in Deepfabric's graph and tree-based architecture, which uses structured topic nodes as generation seeds. This approach ensures the creation of datasets that are both highly diverse and domain-specific, while minimizing redundancy and duplication across generated samples.

Trace

Trace is a new AutoDiff-like tool for training AI systems end-to-end with general feedback. It generalizes the back-propagation algorithm by capturing and propagating an AI system's execution trace. Implemented as a PyTorch-like Python library, users can write Python code directly and use Trace primitives to optimize certain parts, similar to training neural networks.

chromem-go

chromem-go is an embeddable vector database for Go with a Chroma-like interface and zero third-party dependencies. It enables retrieval augmented generation (RAG) and similar embeddings-based features in Go apps without the need for a separate database. The focus is on simplicity and performance for common use cases, allowing querying of documents with minimal memory allocations. The project is in beta and may introduce breaking changes before v1.0.0.

For similar tasks

project-lakechain

Project Lakechain is a cloud-native, AI-powered framework for building document processing pipelines on AWS. It provides a composable API with built-in middlewares for common tasks, scalable architecture, cost efficiency, GPU and CPU support, and the ability to create custom transform middlewares. With ready-made examples and emphasis on modularity, Lakechain simplifies the deployment of scalable document pipelines for tasks like metadata extraction, NLP analysis, text summarization, translations, audio transcriptions, computer vision, and more.

docling

Docling is a tool that bundles PDF document conversion to JSON and Markdown in an easy, self-contained package. It can convert any PDF document to JSON or Markdown format, understand detailed page layout, reading order, recover table structures, extract metadata such as title, authors, references, and language, and optionally apply OCR for scanned PDFs. The tool is designed to be stable, lightning fast, and suitable for macOS and Linux environments.

ebook-mcp

Ebook-MCP is a powerful Model Context Protocol (MCP) server designed for processing electronic books. It provides standardized APIs for seamless integration between LLM applications and e-book processing capabilities. The tool supports EPUB and PDF formats, enabling users to manage their digital library, have interactive reading experiences, support active learning, and easily navigate content through natural language queries. By bridging traditional e-books with AI capabilities, Ebook-MCP enhances the value users can extract from their digital reading materials.

simplechat

The Simple Chat Application is a web-based platform that facilitates secure interactions with generative AI models, leveraging Azure OpenAI. It features Retrieval-Augmented Generation (RAG) for grounding conversations in user data. Users can upload personal or group documents processed using Azure AI Document Intelligence and Azure OpenAI Embeddings. The application offers optional features like Content Safety, Image Generation, Video and Audio processing, Document Classification, User Feedback, Conversation Archiving, Metadata Extraction, and Enhanced Citations. It uses Azure Cosmos DB for storage, Azure Active Directory for authentication, and runs on Azure App Service. Suitable for enterprise use, it supports knowledge discovery, content generation, and collaborative AI tasks in a secure, Azure-native framework.

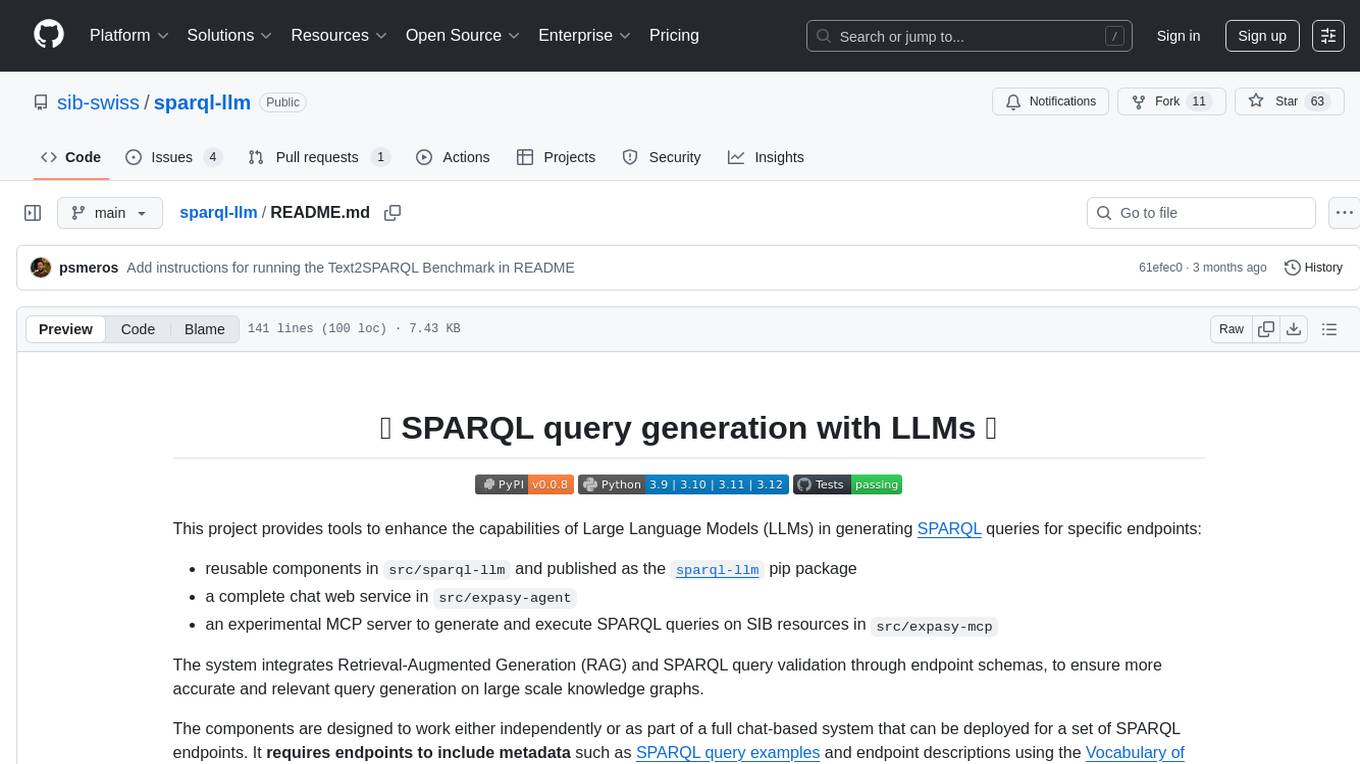

sparql-llm

This project provides tools to enhance the capabilities of Large Language Models (LLMs) in generating SPARQL queries for specific endpoints. It includes reusable components, a chat web service, and an experimental MCP server. The system integrates Retrieval-Augmented Generation (RAG) and SPARQL query validation through endpoint schemas to ensure accurate query generation on large-scale knowledge graphs. Components can work independently or as part of a chat-based system requiring endpoint metadata. Features include metadata extraction, SPARQL query validation, deployable chat system, and live example chat system at chat.expasy.org.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aims to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobuffs and python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use-cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, established benchmark, evaluation, and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm python package to help you assess the performance of your LLM in trustworthiness more quickly. For more details about TrustLLM, please refer to project website.

AI-YinMei

AI-YinMei is an AI virtual anchor Vtuber development tool (N card version). It supports fastgpt knowledge base chat dialogue, a complete set of solutions for LLM large language models: [fastgpt] + [one-api] + [Xinference], supports docking bilibili live broadcast barrage reply and entering live broadcast welcome speech, supports Microsoft edge-tts speech synthesis, supports Bert-VITS2 speech synthesis, supports GPT-SoVITS speech synthesis, supports expression control Vtuber Studio, supports painting stable-diffusion-webui output OBS live broadcast room, supports painting picture pornography public-NSFW-y-distinguish, supports search and image search service duckduckgo (requires magic Internet access), supports image search service Baidu image search (no magic Internet access), supports AI reply chat box [html plug-in], supports AI singing Auto-Convert-Music, supports playlist [html plug-in], supports dancing function, supports expression video playback, supports head touching action, supports gift smashing action, supports singing automatic start dancing function, chat and singing automatic cycle swing action, supports multi scene switching, background music switching, day and night automatic switching scene, supports open singing and painting, let AI automatically judge the content.