AMD-AI

AMD (Radeon GPU) ROCm-based setup for popular AI tools on Ubuntu 24.04

Stars: 141

AMD-AI is a repository containing detailed instructions for installing, setting up, and configuring ROCm on Ubuntu systems with AMD GPUs. The repository includes information on installing various tools like Stable Diffusion, ComfyUI, and Oobabooga for tasks like text generation and performance tuning. It provides guidance on adding AMD GPU package sources, installing ROCm-related packages, updating system packages, and finding graphics devices. The instructions are aimed at users with AMD hardware looking to set up their Linux systems for AI-related tasks.

README:

This file is focused on the current stable version of PyTorch. There is another variation of these instructions for the development / nightly version(s) here : https://github.com/nktice/AMD-AI/blob/main/dev.md

2023-07 - I have composed this collection of instructions from my own notes. I use this setup on my own Linux system with AMD parts. I've gone over these through many re-installs to get them all right. This is what I had hoped to find when I searched for install instructions, so I'm sharing them in the hope that they save time for other people. There may be extra parts in here that aren't needed, but this works for me. These were originally plain-text notes, with comments, like a shell script that I cut and paste from.

2023-09-09 - I had a report that this doesn't work in virtual machines (VirtualBox), as the system there cannot see the hardware, so it can't load drivers, etc. While this is not a guide about Windows, Windows users may find it more helpful to try DirectML - https://rocm.docs.amd.com/en/latest/deploy/windows/quick_start.html / https://github.com/lshqqytiger/stable-diffusion-webui-directml

[ ... updates abridged ... ]

2024-07-24 - PyTorch 2.4 is now stable and targets ROCm 6.1, so there are updates here to reflect those changes.

2024-08-04 - ROCm 6.2 is out, including support for the current version of Ubuntu (24.04 / Noble), so this revision includes changes to emphasize use of the new version. The previous stable version has been set aside here - https://github.com/nktice/AMD-AI/blob/main/ROCm-6.1.3-Stable.md - Note: I'm getting errors with the 2nd GPU with the new ROCm; a bug report is filed, and here is a link to that thread so you can follow along: https://github.com/ROCm/ROCm/issues/3518

ROCm 6.2 includes support for Ubuntu 24.04 (noble).

At this point we assume you've done the system install and know what that involves: you have a user, root access, etc.

# update system packages

sudo apt update -y && sudo apt upgrade -y

# turn on devel and sources.

sudo apt-add-repository -y -s -s

sudo apt install -y "linux-headers-$(uname -r)" \
  "linux-modules-extra-$(uname -r)"

This allows calls to older versions of Python by using "deadsnakes":

sudo add-apt-repository ppa:deadsnakes/ppa -y

sudo apt update -y

Make the directory if it doesn't exist yet. This location is recommended by the distribution maintainers.

sudo mkdir --parents --mode=0755 /etc/apt/keyrings

Download the key, convert the signing key to a full keyring as required by apt, and store it in the keyring directory:

wget https://repo.radeon.com/rocm/rocm.gpg.key -O - | \
  gpg --dearmor | sudo tee /etc/apt/keyrings/rocm.gpg > /dev/null

# amdgpu repository

echo 'deb [arch=amd64 signed-by=/etc/apt/keyrings/rocm.gpg] https://repo.radeon.com/amdgpu/6.2/ubuntu noble main' \
  | sudo tee /etc/apt/sources.list.d/amdgpu.list

sudo apt update -y

# AMDGPU DKMS

sudo apt install -y amdgpu-dkms

https://rocmdocs.amd.com/en/latest/deploy/linux/os-native/install.html

echo "deb [arch=amd64 signed-by=/etc/apt/keyrings/rocm.gpg] https://repo.radeon.com/rocm/apt/6.2 noble main" \
  | sudo tee --append /etc/apt/sources.list.d/rocm.list

echo -e 'Package: *\nPin: release o=repo.radeon.com\nPin-Priority: 600' \
  | sudo tee /etc/apt/preferences.d/rocm-pin-600
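If you later move to a newer ROCm release, the version number and Ubuntu codename appear in both source lines above. Here is a minimal sketch that prints those lines (and the pin) from two parameters. It assumes the repo.radeon.com URL layout stays the same as in the 6.2 / noble lines, so check that against AMD's install docs. The function names `rocm_apt_sources` and `rocm_apt_pin` are just for illustration:

```shell
#!/usr/bin/env bash
# Hypothetical helpers: print the amdgpu / rocm apt source lines and the
# apt pin used above, for a given ROCm version and Ubuntu codename.
# Assumes the repo.radeon.com URL layout matches the 6.2 / noble lines.

rocm_apt_sources() {
  local ver="$1" codename="$2"
  local opts="[arch=amd64 signed-by=/etc/apt/keyrings/rocm.gpg]"
  # line 1: amdgpu (kernel driver) repository
  printf 'deb %s https://repo.radeon.com/amdgpu/%s/ubuntu %s main\n' "$opts" "$ver" "$codename"
  # line 2: ROCm userspace repository
  printf 'deb %s https://repo.radeon.com/rocm/apt/%s %s main\n' "$opts" "$ver" "$codename"
}

rocm_apt_pin() {
  # prefer packages from repo.radeon.com over same-named distro packages
  printf 'Package: *\nPin: release o=repo.radeon.com\nPin-Priority: 600\n'
}
```

For example, `. /etc/os-release` sets `VERSION_CODENAME` (noble on 24.04). After that, `rocm_apt_sources 6.2 "$VERSION_CODENAME" | sed -n 2p | sudo tee /etc/apt/sources.list.d/rocm.list` writes the ROCm line. Plain `tee` (without `--append`) replaces the file, so re-running doesn't leave duplicate entries.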

sudo apt update -y

This is a lot of packages, but comparatively small, so they're worth including, as some later steps may want them as dependencies without much notice.

# ROCm...

sudo apt install -y rocm-dev rocm-libs rocm-hip-sdk

# ld.so.conf update

sudo tee --append /etc/ld.so.conf.d/rocm.conf <<EOF
/opt/rocm/lib
/opt/rocm/lib64
EOF

sudo ldconfig

# update path

echo "PATH=/opt/rocm/bin:/opt/rocm/opencl/bin:$PATH" >> ~/.profile

sudo /opt/rocm/bin/rocminfo | grep gfx

My 6900 reported as gfx1030, and my 7900 XTX shows up as gfx1100.
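With more than one card, it can help to list just the distinct gfx targets rather than every grep hit. Here is a sketch that does this. It assumes rocminfo's agent lines look like `Name:   gfx1100`, which is how they appear on the systems above, but the exact layout may vary between ROCm versions. `gfx_targets` is a made-up name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read rocminfo output on stdin and print each distinct
# GPU target (gfx...) once. Only "Name:" lines whose value starts with "gfx"
# are kept, so ISA lines such as "amdgcn-amd-amdhsa--gfx1100" and the CPU
# agent's name are skipped.
gfx_targets() {
  sed -n 's/^[[:space:]]*Name:[[:space:]]*\(gfx[0-9a-f]*\)[[:space:]]*$/\1/p' | sort -u
}
```

Usage: `sudo /opt/rocm/bin/rocminfo | gfx_targets`. On the two-card system described above, this should print gfx1030 and gfx1100, one per line.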

The commands below use `whoami`, so they add whichever user runs them; change that if you're setting this up for a different user.

sudo adduser `whoami` video

sudo adduser `whoami` render

# git and git-lfs (large file support)

sudo apt install -y git git-lfs
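Group membership from the `adduser` lines above only applies to sessions started afterwards, and the reboot further down takes care of that. Here is a small check, as a sketch. `id -nG` with no user argument reports the groups of the current session, so it shows whether the change has taken effect yet. `has_gpu_groups` is a hypothetical name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only if a space-separated group list contains
# both "video" and "render", the two groups added with adduser above.
has_gpu_groups() {
  # pad with spaces so whole words are matched ("videos" is not "video")
  case " $1 " in *" video "*) ;; *) return 1 ;; esac
  case " $1 " in *" render "*) ;; *) return 1 ;; esac
  return 0
}
```

Usage: `has_gpu_groups "$(id -nG)" || echo "not in video/render yet - log out and back in (or reboot)"`.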

# development tool may be required later...

sudo apt install -y libstdc++-12-dev

# stable diffusion likes TCMalloc...

sudo apt install -y libtcmalloc-minimal4

This section is optional, and as such has been moved to performance-tuning.

nvtop - Note: I have had issues with the distro version crashing with 2 GPUs; installing a newer version from source works fine. Instructions for that are included at the bottom, as they depend on things installed between here and there. Project website: https://github.com/Syllo/nvtop

sudo apt install -y nvtop

sudo apt install -y radeontop rovclock

sudo reboot

This system is built to use its own venv (rather than Conda)...

https://github.com/AUTOMATIC1111/stable-diffusion-webui

Get the files...

cd

git clone https://github.com/AUTOMATIC1111/stable-diffusion-webui.git

cd stable-diffusion-webui

The 1.9.x+ release series breaks the API so that it won't work with Oobabooga's TGW, so the following resets to the 1.8.0 release that does work with Oobabooga.

2024-07-04 - Oobabooga 1.9 resolves this issue - these lines are remarked out for now, but preserved in case someone wants to see how to do something similar in the future...

# git checkout bef51ae

# git reset --hard

sudo apt install -y wget git python3.10 python3.10-venv libgl1

python3.10 -m venv venv

source venv/bin/activate

python3.10 -m pip install -U pip

deactivate

tee --append webui-user.sh <<EOF

# specify compatible python version

python_cmd="python3.10"

## Torch for ROCm

# workaround for ROCm + Torch > 2.4.x - https://github.com/comfyanonymous/ComfyUI/issues/3698

export TORCH_BLAS_PREFER_HIPBLASLT=0

# generic import...

# export TORCH_COMMAND="pip install torch torchvision --index-url https://download.pytorch.org/whl/nightly/rocm6.1"

# use specific versions to avoid downloading all the nightlies... ( update dates as needed )

export TORCH_COMMAND="pip install --pre torch torchvision --index-url https://download.pytorch.org/whl/rocm6.1"

## And if you want to call this from other programs...

export COMMANDLINE_ARGS="--api"

## crashes with 2 cards, so to get it to run on the second card (only), unremark the following

# export CUDA_VISIBLE_DEVICES="1"

EOF

If you don't link an existing models folder (below), it will install a default model to get you going. Note that the default models folder includes things it needs, which you'll want to copy into the folder where you keep your other models (to avoid issues).

#mv models models.1

#ln -s /path/to/models models

Note that the first time it starts, it may take a while to fetch what it needs, and it's not always good about saying what it's up to.

./webui.sh

ComfyUI - a variation of https://raw.githubusercontent.com/ltdrdata/ComfyUI-Manager/main/scripts/install-comfyui-venv-linux.sh that includes ComfyUI-Manager.

Same install of packages here as for Stable Diffusion (included here in case you haven't installed SD and just want ComfyUI...)

sudo apt install -y wget git python3 python3-venv libgl1

cd

git clone https://github.com/comfyanonymous/ComfyUI

cd ComfyUI/custom_nodes

git clone https://github.com/ltdrdata/ComfyUI-Manager

cd ..

python3 -m venv venv

source venv/bin/activate

# pre-install torch and torchvision from nightlies - note you may want to update versions...

python3 -m pip install --pre torch torchvision --index-url https://download.pytorch.org/whl/rocm6.1

python3 -m pip install -r requirements.txt --extra-index-url https://download.pytorch.org/whl/rocm6.1

python3 -m pip install -r custom_nodes/ComfyUI-Manager/requirements.txt --extra-index-url https://download.pytorch.org/whl/rocm6.1
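While the venv is still active, it's worth confirming that pip picked up a ROCm build of torch rather than a CPU or CUDA wheel. ROCm wheels from download.pytorch.org carry a `+rocm` tag in their version string, for example `2.4.0+rocm6.1` as pinned in the Oobabooga section below. Here is a sketch of that check; `is_rocm_build` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if a torch version string has a "+rocm" local
# version tag (e.g. "2.4.0+rocm6.1"). CPU / CUDA wheels show "+cpu", "+cu121",
# or no tag at all (the plain PyPI wheels).
is_rocm_build() {
  case "$1" in
    *+rocm*) return 0 ;;
    *) return 1 ;;
  esac
}
```

Usage: `is_rocm_build "$(python3 -c 'import torch; print(torch.__version__)')" && python3 -c 'import torch; print(torch.cuda.is_available())'`. On ROCm builds, PyTorch still exposes the GPU through the `torch.cuda` API, so `True` there means the card is visible.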

# exit the venv if needed...

deactivate

Scripts for running the program...

# run_gpu.sh

tee --append run_gpu.sh <<EOF
#!/bin/bash
source venv/bin/activate
python3 main.py --preview-method auto
EOF

chmod +x run_gpu.sh
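`tee --append` adds to whatever is already there, so running the block above twice leaves two copies of the script in run_gpu.sh. Here is a sketch of an alternative that takes a different approach from the original scripts. It overwrites the file instead, changes into the script's own directory first (so it can be started from anywhere), and passes any extra arguments through to main.py. `write_comfy_launcher` is a made-up name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a ComfyUI launcher script, replacing any existing
# file instead of appending to it.
#   $1      output path (e.g. run_gpu.sh)
#   $2 ...  flags baked into the main.py call (e.g. --preview-method auto)
write_comfy_launcher() {
  local out="$1"
  shift
  local flags=""
  if [ "$#" -gt 0 ]; then
    flags=" $*"
  fi
  {
    printf '#!/bin/bash\n'
    # run from the script's own folder so venv/ and main.py are found
    printf 'cd "$(dirname "$0")" || exit 1\n'
    printf 'source venv/bin/activate\n'
    # exec replaces the shell; "$@" forwards any arguments given at launch
    printf 'exec python3 main.py%s "$@"\n' "$flags"
  } > "$out"
  chmod +x "$out"
}
```

Usage, from ~/ComfyUI: `write_comfy_launcher run_gpu.sh --preview-method auto` and `write_comfy_launcher run_cpu.sh --preview-method auto --cpu`.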

# run_cpu.sh

tee --append run_cpu.sh <<EOF
#!/bin/bash
source venv/bin/activate
python3 main.py --preview-method auto --cpu
EOF

chmod +x run_cpu.sh

Update the config file to point to Stable Diffusion (presuming it's installed...)

# config file - connect to stable-diffusion-webui

cp extra_model_paths.yaml.example extra_model_paths.yaml

sed -i "s@path/to@`echo ~`@g" extra_model_paths.yaml
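The sed line swaps every `path/to` in the copied example file for your home directory, and using `@` as the delimiter avoids having to escape the slashes. For instance, a line like `base_path: path/to/stable-diffusion-webui/` becomes `base_path: /home/<you>/stable-diffusion-webui/`. That line matches the example file at the time of writing, so check your copy. Here is a sketch of the same substitution as a filter, so you can preview the result before writing it. `point_paths_at_home` is hypothetical, and it assumes the directory you pass contains no `@` or `&` (both are special in this sed expression):

```shell
#!/usr/bin/env bash
# Hypothetical helper: same substitution as the sed line above, as a stdin ->
# stdout filter. $1 is the directory to use in place of "path/to".
# Assumes $1 contains no "@" (the delimiter) and no "&" (means "the match").
point_paths_at_home() {
  sed "s@path/to@$1@g"
}
```

To preview, run `point_paths_at_home "$HOME" < extra_model_paths.yaml.example | less`. Then `point_paths_at_home "$HOME" < extra_model_paths.yaml.example > extra_model_paths.yaml` writes the file in one step.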

# edit config file to point to your checkpoints etc

#vi extra_model_paths.yaml

Oobabooga text-generation-webui - Project Website: https://github.com/oobabooga/text-generation-webui.git

First we'll need Conda (required for PyTorch in this setup). Conda provides virtual environments for Python, so that programs with different dependencies can have different environments.

More info on managing conda: https://docs.conda.io/projects/conda/en/latest/user-guide/getting-started.html

Other notes: https://docs.conda.io/projects/conda/en/latest/user-guide/install/linux.html

Download info: https://www.anaconda.com/download/

Anaconda ( if you prefer this to miniconda below )

#cd ~/Downloads/

#wget https://repo.anaconda.com/archive/Anaconda3-2023.09-0-Linux-x86_64.sh

#bash Anaconda3-2023.09-0-Linux-x86_64.sh -b

#cd ~

#ln -s anaconda3 conda

Miniconda (if you prefer this to Anaconda above...) [ https://docs.conda.io/projects/miniconda/en/latest/ ]

cd ~/Downloads/

wget https://repo.anaconda.com/miniconda/Miniconda3-latest-Linux-x86_64.sh

bash Miniconda3-latest-Linux-x86_64.sh -b

cd ~

ln -s miniconda3 conda

echo "PATH=~/conda/bin:$PATH" >> ~/.profile

source ~/.profile
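Both PATH lines (for ROCm earlier and for conda here) use `>>`, so re-running them adds the same line to ~/.profile again each time. Also, because of the double quotes, `$PATH` is expanded when the line is written, so the file records whatever PATH you had at that moment. Here is a sketch of an append-only-if-missing helper, used with single quotes so `$PATH` is left for the shell to expand at login. `append_once` is a hypothetical name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: append a line to a file only if that exact line is not
# already present, so repeated runs don't stack duplicate entries.
append_once() {
  local line="$1" file="$2"
  touch "$file"
  # -x: whole-line match, -F: fixed string (no regex), -q: quiet
  if ! grep -qxF -- "$line" "$file"; then
    printf '%s\n' "$line" >> "$file"
  fi
}
```

Usage: `append_once 'PATH="$HOME/conda/bin:$PATH"' ~/.profile` (and similarly for the /opt/rocm/bin line).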

conda update -y -n base -c defaults conda

conda install -y cmake ninja

conda init

source ~/.profile

sudo apt install -y python3-pip

pip3 install --upgrade pip

## show outdated packages...

#pip list --outdated

## check dependencies

#pip check

## install specified version

#pip install <packagename>==<version>

conda create -n textgen python=3.11 -y

conda activate textgen

# pre-install

pip install --pre cmake colorama filelock lit numpy Pillow Jinja2 \
  mpmath fsspec MarkupSafe certifi networkx \
  sympy packaging requests \
  --index-url https://download.pytorch.org/whl/rocm6.1

There are version conflicts, so we specify the versions that we want installed:

#pip install --pre torch torchvision torchtext torchaudio triton pytorch-triton-rocm \

#pip install --pre torch==2.3.1+rocm6.0 torchvision==0.18.1+rocm6.0 torchaudio==2.3.1 triton pytorch-triton-rocm \

# --index-url https://download.pytorch.org/whl/rocm6.0

pip install --pre torch==2.4.0+rocm6.1 torchvision==0.19.0+rocm6.1 torchaudio==2.4.0 triton pytorch-triton-rocm \
  --index-url https://download.pytorch.org/whl/rocm6.1

2024-05-12 For some odd reason, torchtext isn't recognized, even though it's there... so we specify it by its URL to be explicit.

pip install https://download.pytorch.org/whl/cpu/torchtext-0.18.0%2Bcpu-cp311-cp311-linux_x86_64.whl#sha256=c760e672265cd6f3e4a7c8d4a78afe9e9617deacda926a743479ee0418d4207d

2024-04-24 - AMD's own ROCm version of bitsandbytes has been updated! - https://github.com/ROCm/bitsandbytes ( ver 0.44.0.dev0 at time of writing )

cd

git clone https://github.com/ROCm/bitsandbytes.git

cd bitsandbytes

pip install .

cd

git clone https://github.com/oobabooga/text-generation-webui

cd text-generation-webui

2024-07-26 Oobabooga release 1.12 changed how requirements are handled, including calls that refer to old versions of PyTorch which didn't work for me... So the usual command here is remarked out, and I have instead offered a replacement requirements file with minimal includes that, combined with everything else here, gets it up and running (for me) using more recent versions of packages.

#pip install -r requirements_amd.txt

tee --append requirements_amdai.txt <<EOF

# alternate simplified requirements from https://github.com/nktice/AMD-AI

accelerate>=0.32

colorama

datasets

einops

gradio>=4.26

hqq>=0.1.7.post3

jinja2>=3.1.4

lm_eval>=0.3.0

markdown

numba>=0.59

numpy>=1.26

optimum>=1.17

pandas

peft>=0.8

Pillow>=9.5.0

psutil

pyyaml

requests

rich

safetensors>=0.4

scipy

sentencepiece

tensorboard

transformers>=4.43

tqdm

wandb

# API

SpeechRecognition>=3.10.0

flask_cloudflared>=0.0.14

sse-starlette>=1.6.5

tiktoken

EOF
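The pip installs from here on go into whichever environment is active, and it's easy to end up in `base` after opening a new terminal. `conda activate` sets `CONDA_DEFAULT_ENV` to the active environment's name, so a small guard can check it first. Here is a sketch; `require_conda_env` is a hypothetical name:

```shell
#!/usr/bin/env bash
# Hypothetical guard: return non-zero, with a message on stderr, unless the
# named conda environment is the active one (as reported by CONDA_DEFAULT_ENV).
require_conda_env() {
  local want="$1"
  local have="${CONDA_DEFAULT_ENV:-none}"
  if [ "$have" != "$want" ]; then
    echo "expected conda env '$want' to be active, found '$have'" >&2
    return 1
  fi
  return 0
}
```

Usage: `require_conda_env textgen && pip install -r requirements_amdai.txt ...`, so the install simply doesn't run when the wrong environment is active.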

pip install -r requirements_amdai.txt --extra-index-url https://download.pytorch.org/whl/nightly/rocm6.1

git clone https://github.com/turboderp/exllamav2 repositories/exllamav2

cd repositories/exllamav2

## Force collection back to base 0.0.11

## git reset --hard a4ecea6

pip install -r requirements.txt --extra-index-url https://download.pytorch.org/whl/rocm6.1

pip install . --index-url https://download.pytorch.org/whl/rocm6.1

cd ../..

2024-06-18 - Llama-cpp-python - another loader that is highly efficient in resource use, but not very fast. https://github.com/abetlen/llama-cpp-python It may need models in GGUF format ( and not other types ).

## remove old versions

pip uninstall llama_cpp_python -y

pip uninstall llama_cpp_python_cuda -y

## install llama-cpp-python

git clone --recurse-submodules https://github.com/abetlen/llama-cpp-python.git repositories/llama-cpp-python

cd repositories/llama-cpp-python

CC='/opt/rocm/llvm/bin/clang' CXX='/opt/rocm/llvm/bin/clang++' CFLAGS='-fPIC' CXXFLAGS='-fPIC' CMAKE_PREFIX_PATH='/opt/rocm' ROCM_PATH="/opt/rocm" HIP_PATH="/opt/rocm" CMAKE_ARGS="-GNinja -DLLAMA_HIPBLAS=ON -DLLAMA_AVX2=on " pip install --no-cache-dir .

cd ../..
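llama.cpp's HIP build instructions (from the `LLAMA_HIPBLAS` era used here) also accept `-DAMDGPU_TARGETS=...` to choose which gfx targets to compile for, such as the gfx1030 / gfx1100 reported by rocminfo earlier. Here is a sketch that builds the `CMAKE_ARGS` string with optional targets; `llama_cmake_args` is hypothetical. Newer llama.cpp releases have renamed the HIP option (to `GGML_HIPBLAS`, and later `GGML_HIP`), so check the options for the version you clone:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the CMAKE_ARGS used in the command above, plus
# -DAMDGPU_TARGETS=... when gfx targets are given (joined with ";", the
# CMake list separator). Option names are assumed from the LLAMA_HIPBLAS era.
llama_cmake_args() {
  local args="-GNinja -DLLAMA_HIPBLAS=ON -DLLAMA_AVX2=on"
  if [ "$#" -gt 0 ]; then
    local IFS=';'
    # "$*" joins the arguments with the first character of IFS (";")
    args="$args -DAMDGPU_TARGETS=$*"
  fi
  printf '%s\n' "$args"
}
```

For example, keep the other variables (CC, CXX, CFLAGS, ...) from the command above but replace its `CMAKE_ARGS="..."` part with `CMAKE_ARGS="$(llama_cmake_args gfx1100)"`.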

Models: If you're new to this, new models can be downloaded from the shell via a Python script, or from a form in the interface. There are lots of them ( http://huggingface.co ), and generally the GPTQ models by TheBloke are likely to load ( https://huggingface.co/TheBloke ). The 30B/33B models will load on 24GB of VRAM, but may error or run out of memory depending on usage and parameters. Worth mentioning: TurboDerp (author of the exllama loaders) has been posting exllamav2 (exl2) processed versions of models ( https://huggingface.co/turboderp ) for use with the exllamav2 loader; when downloading, note the --branch option.

To get new models, note that the ~/text-generation-webui directory has a program, download-model.py, made for downloading models from Hugging Face's collection.
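download-model.py takes the Hugging Face repo name as its argument, and `--branch` selects a branch, which matters for the exl2 uploads mentioned above. Here is a sketch of a wrapper that runs it from the text-generation-webui folder (with the textgen conda env active), plus a dry-run switch to just print the command. `tgw_download`, `DRY_RUN`, and the repo/branch names in the test are hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical wrapper around text-generation-webui's download-model.py.
#   $1  Hugging Face repo, e.g. "someuser/some-model-exl2" (placeholder)
#   $2  optional branch, e.g. "4.0bpw" (placeholder)
# With DRY_RUN=1 it only prints the command instead of running it.
tgw_download() {
  local repo="$1" branch="${2:-}"
  # build the command in the positional parameters (keeps quoting intact)
  set -- python download-model.py "$repo"
  if [ -n "$branch" ]; then
    set -- "$@" --branch "$branch"
  fi
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    # assumes the install location used above (~/text-generation-webui)
    (cd "$HOME/text-generation-webui" && "$@")
  fi
}
```

Running `DRY_RUN=1 tgw_download someuser/some-model-exl2 4.0bpw` first is a cheap way to check the command before starting a multi-gigabyte download.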

If you have old models, link the pre-stored models into the models directory:

# cd ~/text-generation-webui

# mv models models.1

# ln -s /path/to/models models

Let's create a script (run.sh) to run the program...

tee --append run.sh <<EOF
#!/bin/bash
## activate conda
conda activate textgen
## command to run server...
python server.py --extensions sd_api_pictures send_pictures gallery
# if you want the server to listen on the local network so other machines can access it, add --listen.
#python server.py --listen --extensions sd_api_pictures send_pictures gallery
conda deactivate
EOF

chmod u+x run.sh

Note that to run the script:

source run.sh

Here's an example screenshot: nvtop, SD console, TGW console...

This screencap was taken using ROCm 6.1.3, under this config: https://github.com/nktice/AMD-AI/blob/main/ROCm-6.1.3-Dev.md

nvtop (optional) - a tool for displaying GPU / memory usage info. Project website: https://github.com/Syllo/nvtop - The distro package crashes with 2 GPUs, while this newer version built from source works fine.

sudo apt install -y libdrm-dev libsystemd-dev libudev-dev

cd

git clone https://github.com/Syllo/nvtop.git

mkdir -p nvtop/build && cd nvtop/build

cmake .. -DNVIDIA_SUPPORT=OFF -DAMDGPU_SUPPORT=ON -DINTEL_SUPPORT=OFF

make

sudo make install


Alternative AI tools for AMD-AI

Similar Open Source Tools


air

Air is a live-reloading command line utility for developing Go applications. It provides colorful log output, customizable build or any command, support for excluding subdirectories, and allows watching new directories after Air started. Users can overwrite specific configuration from arguments and pass runtime arguments for running the built binary. Air can be installed via `go install`, `install.sh`, or `goblin.run`, and can also be used with Docker/Podman. It supports debugging, Docker Compose, and provides a Q&A section for common issues. The tool requires Go 1.16+ for development and welcomes pull requests. Air is released under the GNU General Public License v3.0.

air

Air is a live-reloading command line utility for developing Go applications. It provides colorful log output, allows customization of build or any command, supports excluding subdirectories, and allows watching new directories after Air has started. Air can be installed via `go install`, `install.sh`, `goblin.run`, or Docker/Podman. To use Air, simply run `air` in your project root directory and leave it alone to focus on your code. Air has nothing to do with hot-deploy for production.

comp

Comp AI is an open-source compliance automation platform designed to assist companies in achieving compliance with standards like SOC 2, ISO 27001, and GDPR. It transforms compliance into an engineering problem solved through code, automating evidence collection, policy management, and control implementation while maintaining data and infrastructure control.

screeps-starter-rust

screeps-starter-rust is a Rust AI starter kit for Screeps: World, a JavaScript-based MMO game. It utilizes the screeps-game-api bindings from the rustyscreeps organization and wasm-pack for building Rust code to WebAssembly. The example includes Rollup for bundling javascript, Babel for transpiling code, and screeps-api Node.js package for deployment. Users can refer to the Rust version of game APIs documentation at https://docs.rs/screeps-game-api/. The tool supports most crates on crates.io, except those interacting with OS APIs.

Airshipper

Airshipper is a cross-platform Veloren launcher that allows users to update/download and start nightly builds of the game. It features a fancy UI with self-updating capabilities on Windows. Users can compile it from source and also have the option to install Airshipper-Server for advanced configurations. Note that Airshipper is still in development and may not be stable for all users.

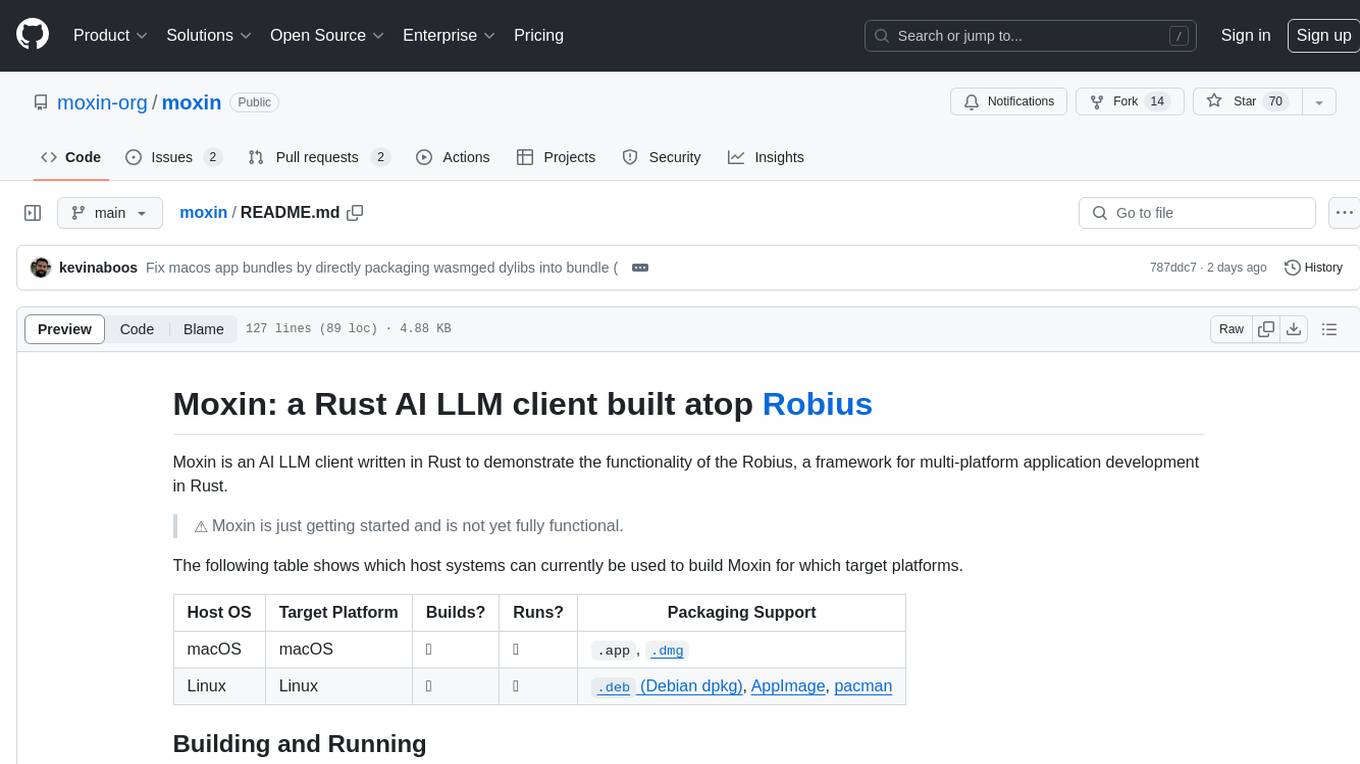

moxin

Moxin is an AI LLM client written in Rust to demonstrate the functionality of the Robius framework for multi-platform application development. It is currently in early stages of development and not fully functional. The tool supports building and running on macOS and Linux systems, with packaging options available for distribution. Users can install the required WasmEdge WASM runtime and dependencies to build and run Moxin. Packaging for distribution includes generating `.deb` Debian packages, AppImage, and pacman installation packages for Linux, as well as `.app` bundles and `.dmg` disk images for macOS. The macOS app is not signed, leading to a warning on installation, which can be resolved by removing the quarantine attribute from the installed app.

sandvault

SandVault is a tool that manages a limited user account to sandbox shell commands and AI agents on macOS, providing a lightweight alternative to application isolation using virtual machines. It allows for running Claude Code, OpenAI Codex, Google Gemini, and shell commands safely within a sandboxed environment. SandVault offers features like fast context switching, passwordless account switching, shared workspace access, and clean uninstallation. The tool operates with limited access to the user's computer, ensuring security by restricting access to certain directories and system files.

elyra

Elyra is a set of AI-centric extensions to JupyterLab Notebooks that includes features like Visual Pipeline Editor, running notebooks/scripts as batch jobs, reusable code snippets, hybrid runtime support, script editors with execution capabilities, debugger, version control using Git, and more. It provides a comprehensive environment for data scientists and AI practitioners to develop, test, and deploy machine learning models and workflows efficiently.

zsh_codex

Zsh Codex is a ZSH plugin that enables AI-powered code completion in the command line. It supports both OpenAI's Codex and Google's Generative AI (Gemini), providing advanced language model capabilities for coding tasks directly in the terminal. Users can easily install the plugin and configure it to enhance their coding experience with AI assistance.

backend.ai-webui

Backend.AI Web UI is a user-friendly web and app interface designed to make AI accessible for end-users, DevOps, and SysAdmins. It provides features for session management, inference service management, pipeline management, storage management, node management, statistics, configurations, license checking, plugins, help & manuals, kernel management, user management, keypair management, manager settings, proxy mode support, service information, and integration with the Backend.AI Web Server. The tool supports various devices, offers a built-in websocket proxy feature, and allows for versatile usage across different platforms. Users can easily manage resources, run environment-supported apps, access a web-based terminal, use Visual Studio Code editor, manage experiments, set up autoscaling, manage pipelines, handle storage, monitor nodes, view statistics, configure settings, and more.

nosia

Nosia is a platform that allows users to run an AI model on their own data. It is designed to be easy to install and use. Users can follow the provided guides for quickstart, API usage, upgrading, starting, stopping, and troubleshooting. The platform supports custom installations with options for remote Ollama instances, custom completion models, and custom embeddings models. Advanced installation instructions are also available for macOS with a Debian or Ubuntu VM setup. Users can access the platform at 'https://nosia.localhost' and troubleshoot any issues by checking logs and job statuses.

please-cli

Please CLI is an AI helper script designed to create CLI commands by leveraging the GPT model. Users can input a command description, and the script will generate a Linux command based on that input. The tool offers various functionalities such as invoking commands, copying commands to the clipboard, asking questions about commands, and more. It supports parameters for explanation, using different AI models, displaying additional output, storing API keys, querying ChatGPT with specific models, showing the current version, and providing help messages. Users can install Please CLI via Homebrew, apt, Nix, dpkg, AUR, or manually from source. The tool requires an OpenAI API key for operation and offers configuration options for setting API keys and OpenAI settings. Please CLI is licensed under the Apache License 2.0 by TNG Technology Consulting GmbH.

mlir-aie

This repository contains an MLIR-based toolchain for AI Engine-enabled devices, such as AMD Ryzen™ AI and Versal™. This repository can be used to generate low-level configurations for the AI Engine portion of these devices. AI Engines are organized as a spatial array of tiles, where each tile contains AI Engine cores and/or memories. The spatial array is connected by stream switches that can be configured to route data between AI Engine tiles scheduled by their programmable Data Movement Accelerators (DMAs). This repository contains MLIR representations, with multiple levels of abstraction, to target AI Engine devices. This enables compilers and developers to program AI Engine cores, as well as describe data movements and array connectivity. A Python API is made available as a convenient interface for generating MLIR design descriptions. Backend code generation is also included, targeting the aie-rt library. This toolchain uses the AI Engine compiler tool which is part of the AMD Vitis™ software installation: these tools require a free license for use from the Product Licensing Site.

QA-Pilot

QA-Pilot is an interactive chat project that leverages online/local LLM for rapid understanding and navigation of GitHub code repository. It allows users to chat with GitHub public repositories using a git clone approach, store chat history, configure settings easily, manage multiple chat sessions, and quickly locate sessions with a search function. The tool integrates with `codegraph` to view Python files and supports various LLM models such as ollama, openai, mistralai, and localai. The project is continuously updated with new features and improvements, such as converting from `flask` to `fastapi`, adding `localai` API support, and upgrading dependencies like `langchain` and `Streamlit` to enhance performance.

fiftyone

FiftyOne is an open-source tool designed for building high-quality datasets and computer vision models. It supercharges machine learning workflows by enabling users to visualize datasets, interpret models faster, and improve efficiency. With FiftyOne, users can explore scenarios, identify failure modes, visualize complex labels, evaluate models, find annotation mistakes, and much more. The tool aims to streamline the process of improving machine learning models by providing a comprehensive set of features for data analysis and model interpretation.

For similar tasks


aiconfigurator

The `aiconfigurator` tool assists in finding a strong starting configuration for disaggregated serving in AI deployments. It helps optimize throughput at a given latency by evaluating thousands of configurations based on model, GPU count, and GPU type. The tool models LLM inference using collected data for a target machine and framework, running via CLI and web app. It generates configuration files for deployment with Dynamo, offering features like customized configuration, all-in-one automation, and tuning with advanced features. The tool estimates performance by breaking down LLM inference into operations, collecting operation execution times, and searching for strong configurations. Supported features include models like GPT and operations like attention, KV cache, GEMM, AllReduce, embedding, P2P, element-wise, MoE, MLA BMM, TRTLLM versions, and parallel modes like tensor-parallel and pipeline-parallel.

amd-shark-ai

The amdshark-ai repository contains the amdshark Modeling and Serving Libraries, which include sub-projects like shortfin for high performance inference, amdsharktank for model recipes and conversion tools, and amdsharktuner for tuning program performance. Developers can find API documentation, programming guides, and support matrix for various models within the repository.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.