zsh-github-copilot

🧠 GitHub Copilot for your command line

Stars: 66

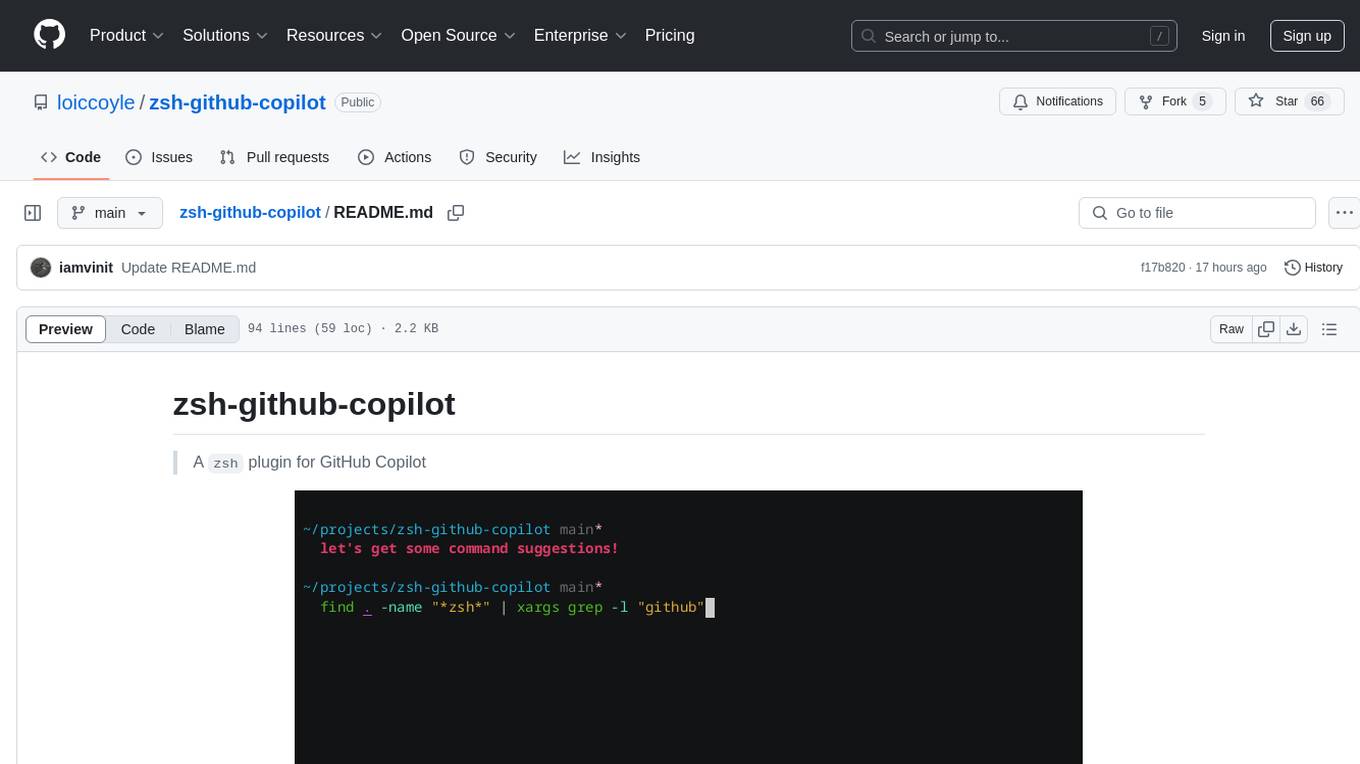

zsh-github-copilot is a `zsh` plugin that enhances the GitHub Copilot experience by providing keybinds to quickly access command explanations and get Copilot suggestions. It integrates seamlessly with GitHub CLI and offers a smooth setup process. Users can easily install the plugin using popular zsh plugin managers like antigen, oh-my-zsh, zinit, zplug, and zpm. By binding specific keys, users can access the 'suggest' and 'explain' functionalities to improve their coding workflow with GitHub Copilot. This plugin is designed to streamline the usage of GitHub Copilot within the zsh shell environment.

README:

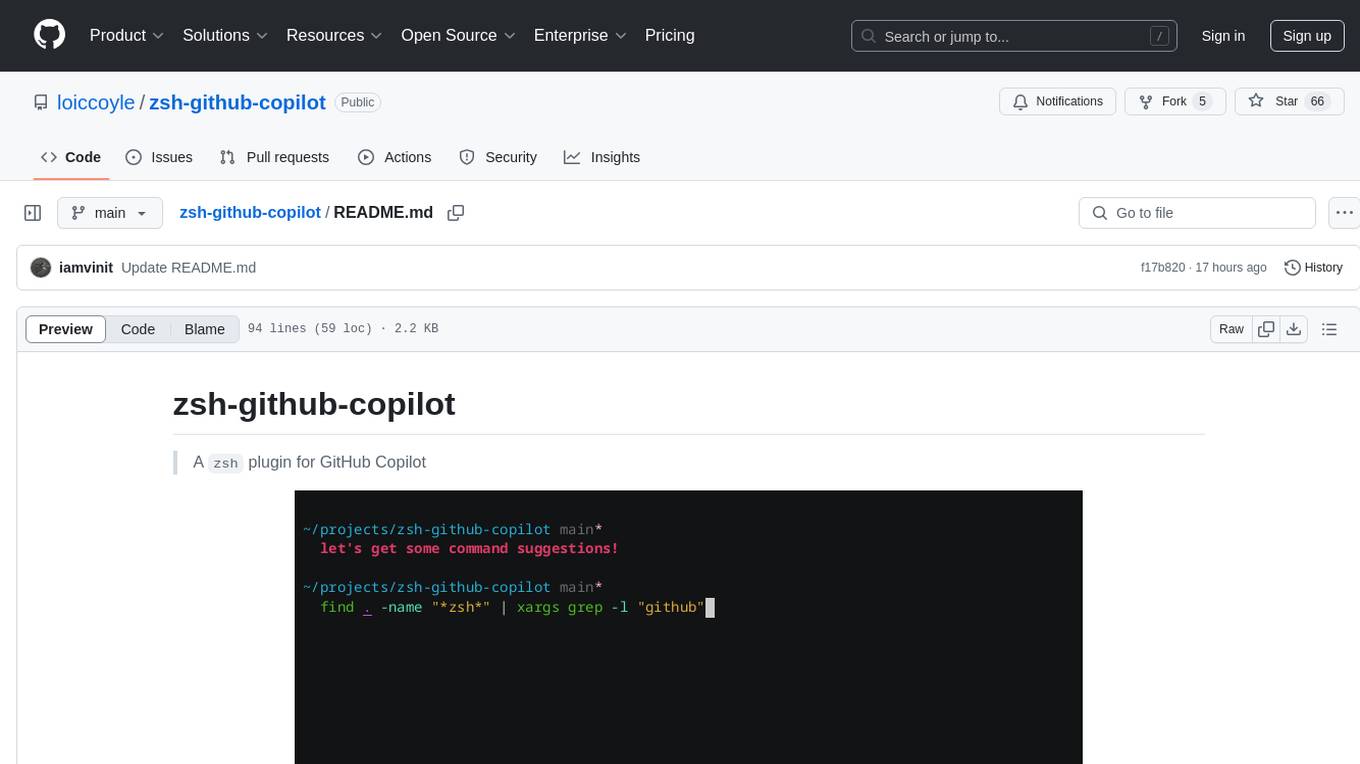

A `zsh` plugin for GitHub Copilot

Requires the GitHub CLI with the Copilot extension installed and configured.
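As a rough way to verify this requirement by hand, the sketch below checks that `gh` is on your `PATH` and that the Copilot extension appears in `gh extension list`. The `have_cmd` helper is a hypothetical name introduced only for this example, and the snippet is not the check the plugin itself runs.

```shell
# Hypothetical helper (not part of the plugin): succeed if a command is on PATH.
have_cmd() {
  command -v "$1" >/dev/null 2>&1
}

if have_cmd gh; then
  # `gh extension list` prints the installed extensions; look for gh-copilot.
  if gh extension list 2>/dev/null | grep -q 'gh-copilot'; then
    echo "gh and the Copilot extension are installed"
  else
    echo "Copilot extension missing; try: gh extension install github/gh-copilot"
  fi
else
  echo "GitHub CLI (gh) not found on PATH"
fi
```

If the extension is missing, installing it with `gh extension install github/gh-copilot` and signing in with `gh auth login` is the usual route.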

The plugin checks for the extension and other dependencies at source time. To disable this check, set the `ZSH_GH_COPILOT_NO_CHECK` environment variable to `1`.
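A minimal sketch of disabling the dependency check. Since the check runs at source time, this assumes the variable has to be set before your plugin manager loads the plugin, so the line would go near the top of your `.zshrc`:

```shell
# Assumption: place this in ~/.zshrc above the line that loads the plugin.
export ZSH_GH_COPILOT_NO_CHECK=1
```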

antigen: add the following to your .zshrc:

    antigen bundle loiccoyle/zsh-github-copilot

oh-my-zsh: clone this repository into $ZSH_CUSTOM/plugins (by default ~/.oh-my-zsh/custom/plugins):

    git clone https://github.com/loiccoyle/zsh-github-copilot ${ZSH_CUSTOM:-~/.oh-my-zsh/custom}/plugins/zsh-github-copilot

Add the plugin to the list of plugins for Oh My Zsh to load (inside ~/.zshrc):

    plugins=(
        # other plugins...
        zsh-github-copilot
    )

zinit: add the following to your .zshrc:

    zinit light loiccoyle/zsh-github-copilot

zplug: add the following to your .zshrc:

    zplug "loiccoyle/zsh-github-copilot"

zpm: add the following to your .zshrc:

    zpm load loiccoyle/zsh-github-copilot

Bind the suggest and/or explain widgets:

    bindkey '^[|' zsh_gh_copilot_explain  # bind Alt+Shift+\ to explain
    bindkey '^[\' zsh_gh_copilot_suggest  # bind Alt+\ to suggest

With the Option key (macOS):

    bindkey '»' zsh_gh_copilot_explain  # bind Option+Shift+\ to explain
    bindkey '«' zsh_gh_copilot_suggest  # bind Option+\ to suggest

To get command explanations, write out the command in your prompt and hit your keybind.

To get Copilot to suggest a command to fulfill a query, type out the query in your prompt and hit your suggest keybind.
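For comparison, here is roughly what the two keybinds save you from typing. This sketch assumes the widgets ultimately hand the prompt text to the `gh copilot explain` and `gh copilot suggest` subcommands of the Copilot extension, which the README does not spell out. `copilot_by_hand` is a hypothetical wrapper written for this example, not something the plugin provides.

```shell
# Hypothetical wrapper (not part of the plugin): run a Copilot query by hand.
# Usage: copilot_by_hand explain|suggest "TEXT"
copilot_by_hand() {
  mode=$1
  text=$2
  # Only the two modes named in the README are accepted.
  case $mode in
    explain|suggest) ;;
    *)
      echo "usage: copilot_by_hand explain|suggest TEXT" >&2
      return 2
      ;;
  esac
  # Fail early if the GitHub CLI is not installed.
  if ! command -v gh >/dev/null 2>&1; then
    echo "gh not found on PATH" >&2
    return 127
  fi
  gh copilot "$mode" "$text"
}
```

For example, `copilot_by_hand explain "tar -xzf archive.tar.gz"` would ask for an explanation of that command, and `copilot_by_hand suggest "list files larger than 100MB"` would ask for a matching command. With the plugin, the same text is typed at the prompt and sent with a single keypress.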

This plugin draws from stefanheule/zsh-llm-suggestions.

Alternative AI tools for zsh-github-copilot

Similar Open Source Tools

AMD-AI

AMD-AI is a repository containing detailed instructions for installing, setting up, and configuring ROCm on Ubuntu systems with AMD GPUs. The repository includes information on installing various tools like Stable Diffusion, ComfyUI, and Oobabooga for tasks like text generation and performance tuning. It provides guidance on adding AMD GPU package sources, installing ROCm-related packages, updating system packages, and finding graphics devices. The instructions are aimed at users with AMD hardware looking to set up their Linux systems for AI-related tasks.

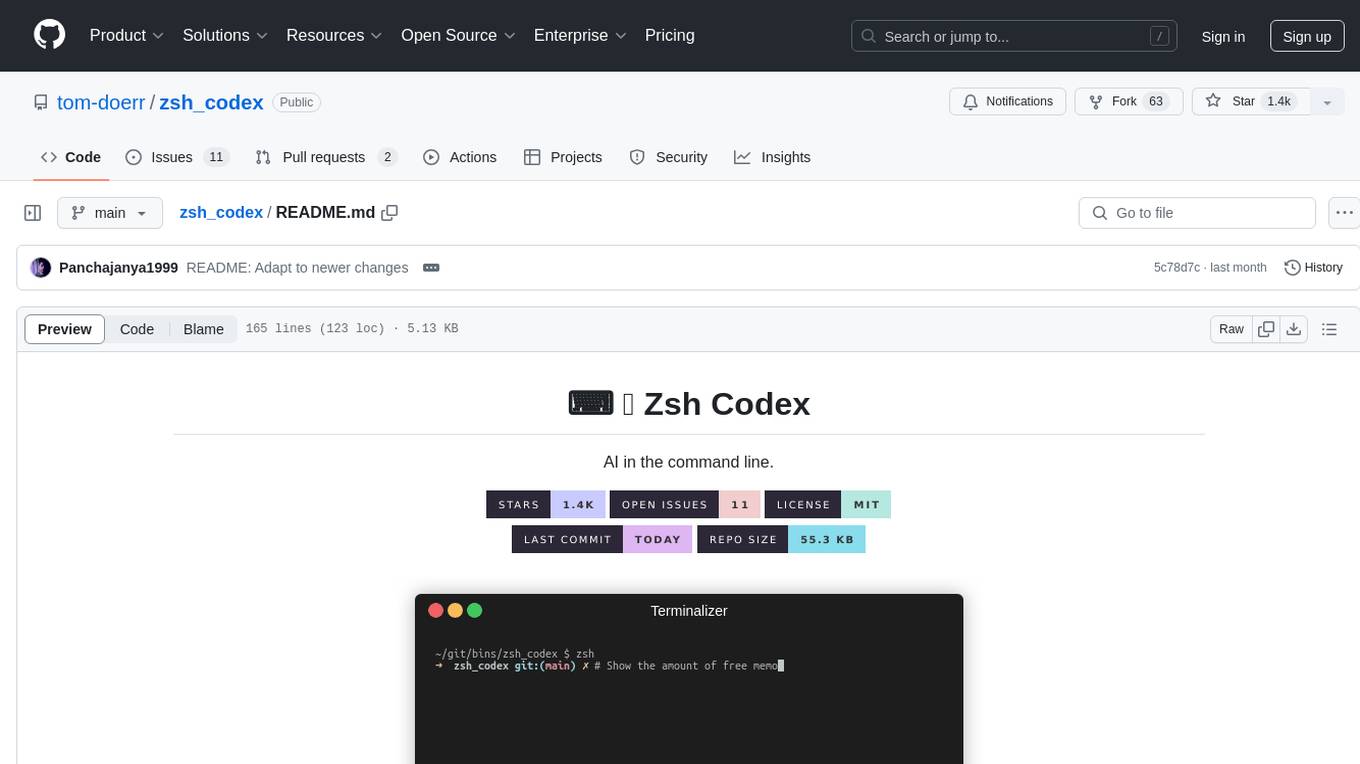

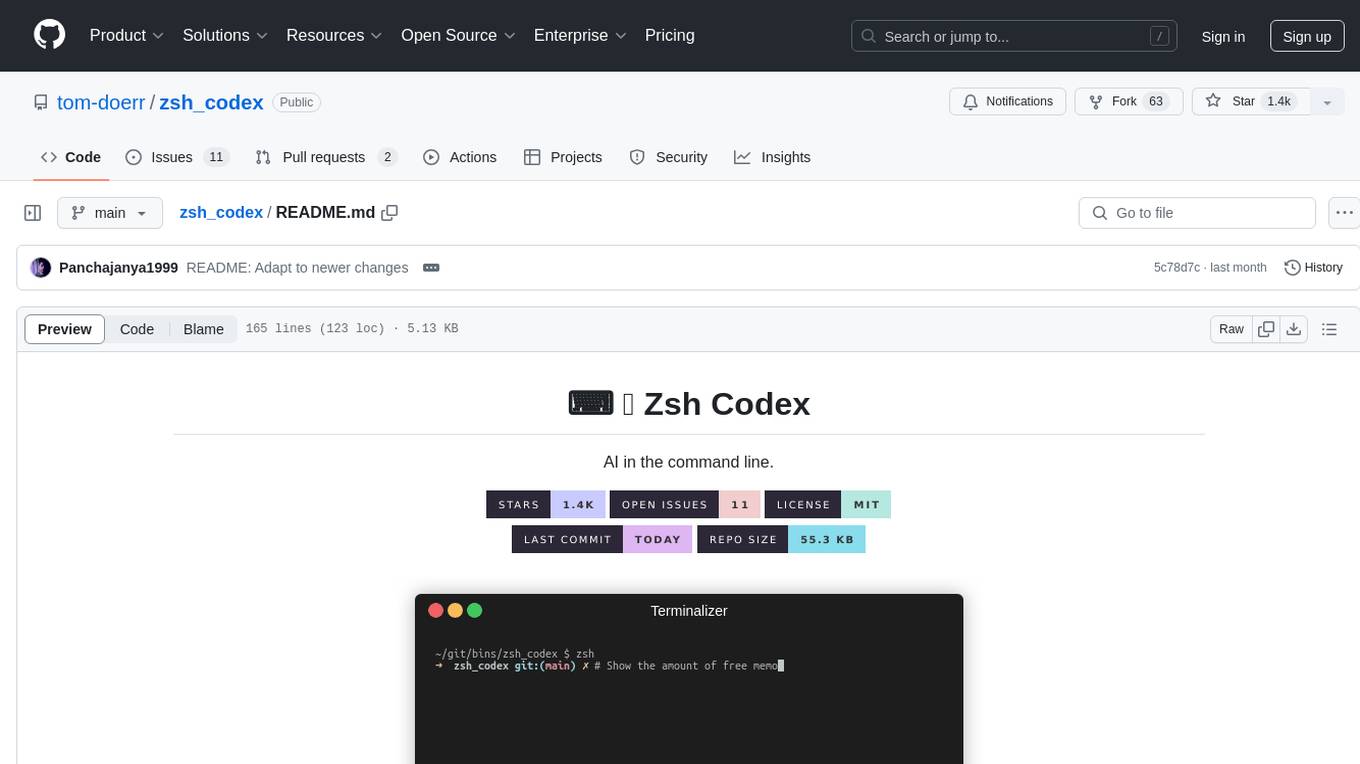

zsh_codex

Zsh Codex is a ZSH plugin that enables AI-powered code completion in the command line. It supports both OpenAI's Codex and Google's Generative AI (Gemini), providing advanced language model capabilities for coding tasks directly in the terminal. Users can easily install the plugin and configure it to enhance their coding experience with AI assistance.

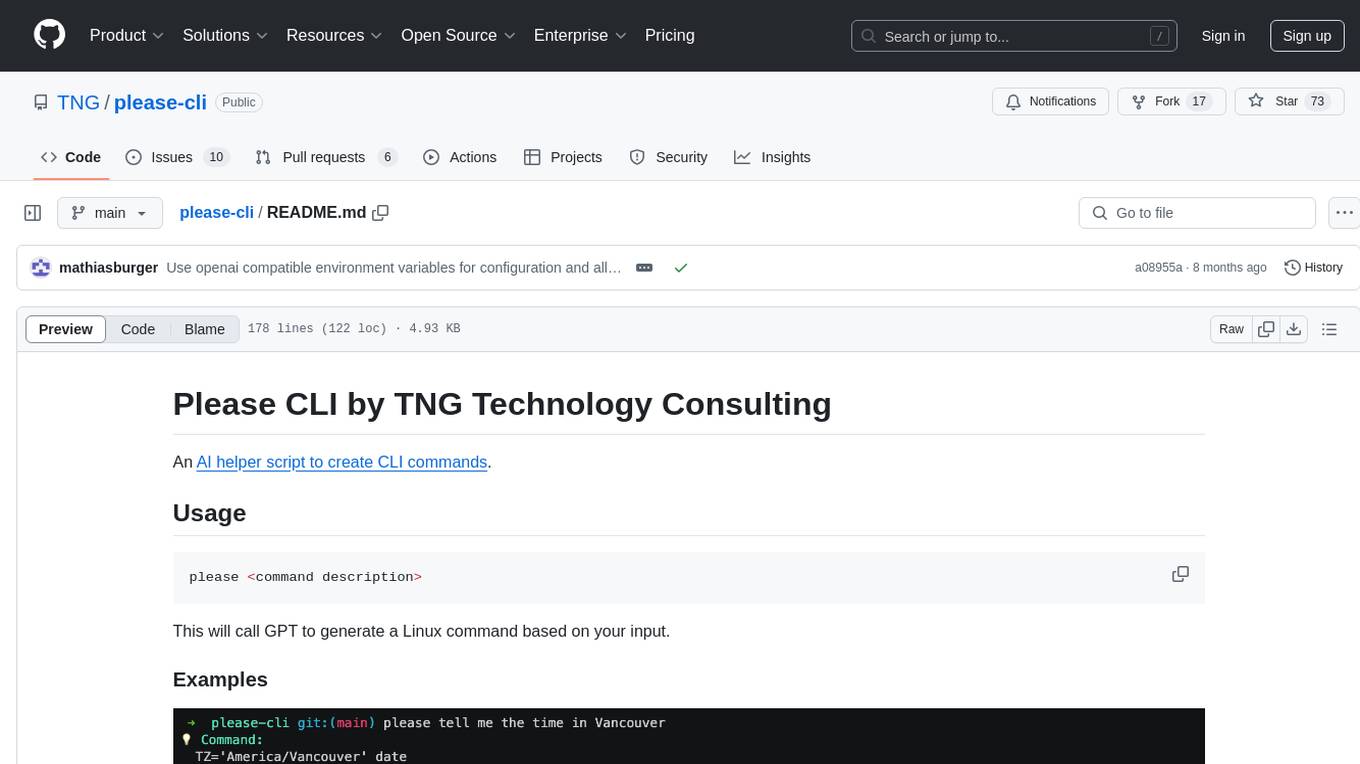

please-cli

Please CLI is an AI helper script designed to create CLI commands by leveraging the GPT model. Users can input a command description, and the script will generate a Linux command based on that input. The tool offers various functionalities such as invoking commands, copying commands to the clipboard, asking questions about commands, and more. It supports parameters for explanation, using different AI models, displaying additional output, storing API keys, querying ChatGPT with specific models, showing the current version, and providing help messages. Users can install Please CLI via Homebrew, apt, Nix, dpkg, AUR, or manually from source. The tool requires an OpenAI API key for operation and offers configuration options for setting API keys and OpenAI settings. Please CLI is licensed under the Apache License 2.0 by TNG Technology Consulting GmbH.

backend.ai-webui

Backend.AI Web UI is a user-friendly web and app interface designed to make AI accessible for end-users, DevOps, and SysAdmins. It provides features for session management, inference service management, pipeline management, storage management, node management, statistics, configurations, license checking, plugins, help & manuals, kernel management, user management, keypair management, manager settings, proxy mode support, service information, and integration with the Backend.AI Web Server. The tool supports various devices, offers a built-in websocket proxy feature, and allows for versatile usage across different platforms. Users can easily manage resources, run environment-supported apps, access a web-based terminal, use Visual Studio Code editor, manage experiments, set up autoscaling, manage pipelines, handle storage, monitor nodes, view statistics, configure settings, and more.

ai-commits-intellij-plugin

AI Commits is a plugin for IntelliJ-based IDEs and Android Studio that generates commit messages using git diff and OpenAI. It offers features such as generating commit messages from diff using OpenAI API, computing diff only from selected files and lines in the commit dialog, creating custom prompts for commit message generation, using predefined variables and hints to customize prompts, choosing any of the models available in OpenAI API, setting OpenAI network proxy, and setting custom OpenAI compatible API endpoint.

sandvault

SandVault is a tool that manages a limited user account to sandbox shell commands and AI agents on macOS, providing a lightweight alternative to application isolation using virtual machines. It allows for running Claude Code, OpenAI Codex, Google Gemini, and shell commands safely within a sandboxed environment. SandVault offers features like fast context switching, passwordless account switching, shared workspace access, and clean uninstallation. The tool operates with limited access to the user's computer, ensuring security by restricting access to certain directories and system files.

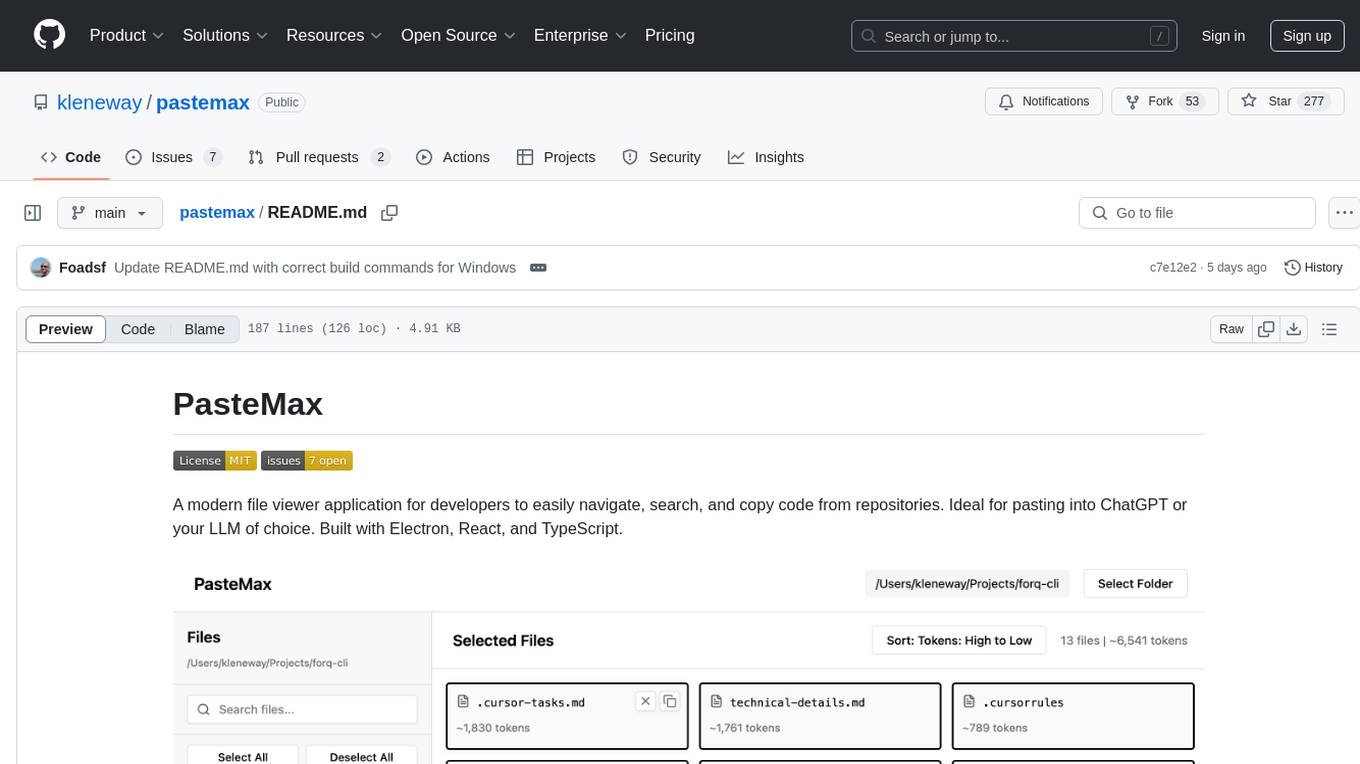

pastemax

PasteMax is a modern file viewer application designed for developers to easily navigate, search, and copy code from repositories. It provides features such as file tree navigation, token counting, search capabilities, selection management, sorting options, dark mode, binary file detection, and smart file exclusion. Built with Electron, React, and TypeScript, PasteMax is ideal for pasting code into ChatGPT or other language models. Users can download the application or build it from source, and customize file exclusions. Troubleshooting steps are provided for common issues, and contributions to the project are welcome under the MIT License.

commitgpt

Commit GPT is a CLI tool that automates the process of writing git commit messages using AI. It leverages OpenAI's GPT API to generate commit messages based on staged changes. Users can easily install and configure the tool to select AI providers, models, and commit message formats. The tool supports multiple providers and offers interactive setup for easy configuration. With Commit GPT, users can streamline the process of writing commit messages and improve productivity in software development workflows.

QA-Pilot

QA-Pilot is an interactive chat project that uses online or local LLMs for rapid understanding and navigation of GitHub code repositories. It allows users to chat with public GitHub repositories using a git clone approach, store chat history, configure settings easily, manage multiple chat sessions, and quickly locate sessions with a search function. The tool integrates with `codegraph` to view Python files and supports various LLM providers such as ollama, openai, mistralai, and localai. The project is continuously updated with new features and improvements, such as converting from `flask` to `fastapi`, adding `localai` API support, and upgrading dependencies like `langchain` and `Streamlit` to enhance performance.

pacha

Pacha is an AI tool designed for retrieving context for natural language queries using a SQL interface and Python programming environment. It is optimized for working with Hasura DDN for multi-source querying. Pacha is used in conjunction with language models to produce informed responses in AI applications, agents, and chatbots.

gcop

GCOP (Git Copilot) is an AI-powered Git assistant that automates commit message generation, enhances Git workflow, and offers 20+ smart commands. It provides intelligent commit crafting, customizable commit templates, smart learning capabilities, and a seamless developer experience. Users can generate AI commit messages, add all changes with AI-generated messages, undo commits while keeping changes staged, and push changes to the current branch. GCOP offers configuration options for AI models and provides detailed documentation, contribution guidelines, and a changelog. The tool is designed to make version control easier and more efficient for developers.

obsidian-systemsculpt-ai

SystemSculpt AI is a comprehensive AI-powered plugin for Obsidian, integrating advanced AI capabilities into note-taking, task management, knowledge organization, and content creation. It offers modules for brain integration, chat conversations, audio recording and transcription, note templates, and task generation and management. Users can customize settings, utilize AI services like OpenAI and Groq, and access documentation for detailed guidance. The plugin prioritizes data privacy by storing sensitive information locally and offering the option to use local AI models for enhanced privacy.

Flowise

Flowise is a tool that allows users to build customized LLM flows with a drag-and-drop UI. It is open-source and self-hostable, and it supports various deployments, including AWS, Azure, Digital Ocean, GCP, Railway, Render, HuggingFace Spaces, Elestio, Sealos, and RepoCloud. Flowise has three different modules in a single mono repository: server, ui, and components. The server module is a Node backend that serves API logics, the ui module is a React frontend, and the components module contains third-party node integrations. Flowise supports different environment variables to configure your instance, and you can specify these variables in the .env file inside the packages/server folder.

alcless

Alcoholless is a lightweight security sandbox for macOS programs, originally designed for securing Homebrew but usable with any CLI program. It allows AI agents to run shell commands with reduced risk of breaking the host OS. The tool creates a separate environment for executing commands, syncing changes back to the host directory upon command exit. It uses utilities like sudo, su, pam_launchd, and rsync, with potential future integration of FSKit for file syncing. The tool also generates a sudo configuration for user-specific sandbox access, enabling users to run commands as the sandbox user without a password.

For similar tasks

zsh_codex

Zsh Codex is a ZSH plugin that enables AI-powered code completion in the command line. It supports both OpenAI's Codex and Google's Generative AI (Gemini), providing advanced language model capabilities for coding tasks directly in the terminal. Users can easily install the plugin and configure it to enhance their coding experience with AI assistance.

wtf.nvim

wtf.nvim is a Neovim plugin that enhances diagnostic debugging by providing explanations and solutions for code issues using ChatGPT. It allows users to search the web for answers directly from Neovim, making the debugging process faster and more efficient. The plugin works with any language that has LSP support in Neovim, offering AI-powered diagnostic assistance and seamless integration with various resources for resolving coding problems.

pearai-submodule

PearAI Submodule / Extension is the source code for the bulk of PearAI's functionality, bundled as a VSCode / PearAI extension. It allows users to easily understand code sections, refactor functions, and ask questions by mentioning a file. The tool aims to enhance coding experience and productivity within the VSCode environment.

p1

p1 is a code completion engine based on Large Language Models (LLM) that operates at the edge. It provides intelligent code suggestions and completions to enhance the coding experience. The tool is designed to assist developers in writing code more efficiently by predicting and offering context-aware completions based on the code being written. With implementations available for popular code editors like Vim and Visual Studio Code, p1 aims to improve productivity and streamline the coding process for software developers.

agentic-context-engine

Agentic Context Engine (ACE) is a framework that enables AI agents to learn from their execution feedback, continuously improving without fine-tuning or training data. It maintains a Skillbook of evolving strategies, extracting patterns from successful tasks and learning from failures transparently in context. ACE offers self-improving agents, better performance on complex tasks, token reduction in browser automation, and preservation of valuable knowledge over time. Users can integrate ACE with popular agent frameworks and benefit from its innovative approach to in-context learning.

GPThemes

GPThemes is a GitHub repository that provides a collection of customizable themes for various programming languages and text editors. It offers a wide range of color schemes and styling options to enhance the visual appearance of code editors and terminals. Users can easily browse through the available themes and apply them to their preferred development environment to personalize the coding experience. With GPThemes, developers can quickly switch between different themes to find the one that best suits their preferences and workflow, making coding more enjoyable and visually appealing.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.

oss-fuzz-gen

This framework generates fuzz targets for real-world `C`/`C++` projects with various Large Language Models (LLM) and benchmarks them via the `OSS-Fuzz` platform. It manages to successfully leverage LLMs to generate valid fuzz targets (which generate non-zero coverage increase) for 160 C/C++ projects. The maximum line coverage increase is 29% from the existing human-written targets.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, from images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

Azure-Analytics-and-AI-Engagement

The Azure-Analytics-and-AI-Engagement repository provides packaged Industry Scenario DREAM Demos with ARM templates (containing a demo web application, Power BI reports, Synapse resources, AML Notebooks, etc.) that can be deployed in a customer’s subscription using the CAPE tool within a few hours. Partners can also deploy DREAM Demos in their own subscriptions using DPoC.