YuE

YuE: Open Full-song Music Generation Foundation Model, something similar to Suno.ai but open

Stars: 1136

YuE (乐) is an open-source foundation model for music generation that transforms lyrics into full songs. It can generate complete songs in various genres and vocal styles. Generating long sequences requires significant GPU memory, and the README recommends specific configurations for different GPU sizes. Users can adjust the number of sessions to fit the available memory. The README includes a quickstart guide for generating music with Transformers, along with notes on execution time and tag selection. The project is licensed under Creative Commons Attribution Non Commercial 4.0.

README:

Demo 🎶 | 📑 Paper (coming soon)

YuE-s1-7B-anneal-en-cot 🤗 | YuE-s1-7B-anneal-en-icl 🤗 | YuE-s1-7B-anneal-jp-kr-cot 🤗

YuE-s1-7B-anneal-jp-kr-icl 🤗 | YuE-s1-7B-anneal-zh-cot 🤗 | YuE-s1-7B-anneal-zh-icl 🤗

YuE-s2-1B-general 🤗 | YuE-upsampler 🤗

Our model's name is YuE (乐). In Chinese, the word means "music" and "happiness." Some of you may find words that start with Yu hard to pronounce. If so, you can just call it "yeah." We wrote a song with our model's name, see here.

YuE is a groundbreaking series of open-source foundation models designed for music generation, specifically for transforming lyrics into full songs (lyrics2song). It can generate a complete song, lasting several minutes, that includes both a catchy vocal track and accompaniment track. YuE is capable of modeling diverse genres/languages/vocal techniques. Please visit the Demo Page for amazing vocal performance.

- 2025.01.29 🎉: We have updated the license description. We ENCOURAGE artists and content creators to sample and incorporate outputs generated by our model into their own works, and even monetize them. The only requirement is to credit our name: YuE by HKUST/M-A-P (alphabetic order).

- 2025.01.28 🫶: Thanks to Fahd for creating a tutorial on how to quickly get started with YuE. Here is his demonstration.

- 2025.01.26 🔥: We have released the YuE series.

- [ ] Support dual-track ICL mode.

- [ ] Support gradio interface. https://github.com/multimodal-art-projection/YuE/issues/1

- [ ] Support transformers tensor parallel. https://github.com/multimodal-art-projection/YuE/issues/7

- [ ] Online serving on huggingface space.

- [ ] Example finetune code for enabling BPM control using 🤗 Transformers.

- [ ] Support stemgen mode https://github.com/multimodal-art-projection/YuE/issues/21

- [ ] Support seeding https://github.com/multimodal-art-projection/YuE/issues/20

YuE requires significant GPU memory for generating long sequences. Below are the recommended configurations:

- For GPUs with 24GB memory or less: Run up to 2 sessions concurrently to avoid out-of-memory (OOM) errors.

- For full song generation (many sessions, e.g., 4 or more): Use GPUs with at least 80GB of memory, e.g. H800, A100, or multiple RTX 4090s with tensor parallel.

To customize the number of sessions, the interface allows you to specify the desired session count. By default, the model runs 2 sessions (1 verse + 1 chorus) to avoid OOM issues.

On an H800 GPU, generating 30s audio takes 150 seconds. On an RTX 4090 GPU, generating 30s audio takes approximately 360 seconds.
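As a rough planning aid, the two timings above can be turned into a per-second cost and scaled to a number of sessions. The sketch below assumes that cost grows linearly with audio length and that each session is about 30 s, as the lyrics tips in this README state. The function name and the linear model are illustrative assumptions, not measured behavior.

```python
# Seconds of compute per second of generated audio, derived from the figures above:
# H800: 150 s / 30 s = 5.0, RTX 4090: 360 s / 30 s = 12.0.
SECONDS_PER_AUDIO_SECOND = {
    "H800": 150 / 30,
    "RTX 4090": 360 / 30,
}

SEGMENT_SECONDS = 30  # each session is described as roughly 30 s of audio


def estimate_generation_seconds(n_segments: int, gpu: str) -> float:
    """Rough wall-clock estimate, ASSUMING cost scales linearly with audio length."""
    if n_segments < 1:
        raise ValueError("n_segments must be at least 1")
    return n_segments * SEGMENT_SECONDS * SECONDS_PER_AUDIO_SECOND[gpu]


if __name__ == "__main__":
    for gpu in SECONDS_PER_AUDIO_SECOND:
        minutes = estimate_generation_seconds(4, gpu) / 60
        print(f"{gpu}: about {minutes:.0f} min for 4 sessions")
```

Under that linear assumption, four sessions come to roughly 10 minutes on an H800 and 24 minutes on an RTX 4090. Real runs may deviate, especially for longer sequences.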

Quick start VIDEO TUTORIAL by Fahd: Link here. We recommend watching this video if you are not familiar with machine learning or the command line.

Make sure to properly install FlashAttention 2 to reduce VRAM usage.

# We recommend using conda to create a new environment.

conda create -n yue python=3.8 # Python >=3.8 is recommended.

conda activate yue

# install cuda >= 11.8

conda install pytorch torchvision torchaudio cudatoolkit=11.8 -c pytorch -c nvidia

pip install -r requirements.txt

# For saving GPU memory, FlashAttention 2 is mandatory.

# Without it, long audio may lead to out-of-memory (OOM) errors.

# Be careful about matching the cuda version and flash-attn version

pip install flash-attn --no-build-isolation

# Make sure you have git-lfs installed (https://git-lfs.com)

git lfs install

git clone https://github.com/multimodal-art-projection/YuE.git

cd YuE/inference/

git clone https://huggingface.co/m-a-p/xcodec_mini_infer

Now generate music with YuE using 🤗 Transformers. Make sure steps 1 and 2 above (environment setup and code download) are properly set up.
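One quick way to confirm the setup before running inference is a small presence check. This is a minimal sketch using only the Python standard library: it checks that the `flash_attn` and `torch` modules can be found and that a `git-lfs` executable is on the PATH. It does not verify that the CUDA and flash-attn versions match, and the function name is hypothetical.

```python
import importlib.util
import shutil
import sys


def check_environment() -> dict:
    """Return a mapping of requirement name -> whether it appears to be present."""
    return {
        # The setup comments above recommend Python >= 3.8.
        "python>=3.8": sys.version_info >= (3, 8),
        # The flash-attn package is imported as `flash_attn`;
        # find_spec looks it up without importing it.
        "flash_attn": importlib.util.find_spec("flash_attn") is not None,
        "torch": importlib.util.find_spec("torch") is not None,
        # `git lfs install` needs the git-lfs executable on PATH.
        "git-lfs": shutil.which("git-lfs") is not None,
    }


if __name__ == "__main__":
    for name, ok in check_environment().items():
        print(f"{'OK     ' if ok else 'MISSING'}  {name}")
```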

Note:

- Set --run_n_segments to the number of lyric sections if you want to generate a full song. Additionally, you can increase --stage2_batch_size based on your available GPU memory.

- You may customize the prompt in genre.txt and lyrics.txt. See prompt engineering guide here.

- LM ckpts will be automatically downloaded from huggingface.

# This is the CoT mode.
cd YuE/inference/
python infer.py \
    --stage1_model m-a-p/YuE-s1-7B-anneal-en-cot \
    --stage2_model m-a-p/YuE-s2-1B-general \
    --genre_txt genre.txt \
    --lyrics_txt lyrics.txt \
    --run_n_segments 2 \
    --stage2_batch_size 4 \
    --output_dir ./output \
    --cuda_idx 0 \
    --max_new_tokens 3000

If you want to use music in-context-learning (provide a reference song), enable --use_audio_prompt, --prompt_start_time, and --prompt_end_time to specify the audio segment.

Note:

- ICL requires a different ckpt, e.g. m-a-p/YuE-s1-7B-anneal-en-icl.

- Music ICL generally requires a 30s audio segment. The model will write new songs in a style similar to the provided audio, which may improve musicality.

- We have 4 modes for ICL: mix, vocal, instrumental, and dual-track.

- We currently only support mix mode.

- Dual-track mode works the best; we will support it in the infer code soon.
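The notes above can be sketched as a small helper that assembles the infer.py argument list for either mode and rejects an audio prompt paired with a non-ICL checkpoint. This is an illustrative sketch, not part of the YuE code: the function name is hypothetical, the "-icl" suffix check is a heuristic based on the checkpoint names listed in this README, `--audio_prompt_path` is taken from the ICL command in this README, and `--use_audio_prompt` is assumed to be a boolean switch that takes no value.

```python
import shlex
from typing import List, Optional


def build_infer_args(
    stage1_model: str,
    run_n_segments: int = 2,
    stage2_batch_size: int = 4,
    audio_prompt_path: Optional[str] = None,
    prompt_start_time: float = 0,
    prompt_end_time: float = 30,
) -> List[str]:
    """Build the argv list for infer.py; ICL flags are added only with an audio prompt."""
    args = [
        "python", "infer.py",
        "--stage1_model", stage1_model,
        "--stage2_model", "m-a-p/YuE-s2-1B-general",
        "--genre_txt", "genre.txt",
        "--lyrics_txt", "lyrics.txt",
        "--run_n_segments", str(run_n_segments),
        "--stage2_batch_size", str(stage2_batch_size),
        "--output_dir", "./output",
        "--cuda_idx", "0",
        "--max_new_tokens", "3000",
    ]
    if audio_prompt_path is not None:
        # Heuristic (assumption): ICL checkpoints listed in this README end in "-icl".
        if not stage1_model.endswith("-icl"):
            raise ValueError("an audio prompt requires an ICL checkpoint")
        if prompt_end_time <= prompt_start_time:
            raise ValueError("prompt_end_time must be after prompt_start_time")
        args += [
            "--use_audio_prompt",  # assumed to be a value-less switch
            "--audio_prompt_path", audio_prompt_path,
            "--prompt_start_time", str(prompt_start_time),
            "--prompt_end_time", str(prompt_end_time),
        ]
    return args


if __name__ == "__main__":
    # "reference.mp3" is a placeholder file name.
    argv = build_infer_args("m-a-p/YuE-s1-7B-anneal-en-icl",
                            audio_prompt_path="reference.mp3")
    print(shlex.join(argv))
```

The printed command is meant to be run from inside YuE/inference/, for example by passing the list to `subprocess.run(argv, check=True)`.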

# This is the ICL mode. Currently only mix-ICL is supported.
cd YuE/inference/
python infer.py \
    --stage1_model m-a-p/YuE-s1-7B-anneal-en-icl \
    --stage2_model m-a-p/YuE-s2-1B-general \
    --genre_txt genre.txt \
    --lyrics_txt lyrics.txt \
    --run_n_segments 2 \
    --stage2_batch_size 4 \
    --output_dir ./output \
    --cuda_idx 0 \
    --max_new_tokens 3000 \
    --use_audio_prompt \
    --audio_prompt_path {YOUR_AUDIO_FILE} \
    --prompt_start_time 0 \
    --prompt_end_time 30

The prompt consists of three parts: genre tags, lyrics, and ref audio.

Genre tagging prompt:

- An example genre tagging prompt can be found here.

- A stable tagging prompt usually consists of five components: genre, instrument, mood, gender, and timbre. All five should be included if possible, separated by spaces.

- Although our tags have an open vocabulary, we have provided the top 200 most commonly used tags. It is recommended to select tags from this list for more stable results.

- The order of the tags is flexible. For example, a stable genre tagging prompt might look like: "inspiring female uplifting pop airy vocal electronic bright vocal vocal."

- Additionally, we have introduced the "Mandarin" and "Cantonese" tags to distinguish between Mandarin and Cantonese, as their lyrics often share similarities.

Lyrics prompt:

- An example lyric prompt can be found here.

- We support multiple languages, including but not limited to English, Mandarin Chinese, Cantonese, Japanese, and Korean. The default top language distribution during the annealing phase is revealed in issue 12. A language ID on a specific annealing checkpoint indicates that we have adjusted the mixing ratio to enhance support for that language.

- The lyrics prompt should be divided into sessions, with structure labels (e.g., [verse], [chorus], [bridge], [outro]) prepended. Sessions should be separated by two newline characters ("\n\n").

- DO NOT put too many words in a single segment, since each session is around 30s (--max_new_tokens 3000 by default).

- We find that the [intro] label is less stable, so we recommend starting with [verse] or [chorus].

- For generating music with no vocal, see issue 18.

Audio prompt:

- Audio prompt is optional. Providing ref audio for ICL usually increases the good case rate but results in less diversity, since the generated token space is bounded by the ref audio. CoT only (no ref) will result in a more diverse output.

- We find that dual-track ICL mode gives the best musicality and prompt following. We will support this mode soon.

- Using the chorus part of the music as the prompt will result in better musicality.
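The prompt format described above can be sketched as a few small helpers: one joins the five tag components with spaces, one splits a lyrics prompt into sessions on blank lines and checks each for a leading structure label, and one checks whether the song starts with [verse] or [chorus]. The helper names and the placeholder lyrics are made up for illustration; they are not part of the YuE code.

```python
import re
from typing import List

# A structure label such as [verse] or [chorus] at the start of a session.
SECTION_LABEL = re.compile(r"^\[([^\]]+)\]")


def make_genre_prompt(genre: str, instrument: str, mood: str,
                      gender: str, timbre: str) -> str:
    """Join the recommended tag components with single spaces, skipping empty ones."""
    parts = [genre, instrument, mood, gender, timbre]
    return " ".join(p.strip() for p in parts if p.strip())


def split_sessions(lyrics: str) -> List[str]:
    """Split a lyrics prompt on blank lines and require a label on every session."""
    sessions = [s.strip() for s in re.split(r"\n\s*\n", lyrics.strip()) if s.strip()]
    for session in sessions:
        if not SECTION_LABEL.match(session):
            raise ValueError(f"session lacks a structure label: {session[:30]!r}")
    return sessions


def starts_with_stable_label(sessions: List[str]) -> bool:
    """True if the first session is labeled [verse] or [chorus], as recommended."""
    label = SECTION_LABEL.match(sessions[0]).group(1).strip().lower()
    return label in {"verse", "chorus"}


if __name__ == "__main__":
    print(make_genre_prompt("pop", "electronic", "uplifting", "female", "airy vocal"))
    # Placeholder lyrics, for illustration only.
    lyrics = "[verse]\nFirst line\nSecond line\n\n[chorus]\nHook line\n"
    sessions = split_sessions(lyrics)
    # For a full song, the session count is the value to pass as --run_n_segments.
    print(len(sessions), starts_with_stable_label(sessions))
```

Writing the joined tag string to genre.txt and the lyrics text to lyrics.txt gives the two prompt files that the infer.py commands in this README read.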

- Our models are licensed under Creative Commons Attribution Non Commercial 4.0, meaning the model weights themselves CANNOT be used for commercial purposes.

- However, we ENCOURAGE artists and content creators to sample and incorporate outputs generated by our model into their own works, and even monetize them. The only requirement is to credit our name: YuE by HKUST/M-A-P (alphabetic order).

- We DO NOT assume any responsibility for any misuse of this model, including but not limited to illegal, malicious, or unethical activities.

- Users are solely responsible for any content generated with the model and any consequences arising from its use.

The project is co-led by HKUST and M-A-P (alphabetic order). We also thank moonshot.ai, bytedance, 01.ai, and geely for supporting the project. A friendly link to HKUST Audio group's huggingface space.

We deeply appreciate all the support we received along the way. Long live open-source AI!

If you find our paper and code useful in your research, please consider giving a star ⭐ and citation 📝 :)

@misc{yuan2025yue,

title={YuE: Open Music Foundation Models for Full-Song Generation},

author={Ruibin Yuan and Hanfeng Lin and Shawn Guo and Ge Zhang and Jiahao Pan and Yongyi Zang and Haohe Liu and Xingjian Du and Xeron Du and Zhen Ye and Tianyu Zheng and Yinghao Ma and Minghao Liu and Lijun Yu and Zeyue Tian and Ziya Zhou and Liumeng Xue and Xingwei Qu and Yizhi Li and Tianhao Shen and Ziyang Ma and Shangda Wu and Jun Zhan and Chunhui Wang and Yatian Wang and Xiaohuan Zhou and Xiaowei Chi and Xinyue Zhang and Zhenzhu Yang and Yiming Liang and Xiangzhou Wang and Shansong Liu and Lingrui Mei and Peng Li and Yong Chen and Chenghua Lin and Xie Chen and Gus Xia and Zhaoxiang Zhang and Chao Zhang and Wenhu Chen and Xinyu Zhou and Xipeng Qiu and Roger Dannenberg and Jiaheng Liu and Jian Yang and Stephen Huang and Wei Xue and Xu Tan and Yike Guo},

howpublished={\url{https://github.com/multimodal-art-projection/YuE}},

year={2025},

note={GitHub repository}

}

Alternative AI tools for YuE

Similar Open Source Tools

MInference

MInference is a tool designed to accelerate pre-filling for long-context Language Models (LLMs) by leveraging dynamic sparse attention. It achieves up to a 10x speedup for pre-filling on an A100 while maintaining accuracy. The tool supports various decoding LLMs, including LLaMA-style models and Phi models, and provides custom kernels for attention computation. MInference is useful for researchers and developers working with large-scale language models who aim to improve efficiency without compromising accuracy.

qa-mdt

This repository provides an implementation of QA-MDT, integrating state-of-the-art models for music generation. It offers a Quality-Aware Masked Diffusion Transformer for enhanced music generation. The code is based on various repositories like AudioLDM, PixArt-alpha, MDT, AudioMAE, and Open-Sora. The implementation allows for training and fine-tuning the model with different strategies and datasets. The repository also includes instructions for preparing datasets in LMDB format and provides a script for creating a toy LMDB dataset. The model can be used for music generation tasks, with a focus on quality injection to enhance the musicality of generated music.

OpenMusic

OpenMusic is a repository providing an implementation of QA-MDT, a Quality-Aware Masked Diffusion Transformer for music generation. The code integrates state-of-the-art models and offers training strategies for music generation. The repository includes implementations of AudioLDM, PixArt-alpha, MDT, AudioMAE, and Open-Sora. Users can train or fine-tune the model using different strategies and datasets. The model is well-pretrained and can be used for music generation tasks. The repository also includes instructions for preparing datasets, training the model, and performing inference. Contact information is provided for any questions or suggestions regarding the project.

Easy-Translate

Easy-Translate is a script designed for translating large text files with a single command. It supports various models like M2M100, NLLB200, SeamlessM4T, LLaMA, and Bloom. The tool is beginner-friendly and offers seamless and customizable features for advanced users. It allows acceleration on CPU, multi-CPU, GPU, multi-GPU, and TPU, with support for different precisions and decoding strategies. Easy-Translate also provides an evaluation script for translations. Built on HuggingFace's Transformers and Accelerate library, it supports prompt usage and loading huge models efficiently.

MARS5-TTS

MARS5 is a novel English speech model (TTS) developed by CAMB.AI, featuring a two-stage AR-NAR pipeline with a unique NAR component. The model can generate speech for various scenarios like sports commentary and anime with just 5 seconds of audio and a text snippet. It allows steering prosody using punctuation and capitalization in the transcript. Speaker identity is specified using an audio reference file, enabling 'deep clone' for improved quality. The model can be used via torch.hub or HuggingFace, supporting both shallow and deep cloning for inference. Checkpoints are provided for AR and NAR models, with hardware requirements of 750M+450M params on GPU. Contributions to improve model stability, performance, and reference audio selection are welcome.

CogVideo

CogVideo is an open-source repository that provides pretrained text-to-video models for generating videos based on input text. It includes models like CogVideoX-2B and CogVideo, offering powerful video generation capabilities. The repository offers tools for inference, fine-tuning, and model conversion, along with demos showcasing the model's capabilities through CLI, web UI, and online experiences. CogVideo aims to facilitate the creation of high-quality videos from textual descriptions, catering to a wide range of applications.

Video-MME

Video-MME is the first-ever comprehensive evaluation benchmark of Multi-modal Large Language Models (MLLMs) in Video Analysis. It assesses the capabilities of MLLMs in processing video data, covering a wide range of visual domains, temporal durations, and data modalities. The dataset comprises 900 videos with 256 hours and 2,700 human-annotated question-answer pairs. It distinguishes itself through features like duration variety, diversity in video types, breadth in data modalities, and quality in annotations.

viitor-voice

ViiTor-Voice is an LLM based TTS Engine that offers a lightweight design with 0.5B parameters for efficient deployment on various platforms. It provides real-time streaming output with low latency experience, a rich voice library with over 300 voice options, flexible speech rate adjustment, and zero-shot voice cloning capabilities. The tool supports both Chinese and English languages and is suitable for applications requiring quick response and natural speech fluency.

petals

Petals is a tool that allows users to run large language models at home in a BitTorrent-style manner. It enables fine-tuning and inference up to 10x faster than offloading. Users can generate text with distributed models like Llama 2, Falcon, and BLOOM, and fine-tune them for specific tasks directly from their desktop computer or Google Colab. Petals is a community-run system that relies on people sharing their GPUs to increase its capacity and offer a distributed network for hosting model layers.

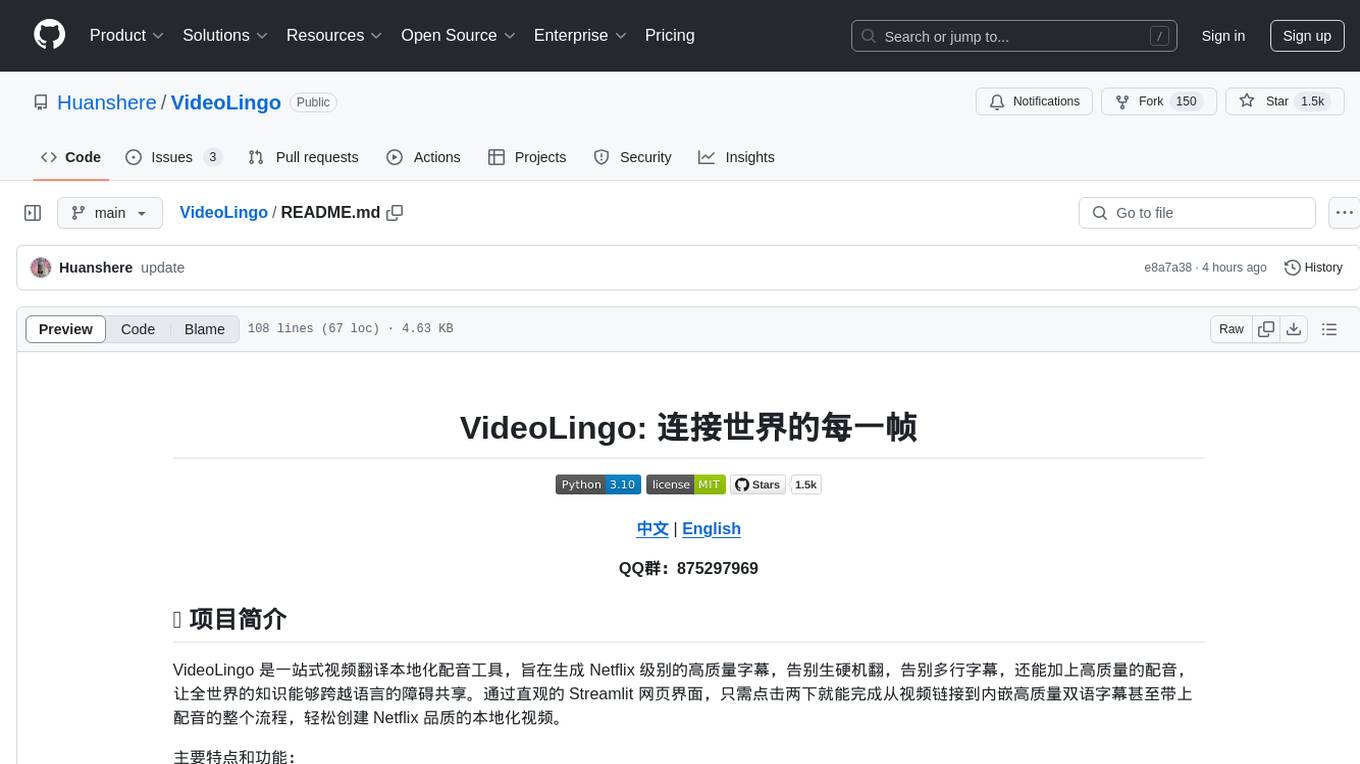

VideoLingo

VideoLingo is an all-in-one video translation and localization dubbing tool designed to generate Netflix-level high-quality subtitles. It aims to eliminate stiff machine translation, multiple lines of subtitles, and can even add high-quality dubbing, allowing knowledge from around the world to be shared across language barriers. Through an intuitive Streamlit web interface, the entire process from video link to embedded high-quality bilingual subtitles and even dubbing can be completed with just two clicks, easily creating Netflix-quality localized videos. Key features and functions include using yt-dlp to download videos from Youtube links, using WhisperX for word-level timeline subtitle recognition, using NLP and GPT for subtitle segmentation based on sentence meaning, summarizing intelligent term knowledge base with GPT for context-aware translation, three-step direct translation, reflection, and free translation to eliminate strange machine translation, checking single-line subtitle length and translation quality according to Netflix standards, using GPT-SoVITS for high-quality aligned dubbing, and integrating package for one-click startup and one-click output in streamlit.

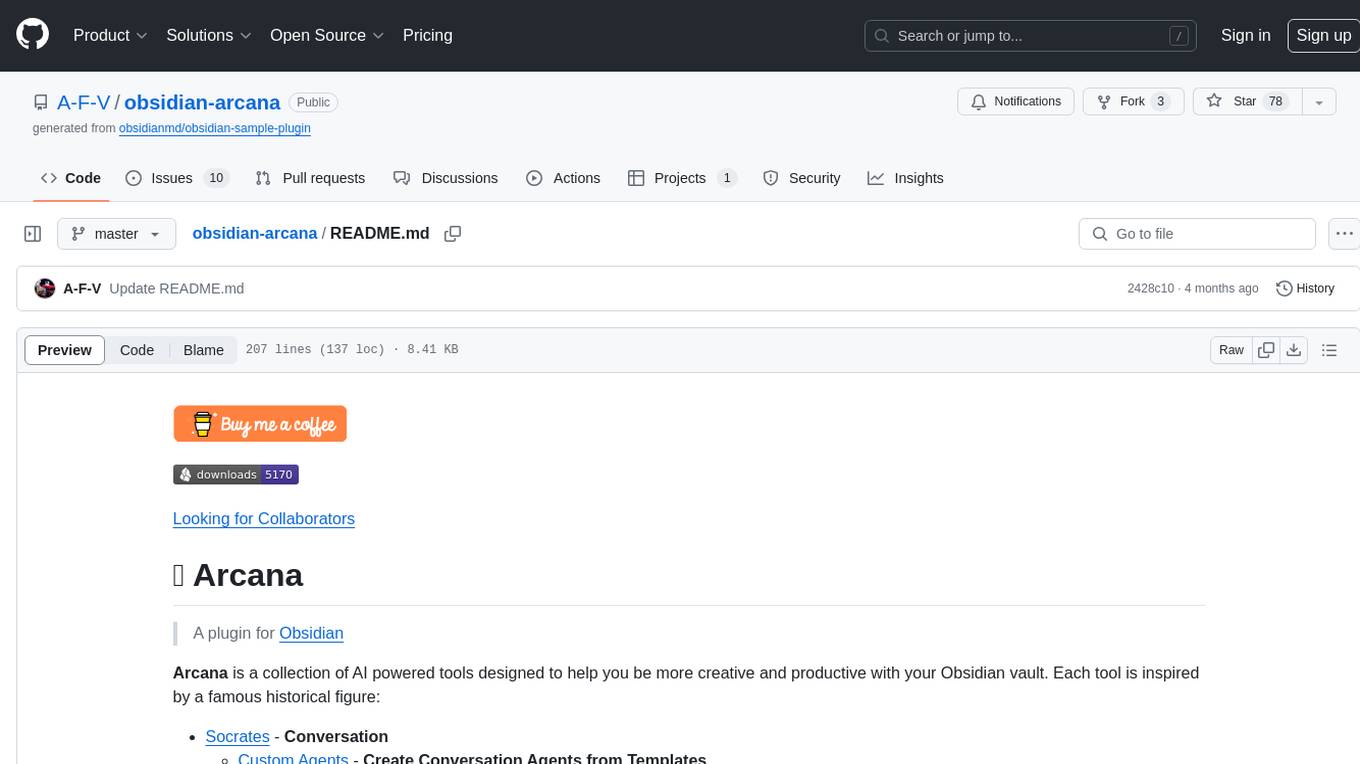

obsidian-arcana

Arcana is a plugin for Obsidian that offers a collection of AI-powered tools inspired by famous historical figures to enhance creativity and productivity. It includes tools for conversation, text-to-speech transcription, speech-to-text replies, metadata markup, text generation, file moving, flashcard generation, auto tagging, and note naming. Users can interact with these tools using the command palette and sidebar views, with an OpenAI API key required for usage. The plugin aims to assist users in various note-taking and knowledge management tasks within the Obsidian vault environment.

RLHF-Reward-Modeling

This repository contains code for training reward models for deep-reinforcement-learning-based RLHF (Reinforcement Learning from Human Feedback), iterative SFT (rejection sampling fine-tuning), and iterative DPO (Direct Preference Optimization). The reward models are trained using a Bradley-Terry model based on the Gemma and Mistral language models. The resulting reward models achieve state-of-the-art performance on the RewardBench leaderboard for reward models with base models of up to 13B parameters.

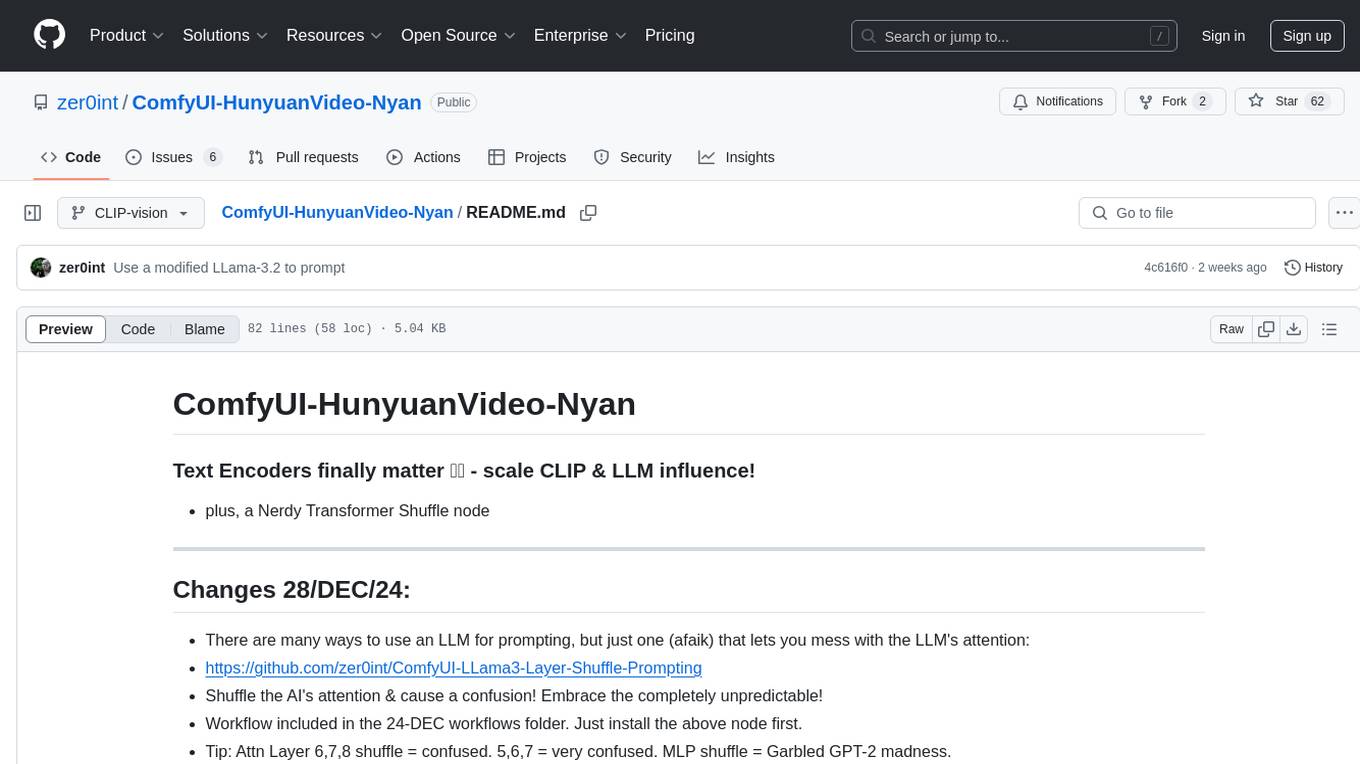

ComfyUI-HunyuanVideo-Nyan

ComfyUI-HunyuanVideo-Nyan is a repository that provides tools for manipulating the attention of LLM models, allowing users to shuffle the AI's attention and cause confusion. The repository includes a Nerdy Transformer Shuffle node that enables users to mess with the LLM's attention layers, providing a workflow for installation and usage. It also offers a new SAE-informed Long-CLIP model with high accuracy, along with recommendations for CLIP models. Users can find detailed instructions on how to use the provided nodes to scale CLIP & LLM factors and create high-quality nature videos. The repository emphasizes compatibility with other related tools and provides insights into the functionality of the included nodes.

hal-9100

This repository is now archived and the code is privately maintained. If you are interested in this infrastructure, please contact the maintainer directly.

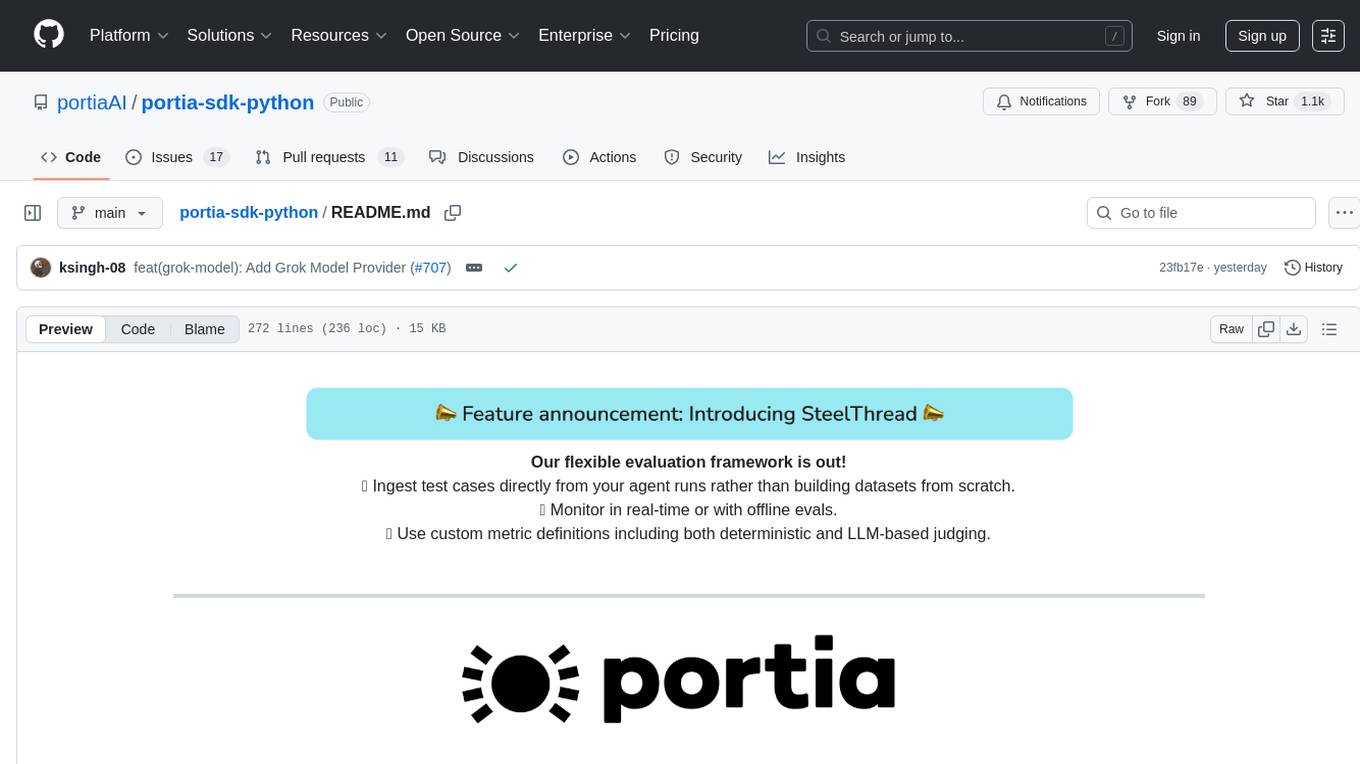

portia-sdk-python

Portia AI is an open source developer framework for predictable, stateful, authenticated agentic workflows. It allows developers to have oversight over their multi-agent deployments and focuses on production readiness. The framework supports iterating on agents' reasoning, extensive tool support including MCP support, authentication for API and web agents, and is production-ready with features like attribute multi-agent runs, large inputs and outputs storage, and connecting any LLM. Portia AI aims to provide a flexible and reliable platform for developing AI agents with tools, authentication, and smart control.

For similar tasks

lollms-webui

LoLLMs WebUI (Lord of Large Language Multimodal Systems: One tool to rule them all) is a user-friendly interface to access and utilize various LLM (Large Language Models) and other AI models for a wide range of tasks. With over 500 AI expert conditionings across diverse domains and more than 2500 fine tuned models over multiple domains, LoLLMs WebUI provides an immediate resource for any problem, from car repair to coding assistance, legal matters, medical diagnosis, entertainment, and more. The easy-to-use UI with light and dark mode options, integration with GitHub repository, support for different personalities, and features like thumb up/down rating, copy, edit, and remove messages, local database storage, search, export, and delete multiple discussions, make LoLLMs WebUI a powerful and versatile tool.

suno-api

Suno AI API is an open-source project that allows developers to integrate the music generation capabilities of Suno.ai into their own applications. The API provides a simple and convenient way to generate music, lyrics, and other audio content using Suno.ai's powerful AI models. With Suno AI API, developers can easily add music generation functionality to their apps, websites, and other projects.

openvino-plugins-ai-audacity

OpenVINO™ AI Plugins for Audacity are a set of AI-enabled effects, generators, and analyzers for Audacity®. These AI features run 100% locally on your PC -- no internet connection necessary! OpenVINO™ is used to run AI models on supported accelerators found on the user's system such as CPU, GPU, and NPU.

- **Music Separation**: Separate a mono or stereo track into individual stems -- Drums, Bass, Vocals, & Other Instruments.

- **Noise Suppression**: Removes background noise from an audio sample.

- **Music Generation & Continuation**: Uses MusicGen LLM to generate snippets of music, or to generate a continuation of an existing snippet of music.

- **Whisper Transcription**: Uses whisper.cpp to generate a label track containing the transcription or translation for a given selection of spoken audio or vocals.

SunoApi

SunoAPI is an unofficial client for Suno AI, built on Python and Streamlit. It supports functions like generating music and obtaining music information. Users can set up multiple account information to be saved for use. The tool also features built-in maintenance and activation functions for tokens, eliminating concerns about token expiration. It supports multiple languages and allows users to upload pictures for generating songs based on image content analysis.

awesome-generative-ai

Awesome Generative AI is a curated list of modern Generative Artificial Intelligence projects and services. Generative AI technology creates original content like images, sounds, and texts using machine learning algorithms trained on large data sets. It can produce unique and realistic outputs such as photorealistic images, digital art, music, and writing. The repo covers a wide range of applications in art, entertainment, marketing, academia, and computer science.

ai-audio-datasets

AI Audio Datasets List (AI-ADL) is a comprehensive collection of datasets consisting of speech, music, and sound effects, used for Generative AI, AIGC, AI model training, and audio applications. It includes datasets for speech recognition, speech synthesis, music information retrieval, music generation, audio processing, sound synthesis, and more. The repository provides a curated list of diverse datasets suitable for various AI audio tasks.

awesome-large-audio-models

This repository is a curated list of awesome large AI models in audio signal processing, focusing on the application of large language models to audio tasks. It includes survey papers, popular large audio models, automatic speech recognition, neural speech synthesis, speech translation, other speech applications, large audio models in music, and audio datasets. The repository aims to provide a comprehensive overview of recent advancements and challenges in applying large language models to audio signal processing, showcasing the efficacy of transformer-based architectures in various audio tasks.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.

- OpenAPI interface, easy to integrate with existing infrastructure (e.g. Cloud IDE).

- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.