Phi-3CookBook

This is a cookbook for getting started with the Phi family of small language models (SLMs). Phi is a family of open-source AI models developed by Microsoft. Phi models are the most capable and cost-effective SLMs available, outperforming models of the same size and the next size up across a variety of language, reasoning, coding, and math benchmarks.

Stars: 2692

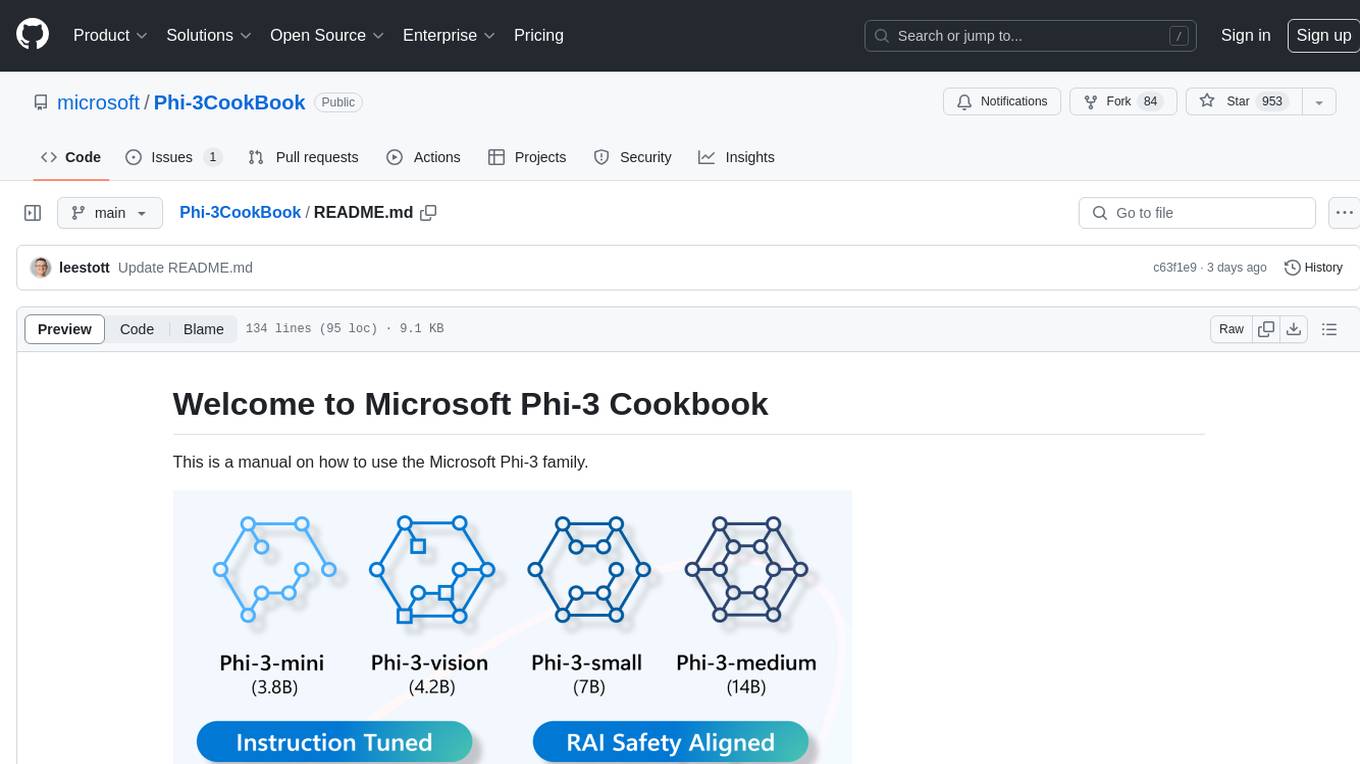

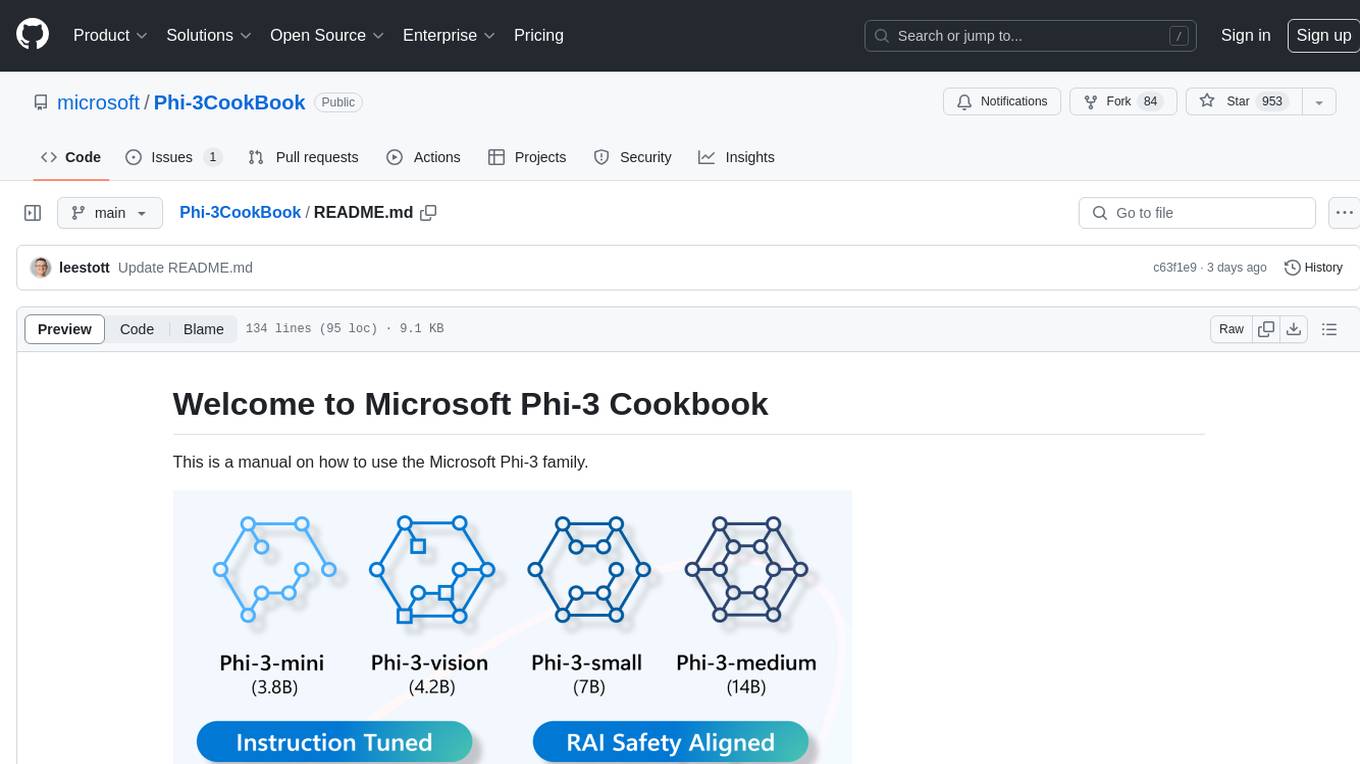

Phi-3CookBook is a manual on how to use the Microsoft Phi-3 family, which consists of open AI models developed by Microsoft. The Phi-3 models are highly capable and cost-effective small language models, outperforming models of similar and larger sizes across various language, reasoning, coding, and math benchmarks. The repository provides detailed information on different Phi-3 models, their performance, availability, and usage scenarios across different platforms like Azure AI Studio, Hugging Face, and Ollama. It also covers topics such as fine-tuning, evaluation, and end-to-end samples for Phi-3-mini and Phi-3-vision models, along with labs, workshops, and contributing guidelines.

README:

Phi is a family of open AI models developed by Microsoft. Phi models are the most capable and cost-effective small language models (SLMs) available, outperforming models of the same size and the next size up across a variety of language, reasoning, coding, and math benchmarks. The Phi-3 family includes mini, small, medium, and vision versions, trained with different parameter counts to serve various application scenarios. For more detailed information about Microsoft's Phi family, please visit the Welcome to the Phi Family page.

Follow these steps:

- Fork the Repository: Click on the "Fork" button at the top-right corner of this page.

- Clone the Repository: `git clone https://github.com/microsoft/Phi-3CookBook.git`

Introduction

Quick Start

- Using Phi-3 in GitHub Model Catalog(✅)

- Using Phi-3 in Hugging Face(✅)

- Using Phi-3 with OpenAI SDK(✅)

- Using Phi-3 with HTTP Requests(✅)

- Using Phi-3 in Azure AI Studio(✅)

- Using Phi-3 Model Inference with Azure MaaS or MaaP(✅)

- Using Phi-3 with Azure Inference API with GitHub and Azure AI

- Deploying Phi-3 models as serverless APIs in Azure AI Studio(✅)

- Using Phi-3 in Ollama(✅)

- Using Phi-3 in LM Studio(✅)

- Using Phi-3 in AI Toolkit VSCode(✅)

- Using Phi-3 and LiteLLM(✅)

Inference Phi-3

- Inference Phi-3 in iOS(✅)

- Inference Phi-3.5 in Android(✅)

- Inference Phi-3 in Jetson(✅)

- Inference Phi-3 in AI PC(✅)

- Inference Phi-3 with Apple MLX Framework(✅)

- Inference Phi-3 in Local Server(✅)

- Inference Phi-3 in Remote Server using AI Toolkit(✅)

- Inference Phi-3 with Rust(✅)

- Inference Phi-3-Vision in Local(✅)

- Inference Phi-3 with Kaito AKS, Azure Containers(official support)(✅)

- Inference Your Fine-tuning ONNX Runtime Model(✅)

Fine-tuning Phi-3

- Downloading & Creating Sample Data Set(✅)

- Fine-tuning Scenarios(✅)

- Fine-tuning vs RAG(✅)

- Fine-tuning: Let Phi-3 become an industry expert(✅)

- Fine-tuning Phi-3 with AI Toolkit for VS Code(✅)

- Fine-tuning Phi-3 with Azure Machine Learning Service(✅)

- Fine-tuning Phi-3 with LoRA(✅)

- Fine-tuning Phi-3 with QLoRA(✅)

- Fine-tuning Phi-3 with Azure AI Studio(✅)

- Fine-tuning Phi-3 with Azure ML CLI/SDK(✅)

- Fine-tuning with Microsoft Olive(✅)

- Fine-tuning with Microsoft Olive Hands-On Lab(✅)

- Fine-tuning Phi-3-vision with Weights & Biases(✅)

- Fine-tuning Phi-3 with Apple MLX Framework(✅)

- Fine-tuning Phi-3-vision (official support)(✅)

- Fine-Tuning Phi-3 with Kaito AKS, Azure Containers (official support)(✅)

- Fine-Tuning Phi-3 and 3.5 Vision(✅)

Evaluation Phi-3

E2E Samples for Phi-3-mini

- Introduction to End to End Samples(✅)

- Prepare your industry data(✅)

- Use Microsoft Olive to architect your projects(✅)

- Local Chatbot on Android with Phi-3, ONNXRuntime Mobile and ONNXRuntime Generate API(✅)

- Hugging Face Space WebGPU and Phi-3-mini Demo: Phi-3-mini provides the user with a private (and powerful) chatbot experience. You can try it out(✅)

- Local Chatbot in the browser using Phi-3, ONNX Runtime Web and WebGPU(✅)

- OpenVINO Chat(✅)

- Multi Model - Interactive Phi-3-mini and OpenAI Whisper(✅)

- MLflow - Building a wrapper and using Phi-3 with MLflow(✅)

- Model Optimization - How to optimize Phi-3-mini model for ONNX Runtime Web with Olive(✅)

- WinUI3 App with Phi-3 mini-4k-instruct-onnx(✅)

- WinUI3 Multi Model AI Powered Notes App Sample(✅)

- Fine-tune and Integrate custom Phi-3 models with Prompt flow(✅)

- Fine-tune and Integrate custom Phi-3 models with Prompt flow in Azure AI Studio(✅)

- Evaluate the Fine-tuned Phi-3 / Phi-3.5 Model in Azure AI Studio Focusing on Microsoft's Responsible AI Principles(✅)

- Phi-3.5-mini-instruct language prediction sample (Chinese/English)(✅)

E2E Samples for Phi-3-vision

E2E Samples for Phi-3.5-MoE

Labs and workshops samples Phi-3

- C# .NET Labs(✅)

- Build your own Visual Studio Code GitHub Copilot Chat with Microsoft Phi-3 Family(✅)

- Local WebGPU Phi-3 Mini RAG Chatbot Samples with Local RAG File(✅)

- Phi-3 ONNX Tutorial(✅)

- Phi-3-vision ONNX Tutorial(✅)

- Run the Phi-3 models with the ONNX Runtime generate() API(✅)

- Phi-3 ONNX Multi Model LLM Chat UI (chat demo)(✅)

- C# Hello Phi-3 ONNX example Phi-3(✅)

- C# API Phi-3 ONNX example to support Phi3-Vision(✅)

- Run C# Phi-3 samples in a CodeSpace(✅)

- Using Phi-3 with Promptflow and Azure AI Search(✅)

- Windows AI-PC APIs with Windows Copilot Library

Learning Phi-3.5

- What's new in the Phi-3.5 Family(✅)

- Quantifying Phi-3.5 Family(✅)

- Phi-3.5 Application Samples

You can learn how to use Microsoft Phi-3 and how to build E2E solutions on different hardware devices. To experience Phi-3 for yourself, start by trying the model and customizing Phi-3 for your scenarios using the Azure AI Foundry Azure AI Model Catalog. You can learn more at Getting Started with Azure AI Studio.

Playground: Each model has a dedicated playground for testing it, the Azure AI Playground.

You can also try and customize Phi-3 using the GitHub Model Catalog. You can learn more at Getting Started with GitHub Model Catalog.

Playground: Each model has a dedicated playground for testing it.

You can also find the model on Hugging Face.

Playground: Hugging Chat playground
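Beyond the hosted playgrounds, the Quick Start list also mentions using Phi-3 through HTTP requests and through Ollama. The following is a minimal sketch of what such a call might look like, not the cookbook's own sample. It assumes a local Ollama server listening on its default port with the OpenAI-compatible chat endpoint, and a model pulled under the tag `phi3`; the URL, the tag, and the helper names `build_chat_request` and `ask` are illustrative assumptions.

```python
import json
import urllib.request

# Assumption: a local Ollama server exposing its OpenAI-compatible API.
OLLAMA_URL = "http://localhost:11434/v1/chat/completions"
# Assumption: the model was pulled under this tag; adjust to your setup.
MODEL = "phi3"


def build_chat_request(prompt, url=OLLAMA_URL, model=MODEL):
    """Build (but do not send) a chat-completions POST request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # ask for a single JSON response, not a stream
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt):
    """Send the request and return the assistant's reply text."""
    request = build_chat_request(prompt)
    with urllib.request.urlopen(request, timeout=120) as response:
        body = json.load(response)
    # OpenAI-style responses carry the reply under choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

With a server running, `ask("Explain what a small language model is in one sentence.")` would return the model's reply as a string. Keeping request construction separate from sending makes the payload easy to inspect or point at a different OpenAI-compatible endpoint.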

Note: These translations were automatically generated using the open-source co-op-translator and may contain errors or inaccuracies. For critical information, it is recommended to refer to the original or consult a professional human translation. If you'd like to add or update a translation, please refer to the co-op-translator repository, where you can easily contribute using simple commands.

| Language | Code | Link to Translated README | Last Updated |
|---|---|---|---|
| Chinese (Simplified) | zh | Chinese Translation | 2024-11-29 |
| Chinese (Traditional) | tw | Chinese Translation | 2024-11-29 |
| French | fr | French Translation | 2024-11-29 |
| Japanese | ja | Japanese Translation | 2024-11-29 |
| Korean | ko | Korean Translation | 2024-11-29 |
| Spanish | es | Spanish Translation | 2024-11-29 |

This project may contain trademarks or logos for projects, products, or services. Authorized use of Microsoft trademarks or logos is subject to and must follow Microsoft's Trademark & Brand Guidelines. Use of Microsoft trademarks or logos in modified versions of this project must not cause confusion or imply Microsoft sponsorship. Any use of third-party trademarks or logos is subject to those third parties' policies.


Alternative AI tools for Phi-3CookBook

Similar Open Source Tools


Awesome-local-LLM

Awesome-local-LLM is a curated list of platforms, tools, practices, and resources that help run Large Language Models (LLMs) locally. It includes sections on inference platforms, engines, user interfaces, specific models for general purpose, coding, vision, audio, and miscellaneous tasks. The repository also covers tools for coding agents, agent frameworks, retrieval-augmented generation, computer use, browser automation, memory management, testing, evaluation, research, training, and fine-tuning. Additionally, there are tutorials on models, prompt engineering, context engineering, inference, agents, retrieval-augmented generation, and miscellaneous topics, along with a section on communities for LLM enthusiasts.

xtuner

XTuner is an efficient, flexible, and full-featured toolkit for fine-tuning large models. It supports various LLMs (InternLM, Mixtral-8x7B, Llama 2, ChatGLM, Qwen, Baichuan, ...), VLMs (LLaVA), and various training algorithms (QLoRA, LoRA, full-parameter fine-tune). XTuner also provides tools for chatting with pretrained / fine-tuned LLMs and deploying fine-tuned LLMs with any other framework, such as LMDeploy.

LLM-Hub

LLM Hub is an open-source Android app optimized for mobile usage, supporting multiple model formats for on-device LLM chat and image generation. It offers six AI tools including chat, writing aid, image generator, translator, transcriber, and scam detector. Privacy-first with on-device processing and zero data collection. Advanced capabilities include GPU/NPU acceleration, text-to-speech, RAG with global memory, and custom model import. Developed using Kotlin + Jetpack Compose, LLM Runtime, and various model runtimes.

cl-waffe2

cl-waffe2 is an experimental deep learning framework in Common Lisp, providing fast, systematic, and customizable matrix operations, reverse mode tape-based Automatic Differentiation, and neural network model building and training features accelerated by a JIT Compiler. It offers abstraction layers, extensibility, inlining, graph-level optimization, visualization, debugging, systematic nodes, and symbolic differentiation. Users can easily write extensions and optimize their networks without overheads. The framework is designed to eliminate barriers between users and developers, allowing for easy customization and extension.

deepchecks

Deepchecks is a holistic open-source solution for AI & ML validation needs, enabling thorough testing of data and models from research to production. It includes components for testing, CI & testing management, and monitoring. Users can install and use Deepchecks for testing and monitoring their AI models, with customizable checks and suites for tabular, NLP, and computer vision data. The tool provides visual reports, pythonic/json output for processing, and a dynamic UI for collaboration and monitoring. Deepchecks is open source, with premium features available under a commercial license for monitoring components.

Kiln

Kiln is an intuitive tool for fine-tuning LLM models, generating synthetic data, and collaborating on datasets. It offers desktop apps for Windows, MacOS, and Linux, zero-code fine-tuning for various models, interactive data generation, and Git-based version control. Users can easily collaborate with QA, PM, and subject matter experts, generate auto-prompts, and work with a wide range of models and providers. The tool is open-source, privacy-first, and supports structured data tasks in JSON format. Kiln is free to use and helps build high-quality AI products with datasets, facilitates collaboration between technical and non-technical teams, allows comparison of models and techniques without code, ensures structured data integrity, and prioritizes user privacy.

axolotl

Axolotl is an open-source tool for fine-tuning large language models. It supports a range of model architectures and training methods, including full fine-tuning, LoRA, and QLoRA, with training runs configured through YAML files.

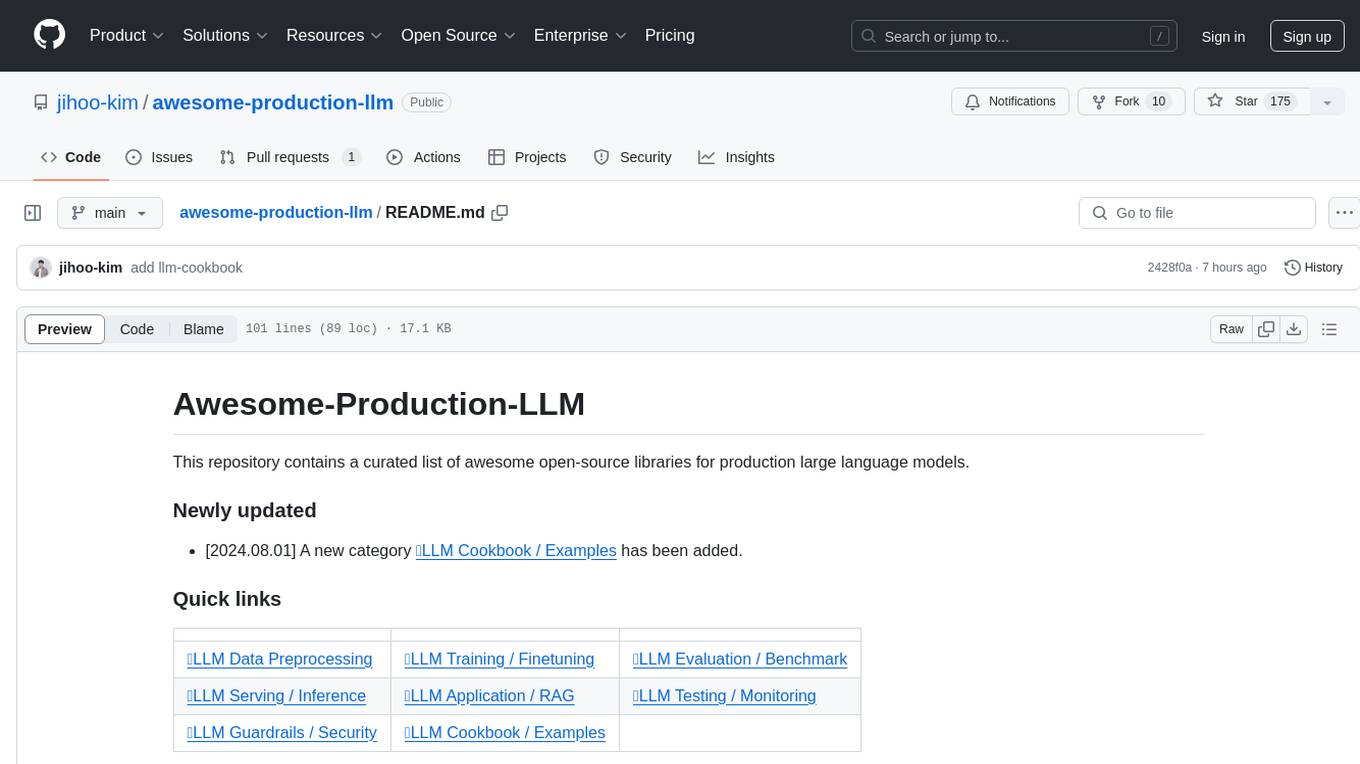

awesome-production-llm

This repository is a curated list of open-source libraries for production large language models. It includes tools for data preprocessing, training/finetuning, evaluation/benchmarking, serving/inference, application/RAG, testing/monitoring, and guardrails/security. The repository also provides a new category called LLM Cookbook/Examples for showcasing examples and guides on using various LLM APIs.

SillyTavern

SillyTavern is a user interface you can install on your computer (and Android phones) that allows you to interact with text generation AIs and chat/roleplay with characters you or the community create. SillyTavern is a fork of TavernAI 1.2.8 which is under more active development and has added many major features. At this point, they can be thought of as completely independent programs.

Kori

Kori is a unified note-taking app with AI capabilities, providing a consistent experience across Android, iOS, Windows, macOS, and Linux. It supports various formats like Drawing, Markdown, TXT, LaTeX, Mermaid diagrams, and Todo.txt lists. Users can benefit from AI co-writing features, note outline generation, find and replace, note templates, local media support, and export options. The app follows Material Design 3 guidelines, offers comprehensive mouse and keyboard support, and is optimized for different screen sizes and orientations.

nyxtext

Nyxtext is a text editor built using Python, featuring Custom Tkinter with the Catppuccin color scheme and glassmorphic design. It follows a modular approach with each element organized into separate files for clarity and maintainability. NyxText is not just a text editor but also an AI-powered desktop application for creatives, developers, and students.

clearml

ClearML is an auto-magical suite of tools designed to streamline AI workflows. It includes modules for experiment management, MLOps/LLMOps, data management, model serving, and more. ClearML offers features like experiment tracking, model serving, orchestration, and automation. It supports various ML/DL frameworks and integrates with Jupyter Notebook and PyCharm for remote debugging. ClearML aims to simplify collaboration, automate processes, and enhance visibility in AI projects.

musegpt

Run local Large Language Models (LLMs) in your Digital Audio Workstation (DAW) to provide inspiration, instructions, and analysis for your music creation. Currently supported features include LLM chat, VST3 plugin, MIDI input, and Audio input. Requires C++17 compatible compiler, CMake, and Python 3.10 or later. Licensed under AGPL v3. Built by Grey Newell.

flow-like

Flow-Like is an enterprise-grade workflow operating system built upon Rust for uncompromising performance, efficiency, and code safety. It offers a modular frontend for apps, a rich set of events, a node catalog, a powerful no-code workflow IDE, and tools to manage teams, templates, and projects within organizations. With typed workflows, users can create complex, large-scale workflows with clear data origins, transformations, and contracts. Flow-Like is designed to automate any process through seamless integration of LLM, ML-based, and deterministic decision-making instances.

parallax

Parallax is a fully decentralized inference engine developed by Gradient. It allows users to build their own AI cluster for model inference across distributed nodes with varying configurations and physical locations. Core features include hosting local LLMs on personal devices, cross-platform support, pipeline-parallel model sharding, paged KV cache management, continuous batching for Mac, and dynamic request scheduling and routing for high performance. The backend architecture includes P2P communication powered by Lattica, a GPU backend powered by SGLang and vLLM, and a Mac backend powered by MLX LM.

For similar tasks


lighteval

LightEval is a lightweight LLM evaluation suite that Hugging Face has been using internally with the recently released LLM data processing library datatrove and LLM training library nanotron. We're releasing it with the community in the spirit of building in the open. Note that it is still at a very early stage, so don't expect 100% stability ^^' In case of problems or questions, feel free to open an issue!

Firefly

Firefly is an open-source large model training project that supports pre-training, fine-tuning, and DPO of mainstream large models. It includes models like Llama3, Gemma, Qwen1.5, MiniCPM, Llama, InternLM, Baichuan, ChatGLM, Yi, Deepseek, Qwen, Orion, Ziya, Xverse, Mistral, Mixtral-8x7B, Zephyr, Vicuna, Bloom, etc. The project supports full-parameter training and efficient training with LoRA and QLoRA, across tasks such as pre-training, SFT, and DPO. For users with limited training resources, QLoRA is recommended for instruction fine-tuning. The QLoRA training process has been validated by good results on the Open LLM Leaderboard. The latest version has significant updates and adaptations for different chat model templates.

Awesome-Text2SQL

Awesome Text2SQL is a curated repository containing tutorials and resources for Large Language Models, Text2SQL, Text2DSL, Text2API, Text2Vis, and more. It provides guidelines on converting natural language questions into structured SQL queries, with a focus on NL2SQL. The repository includes information on various models, datasets, evaluation metrics, fine-tuning methods, libraries, and practice projects related to Text2SQL. It serves as a comprehensive resource for individuals interested in working with Text2SQL and related technologies.

create-million-parameter-llm-from-scratch

The 'create-million-parameter-llm-from-scratch' repository provides a detailed guide on creating a Large Language Model (LLM) with 2.3 million parameters from scratch. The blog replicates the LLaMA approach, incorporating concepts like RMSNorm for pre-normalization, SwiGLU activation function, and Rotary Embeddings. The model is trained on a basic dataset to demonstrate the ease of creating a million-parameter LLM without the need for a high-end GPU.

StableToolBench

StableToolBench is a new benchmark developed to address the instability of Tool Learning benchmarks. It aims to balance stability and reality by introducing features such as a Virtual API System with caching and API simulators, a new set of solvable queries determined by LLMs, and a Stable Evaluation System using GPT-4. The Virtual API Server can be set up either by building from source or using a prebuilt Docker image. Users can test the server using provided scripts and evaluate models with Solvable Pass Rate and Solvable Win Rate metrics. The tool also includes model experiments results comparing different models' performance.

BetaML.jl

The Beta Machine Learning Toolkit is a package containing various algorithms and utilities for implementing machine learning workflows in multiple languages, including Julia, Python, and R. It offers a range of supervised and unsupervised models, data transformers, and assessment tools. The models are implemented entirely in Julia and are not wrappers for third-party models. Users can easily contribute new models or request implementations. The focus is on user-friendliness rather than computational efficiency, making it suitable for educational and research purposes.

AI-TOD

AI-TOD is a dataset for tiny object detection in aerial images, containing 700,621 object instances across 28,036 images. Objects in AI-TOD are smaller with a mean size of 12.8 pixels compared to other aerial image datasets. To use AI-TOD, download xView training set and AI-TOD_wo_xview, then generate the complete dataset using the provided synthesis tool. The dataset is publicly available for academic and research purposes under CC BY-NC-SA 4.0 license.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models, from images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. It boasts several key features:

- Self-contained, with no need for a DBMS or cloud service.

- OpenAPI interface, easy to integrate with existing infrastructure (e.g. Cloud IDE).

- Supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.