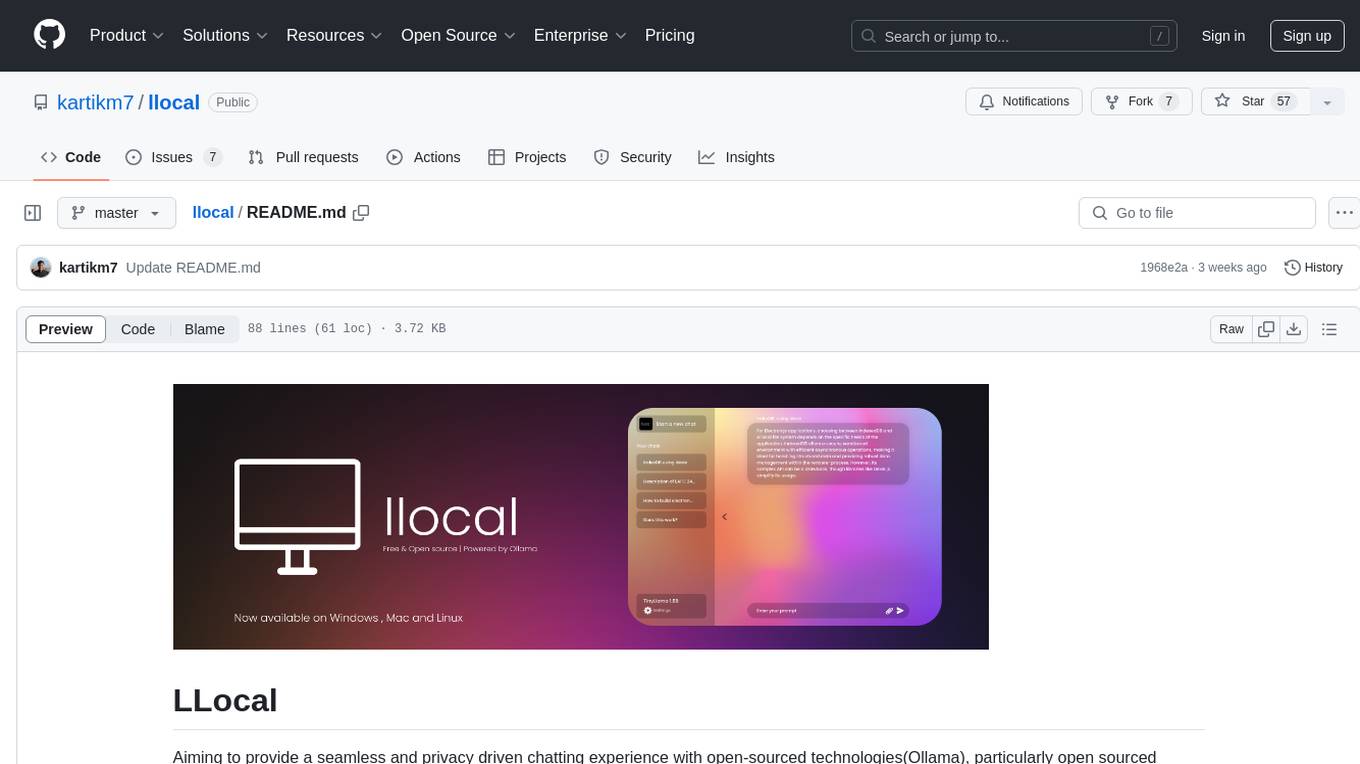

llocal

Aiming to provide a seamless and privacy-driven chatting experience with open-source technologies (Ollama), particularly open-source LLMs (e.g. Llama3, Phi-3, Mistral). Focused on ease of use. Available on both Windows and Mac.

Stars: 160

LLocal is an Electron application focused on providing a seamless and privacy-driven chatting experience using open-source technologies, particularly open-source LLMs. It allows users to store chats locally, switch between models, pull new models, upload images, perform web searches, and render responses as markdown. The tool also offers multiple themes, seamless integration with Ollama, and upcoming features like chat with images, web search improvements, retrieval augmented generation, multiple PDF chat, text to speech models, community wallpapers, lofi music, speech to text, and more. LLocal's builds are currently unsigned, so Mac users need to either build the app manually or use the universal build, which seems to be more stable.

README:

Aiming to provide a seamless and privacy-driven chatting experience with open-source technologies (Ollama), particularly open-source LLMs (e.g. Llama3.1, Phi-3, Mistral). Focused on ease of use.

LLocal can be installed on **Windows**, **Mac** and **Linux**.

https://github.com/user-attachments/assets/bdfefd5d-8a55-46cf-8c63-5a7ba5e093c7

- LLocally store chats.

- LLocal utilizes Ollama, which ensures that everything from processing to usage happens on your machine LLocally.

- Seamlessly switch between models.

- Easily pull new models.

- Image upload for models that support vision.

- Web search (i.e. a website scraper as well as built-in DuckDuckGo search) for all models.

- Chat with files, with persistence through vector DBs stored LLocally. (Supported file types are PDF, PPTX, DOCX, CSV & TXT)

- Responses are rendered as markdown (Supporting Code Blocks with syntax highlighting, tabular formats and much more).

- Multiple themes (5 themes, all supporting both light and dark mode).

- Seamless integration with Ollama, from download to install.
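
The model-related features above (switching models, pulling new ones, chatting) all go through a locally running Ollama server. As a rough illustration only, the sketch below builds request descriptors for Ollama's HTTP API. It is not taken from LLocal's source: the helper names (`listModelsRequest`, `pullModelRequest`, `chatRequest`) and the `OllamaRequest` type are hypothetical, and it assumes Ollama is listening on its default address `http://localhost:11434`.

```typescript
// Hypothetical helpers for illustration; not LLocal's actual implementation.
// Assumes Ollama's default local address.
const OLLAMA_BASE_URL = "http://localhost:11434";

interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

// A plain description of an HTTP call, so it can be inspected before sending.
interface OllamaRequest {
  url: string;
  method: "GET" | "POST";
  body?: string;
}

// List the models already available on this machine (GET /api/tags).
function listModelsRequest(baseUrl: string = OLLAMA_BASE_URL): OllamaRequest {
  return { url: `${baseUrl}/api/tags`, method: "GET" };
}

// Pull a new model by name (POST /api/pull).
// Assumption: the body field is `model`; older Ollama versions documented `name`.
function pullModelRequest(model: string, baseUrl: string = OLLAMA_BASE_URL): OllamaRequest {
  return {
    url: `${baseUrl}/api/pull`,
    method: "POST",
    body: JSON.stringify({ model, stream: false }),
  };
}

// Send a conversation to a chosen model (POST /api/chat).
// Switching models is just a matter of passing a different `model` string.
function chatRequest(
  model: string,
  messages: ChatMessage[],
  baseUrl: string = OLLAMA_BASE_URL
): OllamaRequest {
  return {
    url: `${baseUrl}/api/chat`,
    method: "POST",
    body: JSON.stringify({ model, messages, stream: false }),
  };
}
```

Each descriptor can be handed to any HTTP client, for example `fetch(req.url, { method: req.method, body: req.body })`. Because every request targets `localhost`, the conversation never has to leave the machine.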

- Chat with images ✅

- Web Search ☑️ (purple because it can still be improved)

- Retrieval Augmented Generation/RAG (with single PDFs) ✅

- Multiple PDF chat ✅

- Ollama Model Catalogue (Information about all models)

- Support for `<think />` code blocks ✅

- Agents, the first two would be `DeepResearch` and `Reasoning`

- Code live preview for web based code (Something like what Claude Provides)

- Text to Speech Models (only if we can get them close to a human-like response) ✅

- Community wallpapers

- Community themes (something like what spicetify does)

- Lofi Music (this would be optional)

- Speech to text (Do we really need it?)

- Conversations like those with ChatGPT (Speech to text input and text to speech output, but the aim would be low-latency)

- Chat with chats ?! (Not sure)
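
The roadmap mentions support for `<think />` blocks, which reasoning models emit around their intermediate reasoning. How LLocal handles them is not described here, so the following is only a minimal sketch of one possible approach: separate the reasoning from the final answer so a UI can render them differently. The function name `splitThinkBlocks` and the `ParsedResponse` type are hypothetical, and the sketch assumes the tags arrive as literal `<think>` and `</think>` pairs.

```typescript
// Hypothetical helper for illustration; not LLocal's actual implementation.
interface ParsedResponse {
  thoughts: string[]; // contents of each <think>...</think> block, in order
  answer: string;     // the response text with all think blocks removed
}

function splitThinkBlocks(raw: string): ParsedResponse {
  const thoughts: string[] = [];
  // Non-greedy match so several think blocks in one response stay separate.
  const pattern = /<think>([\s\S]*?)<\/think>/g;
  const answer = raw.replace(pattern, (_match: string, inner: string) => {
    thoughts.push(inner.trim());
    return "";
  });
  return { thoughts, answer: answer.trim() };
}
```

This version only handles complete blocks. A streaming UI would also need to deal with an opening tag whose closing tag has not arrived yet, which is left out here.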

At some point, we would want to pivot LLocal in a different direction (although this would need to be discussed with the users first).

LLocal's builds are unsigned at the moment, meaning there will be an unknown publisher alert on Windows and Mac. On Mac, however, the app does not open at all because the code is unsigned. To solve this issue, you can do either of the following:

- Run a manual build by cloning the repo and then running `npm run build:mac:arm` for M-series Macs or `npm run build:mac:intel` for Intel-based Macs. When you build it yourself, Apple does not throw the error. I know this is inconvenient, but the build takes a few minutes at most.

- In case you don't want to build it yourself, you can also try the Universal build, which seems to be more stable than the separate builds. You will then get the developer is not verified error, which can be bypassed by following this video.

The link to the Mac universal build is this.

LLocal is an Electron application with React and TypeScript.

    npm install

    npm run dev

    # For Windows
    npm run build:win

    # For macOS (m-series)
    npm run build:mac:arm

    # For macOS (intel-chips)
    npm run build:mac:intel

    # For Linux (Supported now!)
    npm run build:linux

You can refer to the CONTRIBUTING.md.
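
Choosing among the build scripts above depends on the operating system and, on macOS, the CPU architecture. As a small illustration, the sketch below maps Node's `process.platform` and `process.arch` values to the script names listed above. The function `pickBuildScript` is hypothetical and is not part of the repository.

```typescript
// Hypothetical helper for illustration; the script names come from the build commands above.
type BuildScript = "build:win" | "build:mac:arm" | "build:mac:intel" | "build:linux";

// `platform` and `arch` are expected to be Node's process.platform / process.arch values.
function pickBuildScript(platform: string, arch: string): BuildScript {
  switch (platform) {
    case "win32":
      return "build:win";
    case "linux":
      return "build:linux";
    case "darwin":
      // Apple silicon (M-series) reports "arm64"; Intel Macs report "x64".
      return arch === "arm64" ? "build:mac:arm" : "build:mac:intel";
    default:
      throw new Error(`No build script listed for platform "${platform}"`);
  }
}
```

In a Node script this could be called as `pickBuildScript(process.platform, process.arch)` and the result passed to `npm run`.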

Alternative AI tools for llocal

Similar Open Source Tools

pyqt-openai

VividNode is a cross-platform AI desktop chatbot application for interacting with LLMs such as GPT, Claude, Gemini, and Llama, and for image generation. It offers customizable features, local chat history, and enhanced performance without requiring a browser. The application is powered by GPT4Free and allows users to interact with chatbots and generate images seamlessly. VividNode supports Windows, Mac, and Linux, securely stores chat history locally, and provides features like chat interface customization, image generation, focus and accessibility modes, and extensive customization options with keyboard shortcuts for efficient operations.

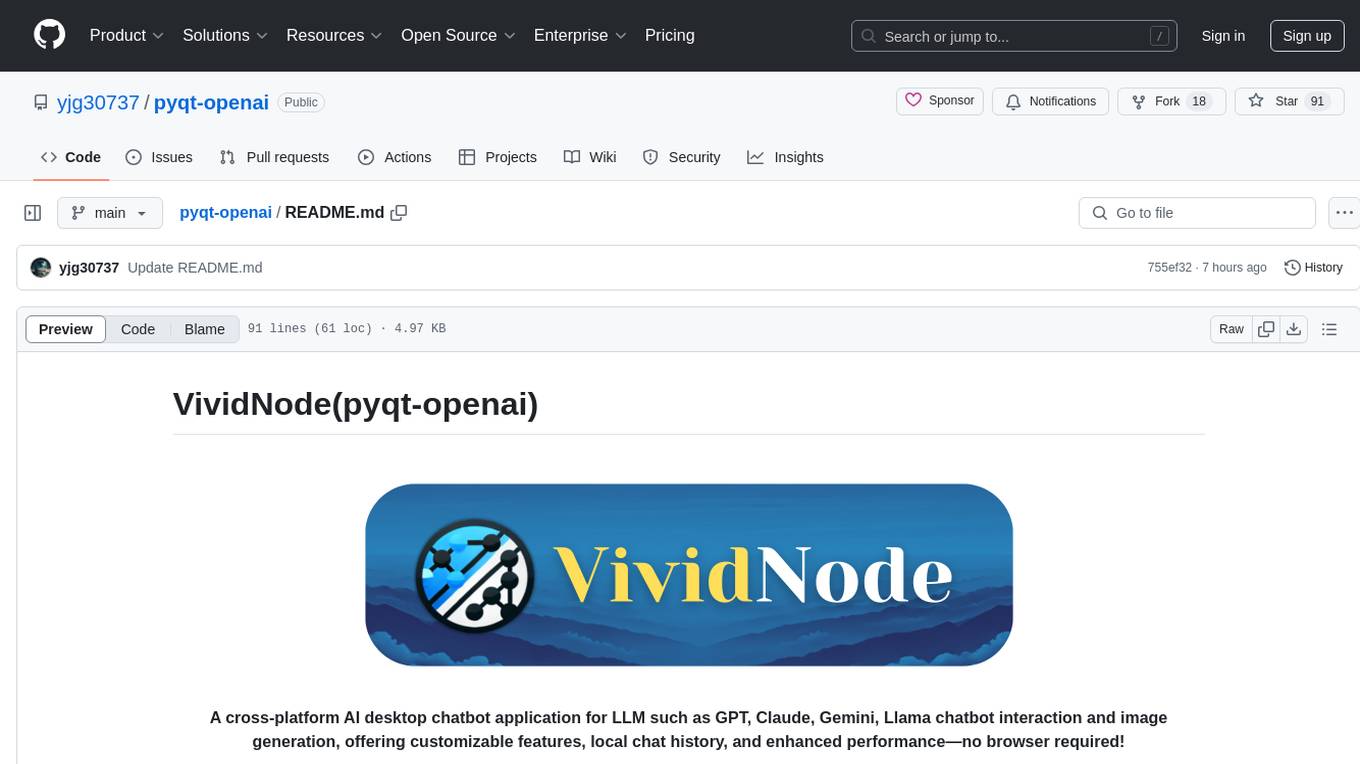

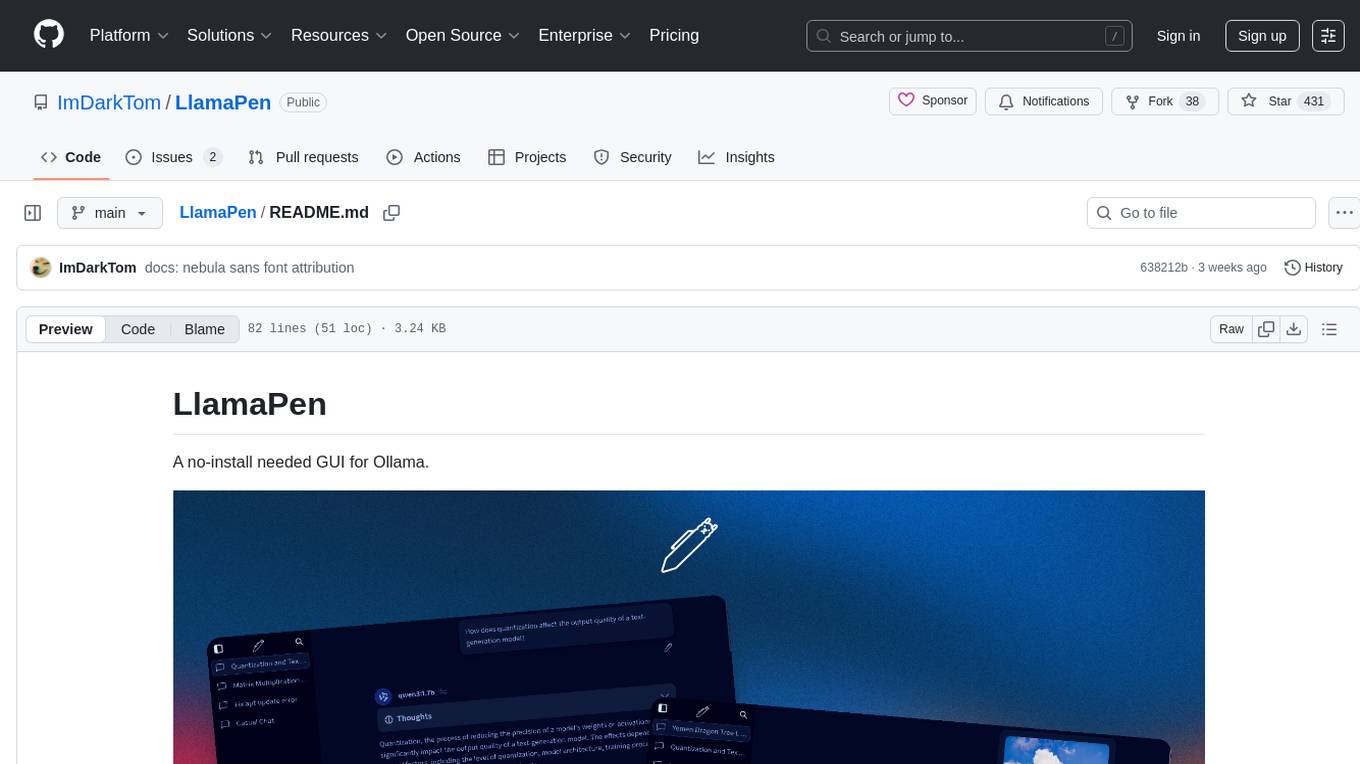

LlamaPen

LlamaPen is a no-install needed GUI tool for Ollama, featuring a web-based interface accessible on both desktop and mobile. It allows easy setup and configuration, renders markdown, text, and LaTeX math, provides keyboard shortcuts for quick navigation, includes a built-in model and download manager, supports offline and PWA, and is 100% free and open-source. Users can chat with complete privacy as all chats are stored locally in the browser, ensuring near-instant chat load times. The tool also offers an optional cloud service, LlamaPen API, for running up-to-date models if unable to run locally, with a subscription option for increased rate limits and access to more expensive models.

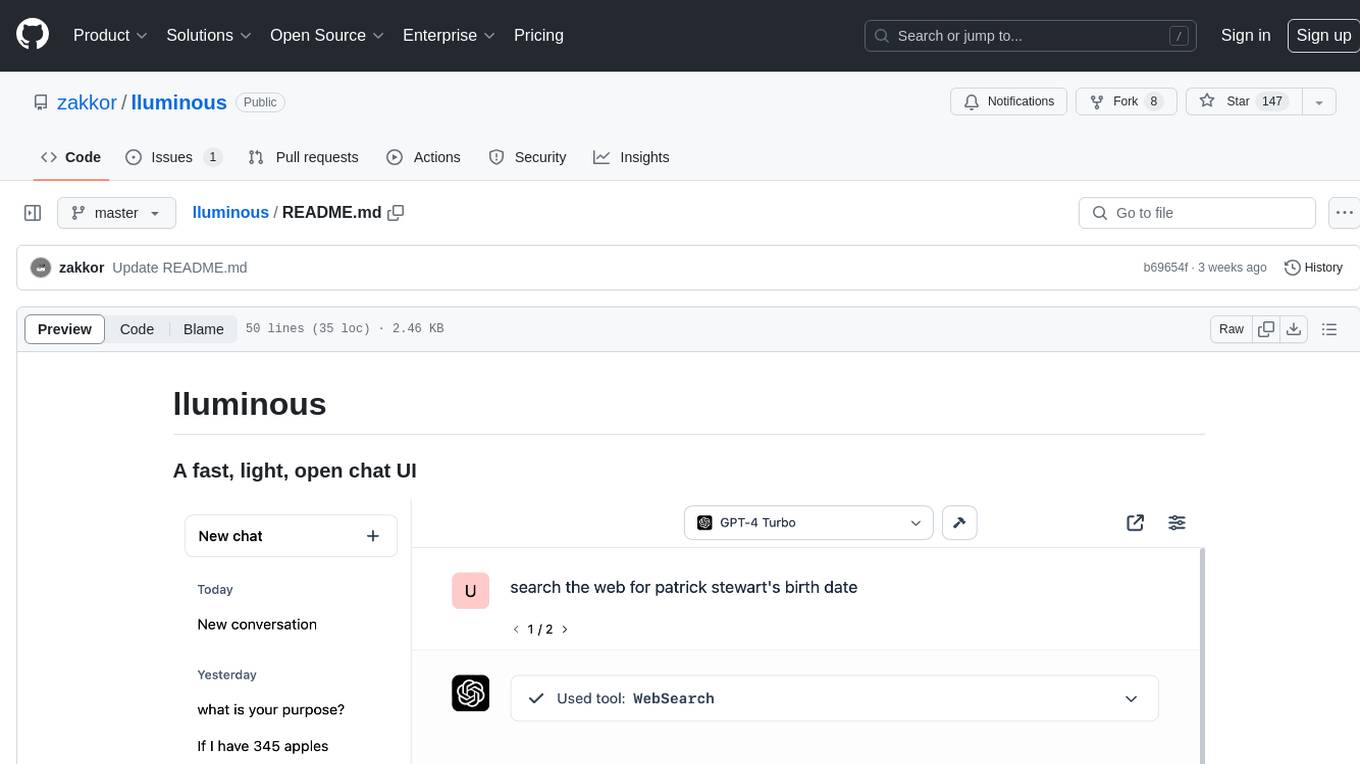

lluminous

lluminous is a fast and light open chat UI that supports multiple providers such as OpenAI, Anthropic, and Groq models. Users can easily plug in their API keys locally to access various models for tasks like multimodal input, image generation, multi-shot prompting, pre-filled responses, and more. The tool ensures privacy by storing all conversation history and keys locally on the user's device. Coming soon features include memory tool, file ingestion/embedding, embeddings-based web search, and prompt templates.

Transtation-KMP

Transtation is an easy-to-use and powerful translation software for Android/Desktop based on Kotlin Multiplatform + Compose Multiplatform. It allows users to translate one item using multiple engines simultaneously, use advanced Large Language Models for translation, chat with LLMs for translation, and translate long text. It also supports plugin development, image translation, and screen translation. The application is designed for Chinese users and serves as a reference for learning Jetpack Compose or Compose Multiplatform. It features Kotlin Multiplatform, Compose Multiplatform, MVVM, Kotlin Coroutine, Flow, SqlDelight, synchronized translation with multiple engines, plugin development, and makes use of Kotlin language features like lazy loading, Coroutine, sealed classes, and reflection. The application is gradually adapting to Android 13, with features like setting the application language separately and supporting Monet icons.

dataline

DataLine is an AI-driven data analysis and visualization tool designed for technical and non-technical users to explore data quickly. It offers privacy-focused data storage on the user's device, supports various data sources, generates charts, executes queries, and facilitates report building. The tool aims to speed up data analysis tasks for businesses and individuals by providing a user-friendly interface and natural language querying capabilities.

sdk

Vikit.ai SDK is a software development kit that enables easy development of video generators using generative AI and other AI models. It serves as a langchain to orchestrate AI models and video editing tools. The SDK allows users to create videos from text prompts with background music and voice-over narration. It also supports generating composite videos from multiple text prompts. The tool requires Python 3.8+, specific dependencies, and tools like FFMPEG and ImageMagick for certain functionalities. Users can contribute to the project by following the contribution guidelines and standards provided.

Ollama-SwiftUI

Ollama-SwiftUI is a user-friendly interface for Ollama.ai created in Swift. It allows seamless chatting with local Large Language Models on Mac. Users can change models mid-conversation, restart conversations, send system prompts, and use multimodal models with image + text. The app supports managing models, including downloading, deleting, and duplicating them. It offers light and dark mode, multiple conversation tabs, and a localized interface in English and Arabic.

chatty

Chatty is a private AI tool that runs large language models natively and privately in the browser, ensuring in-browser privacy and offline usability. It supports chat history management, open-source models like Gemma and Llama2, responsive design, intuitive UI, markdown & code highlight, chat with files locally, custom memory support, export chat messages, voice input support, response regeneration, and light & dark mode. It aims to bring popular AI interfaces like ChatGPT and Gemini into an in-browser experience.

nobodywho

NobodyWho is a plugin for the Godot game engine that enables interaction with local LLMs for interactive storytelling. Users can install it from Godot editor or GitHub releases page, providing their own LLM in GGUF format. The plugin consists of `NobodyWhoModel` node for model file, `NobodyWhoChat` node for chat interaction, and `NobodyWhoEmbedding` node for generating embeddings. It offers a programming interface for sending text to LLM, receiving responses, and starting the LLM worker.

kubeai

KubeAI is a highly scalable AI platform that runs on Kubernetes, serving as a drop-in replacement for OpenAI with API compatibility. It can operate OSS model servers like vLLM and Ollama, with zero dependencies and additional OSS addons included. Users can configure models via Kubernetes Custom Resources and interact with models through a chat UI. KubeAI supports serving various models like Llama v3.1, Gemma2, and Qwen2, and has plans for model caching, LoRA finetuning, and image generation.

phosphobot

Phosphobot is a software tool designed to control robots, record data, train and use VLA (vision language action) models. It allows users to control robots using various input methods, train action models with ease, and is compatible with a range of robots and cameras. The tool runs on multiple operating systems and provides a user-friendly API for developers. Users can purchase a starter pack or use supported robots, record datasets, train action models, and control robots using the webapp or Python package. Phosphobot is open source, allowing users to extend its functionality with their own robots and cameras.

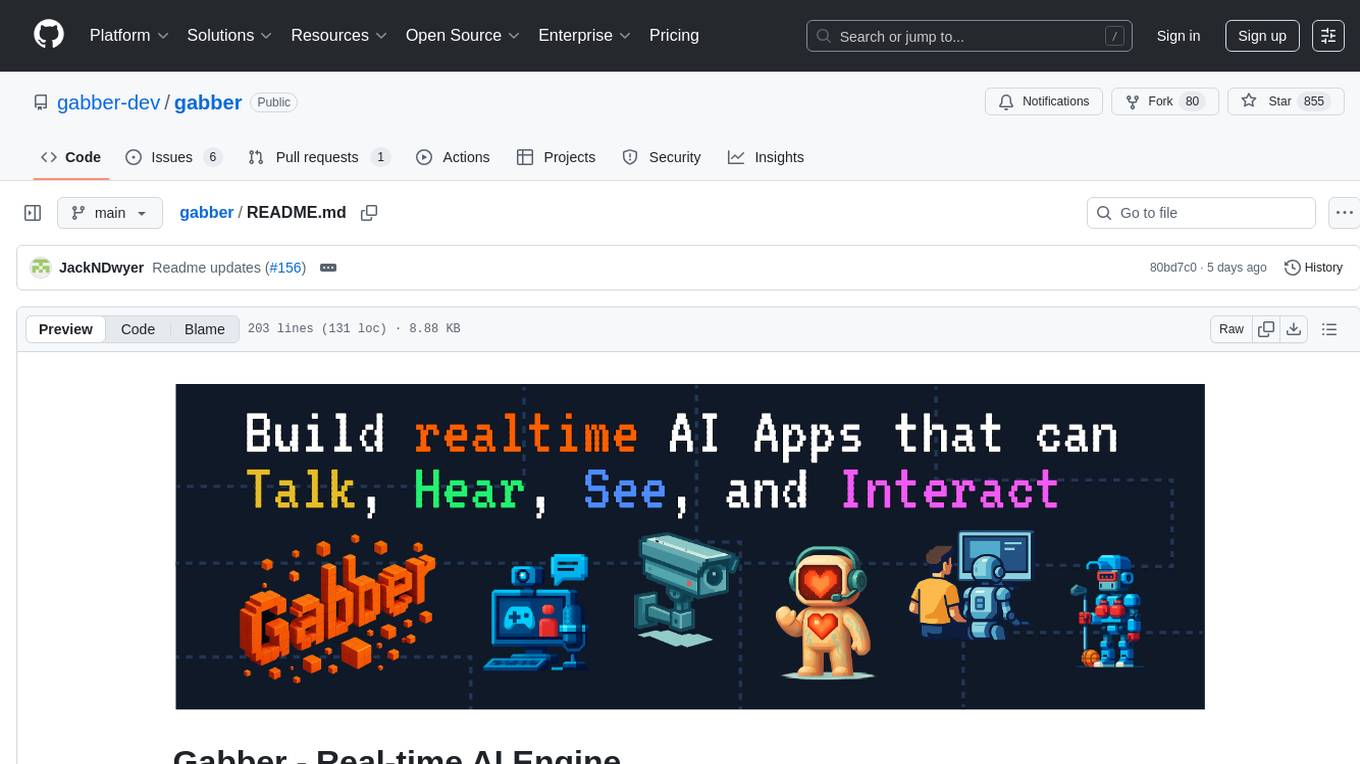

gabber

Gabber is a real-time AI engine that supports graph-based apps with multiple participants and simultaneous media streams. It allows developers to build powerful and developer-friendly AI applications across voice, text, video, and more. The engine consists of frontend and backend services including an editor, engine, and repository. Gabber provides SDKs for JavaScript/TypeScript, React, Python, Unity, and upcoming support for iOS, Android, React Native, and Flutter. The roadmap includes adding more nodes and examples, such as computer use nodes, Unity SDK with robotics simulation, SIP nodes, and multi-participant turn-taking. Users can create apps using nodes, pads, subgraphs, and state machines to define application flow and logic.

RTutor

RTutor is an AI-based app that generates and tests R code by translating natural language into R scripts using API calls to OpenAI's ChatGPT. It executes the scripts within the Shiny platform, generating R Markdown source files and HTML reports. The tool features GPT-4 for accurate code, comprehensive EDA reports, and a chat window for code explanation, making it ideal for learning R and statistics.

supervisely

Supervisely is a computer vision platform that provides a range of tools and services for developing and deploying computer vision solutions. It includes a data labeling platform, a model training platform, and a marketplace for computer vision apps. Supervisely is used by a variety of organizations, including Fortune 500 companies, research institutions, and government agencies.

ai-dev-gallery

The AI Dev Gallery is an app designed to help Windows developers integrate AI capabilities within their own apps and projects. It contains over 25 interactive samples powered by local AI models, allows users to explore, download, and run models from Hugging Face and GitHub, and provides the ability to view the C# source code and export a standalone Visual Studio project for each sample. The app is open-source and welcomes contributions and suggestions from the community.

For similar tasks

hugging-chat-api

Unofficial HuggingChat Python API for creating chatbots, supporting features like image generation, web search, memorizing context, and changing LLMs. Users can log in, chat with the ChatBot, perform web searches, create new conversations, manage conversations, switch models, get conversation info, use assistants, and delete conversations. The API also includes a CLI mode with various commands for interacting with the tool. Users are advised not to use the application for high-stakes decisions or advice and to avoid high-frequency requests to preserve server resources.

KeyboardGPT

Keyboard GPT is an LSPosed Module that integrates Generative AI like ChatGPT into your keyboard, allowing for real-time AI responses, custom prompts, and web search capabilities. It works in all apps and supports popular keyboards like Gboard, Swiftkey, Fleksy, and Samsung Keyboard. Users can easily configure API providers, submit prompts, and perform web searches directly from their keyboard. The tool also supports multiple Generative AI APIs such as ChatGPT, Gemini, and Groq. It offers an easy installation process for both rooted and non-rooted devices, making it a versatile and powerful tool for enhancing text input experiences on mobile devices.

erag

ERAG is an advanced system that combines lexical, semantic, text, and knowledge graph searches with conversation context to provide accurate and contextually relevant responses. It processes various document types, creates embeddings, builds knowledge graphs, and uses this information to answer user queries intelligently. The tool includes modules for interacting with web content, GitHub repositories, and performing exploratory data analysis using various language models. It offers a GUI for managing local LLaMA.cpp servers, customizable settings, and advanced search utilities. ERAG supports multi-model collaboration, iterative knowledge refinement, automated quality assessment, and structured knowledge format enforcement. Users can generate specific knowledge entries, full-size textbooks, or datasets using AI-generated questions and answers.

scrapegraph-sdk

Official SDKs for the ScrapeGraph AI API - Intelligent web scraping and search powered by AI. Extract structured data from any webpage or perform AI-powered web searches with natural language prompts. The SDK offers features such as SmartScraper for data extraction, SearchScraper for AI-powered web search, Markdownify for converting webpages to markdown, SmartCrawler for intelligent crawling, AgenticScraper for automated browser actions, and more. It provides seamless integration with popular frameworks and tools, supports Python and JavaScript SDKs, LLM frameworks, low-code platforms, and offers core features like AI-powered extraction, structured output, multiple data formats, high performance, and enterprise-grade security.

z-ai-sdk-python

Z.ai Open Platform Python SDK is the official Python SDK for Z.ai's large model open interface, providing developers with easy access to Z.ai's open APIs. The SDK offers core features like chat completions, embeddings, video generation, audio processing, assistant API, and advanced tools. It supports various functionalities such as speech transcription, text-to-video generation, image understanding, and structured conversation handling. Developers can customize client behavior, configure API keys, and handle errors efficiently. The SDK is designed to simplify AI interactions and enhance AI capabilities for developers.

aino

Aino is an experimental HTTP framework for Elixir that uses elli instead of Cowboy like Phoenix and Plug. It focuses on writing handlers to process requests through middleware functions. Aino works on a token instead of a conn, allowing flexibility in adding custom keys. It includes built-in middleware for common tasks and a routing layer for defining routes. Handlers in Aino must return a token with specific keys for response rendering.

langchain4j-aideepin

LangChain4j-AIDeepin is an open-source, offline-deployable retrieval-augmented generation (RAG) project based on large language models such as ChatGPT and the Langchain4j application framework. It offers features like registration & login, multi-session support, image generation, prompt words, quota control, knowledge base, model-based search, model switching, and search engine switching. The project integrates models like ChatGPT 3.5, Tongyi Qianwen, Wenxin Yiyan, Ollama, and DALL-E 2. The backend uses technologies like JDK 17, Spring Boot 3.0.5, Langchain4j, and PostgreSQL with pgvector extension, while the frontend is built with Vue3, TypeScript, and PNPM.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PIIs

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.