vue-stream-markdown

Streaming markdown output, Useful for text streams like LLM outputs.

Stars: 182

A markdown renderer optimized for streaming scenarios, providing smoother rendering through syntax inference and customizable elements. Features include streaming-optimized rendering, incremental rendering, progressive Mermaid rendering, streaming LaTeX rendering, interactive controls, full customization, theme-aware styles, built-in typography, content hardening & security, and SSR support. Inspired by streamdown, leveraging mdast, Shiki, Mermaid, beautiful-mermaid, KaTeX, and Remend. Acknowledges markstream-vue, ast-explorer, medium-zoom, markdown-sanitizers, and Dify. Troubleshooting support for debugging streaming rendering issues.

README:

A markdown renderer specially optimized for streaming scenarios, inspired by streamdown. Designed to achieve smoother streaming rendering through syntax inference and highly customizable rendering elements.

pnpm add vue-stream-markdown📚 Documentation | 🤹♂️ Playground

- Streaming-optimized rendering - Incomplete node completion with loading states for images, tables, and code blocks to prevent visual jitter

-

Incremental rendering - Leverages Shiki's

codeToTokensAPI for token-level updates, reducing DOM recreation overhead - Progressive Mermaid rendering - Throttled, streaming-friendly diagram rendering with loading states, supporting both vanilla Mermaid.js and beautiful-mermaid renderers with automatic fallback for unsupported diagram types

- Streaming LaTeX rendering - Progressive math equation rendering with KaTeX support

- Interactive controls - Copy and download buttons for images, tables, and code blocks

- Fully customizable - Replace any AST node or UI component with your own Vue components

-

Theme-aware scoped styles - Scoped styles under

.stream-markdownwith semanticdata-stream-markdownattributes, following shadcn/ui design system - Beautiful built-in typography - No atomic CSS required (Tailwind/UnoCSS), self-contained styles

- Content hardening & security - Built-in protection against malicious Markdown with URL validation and protocol blocking

- SSR support - Full server-side rendering compatibility with environment detection utilities

[!IMPORTANT] 🚧 vue-stream-markdown is currently in active feature development. From version

0.4.0onwards, you need to manually includekatex.min.css. If CDN is enabled, it will be automatically loaded.

For detailed usage and API documentation, please refer to the Documentation.

<script setup lang="ts">

import { ref } from 'vue'

import { Markdown } from 'vue-stream-markdown'

// If CDN is enabled, you don't need to manually import katex.min.css

import 'katex/dist/katex.min.css'

import 'vue-stream-markdown/index.css'

// If you don't have shadcn CSS variables globally, import the theme

import 'vue-stream-markdown/theme.css'

const content = ref('# Hello World\n\nThis is a markdown content.')

</script>

<template>

<Markdown :content="content" />

</template>This project is inspired by streamdown and even uses some source code from it.

This project also uses and benefits from:

- mdast - Markdown Abstract Syntax Tree format

- Shiki - Beautiful syntax highlighting

- Mermaid - Diagramming and charting tool

- beautiful-mermaid - Beautiful Mermaid diagram renderer with Shiki integration

- KaTeX - Fast math typesetting library for the web

- Remend - This project implements similar functionality inspired by remend for intelligently parsing and completing incomplete Markdown blocks.

- markstream-vue - The original inspiration for learning AST-based custom markdown rendering, and the source of the animation implementation used in this project

- ast-explorer - Learned AST knowledge from this project, and the playground layout inspiration and AST syntax tree filtering code are derived from it

- medium-zoom - Inspired the custom image zoom implementation

-

markdown-sanitizers - URL validation and security hardening logic in

src/utils/harden.tsis ported fromrehype-harden -

Dify - LaTeX preprocessing logic in

src/preprocess/vendored/markdown-utils.tsis ported from Dify

I would like to express my sincere gratitude to those who provided guidance and support during the project selection phase and promotion phase of this project. Without their encouragement and support, I would not have been able to complete this work. In particular, the streamdown community provided excellent code guidance and even helped fix several issues.

The playground supports generating shareable links and provides streaming controls (forward/backward navigation) for debugging streaming rendering issues.

If you encounter any problems, please:

- Use the Generate Share Links button in the playground to create a shareable link with your current content

- Enable the AST Result toggle to view the parsed AST syntax tree

- Copy the markdown content and AST syntax tree at the time of the issue

Please provide the shareable link, markdown content, and AST syntax tree when creating an issue. This will help me reproduce and diagnose the problem more effectively.

MIT License © jinghaihan

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for vue-stream-markdown

Similar Open Source Tools

vue-stream-markdown

A markdown renderer optimized for streaming scenarios, providing smoother rendering through syntax inference and customizable elements. Features include streaming-optimized rendering, incremental rendering, progressive Mermaid rendering, streaming LaTeX rendering, interactive controls, full customization, theme-aware styles, built-in typography, content hardening & security, and SSR support. Inspired by streamdown, leveraging mdast, Shiki, Mermaid, beautiful-mermaid, KaTeX, and Remend. Acknowledges markstream-vue, ast-explorer, medium-zoom, markdown-sanitizers, and Dify. Troubleshooting support for debugging streaming rendering issues.

Code-Atlas

Code Atlas is a lightweight interpreter developed in C++ that supports the execution of multi-language code snippets and partial Markdown rendering. It consumes significantly lower resources compared to similar tools, making it suitable for resource-limited devices. It leverages llama.cpp for local large-model inference and supports cloud-based large-model APIs. The tool provides features for code execution, Markdown rendering, local AI inference, and resource efficiency.

midscene

Midscene.js is an AI-powered automation SDK that allows users to control web pages, perform assertions, and extract data in JSON format using natural language. It offers features such as natural language interaction, understanding UI and providing responses in JSON, intuitive assertion based on AI understanding, compatibility with public multimodal LLMs like GPT-4o, visualization tool for easy debugging, and a brand new experience in automation development.

agent-zero

Agent Zero is a personal, organic agentic framework designed to be dynamic, transparent, customizable, and interactive. It uses the computer as a tool to accomplish tasks, with features like general-purpose assistant, computer as a tool, multi-agent cooperation, customizable and extensible framework, and communication skills. The tool is fully Dockerized, with Speech-to-Text and TTS capabilities, and offers real-world use cases like financial analysis, Excel automation, API integration, server monitoring, and project isolation. Agent Zero can be dangerous if not used properly and is prompt-based, guided by the prompts folder. The tool is extensively documented and has a changelog highlighting various updates and improvements.

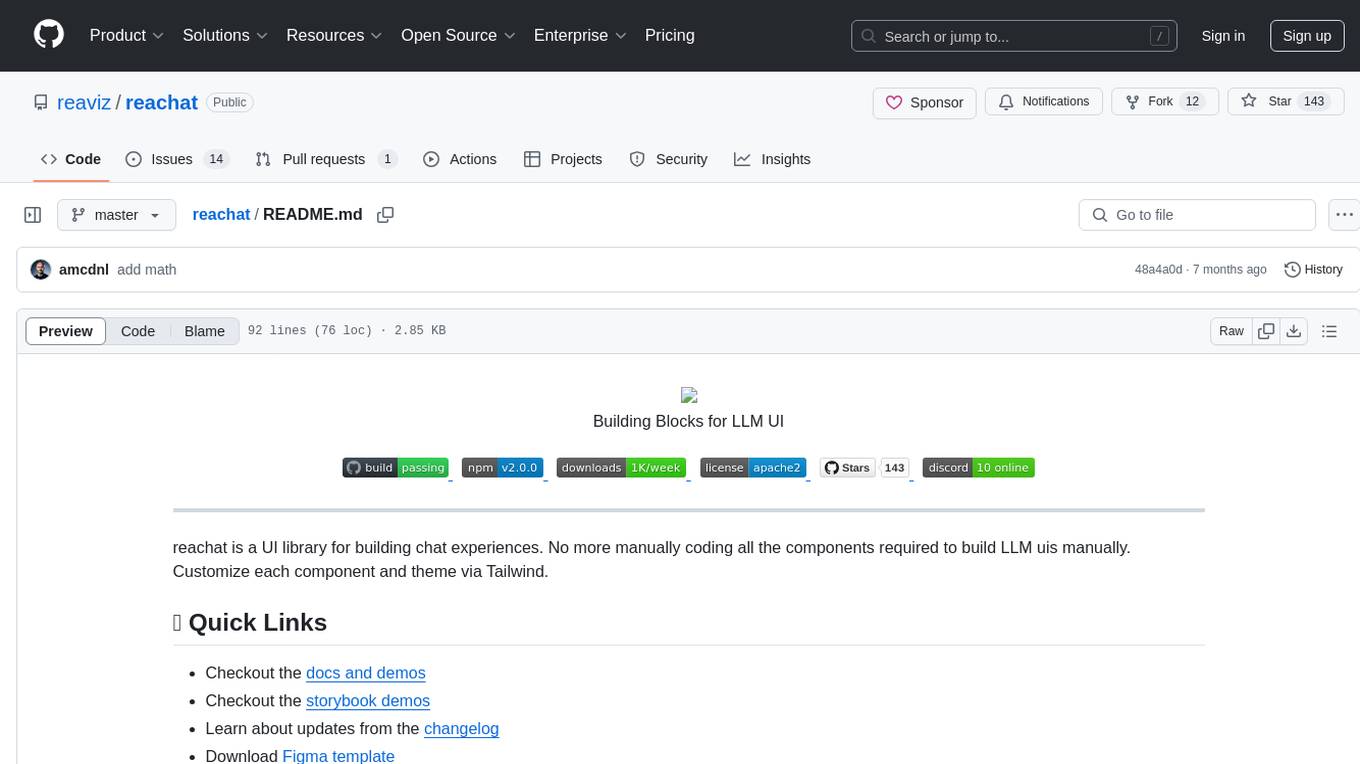

reachat

Reachat is a UI library designed for building chat experiences without the need for manual coding of components. Users can customize each component and theme using Tailwind. The library offers features such as console and companion modes, markdown rendering, code highlighting, tables, JSON support, math rendering, YouTube embeds, file uploads, message sources, animations, conversation pagination, keyboard shortcuts, responsive design, and more. Reachat is highly customizable and suitable for creating interactive chat interfaces.

chatnio

Chat Nio is a next-generation AIGC one-stop business solution that combines the advantages of frontend-oriented lightweight deployment projects with powerful API distribution systems. It offers rich model support, beautiful UI design, complete Markdown support, multi-theme support, internationalization support, text-to-image support, powerful conversation sync, model market & preset system, rich file parsing, full model internet search, Progressive Web App (PWA) support, comprehensive backend management, multiple billing methods, innovative model caching, and additional features. The project aims to address limitations in conversation synchronization, billing, file parsing, conversation URL sharing, channel management, and API call support found in existing AIGC commercial sites, while also providing a user-friendly interface design and C-end features.

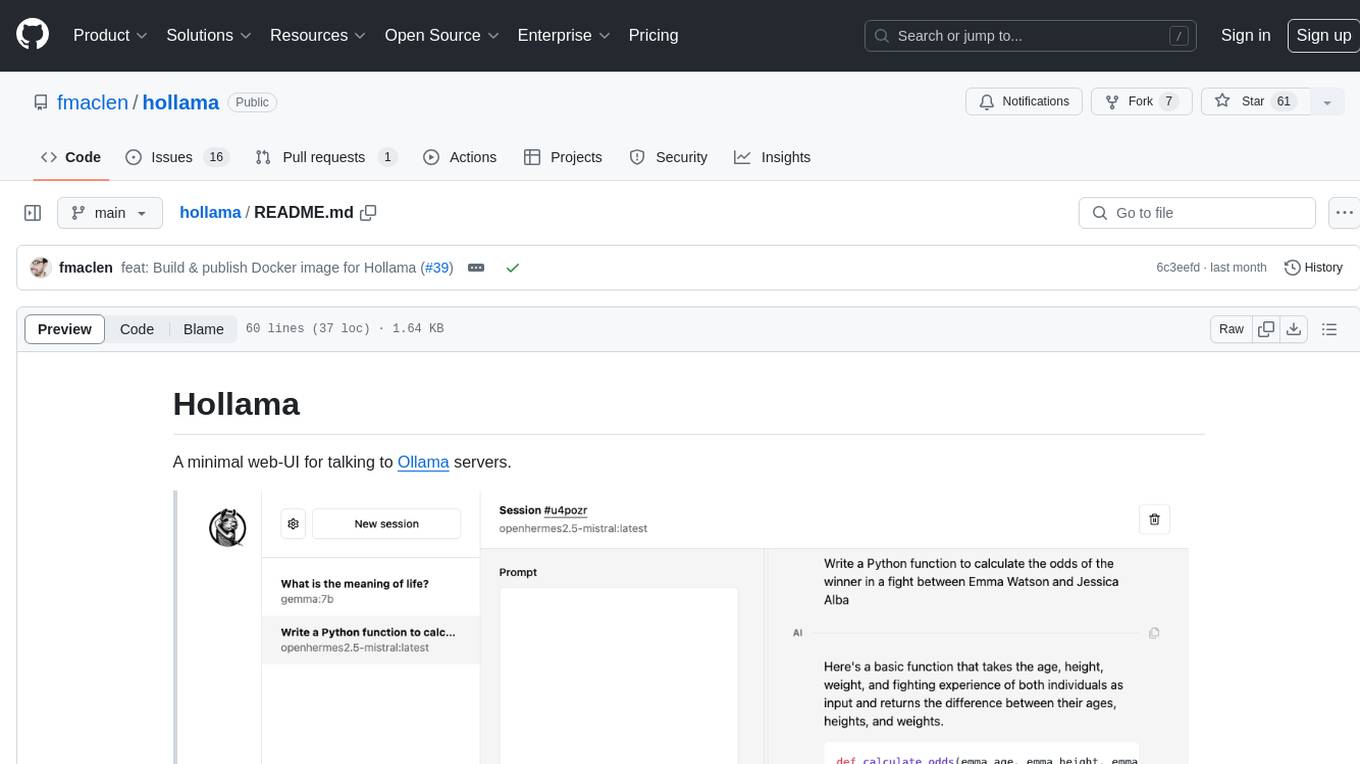

hollama

Hollama is a minimal web-UI tool designed for interacting with Ollama servers. It features large prompt fields, streams completions, ability to copy completions as raw text, Markdown parsing with syntax highlighting, and saves sessions/context in the browser's localStorage. Users can access the latest version of Hollama at https://hollama.fernando.is without sign up, and data is stored locally on the browser. The tool can also be run as a Docker image by executing a specific command. Developers can connect to an Ollama server by updating the ORIGIN settings. Hollama facilitates easy development by providing instructions to set up the environment, install dependencies, and start a development server. Building a production version of the app is straightforward with a single command, and deployment may require installing an adapter for the target environment.

ai-flow

AI Flow is an open-source, user-friendly UI application that empowers you to seamlessly connect multiple AI models together, specifically leveraging the capabilities of multiples AI APIs such as OpenAI, StabilityAI and Replicate. In a nutshell, AI Flow provides a visual platform for crafting and managing AI-driven workflows, thereby facilitating diverse and dynamic AI interactions.

llm-workflow-engine

LLM Workflow Engine (LWE) is a powerful command-line interface (CLI) and workflow manager for large language models (LLMs) like ChatGPT and GPT4. It allows users to interact with LLMs directly from their terminal, making it easy to automate tasks and build complex workflows. LWE supports the official ChatGPT API, providing access to all supported models through your OpenAI account. Additionally, it features a simple plugin architecture that enables users to extend its functionality and integrate with other LLMs. LWE also offers a Python API for integrating LLM capabilities into Python scripts. Notable projects built using the original ChatGPT Wrapper, which LWE evolved from, include bookast, ChatGPT.el, ChatGPT Reddit Bot, Smarty GPT, ChatGPTify, and selection-to-chatgpt.

LLM-Zero-to-Hundred

LLM-Zero-to-Hundred is a repository showcasing various applications of LLM chatbots and providing insights into training and fine-tuning Language Models. It includes projects like WebGPT, RAG-GPT, WebRAGQuery, LLM Full Finetuning, RAG-Master LLamaindex vs Langchain, open-source-RAG-GEMMA, and HUMAIN: Advanced Multimodal, Multitask Chatbot. The projects cover features like ChatGPT-like interaction, RAG capabilities, image generation and understanding, DuckDuckGo integration, summarization, text and voice interaction, and memory access. Tutorials include LLM Function Calling and Visualizing Text Vectorization. The projects have a general structure with folders for README, HELPER, .env, configs, data, src, images, and utils.

Simplifine

Simplifine is an open-source library designed for easy LLM finetuning, enabling users to perform tasks such as supervised fine tuning, question-answer finetuning, contrastive loss for embedding tasks, multi-label classification finetuning, and more. It provides features like WandB logging, in-built evaluation tools, automated finetuning parameters, and state-of-the-art optimization techniques. The library offers bug fixes, new features, and documentation updates in its latest version. Users can install Simplifine via pip or directly from GitHub. The project welcomes contributors and provides comprehensive documentation and support for users.

kestra

Kestra is an open-source event-driven orchestration platform that simplifies building scheduled and event-driven workflows. It offers Infrastructure as Code best practices for data, process, and microservice orchestration, allowing users to create reliable workflows using YAML configuration. Key features include everything as code with Git integration, event-driven and scheduled workflows, rich plugin ecosystem for data extraction and script running, intuitive UI with syntax highlighting, scalability for millions of workflows, version control friendly, and various features for structure and resilience. Kestra ensures declarative orchestration logic management even when workflows are modified via UI, API calls, or other methods.

qapyq

qapyq is an image viewer and AI-assisted editing tool designed to help curate datasets for generative AI models. It offers features such as image viewing, editing, captioning, batch processing, and AI assistance. Users can perform tasks like cropping, scaling, editing masks, tagging, and applying sorting and filtering rules. The tool supports state-of-the-art captioning and masking models, with options for model settings, GPU acceleration, and quantization. qapyq aims to streamline the process of preparing images for training AI models by providing a user-friendly interface and advanced functionalities.

replexica

Replexica is an i18n toolkit for React, to ship multi-language apps fast. It doesn't require extracting text into JSON files, and uses AI-powered API for content processing. It comes in two parts: 1. Replexica Compiler - an open-source compiler plugin for React; 2. Replexica API - an i18n API in the cloud that performs translations using LLMs. (Usage based, has a free tier.) Replexica supports several i18n formats: 1. JSON-free Replexica compiler format; 2. .md files for Markdown content; 3. Legacy JSON and YAML-based formats.

h2ogpt

h2oGPT is an Apache V2 open-source project that allows users to query and summarize documents or chat with local private GPT LLMs. It features a private offline database of any documents (PDFs, Excel, Word, Images, Video Frames, Youtube, Audio, Code, Text, MarkDown, etc.), a persistent database (Chroma, Weaviate, or in-memory FAISS) using accurate embeddings (instructor-large, all-MiniLM-L6-v2, etc.), and efficient use of context using instruct-tuned LLMs (no need for LangChain's few-shot approach). h2oGPT also offers parallel summarization and extraction, reaching an output of 80 tokens per second with the 13B LLaMa2 model, HYDE (Hypothetical Document Embeddings) for enhanced retrieval based upon LLM responses, a variety of models supported (LLaMa2, Mistral, Falcon, Vicuna, WizardLM. With AutoGPTQ, 4-bit/8-bit, LORA, etc.), GPU support from HF and LLaMa.cpp GGML models, and CPU support using HF, LLaMa.cpp, and GPT4ALL models. Additionally, h2oGPT provides Attention Sinks for arbitrarily long generation (LLaMa-2, Mistral, MPT, Pythia, Falcon, etc.), a UI or CLI with streaming of all models, the ability to upload and view documents through the UI (control multiple collaborative or personal collections), Vision Models LLaVa, Claude-3, Gemini-Pro-Vision, GPT-4-Vision, Image Generation Stable Diffusion (sdxl-turbo, sdxl) and PlaygroundAI (playv2), Voice STT using Whisper with streaming audio conversion, Voice TTS using MIT-Licensed Microsoft Speech T5 with multiple voices and Streaming audio conversion, Voice TTS using MPL2-Licensed TTS including Voice Cloning and Streaming audio conversion, AI Assistant Voice Control Mode for hands-free control of h2oGPT chat, Bake-off UI mode against many models at the same time, Easy Download of model artifacts and control over models like LLaMa.cpp through the UI, Authentication in the UI by user/password via Native or Google OAuth, State Preservation in the UI by user/password, Linux, Docker, macOS, and Windows support, Easy Windows Installer for Windows 10 64-bit (CPU/CUDA), Easy macOS Installer for macOS (CPU/M1/M2), Inference Servers support (oLLaMa, HF TGI server, vLLM, Gradio, ExLLaMa, Replicate, OpenAI, Azure OpenAI, Anthropic), OpenAI-compliant, Server Proxy API (h2oGPT acts as drop-in-replacement to OpenAI server), Python client API (to talk to Gradio server), JSON Mode with any model via code block extraction. Also supports MistralAI JSON mode, Claude-3 via function calling with strict Schema, OpenAI via JSON mode, and vLLM via guided_json with strict Schema, Web-Search integration with Chat and Document Q/A, Agents for Search, Document Q/A, Python Code, CSV frames (Experimental, best with OpenAI currently), Evaluate performance using reward models, and Quality maintained with over 1000 unit and integration tests taking over 4 GPU-hours.

TaskingAI

TaskingAI brings Firebase's simplicity to **AI-native app development**. The platform enables the creation of GPTs-like multi-tenant applications using a wide range of LLMs from various providers. It features distinct, modular functions such as Inference, Retrieval, Assistant, and Tool, seamlessly integrated to enhance the development process. TaskingAI’s cohesive design ensures an efficient, intelligent, and user-friendly experience in AI application development.

For similar tasks

vue-stream-markdown

A markdown renderer optimized for streaming scenarios, providing smoother rendering through syntax inference and customizable elements. Features include streaming-optimized rendering, incremental rendering, progressive Mermaid rendering, streaming LaTeX rendering, interactive controls, full customization, theme-aware styles, built-in typography, content hardening & security, and SSR support. Inspired by streamdown, leveraging mdast, Shiki, Mermaid, beautiful-mermaid, KaTeX, and Remend. Acknowledges markstream-vue, ast-explorer, medium-zoom, markdown-sanitizers, and Dify. Troubleshooting support for debugging streaming rendering issues.

pro-chat

ProChat is a components library focused on quickly building large language model chat interfaces. It empowers developers to create rich, dynamic, and intuitive chat interfaces with features like automatic chat caching, streamlined conversations, message editing tools, auto-rendered Markdown, and programmatic controls. The tool also includes design evolution plans such as customized dialogue rendering, enhanced request parameters, personalized error handling, expanded documentation, and atomic component design.

ChatGPT-Telegram-Bot

The ChatGPT Telegram Bot is a powerful Telegram bot that utilizes various GPT models, including GPT3.5, GPT4, GPT4 Turbo, GPT4 Vision, DALL·E 3, Groq Mixtral-8x7b/LLaMA2-70b, and Claude2.1/Claude3 opus/sonnet API. It enables users to engage in efficient conversations and information searches on Telegram. The bot supports multiple AI models, online search with DuckDuckGo and Google, user-friendly interface, efficient message processing, document interaction, Markdown rendering, and convenient deployment options like Zeabur, Replit, and Docker. Users can set environment variables for configuration and deployment. The bot also provides Q&A functionality, supports model switching, and can be deployed in group chats with whitelisting. The project is open source under GPLv3 license.

PureChat

PureChat is a chat application integrated with ChatGPT, featuring efficient application building with Vite5, screenshot generation and copy support for chat records, IM instant messaging SDK for sessions, automatic light and dark mode switching based on system theme, Markdown rendering, code highlighting, and link recognition support, seamless social experience with GitHub quick login, integration of large language models like ChatGPT Ollama for streaming output, preset prompts, and context, Electron desktop app versions for macOS and Windows, ongoing development of more features. Environment setup requires Node.js 18.20+. Clone code with 'git clone https://github.com/Hyk260/PureChat.git', install dependencies with 'pnpm install', start project with 'pnpm dev', and build with 'pnpm build'.

Sidekick

Sidekick is a native LLM application for macOS that allows users to chat with a local language model to retrieve information from files, folders, and websites without the need for additional software installation. It operates offline, ensuring data privacy and security. Sidekick offers features such as resource access, image generation, inline writing assistance, advanced markdown rendering, fast generation speeds, and more. The tool aims to provide a simple and powerful solution for accessing local, private models with context awareness of user files and content on the web.

Code-Atlas

Code Atlas is a lightweight interpreter developed in C++ that supports the execution of multi-language code snippets and partial Markdown rendering. It consumes significantly lower resources compared to similar tools, making it suitable for resource-limited devices. It leverages llama.cpp for local large-model inference and supports cloud-based large-model APIs. The tool provides features for code execution, Markdown rendering, local AI inference, and resource efficiency.

deepchat

DeepChat is a versatile chat tool that supports multiple model cloud services and local model deployment. It offers multi-channel chat concurrency support, platform compatibility, complete Markdown rendering, and easy usability with a comprehensive guide. The tool aims to enhance chat experiences by leveraging various AI models and ensuring efficient conversation management.

reachat

Reachat is a UI library designed for building chat experiences without the need for manual coding of components. Users can customize each component and theme using Tailwind. The library offers features such as console and companion modes, markdown rendering, code highlighting, tables, JSON support, math rendering, YouTube embeds, file uploads, message sources, animations, conversation pagination, keyboard shortcuts, responsive design, and more. Reachat is highly customizable and suitable for creating interactive chat interfaces.

For similar jobs

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

daily-poetry-image

Daily Chinese ancient poetry and AI-generated images powered by Bing DALL-E-3. GitHub Action triggers the process automatically. Poetry is provided by Today's Poem API. The website is built with Astro.

exif-photo-blog

EXIF Photo Blog is a full-stack photo blog application built with Next.js, Vercel, and Postgres. It features built-in authentication, photo upload with EXIF extraction, photo organization by tag, infinite scroll, light/dark mode, automatic OG image generation, a CMD-K menu with photo search, experimental support for AI-generated descriptions, and support for Fujifilm simulations. The application is easy to deploy to Vercel with just a few clicks and can be customized with a variety of environment variables.

SillyTavern

SillyTavern is a user interface you can install on your computer (and Android phones) that allows you to interact with text generation AIs and chat/roleplay with characters you or the community create. SillyTavern is a fork of TavernAI 1.2.8 which is under more active development and has added many major features. At this point, they can be thought of as completely independent programs.

Twitter-Insight-LLM

This project enables you to fetch liked tweets from Twitter (using Selenium), save it to JSON and Excel files, and perform initial data analysis and image captions. This is part of the initial steps for a larger personal project involving Large Language Models (LLMs).

AISuperDomain

Aila Desktop Application is a powerful tool that integrates multiple leading AI models into a single desktop application. It allows users to interact with various AI models simultaneously, providing diverse responses and insights to their inquiries. With its user-friendly interface and customizable features, Aila empowers users to engage with AI seamlessly and efficiently. Whether you're a researcher, student, or professional, Aila can enhance your AI interactions and streamline your workflow.

ChatGPT-On-CS

This project is an intelligent dialogue customer service tool based on a large model, which supports access to platforms such as WeChat, Qianniu, Bilibili, Douyin Enterprise, Douyin, Doudian, Weibo chat, Xiaohongshu professional account operation, Xiaohongshu, Zhihu, etc. You can choose GPT3.5/GPT4.0/ Lazy Treasure Box (more platforms will be supported in the future), which can process text, voice and pictures, and access external resources such as operating systems and the Internet through plug-ins, and support enterprise AI applications customized based on their own knowledge base.

obs-localvocal

LocalVocal is a live-streaming AI assistant plugin for OBS that allows you to transcribe audio speech into text and perform various language processing functions on the text using AI / LLMs (Large Language Models). It's privacy-first, with all data staying on your machine, and requires no GPU, cloud costs, network, or downtime.