qapyq

An image viewer and AI-assisted editing/captioning/masking tool that helps with curating datasets for generative AI models, finetunes and LoRA.

Stars: 134

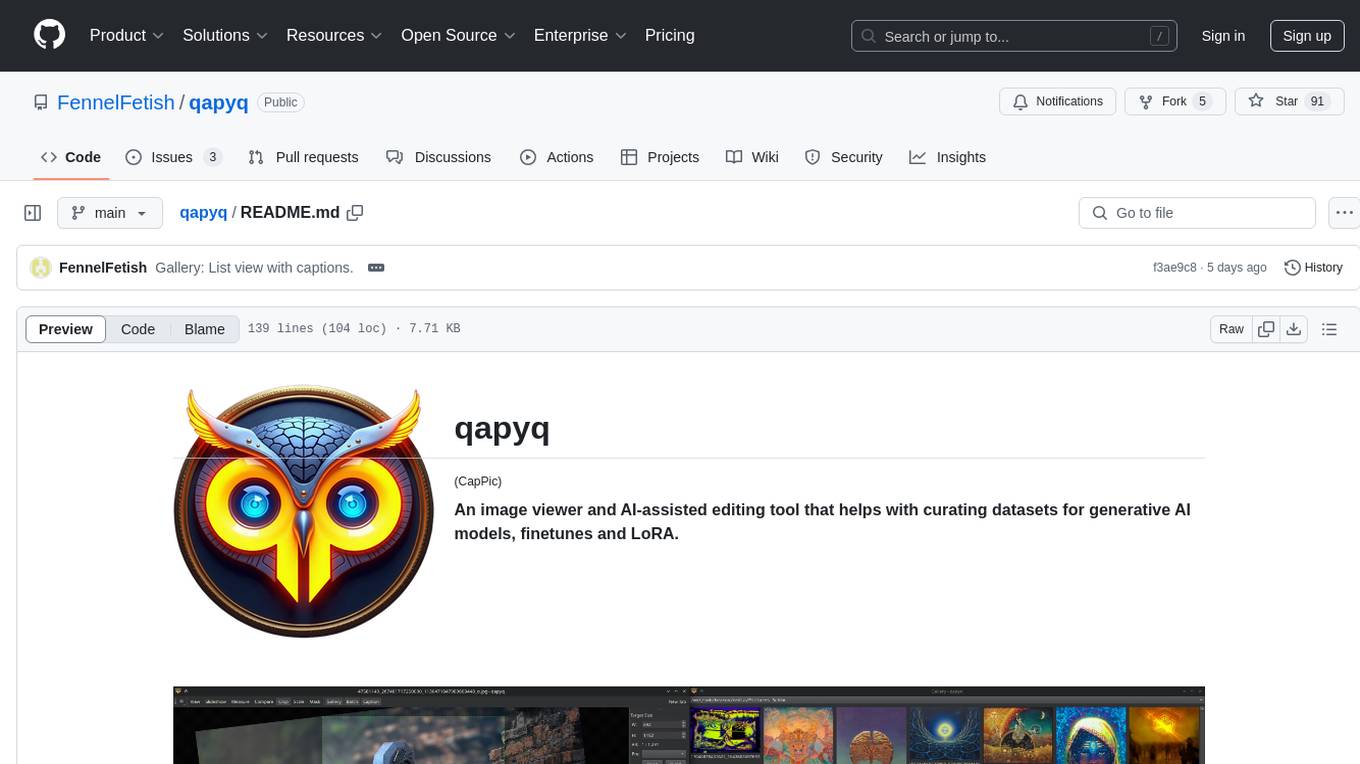

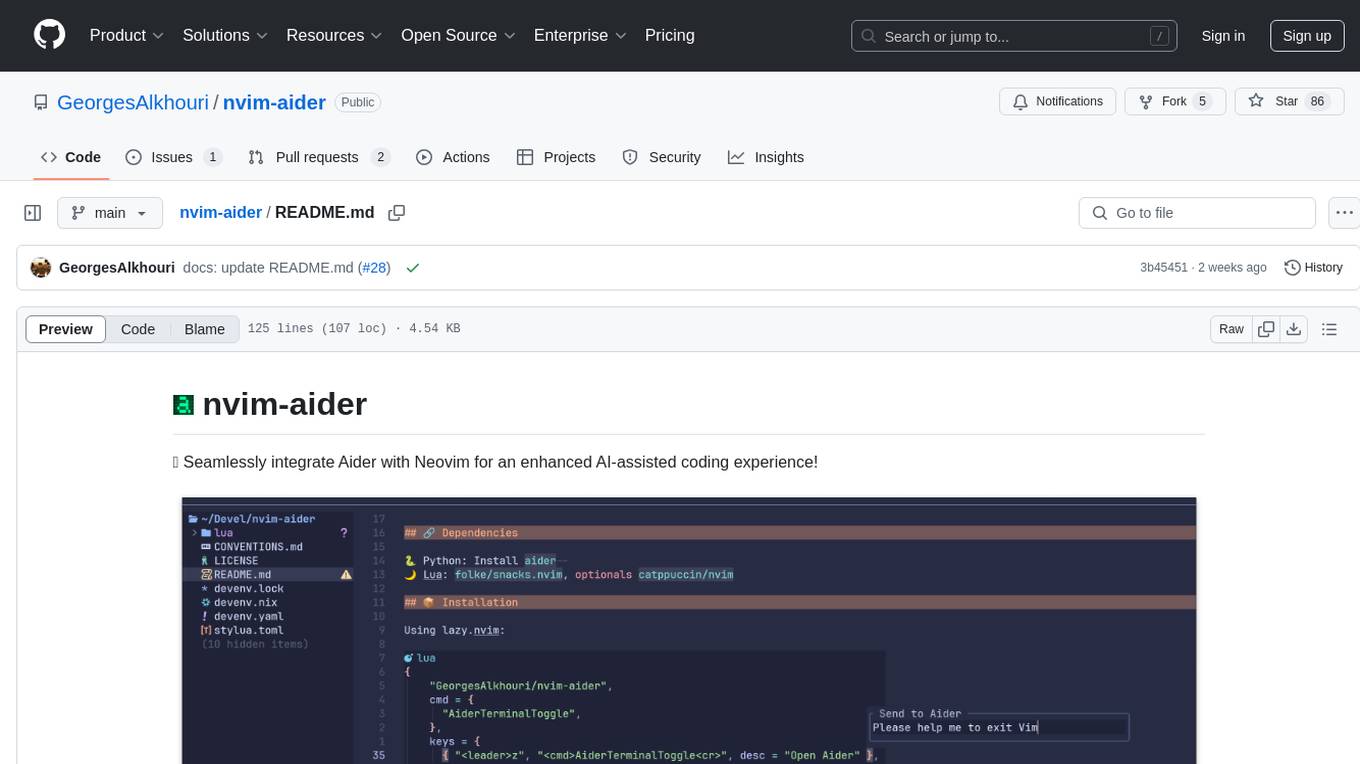

qapyq is an image viewer and AI-assisted editing tool designed to help curate datasets for generative AI models. It offers features such as image viewing, editing, captioning, batch processing, and AI assistance. Users can perform tasks like cropping, scaling, editing masks, tagging, and applying sorting and filtering rules. The tool supports state-of-the-art captioning and masking models, with options for model settings, GPU acceleration, and quantization. qapyq aims to streamline the process of preparing images for training AI models by providing a user-friendly interface and advanced functionalities.

README:

qapyq (CapPic)

An image viewer and AI-assisted editing tool that helps with curating datasets for generative AI models, finetunes and LoRA.

Image Viewer: Display and navigate images

- Quick-starting desktop application built with Qt

- Runs smoothly with tens of thousands of images

- Modular interface that lets you place windows on different monitors

- Open multiple tabs

- Zoom/pan and fullscreen mode

- Gallery with thumbnails and optionally captions

- Semantic image sorting with text prompts

- Compare two images

- Measure size, area and pixel distances

- Slideshow

Image/Mask Editor: Prepare images for training

- Crop and save parts of images

- Scale images, optionally using AI upscale models

- Dynamic save paths with template variables

- Manually edit masks with multiple layers

- Support for pressure-sensitive drawing pens

- Record masking operations into macros

- Automated masking
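As an illustration of the "dynamic save paths with template variables" idea, here is a minimal sketch using Python's standard `string.Template`. The variable names (`folder`, `name`, `ext`, `w`, `h`) and the `build_save_path` helper are hypothetical and chosen for this example only; qapyq's actual template syntax and variables are described in its Wiki and may differ.

```python
from pathlib import Path
from string import Template


def build_save_path(template: str, image_path: Path, width: int, height: int) -> Path:
    """Expand a save-path template for one image (variable names are hypothetical)."""
    values = {
        "folder": str(image_path.parent),
        "name": image_path.stem,
        "ext": image_path.suffix.lstrip("."),
        "w": width,
        "h": height,
    }
    # substitute() raises KeyError for unknown variables, so typos surface early.
    return Path(Template(template).substitute(values))


# Example: store a 512x768 crop next to the source image in a "crops" subfolder.
print(build_save_path("${folder}/crops/${name}_${w}x${h}.${ext}", Path("data/cat.png"), 512, 768))
```

Deriving the output path from the source path and the result size keeps batch outputs from overwriting each other, which is presumably the motivation for templated paths.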

Captioning: Describe images with text

- Edit captions manually with drag-and-drop support

- Multi-Edit Mode for editing captions of multiple images simultaneously

- Focus Mode where one key stroke adds a tag, saves the file and skips to the next image

- Tag grouping, merging, sorting, filtering and replacement rules

- Colored text highlighting

- CLIP Token Counter

- Automated captioning with support for grounding

- Prompt presets

- Multi-turn conversations with each answer saved to different entries in a .json file

- Further refinement with LLMs

Stats/Filters: Summarize your data and get an overview

- List all tags, image resolutions, masked regions, or size of concept folders

- Filter images and create subsets

- Combine and chain filters

- Export the summaries as CSV
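To make the tag summary and CSV export concrete, the following sketch counts how many captions contain each tag and writes the result as CSV. It assumes comma-separated tag captions; the function names and the `tag,count` column layout are assumptions for illustration, not qapyq's actual export format.

```python
import csv
import io
from collections import Counter


def count_tags(captions: list[str], separator: str = ",") -> Counter:
    """Count the number of captions in which each tag appears."""
    counts = Counter()
    for caption in captions:
        # A set ensures a tag repeated within one caption is counted once.
        tags = {tag.strip() for tag in caption.split(separator)}
        counts.update(tag for tag in tags if tag)
    return counts


def tags_to_csv(counts: Counter) -> str:
    """Render tag counts as CSV text, most frequent tags first."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["tag", "count"])
    # Sort by descending count, then alphabetically for a stable order.
    for tag, count in sorted(counts.items(), key=lambda item: (-item[1], item[0])):
        writer.writerow([tag, count])
    return buffer.getvalue()


captions = ["cat, outdoors, grass", "cat, indoors", "dog, outdoors"]
print(tags_to_csv(count_tags(captions)))
```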

Batch Processing: Process whole folders at once

- Flexible batch captioning, tagging and transformation

- Batch scaling of images

- Batch masking with user-defined macros

- Batch cropping of images using your macros

- Copy and move files, create symlinks, ZIP captions for backups
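The "ZIP captions for backups" step can be approximated outside the application with the standard library. This is a sketch under assumptions: it treats `.txt` and `.json` files as caption files, and `zip_captions` is a hypothetical helper, not part of qapyq.

```python
import tempfile
import zipfile
from pathlib import Path


def zip_captions(folder: Path, archive: Path, suffixes=(".txt", ".json")) -> int:
    """Add all caption files below `folder` to `archive`; return how many were added."""
    count = 0
    with zipfile.ZipFile(archive, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for path in sorted(folder.rglob("*")):
            if path.is_file() and path.suffix.lower() in suffixes:
                # Store paths relative to the dataset folder so the backup is relocatable.
                zf.write(path, arcname=path.relative_to(folder).as_posix())
                count += 1
    return count


# Demo on a throwaway folder containing one image and two caption files.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "a.png").write_bytes(b"")
    (root / "a.txt").write_text("cat, outdoors")
    (root / "sub").mkdir()
    (root / "sub" / "b.json").write_text("{}")
    archive = root / "captions-backup.zip"
    added = zip_captions(root, archive)
    with zipfile.ZipFile(archive) as zf:
        names = sorted(zf.namelist())

print(added, names)
```

Images are skipped on purpose: captions are small and change often, so backing them up separately is cheap.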

AI Assistance:

- Support for state-of-the-art captioning and masking models

- Model and sampling settings, GPU acceleration with CPU offload support

- On-the-fly NF4 and INT8 quantization

- Run inference locally and/or on multiple remote machines over SSH

- Separate inference subprocess isolates potential crashes and allows complete VRAM cleanup

These are the supported architectures with links to the original models.

Find more specialized finetuned models on huggingface.co.

Tagging: Generate keyword captions for images.

Captioning: Generate complete-sentence captions for images.

- Florence-2

- Gemma3 (GGUF)

- InternVL2, InternVL2.5, InternVL2.5-MPO, InternVL3

- JoyCaption

- MiniCPM-V-2.6 (GGUF), MiniCPM-o-2.6 (GGUF), MiniCPM-V-4 (GGUF)

- Molmo

- Moondream2 (GGUF)

- Ovis-1.6, Ovis2

- Qwen2-VL

- Qwen2.5-VL (needs transformers 4.49 manually installed)

LLM: Transform existing captions/tags.

- Models in GGUF format with embedded chat template (llama-cpp backend).

Upscaling: Resize images to higher resolutions.

- Model architectures supported by the spandrel backend.

- Find more models at openmodeldb.info.

Masking: Generate greyscale masks.

- Box Detection

  - YOLO/Adetailer detection models

    - Search for YOLO models on huggingface.co.

  - Florence-2

  - Qwen2.5-VL

- Segmentation / Background Removal

  - InSPyReNet (Plus_Ultra)

  - RMBG-2.0

  - Florence-2

Embedding: Sort images by their similarity to a prompt.

- CLIP

- SigLIP

- SigLIP (ONNX), SigLIP2-giant-opt (ONNX) (recommended: largest text model + fp16 vision model)
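Sorting images by similarity to a prompt generally means embedding the prompt and every image into the same vector space and ranking by a similarity score. The sketch below uses cosine similarity on hand-written toy vectors; in practice the vectors would come from a CLIP or SigLIP model. The function names are hypothetical, and how qapyq actually scores images is not stated here.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either has zero length)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def rank_by_prompt(prompt_embedding: list[float], image_embeddings: dict[str, list[float]]):
    """Return (filename, score) pairs, most similar to the prompt first."""
    scored = [
        (name, cosine_similarity(prompt_embedding, embedding))
        for name, embedding in image_embeddings.items()
    ]
    return sorted(scored, key=lambda item: item[1], reverse=True)


# Toy 3-dimensional embeddings standing in for real model output.
prompt = [1.0, 0.0, 0.0]
images = {
    "forest.jpg": [0.1, 0.9, 0.2],
    "beach.jpg": [0.9, 0.1, 0.0],
    "city.jpg": [0.5, 0.5, 0.0],
}
ranking = rank_by_prompt(prompt, images)
print([name for name, _ in ranking])
```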

Requires Python 3.10 or later.

By default, prebuilt packages for CUDA 12.4 are installed. If you need a different CUDA version, change the index URL in requirements-pytorch.txt and requirements-llamacpp.txt before running the setup script.

- Git clone or download this repository.

- Run setup.sh on Linux, setup.bat on Windows.

  - This will create a virtual environment that needs 7-9 GB.

If the setup scripts didn't work for you, but you manually got it running, please share your solution and raise an issue.

- Linux: run.sh

- Windows: run.bat or run-console.bat

You can open files or folders directly in qapyq by associating the file types with the respective run script in your OS.

For shortcuts, icons are available in the qapyq/res folder.

If git was used to clone the repository, simply use git pull to update.

If the repository was downloaded as a zip archive, download it again and replace the installed files.

New dependencies may be added. If the program fails to start or crashes, run the setup script again to install the missing packages.

More information is available in the Wiki.

Use the page index on the right side to find topics and navigate the Wiki.

How to setup and configure AI models: Model Setup

How to use qapyq: User Guide

How to caption with qapyq: Captioning

How to use qapyq's features in a workflow: Tips and Workflows

If you have questions, please ask in the Discussions.

- [x] Natural sorting of files

- [x] Gallery list view with captions

- [x] Summary and stats of captions and tags

- [ ] Shortcuts and improved ease-of-use

- [x] AI-assisted mask editing

- [ ] Overlays (difference image) for comparison tool

- [x] Image resizing

- [x] Run inference on remote machines

- [ ] Adapt new captioning and masking models

- [ ] Possibly a plugin system for new tools

- [ ] Integration with ComfyUI

- [ ] Docs, Screenshots, Video Guides

Alternative AI tools for qapyq

Similar Open Source Tools

lemonai

LemonAI is a versatile machine learning library designed to simplify the process of building and deploying AI models. It provides a wide range of tools and algorithms for data preprocessing, model training, and evaluation. With LemonAI, users can easily experiment with different machine learning techniques and optimize their models for various tasks. The library is well-documented and beginner-friendly, making it suitable for both novice and experienced data scientists. LemonAI aims to streamline the development of AI applications and empower users to create innovative solutions using state-of-the-art machine learning methods.

GEN-AI

GEN-AI is a versatile Python library for implementing various artificial intelligence algorithms and models. It provides a wide range of tools and functionalities to support machine learning, deep learning, natural language processing, computer vision, and reinforcement learning tasks. With GEN-AI, users can easily build, train, and deploy AI models for diverse applications such as image recognition, text classification, sentiment analysis, object detection, and game playing. The library is designed to be user-friendly, efficient, and scalable, making it suitable for both beginners and experienced AI practitioners.

amazon-sagemaker-generativeai

Repository for training and deploying Generative AI models, including text-text, text-to-image generation, prompt engineering playground and chain of thought examples using SageMaker Studio. The tool provides a platform for users to experiment with generative AI techniques, enabling them to create text and image outputs based on input data. It offers a range of functionalities for training and deploying models, as well as exploring different generative AI applications.

open-ai

Open AI is a powerful tool for artificial intelligence research and development. It provides a wide range of machine learning models and algorithms, making it easier for developers to create innovative AI applications. With Open AI, users can explore cutting-edge technologies such as natural language processing, computer vision, and reinforcement learning. The platform offers a user-friendly interface and comprehensive documentation to support users in building and deploying AI solutions. Whether you are a beginner or an experienced AI practitioner, Open AI offers the tools and resources you need to accelerate your AI projects and stay ahead in the rapidly evolving field of artificial intelligence.

simple-ai

Simple AI is a lightweight Python library for implementing basic artificial intelligence algorithms. It provides easy-to-use functions and classes for tasks such as machine learning, natural language processing, and computer vision. With Simple AI, users can quickly prototype and deploy AI solutions without the complexity of larger frameworks.

open-webui-tools

Open WebUI Tools Collection is a set of tools for structured planning, arXiv paper search, Hugging Face text-to-image generation, prompt enhancement, and multi-model conversations. It enhances LLM interactions with academic research, image generation, and conversation management. Tools include arXiv Search Tool and Hugging Face Image Generator. Function Pipes like Planner Agent offer autonomous plan generation and execution. Filters like Prompt Enhancer improve prompt quality. Installation and configuration instructions are provided for each tool and pipe.

ml-retreat

ML-Retreat is a comprehensive machine learning library designed to simplify and streamline the process of building and deploying machine learning models. It provides a wide range of tools and utilities for data preprocessing, model training, evaluation, and deployment. With ML-Retreat, users can easily experiment with different algorithms, hyperparameters, and feature engineering techniques to optimize their models. The library is built with a focus on scalability, performance, and ease of use, making it suitable for both beginners and experienced machine learning practitioners.

jadx-mcp-server

JADX-MCP-SERVER is a standalone Python server that interacts with JADX-AI-MCP Plugin to analyze Android APKs using LLMs like Claude. It enables live communication with decompiled Android app context, uncovering vulnerabilities, parsing manifests, and facilitating reverse engineering effortlessly. The tool combines JADX-AI-MCP and JADX MCP SERVER to provide real-time reverse engineering support with LLMs, offering features like quick analysis, vulnerability detection, AI code modification, static analysis, and reverse engineering helpers. It supports various MCP tools for fetching class information, text, methods, fields, smali code, AndroidManifest.xml content, strings.xml file, resource files, and more. Tested on Claude Desktop, it aims to support other LLMs in the future, enhancing Android reverse engineering and APK modification tools connectivity for easier reverse engineering purely from vibes.

jadx-ai-mcp

JADX-AI-MCP is a plugin for the JADX decompiler that integrates with Model Context Protocol (MCP) to provide live reverse engineering support with LLMs like Claude. It allows for quick analysis, vulnerability detection, and AI code modification, all in real time. The tool combines JADX-AI-MCP and JADX MCP SERVER to analyze Android APKs effortlessly. It offers various prompts for code understanding, vulnerability detection, reverse engineering helpers, static analysis, AI code modification, and documentation. The tool is part of the Zin MCP Suite and aims to connect all android reverse engineering and APK modification tools with a single MCP server for easy reverse engineering of APK files.

koog

Koog is a Kotlin-based framework for building and running AI agents entirely in idiomatic Kotlin. It allows users to create agents that interact with tools, handle complex workflows, and communicate with users. Key features include pure Kotlin implementation, MCP integration, embedding capabilities, custom tool creation, ready-to-use components, intelligent history compression, powerful streaming API, persistent agent memory, comprehensive tracing, flexible graph workflows, modular feature system, scalable architecture, and multiplatform support.

AIaW

AIaW is a next-generation LLM client with full functionality, lightweight, and extensible. It supports various basic functions such as streaming transfer, image uploading, and latex formulas. The tool is cross-platform with a responsive interface design. It supports multiple service providers like OpenAI, Anthropic, and Google. Users can modify questions, regenerate in a forked manner, and visualize conversations in a tree structure. Additionally, it offers features like file parsing, video parsing, plugin system, assistant market, local storage with real-time cloud sync, and customizable interface themes. Users can create multiple workspaces, use dynamic prompt word variables, extend plugins, and benefit from detailed design elements like real-time content preview, optimized code pasting, and support for various file types.

NanoBanana-AI-Pose-Transfer

NanoBanana-AI-Pose-Transfer is a lightweight tool for transferring poses between images using artificial intelligence. It leverages advanced AI algorithms to accurately map and transfer poses from a source image to a target image. This tool is designed to be user-friendly and efficient, allowing users to easily manipulate and transfer poses for various applications such as image editing, animation, and virtual reality. With NanoBanana-AI-Pose-Transfer, users can seamlessly transfer poses between images with high precision and quality.

traceroot

TraceRoot is a tool that helps engineers debug production issues 10× faster using AI-powered analysis of traces, logs, and code context. It accelerates the debugging process with AI-powered insights, integrates seamlessly into the development workflow, provides real-time trace and log analysis, code context understanding, and intelligent assistance. Features include ease of use, LLM flexibility, distributed services, AI debugging interface, and integration support. Users can get started with TraceRoot Cloud for a 7-day trial or self-host the tool. SDKs are available for Python and JavaScript/TypeScript.

nvim-aider

Nvim-aider is a plugin for Neovim that provides additional functionality and key mappings to enhance the user's editing experience. It offers features such as code navigation, quick access to commonly used commands, and improved text manipulation tools. With Nvim-aider, users can streamline their workflow and increase productivity while working with Neovim.

InvokeAI

InvokeAI is a leading creative engine built to empower professionals and enthusiasts alike. Generate and create stunning visual media using the latest AI-driven technologies. InvokeAI offers an industry leading Web Interface, interactive Command Line Interface, and also serves as the foundation for multiple commercial products.

For similar tasks

ComfyUI-BlenderAI-node

ComfyUI-BlenderAI-node is an addon for Blender that allows users to convert ComfyUI nodes into Blender nodes seamlessly. It offers features such as converting nodes, editing launch arguments, drawing masks with Grease pencil, and more. Users can queue batch processing, use node tree presets, and model preview images. The addon enables users to input or replace 3D models in Blender and output controlnet images using composite. It provides a workflow showcase with presets for camera input, AI-generated mesh import, composite depth channel, character bone editing, and more.

Pallaidium

Pallaidium is a generative AI movie studio integrated into the Blender video editor. It allows users to AI-generate video, image, and audio from text prompts or existing media files. The tool provides various features such as text to video, text to audio, text to speech, text to image, image to image, image to video, video to video, image to text, and more. It requires a Windows system with a CUDA-supported Nvidia card and at least 6 GB VRAM. Pallaidium offers batch processing capabilities, text to audio conversion using Bark, and various performance optimization tips. Users can install the tool by downloading the add-on and following the installation instructions provided. The tool comes with a set of restrictions on usage, prohibiting the generation of harmful, pornographic, violent, or false content.

xaitk-saliency

The `xaitk-saliency` package is an open source Explainable AI (XAI) framework for visual saliency algorithm interfaces and implementations, designed for analytics and autonomy applications. It provides saliency algorithms for various image understanding tasks such as image classification, image similarity, object detection, and reinforcement learning. The toolkit targets data scientists and developers who aim to incorporate visual saliency explanations into their workflow or product, offering both direct accessibility for experimentation and modular integration into systems and applications through Strategy and Adapter patterns. The package includes documentation, examples, and a demonstration tool for visual saliency generation in a user-interface.

generative-ai

This repository contains codes related to Generative AI as per YouTube video. It includes various notebooks and files for different days covering topics like map reduce, text to SQL, LLM parameters, tagging, and Kaggle competition. The repository also includes resources like PDF files and databases for different projects related to Generative AI.

home-gallery

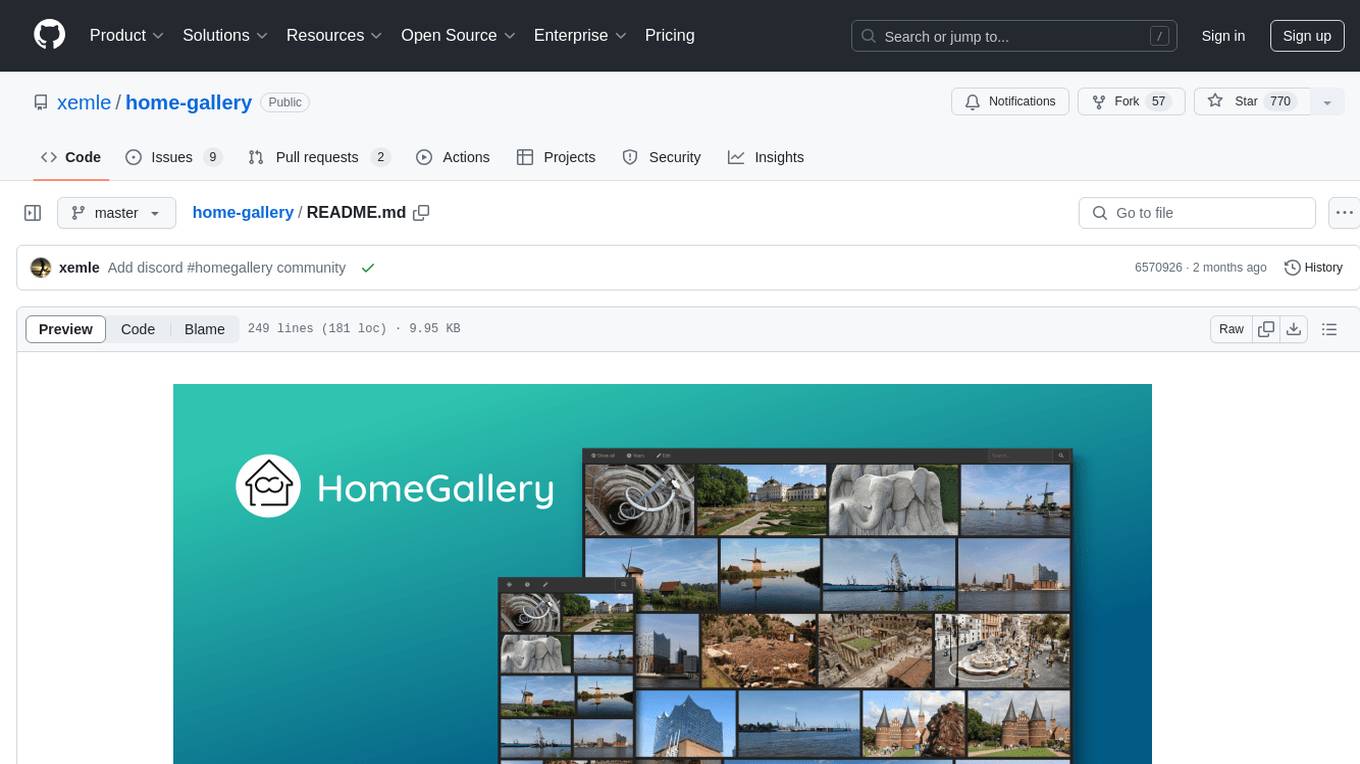

Home-Gallery.org is a self-hosted open-source web gallery for browsing personal photos and videos with tagging, mobile-friendly interface, and AI-powered image and face discovery. It aims to provide a fast user experience on mobile phones and help users browse and rediscover memories from their media archive. The tool allows users to serve their local data without relying on cloud services, view photos and videos from mobile phones, and manage images from multiple media source directories. Features include endless photo stream, video transcoding, reverse image lookup, face detection, GEO location reverse lookups, tagging, and more. The tool runs on NodeJS and supports various platforms like Linux, Mac, and Windows.

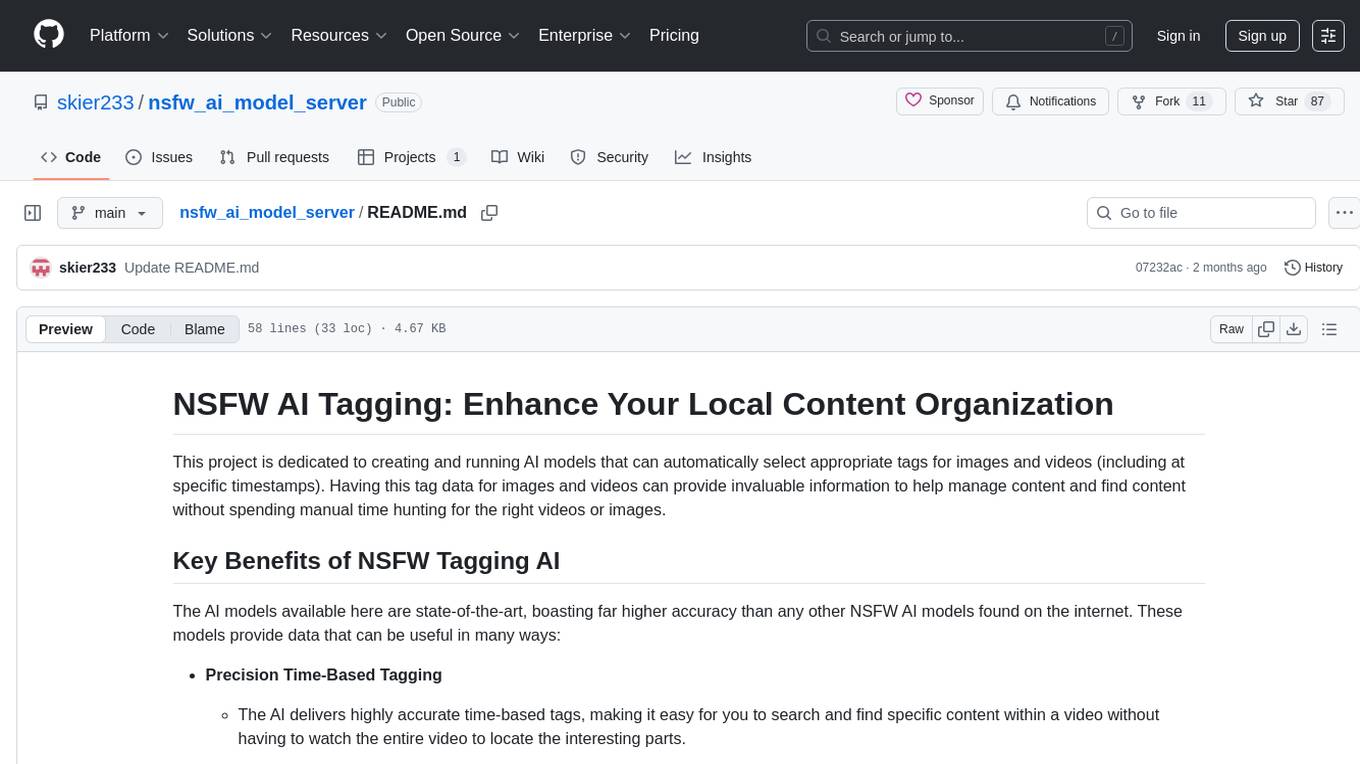

nsfw_ai_model_server

This project is dedicated to creating and running AI models that can automatically select appropriate tags for images and videos, providing invaluable information to help manage content and find content without manual effort. The AI models deliver highly accurate time-based tags, enhance searchability, improve content management, and offer future content recommendations. The project offers a free open source AI model supporting 10 tags and several paid Patreon models with 151 tags and additional variations for different tradeoffs between accuracy and speed. The project has limitations related to usage restrictions, hardware requirements, performance on CPU, complexity, and model access.

For similar jobs

weave

Weave is a toolkit for developing Generative AI applications, built by Weights & Biases. With Weave, you can log and debug language model inputs, outputs, and traces; build rigorous, apples-to-apples evaluations for language model use cases; and organize all the information generated across the LLM workflow, from experimentation to evaluations to production. Weave aims to bring rigor, best-practices, and composability to the inherently experimental process of developing Generative AI software, without introducing cognitive overhead.

LLMStack

LLMStack is a no-code platform for building generative AI agents, workflows, and chatbots. It allows users to connect their own data, internal tools, and GPT-powered models without any coding experience. LLMStack can be deployed to the cloud or on-premise and can be accessed via HTTP API or triggered from Slack or Discord.

VisionCraft

The VisionCraft API is a free API for using over 100 different AI models. From images to sound.

kaito

Kaito is an operator that automates the AI/ML inference model deployment in a Kubernetes cluster. It manages large model files using container images, avoids tuning deployment parameters to fit GPU hardware by providing preset configurations, auto-provisions GPU nodes based on model requirements, and hosts large model images in the public Microsoft Container Registry (MCR) if the license allows. Using Kaito, the workflow of onboarding large AI inference models in Kubernetes is largely simplified.

PyRIT

PyRIT is an open access automation framework designed to empower security professionals and ML engineers to red team foundation models and their applications. It automates AI Red Teaming tasks to allow operators to focus on more complicated and time-consuming tasks and can also identify security harms such as misuse (e.g., malware generation, jailbreaking), and privacy harms (e.g., identity theft). The goal is to allow researchers to have a baseline of how well their model and entire inference pipeline is doing against different harm categories and to be able to compare that baseline to future iterations of their model. This allows them to have empirical data on how well their model is doing today, and detect any degradation of performance based on future improvements.

tabby

Tabby is a self-hosted AI coding assistant, offering an open-source and on-premises alternative to GitHub Copilot. Its key features: it is self-contained, with no need for a DBMS or cloud service; it exposes an OpenAPI interface that is easy to integrate with existing infrastructure (e.g. a Cloud IDE); and it supports consumer-grade GPUs.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.

Magick

Magick is a groundbreaking visual AIDE (Artificial Intelligence Development Environment) for no-code data pipelines and multimodal agents. Magick can connect to other services and comes with nodes and templates well-suited for intelligent agents, chatbots, complex reasoning systems and realistic characters.