ComfyUI-BlenderAI-node

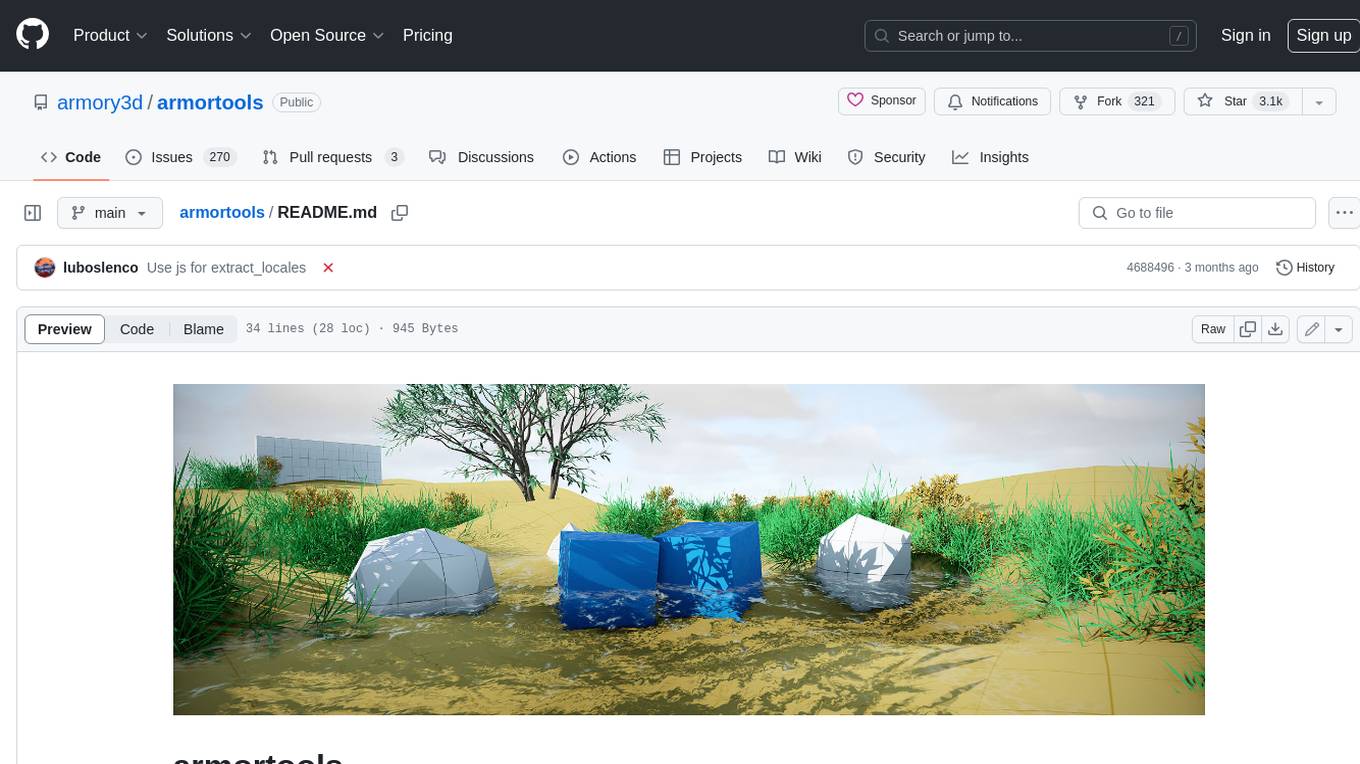

Used for AI model generation, as a next-generation Blender rendering engine, and for texture enhancement and generation (based on ComfyUI)

Stars: 943

ComfyUI-BlenderAI-node is an addon for Blender that allows users to convert ComfyUI nodes into Blender nodes seamlessly. It offers features such as converting nodes, editing launch arguments, drawing masks with Grease pencil, and more. Users can queue batch processing, use node tree presets, and model preview images. The addon enables users to input or replace 3D models in Blender and output controlnet images using composite. It provides a workflow showcase with presets for camera input, AI-generated mesh import, composite depth channel, character bone editing, and more.

README:

This is an addon for using ComfyUI in Blender.

ComfyUI : It will convert ComfyUI nodes into Blender nodes, letting you use ComfyUI inside Blender without having to switch between programs.

Blender : Based on ComfyUI, you can directly generate full-angle AI materials, use cameras as real-time input sources, and combine the addon with other ComfyUI custom nodes for features such as AI animation interpolation and style transfer.

(My dream: I hope Blender and ComfyUI can fight side by side in the future ദ്ദി˶>𖥦<)✧ )

- 【New】AI material creation and texture baking

https://github.com/user-attachments/assets/564667d4-588e-47ca-9a28-d983b1f30bd2

https://github.com/user-attachments/assets/888fca0b-b081-496c-9837-7ff18264519f

- Converts ComfyUI nodes to Blender nodes

- Editable launch arguments in the addon's preferences, or just connect to a running ComfyUI process

- Adds some special Blender nodes like camera input or compositing data

- Draw masks with Grease pencil

- Blender-like node groups

- Queue batch processing with mission excel

- Node tree/workflow presets and node group presets

- Image previews for models in the Load Checkpoint node

- Can directly input or replace the 3D models in Blender

- Outputs ControlNet-ready images through the compositor

- Live preview when sampling

- Easily move images to and from Blender's image editor

Here are some workflow showcases:

You can find all these workflow presets in ComfyUI-BlenderAI-node/presets/

- Install Blender

First, you need to install Blender (we recommend Blender 3.5, 3.6.X, or 4.0).

- Install this add-on (ComfyUI BlenderAI node)

- Install from Blender's preferences menu

In Blender's preferences menu, under addons, you can install an addon by selecting the addon's zip file. Blender will automatically show you the addon after it's installed; if you missed it, it's in the Node category, search for "ComfyUI". Don't forget to enable the addon by clicking on the tickbox to the left of the addon's name!

Note: The zip file might not have a preview image. This is normal.

- Install manually (recommended)

This is a standard Blender add-on. You can git clone the addon to Blender's addon directory:

cd %USERPROFILE%\AppData\Roaming\Blender Foundation\blender\%version%\scripts\addons

git clone https://github.com/AIGODLIKE/ComfyUI-BlenderAI-node.git --recursive

Then you can see the addon after refreshing the addons menu or restarting Blender. It is in the Node category, search for "ComfyUI". Don't forget to enable the addon by clicking on the tickbox to the left of the addon's name!

- AI material generation (baking requires the EasyBakeNode add-on)

  - Install the EasyBakeNode add-on

  - Make sure your ComfyUI is working properly

  - Download the ControlNet models (at least the following ones) and install comfyui controlnet aux

If you're using Linux, assuming you have some experience:

- Install Blender

- Create and activate a Python venv

- Install ComfyUI

cd /home/**YOU**/.config/blender/**BLENDER.VERSION**/scripts/addons

git clone https://github.com/AIGODLIKE/ComfyUI-BlenderAI-node.git --recursive

- Set your ComfyUI path and your venv /bin/ path in the addon's preferences

Some things will not work on Linux, or might break!
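The two clone targets mentioned above (Windows and Linux) follow the same pattern, so a small helper can build either one. This is only an illustrative sketch: the function name `addons_dir` and its parameters are made up for this example, and the paths are taken from the commands shown in this README rather than queried from Blender.

```python
from pathlib import PurePosixPath, PureWindowsPath


def addons_dir(platform: str, home: str, blender_version: str):
    """Build the per-user Blender addons directory used in this README.

    `platform` is "windows" or "linux" (hypothetical labels for this sketch).
    """
    if platform == "windows":
        # %USERPROFILE%\AppData\Roaming\Blender Foundation\blender\<version>\scripts\addons
        return (PureWindowsPath(home) / "AppData" / "Roaming" / "Blender Foundation"
                / "blender" / blender_version / "scripts" / "addons")
    if platform == "linux":
        # /home/<you>/.config/blender/<version>/scripts/addons
        return (PurePosixPath(home) / ".config" / "blender"
                / blender_version / "scripts" / "addons")
    raise ValueError(f"unsupported platform: {platform!r}")


print(addons_dir("windows", r"C:\Users\me", "4.0"))
print(addons_dir("linux", "/home/me", "4.0"))
```

The pure path classes are used so that the example prints the same result on any operating system. Inside Blender you would normally rely on the directory Blender itself reports instead of rebuilding it by hand.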

- Prepare ComfyUI

You can download ComfyUI from here: ComfyUI Releases

Or you can build one yourself as long as you follow this path structure:

├── ComfyUI

│ ├── main.py

│ ...

├── python_embeded

│ ├── python.exe

│ ...

- Set the "ComfyUI Path" to your ComfyUI directory

- Set the "Python Path" if you're not using the standard ComfyUI file directory

The default (empty) path is:

├── ComfyUI

├── python_embeded

│ ├── python.exe <-- Here

If you're using a virtual environment named venv, the executable is in venv/Scripts/python.exe.
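To double-check a hand-built setup before pointing the addon at it, the layout described above can be verified with a few lines of Python. This is a sketch under assumptions: `resolve_python` and `check_layout` are hypothetical helpers, not part of the addon, and the "Python Path" setting is treated here as the full path to the executable.

```python
from pathlib import Path
from typing import List, Optional


def resolve_python(comfyui_dir: Path, python_path: Optional[Path] = None) -> Path:
    """Use an explicit interpreter if given, else the default embedded one."""
    if python_path is not None:
        return Path(python_path)
    # Default layout: python_embeded/ sits next to the ComfyUI/ directory.
    return comfyui_dir.parent / "python_embeded" / "python.exe"


def check_layout(comfyui_dir: Path, python_path: Optional[Path] = None) -> List[str]:
    """Return a list of problems; an empty list means the layout looks usable."""
    problems = []
    main_py = comfyui_dir / "main.py"
    if not main_py.is_file():
        problems.append(f"missing {main_py}")
    exe = resolve_python(comfyui_dir, python_path)
    if not exe.is_file():
        problems.append(f"missing {exe}")
    return problems
```

For a virtual environment named venv on Windows, you would pass `python_path=Path("venv/Scripts/python.exe")`, matching the note above.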

- Open the ComfyUI Node Editor

Switch to the ComfyUI Node Editor, press N to open the sidebar/n-menu, and click the Launch/Connect to ComfyUI button to launch ComfyUI or connect to it.

Or, switch the "Server Type" in the addon's preferences to remote server so that you can link your Blender to a running ComfyUI process.
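Before switching to the remote server option, it can help to confirm that the ComfyUI process is actually reachable. The sketch below assumes ComfyUI's usual default address (`127.0.0.1:8188`) and its `/system_stats` HTTP route; adjust both if your server is configured differently. The helper names are hypothetical, and this is not how the addon itself connects.

```python
import http.client
import urllib.error
import urllib.request


def server_url(host: str = "127.0.0.1", port: int = 8188) -> str:
    """Build the base URL of a ComfyUI server (default address is an assumption)."""
    return f"http://{host}:{port}"


def is_reachable(base_url: str, timeout: float = 2.0) -> bool:
    """Return True if the server answers GET /system_stats with HTTP 200."""
    try:
        with urllib.request.urlopen(f"{base_url}/system_stats", timeout=timeout) as resp:
            return resp.status == 200
    except (urllib.error.URLError, http.client.HTTPException, OSError):
        # Connection refused, timeout, DNS failure, or a malformed response.
        return False


if __name__ == "__main__":
    print("ComfyUI reachable:", is_reachable(server_url()))
```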

- Add nodes/presets

Like in the other Node Editors, you can use the shortcut Shift+A to bring up the Add menu to add nodes. You can also click on the "Replace Node Tree" or "Append Node Tree" buttons in the sidebar to add/append a node tree.

For image previews and input, you must use the Blender-specific nodes this addon adds; otherwise the results may not be displayed properly! Using the Blender-specific nodes won't affect generation: results are still saved as standard ComfyUI data.

- Generate

Click Execute Node Tree in the N-panel, or the small red button on the right side of the node editor header, to add the current node tree to the queue.

You can cancel the currently running task by clicking Cancel, or clear the entire task list by clicking ClearTask.

Loop execution is available in the advanced execution options next to the Execute Node Tree button.
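For context, queueing in ComfyUI generally means posting a workflow to the server's `/prompt` route. The sketch below only builds such a request with the standard library; it does not claim to mirror what the addon does internally. `build_queue_request` is a hypothetical helper, the base URL is ComfyUI's usual default, and the workflow dictionary is an incomplete placeholder (a real one would be exported from ComfyUI in API format).

```python
import json
import urllib.request
import uuid


def build_queue_request(workflow: dict,
                        base_url: str = "http://127.0.0.1:8188",
                        client_id: str = "") -> urllib.request.Request:
    """Build (but do not send) a POST /prompt request for a ComfyUI server."""
    payload = {
        "prompt": workflow,                          # node graph in API format
        "client_id": client_id or uuid.uuid4().hex,  # identifies this client
    }
    return urllib.request.Request(
        f"{base_url}/prompt",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Placeholder workflow: one node entry with no inputs, for illustration only.
request = build_queue_request({"3": {"class_type": "KSampler", "inputs": {}}})
print(request.get_method(), request.full_url)
```

Sending the request would be a matter of passing it to `urllib.request.urlopen` while a ComfyUI server is running.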

- Input image from directory

- Input image list from directory

- Input image from render(Supports current and selected frames)

- Input image from viewport(Supports real-time refresh)

- Create a mask from Grease Pencil

- Create a mask by projecting an object on the camera

- Create a mask by projecting a collection on the camera

- Input texture from object

- Input textures from collection objects

- Normally saves to a folder

- Can save to an image in Blender to replace it

To make writing long prompts easier, we added a button that shows the whole prompt in a separate textbox, since Blender doesn't support multiline textboxes in nodes. When you click the button on the side of the textbox, a window will open to write prompts in. The first time you do this, you might need to wait. Keep your cursor over the window while typing.

Select a node, then hold D and drag the cursor to another node's center to link all available widgets between them.

Press R when the cursor is near a widget to open a pie menu showing all nodes that have this widget.

Hold F and drag the cursor to a mask node to automatically create a camera that generates a mask from the scene.

- Not every node can work perfectly in Blender, for example nodes regarding videos

- You can enable the console under Window > Toggle System Console at the top left

- Model preview images need to have the same name as the model, including the extension, for example model.ckpt.jpg

- Do not install as extensions
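The preview naming rule above is easy to get wrong, because the image extension is appended to the full model file name instead of replacing the model's extension. A minimal sketch (the helper name `preview_path` and the `.jpg` default are assumptions for illustration):

```python
from pathlib import Path


def preview_path(model_path: str, image_ext: str = ".jpg") -> Path:
    """Append the image extension to the full model file name."""
    p = Path(model_path)
    # Keep the model's own extension: model.ckpt -> model.ckpt.jpg
    return p.with_name(p.name + image_ext)


print(preview_path("model.ckpt").name)
```

Note that `Path("model.ckpt").with_suffix(".jpg")` would produce `model.jpg`, which does not follow the rule described above.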

Here are some interesting nodes we've tested in Blender

√ = works as in ComfyUI web

? = not all functions work

× = only a few or no functions work

See Change Log.

[CN]How to create and bake AI materials in Blender

[EN]BSLIVE ComfyUI Blender AI Node Addon for Generative AI(By Jimmy Gunawan)

[EN]Generate AI Rendering with Blender ComfyUI AddOn(By Gioxyer)

(Please feel free to contact me for recommendations)


Similar Open Source Tools

ComfyUI-BlenderAI-node

ComfyUI-BlenderAI-node is an addon for Blender that allows users to convert ComfyUI nodes into Blender nodes seamlessly. It offers features such as converting nodes, editing launch arguments, drawing masks with Grease pencil, and more. Users can queue batch processing, use node tree presets, and model preview images. The addon enables users to input or replace 3D models in Blender and output controlnet images using composite. It provides a workflow showcase with presets for camera input, AI-generated mesh import, composite depth channel, character bone editing, and more.

ComfyUI_VLM_nodes

ComfyUI_VLM_nodes is a repository containing various nodes for utilizing Vision Language Models (VLMs) and Language Models (LLMs). The repository provides nodes for tasks such as structured output generation, image to music conversion, LLM prompt generation, automatic prompt generation, and more. Users can integrate different models like InternLM-XComposer2-VL, UForm-Gen2, Kosmos-2, moondream1, moondream2, JoyTag, and Chat Musician. The nodes support features like extracting keywords, generating prompts, suggesting prompts, and obtaining structured outputs. The repository includes examples and instructions for using the nodes effectively.

ComfyUI-HunyuanVideo-Nyan

ComfyUI-HunyuanVideo-Nyan is a repository that provides tools for manipulating the attention of LLM models, allowing users to shuffle the AI's attention and cause confusion. The repository includes a Nerdy Transformer Shuffle node that enables users to mess with the LLM's attention layers, providing a workflow for installation and usage. It also offers a new SAE-informed Long-CLIP model with high accuracy, along with recommendations for CLIP models. Users can find detailed instructions on how to use the provided nodes to scale CLIP & LLM factors and create high-quality nature videos. The repository emphasizes compatibility with other related tools and provides insights into the functionality of the included nodes.

colors_ai

Colors AI is a cross-platform color scheme generator that uses deep learning from public API providers. It is available for all mainstream operating systems, including mobile. Features: - Choose from open APIs, with the ability to set up custom settings - Export section with many export formats to save or clipboard copy - URL providers to other static color generators - Localized to several languages - Dark and light theme - Material Design 3 - Data encryption - Accessibility - And much more

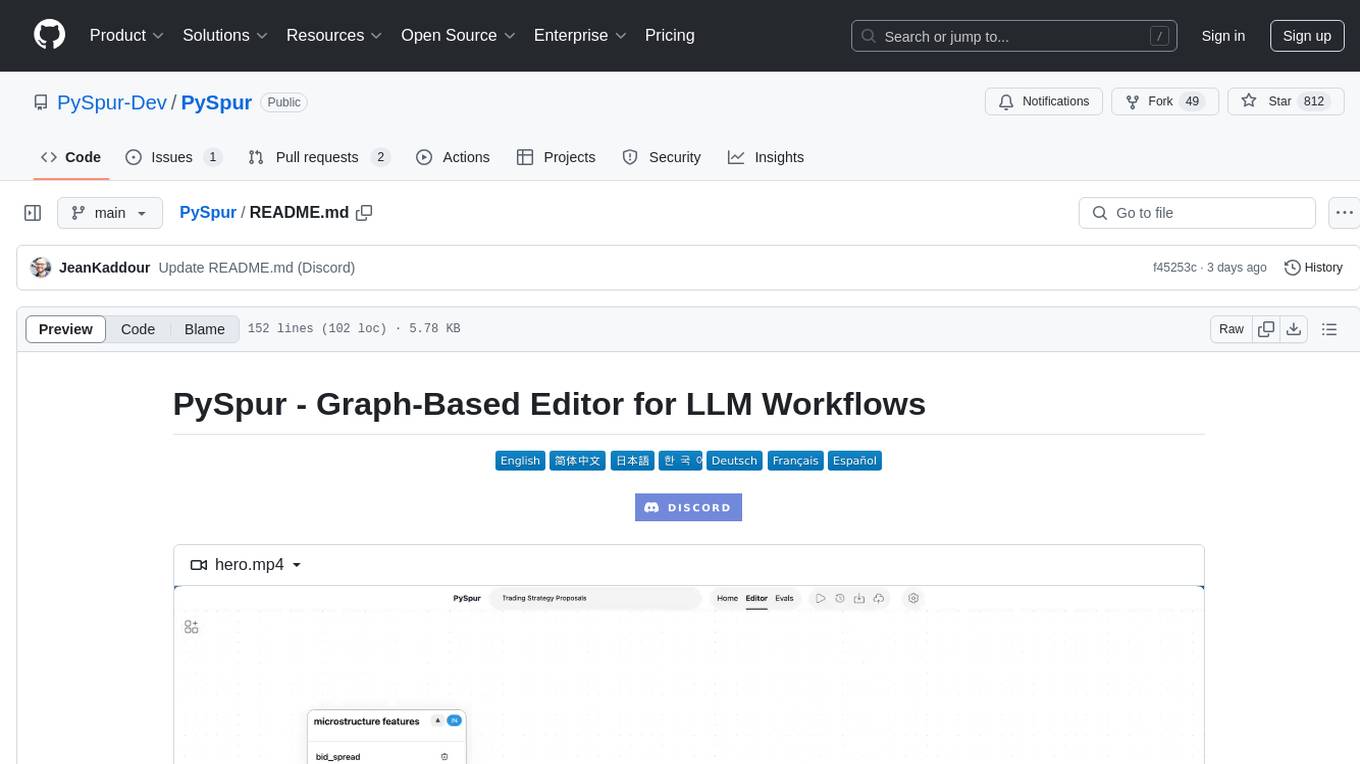

PySpur

PySpur is a graph-based editor designed for LLM workflows, offering modular building blocks for easy workflow creation and debugging at node level. It allows users to evaluate final performance and promises self-improvement features in the future. PySpur is easy-to-hack, supports JSON configs for workflow graphs, and is lightweight with minimal dependencies, making it a versatile tool for workflow management in the field of AI and machine learning.

open-parse

Open Parse is a Python library for visually discerning document layouts and chunking them effectively. It is designed to fill the gap in open-source libraries for handling complex documents. Unlike text splitting, which converts a file to raw text and slices it up, Open Parse visually analyzes documents for superior LLM input. It also supports basic markdown for parsing headings, bold, and italics, and has high-precision table support, extracting tables into clean Markdown formats with accuracy that surpasses traditional tools. Open Parse is extensible, allowing users to easily implement their own post-processing steps. It is also intuitive, with great editor support and completion everywhere, making it easy to use and learn.

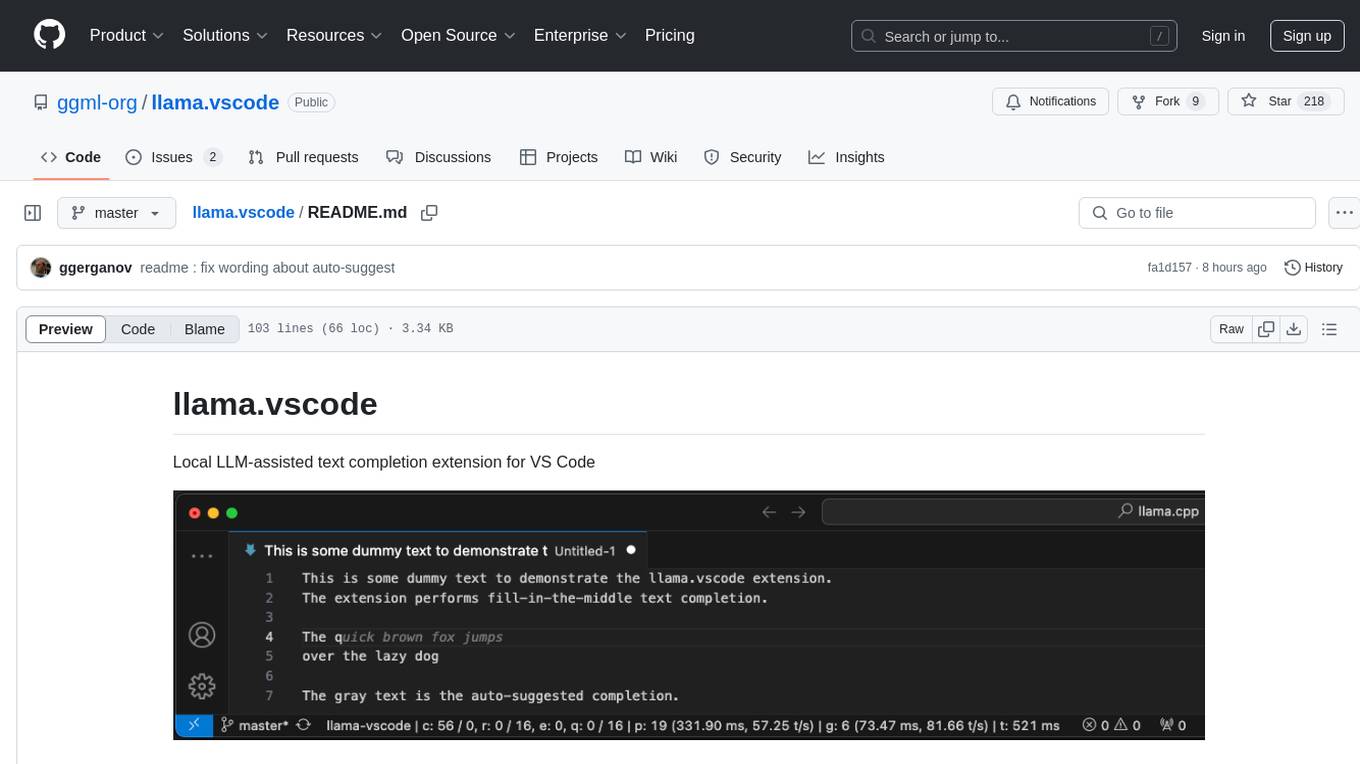

llama.vscode

llama.vscode is a local LLM-assisted text completion extension for Visual Studio Code. It provides auto-suggestions on input, allows accepting suggestions with shortcuts, and offers various features to enhance text completion. The extension is designed to be lightweight and efficient, enabling high-quality completions even on low-end hardware. Users can configure the scope of context around the cursor and control text generation time. It supports very large contexts and displays performance statistics for better user experience.

agentkit

AgentKit is a framework developed by Coinbase Developer Platform for enabling AI agents to take actions onchain. It is designed to be framework-agnostic and wallet-agnostic, allowing users to integrate it with any AI framework and any wallet. The tool is actively being developed and encourages community contributions. AgentKit provides support for various protocols, frameworks, wallets, and networks, making it versatile for blockchain transactions and API integrations using natural language inputs.

air-light

Air-light is a minimalist WordPress starter theme designed to be an ultra minimal starting point for a WordPress project. It is built to be very straightforward, backwards compatible, front-end developer friendly and modular by its structure. Air-light is free of weird "app-like" folder structures or odd syntaxes that nobody else uses. It loves WordPress as it was and as it is.

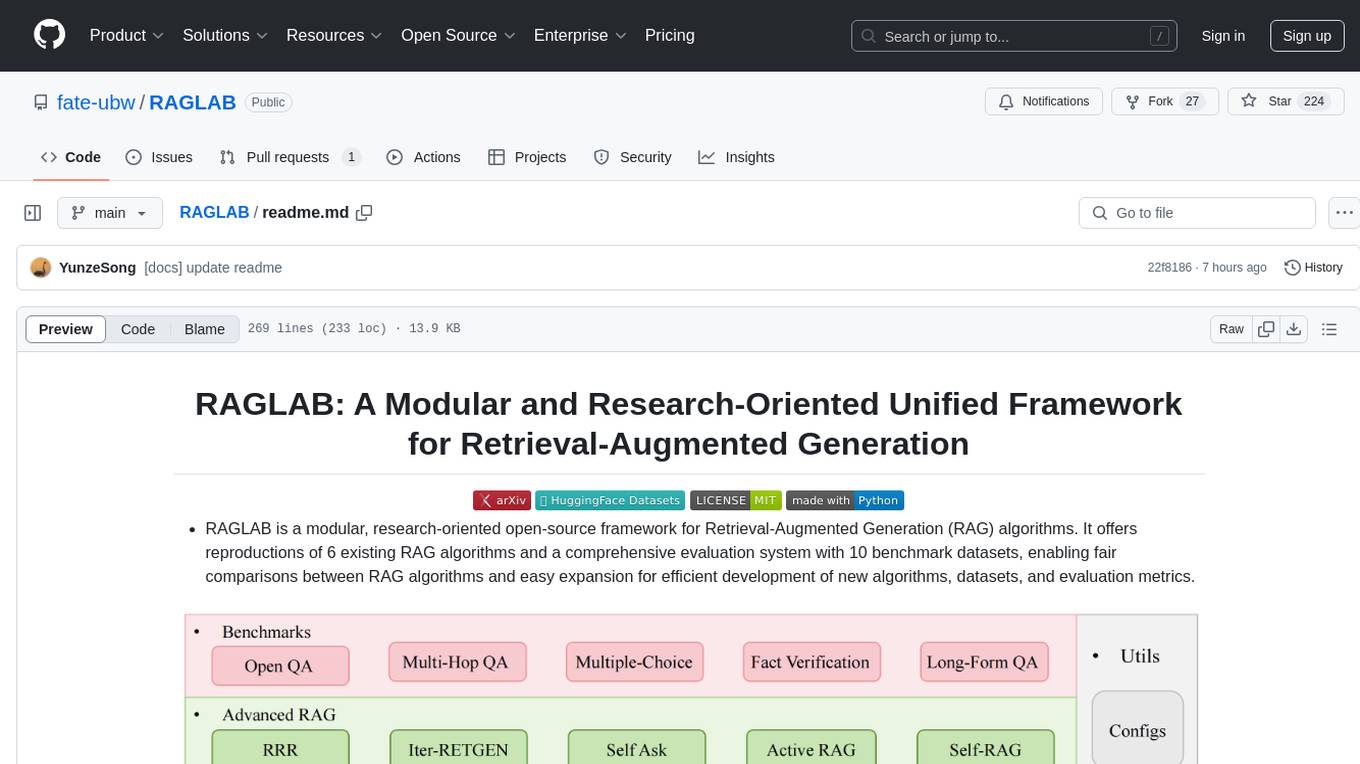

RAGLAB

RAGLAB is a modular, research-oriented open-source framework for Retrieval-Augmented Generation (RAG) algorithms. It offers reproductions of 6 existing RAG algorithms and a comprehensive evaluation system with 10 benchmark datasets, enabling fair comparisons between RAG algorithms and easy expansion for efficient development of new algorithms, datasets, and evaluation metrics. The framework supports the entire RAG pipeline, provides advanced algorithm implementations, fair comparison platform, efficient retriever client, versatile generator support, and flexible instruction lab. It also includes features like Interact Mode for quick understanding of algorithms and Evaluation Mode for reproducing paper results and scientific research.

Open-LLM-VTuber

Open-LLM-VTuber is a voice-interactive AI companion supporting real-time voice conversations and featuring a Live2D avatar. It can run offline on Windows, macOS, and Linux, offering web and desktop client modes. Users can customize appearance and persona, with rich LLM inference, text-to-speech, and speech recognition support. The project is highly customizable, extensible, and actively developed with exciting features planned. It provides privacy with offline mode, persistent chat logs, and various interaction features like voice interruption, touch feedback, Live2D expressions, pet mode, and more.

felafax

Felafax is a framework designed to tune LLaMa3.1 on Google Cloud TPUs for cost efficiency and seamless scaling. It provides a Jupyter notebook for continued-training and fine-tuning open source LLMs using XLA runtime. The goal of Felafax is to simplify running AI workloads on non-NVIDIA hardware such as TPUs, AWS Trainium, AMD GPU, and Intel GPU. It supports various models like LLaMa-3.1 JAX Implementation, LLaMa-3/3.1 PyTorch XLA, and Gemma2 Models optimized for Cloud TPUs with full-precision training support.

vision-agent

AskUI Vision Agent is a powerful automation framework that enables you and AI agents to control your desktop, mobile, and HMI devices and automate tasks. It supports multiple AI models, multi-platform compatibility, and enterprise-ready features. The tool provides support for Windows, Linux, MacOS, Android, and iOS device automation, single-step UI automation commands, in-background automation on Windows machines, flexible model use, and secure deployment of agents in enterprise environments.

Linly-Talker

Linly-Talker is an innovative digital human conversation system that integrates the latest artificial intelligence technologies, including Large Language Models (LLM) 🤖, Automatic Speech Recognition (ASR) 🎙️, Text-to-Speech (TTS) 🗣️, and voice cloning technology 🎤. This system offers an interactive web interface through the Gradio platform 🌐, allowing users to upload images 📷 and engage in personalized dialogues with AI 💬.

RWKV-Runner

RWKV Runner is a project designed to simplify the usage of large language models by automating various processes. It provides a lightweight executable program and is compatible with the OpenAI API. Users can deploy the backend on a server and use the program as a client. The project offers features like model management, VRAM configurations, user-friendly chat interface, WebUI option, parameter configuration, model conversion tool, download management, LoRA Finetune, and multilingual localization. It can be used for various tasks such as chat, completion, composition, and model inspection.

Neurite

Neurite is an innovative project that combines chaos theory and graph theory to create a digital interface that explores hidden patterns and connections for creative thinking. It offers a unique workspace blending fractals with mind mapping techniques, allowing users to navigate the Mandelbrot set in real-time. Nodes in Neurite represent various content types like text, images, videos, code, and AI agents, enabling users to create personalized microcosms of thoughts and inspirations. The tool supports synchronized knowledge management through bi-directional synchronization between mind-mapping and text-based hyperlinking. Neurite also features FractalGPT for modular conversation with AI, local AI capabilities for multi-agent chat networks, and a Neural API for executing code and sequencing animations. The project is actively developed with plans for deeper fractal zoom, advanced control over node placement, and experimental features.

For similar tasks

ComfyUI-BlenderAI-node

ComfyUI-BlenderAI-node is an addon for Blender that allows users to convert ComfyUI nodes into Blender nodes seamlessly. It offers features such as converting nodes, editing launch arguments, drawing masks with Grease pencil, and more. Users can queue batch processing, use node tree presets, and model preview images. The addon enables users to input or replace 3D models in Blender and output controlnet images using composite. It provides a workflow showcase with presets for camera input, AI-generated mesh import, composite depth channel, character bone editing, and more.

Pallaidium

Pallaidium is a generative AI movie studio integrated into the Blender video editor. It allows users to AI-generate video, image, and audio from text prompts or existing media files. The tool provides various features such as text to video, text to audio, text to speech, text to image, image to image, image to video, video to video, image to text, and more. It requires a Windows system with a CUDA-supported Nvidia card and at least 6 GB VRAM. Pallaidium offers batch processing capabilities, text to audio conversion using Bark, and various performance optimization tips. Users can install the tool by downloading the add-on and following the installation instructions provided. The tool comes with a set of restrictions on usage, prohibiting the generation of harmful, pornographic, violent, or false content.

qapyq

qapyq is an image viewer and AI-assisted editing tool designed to help curate datasets for generative AI models. It offers features such as image viewing, editing, captioning, batch processing, and AI assistance. Users can perform tasks like cropping, scaling, editing masks, tagging, and applying sorting and filtering rules. The tool supports state-of-the-art captioning and masking models, with options for model settings, GPU acceleration, and quantization. qapyq aims to streamline the process of preparing images for training AI models by providing a user-friendly interface and advanced functionalities.

VisionDepth3D

VisionDepth3D is an all-in-one 3D suite for creators, combining AI depth and custom stereo logic for cinema in VR. The suite includes features like real-time 3D stereo composer with CUDA + PyTorch acceleration, AI-powered depth estimation supporting 25+ models, AI upscaling & interpolation, depth blender for blending depth maps, audio to video sync, smart GUI workflow, and various output formats & aspect ratios. The tool is production-ready, offering advanced parallax controls, streamlined export for cinema, VR, or streaming, and real-time preview overlays.

ai-game-development-tools

Here we will keep track of the AI Game Development Tools, including LLM, Agent, Code, Writer, Image, Texture, Shader, 3D Model, Animation, Video, Audio, Music, Singing Voice and Analytics. 🔥 * Tool (AI LLM) * Game (Agent) * Code * Framework * Writer * Image * Texture * Shader * 3D Model * Avatar * Animation * Video * Audio * Music * Singing Voice * Speech * Analytics * Video Tool

LayaAir

LayaAir engine, under the Layabox brand, is a 3D engine that supports full-platform publishing. It can be applied in various fields such as games, education, advertising, marketing, digital twins, metaverse, AR guides, VR scenes, architectural design, industrial design, etc.

ai-collective-tools

ai-collective-tools is an open-source community dedicated to creating a comprehensive collection of AI tools for developers, researchers, and enthusiasts. The repository provides a curated selection of AI tools and resources across various categories such as 3D, Agriculture, Art, Audio Editing, Avatars, Chatbots, Code Assistant, Cooking, Copywriting, Crypto, Customer Support, Dating, Design Assistant, Design Generator, Developer, E-Commerce, Education, Email Assistant, Experiments, Fashion, Finance, Fitness, Fun Tools, Gaming, General Writing, Gift Ideas, HealthCare, Human Resources, Image Classification, Image Editing, Image Generator, Interior Designing, Legal Assistant, Logo Generator, Low Code, Models, Music, Paraphraser, Personal Assistant, Presentations, Productivity, Prompt Generator, Psychology, Real Estate, Religion, Research, Resume, Sales, Search Engine, SEO, Shopping, Social Media, Spreadsheets, SQL, Startup Tools, Story Teller, Summarizer, Testing, Text to Speech, Text to Image, Transcriber, Travel, Video Editing, Video Generator, Weather, Writing Generator, and Other Resources.

For similar jobs

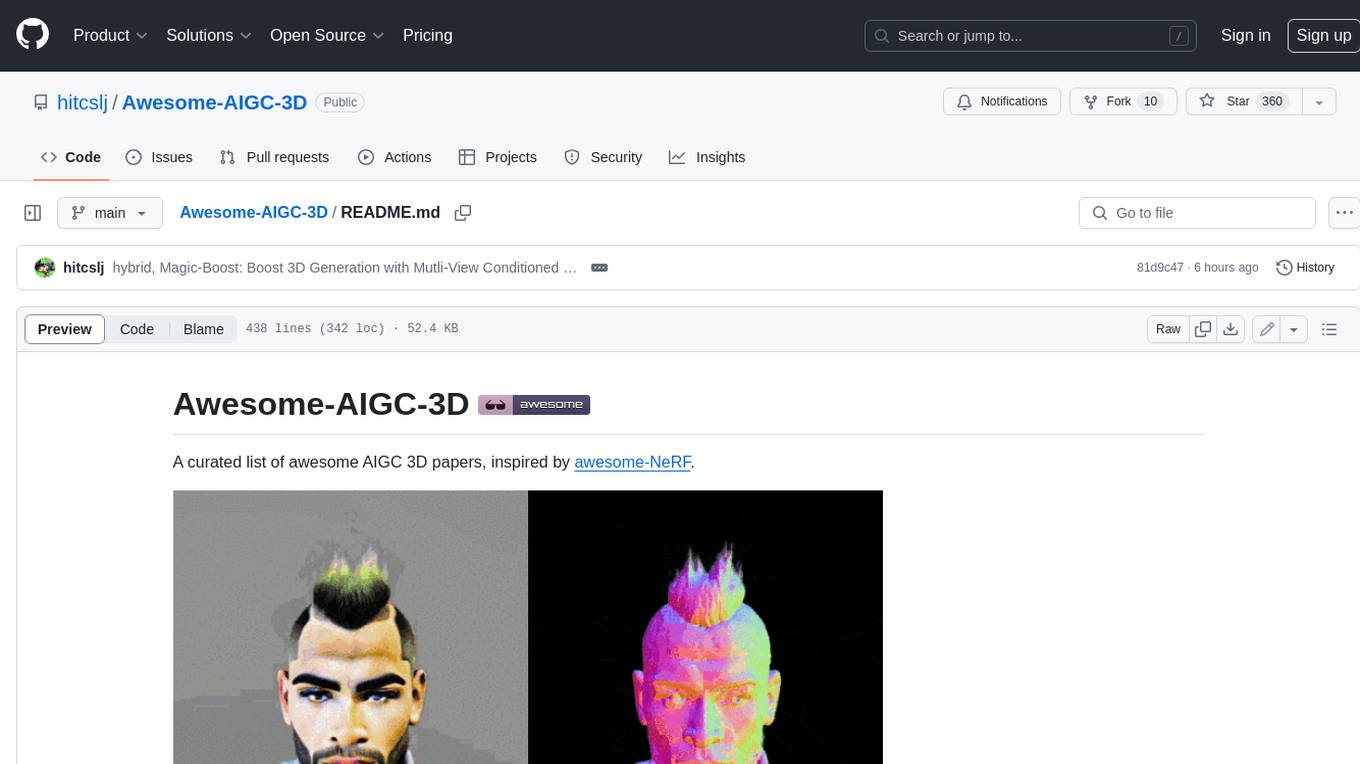

Awesome-AIGC-3D

Awesome-AIGC-3D is a curated list of awesome AIGC 3D papers, inspired by awesome-NeRF. It aims to provide a comprehensive overview of the state-of-the-art in AIGC 3D, including papers on text-to-3D generation, 3D scene generation, human avatar generation, and dynamic 3D generation. The repository also includes a list of benchmarks and datasets, talks, companies, and implementations related to AIGC 3D. The description is less than 400 words and provides a concise overview of the repository's content and purpose.

LayaAir

LayaAir engine, under the Layabox brand, is a 3D engine that supports full-platform publishing. It can be applied in various fields such as games, education, advertising, marketing, digital twins, metaverse, AR guides, VR scenes, architectural design, industrial design, etc.

ComfyUI-BlenderAI-node

ComfyUI-BlenderAI-node is an addon for Blender that allows users to convert ComfyUI nodes into Blender nodes seamlessly. It offers features such as converting nodes, editing launch arguments, drawing masks with Grease pencil, and more. Users can queue batch processing, use node tree presets, and model preview images. The addon enables users to input or replace 3D models in Blender and output controlnet images using composite. It provides a workflow showcase with presets for camera input, AI-generated mesh import, composite depth channel, character bone editing, and more.

Anim

Anim v0.1.0 is an animation tool that allows users to convert videos to animations using mixamorig characters. It features FK animation editing, object selection, embedded Python support (only on Windows), and the ability to export to glTF and FBX formats. Users can also utilize Mediapipe to create animations. The tool is designed to assist users in creating animations with ease and flexibility.

anthrax-ai

AnthraxAI is a Vulkan-based game engine that allows users to create and develop 3D games. The engine provides features such as scene selection, camera movement, object manipulation, debugging tools, audio playback, and real-time shader code updates. Users can build and configure the project using CMake and compile shaders using the glslc compiler. The engine supports building on both Linux and Windows platforms, with specific dependencies for each. Visual Studio Code integration is available for building and debugging the project, with instructions provided in the readme for setting up the workspace and required extensions.

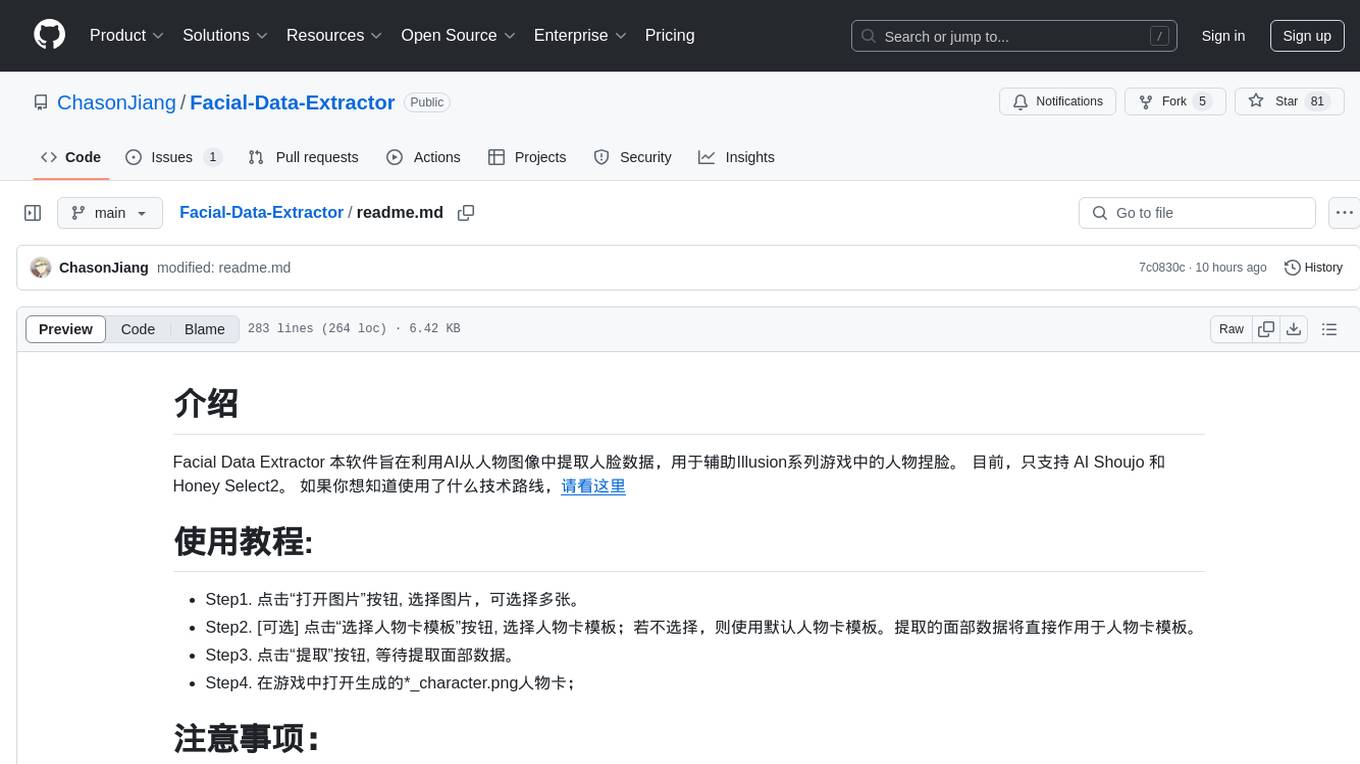

Facial-Data-Extractor

Facial Data Extractor is a software designed to extract facial data from images using AI, specifically to assist in character customization for Illusion series games. Currently, it only supports AI Shoujo and Honey Select2. Users can open images, select character card templates, extract facial data, and apply it to character cards in the game. The tool provides measurements for various facial features and allows for some customization, although perfect replication of faces may require manual adjustments.

VisionDepth3D

VisionDepth3D is an all-in-one 3D suite for creators, combining AI depth and custom stereo logic for cinema in VR. The suite includes features like real-time 3D stereo composer with CUDA + PyTorch acceleration, AI-powered depth estimation supporting 25+ models, AI upscaling & interpolation, depth blender for blending depth maps, audio to video sync, smart GUI workflow, and various output formats & aspect ratios. The tool is production-ready, offering advanced parallax controls, streamlined export for cinema, VR, or streaming, and real-time preview overlays.

spear

SPEAR (Simulator for Photorealistic Embodied AI Research) is a powerful tool for training embodied agents. It features 300 unique virtual indoor environments with 2,566 unique rooms and 17,234 unique objects that can be manipulated individually. Each environment is designed by a professional artist and features detailed geometry, photorealistic materials, and a unique floor plan and object layout. SPEAR is implemented as Unreal Engine assets and provides an OpenAI Gym interface for interacting with the environments via Python.