yu-ai-agent

编程导航 2025 年 AI 开发实战新项目,基于 Spring Boot 3 + Java 21 + Spring AI 构建 AI 恋爱大师应用和 ReAct 模式自主规划智能体YuManus,覆盖 AI 大模型接入、Spring AI 核心特性、Prompt 工程和优化、RAG 检索增强、向量数据库、Tool Calling 工具调用、MCP 模型上下文协议、AI Agent 开发、Cursor AI 工具等核心知识。用一套教程将程序员必知必会的 AI 技术一网打尽,帮你成为 AI 时代企业的香饽饽,给你的简历和求职大幅增加竞争力。

Stars: 68

The Yu AI Agent repository is a comprehensive guide for AI development in 2025, focusing on creating the AI Love Master application and the ReAct mode autonomous planning agent, YuManus. It equips programmers with essential AI skills through tutorials on AI model integration, Spring AI core features, prompt engineering, RAG, vector databases, tool calling, MCP, AI agent development, and Cursor AI tools. The project enhances resume and job prospects in the AI-driven job market.

README:

Welcome to the Yu AI Agent repository! This project serves as a comprehensive guide for AI development in 2025. Built on Spring Boot 3, Java 21, and Spring AI, this repository focuses on creating the AI Love Master application and the ReAct mode autonomous planning agent, YuManus.

- Introduction

- Features

- Installation

- Usage

- Core Concepts

- Technologies Used

- Contributing

- License

- Contact

The Yu AI Agent project aims to equip programmers with essential AI skills. With a structured tutorial, you will learn about:

- AI model integration

- Core features of Spring AI

- Prompt engineering and optimization

- RAG (Retrieval-Augmented Generation)

- Vector databases

- Tool calling

- MCP (Model Context Protocol)

- AI agent development

- Cursor AI tools

This knowledge will significantly enhance your resume and job prospects in the AI-driven job market.

- AI Model Integration: Learn how to connect and utilize large AI models effectively.

- Spring AI Core Features: Understand the fundamental aspects of Spring AI.

- Prompt Engineering: Master the art of crafting effective prompts for AI models.

- RAG: Explore retrieval-augmented generation techniques.

- Vector Databases: Get hands-on experience with vector databases for efficient data retrieval.

- Tool Calling: Implement tool calling for enhanced functionality in your applications.

- MCP: Grasp the Model Context Protocol for better AI interaction.

- AI Agent Development: Build your own intelligent agents using the knowledge gained.

- Cursor AI Tools: Utilize Cursor AI tools to streamline your AI projects.

To get started with the Yu AI Agent, follow these steps:

-

Clone the Repository:

git clone https://github.com/hfgwygey/yu-ai-agent.git

-

Navigate to the Directory:

cd yu-ai-agent -

Install Dependencies: Ensure you have Java 21 and Maven installed. Run the following command:

mvn install

-

Run the Application: You can run the application using:

mvn spring-boot:run

For more detailed installation instructions, visit the Releases section.

After setting up the project, you can start using the AI Love Master application. The application provides an interface for users to interact with the AI models.

-

Access the Application: Open your browser and navigate to

http://localhost:8080. -

Interact with the AI: Use the interface to ask questions or request information. The AI will respond based on the prompts you provide.

-

Explore Features: Experiment with different features such as tool calling and vector database queries.

For more examples and detailed usage instructions, refer to the documentation provided in the repository.

Integrating AI models involves connecting the application to various large models. This allows the application to leverage the capabilities of these models for various tasks.

Crafting effective prompts is crucial for getting the best responses from AI models. This involves understanding how to phrase questions and requests.

Retrieval-Augmented Generation combines retrieval methods with generation techniques. This allows for more accurate and context-aware responses from AI models.

Vector databases store data in a way that allows for efficient similarity searches. This is essential for applications that require quick access to large datasets.

Tool calling refers to the ability of the AI to invoke external tools or services. This enhances the functionality of the AI and allows it to perform more complex tasks.

The Model Context Protocol helps maintain context during interactions with AI models. This ensures that the AI can provide relevant responses based on previous interactions.

Developing AI agents involves creating intelligent systems that can perform tasks autonomously. This requires a solid understanding of the concepts mentioned above.

Cursor AI tools provide additional functionality for managing AI projects. These tools can streamline development and enhance productivity.

- Java 21: The programming language used for building the application.

- Spring Boot 3: The framework that simplifies the development of Java applications.

- Spring AI: A library that provides features for building AI applications.

- Maven: A build automation tool used for managing project dependencies.

- Vector Databases: Used for efficient data storage and retrieval.

- ReAct: A framework for developing autonomous agents.

We welcome contributions to the Yu AI Agent project. If you would like to contribute, please follow these steps:

- Fork the Repository: Click the "Fork" button on the top right corner of the repository page.

-

Create a New Branch:

git checkout -b feature/YourFeatureName

- Make Your Changes: Implement your feature or fix.

-

Commit Your Changes:

git commit -m "Add your message here" -

Push to Your Fork:

git push origin feature/YourFeatureName

- Create a Pull Request: Go to the original repository and click "New Pull Request".

Thank you for considering contributing to this project!

This project is licensed under the MIT License. See the LICENSE file for details.

For any inquiries or feedback, feel free to reach out:

- Email: [email protected]

- GitHub: hfgwygey

Explore the project and become proficient in AI development. Don’t forget to check the Releases section for updates and new features!

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for yu-ai-agent

Similar Open Source Tools

yu-ai-agent

The Yu AI Agent repository is a comprehensive guide for AI development in 2025, focusing on creating the AI Love Master application and the ReAct mode autonomous planning agent, YuManus. It equips programmers with essential AI skills through tutorials on AI model integration, Spring AI core features, prompt engineering, RAG, vector databases, tool calling, MCP, AI agent development, and Cursor AI tools. The project enhances resume and job prospects in the AI-driven job market.

MyDeviceAI

MyDeviceAI is a personal AI assistant app for iPhone that brings the power of artificial intelligence directly to the device. It focuses on privacy, performance, and personalization by running AI models locally and integrating with privacy-focused web services. The app offers seamless user experience, web search integration, advanced reasoning capabilities, personalization features, chat history access, and broad device support. It requires macOS, Xcode, CocoaPods, Node.js, and a React Native development environment for installation. The technical stack includes React Native framework, AI models like Qwen 3 and BGE Small, SearXNG integration, Redux for state management, AsyncStorage for storage, Lucide for UI components, and tools like ESLint and Prettier for code quality.

weam

Weam is an open source platform designed to help teams systematically adopt AI. It provides a production-ready stack with Next.js frontend and Node.js/Python backend, allowing for immediate deployment and use. Weam connects to major LLM providers, enabling easy access to the latest AI models. The platform organizes AI interactions into 'Brains' for different departments, offering customization and expansion options. Features include chat system, productivity tools, sharing & access controls, prompt library, AI agents, RAG, MCP, enterprise features, pre-built automations, and upcoming AI app solutions. Weam is free, open source, and scalable to meet growing needs.

oreilly-ai-agents

This repository contains code for O'Reilly Live Online Training for AI Agents A-Z and Modern Automated AI Agents video series. It provides a guide to understanding, implementing, and managing AI agents, covering frameworks like CrewAI, LangChain, and AutoGen. Participants learn to build agents from scratch using prompt engineering techniques, deploy AI agents, evaluate performance, and make informed decisions in AI projects.

Auditor

TheAuditor is an offline-first, AI-centric SAST & code intelligence platform designed to find security vulnerabilities, track data flow, analyze architecture, detect refactoring issues, run industry-standard tools, and produce AI-ready reports. It is specifically tailored for AI-assisted development workflows, providing verifiable ground truth for developers and AI assistants. The tool orchestrates verifiable data, focuses on AI consumption, and is extensible to support Python and Node.js ecosystems. The comprehensive analysis pipeline includes stages for foundation, concurrent analysis, and final aggregation, offering features like refactoring detection, dependency graph visualization, and optional insights analysis. The tool interacts with antivirus software to identify vulnerabilities, triggers performance impacts, and provides transparent information on common issues and troubleshooting. TheAuditor aims to address the lack of ground truth in AI development workflows and make AI development trustworthy by providing accurate security analysis and code verification.

LLMstudio

LLMstudio by TensorOps is a platform that offers prompt engineering tools for accessing models from providers like OpenAI, VertexAI, and Bedrock. It provides features such as Python Client Gateway, Prompt Editing UI, History Management, and Context Limit Adaptability. Users can track past runs, log costs and latency, and export history to CSV. The tool also supports automatic switching to larger-context models when needed. Coming soon features include side-by-side comparison of LLMs, automated testing, API key administration, project organization, and resilience against rate limits. LLMstudio aims to streamline prompt engineering, provide execution history tracking, and enable effortless data export, offering an evolving environment for teams to experiment with advanced language models.

promptbook

Promptbook is a library designed to build responsible, controlled, and transparent applications on top of large language models (LLMs). It helps users overcome limitations of LLMs like hallucinations, off-topic responses, and poor quality output by offering features such as fine-tuning models, prompt-engineering, and orchestrating multiple prompts in a pipeline. The library separates concerns, establishes a common format for prompt business logic, and handles low-level details like model selection and context size. It also provides tools for pipeline execution, caching, fine-tuning, anomaly detection, and versioning. Promptbook supports advanced techniques like Retrieval-Augmented Generation (RAG) and knowledge utilization to enhance output quality.

Instrukt

Instrukt is a terminal-based AI integrated environment that allows users to create and instruct modular AI agents, generate document indexes for question-answering, and attach tools to any agent. It provides a platform for users to interact with AI agents in natural language and run them inside secure containers for performing tasks. The tool supports custom AI agents, chat with code and documents, tools customization, prompt console for quick interaction, LangChain ecosystem integration, secure containers for agent execution, and developer console for debugging and introspection. Instrukt aims to make AI accessible to everyone by providing tools that empower users without relying on external APIs and services.

AgentForge

AgentForge is a low-code framework tailored for the rapid development, testing, and iteration of AI-powered autonomous agents and Cognitive Architectures. It is compatible with a range of LLM models and offers flexibility to run different models for different agents based on specific needs. The framework is designed for seamless extensibility and database-flexibility, making it an ideal playground for various AI projects. AgentForge is a beta-testing ground and future-proof hub for crafting intelligent, model-agnostic autonomous agents.

nanobrowser

Nanobrowser is an open-source AI web automation tool that runs in your browser. It is a free alternative to OpenAI Operator with flexible LLM options and a multi-agent system. Nanobrowser offers premium web automation capabilities while keeping users in complete control, with features like a multi-agent system, interactive side panel, task automation, follow-up questions, and multiple LLM support. Users can easily download and install Nanobrowser as a Chrome extension, configure agent models, and accomplish tasks such as news summary, GitHub research, and shopping research with just a sentence. The tool uses a specialized multi-agent system powered by large language models to understand and execute complex web tasks. Nanobrowser is actively developed with plans to expand LLM support, implement security measures, optimize memory usage, enable session replay, and develop specialized agents for domain-specific tasks. Contributions from the community are welcome to improve Nanobrowser and build the future of web automation.

intellij-aicoder

AI Coding Assistant is a free and open-source IntelliJ plugin that leverages cutting-edge Language Model APIs to enhance developers' coding experience. It seamlessly integrates with various leading LLM APIs, offers an intuitive toolbar UI, and allows granular control over API requests. With features like Code & Patch Chat, Planning with AI Agents, Markdown visualization, and versatile text processing capabilities, this tool aims to streamline coding workflows and boost productivity.

dot-ai

Dot-ai is a machine learning library designed to simplify the process of building and deploying AI models. It provides a wide range of tools and utilities for data preprocessing, model training, and evaluation. With Dot-ai, users can easily create and experiment with various machine learning algorithms without the need for extensive coding knowledge. The library is built with scalability and performance in mind, making it suitable for both small-scale projects and large-scale applications. Whether you are a beginner or an experienced data scientist, Dot-ai offers a user-friendly interface to streamline your AI development workflow.

TaskingAI

TaskingAI brings Firebase's simplicity to **AI-native app development**. The platform enables the creation of GPTs-like multi-tenant applications using a wide range of LLMs from various providers. It features distinct, modular functions such as Inference, Retrieval, Assistant, and Tool, seamlessly integrated to enhance the development process. TaskingAI’s cohesive design ensures an efficient, intelligent, and user-friendly experience in AI application development.

kollektiv

Kollektiv is a Retrieval-Augmented Generation (RAG) system designed to enable users to chat with their favorite documentation easily. It aims to provide LLMs with access to the most up-to-date knowledge, reducing inaccuracies and improving productivity. The system utilizes intelligent web crawling, advanced document processing, vector search, multi-query expansion, smart re-ranking, AI-powered responses, and dynamic system prompts. The technical stack includes Python/FastAPI for backend, Supabase, ChromaDB, and Redis for storage, OpenAI and Anthropic Claude 3.5 Sonnet for AI/ML, and Chainlit for UI. Kollektiv is licensed under a modified version of the Apache License 2.0, allowing free use for non-commercial purposes.

OpenDAN-Personal-AI-OS

OpenDAN is an open source Personal AI OS that consolidates various AI modules for personal use. It empowers users to create powerful AI agents like assistants, tutors, and companions. The OS allows agents to collaborate, integrate with services, and control smart devices. OpenDAN offers features like rapid installation, AI agent customization, connectivity via Telegram/Email, building a local knowledge base, distributed AI computing, and more. It aims to simplify life by putting AI in users' hands. The project is in early stages with ongoing development and future plans for user and kernel mode separation, home IoT device control, and an official OpenDAN SDK release.

codegate

CodeGate is a local gateway that enhances the safety of AI coding assistants by ensuring AI-generated recommendations adhere to best practices, safeguarding code integrity, and protecting individual privacy. Developed by Stacklok, CodeGate allows users to confidently leverage AI in their development workflow without compromising security or productivity. It works seamlessly with coding assistants, providing real-time security analysis of AI suggestions. CodeGate is designed with privacy at its core, keeping all data on the user's machine and offering complete control over data.

For similar tasks

Pathway-AI-Bootcamp

Welcome to the μLearn x Pathway Initiative, an exciting adventure into the world of Artificial Intelligence (AI)! This comprehensive course, developed in collaboration with Pathway, will empower you with the knowledge and skills needed to navigate the fascinating world of AI, with a special focus on Large Language Models (LLMs).

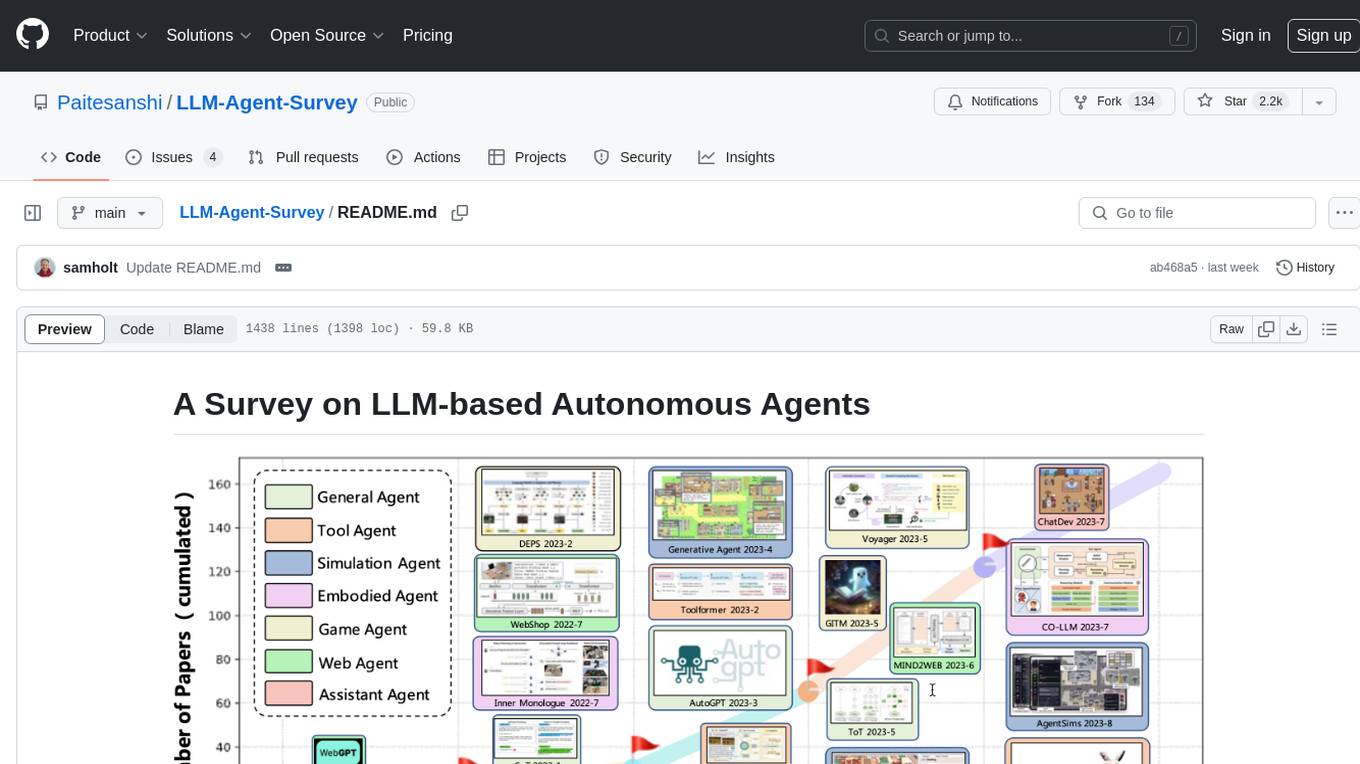

LLM-Agent-Survey

Autonomous agents are designed to achieve specific objectives through self-guided instructions. With the emergence and growth of large language models (LLMs), there is a growing trend in utilizing LLMs as fundamental controllers for these autonomous agents. This repository conducts a comprehensive survey study on the construction, application, and evaluation of LLM-based autonomous agents. It explores essential components of AI agents, application domains in natural sciences, social sciences, and engineering, and evaluation strategies. The survey aims to be a resource for researchers and practitioners in this rapidly evolving field.

genkit

Firebase Genkit (beta) is a framework with powerful tooling to help app developers build, test, deploy, and monitor AI-powered features with confidence. Genkit is cloud optimized and code-centric, integrating with many services that have free tiers to get started. It provides unified API for generation, context-aware AI features, evaluation of AI workflow, extensibility with plugins, easy deployment to Firebase or Google Cloud, observability and monitoring with OpenTelemetry, and a developer UI for prototyping and testing AI features locally. Genkit works seamlessly with Firebase or Google Cloud projects through official plugins and templates.

vector-cookbook

The Vector Cookbook is a collection of recipes and sample application starter kits for building AI applications with LLMs using PostgreSQL and Timescale Vector. Timescale Vector enhances PostgreSQL for AI applications by enabling the storage of vector, relational, and time-series data with faster search, higher recall, and more efficient time-based filtering. The repository includes resources, sample applications like TSV Time Machine, and guides for creating, storing, and querying OpenAI embeddings with PostgreSQL and pgvector. Users can learn about Timescale Vector, explore performance benchmarks, and access Python client libraries and tutorials.

cogai

The W3C Cognitive AI Community Group focuses on advancing Cognitive AI through collaboration on defining use cases, open source implementations, and application areas. The group aims to demonstrate the potential of Cognitive AI in various domains such as customer services, healthcare, cybersecurity, online learning, autonomous vehicles, manufacturing, and web search. They work on formal specifications for chunk data and rules, plausible knowledge notation, and neural networks for human-like AI. The group positions Cognitive AI as a combination of symbolic and statistical approaches inspired by human thought processes. They address research challenges including mimicry, emotional intelligence, natural language processing, and common sense reasoning. The long-term goal is to develop cognitive agents that are knowledgeable, creative, collaborative, empathic, and multilingual, capable of continual learning and self-awareness.

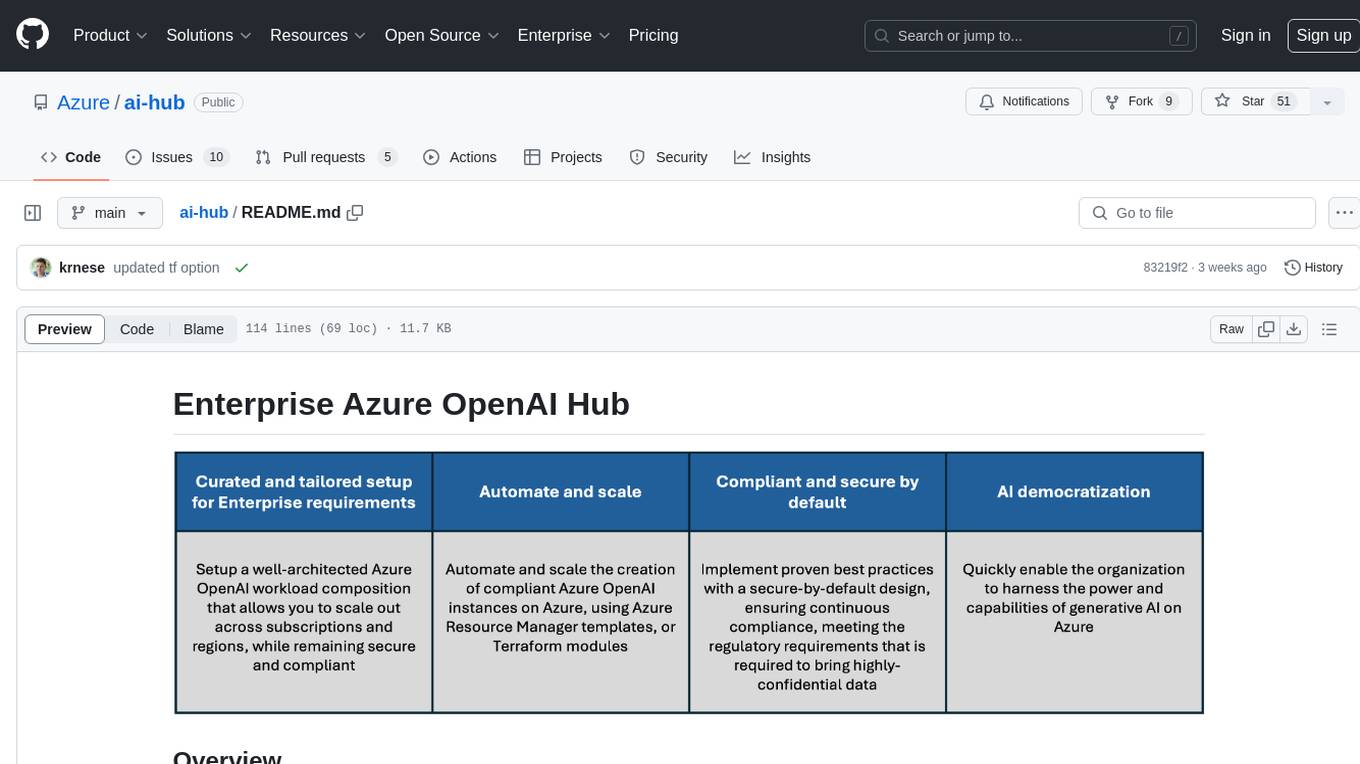

ai-hub

The Enterprise Azure OpenAI Hub is a comprehensive repository designed to guide users through the world of Generative AI on the Azure platform. It offers a structured learning experience to accelerate the transition from concept to production in an Enterprise context. The hub empowers users to explore various use cases with Azure services, ensuring security and compliance. It provides real-world examples and playbooks for practical insights into solving complex problems and developing cutting-edge AI solutions. The repository also serves as a library of proven patterns, aligning with industry standards and promoting best practices for secure and compliant AI development.

earth2studio

Earth2Studio is a Python-based package designed to enable users to quickly get started with AI weather and climate models. It provides access to pre-trained models, diagnostic tools, data sources, IO utilities, perturbation methods, and sample workflows for building custom weather prediction workflows. The package aims to empower users to explore AI-driven meteorology through modular components and seamless integration with other Nvidia packages like Modulus.

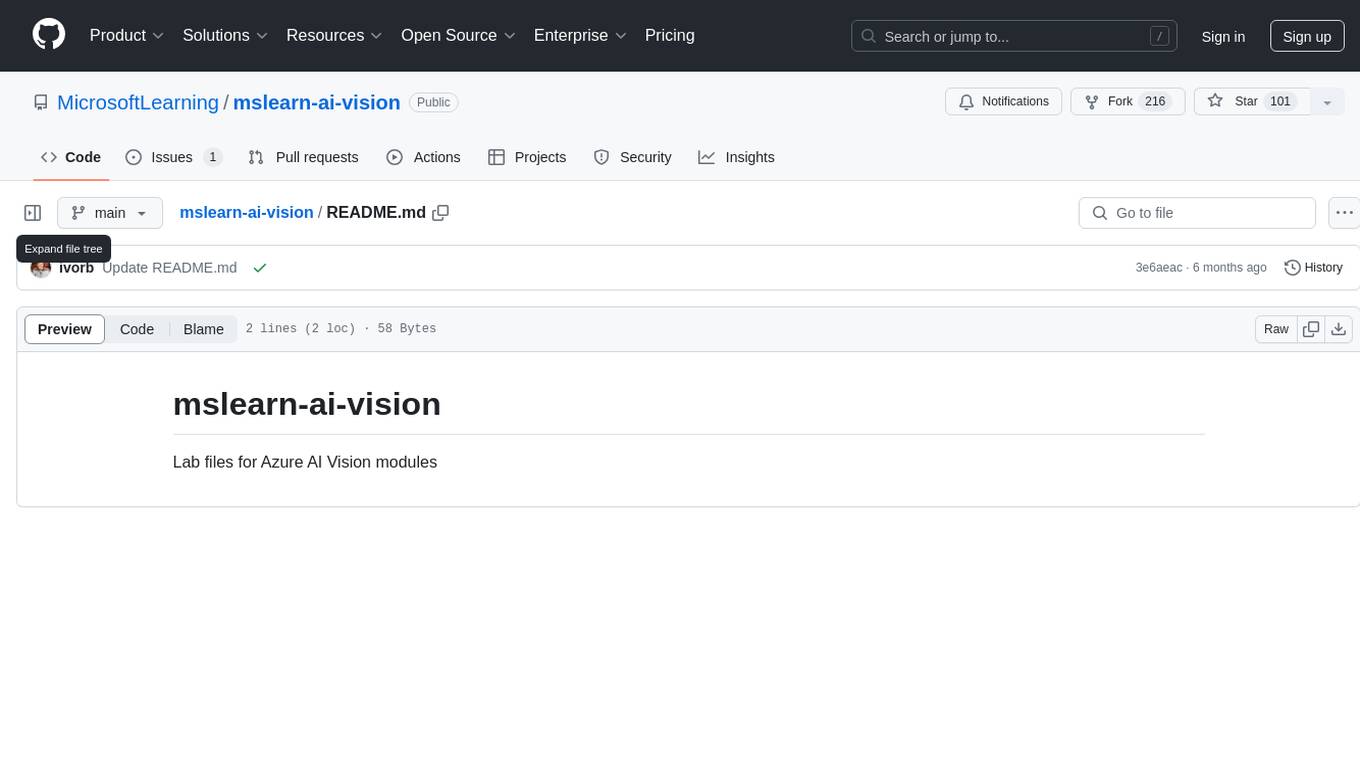

mslearn-ai-vision

The 'mslearn-ai-vision' repository contains lab files for Azure AI Vision modules. It provides hands-on exercises and resources for learning about AI vision capabilities on the Azure platform. The labs cover topics such as image recognition, object detection, and image classification using Azure's AI services. By following the lab exercises, users can gain practical experience in building and deploying AI vision solutions in the cloud.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aims to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobuffs and python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use-cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, established benchmark, evaluation, and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm python package to help you assess the performance of your LLM in trustworthiness more quickly. For more details about TrustLLM, please refer to project website.

AI-YinMei

AI-YinMei is an AI virtual anchor Vtuber development tool (N card version). It supports fastgpt knowledge base chat dialogue, a complete set of solutions for LLM large language models: [fastgpt] + [one-api] + [Xinference], supports docking bilibili live broadcast barrage reply and entering live broadcast welcome speech, supports Microsoft edge-tts speech synthesis, supports Bert-VITS2 speech synthesis, supports GPT-SoVITS speech synthesis, supports expression control Vtuber Studio, supports painting stable-diffusion-webui output OBS live broadcast room, supports painting picture pornography public-NSFW-y-distinguish, supports search and image search service duckduckgo (requires magic Internet access), supports image search service Baidu image search (no magic Internet access), supports AI reply chat box [html plug-in], supports AI singing Auto-Convert-Music, supports playlist [html plug-in], supports dancing function, supports expression video playback, supports head touching action, supports gift smashing action, supports singing automatic start dancing function, chat and singing automatic cycle swing action, supports multi scene switching, background music switching, day and night automatic switching scene, supports open singing and painting, let AI automatically judge the content.