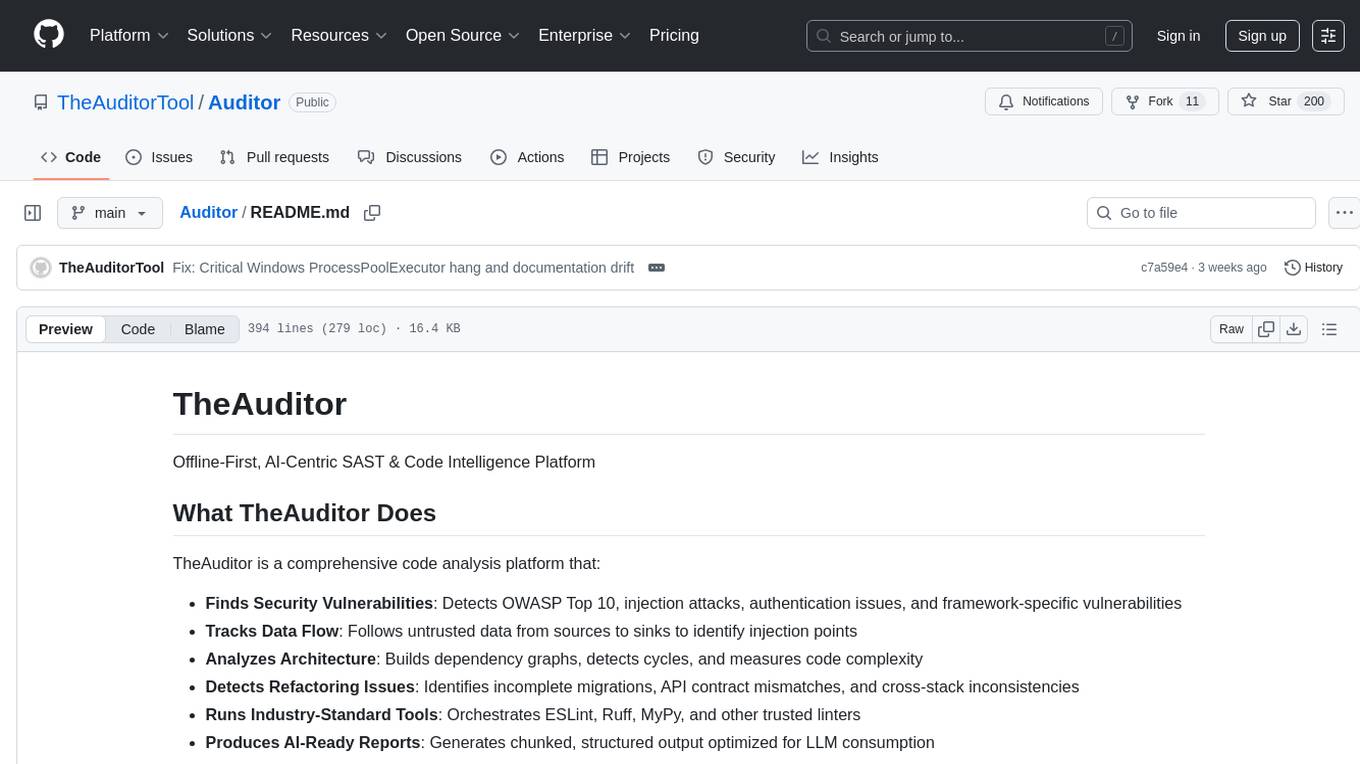

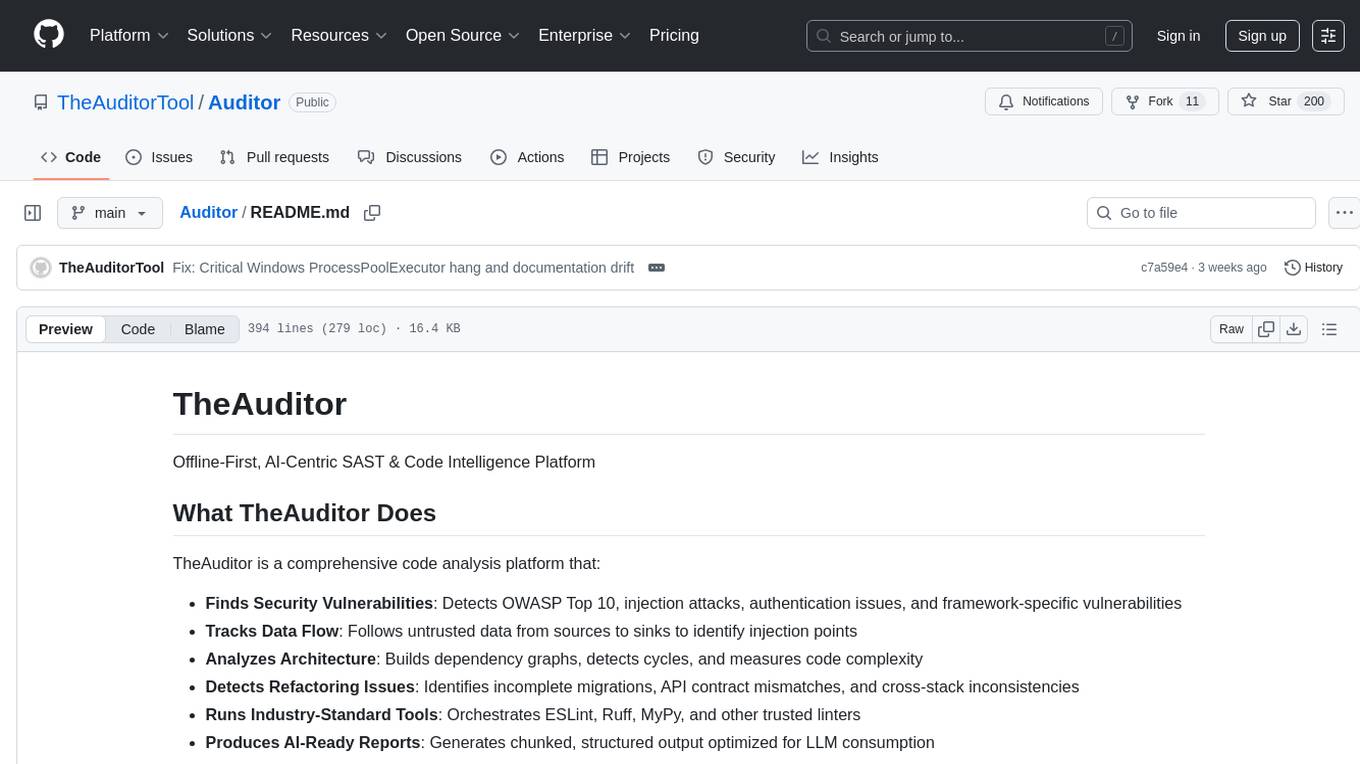

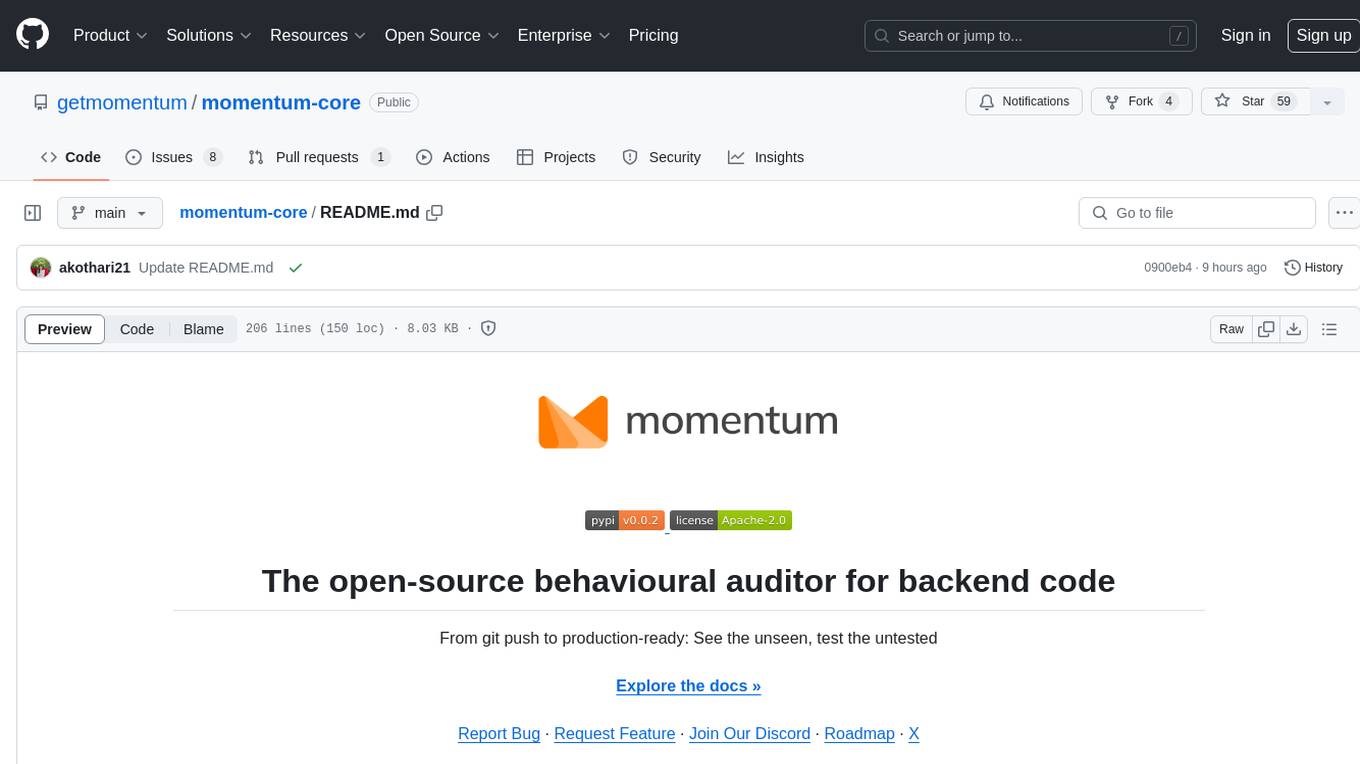

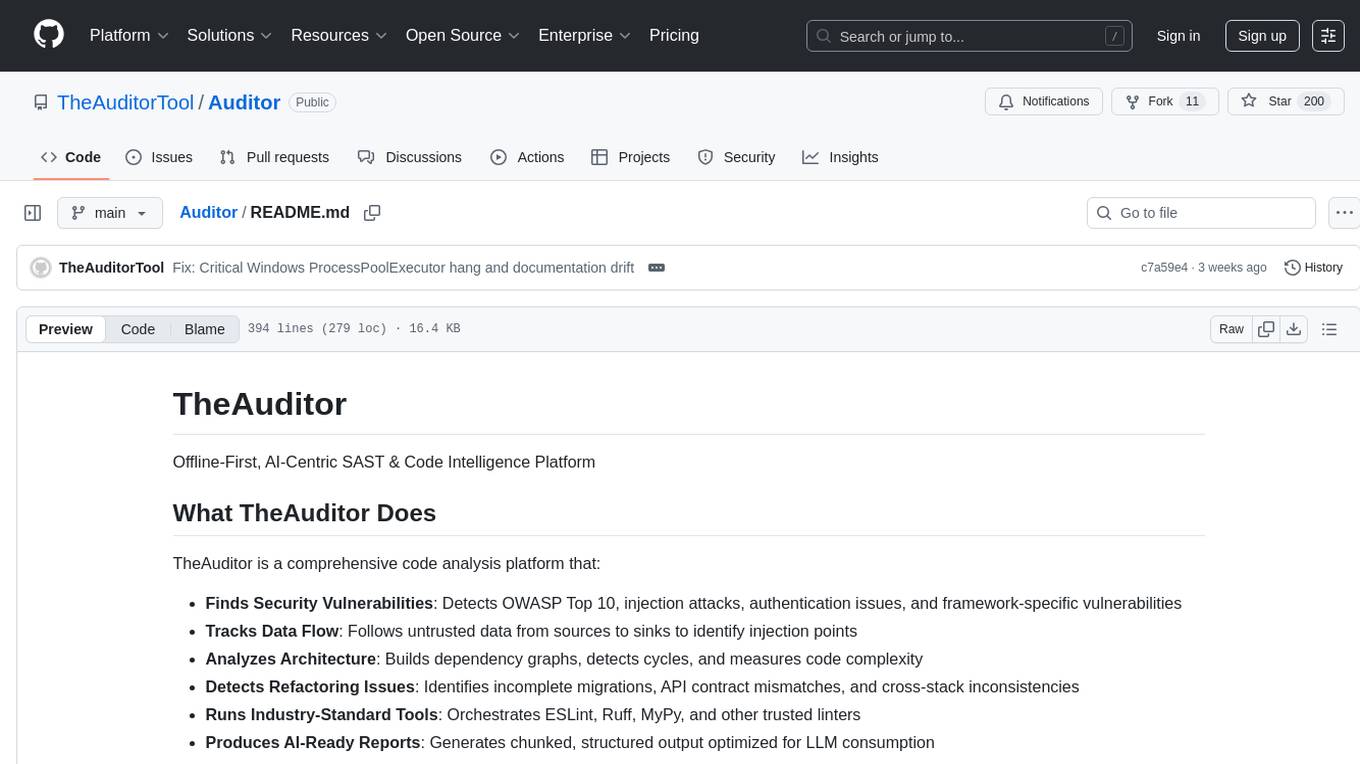

Auditor

Antidote to VibeCoding

Stars: 199

TheAuditor is an offline-first, AI-centric SAST & code intelligence platform designed to find security vulnerabilities, track data flow, analyze architecture, detect refactoring issues, run industry-standard tools, and produce AI-ready reports. It is specifically tailored for AI-assisted development workflows, providing verifiable ground truth for developers and AI assistants. The tool orchestrates verifiable data, focuses on AI consumption, and initially targets the Python and Node.js ecosystems, with a modular architecture open to other languages. The comprehensive analysis pipeline includes stages for foundation, concurrent analysis, and final aggregation, offering features like refactoring detection, dependency graph visualization, and optional insights analysis. The README also explains why the tool's output can conflict with antivirus software, what performance impact to expect, and how to troubleshoot common issues. TheAuditor aims to address the lack of ground truth in AI development workflows and make AI development trustworthy by providing accurate security analysis and code verification.

README:

Offline-First, AI-Centric SAST & Code Intelligence Platform

TheAuditor is a comprehensive code analysis platform that:

- Finds Security Vulnerabilities: Detects OWASP Top 10, injection attacks, authentication issues, and framework-specific vulnerabilities

- Tracks Data Flow: Follows untrusted data from sources to sinks to identify injection points

- Analyzes Architecture: Builds dependency graphs, detects cycles, and measures code complexity

- Detects Refactoring Issues: Identifies incomplete migrations, API contract mismatches, and cross-stack inconsistencies

- Runs Industry-Standard Tools: Orchestrates ESLint, Ruff, MyPy, and other trusted linters

- Produces AI-Ready Reports: Generates chunked, structured output optimized for LLM consumption

Unlike traditional SAST tools, TheAuditor is designed specifically for AI-assisted development workflows, providing ground truth that both developers and AI assistants can trust.
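To make the "sources to sinks" idea concrete, here is a minimal sketch of taint propagation over a toy list of assignments. It is an illustration only and not TheAuditor's implementation; the `Assignment` type, the `find_tainted_sinks` function, and the sample variable names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Assignment:
    """A toy statement: `target` is computed from the variables in `inputs`."""
    target: str
    inputs: tuple


def find_tainted_sinks(assignments, sources, sinks):
    """Return the sink names reachable from any untrusted source.

    Taint spreads from the inputs of an assignment to its target; the loop
    repeats until no new variable becomes tainted (a simple fixed point).
    """
    tainted = set(sources)
    changed = True
    while changed:
        changed = False
        for stmt in assignments:
            if stmt.target not in tainted and tainted.intersection(stmt.inputs):
                tainted.add(stmt.target)
                changed = True
    return sorted(tainted.intersection(sinks))


# Hypothetical program: a request parameter flows into a SQL query string.
program = [
    Assignment("user_id", ("request_param",)),
    Assignment("query", ("user_id", "sql_template")),
    Assignment("log_line", ("timestamp",)),
]
result = find_tainted_sinks(
    program, sources={"request_param"}, sinks={"query", "log_line"}
)
print(result)  # ['query']
```

Only `query` is reported, because `log_line` never receives data derived from the untrusted source.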

# Clone TheAuditor to your tools directory (NOT your project!)

cd ~/tools # or wherever you keep development tools

git clone https://github.com/TheAuditorTool/Auditor.git TheAuditor

cd TheAuditor

# Install using your SYSTEM Python (no venv needed!)

pip install -e .

# Verify installation

aud --version

# Navigate to YOUR PROJECT directory (not TheAuditor!)

cd ~/my-project-to-audit

# Setup sandbox environment for THIS project

aud setup-claude --target .

# Run analysis

aud init # First time only

aud full # Complete security audit

# Check results

ls .pf/readthis/

Important Directory Structure:

- `~/tools/TheAuditor/` - Where TheAuditor tool lives

- `~/my-project/` - Your project being analyzed

- `~/my-project/.auditor_venv/` - Sandbox created BY TheAuditor

- `~/my-project/.pf/` - Analysis results

That's it! TheAuditor will analyze your codebase and generate AI-ready reports in .pf/readthis/.

Universal Integration: Just tell your AI assistant to run aud full and read the results from .pf/readthis/. No SDK, no integration, no setup - it just works with Claude, Cursor, Windsurf, Copilot, or any future AI tool that can run commands and read files.
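For anyone scripting this instead of asking an assistant, a minimal sketch of reading the results might look like the following. It assumes only what is stated above, namely that reports land under `.pf/readthis/`; the helper name `collect_report_chunks` is hypothetical, and the sketch makes no assumption about the file names or formats inside that directory.

```python
from pathlib import Path


def collect_report_chunks(project_root):
    """Return (relative_path, text) pairs for every file under .pf/readthis/."""
    report_dir = Path(project_root) / ".pf" / "readthis"
    if not report_dir.is_dir():
        return []  # no audit has been run in this project yet
    chunks = []
    for path in sorted(report_dir.rglob("*")):
        if path.is_file():
            text = path.read_text(encoding="utf-8", errors="replace")
            chunks.append((path.relative_to(report_dir).as_posix(), text))
    return chunks


# List whatever the last audit produced in the current directory.
for name, text in collect_report_chunks("."):
    print(name, len(text))
```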

TheAuditor is the antidote. It was built to stop "vibe coding" your way into security and quality assurance nightmares. Its mission is to provide an incorruptible source of ground truth for both the developer and their AI assistant.

Its philosophy is a direct rejection of the current trend:

- It Orchestrates Verifiable Data. The tool runs a suite of industry-standard linters and security scanners, preserving the raw, unfiltered output from each. It does not summarize or interpret this core data.

- It's Built for AI Consumption. The tool's primary engineering challenge is to adapt this raw truth into structured, AI-digestible chunks. It ensures the AI works with facts, not faulty summaries.

- It's Focused and Extensible. The initial focus is on Python and the Node.js ecosystem, but the modular, pattern-based architecture is designed to invite contributions for other languages and frameworks.

TheAuditor is not a replacement for a formal third-party audit. It is an engineering tool designed to catch the vast majority of glaring issues—from the OWASP Top 10 to common framework anti-patterns. Its core commitment is to never cross the line from verifiable truth into semantic interpretation.

Every AI assistant - Claude Code, Cursor, Windsurf, Copilot - they're all blind. They can write code but can't verify it's secure, correct, or complete. TheAuditor gives them eyes.

- Tool Agnostic - Works with ANY AI assistant or IDE
  - `aud full` from any terminal
  - Results in `.pf/readthis/` ready for any LLM

- AI Becomes Self-Correcting
  - AI writes code
  - AI runs `aud full`
  - AI reads the ground truth
  - AI fixes its own mistakes
  - Recursive loop until actually correct

- No Human Intervention Required
  - You never touch the terminal
  - The AI runs everything
  - You just review and approve

Human: "Add authentication to my app"

↓

AI: *writes auth code*

↓

AI: `aud full`

↓

AI: *reads .pf/readthis/*

↓

AI: "Found 3 security issues, fixing..."

↓

AI: *fixes issues*

↓

AI: `aud full`

↓

AI: "Clean. Authentication complete."
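The loop above can be sketched in a few lines. This is a hedged illustration, not part of TheAuditor: `audit_until_clean`, `run_audit`, and `apply_fixes` are hypothetical names, and the two callables stand in for "run `aud full` and read `.pf/readthis/`" and "let the assistant edit the code".

```python
def audit_until_clean(run_audit, apply_fixes, max_rounds=5):
    """Repeat audit -> fix until the audit reports no findings.

    run_audit:   callable returning a list of findings (empty means clean).
    apply_fixes: callable that receives the findings and edits the code.
    Returns the number of audit rounds used, or raises if the limit is hit.
    """
    for round_number in range(1, max_rounds + 1):
        findings = run_audit()
        if not findings:
            return round_number
        apply_fixes(findings)
    raise RuntimeError(f"still failing after {max_rounds} audit rounds")


# Stand-in callables: a fake audit that reports three issues once, then none.
pending = [["sql-injection", "hardcoded-secret", "missing-auth-check"]]
rounds = audit_until_clean(
    run_audit=lambda: pending[0],
    apply_fixes=lambda findings: pending.__setitem__(0, []),
)
print(rounds)  # 2
```

The `max_rounds` cap is a design choice in this sketch: it keeps a finding that the assistant cannot fix from turning the loop into an endless retry.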

Every developer using AI assistants has this problem:

- AI writes insecure code

- AI introduces bugs

- AI doesn't see the full picture

- AI can't verify its work

TheAuditor solves ALL of this. It's not a "nice to have" - it's the missing piece that makes AI development actually trustworthy.

I've built the tool that makes AI assistants production-ready. This isn't competing with SonarQube/SemGrep. This is creating an entirely new category: AI Development Verification Tools.

My background is in systems architecture/infrastructure, not professional software development. I have only been "coding/developing" for a little over 3 months. This gives me a unique perspective: I can see the forest, but I'm blind to the individual trees of the code. After immersing myself for 500+ hours in AI-assisted development, I concluded that the entire ecosystem is built on a fundamentally flawed premise: it lacks a source of ground truth.

From start to launch on GitHub took me about a month across 250 active hours in front of the computer, for anyone that wonders or cares :P

Most AI development tools try to solve the wrong problem. They focus on perfecting the input—better prompts, more context—but they ignore the critical issue of compounding deviation.

An LLM is a powerful statistical engine, but it doesn't understand. The modern AI workflow forces this engine to play a high-stakes game of "telephone," where the original intent is corrupted at every step:

- A human has an idea.

- An AI refines it into a prompt.

- Other tools add their own interpretive layers.

- The primary AI assistant (e.g., Claude Opus) interprets the final, distorted prompt to generate code.

As a rookie "developer," the only thing I could trust was the raw output: the code and its errors. In a vacuum of deep programming knowledge, these facts were my only anchors.

This architectural flaw is amplified by two dangerous behaviours inherent to AI assistants:

- Security Theater: AI assistants are optimized to "make it work," which often means introducing rampant security anti-patterns like hardcoded credentials, disabled authentication, and the pervasive use of `as any` in TypeScript. This creates a dangerous illusion of progress.

- Context Blindness: With aggressive context compaction, an AI never sees the full picture. It works with fleeting snapshots of code, forcing it to make assumptions instead of decisions based on facts.

TheAuditor runs a comprehensive audit through multiple analysis phases organized into three stages: a sequential foundation, three parallel analysis tracks, and a sequential final aggregation:

STAGE 1: Foundation (Sequential)

1. Index Repository - Build complete code inventory and SQLite database

2. Detect Frameworks - Identify Django, Flask, React, Vue, etc.

STAGE 2: Concurrent Analysis (3 parallel tracks)

Track A - Network Operations:

3. Check Dependencies - Analyze package versions and known vulnerabilities

4. Fetch Documentation - Extract docstrings and comments

5. Summarize Documentation - Create AI-readable documentation chunks

Track B - Code Analysis:

6. Create Workset - Identify all source files for analysis

7. Run Linting - Execute Ruff, MyPy, ESLint as configured

8. Detect Patterns - Apply 100+ security pattern rules

Track C - Graph & Flow:

9. Build Graph - Create dependency graph structure

10. Analyze Graph - Find cycles, measure complexity

11. Visualize Graph - Generate multiple graph views

12. Taint Analysis - Track data flow from sources to sinks

STAGE 3: Final Aggregation (Sequential)

13. Factual Correlation Engine - Cross-reference findings across all tools

14. Generate Report - Produce final AI-consumable chunks in .pf/readthis/

15. Summary Generation - Create executive summary of findings
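The stage layout above (sequential, then parallel tracks, then sequential) can be sketched with the standard library. This is an assumption-laden illustration rather than TheAuditor's actual orchestrator: `run_pipeline` and `make_step` are hypothetical names, and each phase is replaced by a placeholder that just returns its label.

```python
from concurrent.futures import ThreadPoolExecutor


def run_pipeline(foundation_steps, tracks, aggregation_steps):
    """Run foundation steps in order, tracks in parallel, then aggregation in order.

    Each step is a zero-argument callable returning a label; each track is a
    list of steps that run sequentially inside that track.
    """
    log = [fn() for fn in foundation_steps]               # Stage 1: sequential

    def run_track(steps):
        return [fn() for fn in steps]

    # Stage 2: one worker per track; pool.map yields results in track order.
    with ThreadPoolExecutor(max_workers=len(tracks)) as pool:
        for track_log in pool.map(run_track, tracks):
            log.extend(track_log)

    log.extend(fn() for fn in aggregation_steps)          # Stage 3: sequential
    return log


def make_step(name):
    """Build a placeholder step that just returns its own name."""
    return lambda: name


order = run_pipeline(
    foundation_steps=[make_step("index"), make_step("detect_frameworks")],
    tracks=[
        [make_step("deps"), make_step("docs"), make_step("summarize_docs")],
        [make_step("workset"), make_step("lint"), make_step("patterns")],
        [make_step("graph_build"), make_step("graph_analyze"),
         make_step("graph_viz"), make_step("taint")],
    ],
    aggregation_steps=[make_step("correlate"), make_step("report"),
                       make_step("summary")],
)
print(len(order), order[0], order[-1])  # 15 index summary
```

Stage 3 only starts after the `with` block exits, which is what guarantees that every track has finished before findings are correlated.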

TheAuditor detects incomplete refactorings and cross-stack inconsistencies using correlation rules:

# Analyze refactoring impact

aud refactor --file models/Product.ts --line 42

# Auto-detect from migrations

aud refactor --auto-detect

# Analyze workset

aud refactor --workset --output refactor_report.json

Detects:

- Data Model Changes: Fields moved between tables

- API Contract Mismatches: Frontend/backend inconsistencies

- Foreign Key Updates: Incomplete reference changes

- Cross-Stack Issues: TypeScript interfaces not matching models

Users can define custom rules in /correlations/rules/ to detect project-specific patterns; an example is provided in refactoring.yaml.
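As a rough illustration of what an API contract mismatch check boils down to, the sketch below compares the field names of a backend model with those of a frontend interface. It is not TheAuditor's rule engine and does not reflect the format of `refactoring.yaml`; `find_contract_mismatches` and the sample fields are hypothetical.

```python
def find_contract_mismatches(backend_fields, frontend_fields):
    """Compare field names and report what each side is missing."""
    backend, frontend = set(backend_fields), set(frontend_fields)
    return {
        "missing_in_frontend": sorted(backend - frontend),
        "missing_in_backend": sorted(frontend - backend),
    }


# Hypothetical example: `price` was moved off the Product model during a
# refactor, but the TypeScript interface still expects it.
product_model = ["id", "name", "variant_id"]
product_interface = ["id", "name", "price"]
report = find_contract_mismatches(product_model, product_interface)
print(report)
# {'missing_in_frontend': ['variant_id'], 'missing_in_backend': ['price']}
```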

TheAuditor now includes rich visual intelligence for dependency graphs using Graphviz:

- Multiple View Modes: Full graph, cycles-only, hotspots, architectural layers, impact analysis

- Visual Intelligence Encoding:
  - Node colors indicate programming language (Python=blue, JS=yellow, TypeScript=blue)
  - Node size shows importance based on connectivity
  - Red highlighting for dependency cycles
  - Border thickness encodes code churn

- Actionable Insights: Focus on what matters with filtered views

- AI-Readable Output: Generate SVG visualizations that LLMs can analyze
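The encoding described above can be illustrated by generating Graphviz DOT text by hand. This is a sketch under stated assumptions, not the code behind `aud graph viz`: the colors come from the list above, while `to_dot`, the width formula, and the sample file names are hypothetical.

```python
LANGUAGE_COLORS = {"python": "blue", "javascript": "yellow", "typescript": "blue"}


def to_dot(edges, languages, cycle_edges=frozenset()):
    """Render a dependency graph as Graphviz DOT text.

    edges:       iterable of (importer, imported) pairs.
    languages:   mapping of node name -> language key in LANGUAGE_COLORS.
    cycle_edges: edges that belong to a dependency cycle (drawn in red).
    """
    edges = list(edges)
    degree = {}
    for src, dst in edges:
        degree[src] = degree.get(src, 0) + 1
        degree[dst] = degree.get(dst, 0) + 1

    lines = ["digraph deps {"]
    for node in sorted(degree):
        color = LANGUAGE_COLORS.get(languages.get(node, ""), "gray")
        width = 1.0 + 0.25 * degree[node]  # assumed formula: more edges, larger node
        lines.append(f'  "{node}" [color={color}, width={width:.2f}];')
    for src, dst in edges:
        attrs = " [color=red]" if (src, dst) in cycle_edges else ""
        lines.append(f'  "{src}" -> "{dst}"{attrs};')
    lines.append("}")
    return "\n".join(lines)


edges = [("app.py", "auth.py"), ("auth.py", "app.py"), ("app.py", "ui.ts")]
dot = to_dot(
    edges,
    {"app.py": "python", "auth.py": "python", "ui.ts": "typescript"},
    cycle_edges={("app.py", "auth.py"), ("auth.py", "app.py")},
)
print(dot)
```

Saving the printed text to a file and running Graphviz's `dot -Tsvg` on it would produce an SVG in which the two-file cycle stands out in red.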

# Basic visualization

aud graph viz

# Show only dependency cycles

aud graph viz --view cycles --include-analysis

# Top 5 hotspots with connections

aud graph viz --view hotspots --top-hotspots 5

# Architectural layers visualization

aud graph viz --view layers --format svg

# Impact analysis for a specific file

aud graph viz --view impact --impact-target "src/auth.py"

Separate from the core Truth Courier modules, TheAuditor offers optional Insights for technical scoring:

# Run insights analysis on existing audit data

aud insights --mode all

# ML-powered insights (requires: pip install -e ".[ml]")

aud insights --mode ml --ml-train

# Graph health metrics and recommendations

aud insights --mode graph

# Generate comprehensive insights report

aud insights --output insights_report.json

Insights modules add interpretive scoring on top of factual data:

- Health Scores: Architecture quality metrics

- Severity Classification: Risk assessment beyond raw findings

- Recommendations: Actionable improvement suggestions

- ML Predictions: Pattern-based issue prediction

TheAuditor is a security scanner that identifies vulnerabilities in your code. By its very nature, it must:

- Read and analyze security vulnerabilities - SQL injection, XSS attacks, hardcoded passwords

- Write these findings to disk - Creating reports with exact code snippets as evidence

- Process files rapidly - Scanning entire codebases in parallel for efficiency

This creates an inherent conflict with antivirus software, which sees these exact same behaviours as potentially malicious. When TheAuditor finds and documents a SQL injection vulnerability in your code, your antivirus sees us writing "malicious SQL injection patterns" to disk - because that's literally what we're doing, just for legitimate security analysis purposes.

When running TheAuditor, you may notice:

- Increased antivirus CPU usage - Your AV will scan every file we read AND every finding we write

- Approximately 10-50% performance reduction, depending on your antivirus software - Both TheAuditor and your AV are reading the same files simultaneously

- Occasional delays or pauses - Your AV may temporarily quarantine our output files for deeper inspection

This is not a bug or inefficiency in TheAuditor - it's the unavoidable consequence of two security tools doing their jobs simultaneously.

We do NOT recommend:

- ❌ Disabling your antivirus software

- ❌ Adding TheAuditor to your exclusion/whitelist

- ❌ Reducing your system's security in any way

Your antivirus is correctly identifying that we're writing security vulnerability patterns to disk. That's exactly what we do - we find vulnerabilities and document them. The fact that your AV is suspicious of this behavior means it's working properly.

- Intelligent resource management - We automatically reduce parallel workers when system resources are constrained

- Pattern defanging - We insert invisible characters into dangerous patterns to reduce false positives

- Adaptive performance - We monitor CPU and RAM usage to avoid overwhelming your system
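Pattern defanging can be pictured with a small sketch. The README does not say which characters TheAuditor inserts or where, so everything here is an assumption: the zero-width space (U+200B), the `defang` and `refang` helpers, and the sample evidence string are illustrative only.

```python
ZERO_WIDTH_SPACE = "\u200b"


def defang(snippet, risky_tokens):
    """Insert a zero-width space after the first character of each risky token.

    The text looks the same to a human reader, but it no longer matches a
    byte-for-byte signature of the original token.
    """
    for token in risky_tokens:
        if len(token) > 1:
            broken = token[0] + ZERO_WIDTH_SPACE + token[1:]
            snippet = snippet.replace(token, broken)
    return snippet


def refang(snippet):
    """Remove the zero-width spaces to recover the original text."""
    return snippet.replace(ZERO_WIDTH_SPACE, "")


evidence = "SELECT * FROM users WHERE id = '1' OR 1=1"
safe = defang(evidence, ["SELECT", "OR 1=1"])
print("SELECT" in safe)            # False
print(refang(safe) == evidence)    # True
```

One caveat of this simple version: `refang` would also strip zero-width spaces that were already present in the analyzed source.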

This is not a problem unique to TheAuditor. Every legitimate security scanner faces this same issue:

- GitHub Advanced Security runs in isolated cloud containers to avoid this

- Commercial SAST tools require enterprise AV exceptions

- Popular scanners explicitly document AV conflicts in their installation guides

The fundamental paradox: A tool that finds security vulnerabilities must write those vulnerabilities to disk, which makes it indistinguishable from malware to an antivirus. There is no technical solution to this - it's the inherent nature of security analysis tools.

- Run TheAuditor when system load is low for best performance

- Expect the analysis to take longer than the raw processing time due to AV overhead

- If your AV quarantines output files in `.pf/`, you may need to restore them manually

- Consider running TheAuditor in a controlled environment if performance is critical

We believe in complete transparency about these limitations. This interaction with antivirus software is not a flaw in TheAuditor - it's proof that both your AV and our scanner are doing exactly what they're designed to do: identify and handle potentially dangerous code patterns.

- Cause: Running `aud init` on a fresh project

- Fix: Update TheAuditor and reinstall:

cd ~/tools/TheAuditor

git pull

pip install -e .

- Cause: Missing AST analysis tools

- Fix: Reinstall the sandbox in your project:

cd ~/my-project

rm -rf .auditor_venv

aud setup-claude --target .

- Cause: Slow compilation of C extensions

- Fix: Update TheAuditor or manually install:

cd ~/my-project

.auditor_venv/bin/pip install tree-sitter tree-sitter-language-pack

- Issue: Created your own venv before installing

- Fix: Exit all venvs and use system Python:

deactivate # Exit any active venv

cd ~/tools/TheAuditor

pip install -e . # Use system pip

- How to Use - Complete installation and usage guide

- Architecture - Technical architecture and design patterns

- Contributing - How to contribute to TheAuditor

- Roadmap - Future development plans

We welcome contributions! See CONTRIBUTING.md for:

- How to add new language support

- Creating security patterns

- Adding framework-specific rules

- Development guidelines

We especially need help with:

- GraphQL analysis

- Java/Spring support

- Go patterns

- Ruby on Rails detection

- C#/.NET analysis

AGPL-3.0

TheAuditor is AGPL-3.0 licensed. For commercial use, SaaS deployment, or integration into proprietary systems, please contact via GitHub for licensing options.

For issues, questions, or feature requests, please open an issue on our GitHub repository.

TheAuditor: Bringing ground truth to AI-assisted development


Alternative AI tools for Auditor

Similar Open Source Tools


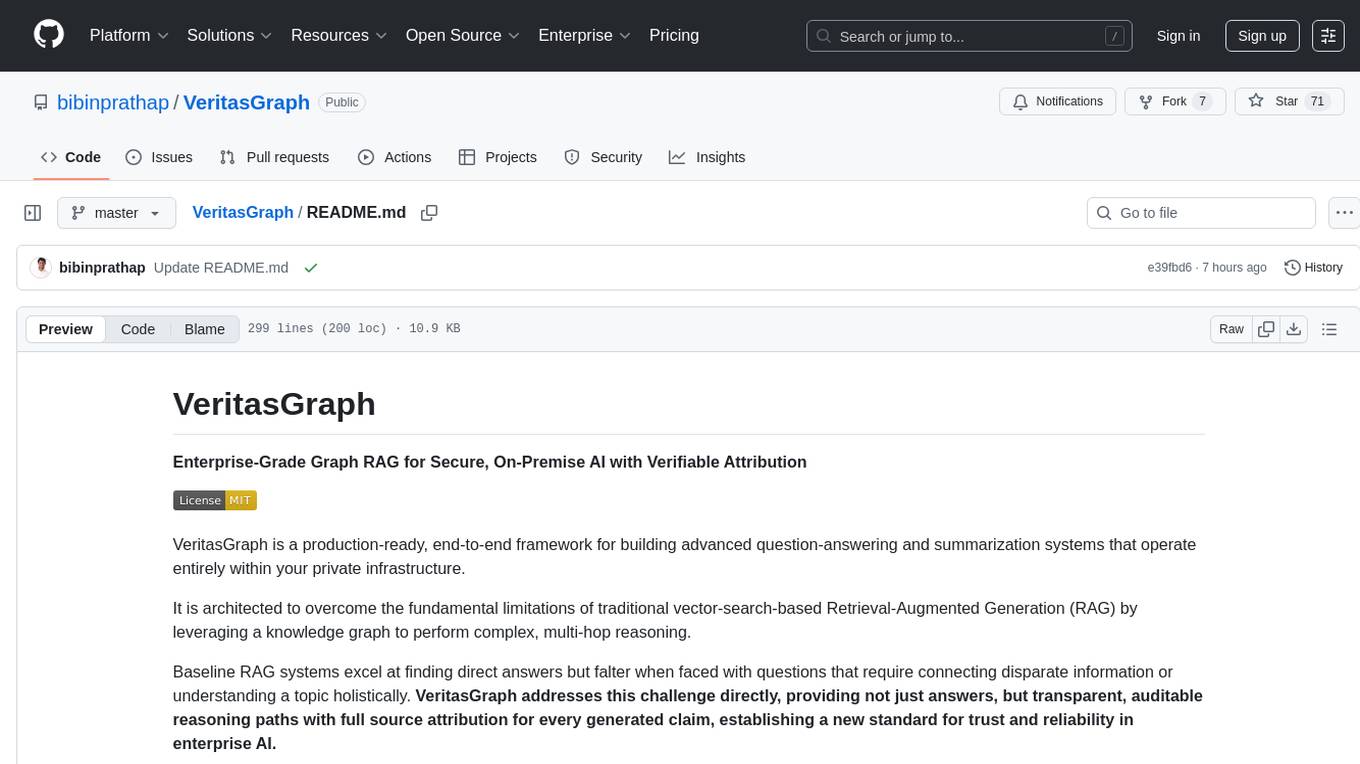

VeritasGraph

VeritasGraph is an enterprise-grade graph RAG framework designed for secure, on-premise AI applications. It leverages a knowledge graph to perform complex, multi-hop reasoning, providing transparent, auditable reasoning paths with full source attribution. The framework excels at answering complex questions that traditional vector search engines struggle with, ensuring trust and reliability in enterprise AI. VeritasGraph offers full control over data and AI models, verifiable attribution for every claim, advanced graph reasoning capabilities, and open-source deployment with sovereignty and customization.

kollektiv

Kollektiv is a Retrieval-Augmented Generation (RAG) system designed to enable users to chat with their favorite documentation easily. It aims to provide LLMs with access to the most up-to-date knowledge, reducing inaccuracies and improving productivity. The system utilizes intelligent web crawling, advanced document processing, vector search, multi-query expansion, smart re-ranking, AI-powered responses, and dynamic system prompts. The technical stack includes Python/FastAPI for backend, Supabase, ChromaDB, and Redis for storage, OpenAI and Anthropic Claude 3.5 Sonnet for AI/ML, and Chainlit for UI. Kollektiv is licensed under a modified version of the Apache License 2.0, allowing free use for non-commercial purposes.

deer-flow

DeerFlow is a community-driven Deep Research framework that combines language models with specialized tools for tasks like web search, crawling, and Python code execution. It supports FaaS deployment and one-click deployment based on Volcengine. The framework includes core capabilities like LLM integration, search and retrieval, RAG integration, MCP seamless integration, human collaboration, report post-editing, and content creation. The architecture is based on a modular multi-agent system with components like Coordinator, Planner, Research Team, and Text-to-Speech integration. DeerFlow also supports interactive mode, human-in-the-loop mechanism, and command-line arguments for customization.

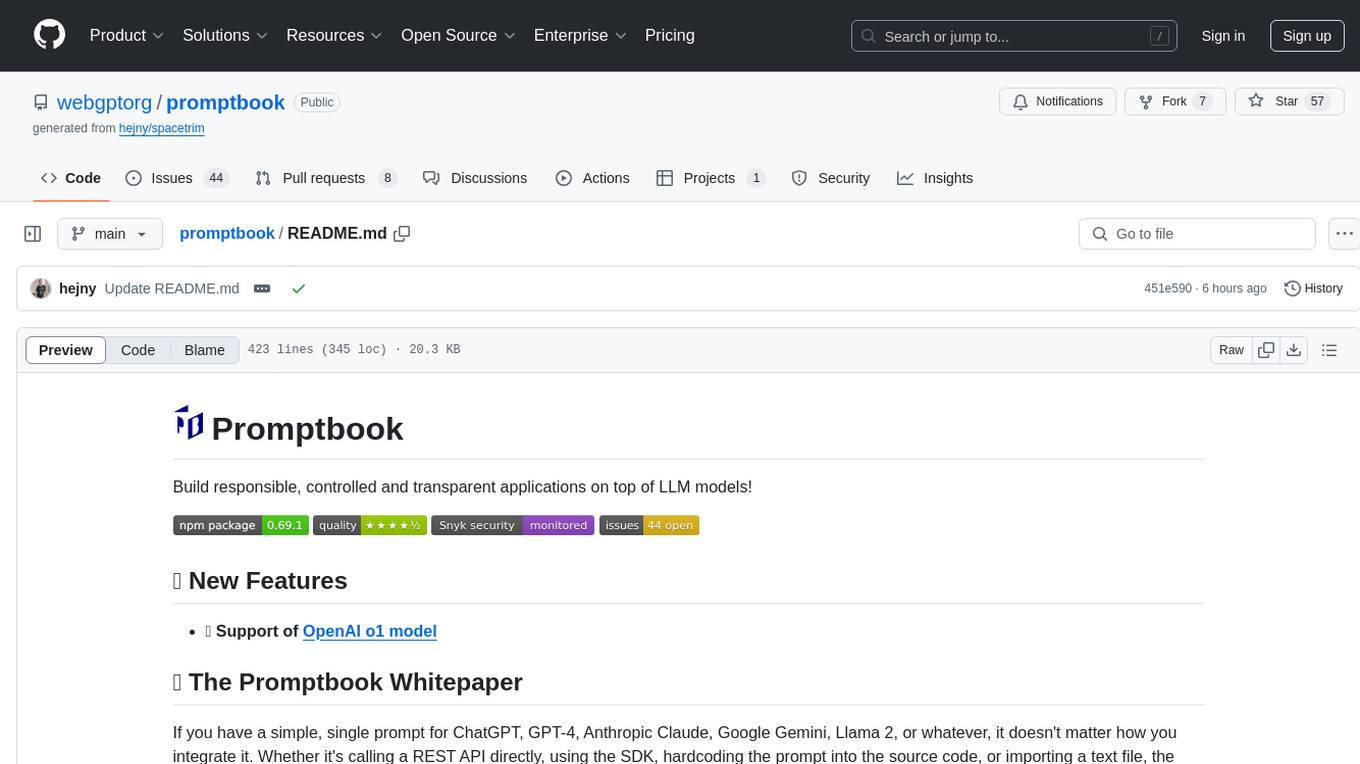

promptbook

Promptbook is a library designed to build responsible, controlled, and transparent applications on top of large language models (LLMs). It helps users overcome limitations of LLMs like hallucinations, off-topic responses, and poor quality output by offering features such as fine-tuning models, prompt-engineering, and orchestrating multiple prompts in a pipeline. The library separates concerns, establishes a common format for prompt business logic, and handles low-level details like model selection and context size. It also provides tools for pipeline execution, caching, fine-tuning, anomaly detection, and versioning. Promptbook supports advanced techniques like Retrieval-Augmented Generation (RAG) and knowledge utilization to enhance output quality.

testzeus-hercules

Hercules is the world’s first open-source testing agent designed to handle the toughest testing tasks for modern web applications. It turns simple Gherkin steps into fully automated end-to-end tests, making testing simple, reliable, and efficient. Hercules adapts to various platforms like Salesforce and is suitable for CI/CD pipelines. It aims to democratize and disrupt test automation, making top-tier testing accessible to everyone. The tool is transparent, reliable, and community-driven, empowering teams to deliver better software. Hercules offers multiple ways to get started, including using PyPI package, Docker, or building and running from source code. It supports various AI models, provides detailed installation and usage instructions, and integrates with Nuclei for security testing and WCAG for accessibility testing. The tool is production-ready, open core, and open source, with plans for enhanced LLM support, advanced tooling, improved DOM distillation, community contributions, extensive documentation, and a bounty program.

spacy-llm

This package integrates Large Language Models (LLMs) into spaCy, featuring a modular system for **fast prototyping** and **prompting**, and turning unstructured responses into **robust outputs** for various NLP tasks, **no training data** required. It supports open-source LLMs hosted on Hugging Face 🤗: Falcon, Dolly, Llama 2, OpenLLaMA, StableLM, Mistral. Integration with LangChain 🦜️🔗 - all `langchain` models and features can be used in `spacy-llm`. Tasks available out of the box: Named Entity Recognition, Text classification, Lemmatization, Relationship extraction, Sentiment analysis, Span categorization, Summarization, Entity linking, Translation, Raw prompt execution for maximum flexibility. Soon: Semantic role labeling. Easy implementation of **your own functions** via spaCy's registry for custom prompting, parsing and model integrations. Map-reduce approach for splitting prompts too long for LLM's context window and fusing the results back together.

MyDeviceAI

MyDeviceAI is a personal AI assistant app for iPhone that brings the power of artificial intelligence directly to the device. It focuses on privacy, performance, and personalization by running AI models locally and integrating with privacy-focused web services. The app offers seamless user experience, web search integration, advanced reasoning capabilities, personalization features, chat history access, and broad device support. It requires macOS, Xcode, CocoaPods, Node.js, and a React Native development environment for installation. The technical stack includes React Native framework, AI models like Qwen 3 and BGE Small, SearXNG integration, Redux for state management, AsyncStorage for storage, Lucide for UI components, and tools like ESLint and Prettier for code quality.

ai_automation_suggester

An integration for Home Assistant that leverages AI models to understand your unique home environment and propose intelligent automations. By analyzing your entities, devices, areas, and existing automations, the AI Automation Suggester helps you discover new, context-aware use cases you might not have considered, ultimately streamlining your home management and improving efficiency, comfort, and convenience. The tool acts as a personal automation consultant, providing actionable YAML-based automations that can save energy, improve security, enhance comfort, and reduce manual intervention. It turns the complexity of a large Home Assistant environment into actionable insights and tangible benefits.

eole

EOLE is an open language modeling toolkit based on PyTorch. It aims to provide a research-friendly approach with a comprehensive yet compact and modular codebase for experimenting with various types of language models. The toolkit includes features such as versatile training and inference, dynamic data transforms, comprehensive large language model support, advanced quantization, efficient finetuning, flexible inference, and tensor parallelism. EOLE is a work in progress with ongoing enhancements in configuration management, command line entry points, reproducible recipes, core API simplification, and plans for further simplification, refactoring, inference server development, additional recipes, documentation enhancement, test coverage improvement, logging enhancements, and broader model support.

UltraRAG

The UltraRAG framework is a researcher and developer-friendly RAG system solution that simplifies the process from data construction to model fine-tuning in domain adaptation. It introduces an automated knowledge adaptation technology system, supporting no-code programming, one-click synthesis and fine-tuning, multidimensional evaluation, and research-friendly exploration work integration. The architecture consists of Frontend, Service, and Backend components, offering flexibility in customization and optimization. Performance evaluation in the legal field shows improved results compared to VanillaRAG, with specific metrics provided. The repository is licensed under Apache-2.0 and encourages citation for support.

Zentara-Code

Zentara Code is an AI coding assistant for VS Code that turns chat instructions into precise, auditable changes in the codebase. It is optimized for speed, safety, and correctness through parallel execution, LSP semantics, and integrated runtime debugging. It offers features like parallel subagents, integrated LSP tools, and runtime debugging for efficient code modification and analysis.

yu-ai-agent

The Yu AI Agent repository is a comprehensive guide for AI development in 2025, focusing on creating the AI Love Master application and the ReAct mode autonomous planning agent, YuManus. It equips programmers with essential AI skills through tutorials on AI model integration, Spring AI core features, prompt engineering, RAG, vector databases, tool calling, MCP, AI agent development, and Cursor AI tools. The project enhances resume and job prospects in the AI-driven job market.

LLMstudio

LLMstudio by TensorOps is a platform that offers prompt engineering tools for accessing models from providers like OpenAI, VertexAI, and Bedrock. It provides features such as Python Client Gateway, Prompt Editing UI, History Management, and Context Limit Adaptability. Users can track past runs, log costs and latency, and export history to CSV. The tool also supports automatic switching to larger-context models when needed. Coming soon features include side-by-side comparison of LLMs, automated testing, API key administration, project organization, and resilience against rate limits. LLMstudio aims to streamline prompt engineering, provide execution history tracking, and enable effortless data export, offering an evolving environment for teams to experiment with advanced language models.

momentum-core

Momentum is an open-source behavioral auditor for backend code that helps developers generate powerful insights into their codebase. It analyzes code behavior, tests it at every git push, and ensures readiness for production. Momentum understands backend code, visualizes dependencies, identifies behaviors, generates test code, runs code in the local environment, and provides debugging solutions. It aims to improve code quality, streamline testing processes, and enhance developer productivity.

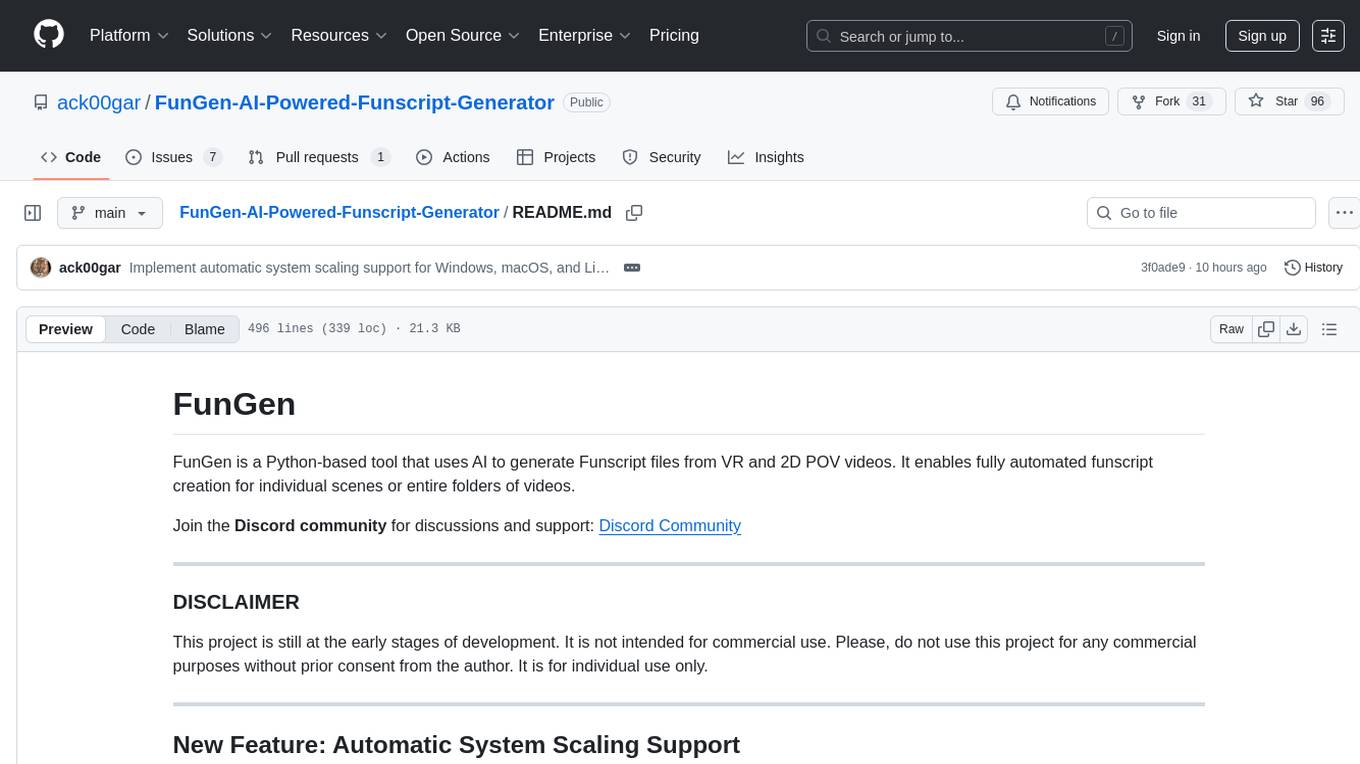

FunGen-AI-Powered-Funscript-Generator

FunGen is a Python-based tool that uses AI to generate Funscript files from VR and 2D POV videos. It enables fully automated funscript creation for individual scenes or entire folders of videos. The tool includes features like automatic system scaling support, quick installation guides for Windows, Linux, and macOS, manual installation instructions, NVIDIA GPU setup, AMD GPU acceleration, YOLO model download, GUI settings, GitHub token setup, command-line usage, modular systems for funscript filtering and motion tracking, performance and parallel processing tips, and more. The project is still in early development stages and is not intended for commercial use.

For similar tasks


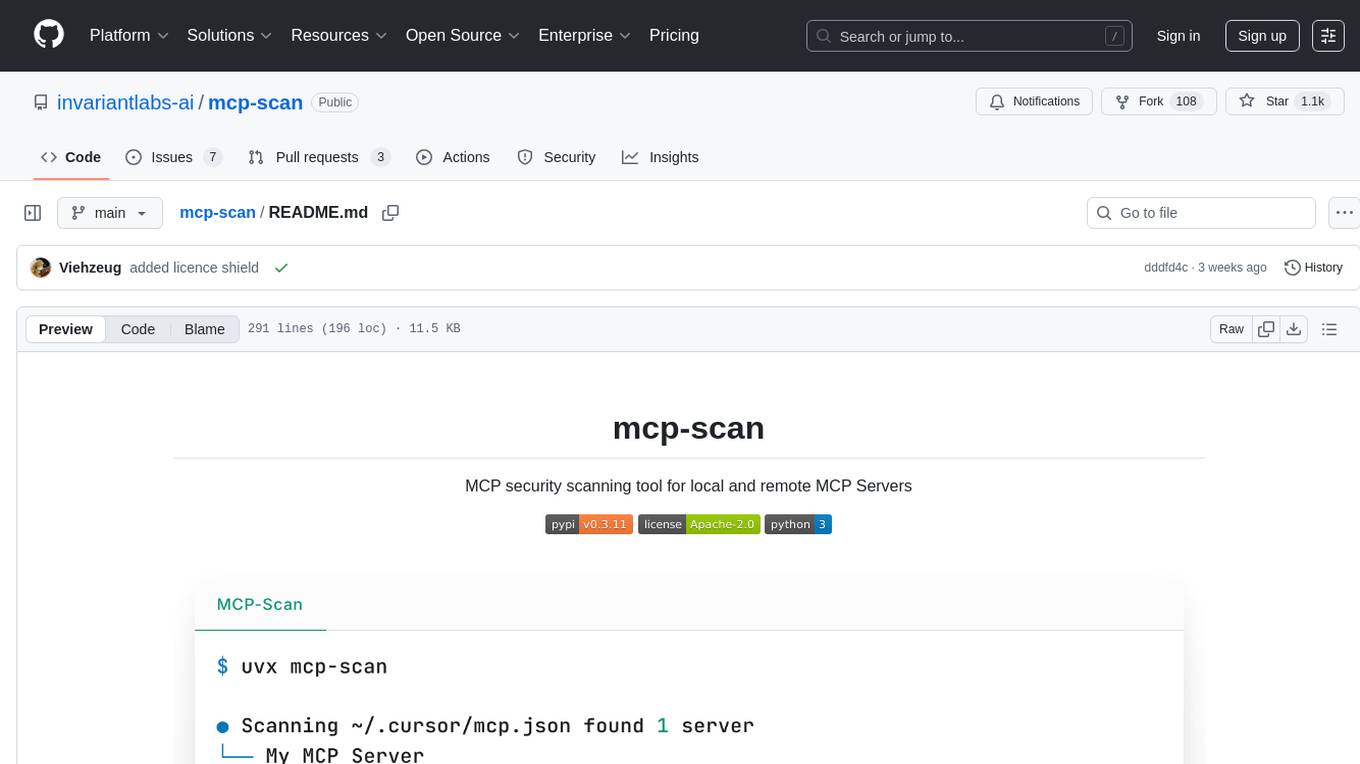

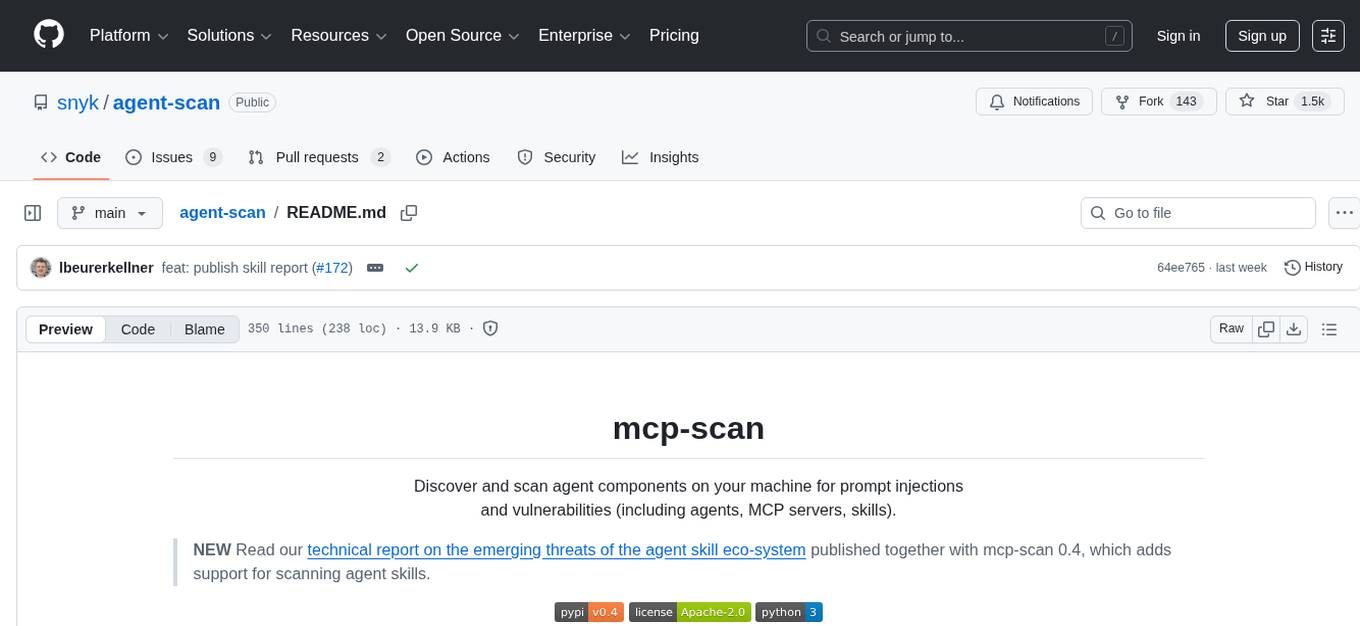

mcp-scan

MCP-Scan is a security scanning tool designed to detect common security vulnerabilities in Model Context Protocol (MCP) servers. It can auto-discover various MCP configurations, scan both local and remote servers for security issues like prompt injection attacks, tool poisoning attacks, and toxic flows. The tool operates in two main modes - 'scan' for static scanning of installed servers and 'proxy' for real-time monitoring and guardrailing of MCP connections. It offers features like scanning for specific attacks, enforcing guardrailing policies, auditing MCP traffic, and detecting changes to MCP tools. MCP-Scan does not store or log usage data and can be used to enhance the security of MCP environments.

agent-scan

MCP-scan is a security scanning tool designed to discover and scan agent components on a machine for prompt injections and vulnerabilities. It helps keep an inventory of installed agent components and scans them for threats like prompt injections, sensitive data handling, and malware payloads. The tool can auto-discover MCP configurations, agent tools, and skills, and detect security vulnerabilities in both servers and agent skills. It operates in two main modes, scanning and proxying, offering features like scanning for prompt injection attacks, enforcing guardrailing policies, monitoring MCP traffic in real time, and detecting cross-origin escalation attacks. MCP-scan does not store or log any usage data and can be used to scan MCP configurations for security vulnerabilities and manage a whitelist of approved entities.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). The model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aim to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our survey of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped, resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base Python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobufs and Python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, an established benchmark, evaluation and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm Python package to help you assess the performance of your LLM in trustworthiness more quickly. For more details about TrustLLM, please refer to the project website.

AI-YinMei

AI-YinMei is a development tool for AI virtual-anchor VTubers (NVIDIA GPU version). It supports knowledge-base chat through fastgpt, with a complete LLM stack of [fastgpt] + [one-api] + [Xinference]. For bilibili live streams, it can reply to bullet-screen (danmaku) comments and greet viewers as they enter the room. Speech synthesis is available through Microsoft edge-tts, Bert-VITS2, and GPT-SoVITS, and expressions are controlled through Vtuber Studio. Images are generated with stable-diffusion-webui and output to the OBS live room, with NSFW screening of generated images via public-NSFW-y-distinguish. Search and image search are available through duckduckgo (requires a proxy or VPN), and image search is also available through Baidu image search (no proxy required). Other features include an AI reply chat box [html plug-in], AI singing via Auto-Convert-Music, a playlist [html plug-in], dancing, expression video playback, head-patting and gift-smashing actions, automatic dancing when singing starts, automatic looping sway motions during chat and singing, multi-scene switching, background music switching, and automatic day and night scene switching. It also supports open-ended singing and painting requests, letting the AI judge the content automatically.