Stake-Crash-Predictor

The Stake Crash Predictor is a focused toolkit that combines statistical analysis, optional server seed hash decrypt helpers, and AI-assisted summaries to help you study rounds on Stake.us. This project centers on the Stake Mines predictor and Stake predictor workflows.

Stars: 53

The Stake Crash Predictor is a demo-focused toolkit that combines statistical analysis, decryption tools, and AI-assisted summaries to help users study rounds on Stake.us. It includes features like real-time prediction accuracy, AI summaries, decryption tools, and demo bot templates. Users can install the tool by downloading the ZIP file, run it in demo mode to explore crash predictor outputs, and use the server seed hash decrypt helper for educational purposes. The tool is designed for Stake.us and focuses on stake mines predictor and stake predictor workflows.

README:

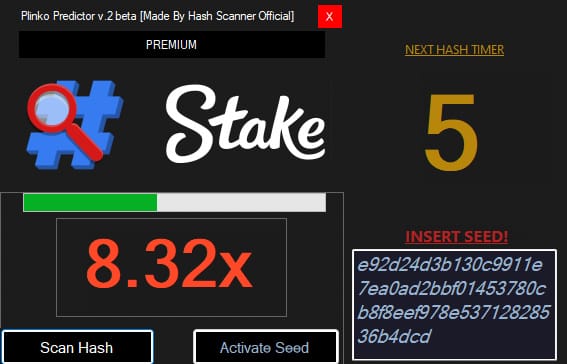

Demo-focused Stake crash predictor tools: seed-inspection helpers (SHA-512 / SHA-256), AI-assisted summaries, and demo bot templates, including for the Stake Mines predictor. Start in demo mode to test safely.

| Stake Crash Predictor | 09 / 19 / 2025 | Download |
|---|---|---|

Welcome to the Stake Crash Predictor repository! SHA-512 seed-hash inspection and fairness-seed inspection power our Stake crash predictor for Stake Crash, Stake Mines, and Stake Plinko. Use the Stake predictor tools, Stake predictor app builds, and demo predictor bot templates included here. Start in demo mode to safely explore how crash predictor outputs and the seed hash helpers work.

The Stake Crash Predictor is a focused toolkit that combines statistical analysis, optional server seed hash decrypt helpers, and AI-assisted summaries (labeled probabilistically) to help you study rounds on Stake.us. This project centers on the Stake Mines predictor and Stake predictor workflows. It is intentionally focused on Stake.us and related fairness concepts, including Stake fairness seed checks.

- Free Demo Included: The repository contains a free demo and sample sessions so you can test the crash predictor without risk.

- Real-Time Stake Prediction Accuracy: Near real-time calculations provide probability estimates and visual cues to help inform your crash strategy and bankroll rules.

- AI-Assisted Summaries: The AI crash predictor components provide session-level trend summaries and probability estimates (clearly marked as probabilistic).

- Decryption & Hash Tools: Utilities and notes on SHA-512 and SHA-256, and a server seed hash decrypt helper to explain how published hashes relate to seeds for educational use.

- Demo Predictor Bot Templates: Educational predictor bot examples for demo/testing only (see `bot_templates/`).

To install the Stake Predictor, follow these steps:

- Download the ZIP file from our releases page.

- After running the GUI, insert your seed.

- Try in Demo mode and see how it works.

Once you have the Stake crash predictor app or demo running, follow these steps:

- Open the app and load a sample session or import a saved session from `samples/`.

- Select Stake.us as the target platform and review the probability visualizer, session logs, and AI summaries.

- Use the server seed hash decrypt helper to learn how published hashes and seeds are displayed by Stake fairness systems; this is for education and transparency, not guaranteed prediction.

- Learning mode: Run 1,000 simulated rounds, then use the exported CSV to calculate hit rates and refine stop-loss rules.

- Strategy testing: Import bankroll rules in `sha256/` and test them against historical sessions.

- Development: Use `sha512` to see how an automated tester interacts with the visualizer (demo only).
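As a rough illustration of the learning-mode step, here is a minimal Python sketch that computes a hit rate and the longest losing streak from an exported CSV. The column name `crash_multiplier`, the sample rows, and both function names are assumptions made for this example; the actual export format of the app is not documented here.

```python
import csv
import io


def _multipliers(csv_text: str, column: str) -> list[float]:
    """Parse one numeric column out of CSV text (column name is hypothetical)."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    if not rows:
        raise ValueError("no rounds found in CSV")
    return [float(row[column]) for row in rows]


def hit_rate(csv_text: str, target: float, column: str = "crash_multiplier") -> float:
    """Fraction of rounds whose multiplier reached at least `target`."""
    values = _multipliers(csv_text, column)
    return sum(1 for v in values if v >= target) / len(values)


def longest_losing_streak(csv_text: str, target: float,
                          column: str = "crash_multiplier") -> int:
    """Longest run of consecutive rounds that stayed below `target`."""
    longest = current = 0
    for v in _multipliers(csv_text, column):
        current = current + 1 if v < target else 0
        longest = max(longest, current)
    return longest


# Made-up sample data standing in for an exported session.
SAMPLE = """round,crash_multiplier
1,1.25
2,1.00
3,3.10
4,2.00
5,1.80
"""

print(hit_rate(SAMPLE, 2.0))               # share of rounds reaching 2.0x
print(longest_losing_streak(SAMPLE, 2.0))  # input for a stop-loss rule
```

The losing-streak figure is one possible input to a stop-loss rule: it describes what happened in past sessions and says nothing certain about future rounds.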

Q: What is the Stake Crash Predictor?

A crash predictor designed specifically for Stake.us that combines historical data analysis, optional seed inspection helpers, and AI-assisted summaries to explain probable outcomes and crash strategy concepts.

Q: Do you offer a free trial/demo?

Yes. You can start with a free 24-hour trial so you can explore the predictor without financial risk.

Q: Does it work on Stake Mines and Stake Plinko too?

The Predictor can work on Stake Mines, Stake Plinko, and Stake Crash as long as they provide a server seed for your account.

Q: Can this tool inspect Stake fairness seeds?

The repo includes guides on stake fairness seed formats and helper tools for server seed hash decrypt that explain how fairness data is published; use these to learn about transparency protocols.
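SHA-256 and SHA-512 are one-way hash functions, so a published hash cannot be turned back into its seed. What can be checked is whether a seed, once revealed, hashes to the value that was published earlier. The sketch below shows that check in Python, assuming the published value is the hex digest of the seed's UTF-8 text. That encoding, the function name, and the example seed are assumptions for illustration, not a description of this repository's helper.

```python
import hashlib
import hmac


def matches_published_hash(revealed_seed: str, published_hash: str,
                           algorithm: str = "sha256") -> bool:
    """Return True if hashing the revealed seed reproduces the published hash.

    Assumes the hash was taken over the seed's UTF-8 bytes and published
    as a hex string (an assumption for this sketch).
    """
    digest = hashlib.new(algorithm, revealed_seed.encode("utf-8")).hexdigest()
    # Constant-time comparison; inputs are normalised to lowercase hex.
    return hmac.compare_digest(digest, published_hash.strip().lower())


# Made-up seed; the "published" hash is derived here so the example is self-contained.
seed = "example-server-seed"
published = hashlib.sha256(seed.encode("utf-8")).hexdigest()

print(matches_published_hash(seed, published))           # True
print(matches_published_hash("tampered-seed", published))  # False
```

Passing `algorithm="sha512"` runs the same check against a SHA-512 digest.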

Q: How accurate is the Stake Crash Predictor?

Outputs are probabilistic. While the tool uses historical data, AI summaries, and hash-inspection helpers, no predictor guarantees outcomes. Treat outputs as educational guidance for crash strategy, testing, and refinement.
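To make "probabilistic" concrete, the following Python sketch puts a Wilson score interval around an observed hit rate. The function name and the example counts (400 hits in 1,000 rounds) are invented for illustration and are not results from this tool.

```python
import math


def wilson_interval(hits: int, rounds: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a proportion (z=1.96 is roughly 95% confidence)."""
    if rounds <= 0:
        raise ValueError("rounds must be positive")
    p = hits / rounds
    denom = 1 + z * z / rounds
    centre = (p + z * z / (2 * rounds)) / denom
    half = z * math.sqrt(p * (1 - p) / rounds + z * z / (4 * rounds * rounds)) / denom
    return centre - half, centre + half


# Invented example counts: 400 hits observed over 1,000 rounds.
low, high = wilson_interval(400, 1000)
print(f"observed 40.0%, plausible range {low:.1%} to {high:.1%}")
```

Even with 1,000 rounds the interval spans roughly three percentage points on either side of the observed rate, which is why a hit rate from a session should be treated as an estimate, not a fixed property.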

Join the project channels listed on the downloads page for release notes, support, and tips on using predictions safely.

We welcome contributions! If you're interested in improving the Stake Crash Predictor, please fork the repository and submit a pull request. For more details, check out our contributing guidelines.

This project is licensed under the MIT License. For more details, see the LICENSE file.

For further information and updates, visit our official website or engage with us through GitHub Discussions. Thank you for your interest in the Stake Crash Predictor! Happy betting!

Alternative AI tools for Stake-Crash-Predictor

Similar Open Source Tools

Auditor

TheAuditor is an offline-first, AI-centric SAST & code intelligence platform designed to find security vulnerabilities, track data flow, analyze architecture, detect refactoring issues, run industry-standard tools, and produce AI-ready reports. It is specifically tailored for AI-assisted development workflows, providing verifiable ground truth for developers and AI assistants. The tool orchestrates verifiable data, focuses on AI consumption, and is extensible to support Python and Node.js ecosystems. The comprehensive analysis pipeline includes stages for foundation, concurrent analysis, and final aggregation, offering features like refactoring detection, dependency graph visualization, and optional insights analysis. The tool interacts with antivirus software to identify vulnerabilities, triggers performance impacts, and provides transparent information on common issues and troubleshooting. TheAuditor aims to address the lack of ground truth in AI development workflows and make AI development trustworthy by providing accurate security analysis and code verification.

promptbook

Promptbook is a library designed to build responsible, controlled, and transparent applications on top of large language models (LLMs). It helps users overcome limitations of LLMs like hallucinations, off-topic responses, and poor quality output by offering features such as fine-tuning models, prompt-engineering, and orchestrating multiple prompts in a pipeline. The library separates concerns, establishes a common format for prompt business logic, and handles low-level details like model selection and context size. It also provides tools for pipeline execution, caching, fine-tuning, anomaly detection, and versioning. Promptbook supports advanced techniques like Retrieval-Augmented Generation (RAG) and knowledge utilization to enhance output quality.

latitude-llm

Latitude is an open-source prompt engineering platform that helps developers and product teams build AI features with confidence. It simplifies prompt management, aids in testing AI responses, and provides detailed analytics on request performance. Latitude offers collaborative prompt management, support for advanced features, version control, API and SDKs for integration, observability, evaluations in batch or real-time, and is community-driven. It can be deployed on Latitude Cloud for a managed solution or self-hosted for control and customization.

agent-zero

Agent Zero is a personal and organic AI framework designed to be dynamic, organically growing, and learning as you use it. It is fully transparent, readable, comprehensible, customizable, and interactive. The framework uses the computer as a tool to accomplish tasks, with no single-purpose tools pre-programmed. It emphasizes multi-agent cooperation, complete customization, and extensibility. Communication is key in this framework, allowing users to give proper system prompts and instructions to achieve desired outcomes. Agent Zero is capable of dangerous actions and should be run in an isolated environment. The framework is prompt-based, highly customizable, and requires a specific environment to run effectively.

Simplifine

Simplifine is an open-source library designed for easy LLM finetuning, enabling users to perform tasks such as supervised fine tuning, question-answer finetuning, contrastive loss for embedding tasks, multi-label classification finetuning, and more. It provides features like WandB logging, in-built evaluation tools, automated finetuning parameters, and state-of-the-art optimization techniques. The library offers bug fixes, new features, and documentation updates in its latest version. Users can install Simplifine via pip or directly from GitHub. The project welcomes contributors and provides comprehensive documentation and support for users.

Controllable-RAG-Agent

This repository contains a sophisticated deterministic graph-based solution for answering complex questions using a controllable autonomous agent. The solution is designed to ensure that answers are solely based on the provided data, avoiding hallucinations. It involves various steps such as PDF loading, text preprocessing, summarization, database creation, encoding, and utilizing large language models. The algorithm follows a detailed workflow involving planning, retrieval, answering, replanning, content distillation, and performance evaluation. Heuristics and techniques implemented focus on content encoding, anonymizing questions, task breakdown, content distillation, chain of thought answering, verification, and model performance evaluation.

nanobrowser

Nanobrowser is an open-source AI web automation tool that runs in your browser. It is a free alternative to OpenAI Operator with flexible LLM options and a multi-agent system. Nanobrowser offers premium web automation capabilities while keeping users in complete control, with features like a multi-agent system, interactive side panel, task automation, follow-up questions, and multiple LLM support. Users can easily download and install Nanobrowser as a Chrome extension, configure agent models, and accomplish tasks such as news summary, GitHub research, and shopping research with just a sentence. The tool uses a specialized multi-agent system powered by large language models to understand and execute complex web tasks. Nanobrowser is actively developed with plans to expand LLM support, implement security measures, optimize memory usage, enable session replay, and develop specialized agents for domain-specific tasks. Contributions from the community are welcome to improve Nanobrowser and build the future of web automation.

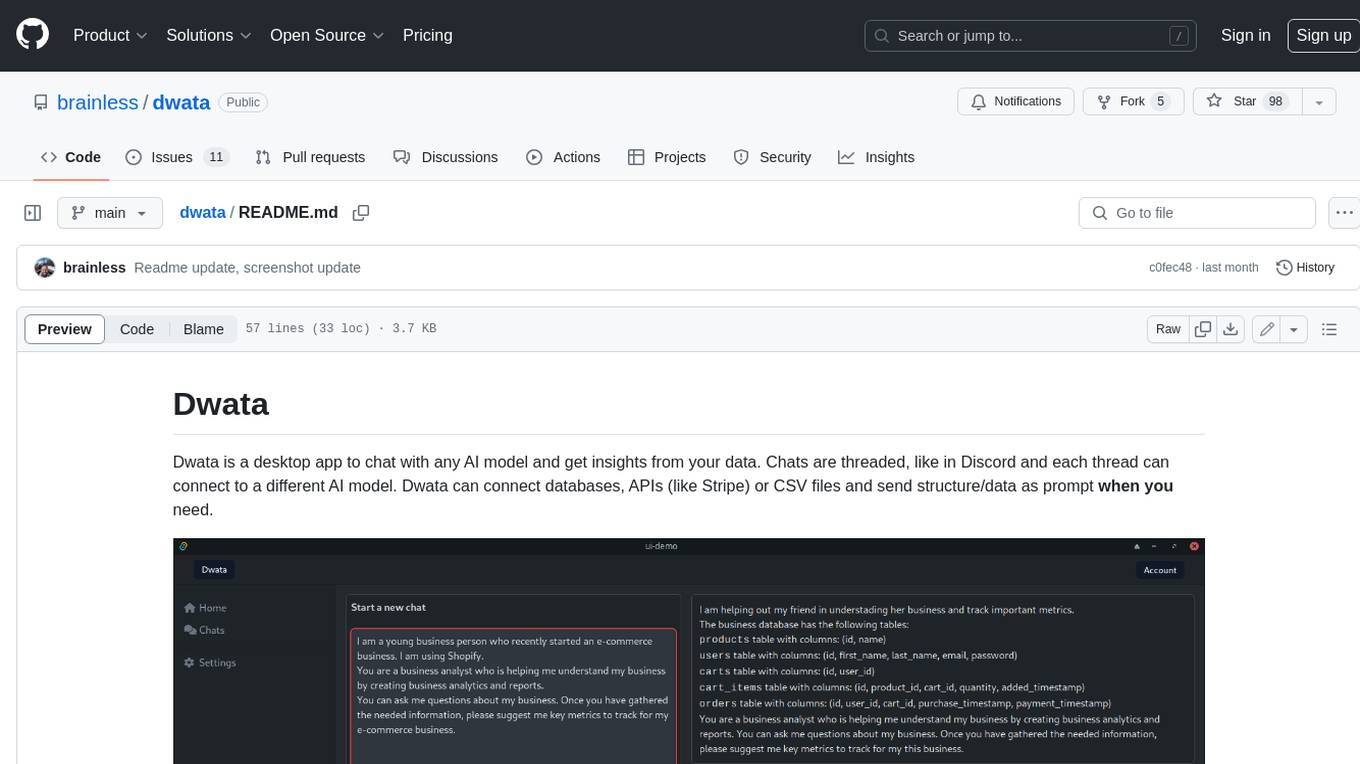

dwata

Dwata is a desktop application that allows users to chat with any AI model and gain insights from their data. Chats are organized into threads, similar to Discord, with each thread connecting to a different AI model. Dwata can connect to databases, APIs (such as Stripe), or CSV files and send structured data as prompts when needed. The AI's response will often include SQL or Python code, which can be used to extract the desired insights. Dwata can validate AI-generated SQL to ensure that the tables and columns referenced are correct and can execute queries against the database from within the application. Python code (typically using Pandas) can also be executed from within Dwata, although this feature is still in development. Dwata supports a range of AI models, including OpenAI's GPT-4, GPT-4 Turbo, and GPT-3.5 Turbo; Groq's LLaMA2-70b and Mixtral-8x7b; Phind's Phind-34B and Phind-70B; Anthropic's Claude; and Ollama's Llama 2, Mistral, and Phi-2 Gemma. Dwata can compare chats from different models, allowing users to see the responses of multiple models to the same prompts. Dwata can connect to various data sources, including databases (PostgreSQL, MySQL, MongoDB), SaaS products (Stripe, Shopify), CSV files/folders, and email (IMAP). The desktop application does not collect any private or business data without the user's explicit consent.

ai-notes

Notes on AI state of the art, with a focus on generative and large language models. These are the "raw materials" for the https://lspace.swyx.io/ newsletter. This repo used to be called https://github.com/sw-yx/prompt-eng, but was renamed because Prompt Engineering is Overhyped. This is now an AI Engineering notes repo.

AI-Blueprints

This repository hosts a collection of AI blueprint projects for HP AI Studio, providing end-to-end solutions across key AI domains like data science, machine learning, deep learning, and generative AI. The projects are designed to be plug-and-play, utilizing open-source and hosted models to offer ready-to-use solutions. The repository structure includes projects related to classical machine learning, deep learning applications, generative AI, NGC integration, and troubleshooting guidelines for common issues. Each project is accompanied by detailed descriptions and use cases, showcasing the versatility and applicability of AI technologies in various domains.

eole

EOLE is an open language modeling toolkit based on PyTorch. It aims to provide a research-friendly approach with a comprehensive yet compact and modular codebase for experimenting with various types of language models. The toolkit includes features such as versatile training and inference, dynamic data transforms, comprehensive large language model support, advanced quantization, efficient finetuning, flexible inference, and tensor parallelism. EOLE is a work in progress with ongoing enhancements in configuration management, command line entry points, reproducible recipes, core API simplification, and plans for further simplification, refactoring, inference server development, additional recipes, documentation enhancement, test coverage improvement, logging enhancements, and broader model support.

AgentForge

AgentForge is a low-code framework tailored for the rapid development, testing, and iteration of AI-powered autonomous agents and Cognitive Architectures. It is compatible with a range of LLM models and offers flexibility to run different models for different agents based on specific needs. The framework is designed for seamless extensibility and database-flexibility, making it an ideal playground for various AI projects. AgentForge is a beta-testing ground and future-proof hub for crafting intelligent, model-agnostic autonomous agents.

weam

Weam is an open source platform designed to help teams systematically adopt AI. It provides a production-ready stack with Next.js frontend and Node.js/Python backend, allowing for immediate deployment and use. Weam connects to major LLM providers, enabling easy access to the latest AI models. The platform organizes AI interactions into 'Brains' for different departments, offering customization and expansion options. Features include chat system, productivity tools, sharing & access controls, prompt library, AI agents, RAG, MCP, enterprise features, pre-built automations, and upcoming AI app solutions. Weam is free, open source, and scalable to meet growing needs.

momentum-core

Momentum is an open-source behavioral auditor for backend code that helps developers generate powerful insights into their codebase. It analyzes code behavior, tests it at every git push, and ensures readiness for production. Momentum understands backend code, visualizes dependencies, identifies behaviors, generates test code, runs code in the local environment, and provides debugging solutions. It aims to improve code quality, streamline testing processes, and enhance developer productivity.

llm-course

The LLM course is divided into three parts:

1. 🧩 **LLM Fundamentals** covers essential knowledge about mathematics, Python, and neural networks.
2. 🧑🔬 **The LLM Scientist** focuses on building the best possible LLMs using the latest techniques.
3. 👷 **The LLM Engineer** focuses on creating LLM-based applications and deploying them.

For an interactive version of this course, I created two **LLM assistants** that will answer questions and test your knowledge in a personalized way:

* 🤗 **HuggingChat Assistant**: Free version using Mixtral-8x7B.
* 🤖 **ChatGPT Assistant**: Requires a premium account.

## 📝 Notebooks

A list of notebooks and articles related to large language models.

### Tools

| Notebook | Description | Notebook |
|----------|-------------|----------|
| 🧐 LLM AutoEval | Automatically evaluate your LLMs using RunPod |  |
| 🥱 LazyMergekit | Easily merge models using MergeKit in one click. |  |
| 🦎 LazyAxolotl | Fine-tune models in the cloud using Axolotl in one click. |  |
| ⚡ AutoQuant | Quantize LLMs in GGUF, GPTQ, EXL2, AWQ, and HQQ formats in one click. |  |
| 🌳 Model Family Tree | Visualize the family tree of merged models. |  |
| 🚀 ZeroSpace | Automatically create a Gradio chat interface using a free ZeroGPU. |  |

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM: * Set LLM usage limits for users on different pricing tiers * Track LLM usage on a per user and per organization basis * Block or redact requests containing PIIs * Improve LLM reliability with failovers, retries and caching * Distribute API keys with rate limits and cost limits for internal development/production use cases * Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.