Genkit

An open source framework for building AI-powered apps with familiar code-centric patterns. Genkit makes it easy to develop, integrate, and test AI features with observability and evaluations. Genkit works with various models and platforms.

Stars: 2809

Genkit is an open-source framework for building full-stack AI-powered applications, used in production by Google's Firebase. It provides SDKs for JavaScript/TypeScript (Stable), Go (Beta), and Python (Alpha) with a unified interface for integrating AI models from providers like Google, OpenAI, Anthropic, and Ollama. Rapidly build chatbots, automations, and recommendation systems using streamlined APIs for multimodal content, structured outputs, tool calling, and agentic workflows. Genkit simplifies AI integration with an open-source SDK and unified APIs, and offers text and image generation, structured data generation, tool calling, prompt templating, persisted chat interfaces, AI workflows, and AI-powered data retrieval (RAG).

README:

Genkit is an open-source framework for building full-stack AI-powered applications, built and used in production by Google's Firebase. It provides SDKs for multiple programming languages with varying levels of stability:

- JavaScript/TypeScript (Stable): Production-ready with full feature support

- Go (Beta): Feature-complete but may have breaking changes

- Python (Alpha): Early development with core functionality

It offers a unified interface for integrating AI models from providers like Google, OpenAI, Anthropic, Ollama, and more. Rapidly build and deploy production-ready chatbots, automations, and recommendation systems using streamlined APIs for multimodal content, structured outputs, tool calling, and agentic workflows.

Get started with just a few lines of code:

    import { genkit } from 'genkit';
    import { googleAI } from '@genkit-ai/google-genai';

    const ai = genkit({ plugins: [googleAI()] });

    const { text } = await ai.generate({
      model: googleAI.model('gemini-2.5-flash'),
      prompt: 'Why is Firebase awesome?'
    });

Play with AI sample apps, with visualizations of the Genkit code that powers them, at no cost to you.

| Capability | Description |
| --- | --- |
| Broad AI model support | Use a unified interface to integrate with hundreds of models from providers like Google, OpenAI, Anthropic, Ollama, and more. Explore, compare, and use the best models for your needs. |
| Simplified AI development | Use streamlined APIs to build AI features with structured output, agentic tool calling, context-aware generation, multi-modal input/output, and more. Genkit handles the complexity of AI development, so you can build and iterate faster. |
| Web and mobile ready | Integrate seamlessly with frameworks and platforms including Next.js, React, Angular, iOS, and Android, using purpose-built client SDKs and helpers. |
| Cross-language support | Build with the language that best fits your project. Genkit provides SDKs for JavaScript/TypeScript (Stable), Go (Beta), and Python (Alpha) with consistent APIs and capabilities across all supported languages. |
| Deploy anywhere | Deploy AI logic to any environment that supports your chosen programming language, such as Cloud Functions for Firebase, Google Cloud Run, or third-party platforms, with or without Google services. |
| Developer tools | Accelerate AI development with a purpose-built, local CLI and Developer UI. Test prompts and flows against individual inputs or datasets, compare outputs from different models, debug with detailed execution traces, and use immediate visual feedback to iterate rapidly on prompts. |
| Production monitoring | Ship AI features with confidence using comprehensive production monitoring. Track model performance, request volumes, latency, and error rates in a purpose-built dashboard. Identify issues quickly with detailed observability metrics, and ensure your AI features meet quality and performance targets in real-world usage. |

Genkit simplifies AI integration with an open-source SDK and unified APIs that work across various model providers and programming languages. It abstracts away complexity so you can focus on delivering great user experiences.
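To illustrate what a unified interface across model providers can look like, here is a minimal sketch in plain TypeScript. It is not Genkit's implementation or API: `ModelAdapter`, `registerAdapter`, `generateText`, and the `stub/...` model names are hypothetical, and the stub adapters stand in for real provider calls (which would normally be asynchronous network requests).

```typescript
// Hypothetical sketch: these names are illustrative and NOT part of the Genkit SDK.

// Every provider adapter exposes the same call shape.
interface ModelAdapter {
  // Real provider calls would be asynchronous; kept synchronous here for brevity.
  complete(prompt: string): string;
}

// Adapters are looked up by a "provider/model" style name.
const adapters: Record<string, ModelAdapter> = {};

function registerAdapter(modelName: string, adapter: ModelAdapter): void {
  adapters[modelName] = adapter;
}

// Callers use one function regardless of which provider backs the model.
function generateText(modelName: string, prompt: string): string {
  if (!Object.prototype.hasOwnProperty.call(adapters, modelName)) {
    throw new Error(`Unknown model: ${modelName}`);
  }
  return adapters[modelName].complete(prompt);
}

// Two stand-in adapters; real ones would wrap a provider's API.
registerAdapter("stub/shout", { complete: (prompt) => prompt.toUpperCase() });
registerAdapter("stub/echo", { complete: (prompt) => `echo: ${prompt}` });
```

Switching providers in this sketch means changing only the model name passed to `generateText`, which is the property a unified interface is meant to provide.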

Some key features offered by Genkit include:

- Text and image generation

- Type-safe, structured data generation

- Tool calling

- Prompt templating

- Persisted chat interfaces

- AI workflows

- AI-powered data retrieval (RAG)
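To make the "type-safe, structured data generation" item concrete, here is a minimal sketch in plain TypeScript of checking a model's JSON reply against an expected shape before using it. The `Recipe` shape and the `isRecipe` and `parseRecipe` helpers are hypothetical names made up for this illustration; they are not Genkit APIs, and Genkit's own schema support may work differently.

```typescript
// Hypothetical shape for illustration; not a Genkit type.
interface Recipe {
  title: string;
  minutes: number;
  ingredients: string[];
}

// Runtime type guard: narrows unknown JSON to Recipe.
function isRecipe(value: unknown): value is Recipe {
  if (typeof value !== "object" || value === null) return false;
  const candidate = value as Record<string, unknown>;
  const ingredients = candidate["ingredients"];
  return (
    typeof candidate["title"] === "string" &&
    typeof candidate["minutes"] === "number" &&
    Array.isArray(ingredients) &&
    ingredients.every((item: unknown) => typeof item === "string")
  );
}

// Parse raw model text; return typed data, or null when it does not conform.
function parseRecipe(modelText: string): Recipe | null {
  try {
    const parsed: unknown = JSON.parse(modelText);
    return isRecipe(parsed) ? parsed : null;
  } catch {
    // JSON.parse throws on malformed input; treat that as "no structured data".
    return null;
  }
}
```

Code downstream of `parseRecipe` can rely on the `Recipe` type at compile time, while malformed or mistyped model output is rejected at runtime instead of propagating.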

Genkit is designed for server-side deployment in multiple language environments, and also provides seamless client-side integration through dedicated helpers and client SDKs.

| Step | Action | Details |
| --- | --- | --- |
| 1 | Choose your language and model provider | Select the Genkit SDK for your preferred language (JavaScript/TypeScript (Stable), Go (Beta), or Python (Alpha)). Choose a model provider like Google Gemini or Anthropic, and get an API key. Some providers, like Vertex AI, may rely on a different means of authentication. |
| 2 | Install the SDK and initialize | Install the Genkit SDK, model-provider package of your choice, and the Genkit CLI. Import the Genkit and provider packages and initialize Genkit with the provider API key. |
| 3 | Write and test AI features | Use the Genkit SDK to build AI features for your use case, from basic text generation to complex multi-step workflows and agents. Use the CLI and Developer UI to help you rapidly test and iterate. |
| 4 | Deploy and monitor | Deploy your AI features to Firebase, Google Cloud Run, or any environment that supports your chosen programming language. Integrate them into your app, and monitor them in production in the Firebase console. |
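Step 3 mentions multi-step workflows and agents. As a rough illustration of the tool-calling loop that typically sits behind an agent, here is a minimal sketch in plain TypeScript. All names (`runAgent`, `ModelTurn`, `scriptedModel`, `demoTools`, `getWeather`) are hypothetical, the model is a scripted stand-in rather than a real LLM, and none of this is Genkit's API.

```typescript
// Hypothetical types for illustration; NOT Genkit's API.
type ToolCall = { tool: string; argument: string };
type ModelTurn =
  | { kind: "toolCall"; call: ToolCall }
  | { kind: "answer"; text: string };

type ToolFn = (argument: string) => string;
// A model maps the transcript so far to its next turn.
type ModelFn = (transcript: string[]) => ModelTurn;

// Loop: ask the model, run any requested tool, feed the result back, repeat.
function runAgent(
  model: ModelFn,
  tools: Record<string, ToolFn>,
  question: string,
  maxTurns: number
): string {
  const transcript: string[] = [`user: ${question}`];
  for (let turn = 0; turn < maxTurns; turn++) {
    const next = model(transcript);
    if (next.kind === "answer") return next.text;
    const known = Object.prototype.hasOwnProperty.call(tools, next.call.tool);
    const result = known
      ? tools[next.call.tool](next.call.argument)
      : `error: unknown tool ${next.call.tool}`;
    transcript.push(`tool ${next.call.tool}: ${result}`);
  }
  throw new Error("Agent did not finish within maxTurns");
}

// Scripted stand-in for a real model: requests the weather once, then answers.
const scriptedModel: ModelFn = (transcript) => {
  const last = transcript[transcript.length - 1];
  const prefix = "tool getWeather: ";
  if (last.indexOf(prefix) === 0) {
    return { kind: "answer", text: `Forecast: ${last.slice(prefix.length)}` };
  }
  return { kind: "toolCall", call: { tool: "getWeather", argument: "Paris" } };
};

const demoTools: Record<string, ToolFn> = {
  getWeather: (city) => `sunny in ${city}`,
};
```

The `maxTurns` bound is a design choice in this sketch: it keeps a model that never produces a final answer from looping forever.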

- JavaScript/TypeScript quickstart (Stable)

- Go quickstart (Beta)

- Python quickstart (Alpha)

Genkit provides a CLI and a local UI to streamline your AI development workflow.

The Genkit CLI includes commands for running and evaluating your Genkit functions (flows) and collecting telemetry and logs.

- Install:

      npm install -g genkit-cli

- Run a command, wrapped with telemetry, an interactive developer UI, etc.:

      genkit start -- <command to run your code>

The Genkit developer UI is a local interface for testing, debugging, and iterating on your AI application.

Key features:

- Run: Execute and experiment with Genkit flows, prompts, queries, and more in dedicated playgrounds.

- Inspect: Analyze detailed traces of past executions, including step-by-step breakdowns of complex flows.

- Evaluate: Review the results of evaluations run against your flows, including performance metrics and links to relevant traces.
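To give a feel for what a step-by-step trace of a flow contains, here is a minimal sketch in plain TypeScript that records one span per step of a small two-step flow. `FlowTrace`, `SpanRecord`, and `buildPromptFlow` are hypothetical names for this illustration only; Genkit's actual tracing and flow APIs are not shown here and may differ.

```typescript
// Hypothetical sketch; these names are NOT part of the Genkit SDK.
interface SpanRecord {
  step: string;
  input: string;
  output: string;
  durationMs: number;
}

// Collects the spans recorded during one flow execution.
class FlowTrace {
  readonly spans: SpanRecord[] = [];

  // Runs one named step and records its input, output, and duration.
  runStep(step: string, input: string, fn: (value: string) => string): string {
    const started = Date.now();
    const output = fn(input);
    this.spans.push({ step, input, output, durationMs: Date.now() - started });
    return output;
  }
}

// A two-step flow: normalize the question, then build a prompt from it.
function buildPromptFlow(question: string): { result: string; trace: FlowTrace } {
  const trace = new FlowTrace();
  const normalized = trace.runStep("normalize", question, (q) => q.trim().toLowerCase());
  const result = trace.runStep("buildPrompt", normalized, (q) => `Answer briefly: ${q}`);
  return { result, trace };
}
```

Inspecting `trace.spans` after a run shows each step's input and output in order, which is the kind of breakdown a trace viewer presents for a multi-step flow.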

Want to skip the local setup? Try out Genkit in Firebase Studio, Google's AI-assisted workspace for full-stack app development in the cloud.

- Join us on Discord – Get help, share ideas, and chat with other developers.

- Contribute on GitHub – Report bugs, suggest features, or explore the source code.

- Contribute to Documentation and Samples – Report issues in Genkit's documentation, or contribute to the samples.

Contributions to Genkit are welcome and highly appreciated! See our Contribution Guide to get started.

Genkit is built by Firebase with contributions from the Open Source Community.

Alternative AI tools for Genkit

Similar Open Source Tools

baserow

Baserow is a secure, open-source platform that allows users to build databases, applications, automations, and AI agents without writing any code. With enterprise-grade security compliance and both cloud and self-hosted deployment options, Baserow empowers teams to structure data, automate processes, create internal tools, and build custom dashboards. It features a spreadsheet database hybrid, AI Assistant for natural language database creation, GDPR, HIPAA, and SOC 2 Type II compliance, and seamless integration with existing tools. Baserow is API-first, extensible, and uses frameworks like Django, Vue.js, and PostgreSQL.

OM1

OpenMind's OM1 is a modular AI runtime empowering developers to create and deploy multimodal AI agents across digital environments and physical robots. OM1 agents process diverse inputs like web data, social media, camera feeds, and LIDAR, enabling actions including motion, autonomous navigation, and natural conversations. The goal is to create highly capable human-focused robots that are easy to upgrade and reconfigure for different physical form factors. OM1 features a modular architecture, supports new hardware via plugins, offers web-based debugging display, and pre-configured endpoints for various services.

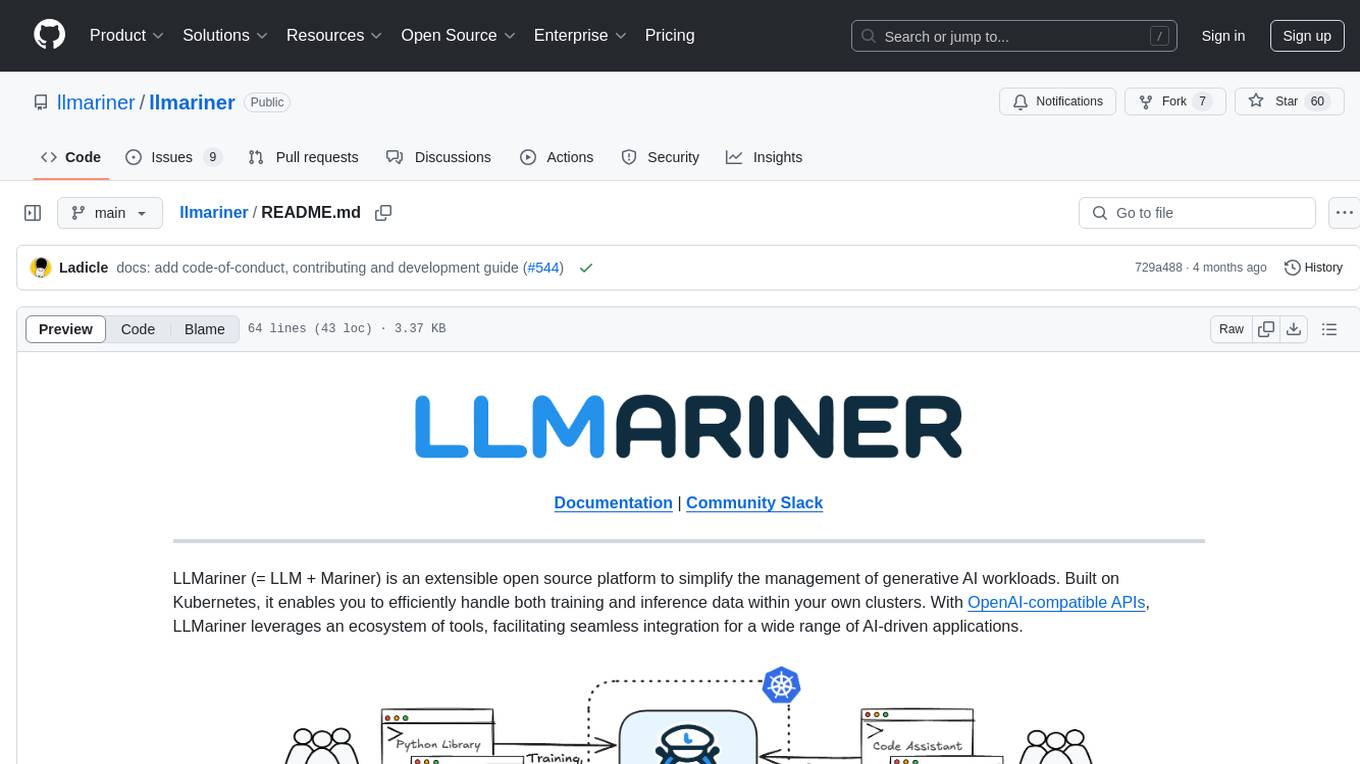

llmariner

LLMariner is an extensible open source platform built on Kubernetes to simplify the management of generative AI workloads. It enables efficient handling of training and inference data within clusters, with OpenAI-compatible APIs for seamless integration with a wide range of AI-driven applications.

LazyLLM

LazyLLM is a low-code development tool for building complex AI applications with multiple agents. It assists developers in building AI applications at a low cost and continuously optimizing their performance. The tool provides a convenient workflow for application development and offers standard processes and tools for various stages of application development. Users can quickly prototype applications with LazyLLM, analyze bad cases with scenario task data, and iteratively optimize key components to enhance the overall application performance. LazyLLM aims to simplify the AI application development process and provide flexibility for both beginners and experts to create high-quality applications.

metorial-platform

Metorial Platform is an open source integration platform designed for developers to easily connect their AI applications to external data sources, APIs, and tools. It provides one-liner SDKs for JavaScript/TypeScript and Python, is powered by the Model Context Protocol (MCP), and offers features like self-hosting, large server catalog, embedded MCP Explorer, monitoring and debugging capabilities. The platform is built to scale for enterprise-grade applications and offers customizable options, open-source flexibility, multi-instance support, powerful SDKs, detailed documentation, full API access, and an advanced dashboard for managing integrations.

coze-studio

Coze Studio is an all-in-one AI agent development tool that offers the most convenient AI agent development environment, from development to deployment. It provides core technologies for AI agent development, complete app templates, and build frameworks. Coze Studio aims to simplify creating, debugging, and deploying AI agents through visual design and build tools, enabling powerful AI app development and customized business logic. The tool is developed using Golang for the backend, React + TypeScript for the frontend, and follows microservices architecture based on domain-driven design principles.

refly

Refly.AI is an open-source AI-native creation engine that empowers users to transform ideas into production-ready content. It features a free-form canvas interface with multi-threaded conversations, knowledge base integration, contextual memory, intelligent search, WYSIWYG AI editor, and more. Users can leverage AI-powered capabilities, context memory, knowledge base integration, quotes, and AI document editing to enhance their content creation process. Refly offers both cloud and self-hosting options, making it suitable for individuals, enterprises, and organizations. The tool is designed to facilitate human-AI collaboration and streamline content creation workflows.

Geoweaver

Geoweaver is in-browser software that enables users to easily compose and execute full-stack data processing workflows using online spatial data facilities, high-performance computation platforms, and open-source deep learning libraries. It provides server management, code repository, workflow orchestration software, and history recording capabilities. Users can run it from both local and remote machines. Geoweaver aims to make data processing workflows manageable for non-coder scientists and preserve model run history. It offers features like progress storage, organization, SSH connection to external servers, and a web UI with Python support.

draive

draive is an open-source Python library designed to simplify and accelerate the development of LLM-based applications. It offers abstract building blocks for connecting functionalities with large language models, flexible integration with various AI solutions, and a user-friendly framework for building scalable data processing pipelines. The library follows a function-oriented design, allowing users to represent complex programs as simple functions. It also provides tools for measuring and debugging functionalities, ensuring type safety and efficient asynchronous operations for modern Python apps.

goose

Codename Goose is an open-source, extensible AI agent designed to provide functionalities beyond code suggestions. Users can install, execute, edit, and test with any LLM. The tool aims to enhance the coding experience by offering advanced features and capabilities. Stay updated for the upcoming 1.0 release scheduled by the end of January 2025. Explore the v0.X documentation available on the project's GitHub pages.

adk-js

Agent Development Kit (ADK) for TypeScript is an open-source toolkit designed for developers to build, evaluate, and deploy sophisticated AI agents with flexibility and control. It allows defining agent behavior, orchestration, and tool use directly in code for robust debugging, versioning, and deployment. With rich tool ecosystem, code-first development, and modular multi-agent systems, ADK offers tight integration with the Google ecosystem and enables the creation of scalable applications by composing multiple specialized agents into flexible hierarchies.

countly-server

Countly is a privacy-first, AI-ready analytics and customer engagement platform built for organizations that require full data ownership and deployment flexibility. It can be deployed on-premises or in a private cloud, giving complete control over data, infrastructure, compliance, and security. Teams use Countly to understand user behavior across mobile, web, desktop, and connected devices, optimize product and customer experiences in real time, and automate and personalize customer engagement across channels. With flexible data tracking, customizable dashboards, and a modular plugin-based architecture, Countly scales with the product while ensuring long-term autonomy and zero vendor lock-in. Built for privacy, designed for flexibility, and ready for AI-driven innovation.

AgentUp

AgentUp is an active development tool that provides a developer-first agent framework for creating AI agents with enterprise-grade infrastructure. It allows developers to define agents with configuration, ensuring consistent behavior across environments. The tool offers secure design, configuration-driven architecture, extensible ecosystem for customizations, agent-to-agent discovery, asynchronous task architecture, deterministic routing, and MCP support. It supports multiple agent types like reactive agents and iterative agents, making it suitable for chatbots, interactive applications, research tasks, and more. AgentUp is built by experienced engineers from top tech companies and is designed to make AI agents production-ready, secure, and reliable.

For similar tasks

agentcloud

AgentCloud is an open-source platform that enables companies to build and deploy private LLM chat apps, empowering teams to securely interact with their data. It comprises three main components: Agent Backend, Webapp, and Vector Proxy. To run this project locally, clone the repository, install Docker, and start the services. The project is licensed under the GNU Affero General Public License, version 3 only. Contributions and feedback are welcome from the community.

zep-python

Zep is an open-source platform for building and deploying large language model (LLM) applications. It provides a suite of tools and services that make it easy to integrate LLMs into your applications, including chat history memory, embedding, vector search, and data enrichment. Zep is designed to be scalable, reliable, and easy to use, making it a great choice for developers who want to build LLM-powered applications quickly and easily.

lollms

LoLLMs Server is a text generation server based on large language models. It provides a Flask-based API for generating text using various pre-trained language models. This server is designed to be easy to install and use, allowing developers to integrate powerful text generation capabilities into their applications.

LlamaIndexTS

LlamaIndex.TS is a data framework for your LLM application. Use your own data with large language models (LLMs, OpenAI ChatGPT and others) in TypeScript and JavaScript.

semantic-kernel

Semantic Kernel is an SDK that integrates Large Language Models (LLMs) like OpenAI, Azure OpenAI, and Hugging Face with conventional programming languages like C#, Python, and Java. Semantic Kernel achieves this by allowing you to define plugins that can be chained together in just a few lines of code. What makes Semantic Kernel _special_, however, is its ability to _automatically_ orchestrate plugins with AI. With Semantic Kernel planners, you can ask an LLM to generate a plan that achieves a user's unique goal. Afterwards, Semantic Kernel will execute the plan for the user.

botpress

Botpress is a platform for building next-generation chatbots and assistants powered by OpenAI. It provides a range of tools and integrations to help developers quickly and easily create and deploy chatbots for various use cases.

BotSharp

BotSharp is an open-source machine learning framework for building AI bot platforms. It provides a comprehensive set of tools and components for developing and deploying intelligent virtual assistants. BotSharp is designed to be modular and extensible, allowing developers to easily integrate it with their existing systems and applications. With BotSharp, you can quickly and easily create AI-powered chatbots, virtual assistants, and other conversational AI applications.

qdrant

Qdrant is a vector similarity search engine and vector database. It is written in Rust, which makes it fast and reliable even under high load. Qdrant can be used for a variety of applications, including:

- Semantic search
- Image search
- Product recommendations
- Chatbots
- Anomaly detection

Qdrant offers a variety of features, including:

- Payload storage and filtering
- Hybrid search with sparse vectors
- Vector quantization and on-disk storage
- Distributed deployment
- Highlighted features such as query planning, payload indexes, SIMD hardware acceleration, async I/O, and write-ahead logging

Qdrant is available as a fully managed cloud service or as open-source software that can be deployed on-premises.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers
- Track LLM usage on a per user and per organization basis
- Block or redact requests containing PII
- Improve LLM reliability with failovers, retries and caching
- Distribute API keys with rate limits and cost limits for internal development/production use cases
- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.