AI-Vibe-Writing-Skills

Using AI for high-quality writing

Stars: 95

AI Vibe Writing Skills is an AI Skill that provides 'Style Transfer' and 'Error Memory' capabilities for personalized writing. It aims to assist in writing tasks by focusing on creativity, content refinement, and personalized style, rather than replacing the creative process. The tool offers features like style transfer, error memory, grammar check, long-term memory storage, writing knowledge bases, and multi-agent collaboration to enhance content production efficiency and quality.

README:

An AI Skill that provides "Style Transfer" and "Error Memory" capabilities for personalized writing.

一个具备“风格迁移”和“错误记忆”功能的 AI 写作助手,打造你专属的“影子写手”。

v1.4 - Multi-Agent Writing Skill / 多智能体协作写作 Added outline-manager, content-writer, content-review agents with a coordinator loop. 新增大纲管理、写作、检阅智能体与流程协调器,完成写作闭环。

v1.3 - Writing Knowledge Bases / 写作知识库 Added curated writing knowledge bases for grant proposals, papers, and theses. 新增基金、论文与学位论文写作知识库,支持按写作类型检索与应用。

v1.2 - Long-Term Memory / 长期记忆 Added domain-based hard/soft memory to preserve precise terms and user preferences. 新增按领域划分的硬性/柔性记忆,用于精准术语与偏好存储。

v1.1 - Grammar & Spell Checker / 语法与拼写检查器 Added a dedicated module to detect and correct grammatical errors and typos in both English and Chinese. 新增了专用的语法与拼写检查模块,支持中英文双语纠错。

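This project (AI-Vibe-Writing-Skills) starts from rejecting the misconception that AI should replace creative work and focuses instead on AI's value as an assistant. It frees writers from repetitive, mechanical "dirty work" (such as organizing source material, enforcing formatting, basic proofreading, and initial screening of ideas) so that their effort goes into the core activities of creative ideation, in-depth content refinement, and personal style, with the goal of producing content more efficiently and at higher quality.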

Mimic: Analyzes your past writings to extract "Style DNA".

Consistency: Maintains your unique tone, sentence structure, and vocabulary.

原理: 分析过往文章提取“风格指纹”,保持语调、句式和用词的一致性。

Learning: Remembers your corrections and "Don'ts".

Avoidance: Automatically checks against the "Error Log" before writing.

原理: 记住你的纠正和禁忌,在生成前自动查阅“错题本”以避免重犯。

Quality: Built-in bilingual grammar and spell checker.

Review: Identifies typos and awkward phrasing without changing your style.

原理: 内置中英文双语语法检查,识别错别字和语病,同时保留原有风格。

Hard Memory: Stores exact terms, units, and key values by domain.

Soft Memory: Stores preferences, phrasing, and tone by domain.

原理: 硬性记忆用于术语、单位、关键数值的精确存储;柔性记忆用于偏好与表达习惯的持续适配。

Grant: Reviewer-aligned structure, persuasion, and feasibility cues.

Paper: Academic rigor, novelty framing, and LaTeX cleanliness.

Thesis: Long-form clarity, topic sentences, and narrative flow.

原理: 针对不同写作类型沉淀可复用规范,按领域检索并持续迭代。

Outline Manager: Enforces outline constraints and validates outputs.

Writer: Drafts and revises under outline and memory constraints.

Reviewer: Detects AI tone and integrates multi-platform checks.

原理: 通过大纲约束→内容创作→AI 味检测→多平台核验实现闭环。

Context: Adapts to specific audiences (e.g., Technical, General) and topics.

Outline: Manages structure for long-form content.

原理: 自动适配目标受众和主题,支持长文大纲管理。

"Use outline-manager-agent to generate a 3-level outline for topic X."

“调用大纲管理智能体,为主题 X 生成三级大纲。”

"Use content-writer-agent to draft section 2 based on outline-001."

“调用写作智能体,基于 outline-001 写第 2 节。”

"Use content-review-agent to review the latest draft and report AI tone."

“调用检阅智能体,检查最新草稿并输出 AI 味报告。”

"Use workflow-coordinator to run the full multi-agent loop."

“调用流程协调器,执行完整多智能体闭环。”

You can activate this system immediately by following these steps: 你可以立即尝试以下步骤来“激活”这个系统:

You MUST clone the full repository to use this system.

The system relies on the local .ai_context folder for memory, style profiles, and agent configurations. Without cloning, the agents cannot access your style or project settings.

你必须克隆完整仓库才能使用本系统。

系统依赖本地 .ai_context 文件夹来读取记忆、风格配置和智能体设置。如果不克隆,智能体将无法访问你的风格或项目设置。

    git clone https://github.com/donghuixin/AI-Vibe-Writing-Skills.git

Open the cloned folder in your IDE (Trae, VS Code, Cursor) to activate the context. 克隆后在 IDE(Trae, VS Code, Cursor)中打开该文件夹以激活上下文。

Choose the right agent for your task. You don't always need all of them. 根据你的任务选择合适的智能体组合,不需要每次都全量开启。

| Goal / 目标 | Recommended Agents / 推荐智能体 | Why / 原因 |
|---|---|---|
| Simple Writing / 简单写作 | Content Writer | Direct drafting with style mimicry. 直接生成,保留风格。 |
| Long-form Content / 长文创作 | Outline Manager + Content Writer | Ensures logical structure and flow. 保证长文结构逻辑严密。 |
| Quality Assurance / 质量把控 | Content Writer + Content Review | Checks for AI tone and plagiarism. 检测 AI 味和查重。 |
| Full Automation / 全自动闭环 | Workflow Coordinator | Orchestrates the full loop (Outline → Write → Review). 自动调度全流程。 |

Required for first-time use. Provide 3-5 of your past high-quality writings to the AI. 首次使用必须执行。 把你的 3-5 篇过往高质量文章发给 AI,并说:

"Please use the Style Extractor to analyze these texts and update style_profile.md."

“请使用 Style Extractor 分析这些文章,并更新 style_profile.md。”

Optional but recommended.

Open .ai_context/custom_specs.md and fill in your common writing context.

可选但推荐。

你可以打开 .ai_context/custom_specs.md,填入你常用的写作背景,这样我每次写作都会自动适配这些背景。

Example / 例如:

- Audience / 受众: Technical Beginners / 技术小白

- Domain / 领域: Artificial Intelligence / 人工智能

Agent: Content Writer

Just give a task. No need to repeat complex prompts. 直接发布任务即可,无需每次重复 Prompt。

"Based on my style, write an introduction to RAG technology."

“基于我的风格写一篇关于 RAG 技术的介绍。”

I will automatically read style_profile.md to mimic your tone and check error_log.md to avoid taboos.

我会自动读取 style_profile.md 模仿你的语气,并检查 error_log.md 避开禁忌。

If I make a mistake (e.g., use a word you dislike), correct me immediately. 如果我犯了错(比如用了你不喜欢的词),直接告诉我:

"Don't use the word 'delve'. Add this to my error log."

“不要用‘delve’这个词,把它加入错题本。”

I will automatically update error_log.md to ensure I don't make the same mistake again.

我会自动更新 error_log.md,保证下次不再犯。
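The avoidance check can be pictured as a scan of a draft against the logged terms. The following is a minimal sketch, assuming a hypothetical format in which each banned word appears in error_log.md as a bullet line such as `- delve`; the repository's actual file layout may differ, and `parse_banned_terms` and `find_violations` are illustrative names rather than part of the project.

```python
import re


def parse_banned_terms(error_log_text: str) -> list[str]:
    """Collect terms from bullet lines like '- delve' (assumed log format)."""
    terms = []
    for line in error_log_text.splitlines():
        line = line.strip()
        if line.startswith("- ") and len(line) > 2:
            terms.append(line[2:].strip().lower())
    return terms


def find_violations(draft: str, banned_terms: list[str]) -> list[str]:
    """Return the banned terms that occur as whole words in the draft."""
    hits = []
    for term in banned_terms:
        if re.search(rf"\b{re.escape(term)}\b", draft, flags=re.IGNORECASE):
            hits.append(term)
    return hits


# Hypothetical log content and draft, for illustration only.
error_log = "# Error Log\n- delve\n- leverage\n"
draft = "Let us delve into retrieval-augmented generation."
print(find_violations(draft, parse_banned_terms(error_log)))  # ['delve']
```

A whole-word match keeps the check from flagging longer words that merely contain a banned term.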

Provide durable domain facts or preferences to store. 提供稳定的领域事实或偏好以便长期存储:

"In medical writing, always use mmol/L for glucose. Save this as hard memory."

“在医学领域,葡萄糖单位固定使用 mmol/L,作为硬性记忆存储。”
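Storing such a fact could look like the sketch below. It assumes a hypothetical schema for hard_memory.json in which each domain maps to a flat dictionary of exact key/value pairs; the real schema is defined by the repository and may differ. The function name `save_hard_memory` and the key `glucose_unit` are illustrative.

```python
import json
import tempfile
from pathlib import Path


def save_hard_memory(path: Path, domain: str, key: str, value: str) -> dict:
    """Store one exact fact under its domain and write the file back."""
    text = path.read_text(encoding="utf-8") if path.exists() else ""
    memory = json.loads(text) if text.strip() else {}
    memory.setdefault(domain, {})[key] = value
    path.write_text(
        json.dumps(memory, ensure_ascii=False, indent=2), encoding="utf-8"
    )
    return memory


# Demonstrated in a temporary directory so no real project file is touched.
with tempfile.TemporaryDirectory() as tmp:
    memory_file = Path(tmp) / "hard_memory.json"
    print(save_hard_memory(memory_file, "medical", "glucose_unit", "mmol/L"))
```

Keying by domain first means a later lookup for "medical" returns only the facts relevant to that kind of writing.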

Select a writing domain and apply the corresponding knowledge base. 选择写作类型并应用对应知识库:

"Use the paper knowledge base and draft the Related Work with reviewer-style rigor."

“调用论文知识库,以审稿人视角写 Related Work。”

Agents: Workflow Coordinator / Outline Manager / Content Writer / Content Review

Trigger the multi-agent loop and let the system orchestrate writing. 启动多智能体闭环并交由系统协调:

"Use outline-manager-agent + content-writer-agent + content-review-agent to draft section 2."

"调用大纲管理、写作、检阅三智能体完成第 2 章。"

Agent: Content Review

Ready for AI & Plagiarism Detection. The system is pre-configured to support GPTZero, Copyleaks, and other detection APIs. You just need to add your API key. 已预置 AI 与查重检测能力。 系统已预配置支持 GPTZero、Copyleaks 等检测 API。你只需填入 API Key 即可启用。

- Open configuration file: .ai_context/custom_specs.md
- Find API Keys section: Look for "Detector API Keys"
- Configure keys:
  - GPTZero: Set your GPTZero API Key (e.g., env:GPTZERO_API_KEY)
  - Other services: Set keys for Originality, Copyscape, Turnitin, etc.
- Environment variables: Set the actual API keys in your environment

"Set GPTZero API key in custom_specs.md and environment"

"在 custom_specs.md 中设置 GPTZero API Key 并配置环境变量"

Note: The system will ask for confirmation before using paid services like GPTZero. 注意:系统在使用 GPTZero 等付费服务前会请求用户确认。
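The key lookup and the confirmation step can be illustrated as follows. This is a sketch under two assumptions that the source does not spell out: that a value such as `env:GPTZERO_API_KEY` means "read the key from that environment variable", and that confirmation is a simple yes/no callback. The helper names are hypothetical, and no detection API is actually called.

```python
import os
from typing import Callable, Mapping, Optional


def resolve_api_key(
    spec_value: str, environ: Mapping[str, str] = os.environ
) -> Optional[str]:
    """Resolve 'env:NAME' to the value of NAME; otherwise return the value itself."""
    if spec_value.startswith("env:"):
        return environ.get(spec_value[len("env:"):])
    return spec_value or None


def may_call_paid_service(
    service: str, api_key: Optional[str], confirm: Callable[[str], bool]
) -> bool:
    """Allow the call only when a key is present and the user confirms."""
    if not api_key:
        return False
    return bool(confirm(f"{service} is a paid service. Proceed?"))


# A fake environment and an auto-approving callback stand in for real input.
key = resolve_api_key("env:GPTZERO_API_KEY", environ={"GPTZERO_API_KEY": "demo-key"})
print(may_call_paid_service("GPTZero", key, confirm=lambda prompt: True))  # True
```

Keeping only the variable name in custom_specs.md, with the secret itself in the environment, avoids committing keys to the repository.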

Tip: Looking for free alternatives? Check out FREE_AI_DETECTION_APIS.md for a curated list of free AI detection APIs (Copyleaks, Sapling, etc.).

提示:寻找免费替代方案?查看 FREE_AI_DETECTION_APIS.md 获取精选的免费 AI 检测 API 列表(如 Copyleaks, Sapling 等)。

- FREE_AI_DETECTION_APIS.md: Guide to free AI detection APIs.
- .ai_context/: The brain of the system.
  - style_profile.md: Your style fingerprint.
  - error_log.md: Your negative constraints.
  - custom_specs.md: User-defined writing context.
  - outline_template.md: Template for structuring content.
  - memory/hard_memory.json: Domain hard memory (terms, units, key values).
  - memory/soft_memory.json: Domain soft memory (preferences, phrasing, tone).
  - prompts/: Core logic prompts.
    - 1_style_extractor.md
    - 2_writer.md
    - 3_error_logger.md
    - 4_grammar_checker.md
    - 5_long_term_memory.md
    - 6_outline_manager_agent.md
    - 7_content_writer_agent.md
    - 8_content_review_agent.md
    - 9_workflow_coordinator.md
- .traerules: System instructions ensuring the workflow is followed.

graph TD
    A[用户写作请求 / User Request] --> B{分析阶段 / Analysis Phase}
    B --> C[Style Extractor<br />风格提取器]
    B --> D[Custom Specs<br />自定义规范]
    B --> P[Long-Term Memory<br />长期记忆]
    C --> E[Style Profile<br />风格库]
    D --> F[Outline Template<br />大纲模板]
    A --> G{存储阶段 / Storage Phase}
    G --> E
    G --> H[Error Log<br />错题本]
    G --> Q[Hard Memory<br />硬性记忆]
    G --> R[Soft Memory<br />柔性记忆]
    A --> I{生成阶段 / Generation Phase}
    I --> J[The Writer<br />写作引擎]
    I --> K[Grammar Checker<br />语法检查器]
    I --> S[Memory Recall<br />记忆检索]
    J --> L[生成内容 / Generated Content]
    K --> L
    S --> L
    L --> M{迭代阶段 / Iteration Phase}
    M --> N[用户反馈 / User Feedback]
    N --> O[Error Logger<br />错误记录器]
    O --> H
    O --> Q
    O --> R
    style A fill:#f9f,stroke:#333,stroke-width:2px
    style L fill:#9f9,stroke:#333,stroke-width:2px
    style H fill:#ff9,stroke:#333,stroke-width:2px
    style E fill:#9ff,stroke:#333,stroke-width:2px

Core Logic / 核心逻辑: Analysis (extract style) -> Storage (build the style profile and error log) -> Generation (RAG retrieval augmentation) -> Iteration (update the error log)

Workflow Explanation / 流程说明:

- Analysis: The system analyzes user-provided samples and domain context to extract style traits and memory candidates.

- Storage: Hard memory and soft memory are stored by domain alongside the style profile and error log.

- Generation: The Writer retrieves relevant hard/soft memory to ensure accuracy and tone alignment, while the Grammar Checker ensures quality.

- Iteration: User feedback updates both the error log and long-term memory to improve future outputs.
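The Generation step described above, in which the Writer retrieves hard and soft memory before drafting, can be sketched as assembling a context string. This is an illustration only: the memory dictionaries reuse an assumed `{domain: {key: value}}` shape, and `recall` and `build_writer_context` are hypothetical names, not functions from the repository.

```python
def recall(memory: dict, domain: str) -> dict:
    """Return the entries stored for one domain, or an empty dict."""
    return memory.get(domain, {})


def build_writer_context(
    task: str,
    domain: str,
    hard_memory: dict,
    soft_memory: dict,
    banned_terms: list[str],
) -> str:
    """Combine the task with recalled memory and error-log constraints."""
    lines = [f"Task: {task}"]
    for key, value in recall(hard_memory, domain).items():
        lines.append(f"Must use exactly: {key} = {value}")
    for key, value in recall(soft_memory, domain).items():
        lines.append(f"Prefer: {key} = {value}")
    if banned_terms:
        lines.append("Avoid: " + ", ".join(banned_terms))
    return "\n".join(lines)


# Illustrative data; the values are not taken from the project files.
print(
    build_writer_context(
        task="Write an introduction to RAG technology.",
        domain="ai",
        hard_memory={"ai": {"RAG": "Retrieval-Augmented Generation"}},
        soft_memory={"ai": {"tone": "plain and direct"}},
        banned_terms=["delve"],
    )
)
```

Hard-memory entries are phrased as requirements and soft-memory entries as preferences, mirroring the distinction between exact terms and stylistic habits.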

This tutorial shows how to configure each agent role using existing prompt and spec files.

以下教程演示如何通过现有的 prompt 与规范文件配置各智能体角色。

Purpose / 作用: Create, store, and validate outlines. / 创建、存储并校验大纲。

Where to edit / 编辑位置:

- .ai_context/prompts/6_outline_manager_agent.md
- .ai_context/outline_template.md
- .ai_context/custom_specs.md

Configuration Steps / 配置步骤:

- Define Structure: Open .ai_context/outline_template.md and define your preferred outline JSON structure (sections, paragraphs, word ranges).
- Set Validation Rules: In .ai_context/custom_specs.md, adjust outline validation thresholds:
  - Word Deviation Tolerance: Acceptable deviation from word count targets (e.g., 0.1 for 10%).
  - Core Point Coverage: Minimum percentage of core points that must be covered (e.g., 0.9).
- Configure Storage: (Optional) In 6_outline_manager_agent.md, modify the outline storage key format if needed.
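The two validation thresholds above can be read as simple numeric checks. The sketch below is one possible interpretation, not the agent's actual logic: it assumes the tolerance is relative to the target word count and that coverage is measured by a naive case-insensitive substring match. All function names are hypothetical.

```python
def within_word_tolerance(actual: int, target: int, tolerance: float = 0.1) -> bool:
    """True when the word count deviates from the target by at most `tolerance`."""
    return abs(actual - target) <= tolerance * target


def core_point_coverage(draft: str, core_points: list[str]) -> float:
    """Fraction of core points mentioned in the draft (naive substring match)."""
    if not core_points:
        return 1.0
    text = draft.lower()
    covered = sum(1 for point in core_points if point.lower() in text)
    return covered / len(core_points)


def validate_section(
    draft: str,
    target_words: int,
    core_points: list[str],
    tolerance: float = 0.1,
    min_coverage: float = 0.9,
) -> bool:
    """A section passes when both the length and coverage checks pass."""
    word_count = len(draft.split())
    length_ok = within_word_tolerance(word_count, target_words, tolerance)
    coverage_ok = core_point_coverage(draft, core_points) >= min_coverage
    return length_ok and coverage_ok


draft = "RAG combines retrieval with generation"
print(validate_section(draft, target_words=5, core_points=["retrieval", "generation"]))  # True
```

With a target of 1,000 words and a tolerance of 0.1, for example, drafts between 900 and 1,100 words would pass the length check.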

Purpose / 作用: Draft and revise content based on outline and memory. / 按大纲与记忆写作与修订。

Where to edit / 编辑位置:

- .ai_context/prompts/7_content_writer_agent.md
- .ai_context/custom_specs.md
- .ai_context/memory/hard_memory.json
- .ai_context/memory/soft_memory.json

Configuration Steps / 配置步骤:

- Set Writing Context: In .ai_context/custom_specs.md, define Target Audience and Topic to guide the writer's tone and depth.
- Configure Revision Limits: In .ai_context/custom_specs.md, set Max Revision Rounds to control how many times the writer can iterate on a draft.
- Populate Memory:
  - Add domain facts (terms, units) to .ai_context/memory/hard_memory.json.
  - Add style preferences (phrasing, tone) to .ai_context/memory/soft_memory.json.
- Output Format: (Optional) In 7_content_writer_agent.md, customize the content output format and metadata fields if specific metadata is required.

Purpose / 作用: Detect AI tone and aggregate platform checks. / AI 味检测与多平台核验。

Where to edit / 编辑位置:

- .ai_context/prompts/8_content_review_agent.md
- .ai_context/custom_specs.md

Configuration Steps / 配置步骤:

- Configure GPTZero MCP (New!):
  - The agent now supports GPTZero via MCP for AI detection and plagiarism checking.
  - Code Reference: 8_content_review_agent.md
- Set API Keys & Settings:
  - Open .ai_context/custom_specs.md.
  - Fill in GPTZero MCP settings: Service Name, Method, Timeout, and Retry count.
  - Set your GPTZero API Key.
  - Code Reference: custom_specs.md
- Usage: When you trigger "Review" or "Detection", the agent will automatically call GPTZero via MCP and include the results (AI probability, Plagiarism score) in the unified report.
- Adjust Thresholds: In .ai_context/custom_specs.md, set AI Tone Threshold to determine when a rewrite is triggered.

Purpose / 作用: Orchestrate outline → write → review loops. / 协调整体闭环流程。

Where to edit / 编辑位置:

- .ai_context/prompts/9_workflow_coordinator.md
- .ai_context/custom_specs.md

Configuration Steps / 配置步骤:

- In 9_workflow_coordinator.md, set the loop order and max revision rounds.
- In custom_specs.md, align coordination rules with your writing cadence.

- outline-manager-agent

- content-writer-agent

- content-review-agent

- workflow-coordinator

graph TD
    Start([Start / 开始]) --> Coordinator[Workflow Coordinator<br />流程协调器]
    Coordinator -->|1. Create/Load| OutlineMgr[Outline Manager<br />大纲管理智能体]
    OutlineMgr -->|Save to Memory| HardMem[(Hard Memory<br />硬性记忆)]
    OutlineMgr -->|Valid Outline| Coordinator
    Coordinator -->|2. Draft Section| Writer[Content Writer<br />写作智能体]
    HardMem -.->|Read Outline| Writer
    Writer -->|Draft| Coordinator
    Coordinator -->|3. Review| Reviewer[Content Review<br />检阅智能体]
    Reviewer -->|Check| MCP[GPTZero MCP]
    Reviewer -->|Result| Decision{Pass? / 通过?}
    Decision -->|Yes| Finish([Finish / 完成])
    Decision -->|No: Revise / 修订| Writer
    style Coordinator fill:#f96,stroke:#333,stroke-width:2px
    style OutlineMgr fill:#9cf,stroke:#333,stroke-width:2px
    style Writer fill:#9f9,stroke:#333,stroke-width:2px
    style Reviewer fill:#fc9,stroke:#333,stroke-width:2px
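The write, review, and revise cycle in the diagram can be sketched as a bounded loop. This is a simplified illustration rather than the coordinator's real behavior: the writer and reviewer are passed in as plain callables, the reviewer is assumed to return an AI-tone score where higher means more AI-like, and the default threshold and round limit are placeholder values.

```python
from typing import Callable


def run_loop(
    write: Callable[[str, str], str],
    review: Callable[[str], float],
    outline: str,
    ai_tone_threshold: float = 0.5,
    max_revision_rounds: int = 3,
) -> tuple[str, bool, int]:
    """Draft and revise until the score drops below the threshold or rounds run out.

    Returns (last draft, whether it passed, number of rounds used).
    """
    draft = ""
    feedback = ""
    for round_no in range(1, max_revision_rounds + 1):
        draft = write(outline, feedback)
        score = review(draft)
        if score < ai_tone_threshold:
            return draft, True, round_no
        feedback = f"AI tone score {score:.2f} is not below {ai_tone_threshold}; revise."
    return draft, False, max_revision_rounds


# Stub agents: the reviewer's score improves on each round.
scores = iter([0.8, 0.6, 0.3])
draft, passed, rounds = run_loop(
    write=lambda outline, feedback: f"draft for {outline}",
    review=lambda text: next(scores),
    outline="outline-001",
)
print(passed, rounds)  # True 3
```

Capping the rounds guarantees the loop ends even when the reviewer never accepts a draft, in which case the last draft is returned with `passed` set to False.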

If the chart looks stale, update the cache parameter to force refresh.

如果图表显示滞后,可更新 cache 参数以强制刷新。

This project is licensed under the MIT License.

For Tasks:

Click tags to check more tools for each tasksFor Jobs:

Alternative AI tools for AI-Vibe-Writing-Skills

Similar Open Source Tools

AI-Vibe-Writing-Skills

AI Vibe Writing Skills is an AI Skill that provides 'Style Transfer' and 'Error Memory' capabilities for personalized writing. It aims to assist in writing tasks by focusing on creativity, content refinement, and personalized style, rather than replacing the creative process. The tool offers features like style transfer, error memory, grammar check, long-term memory storage, writing knowledge bases, and multi-agent collaboration to enhance content production efficiency and quality.

lighteval

LightEval is a lightweight LLM evaluation suite that Hugging Face has been using internally with the recently released LLM data processing library datatrove and LLM training library nanotron. We're releasing it with the community in the spirit of building in the open. Note that it is still very much early so don't expect 100% stability ^^' In case of problems or question, feel free to open an issue!

agentfield

AgentField is an open-source control plane designed for autonomous AI agents, providing infrastructure for agents to make decisions beyond chatbots. It offers features like scaling infrastructure, routing & discovery, async execution, durable state, observability, trust infrastructure with cryptographic identity, verifiable credentials, and policy enforcement. Users can write agents in Python, Go, TypeScript, or interact via REST APIs. The tool enables the creation of AI backends that reason autonomously within defined boundaries, offering predictability and flexibility. AgentField aims to bridge the gap between AI frameworks and production-ready infrastructure for AI agents.

PAI

PAI is an open-source personal AI infrastructure designed to orchestrate personal and professional lives. It provides a scaffolding framework with real-world examples for life management, professional tasks, and personal goals. The core mission is to augment humans with AI capabilities to thrive in a world full of AI. PAI features UFC Context Architecture for persistent memory, specialized digital assistants for various tasks, an integrated tool ecosystem with MCP Servers, voice system, browser automation, and API integrations. The philosophy of PAI focuses on augmenting human capability rather than replacing it. The tool is MIT licensed and encourages contributions from the open-source community.

automem

AutoMem is a production-grade long-term memory system for AI assistants, achieving 90.53% accuracy on the LoCoMo benchmark. It combines FalkorDB (Graph) and Qdrant (Vectors) storage systems to store, recall, connect, learn, and perform with memories. AutoMem enables AI assistants to remember, connect, and evolve their understanding over time, similar to human long-term memory. It implements techniques from peer-reviewed memory research and offers features like multi-hop bridge discovery, knowledge graphs that evolve, 9-component hybrid scoring, memory consolidation cycles, background intelligence, 11 relationship types, and more. AutoMem is benchmark-proven, research-validated, and production-ready, with features like sub-100ms recall, concurrent writes, automatic retries, health monitoring, dual storage redundancy, and automated backups.

presenton

Presenton is an open-source AI presentation generator and API that allows users to create professional presentations locally on their devices. It offers complete control over the presentation workflow, including custom templates, AI template generation, flexible generation options, and export capabilities. Users can use their own API keys for various models, integrate with Ollama for local model running, and connect to OpenAI-compatible endpoints. The tool supports multiple providers for text and image generation, runs locally without cloud dependencies, and can be deployed as a Docker container with GPU support.

tensorzero

TensorZero is an open-source platform that helps LLM applications graduate from API wrappers into defensible AI products. It enables a data & learning flywheel for LLMs by unifying inference, observability, optimization, and experimentation. The platform includes a high-performance model gateway, structured schema-based inference, observability, experimentation, and data warehouse for analytics. TensorZero Recipes optimize prompts and models, and the platform supports experimentation features and GitOps orchestration for deployment.

evi-run

evi-run is a powerful, production-ready multi-agent AI system built on Python using the OpenAI Agents SDK. It offers instant deployment, ultimate flexibility, built-in analytics, Telegram integration, and scalable architecture. The system features memory management, knowledge integration, task scheduling, multi-agent orchestration, custom agent creation, deep research, web intelligence, document processing, image generation, DEX analytics, and Solana token swap. It supports flexible usage modes like private, free, and pay mode, with upcoming features including NSFW mode, task scheduler, and automatic limit orders. The technology stack includes Python 3.11, OpenAI Agents SDK, Telegram Bot API, PostgreSQL, Redis, and Docker & Docker Compose for deployment.

rkllama

RKLLama is a server and client tool designed for running and interacting with LLM models optimized for Rockchip RK3588(S) and RK3576 platforms. It allows models to run on the NPU, with features such as running models on NPU, partial Ollama API compatibility, pulling models from Huggingface, API REST with documentation, dynamic loading/unloading of models, inference requests with streaming modes, simplified model naming, CPU model auto-detection, and optional debug mode. The tool supports Python 3.8 to 3.12 and has been tested on Orange Pi 5 Pro and Orange Pi 5 Plus with specific OS versions.

claude_code_bridge

Claude Code Bridge (ccb) is a new multi-model collaboration tool that enables effective collaboration among multiple AI models in a split-pane CLI environment. It offers features like visual and controllable interface, persistent context maintenance, token savings, and native workflow integration. The tool allows users to unleash the full power of CLI by avoiding model bias, cognitive blind spots, and context limitations. It provides a new WYSIWYG solution for multi-model collaboration, making it easier to control and visualize multiple AI models simultaneously.

local-cocoa

Local Cocoa is a privacy-focused tool that runs entirely on your device, turning files into memory to spark insights and power actions. It offers features like fully local privacy, multimodal memory, vector-powered retrieval, intelligent indexing, vision understanding, hardware acceleration, focused user experience, integrated notes, and auto-sync. The tool combines file ingestion, intelligent chunking, and local retrieval to build a private on-device knowledge system. The ultimate goal includes more connectors like Google Drive integration, voice mode for local speech-to-text interaction, and a plugin ecosystem for community tools and agents. Local Cocoa is built using Electron, React, TypeScript, FastAPI, llama.cpp, and Qdrant.

Wegent

Wegent is an open-source AI-native operating system designed to define, organize, and run intelligent agent teams. It offers various core features such as a chat agent with multi-model support, conversation history, group chat, attachment parsing, follow-up mode, error correction mode, long-term memory, sandbox execution, and extensions. Additionally, Wegent includes a code agent for cloud-based code execution, AI feed for task triggers, AI knowledge for document management, and AI device for running tasks locally. The platform is highly extensible, allowing for custom agents, agent creation wizard, organization management, collaboration modes, skill support, MCP tools, execution engines, YAML config, and an API for easy integration with other systems.

OpenMemory

OpenMemory is a cognitive memory engine for AI agents, providing real long-term memory capabilities beyond simple embeddings. It is self-hosted and supports Python + Node SDKs, with integrations for various tools like LangChain, CrewAI, AutoGen, and more. Users can ingest data from sources like GitHub, Notion, Google Drive, and others directly into memory. OpenMemory offers explainable traces for recalled information and supports multi-sector memory, temporal reasoning, decay engine, waypoint graph, and more. It aims to provide a true memory system rather than just a vector database with marketing copy, enabling users to build agents, copilots, journaling systems, and coding assistants that can remember and reason effectively.

AIPex

AIPex is a revolutionary Chrome extension that transforms your browser into an intelligent automation platform. Using natural language commands and AI-powered intelligence, AIPex can automate virtually any browser task - from complex multi-step workflows to simple repetitive actions. It offers features like natural language control, AI-powered intelligence, multi-step automation, universal compatibility, smart data extraction, precision actions, form automation, visual understanding, developer-friendly with extensive API, and lightning-fast execution of automation tasks.

alphora

Alphora is a full-stack framework for building production AI agents, providing agent orchestration, prompt engineering, tool execution, memory management, streaming, and deployment with an async-first, OpenAI-compatible design. It offers features like agent derivation, reasoning-action loop, async streaming, visual debugger, OpenAI compatibility, multimodal support, tool system with zero-config tools and type safety, prompt engine with dynamic prompts, memory and storage management, sandbox for secure execution, deployment as API, and more. Alphora allows users to build sophisticated AI agents easily and efficiently.

handit.ai

Handit.ai is an autonomous engineer tool designed to fix AI failures 24/7. It catches failures, writes fixes, tests them, and ships PRs automatically. It monitors AI applications, detects issues, generates fixes, tests them against real data, and ships them as pull requests—all automatically. Users can write JavaScript, TypeScript, Python, and more, and the tool automates what used to require manual debugging and firefighting.

For similar tasks

grammar-llm

GrammarLLM is an AI-powered grammar correction tool that utilizes fine-tuned language models to fix grammatical errors in text. It offers real-time grammar and spelling correction with individual suggestion acceptance. The tool features a clean and responsive web interface, a FastAPI backend integrated with llama.cpp, and support for multiple grammar models. Users can easily deploy the tool using Docker Compose and interact with it through a web interface or REST API. The default model, GRMR-V3-G4B-Q8_0, provides grammar correction, spelling correction, punctuation fixes, and style improvements without requiring a GPU. The tool also includes endpoints for applying single or multiple suggestions to text, a health check endpoint, and detailed documentation for functionality and model details. Testing and verification steps are provided for manual and Docker testing, along with community guidelines for contributing, reporting issues, and getting support.

AI-Vibe-Writing-Skills

AI Vibe Writing Skills is an AI Skill that provides 'Style Transfer' and 'Error Memory' capabilities for personalized writing. It aims to assist in writing tasks by focusing on creativity, content refinement, and personalized style, rather than replacing the creative process. The tool offers features like style transfer, error memory, grammar check, long-term memory storage, writing knowledge bases, and multi-agent collaboration to enhance content production efficiency and quality.

CEO

CEO is an intuitive and modular AI agent framework designed for task automation. It provides a flexible environment for building agents with specific abilities and personalities, allowing users to assign tasks and interact with the agents to automate various processes. The framework supports multi-agent collaboration scenarios and offers functionalities like instantiating agents, granting abilities, assigning queries, and executing tasks. Users can customize agent personalities and define specific abilities using decorators, making it easy to create complex automation workflows.

For similar jobs

MaxKB

MaxKB is a knowledge base Q&A system based on the LLM large language model. MaxKB = Max Knowledge Base, which aims to become the most powerful brain of the enterprise.

crewAI

crewAI is a cutting-edge framework for orchestrating role-playing, autonomous AI agents. By fostering collaborative intelligence, CrewAI empowers agents to work together seamlessly, tackling complex tasks. It provides a flexible and structured approach to AI collaboration, enabling users to define agents with specific roles, goals, and tools, and assign them tasks within a customizable process. crewAI supports integration with various LLMs, including OpenAI, and offers features such as autonomous task delegation, flexible task management, and output parsing. It is open-source and welcomes contributions, with a focus on improving the library based on usage data collected through anonymous telemetry.

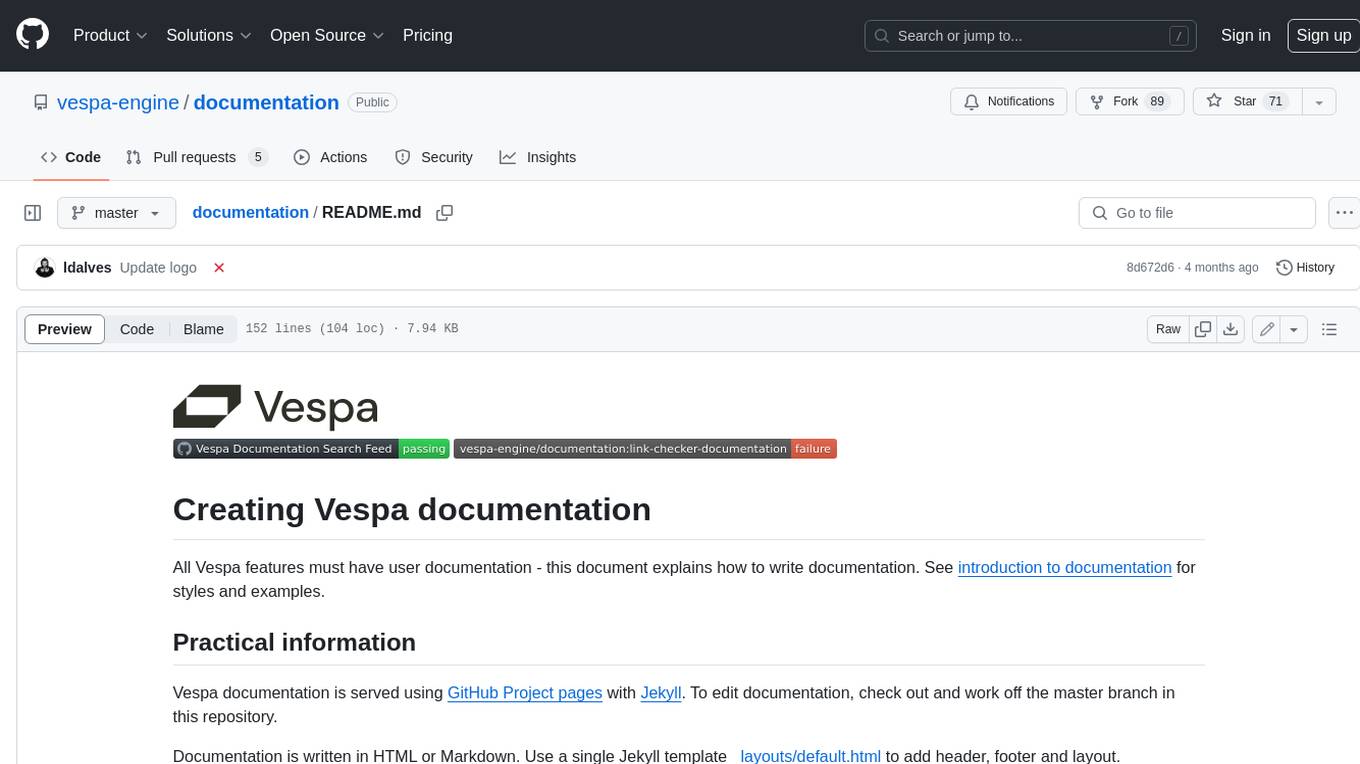

documentation

Vespa documentation is served using GitHub Project pages with Jekyll. To edit documentation, check out and work off the master branch in this repository. Documentation is written in HTML or Markdown. Use a single Jekyll template _layouts/default.html to add header, footer and layout. Install bundler, then $ bundle install $ bundle exec jekyll serve --incremental --drafts --trace to set up a local server at localhost:4000 to see the pages as they will look when served. If you get strange errors on bundle install try $ export PATH=“/usr/local/opt/[email protected]/bin:$PATH” $ export LDFLAGS=“-L/usr/local/opt/[email protected]/lib” $ export CPPFLAGS=“-I/usr/local/opt/[email protected]/include” $ export PKG_CONFIG_PATH=“/usr/local/opt/[email protected]/lib/pkgconfig” The output will highlight rendering/other problems when starting serving. Alternatively, use the docker image `jekyll/jekyll` to run the local server on Mac $ docker run -ti --rm --name doc \ --publish 4000:4000 -e JEKYLL_UID=$UID -v $(pwd):/srv/jekyll \ jekyll/jekyll jekyll serve or RHEL 8 $ podman run -it --rm --name doc -p 4000:4000 -e JEKYLL_ROOTLESS=true \ -v "$PWD":/srv/jekyll:Z docker.io/jekyll/jekyll jekyll serve The layout is written in denali.design, see _layouts/default.html for usage. Please do not add custom style sheets, as it is harder to maintain.

deep-seek

DeepSeek is a new experimental architecture for a large language model (LLM) powered internet-scale retrieval engine. Unlike current research agents designed as answer engines, DeepSeek aims to process a vast amount of sources to collect a comprehensive list of entities and enrich them with additional relevant data. The end result is a table with retrieved entities and enriched columns, providing a comprehensive overview of the topic. DeepSeek utilizes both standard keyword search and neural search to find relevant content, and employs an LLM to extract specific entities and their associated contents. It also includes a smaller answer agent to enrich the retrieved data, ensuring thoroughness. DeepSeek has the potential to revolutionize research and information gathering by providing a comprehensive and structured way to access information from the vastness of the internet.

basehub

JavaScript / TypeScript SDK for BaseHub, the first AI-native content hub. **Features:** * ✨ Infers types from your BaseHub repository... _meaning IDE autocompletion works great._ * 🏎️ No dependency on graphql... _meaning your bundle is more lightweight._ * 🌐 Works everywhere `fetch` is supported... _meaning you can use it anywhere._

discourse-chatbot

The discourse-chatbot is an original AI chatbot for Discourse forums that allows users to converse with the bot in posts or chat channels. Users can customize the character of the bot, enable RAG mode for expert answers, search Wikipedia, news, and Google, provide market data, perform accurate math calculations, and experiment with vision support. The bot uses cutting-edge Open AI API and supports Azure and proxy server connections. It includes a quota system for access management and can be used in RAG mode or basic bot mode. The setup involves creating embeddings to make the bot aware of forum content and setting up bot access permissions based on trust levels. Users must obtain an API token from Open AI and configure group quotas to interact with the bot. The plugin is extensible to support other cloud bots and content search beyond the provided set.

crewAI

CrewAI is a cutting-edge framework designed to orchestrate role-playing autonomous AI agents. By fostering collaborative intelligence, CrewAI empowers agents to work together seamlessly, tackling complex tasks. It enables AI agents to assume roles, share goals, and operate in a cohesive unit, much like a well-oiled crew. Whether you're building a smart assistant platform, an automated customer service ensemble, or a multi-agent research team, CrewAI provides the backbone for sophisticated multi-agent interactions. With features like role-based agent design, autonomous inter-agent delegation, flexible task management, and support for various LLMs, CrewAI offers a dynamic and adaptable solution for both development and production workflows.

KB-Builder

KB Builder is an open-source knowledge base generation system based on the LLM large language model. It utilizes the RAG (Retrieval-Augmented Generation) data generation enhancement method to provide users with the ability to enhance knowledge generation and quickly build knowledge bases based on RAG. It aims to be the central hub for knowledge construction in enterprises, offering platform-based intelligent dialogue services and document knowledge base management functionality. Users can upload docx, pdf, txt, and md format documents and generate high-quality knowledge base question-answer pairs by invoking large models through the 'Parse Document' feature.