all-in-rag

🔍 LLM Application Development in Practice I: A Full-Stack Guide to RAG. Read online: https://datawhalechina.github.io/all-in-rag/

Stars: 710

All-in-RAG is a full-stack tutorial on RAG (Retrieval-Augmented Generation) for developers building applications on large language models (LLMs). It covers data preparation, index construction, retrieval optimization, generation integration, and system evaluation. It also includes hands-on projects, multimodal (text + image) retrieval, and engineering best practices for building production-grade question-answering and knowledge-retrieval systems.

README:

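| 🎯 Systematic learning | 🛠️ Hands-on practice | 🚀 Production-ready | 📊 Multimodal support |
|---|---|---|---|
| A complete RAG technology stack | Rich project examples | Engineering best practices | Text + image retrieval |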

Project Overview

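This project is a full-stack RAG (Retrieval-Augmented Generation) tutorial for LLM application developers. It offers a structured learning path and hands-on projects. Together these help developers master the skills needed to build RAG applications on large language models and to construct production-grade intelligent question-answering and knowledge-retrieval systems.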

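Main contents:

- RAG fundamentals: an accessible introduction to the core concepts, technical principles, and application scenarios of RAG

- End-to-end data processing: the complete data preparation pipeline, from data loading and cleaning to text chunking

- Index construction and optimization: vector embeddings, multimodal embeddings, vector database construction, and index optimization techniques

- Advanced retrieval: hybrid retrieval, query construction, Text2SQL, and other advanced retrieval techniques

- Generation integration and evaluation: formatted generation, plus methods for system evaluation and optimization

- Hands-on projects: complete RAG application development practice, from basic to advanced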
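The data-processing step (cleaning, then chunking) can be illustrated roughly as follows. The sketch collapses whitespace and then splits text into fixed-size character windows that overlap. It is a minimal sketch under our own assumptions, not the tutorial's splitter. `clean_text` and `split_text` are hypothetical helper names, and sizes are measured in characters rather than tokens.

```python
def clean_text(text: str) -> str:
    """Collapse runs of whitespace (spaces, tabs, newlines) into single spaces."""
    return " ".join(text.split())


def split_text(text: str, chunk_size: int = 500, overlap: int = 50) -> list[str]:
    """Split text into character windows of `chunk_size`, where each window
    shares `overlap` characters with the previous one (hypothetical helper)."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must satisfy 0 <= overlap < chunk_size")
    step = chunk_size - overlap  # how far each new window advances
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reaches the end of the text
    return chunks
```

A call such as `split_text(clean_text(raw), chunk_size=500, overlap=50)` yields chunks ready for embedding. The overlap keeps a sentence that straddles a chunk boundary visible in both neighboring chunks, at the cost of some duplicated text in the index.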

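With the rapid development of large language models, RAG has become a core technique for building intelligent question-answering systems and knowledge-retrieval applications. However, existing RAG tutorials tend to be fragmented and unsystematic. This makes it hard for beginners to form a complete picture of the technology.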

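Starting from practice and drawing on the latest trends in RAG, this project builds a complete RAG learning system that helps developers:

- Systematically master the theoretical foundations and practical skills of RAG

- Understand the overall architecture of a RAG system and the role of each component

- Develop RAG applications independently

- Master methods for evaluating and optimizing RAG systems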

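This project is suitable for:

- Developers with basic Python programming skills who are interested in RAG

- AI engineers who want to learn RAG systematically

- Product developers who want to build intelligent question-answering systems

- Researchers who need to learn about retrieval-augmented generation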

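Prerequisites:

- Basic Python syntax and familiarity with commonly used libraries

- Basic ability to use Docker

- Familiarity with basic LLM concepts (recommended but not required)

- Basic Linux command-line skills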

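Project highlights:

- Structured learning path: from basic concepts to advanced applications, building a complete RAG knowledge system

- Balance of theory and practice: every chapter combines theoretical explanation with hands-on code, so that what you learn can be applied

- Multimodal support: covers not only text RAG but also multimodal embedding and retrieval

- Engineering focus: emphasizes engineering concerns in real applications, including performance optimization and system evaluation

- Rich hands-on projects: several projects, from basic to advanced, to consolidate what you have learned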

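Chapter 1: Unlocking RAG 📖 View chapter

- [x] Introduction to RAG - overview of RAG and its application scenarios

- [x] Preparation - environment setup and preparation

- [x] Building RAG in Four Steps - a quick start to RAG development

- [x] Appendix: Environment Deployment - supplementary guide to deploying a Python virtual environment (contributor: @anarchysaiko)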
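The chapter list does not say what the four steps are. Purely as an assumption, the sketch below uses a common breakdown: prepare the data, build an index, retrieve, then generate. To run with only the standard library, it deliberately replaces embeddings with simple keyword overlap. It stops at assembling the prompt because the actual LLM call depends on the provider. All function names are hypothetical.

```python
import re


def tokenize(text: str) -> list[str]:
    # Lowercase and keep alphanumeric runs; a stand-in for a real tokenizer.
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(docs: list[str]) -> list[set[str]]:
    # Step 2 (assumed): index each chunk as the set of terms it contains.
    return [set(tokenize(d)) for d in docs]


def retrieve(query: str, docs: list[str], index: list[set[str]], k: int = 2) -> list[str]:
    # Step 3 (assumed): rank chunks by how many query terms they share,
    # keep the top k, and drop chunks that share no terms at all.
    q = set(tokenize(query))
    ranked = sorted(range(len(docs)), key=lambda i: len(q & index[i]), reverse=True)
    return [docs[i] for i in ranked[:k] if q & index[i]]


def build_prompt(query: str, contexts: list[str]) -> str:
    # Step 4 (assumed): ground the LLM by placing retrieved chunks in the prompt.
    context_block = "\n".join(f"- {c}" for c in contexts)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context_block}\n"
        f"Question: {query}\nAnswer:"
    )
```

Step 1 is simply the list of prepared chunks passed as `docs`. The string returned by `build_prompt` is what would then be sent to whichever LLM client you use.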

Chapter 2: Data Preparation 📖 View chapter

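Chapter 3: Index Construction 📖 View chapter

- [x] Vector Embeddings - text vectorization in depth

- [x] Multimodal Embeddings - image-text multimodal vectorization

- [x] Vector Databases - vector storage and retrieval systems

- [x] Milvus in Practice - hands-on multimodal retrieval with Milvus

- [x] Index Optimization - techniques for tuning index performance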
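The sketch below is a minimal exact ("flat") cosine-similarity index that makes the vector-database idea concrete. It is not Milvus's API. `FlatVectorIndex` is a hypothetical class, and in practice the vectors would come from an embedding model. Vector databases such as Milvus also offer approximate indexes (for example HNSW or IVF) that avoid scanning every vector. A flat scan like this one is the exact baseline that those indexes trade some accuracy against.

```python
import math


class FlatVectorIndex:
    """Hypothetical brute-force (exact) cosine-similarity index."""

    def __init__(self) -> None:
        self.ids: list[str] = []
        self.vectors: list[list[float]] = []

    @staticmethod
    def _normalize(v: list[float]) -> list[float]:
        norm = math.sqrt(sum(x * x for x in v))
        if norm == 0:
            raise ValueError("cannot use a zero vector")
        return [x / norm for x in v]

    def add(self, doc_id: str, vector: list[float]) -> None:
        # Store unit-length vectors so cosine similarity becomes a dot product.
        if self.vectors and len(vector) != len(self.vectors[0]):
            raise ValueError("dimension mismatch")
        self.ids.append(doc_id)
        self.vectors.append(self._normalize(vector))

    def search(self, query: list[float], k: int = 3) -> list[tuple[str, float]]:
        # Exact search: score every stored vector, then keep the k best.
        if self.vectors and len(query) != len(self.vectors[0]):
            raise ValueError("dimension mismatch")
        q = self._normalize(query)
        scores = [sum(a * b for a, b in zip(q, v)) for v in self.vectors]
        ranked = sorted(zip(self.ids, scores), key=lambda pair: pair[1], reverse=True)
        return ranked[:k]
```

Normalizing once at insert time means each query costs one dot product per stored vector. That linear cost is exactly what approximate indexes are designed to avoid at scale.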

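Chapter 4: Retrieval Optimization 📖 View chapter

- [x] Hybrid Retrieval - fusing dense and sparse retrieval

- [x] Query Construction - intelligent query understanding and construction

- [x] Text2SQL - converting natural language into SQL queries

- [x] Query Rewriting and Routing - query optimization strategies

- [x] Advanced Retrieval Techniques - advanced retrieval algorithms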
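Reciprocal Rank Fusion (RRF) is one common way to fuse dense and sparse results. It combines ranked lists using only each document's rank, so the retrievers' raw scores (cosine similarities versus BM25 scores, say) never need to be put on the same scale. The sketch below assumes each retriever has already returned a list of document ids. The constant `k = 60` is the value commonly used with RRF and should be treated as tunable. The tutorial's own fusion strategy may differ; weighted score fusion is another option.

```python
def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[tuple[str, float]]:
    """Fuse ranked lists of doc ids: score(d) = sum over lists of 1 / (k + rank(d))."""
    scores: dict[str, float] = {}
    for ranking in rankings:
        # Ranks start at 1; a document missing from a list contributes nothing for it.
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)
```

For example, if a dense retriever returns `["d1", "d2", "d3"]` and a sparse retriever returns `["d3", "d1", "d4"]`, fusion ranks `d1` first because it appears near the top of both lists.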

Chapter 5: Generation Integration 📖 View chapter

- [x] Formatted Generation - structured output and format control
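A frequent problem with formatted generation is that the model wraps its JSON in extra prose. The sketch below shows a minimal, hypothetical post-processing step for that case. It takes the span from the first `{` to the last `}` and parses it with the standard `json` module. It then checks that the assumed fields `answer` (a string) and `sources` (a list) are present. The schema is illustrative only.

```python
import json

# Hypothetical output schema: field name -> expected Python type.
REQUIRED_FIELDS = {"answer": str, "sources": list}


def parse_structured_output(raw: str) -> dict:
    """Parse the span from the first '{' to the last '}' of raw model text
    and validate the assumed fields; raises ValueError on failure."""
    start, end = raw.find("{"), raw.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON object found in model output")
    # json.JSONDecodeError is a subclass of ValueError.
    data = json.loads(raw[start:end + 1])
    for field, expected_type in REQUIRED_FIELDS.items():
        if not isinstance(data.get(field), expected_type):
            raise ValueError(f"field {field!r} missing or not {expected_type.__name__}")
    return data
```

When validation fails, a common pattern is to re-prompt the model with the error message. That retry loop is not shown here.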

Chapter 6: RAG System Evaluation 📖 View chapter
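The chapter's contents are not listed here, so the example below rests on an assumption about what it covers. It computes two standard retrieval metrics over a small labeled set. Hit rate@k is the fraction of queries with at least one relevant document in the top k. Mean reciprocal rank (MRR) averages 1/rank of the first relevant hit, counting 0 when none is found. These metrics measure retrieval only. Judging the generated answers (for example, their faithfulness to the retrieved context) typically needs an LLM judge or human labels and is not covered.

```python
def hit_rate_at_k(results: list[list[str]], relevant: list[set[str]], k: int) -> float:
    """Fraction of queries whose top-k results contain a relevant doc id."""
    if not results:
        raise ValueError("need at least one query")
    hits = sum(1 for ranked, rel in zip(results, relevant) if rel & set(ranked[:k]))
    return hits / len(results)


def mean_reciprocal_rank(results: list[list[str]], relevant: list[set[str]]) -> float:
    """Average of 1/rank of the first relevant doc per query (0 if none found)."""
    if not results:
        raise ValueError("need at least one query")
    total = 0.0
    for ranked, rel in zip(results, relevant):
        for rank, doc_id in enumerate(ranked, start=1):
            if doc_id in rel:
                total += 1.0 / rank
                break  # only the first relevant hit counts
    return total / len(results)
```

Here `results[i]` is the ranked list returned for query `i`, and `relevant[i]` is the hand-labeled set of correct document ids for that query.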

Chapter 7: Advanced RAG Architectures (Extension) 📖 View chapter

- [x] Knowledge-Graph-Based RAG

Chapter 8: Project in Practice 1 📖 View chapter

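Chapter 9: Project in Practice 1, Optimization (Optional) 📖 View chapter

- [x] Graph RAG Architecture Design

- [x] Graph Data Modeling and Preparation

- [x] Building Milvus Indexes

- [x] Intelligent Query Routing and Retrieval Strategies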
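Graph RAG commonly retrieves the neighborhood of the entities mentioned in a query and passes those facts to the LLM as context. The sketch below illustrates the idea with a breadth-first search over in-memory `(subject, relation, object)` triples. It collects every triple within `max_hops` hops of the seed entities. It assumes entity extraction has already happened, and `k_hop_triples` is a hypothetical helper. It does not reflect the project's actual graph schema or storage, which would more typically live in a graph database.

```python
from collections import deque

Triple = tuple[str, str, str]  # (subject, relation, object)


def k_hop_triples(triples: list[Triple], seeds: list[str], max_hops: int = 2) -> list[Triple]:
    """Return triples reachable within `max_hops` hops of the seed entities,
    in breadth-first discovery order (hypothetical helper)."""
    # Treat edges as undirected for retrieval: index each triple under both ends.
    adjacency: dict[str, list[Triple]] = {}
    for t in triples:
        subject, _, obj = t
        adjacency.setdefault(subject, []).append(t)
        adjacency.setdefault(obj, []).append(t)

    seen_entities = set(seeds)
    seen_triples: set[Triple] = set()
    collected: list[Triple] = []
    queue = deque((entity, 0) for entity in seeds)
    while queue:
        entity, depth = queue.popleft()
        if depth == max_hops:
            continue  # do not expand beyond the hop limit
        for t in adjacency.get(entity, []):
            if t not in seen_triples:
                seen_triples.add(t)
                collected.append(t)
            subject, _, obj = t
            neighbor = obj if subject == entity else subject
            if neighbor not in seen_entities:
                seen_entities.add(neighbor)
                queue.append((neighbor, depth + 1))
    return collected
```

The collected triples can then be serialized as lines such as `Alice works_at Acme` and placed in the prompt, in the same way as text chunks.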

Chapter 10: Project in Practice 2 (Optional) 📖 View chapter (planned)

all-in-rag/

├── docs/ # tutorial documents

├── code/ # code examples

├── data/ # sample data

├── models/ # pre-trained models

└── README.md # project description

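Core contributors

- 尹大吕 - project lead (project initiator and main contributor)

Additional chapter contributors

- 孙超 - content creator (Datawhale member, Shanghai University of Engineering Science)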

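- Thanks to @Sm1les for help and support with this project

- Thanks to all the developers who have contributed to this project

- Thanks to the open-source community for its excellent tools and frameworks

- Special thanks to the following developers who contributed to the tutorial!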

Made with contrib.rocks.

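We welcome contributions of all kinds, including but not limited to:

- 🚨 Bug reports: if you find a problem, please open an Issue

- 💭 Tutorial suggestions: share your ideas in Discussions

- 📚 Documentation improvements: help improve the documentation and example code (currently only high-quality PRs for Chapter 7 are accepted)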

If this project helps you, please give us a ⭐️

Help more people discover this project (keeping it to yourself? Share it!)

This work is licensed under the Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.


Alternative AI tools for all-in-rag

Similar Open Source Tools


base-llm

Base LLM is a comprehensive learning tutorial from traditional Natural Language Processing (NLP) to Large Language Models (LLM), covering core technologies such as word embeddings, RNN, Transformer architecture, BERT, GPT, and Llama series models. The project aims to help developers build a solid technical foundation by providing a clear path from theory to practical engineering. It covers NLP theory, Transformer architecture, pre-trained language models, advanced model implementation, and deployment processes.

KubeDoor

KubeDoor is a microservice resource management platform developed with Python and Vue and built on the K8S admission control mechanism. It supports unified remote storage, monitoring, alerting, notification, and display for multiple K8S clusters. The platform focuses on analyzing and controlling microservice resources during daily peak hours, ensuring consistency between the resource request rate and the actual usage rate.

Snap-Solver

Snap-Solver is a revolutionary AI tool for online exam solving, designed for students, test-takers, and self-learners. With just a keystroke, it automatically captures any question on the screen, analyzes it using AI, and provides detailed answers. Whether it's complex math formulas, physics problems, coding issues, or challenges from other disciplines, Snap-Solver offers clear, accurate, and structured solutions to help you better understand and master the subject matter.

BiBi-Keyboard

BiBi-Keyboard is an AI-based intelligent voice input method that aims to make voice input more natural and efficient. It provides features such as voice recognition with simple and intuitive operations, multiple ASR engine support, AI text post-processing, floating ball input for cross-input method usage, AI editing panel with rich editing tools, Material3 design for modern interface style, and support for multiple languages. Users can adjust keyboard height, test input directly in the settings page, view recognition word count statistics, receive vibration feedback, and check for updates automatically. The tool requires Android 10.0 or higher, microphone permission for voice recognition, optional overlay permission for the floating ball feature, and optional accessibility permission for automatic text insertion.

douyin-chatgpt-bot

Douyin ChatGPT Bot is an AI-driven system for automatic replies on Douyin, including comment and private message replies. It offers features such as comment filtering, customizable robot responses, and automated account management. The system aims to enhance user engagement and brand image on the Douyin platform, providing a seamless experience for managing interactions with followers and potential customers.

MaiMBot

MaiMBot is an intelligent QQ group chat bot based on a large language model. It is developed using the nonebot2 framework, utilizes LLM for conversation abilities, MongoDB for data persistence, and NapCat for QQ protocol support. The bot features keyword-triggered proactive responses, dynamic prompt construction, support for images and message forwarding, typo generation, multiple replies, emotion-based emoji responses, daily schedule generation, user relationship management, knowledge base, and group impressions. Work-in-progress features include personality, group atmosphere, image handling, humor, meme functions, and Minecraft interactions. The tool is in active development with plans for GIF compatibility, mini-program link parsing, bug fixes, documentation improvements, and logic enhancements for emoji sending.

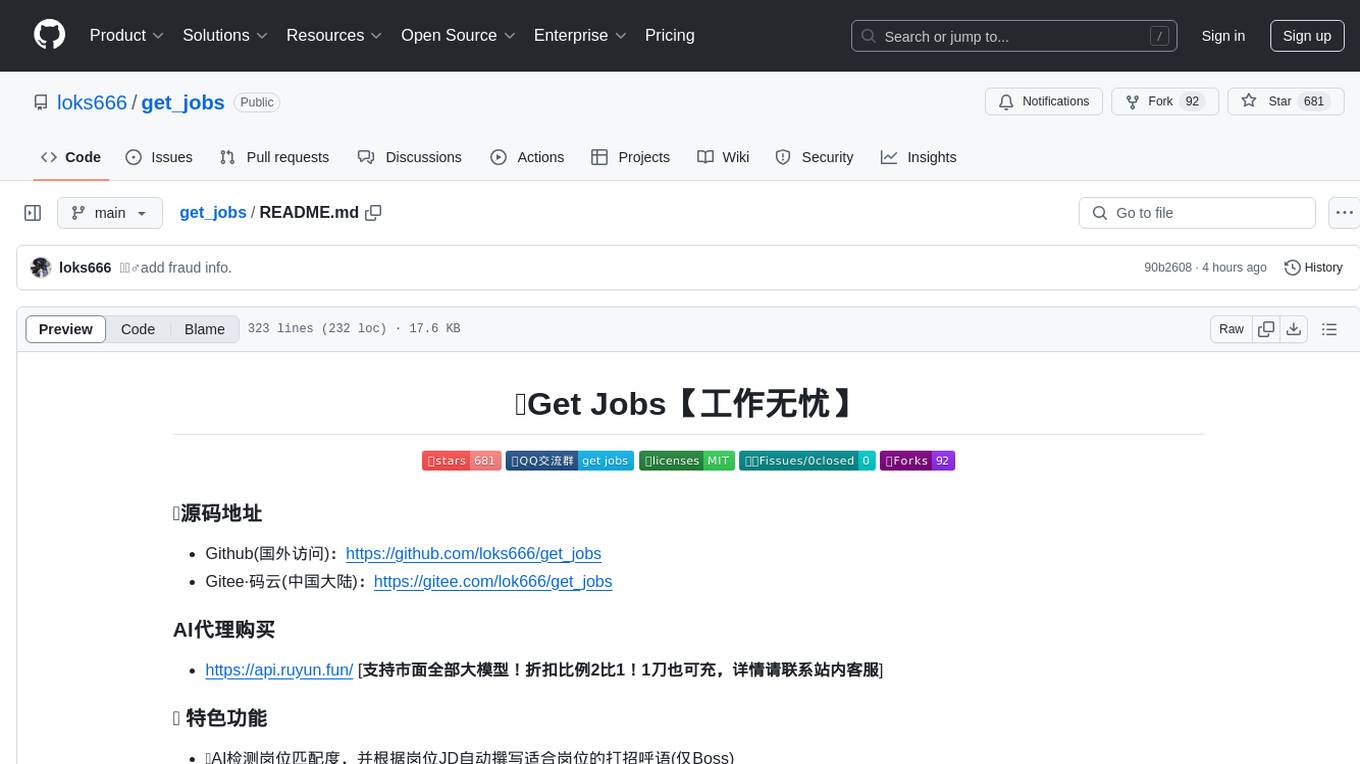

get_jobs

Get Jobs is a tool designed to help users find and apply for job positions on various recruitment platforms in China. It features AI job matching, automatic cover letter generation, multi-platform job application, automated filtering of inactive HR and headhunter positions, real-time WeChat message notifications, blacklisted company updates, driver adaptation for Win11, centralized configuration, long-lasting cookie login, XPathHelper plugin, global logging, and more. The tool supports platforms like Boss直聘, 猎聘, 拉勾, 51job, and 智联招聘. Users can configure the tool for customized job searches and applications.

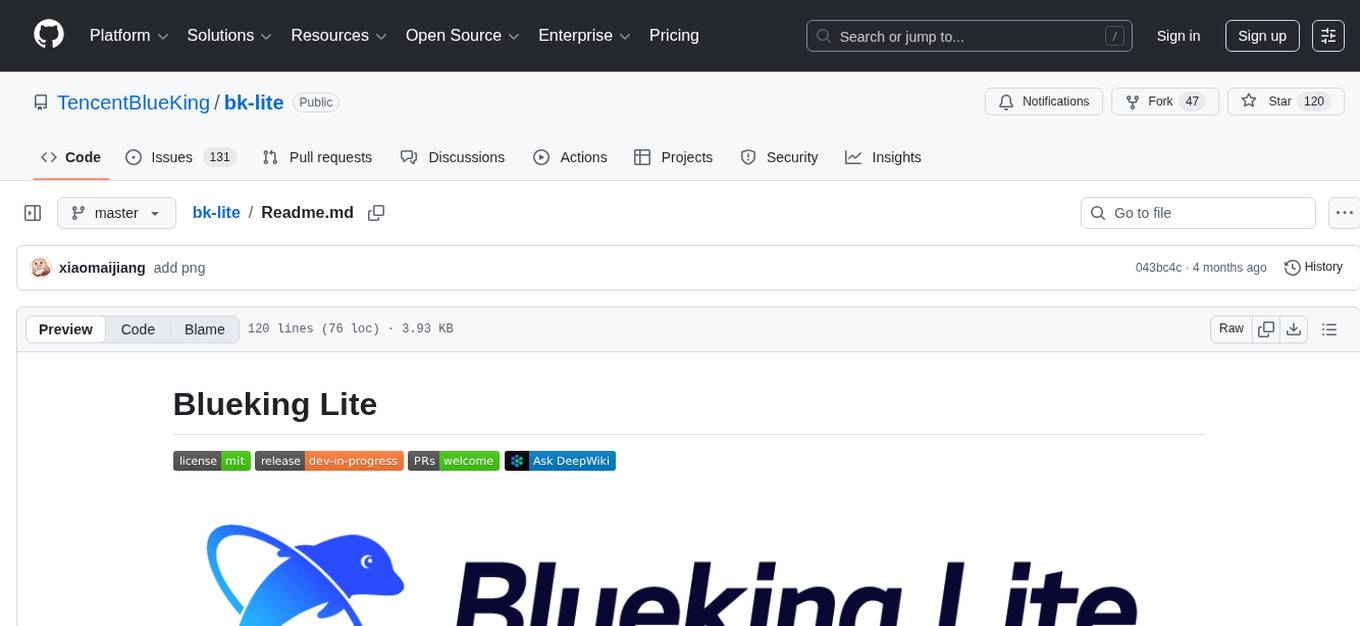

bk-lite

Blueking Lite is an AI-first, lightweight operations product with low deployment resource requirements, low usage costs, and a progressive experience, providing essential tools for operations administrators.

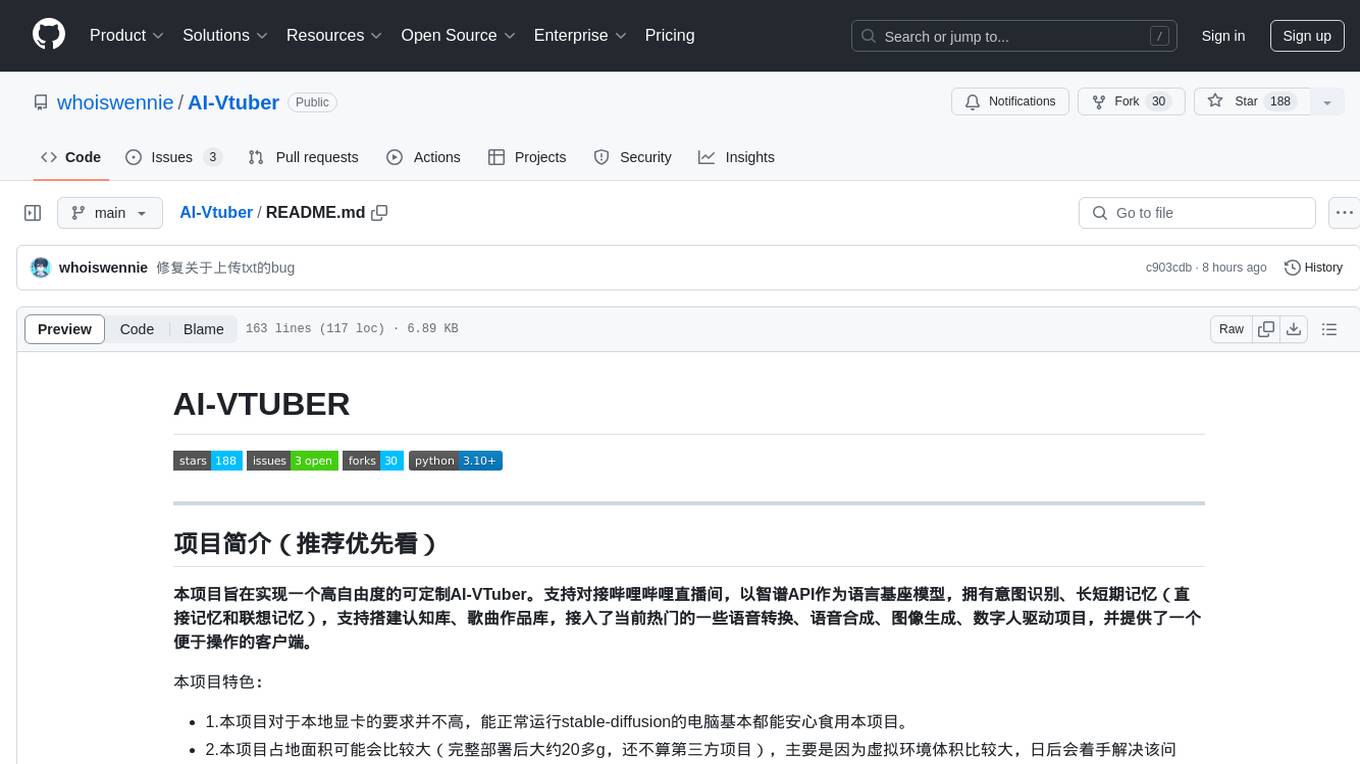

AI-Vtuber

AI-VTuber is a highly customizable AI VTuber project that integrates with Bilibili live streaming, uses Zhifu API as the language base model, and includes intent recognition, short-term and long-term memory, cognitive library building, song library creation, and integration with various voice conversion, voice synthesis, image generation, and digital human projects. It provides a user-friendly client for operations. The project supports virtual VTuber template construction, multi-person device template management, real-time switching of virtual VTuber templates, and offers various practical tools such as video/audio crawlers, voice recognition, voice separation, voice synthesis, voice conversion, AI drawing, and image background removal.

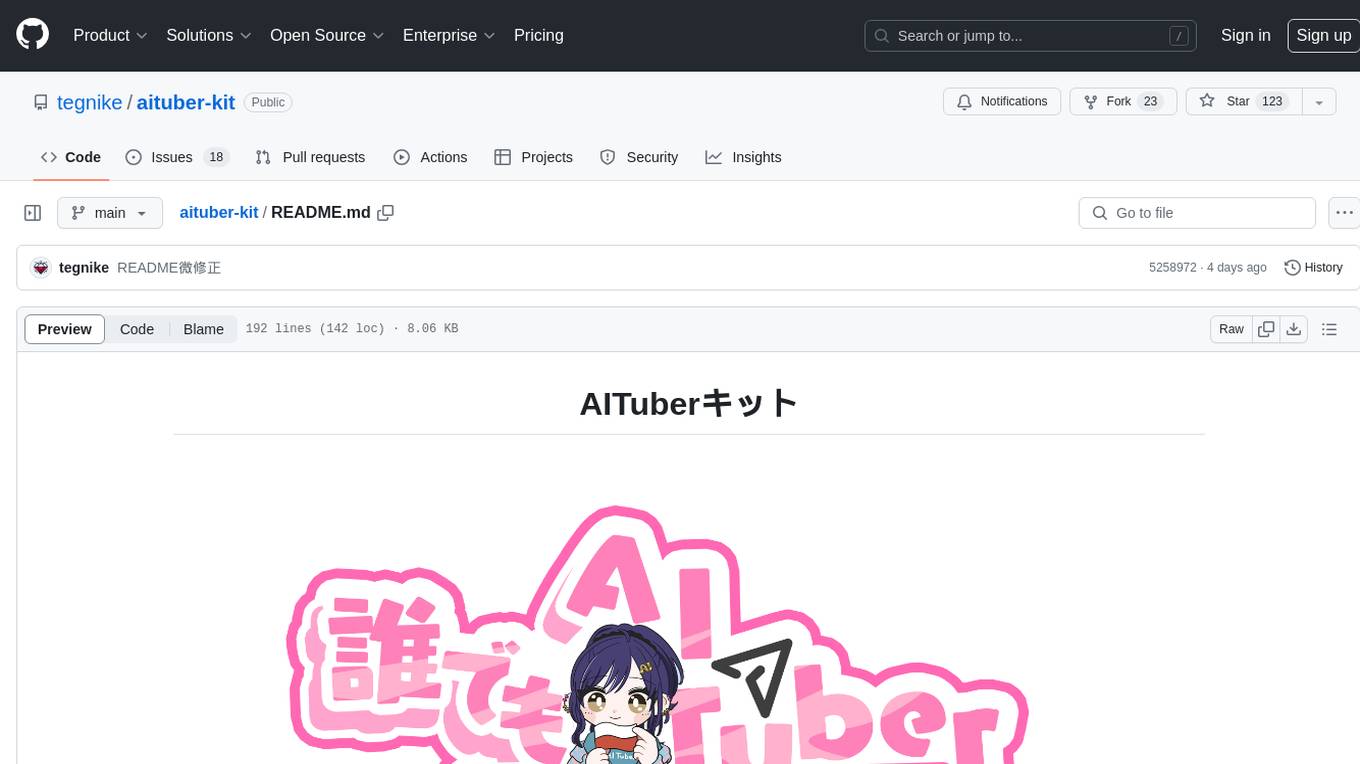

aituber-kit

AITuber-Kit is a tool that enables users to interact with AI characters, conduct AITuber live streams, and engage in external integration modes. Users can easily converse with AI characters using various LLM APIs, stream on YouTube with AI character reactions, and send messages to server apps via WebSocket. The tool provides settings for API keys, character configurations, voice synthesis engines, and more. It supports multiple languages and allows customization of VRM models and background images. AITuber-Kit follows the MIT license and offers guidelines for adding new languages to the project.

chatless

Chatless is a modern AI chat desktop application built on Tauri and Next.js. It supports multiple AI providers, can connect to local Ollama models, supports document parsing and knowledge base functions. All data is stored locally to protect user privacy. The application is lightweight, simple, starts quickly, and consumes minimal resources.

LLM-Dojo

LLM-Dojo is an open-source platform for learning and practicing large models, providing a framework for building custom large model training processes, implementing various tricks and principles in the llm_tricks module, and mainstream model chat templates. The project includes an open-source large model training framework, detailed explanations and usage of the latest LLM tricks, and a collection of mainstream model chat templates. The term 'Dojo' symbolizes a place dedicated to learning and practice, borrowing its meaning from martial arts training.

KouriChat

KouriChat is a project that seamlessly integrates virtual and real interactions, providing eternal gentle bonds. It offers features like WeChat integration, immersive role-playing, intelligent conversation segmentation, emotion-based emojis, image generation, image recognition, voice messages, and more. The project is focused on technical research and learning exchanges, with a strong emphasis on ethical and legal guidelines. Users are required to take full responsibility for their actions, especially minors who should use the tool under supervision. The project architecture includes avatar configurations, data storage, handlers, AI service interfaces, a web UI, and utility libraries.

TypeTale

TypeTale is an AIGC creation software designed specifically for content creators, primarily used for novel promotion. It offers a wide range of AI capabilities such as image, video, and audio generation, as well as text processing and story extraction. The tool also provides workflow customization, AI assistant support, and a vast library of creative materials. With a user-friendly interface and system requirements compatible with Windows operating systems, TypeTale aims to streamline the content creation process for writers and creators.

BigBanana-AI-Director

BigBanana AI Director is an industrial AI motion comic and video workbench platform that provides a one-stop solution for creating short dramas and comics. It utilizes a 'Script-to-Asset-to-Keyframe' workflow with advanced AI models to automate the process from script to final production, ensuring precise control over character consistency, scene continuity, and camera movements. The tool is designed to streamline the production process for creators, enabling efficient production from idea to finished product.

For similar tasks

Awesome-LLM4Graph-Papers

A collection of papers and resources about Large Language Models (LLMs) for graph learning. It focuses on integrating LLMs with graph learning techniques to improve performance on graph learning tasks, and categorizes approaches into four primary paradigms and nine secondary-level categories. Valuable for research or practice in self-supervised learning for recommendation systems.

Graph-CoT

This repository contains the source code and datasets for Graph Chain-of-Thought: Augmenting Large Language Models by Reasoning on Graphs accepted to ACL 2024. It proposes a framework called Graph Chain-of-thought (Graph-CoT) to enable Language Models to traverse graphs step-by-step for reasoning, interaction, and execution. The motivation is to alleviate hallucination issues in Language Models by augmenting them with structured knowledge sources represented as graphs.

Awesome-Graph-LLM

Awesome-Graph-LLM is a curated collection of research papers exploring the intersection of graph-based techniques with Large Language Models (LLMs). The repository aims to bridge the gap between LLMs and graph structures prevalent in real-world applications by providing a comprehensive list of papers covering various aspects of graph reasoning, node classification, graph classification/regression, knowledge graphs, multimodal models, applications, and tools. It serves as a valuable resource for researchers and practitioners interested in leveraging LLMs for graph-related tasks.


Interview-for-Algorithm-Engineer

This repository provides a collection of interview questions and answers for algorithm engineers. The questions are organized by topic, and each question includes a detailed explanation of the answer. This repository is a valuable resource for anyone preparing for an algorithm engineering interview.

lmql

LMQL is a programming language designed for large language models (LLMs) that offers a unique way of integrating traditional programming with LLM interaction. It allows users to write programs that combine algorithmic logic with LLM calls, enabling model reasoning capabilities within the context of the program. LMQL provides features such as Python syntax integration, rich control-flow options, advanced decoding techniques, powerful constraints via logit masking, runtime optimization, sync and async API support, multi-model compatibility, and extensive applications like JSON decoding and interactive chat interfaces. The tool also offers library integration, flexible tooling, and output streaming options for easy model output handling.

learnopencv

LearnOpenCV is a repository containing code for Computer Vision, Deep learning, and AI research articles shared on the blog LearnOpenCV.com. It serves as a resource for individuals looking to enhance their expertise in AI through various courses offered by OpenCV. The repository includes a wide range of topics such as image inpainting, instance segmentation, robotics, deep learning models, and more, providing practical implementations and code examples for readers to explore and learn from.

Java-AI-Book-Code

The Java-AI-Book-Code repository contains code examples for the 2020 edition of 'Practical Artificial Intelligence With Java'. It is a comprehensive update of the previous 2013 edition, featuring new content on deep learning, knowledge graphs, anomaly detection, linked data, genetic algorithms, search algorithms, and more. The repository serves as a valuable resource for Java developers interested in AI applications and provides practical implementations of various AI techniques and algorithms.

For similar jobs

Perplexica

Perplexica is an open-source AI-powered search engine that utilizes advanced machine learning algorithms to provide clear answers with sources cited. It offers various modes like Copilot Mode, Normal Mode, and Focus Modes for specific types of questions. Perplexica ensures up-to-date information by using SearxNG metasearch engine. It also features image and video search capabilities and upcoming features include finalizing Copilot Mode and adding Discover and History Saving features.

KULLM

KULLM (구름) is a Korean Large Language Model developed by Korea University NLP & AI Lab and HIAI Research Institute. It is based on the upstage/SOLAR-10.7B-v1.0 model and has been fine-tuned for instruction. The model has been trained on 8×A100 GPUs and is capable of generating responses in Korean language. KULLM exhibits hallucination and repetition phenomena due to its decoding strategy. Users should be cautious as the model may produce inaccurate or harmful results. Performance may vary in benchmarks without a fixed system prompt.

MMMU

MMMU is a benchmark designed to evaluate multimodal models on college-level subject knowledge tasks, covering 30 subjects and 183 subfields with 11.5K questions. It focuses on advanced perception and reasoning with domain-specific knowledge, challenging models to perform tasks akin to those faced by experts. The evaluation of various models highlights substantial challenges, with room for improvement to stimulate the community towards expert artificial general intelligence (AGI).

1filellm

1filellm is a command-line data aggregation tool designed for LLM ingestion. It aggregates and preprocesses data from various sources into a single text file, facilitating the creation of information-dense prompts for large language models. The tool supports automatic source type detection, handling of multiple file formats, web crawling functionality, integration with Sci-Hub for research paper downloads, text preprocessing, and token count reporting. Users can input local files, directories, GitHub repositories, pull requests, issues, ArXiv papers, YouTube transcripts, web pages, Sci-Hub papers via DOI or PMID. The tool provides uncompressed and compressed text outputs, with the uncompressed text automatically copied to the clipboard for easy pasting into LLMs.

gpt-researcher

GPT Researcher is an autonomous agent designed for comprehensive online research on a variety of tasks. It can produce detailed, factual, and unbiased research reports with customization options. The tool addresses issues of speed, determinism, and reliability by leveraging parallelized agent work. The main idea involves running 'planner' and 'execution' agents to generate research questions, seek related information, and create research reports. GPT Researcher optimizes costs and completes tasks in around 3 minutes. Features include generating long research reports, aggregating web sources, an easy-to-use web interface, scraping web sources, and exporting reports to various formats.

ChatTTS

ChatTTS is a generative speech model optimized for dialogue scenarios, providing natural and expressive speech synthesis with fine-grained control over prosodic features. It supports multiple speakers and surpasses most open-source TTS models in terms of prosody. The model is trained with 100,000+ hours of Chinese and English audio data, and the open-source version on HuggingFace is a 40,000-hour pre-trained model without SFT. The roadmap includes open-sourcing additional features like VQ encoder, multi-emotion control, and streaming audio generation. The tool is intended for academic and research use only, with precautions taken to limit potential misuse.

HebTTS

HebTTS is a language modeling approach to diacritic-free Hebrew text-to-speech (TTS) system. It addresses the challenge of accurately mapping text to speech in Hebrew by proposing a language model that operates on discrete speech representations and is conditioned on a word-piece tokenizer. The system is optimized using weakly supervised recordings and outperforms diacritic-based Hebrew TTS systems in terms of content preservation and naturalness of generated speech.

do-research-in-AI

This repository is a collection of research lectures and experience sharing posts from frontline researchers in the field of AI. It aims to help individuals upgrade their research skills and knowledge through insightful talks and experiences shared by experts. The content covers various topics such as evaluating research papers, choosing research directions, research methodologies, and tips for writing high-quality scientific papers. The repository also includes discussions on academic career paths, research ethics, and the emotional aspects of research work. Overall, it serves as a valuable resource for individuals interested in advancing their research capabilities in the field of AI.