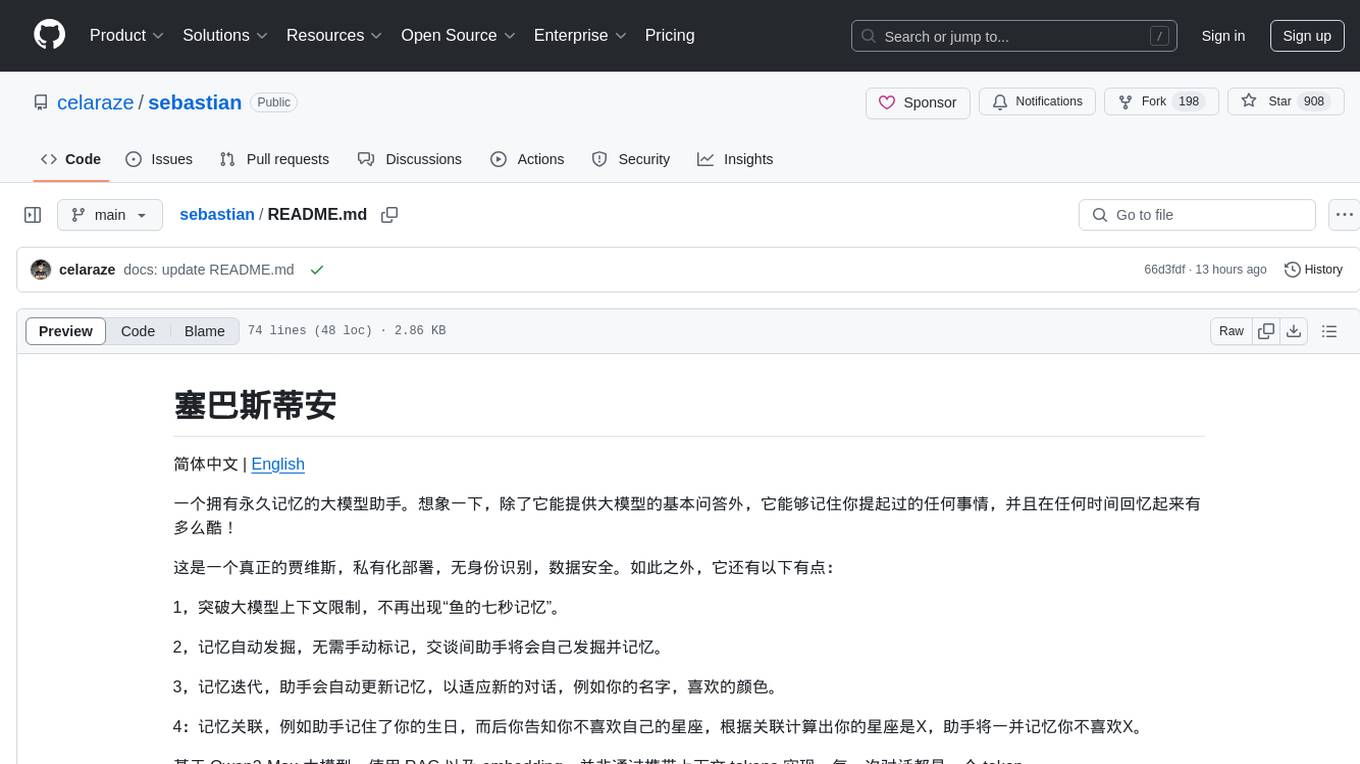

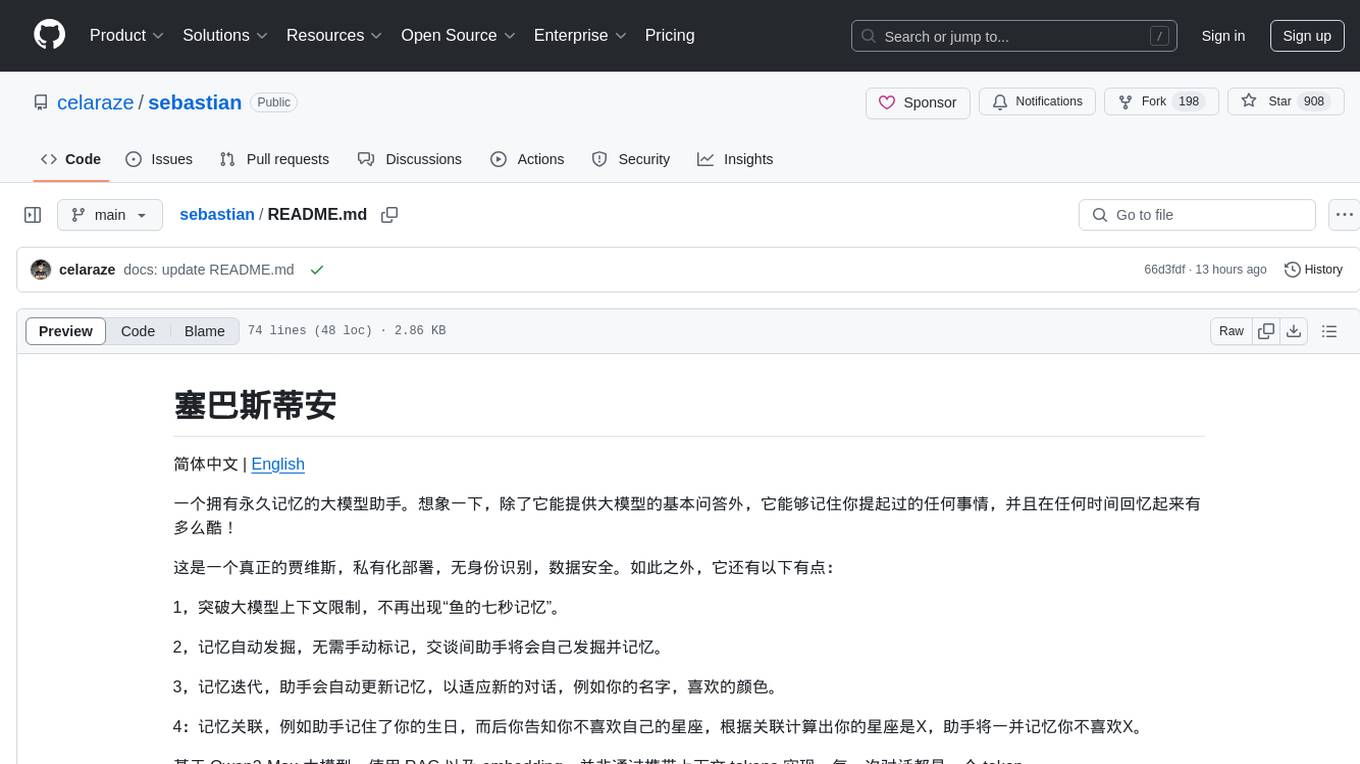

sebastian

Sebastian is an LLM assistant with permanent memory.

Stars: 908

Sebastian is a large model assistant with permanent memory. Besides the basic question answering of a large model, it remembers anything you mention and can recall it at any time. It works like a real Jarvis: privately deployed, with no identity recognition, and with your data kept secure. It breaks through the context limits of large models and offers automatic memory discovery, iterative memory updates, and memory associations. It is based on the Qwen2-Max model and uses RAG and embeddings rather than carrying context tokens; each conversation is a token. Users can interact with Sebastian to remember and recall information seamlessly over time.

README:


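A large model assistant with permanent memory. Imagine how cool it would be if, beyond the basic question answering that a large model provides, it could remember anything you have ever mentioned and recall it at any time!

This is a real Jarvis: privately deployed, with no identity recognition, and with your data kept secure. Beyond that, it has the following advantages: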

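1. Breaks through the context limit of large models, so there is no more "seven-second goldfish memory".

2. Automatic memory discovery: no manual tagging is needed. The assistant finds and memorizes things on its own as you talk.

3. Memory iteration: the assistant automatically updates its memories to fit new conversations, for example your name or your favorite color.

4. Memory association: for example, the assistant has remembered your birthday, and you later tell it that you dislike your zodiac sign. From the association it works out that your sign is X, and it also remembers that you dislike X.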
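The memory association in item 4 can be illustrated with a small sketch. It is not Sebastian's implementation: `zodiac_sign` and `link_sign_dislike` are hypothetical helpers, the memory is a plain dictionary, and the sign boundaries follow one common Western convention (sources differ by a day on some boundaries).

```python
from datetime import date

# (month, day) on which each sign starts, in calendar order.
# Assumption: one common convention; some sources shift a boundary by a day.
_SIGN_STARTS = [
    ((1, 20), "Aquarius"),
    ((2, 19), "Pisces"),
    ((3, 21), "Aries"),
    ((4, 20), "Taurus"),
    ((5, 21), "Gemini"),
    ((6, 21), "Cancer"),
    ((7, 23), "Leo"),
    ((8, 23), "Virgo"),
    ((9, 23), "Libra"),
    ((10, 23), "Scorpio"),
    ((11, 22), "Sagittarius"),
    ((12, 22), "Capricorn"),
]


def zodiac_sign(birthday: date) -> str:
    """Derive the zodiac sign from a remembered birthday."""
    key = (birthday.month, birthday.day)
    sign = "Capricorn"  # dates before January 20 still belong to Capricorn
    for start, name in _SIGN_STARTS:
        if key >= start:
            sign = name  # keep the latest sign whose start date has passed
    return sign


def link_sign_dislike(memories: dict) -> dict:
    """Return a copy of the memories with the derived sign stored as disliked."""
    updated = dict(memories)
    sign = zodiac_sign(memories["birthday"])
    updated["zodiac_sign"] = sign
    updated["dislikes"] = list(memories.get("dislikes", [])) + [sign]
    return updated


memories = {"birthday": date(1990, 8, 15)}
memories = link_sign_dislike(memories)  # user said: "I dislike my zodiac sign"
```

After the call, the memory holds both the derived fact (the sign) and the new preference attached to it, which is the kind of linkage the list item describes.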

Built on the Qwen2-Max large model, using RAG and embeddings. Memory is not implemented by carrying context tokens; each conversation is a token.
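As a rough illustration of retrieval-based memory (as opposed to resending the whole chat history), here is a minimal sketch. It is not Sebastian's code: `MemoryStore`, `embed`, and `build_prompt` are hypothetical names, and the bag-of-words `embed` function is a toy stand-in for a real embedding model.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    """Toy bag-of-words vector; a real system would call an embedding model."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


class MemoryStore:
    """Keeps remembered statements with their vectors (in memory only)."""

    def __init__(self) -> None:
        self._items: List[Tuple[str, Counter]] = []

    def remember(self, text: str) -> None:
        self._items.append((text, embed(text)))

    def recall(self, query: str, k: int = 2) -> List[str]:
        """Return up to k stored statements most similar to the query."""
        q = embed(query)
        scored = [(cosine(q, vec), text) for text, vec in self._items]
        scored = [s for s in scored if s[0] > 0]
        scored.sort(key=lambda s: s[0], reverse=True)
        return [text for _, text in scored[:k]]


def build_prompt(store: MemoryStore, user_message: str) -> str:
    """Build a single-turn prompt from retrieved memories, not chat history."""
    lines = ["Relevant memories:"]
    lines += [f"- {m}" for m in store.recall(user_message)]
    lines += ["", f"User: {user_message}"]
    return "\n".join(lines)


store = MemoryStore()
store.remember("I bought a black mouse in 2022 with a three-year return service")
store.remember("My name is Zhang San")
prompt = build_prompt(store, "my mouse has a button fault")
```

Because only the few retrieved memories are placed in the prompt, the request size stays roughly constant no matter how long the user has been talking to the assistant. A persistent vector store would be needed for memories to survive restarts.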

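Scenario 1:

# The event takes place on a day in 2022

User: I just bought a black mouse today. It comes with a three-year return and exchange service.

A: I've remembered that.

# The event takes place on a day in 2025

User: The buttons on my mouse are malfunctioning. Do you know how to deal with it?

A: You bought a black mouse in 2022, and its service period is about to expire. You can contact after-sales service.

User: I forgot. Which mouse?

A: A black mouse, purchased on month X, day X, 2022.

Scenario 2: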

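# The event takes place on some day.

User: I am Zhang San.

A: Hello, Zhang San.

# The event takes place on a day several years later. In between there have been countless conversations with the assistant, and the assistant application has been closed and reopened many times.

User: Who am I?

A: You are Zhang San.

User: I changed my name. I'm now called Li Si.

A: OK, your name is Li Si.

We recommend using the Alibaba Cloud Bailian (阿里云百炼) large model platform to obtain large model capabilities. It gives you access to the latest popular large models as well as better performance. Get an api_key from the following link:

https://help.aliyun.com/zh/model-studio/developer-reference/get-api-key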

Go to the project directory and edit the parameters in `docker-compose.yml`, especially `api_key`.

Run `docker-compose up -d` to start the application.

Then open http://ip:8000/chat/text for text chat, or http://ip:8000/chat/audio for voice chat.
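Once the containers are up, a quick way to confirm that the two pages are being served is a small reachability check. This is only a sketch: `chat_urls` and `is_reachable` are hypothetical helpers, and the only details taken from the text above are port 8000 and the paths `/chat/text` and `/chat/audio`. Replace the host with the address of your own deployment.

```python
import urllib.error
import urllib.request


def chat_urls(host: str, port: int = 8000) -> dict:
    """Build the text and voice chat URLs for a given host."""
    base = f"http://{host}:{port}"
    return {"text": f"{base}/chat/text", "audio": f"{base}/chat/audio"}


def is_reachable(url: str, timeout: float = 3.0) -> bool:
    """Return True if the server answers at all, even with an error status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        return True  # the server responded, just not with a 2xx status
    except (urllib.error.URLError, OSError):
        return False  # connection refused, timeout, DNS failure, ...


if __name__ == "__main__":
    # Assumption: the app runs on the local machine.
    for name, url in chat_urls("127.0.0.1").items():
        print(name, url, "reachable" if is_reachable(url) else "not reachable")
```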

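If you have any suggestions or find any bugs, feel free to open an issue or submit a pull request.

Afdian.net is a platform for supporting creators. If you like this project, you can support me on Afdian.net.

Thanks to JetBrains for providing excellent IDEs.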


Alternative AI tools for sebastian

Similar Open Source Tools


Discord-AI-Chatbot

Discord AI Chatbot is a versatile tool that seamlessly integrates into your Discord server, offering a wide range of capabilities to enhance your communication and engagement. With its advanced language model, the bot excels at imaginative generation, providing endless possibilities for creative expression. Additionally, it offers secure credential management, ensuring the privacy of your data. The bot's hybrid command system combines the best of slash and normal commands, providing flexibility and ease of use. It also features mention recognition, ensuring prompt responses whenever you mention it or use its name. The bot's message handling capabilities prevent confusion by recognizing when you're replying to others. You can customize the bot's behavior by selecting from a range of pre-existing personalities or creating your own. The bot's web access feature unlocks a new level of convenience, allowing you to interact with it from anywhere. With its open-source nature, you have the freedom to modify and adapt the bot to your specific needs.

MindSearch

MindSearch is an open-source AI Search Engine Framework that mimics human minds to provide deep AI search capabilities. It allows users to deploy their own search engine using either closed-source or open-source language models. MindSearch offers features such as answering any question using web knowledge, in-depth knowledge discovery, detailed solution paths, optimized UI experience, and dynamic graph construction process.

mods

AI for the command line, built for pipelines. LLM-based AI is really good at interpreting the output of commands and returning the results in CLI-friendly text formats like Markdown. Mods is a simple tool that makes it super easy to use AI on the command line and in your pipelines. Mods works with OpenAI, Groq, Azure OpenAI, and LocalAI. To get started, install Mods and check out some of the examples below. Since Mods has built-in Markdown formatting, you may also want to grab Glow to give the output some _pizzazz_.

ChatGPT-Telegram-Bot

ChatGPT Telegram Bot is a Telegram bot that provides a smooth AI experience. It supports both Azure OpenAI and native OpenAI, and streams AI responses in real time for a faster and smoother experience. The bot has 15 preset bot identities that can be switched quickly, and supports custom bot identities to meet personalized needs. It also supports clearing the chat contents with a single click and restarting the conversation at any time. Native Telegram bot buttons are supported, making it easy and intuitive to implement required functions. User levels are also supported, with different levels getting different single-session token limits, context counts, and session frequencies. The UI supports English and Chinese, and the bot is containerized for easy deployment.

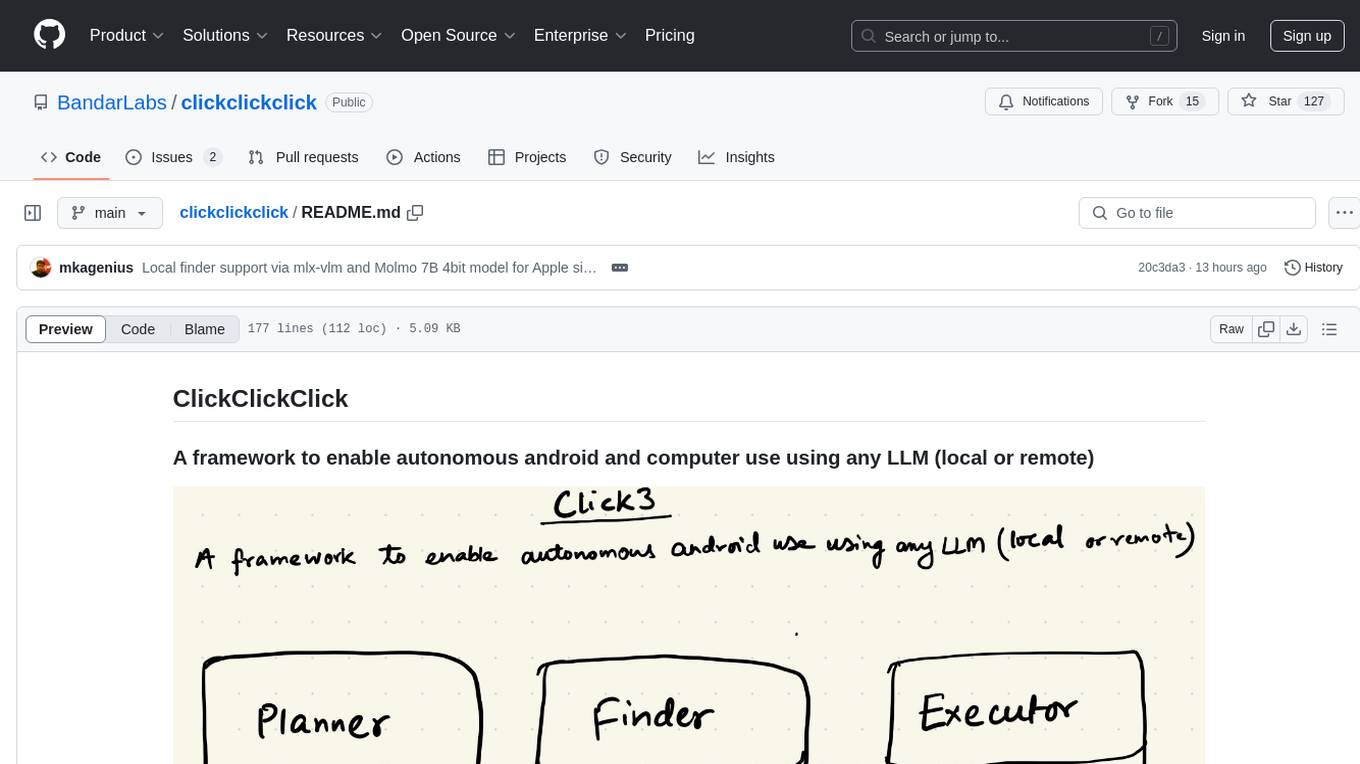

clickclickclick

ClickClickClick is a framework designed to enable autonomous Android and computer use using various LLMs, both locally and remotely. It supports tasks such as drafting emails, opening browsers, and starting games, with current support for local models via Ollama, Gemini, and GPT-4o. The tool is highly experimental and evolving, with the best results achieved using specific model combinations. Prerequisites include `adb` installation and USB debugging enabled on the Android phone. The tool can be installed by cloning the repository, setting up a virtual environment, and installing dependencies. It can be used as a CLI tool or script, allowing users to configure planner and finder models for different tasks. Additionally, it can be used as an API to execute tasks based on provided prompts, platform, and models.

cria

Cria is a Python library designed for running Large Language Models with minimal configuration. It provides an easy and concise way to interact with LLMs, offering advanced features such as custom models, streams, message history management, and running multiple models in parallel. Cria simplifies the process of using LLMs by providing a straightforward API that requires only a few lines of code to get started. It also handles model installation automatically, making it efficient and user-friendly for various natural language processing tasks.

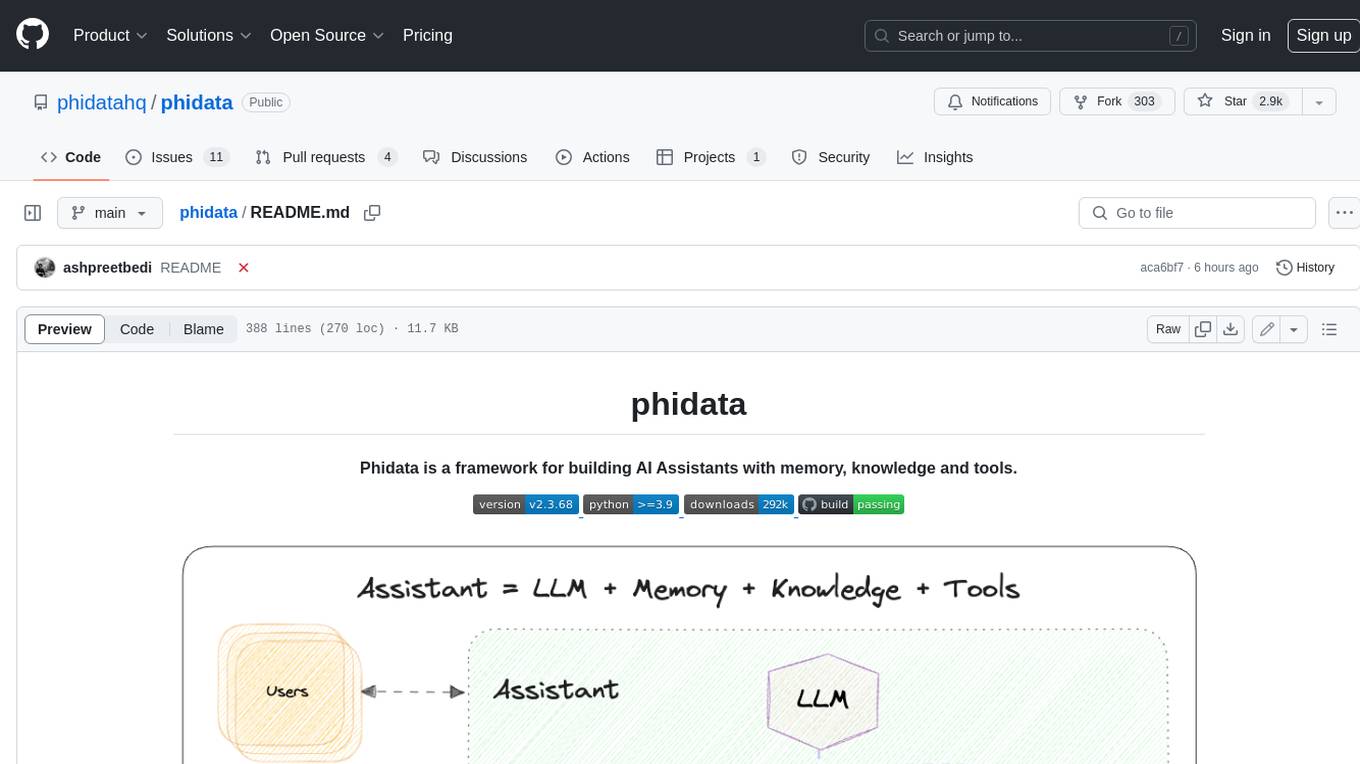

phidata

Phidata is a framework for building AI Assistants with memory, knowledge, and tools. It enables LLMs to have long-term conversations by storing chat history in a database, provides them with business context by storing information in a vector database, and enables them to take actions like pulling data from an API, sending emails, or querying a database. Memory and knowledge make LLMs smarter, while tools make them autonomous.

tiledesk-dashboard

Tiledesk is an open-source live chat platform with integrated chatbots written in Node.js and Express. It is designed to be a multi-channel platform for web, Android, and iOS, and it can be used to increase sales or provide post-sales customer service. Tiledesk's chatbot technology allows for automation of conversations, and it also provides APIs and webhooks for connecting external applications. Additionally, it offers a marketplace for apps and features such as CRM, ticketing, and data export.

chatlab

ChatLab is a Python package that simplifies experimenting with OpenAI's chat models. It provides an interactive interface for chatting with the models and registering custom functions. Users can easily create chat experiments, visualize color palettes, work with function registry, create knowledge graphs, and perform direct parallel function calling. The tool enables users to interact with chat models and customize functionalities for various tasks.

june

june-va is a local voice chatbot that combines Ollama for language model capabilities, Hugging Face Transformers for speech recognition, and the Coqui TTS Toolkit for text-to-speech synthesis. It provides a flexible, privacy-focused solution for voice-assisted interactions on your local machine, ensuring that no data is sent to external servers. The tool supports various interaction modes including text input/output, voice input/text output, text input/audio output, and voice input/audio output. Users can customize the tool's behavior with a JSON configuration file and utilize voice conversion features for voice cloning. The application can be further customized using a configuration file with attributes for language model, speech-to-text model, and text-to-speech model configurations.

dive

Dive is an AI toolkit for Go that enables the creation of specialized teams of AI agents and seamless integration with leading LLMs. It offers a CLI and APIs for easy integration. Features include specialized agents, hierarchical agent systems, declarative configuration, a unified interface for multiple LLM providers, extended reasoning, Model Context Protocol support, advanced model settings, a tool system with annotations and custom tool creation, streaming, thread management, a confirmation system, deep research, semantic diff analysis, and support for various verified models. The toolkit is designed for developers building AI-powered applications with rich agent capabilities and tool integrations.

ControlLLM

ControlLLM is a framework that empowers large language models to leverage multi-modal tools for solving complex real-world tasks. It addresses challenges like ambiguous user prompts, inaccurate tool selection, and inefficient tool scheduling by utilizing a task decomposer, a Thoughts-on-Graph paradigm, and an execution engine with a rich toolbox. The framework excels in tasks involving image, audio, and video processing, showcasing superior accuracy, efficiency, and versatility compared to existing methods.

ash_ai

Ash AI is a tool that provides a Model Context Protocol (MCP) server for exposing tool definitions to an MCP client. It allows for the installation of dev and production MCP servers, and supports features like OAuth2 flow with AshAuthentication, tool data access, tool execution callbacks, prompt-backed actions, and vectorization strategies. Users can also generate a chat feature for their Ash & Phoenix application using `ash_oban` and `ash_postgres`, and specify LLM API keys for OpenAI. The tool is designed to help developers experiment with tools and actions, monitor tool execution, and expose actions as tool calls.

agent-q

Agentq is a tool that utilizes various agentic architectures to complete tasks on the web reliably. It includes a planner-navigator multi-agent architecture, a solo planner-actor agent, an actor-critic multi-agent architecture, and an actor-critic architecture with reinforcement learning and DPO finetuning. The repository also contains an open-source implementation of the research paper 'Agent Q'. Users can set up the tool by installing dependencies, starting Chrome in dev mode, and setting up necessary environment variables. The tool can be run to perform various tasks related to autonomous AI agents.

basehub

JavaScript / TypeScript SDK for BaseHub, the first AI-native content hub.

**Features:**

* ✨ Infers types from your BaseHub repository... _meaning IDE autocompletion works great._
* 🏎️ No dependency on graphql... _meaning your bundle is more lightweight._
* 🌐 Works everywhere `fetch` is supported... _meaning you can use it anywhere._

For similar tasks


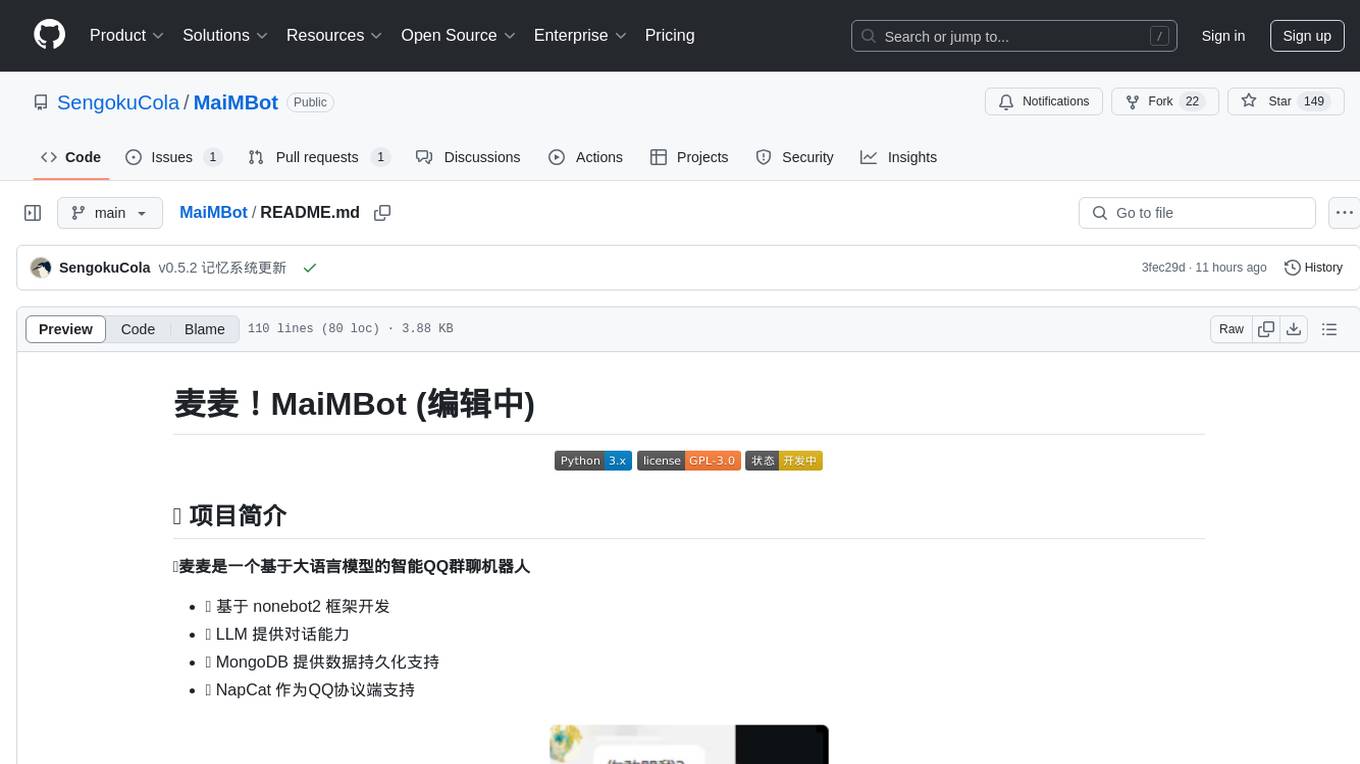

MaiMBot

MaiMBot is an intelligent QQ group chat bot based on a large language model. It is developed using the nonebot2 framework, utilizes LLM for conversation abilities, MongoDB for data persistence, and NapCat for QQ protocol support. The bot features keyword-triggered proactive responses, dynamic prompt construction, support for images and message forwarding, typo generation, multiple replies, emotion-based emoji responses, daily schedule generation, user relationship management, knowledge base, and group impressions. Work-in-progress features include personality, group atmosphere, image handling, humor, meme functions, and Minecraft interactions. The tool is in active development with plans for GIF compatibility, mini-program link parsing, bug fixes, documentation improvements, and logic enhancements for emoji sending.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

* Set LLM usage limits for users on different pricing tiers
* Track LLM usage on a per user and per organization basis
* Block or redact requests containing PIIs
* Improve LLM reliability with failovers, retries and caching
* Distribute API keys with rate limits and cost limits for internal development/production use cases
* Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.