OpenSandbox

OpenSandbox is a general-purpose sandbox platform for AI applications, offering multi-language SDKs, unified sandbox APIs, and Docker/Kubernetes runtimes for scenarios like Coding Agents, GUI Agents, Agent Evaluation, AI Code Execution, and RL Training.

Stars: 1033

OpenSandbox provides multi-language SDKs, a sandbox protocol, a sandbox runtime, built-in sandbox environments, and network policy controls. Basic usage covers installing and configuring the sandbox server, starting it, creating a code interpreter, and executing commands. The repository also includes examples for coding agent integrations, browser and desktop environments, and ML training tasks.

README:


OpenSandbox is a general-purpose sandbox platform for AI applications, offering multi-language SDKs, unified sandbox APIs, and Docker/Kubernetes runtimes for scenarios like Coding Agents, GUI Agents, Agent Evaluation, AI Code Execution, and RL Training.

- Multi-language SDKs: Client SDKs for Python, Java/Kotlin, and JavaScript/TypeScript.

- Sandbox Protocol: Defines sandbox lifecycle management APIs and sandbox execution APIs so you can extend custom sandbox runtimes.

- Sandbox Runtime: Built-in lifecycle management with a Docker runtime and a high-performance Kubernetes runtime, enabling both local runs and large-scale distributed scheduling.

- Sandbox Environments: Built-in Command, Filesystem, and Code Interpreter implementations. Examples cover Coding Agents (e.g., Claude Code), browser automation (Chrome, Playwright), and desktop environments (VNC, VS Code).

- Network Policy: Unified Ingress Gateway with multiple routing strategies plus per-sandbox egress controls.
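To make the split between lifecycle APIs and execution APIs in the Sandbox Protocol bullet more concrete, here is a minimal sketch using only the Python standard library. It is not the OpenSandbox protocol or SDK: `SandboxRuntime`, `InMemoryRuntime`, and `run_once` are hypothetical names invented for illustration, and the toy runtime records commands instead of starting containers.

```python
import uuid
from dataclasses import dataclass, field
from typing import Protocol


class SandboxRuntime(Protocol):
    """Hypothetical runtime interface: two lifecycle calls plus one execution call."""

    def create(self, image: str) -> str: ...          # lifecycle: start a sandbox
    def run(self, sandbox_id: str, command: str) -> str: ...  # execution
    def kill(self, sandbox_id: str) -> None: ...      # lifecycle: stop a sandbox


@dataclass
class InMemoryRuntime:
    """Toy runtime (assumption): stores commands in a dict, runs nothing."""

    sandboxes: dict[str, list[str]] = field(default_factory=dict)

    def create(self, image: str) -> str:
        sandbox_id = f"{image}-{uuid.uuid4().hex[:8]}"
        self.sandboxes[sandbox_id] = []
        return sandbox_id

    def run(self, sandbox_id: str, command: str) -> str:
        if sandbox_id not in self.sandboxes:
            raise KeyError(f"unknown sandbox: {sandbox_id}")
        self.sandboxes[sandbox_id].append(command)
        return f"ran: {command}"

    def kill(self, sandbox_id: str) -> None:
        self.sandboxes.pop(sandbox_id, None)


def run_once(runtime: SandboxRuntime, image: str, command: str) -> str:
    """Create a sandbox, run one command, and always clean up afterwards."""
    sandbox_id = runtime.create(image)
    try:
        return runtime.run(sandbox_id, command)
    finally:
        runtime.kill(sandbox_id)
```

Because `run_once` depends only on the interface, a Docker-backed or Kubernetes-backed class with the same three methods could be swapped in without changing the caller, which is the general idea behind a runtime-agnostic protocol.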

Requirements:

- Docker (required for local execution)

- Python 3.10+ (recommended for examples and local runtime)

Install and configure the sandbox server:

    uv pip install opensandbox-server
    opensandbox-server init-config ~/.sandbox.toml --example docker

If you prefer working from source, you can still clone the repo for development, but server startup no longer requires it.

    git clone https://github.com/alibaba/OpenSandbox.git
    cd OpenSandbox/server
    uv sync
    cp example.config.toml ~/.sandbox.toml  # Copy configuration file
    uv run python -m src.main  # Start the service

Start the sandbox server:

    opensandbox-server
    # opensandbox-server -h  # Show help

Install the Code Interpreter SDK:

    uv pip install opensandbox-code-interpreter

Create a sandbox and execute commands:

    import asyncio
    from datetime import timedelta

    from code_interpreter import CodeInterpreter, SupportedLanguage
    from opensandbox import Sandbox
    from opensandbox.models import WriteEntry


    async def main() -> None:
        # 1. Create a sandbox
        sandbox = await Sandbox.create(
            "opensandbox/code-interpreter:v1.0.1",
            entrypoint=["/opt/opensandbox/code-interpreter.sh"],
            env={"PYTHON_VERSION": "3.11"},
            timeout=timedelta(minutes=10),
        )

        async with sandbox:
            # 2. Execute a shell command
            execution = await sandbox.commands.run("echo 'Hello OpenSandbox!'")
            print(execution.logs.stdout[0].text)

            # 3. Write a file
            await sandbox.files.write_files([
                WriteEntry(path="/tmp/hello.txt", data="Hello World", mode=644)
            ])

            # 4. Read a file
            content = await sandbox.files.read_file("/tmp/hello.txt")
            print(f"Content: {content}")  # Content: Hello World

            # 5. Create a code interpreter
            interpreter = await CodeInterpreter.create(sandbox)

            # 6. Execute Python code (single-run, pass language directly)
            result = await interpreter.codes.run(
                """
    import sys
    print(sys.version)
    result = 2 + 2
    result
    """,
                language=SupportedLanguage.PYTHON,
            )
            print(result.result[0].text)  # 4
            print(result.logs.stdout[0].text)  # 3.11.14

        # 7. Cleanup the sandbox
        await sandbox.kill()


    if __name__ == "__main__":
        asyncio.run(main())

OpenSandbox provides rich examples demonstrating sandbox usage in different scenarios. All example code is located in the examples/ directory.

- code-interpreter - End-to-end Code Interpreter SDK workflow in a sandbox.

- aio-sandbox - All-in-One sandbox setup using the OpenSandbox SDK.

- agent-sandbox - Run OpenSandbox on Kubernetes via kubernetes-sigs/agent-sandbox.

- claude-code - Run Claude Code inside OpenSandbox.

- gemini-cli - Run Google Gemini CLI inside OpenSandbox.

- codex-cli - Run OpenAI Codex CLI inside OpenSandbox.

- iflow-cli - Run iFlow CLI inside OpenSandbox.

- langgraph - LangGraph state-machine workflow that creates/runs a sandbox job with fallback retry.

- google-adk - Google ADK agent using OpenSandbox tools to write/read files and run commands.

- chrome - Headless Chromium with VNC and DevTools access for automation/debugging.

- playwright - Playwright + Chromium headless scraping and testing example.

- desktop - Full desktop environment in a sandbox with VNC access.

- vscode - code-server (VS Code Web) running inside a sandbox for remote dev.

- rl-training - DQN CartPole training in a sandbox with checkpoints and summary output.

For more details, please refer to examples and the README files in each example directory.
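The langgraph entry above mentions running a sandbox job with fallback retry. The general pattern can be sketched with the standard library alone. This is not the code from that example and does not call the OpenSandbox SDK: `run_with_fallback`, `flaky_job`, and `safe_job` are hypothetical stand-ins for a real sandbox job and its simpler fallback.

```python
import asyncio
from typing import Awaitable, Callable


async def run_with_fallback(
    primary: Callable[[], Awaitable[str]],
    fallback: Callable[[], Awaitable[str]],
    retries: int = 2,
    delay_seconds: float = 0.0,
) -> str:
    """Try `primary` up to `retries` times, then run `fallback` once."""
    for attempt in range(1, retries + 1):
        try:
            return await primary()
        except Exception as exc:  # broad on purpose: any job failure triggers a retry
            print(f"attempt {attempt} failed: {exc}")
            await asyncio.sleep(delay_seconds)
    return await fallback()


async def flaky_job() -> str:
    # Stand-in (assumption) for a sandbox job that always fails.
    raise RuntimeError("sandbox job failed")


async def safe_job() -> str:
    # Stand-in (assumption) for a simpler job used as the fallback.
    return "fallback result"


result = asyncio.run(run_with_fallback(flaky_job, safe_job))
```

In a real workflow the two callables would wrap sandbox creation and command execution, and the retry count and delay would be tuned to how long a sandbox takes to start.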

| Directory | Description |
|---|---|
| sdks/ | Multi-language SDKs (Python, Java/Kotlin, TypeScript/JavaScript) |
| specs/ | OpenAPI specs and lifecycle specifications |
| server/ | Python FastAPI sandbox lifecycle server |
| kubernetes/ | Kubernetes deployment and examples |
| components/execd/ | Sandbox execution daemon (commands and file operations) |
| components/ingress/ | Sandbox traffic ingress proxy |
| components/egress/ | Sandbox network egress control |
| sandboxes/ | Runtime sandbox implementations |
| examples/ | Integration examples and use cases |
| oseps/ | OpenSandbox Enhancement Proposals |
| docs/ | Architecture and design documentation |
| tests/ | Cross-component E2E tests |
| scripts/ | Development and maintenance scripts |
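The components/egress/ entry covers per-sandbox network egress control. As a rough illustration of what a deny-by-default egress check might look like, here is a small standard-library sketch. It says nothing about how the actual egress component is implemented or configured: `EgressPolicy`, its `allowed_hosts` field, and the host patterns are all assumptions made for this example.

```python
from dataclasses import dataclass
from fnmatch import fnmatch


@dataclass(frozen=True)
class EgressPolicy:
    """Hypothetical per-sandbox policy: deny by default, allow listed host patterns."""

    allowed_hosts: tuple[str, ...] = ()

    def allows(self, host: str) -> bool:
        # Normalize case and a trailing dot before matching shell-style patterns.
        host = host.lower().rstrip(".")
        return any(fnmatch(host, pattern.lower()) for pattern in self.allowed_hosts)


# Example policy (assumed values): only package index hosts are reachable.
policy = EgressPolicy(allowed_hosts=("pypi.org", "*.pythonhosted.org"))
```

With this policy, `policy.allows("files.pythonhosted.org")` is true while any host not matching a listed pattern is rejected.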

For detailed architecture, see docs/architecture.md.

- docs/architecture.md – Overall architecture & design philosophy

- SDK
  - Sandbox base SDK (Java/Kotlin SDK, Python SDK, JavaScript/TypeScript SDK) - includes sandbox lifecycle, command execution, file operations
  - Code Interpreter SDK (Java/Kotlin SDK, Python SDK, JavaScript/TypeScript SDK) - code interpreter

- specs/README.md - OpenAPI definitions for sandbox lifecycle API and sandbox execution API

- server/README.md - Sandbox server startup and configuration; supports Docker and Kubernetes runtimes

This project is open source under the Apache 2.0 License.

- [ ] Go SDK - Go client SDK for sandbox lifecycle management, command execution, and file operations.

- [ ] Persistent storage - Mountable persistent storage for sandboxes (see Proposal 0003).

- [ ] Ingress multi-network strategies - Multi-Kubernetes provisioning and multi-network modes for the Ingress Gateway.

- [ ] Local lightweight sandbox - Lightweight sandbox for AI tools running directly on PCs.

- [ ] Kubernetes Helm - Helm charts to deploy all components.

- Issues: Submit bugs, feature requests, or design discussions through GitHub Issues


Alternative AI tools for OpenSandbox

Similar Open Source Tools


shadcn-ui-mcp-server

A Model Context Protocol (MCP) server that provides AI assistants with comprehensive access to shadcn/ui v4 components, blocks, demos, and metadata. It allows users to seamlessly retrieve React, Svelte, Vue, and React Native implementations for AI-powered development workflows. The server supports multi-frameworks, component source code, demos, blocks, metadata access, directory browsing, smart caching, SSE transport, and Docker containerization. Users can quickly start the server, integrate with Claude Desktop, deploy with SSE transport or Docker, and access documentation for installation, configuration, integration, usage, frameworks, troubleshooting, and API reference. The server supports React, Svelte, Vue, and React Native implementations, with options for UI library selection. It enables AI-powered development, multi-client deployments, production environments, component discovery, multi-framework learning, rapid prototyping, and code generation.

inspector

A developer tool for testing and debugging Model Context Protocol (MCP) servers. It allows users to test the compliance of their MCP servers with the latest MCP specs, supports various transports like STDIO, SSE, and Streamable HTTP, features an LLM Playground for testing server behavior against different models, provides comprehensive logging and error reporting for MCP server development, and offers a modern developer experience with multiple server connections and saved configurations. The tool is built using Next.js and integrates MCP capabilities, AI SDKs from OpenAI, Anthropic, and Ollama, and various technologies like Node.js, TypeScript, and Next.js.

cua

Cua is a tool for creating and running high-performance macOS and Linux virtual machines on Apple Silicon, with built-in support for AI agents. It provides libraries like Lume for running VMs with near-native performance, Computer for interacting with sandboxes, and Agent for running agentic workflows. Users can refer to the documentation for onboarding, explore demos showcasing AI-Gradio and GitHub issue fixing, and utilize accessory libraries like Core, PyLume, Computer Server, and SOM. Contributions are welcome, and the tool is open-sourced under the MIT License.

alphora

Alphora is a full-stack framework for building production AI agents, providing agent orchestration, prompt engineering, tool execution, memory management, streaming, and deployment with an async-first, OpenAI-compatible design. It offers features like agent derivation, reasoning-action loop, async streaming, visual debugger, OpenAI compatibility, multimodal support, tool system with zero-config tools and type safety, prompt engine with dynamic prompts, memory and storage management, sandbox for secure execution, deployment as API, and more. Alphora allows users to build sophisticated AI agents easily and efficiently.

conduit

Conduit is an open-source, cross-platform mobile application for Open-WebUI, providing a native mobile experience for interacting with your self-hosted AI infrastructure. It supports real-time chat, model selection, conversation management, markdown rendering, theme support, voice input, file uploads, multi-modal support, secure storage, folder management, and tools invocation. Conduit offers multiple authentication flows and follows a clean architecture pattern with Riverpod for state management, Dio for HTTP networking, WebSocket for real-time streaming, and Flutter Secure Storage for credential management.

ClaudeBar

ClaudeBar is a macOS menu bar application that monitors AI coding assistant usage quotas. It allows users to keep track of their usage of Claude, Codex, Gemini, GitHub Copilot, Antigravity, and Z.ai at a glance. The application offers multi-provider support, real-time quota tracking, multiple themes, visual status indicators, system notifications, auto-refresh feature, and keyboard shortcuts for quick access. Users can customize monitoring by toggling individual providers on/off and receive alerts when quota status changes. The tool requires macOS 15+, Swift 6.2+, and CLI tools installed for the providers to be monitored.

aegra

Aegra is a self-hosted AI agent backend platform that provides LangGraph power without vendor lock-in. Built with FastAPI + PostgreSQL, it offers complete control over agent orchestration for teams looking to escape vendor lock-in, meet data sovereignty requirements, enable custom deployments, and optimize costs. Aegra is Agent Protocol compliant and perfect for teams seeking a free, self-hosted alternative to LangGraph Platform with zero lock-in, full control, and compatibility with existing LangGraph Client SDK.

ai-real-estate-assistant

AI Real Estate Assistant is a modern platform that uses AI to assist real estate agencies in helping buyers and renters find their ideal properties. It features multiple AI model providers, intelligent query processing, advanced search and retrieval capabilities, and enhanced user experience. The tool is built with a FastAPI backend and Next.js frontend, offering semantic search, hybrid agent routing, and real-time analytics.

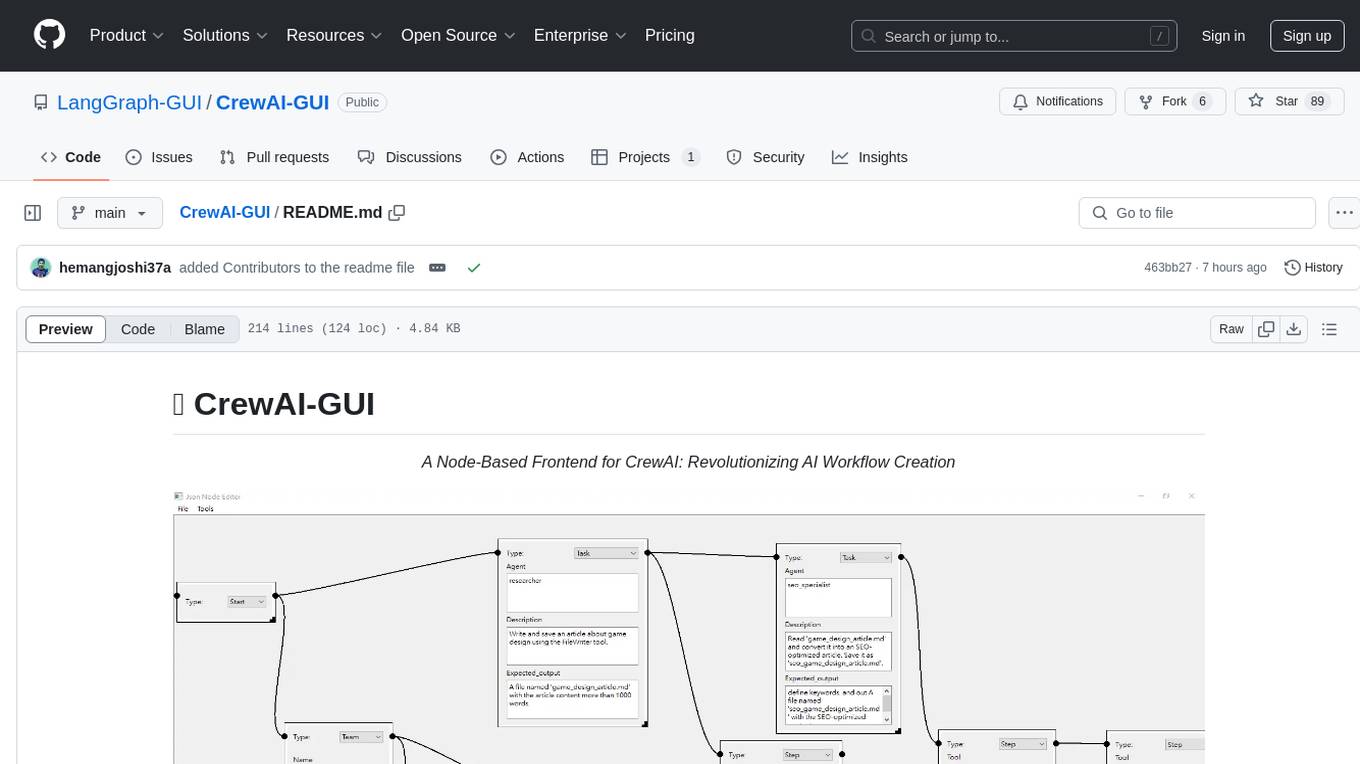

CrewAI-GUI

CrewAI-GUI is a node-based frontend tool for creating AI workflows. Users design complex AI agent interactions through a drag-and-drop interface and export designs to JSON for modularity and reusability. It supports both the GPT-4 API and Ollama as AI backends, and runs on Windows, Linux, and macOS.

Lumina-Note

Lumina Note is a local-first AI note-taking app designed to help users write, connect, and evolve knowledge with AI capabilities while ensuring data ownership. It offers a knowledge-centered workflow with features like Markdown editor, WikiLinks, and graph view. The app includes AI workspace modes such as Chat, Agent, Deep Research, and Codex, along with support for multiple model providers. Users can benefit from bidirectional links, LaTeX support, graph visualization, PDF reader with annotations, real-time voice input, and plugin ecosystem for extended functionalities. Lumina Note is built on Tauri v2 framework with a tech stack including React 18, TypeScript, Tailwind CSS, and SQLite for vector storage.

dexto

Dexto is a lightweight runtime for creating and running AI agents that turn natural language into real-world actions. It serves as the missing intelligence layer for building AI applications, standalone chatbots, or as the reasoning engine inside larger products. Dexto features a powerful CLI and Web UI for running AI agents, supports multiple interfaces, allows hot-swapping of LLMs from various providers, connects to remote tool servers via the Model Context Protocol, is config-driven with version-controlled YAML, offers production-ready core features, extensibility for custom services, and enables multi-agent collaboration via MCP and A2A.

agentscope

AgentScope is an agent-oriented programming tool for building LLM (Large Language Model) applications. It provides transparent development, realtime steering, agentic tools management, model agnostic programming, LEGO-style agent building, multi-agent support, and high customizability. The tool supports async invocation, reasoning models, streaming returns, async/sync tool functions, user interruption, group-wise tools management, streamable transport, stateful/stateless mode MCP client, distributed and parallel evaluation, multi-agent conversation management, and fine-grained MCP control. AgentScope Studio enables tracing and visualization of agent applications. The tool is highly customizable and encourages customization at various levels.

flashinfer

FlashInfer is a library for Large Language Models that provides high-performance implementations of LLM GPU kernels such as FlashAttention, PagedAttention, and LoRA. FlashInfer focuses on LLM serving and inference, and delivers state-of-the-art performance across diverse scenarios.

auto-engineer

Auto Engineer is a tool designed to automate the Software Development Life Cycle (SDLC) by building production-grade applications with a combination of human and AI agents. It offers a plugin-based architecture that allows users to install only the necessary functionality for their projects. The tool guides users through key stages including Flow Modeling, IA Generation, Deterministic Scaffolding, AI Coding & Testing Loop, and Comprehensive Quality Checks. Auto Engineer follows a command/event-driven architecture and provides a modular plugin system for specific functionalities. It supports TypeScript with strict typing throughout and includes a built-in message bus server with a web dashboard for monitoring commands and events.

executorch

ExecuTorch is an end-to-end solution for enabling on-device inference capabilities across mobile and edge devices including wearables, embedded devices and microcontrollers. It is part of the PyTorch Edge ecosystem and enables efficient deployment of PyTorch models to edge devices. Key value propositions of ExecuTorch are:

- **Portability:** Compatibility with a wide variety of computing platforms, from high-end mobile phones to highly constrained embedded systems and microcontrollers.
- **Productivity:** Enabling developers to use the same toolchains and SDK from PyTorch model authoring and conversion, to debugging and deployment to a wide variety of platforms.
- **Performance:** Providing end users with a seamless and high-performance experience due to a lightweight runtime and utilizing full hardware capabilities such as CPUs, NPUs, and DSPs.

For similar tasks

OpenAnalyst

OpenAnalyst is an open-source VS Code AI agent specialized in data analytics and general coding tasks. It merges features from KiloCode, Roo Code, and Cline, offering code generation from natural language, data analytics mode, self-checking, terminal command running, browser automation, latest AI models, and API keys option. It supports multi-mode operation for roles like Data Analyst, Code, Ask, and Debug. OpenAnalyst is a fork of KiloCode, combining the best features from Cline, Roo Code, and KiloCode, with enhancements like MCP Server Marketplace, automated refactoring, and support for latest AI models.


openrl

OpenRL is an open-source general reinforcement learning research framework that supports training for various tasks such as single-agent, multi-agent, offline RL, self-play, and natural language. Developed based on PyTorch, the goal of OpenRL is to provide a simple-to-use, flexible, efficient and sustainable platform for the reinforcement learning research community. It supports a universal interface for all tasks/environments, single-agent and multi-agent tasks, offline RL training with expert dataset, self-play training, reinforcement learning training for natural language tasks, DeepSpeed, Arena for evaluation, importing models and datasets from Hugging Face, user-defined environments, models, and datasets, gymnasium environments, callbacks, visualization tools, unit testing, and code coverage testing. It also supports various algorithms like PPO, DQN, SAC, and environments like Gymnasium, MuJoCo, Atari, and more.

AgentGym

AgentGym is a framework designed to help the AI community evaluate and develop generally-capable Large Language Model-based agents. It features diverse interactive environments and tasks with real-time feedback and concurrency. The platform supports 14 environments across various domains like web navigating, text games, house-holding tasks, digital games, and more. AgentGym includes a trajectory set (AgentTraj) and a benchmark suite (AgentEval) to facilitate agent exploration and evaluation. The framework allows for agent self-evolution beyond existing data, showcasing comparable results to state-of-the-art models.

synthora

Synthora is a lightweight and extensible framework for LLM-driven Agents and ALM research. It aims to simplify the process of building, testing, and evaluating agents by providing essential components. The framework allows for easy agent assembly with a single config, reducing the effort required for tuning and sharing agents. Although in early development stages with unstable APIs, Synthora welcomes feedback and contributions to enhance its stability and functionality.

adk-java

Agent Development Kit (ADK) for Java is an open-source toolkit designed for developers to build, evaluate, and deploy sophisticated AI agents with flexibility and control. It allows defining agent behavior, orchestration, and tool use directly in code, enabling robust debugging, versioning, and deployment anywhere. The toolkit offers a rich tool ecosystem, code-first development approach, and support for modular multi-agent systems, making it ideal for creating advanced AI agents integrated with Google Cloud services.

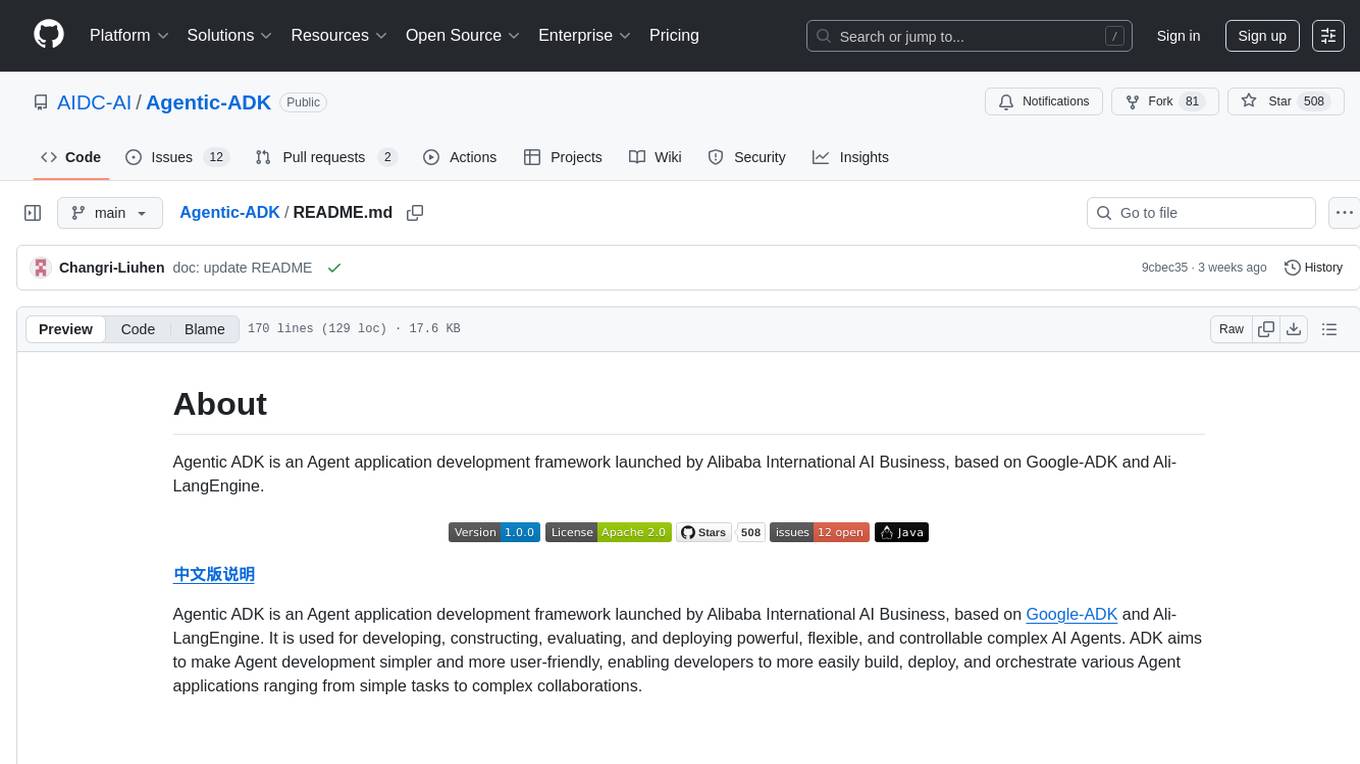

Agentic-ADK

Agentic ADK is an Agent application development framework launched by Alibaba International AI Business, based on Google-ADK and Ali-LangEngine. It is used for developing, constructing, evaluating, and deploying powerful, flexible, and controllable complex AI Agents. ADK aims to make Agent development simpler and more user-friendly, enabling developers to more easily build, deploy, and orchestrate various Agent applications ranging from simple tasks to complex collaborations.

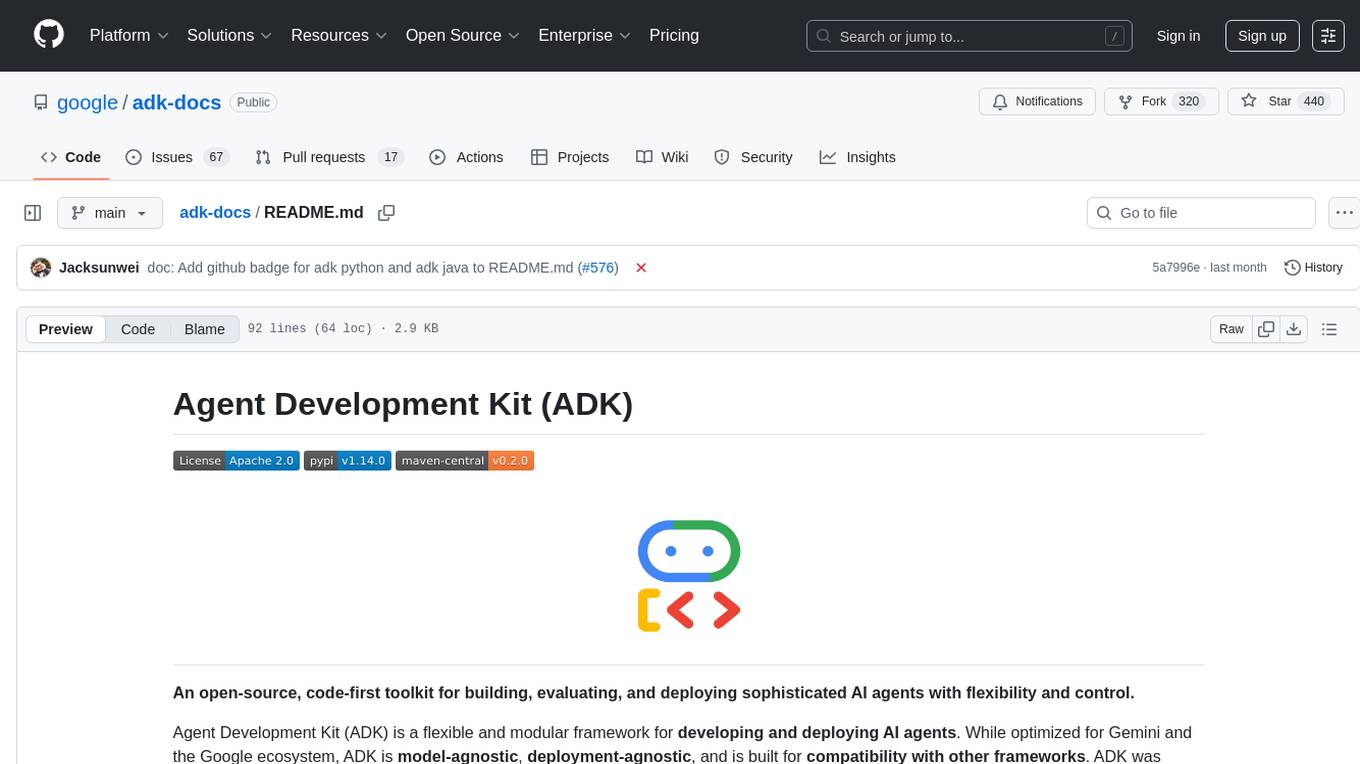

adk-docs

Agent Development Kit (ADK) is an open-source, code-first toolkit for building, evaluating, and deploying sophisticated AI agents with flexibility and control. It is a flexible and modular framework optimized for Gemini and the Google ecosystem, model-agnostic, deployment-agnostic, and compatible with other frameworks. ADK simplifies agent development by making it feel more like software development, enabling developers to create, deploy, and orchestrate agentic architectures from simple tasks to complex workflows.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise-level infrastructure that can power any LLM production use case. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers
- Track LLM usage on a per-user and per-organization basis
- Block or redact requests containing PII
- Improve LLM reliability with failovers, retries and caching
- Distribute API keys with rate limits and cost limits for internal development/production use cases
- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.