fastagency

The fastest way to bring multi-agent workflows to production.

Stars: 421

FastAgency is a powerful tool that leverages the AutoGen framework to quickly build applications with multi-agent workflows. It supports various interfaces like ConsoleUI and MesopUI, allowing users to create interactive applications. The tool enables defining workflows between agents, such as students and teachers, and summarizing conversations. FastAgency aims to expand its capabilities by integrating with additional agentic frameworks like CrewAI, providing more options for workflow definition and AI tool integration.

README:

To start with, FastAgency is not yet another agentic AI framework. Many such frameworks are available today; the most popular open-source one is AG2 (formerly AutoGen). FastAgency provides a unified programming interface for deploying agentic workflows written in the AG2 framework in both development and production settings. With only a few lines of code, you can create a web chat application or a REST API service that interacts with agents of your choice. If you need to scale up your workloads, FastAgency can help you deploy a fully distributed system that uses internal message brokers to coordinate multiple machines in multiple data centers, with just a few lines of code changed from your local development setup.

FastAgency is an open-source framework designed to accelerate the transition from prototype to production for multi-agent AI workflows. For developers who use the AG2 (formerly AutoGen) framework, FastAgency enables you to seamlessly scale Jupyter notebook prototypes into fully functional, production-ready applications. With multi-framework support, a unified programming interface, and powerful API integration capabilities, FastAgency streamlines the deployment process, saving time and effort while maintaining flexibility and performance.

Whether you're orchestrating complex AI agents or integrating external APIs into workflows, FastAgency provides the tools necessary to quickly transition from concept to production, reducing development cycles and allowing you to focus on optimizing your multi-agent systems.

- Unified Programming Interface Across UIs: FastAgency features a common programming interface that enables you to develop your core workflows once and reuse them across various user interfaces without rewriting code. This includes support for both console-based applications via `ConsoleUI` and web-based applications via `MesopUI`. Whether you need a command-line tool or a fully interactive web app, FastAgency allows you to deploy the same underlying workflows across environments, saving development time and ensuring consistency.

- Seamless External API Integration: One of FastAgency's standout features is its ability to easily integrate external APIs into your agent workflows. With just a few lines of code, you can import an OpenAPI specification, and in only one more line, you can connect it to your agents. This dramatically simplifies the process of enhancing AI agents with real-time data, external processing, or third-party services. For example, you can easily integrate a weather API to provide dynamic, real-time weather updates to your users, making your application more interactive and useful with minimal effort.

- Tester Class for Continuous Integration: FastAgency also provides a Tester Class that enables developers to write and execute tests for their multi-agent workflows. This feature is crucial for maintaining the reliability and robustness of your application, allowing you to automatically verify agent behavior and interactions. The Tester Class is designed to integrate smoothly with continuous integration (CI) pipelines, helping you catch bugs early and ensure that your workflows remain functional as they scale into production.

- Command-Line Interface (CLI) for Orchestration: FastAgency includes a powerful command-line interface (CLI) for orchestrating and managing multi-agent applications directly from the terminal. The CLI allows developers to quickly run workflows, pass parameters, and monitor agent interactions without needing a full GUI. This is especially useful for automating deployments and integrating workflows into broader DevOps pipelines, enabling developers to maintain control and flexibility in how they manage AI-driven applications.
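The "write the workflow once, reuse it across UIs" idea, and the way it makes workflows testable in CI, can be illustrated with a small standard-library sketch. Every name here (`UI`, `ConsoleLikeUI`, `ScriptedUI`, `greeting_workflow`) is hypothetical and chosen for illustration only; this is not FastAgency's API, just the general pattern of coding a workflow against an interface rather than a concrete front end.

```python
from typing import Protocol


class UI(Protocol):
    """Hypothetical minimal UI contract: ask a question, show a message."""

    def text_input(self, prompt: str) -> str: ...

    def text_message(self, body: str) -> None: ...


class ConsoleLikeUI:
    """Toy terminal front end built on input() and print()."""

    def text_input(self, prompt: str) -> str:
        return input(f"{prompt} ")

    def text_message(self, body: str) -> None:
        print(body)


class ScriptedUI:
    """Toy front end for automated tests: replays canned answers."""

    def __init__(self, answers: list[str]) -> None:
        self._answers = list(answers)
        self.shown: list[str] = []

    def text_input(self, prompt: str) -> str:
        return self._answers.pop(0)

    def text_message(self, body: str) -> None:
        self.shown.append(body)


def greeting_workflow(ui: UI) -> str:
    """Workflow logic written once against the UI contract."""
    topic = ui.text_input("What would you like to learn about?")
    summary = f"Lesson plan for: {topic}"
    ui.text_message(summary)
    return summary


# In a CI test the same workflow runs without a terminal or a browser.
scripted = ScriptedUI(["fractions"])
result = greeting_workflow(scripted)
```

Passing `ConsoleLikeUI()` instead of `ScriptedUI(...)` runs the identical workflow interactively, which is the property the feature list describes.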

FastAgency bridges the gap between rapid prototyping and production-ready deployment, empowering developers to bring their multi-agent systems to life quickly and efficiently. By integrating familiar frameworks like AG2 (formerly AutoGen), providing powerful API integration, and offering robust CI testing tools, FastAgency reduces the complexity and overhead typically associated with deploying AI agents in real-world applications.

Whether you’re building interactive console tools, developing fully-featured web apps, or orchestrating large-scale multi-agent systems, FastAgency is built to help you deploy faster, more reliably, and with greater flexibility.

Currently, the only supported runtime is AG2 (formerly AutoGen).

FastAgency currently supports workflows defined using AG2 (formerly AutoGen) and provides options for different types of applications:

- Console: Use the `ConsoleUI` interface for command-line based interaction. This is ideal for developing and testing workflows in a text-based environment.

- Mesop: Utilize `MesopUI` for web-based applications. This interface is suitable for creating web applications with a user-friendly interface.

FastAgency provides chainable network adapters for building scalable, production-ready architectures that serve your workflows. Currently, the following network adapters are supported:

- REST API via FastAPI: Use the `FastAPIAdapter` to serve your workflow using a FastAPI server. This setup allows you to run your workflows in multiple workers and serve them using the highly extensible and stable ASGI server.

- NATS.io via FastStream: Use the `NatsAdapter` to connect through the NATS.io message broker for a highly scalable, production-ready setup. This interface is suitable for setups inside VPNs or, in combination with the `FastAPIAdapter`, for serving public workflows in an authenticated, secure manner.
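What "chainable" means can be sketched with plain Python objects: each adapter wraps a provider and exposes the same call itself, so adapters can be stacked. The classes below (`Provider`, `LocalWorkflows`, `BrokerAdapter`, `AuthenticatedAdapter`) are hypothetical stand-ins, and nothing here uses or mirrors the real `FastAPIAdapter` or `NatsAdapter` signatures.

```python
from typing import Protocol


class Provider(Protocol):
    """Hypothetical contract: anything that can run a named workflow."""

    def run(self, name: str, message: str) -> str: ...


class LocalWorkflows:
    """Innermost provider: executes workflows in this process."""

    def run(self, name: str, message: str) -> str:
        return f"[{name}] handled: {message}"


class BrokerAdapter:
    """Stand-in for a message-broker hop between machines."""

    def __init__(self, provider: Provider) -> None:
        self._provider = provider
        self.forwarded = 0

    def run(self, name: str, message: str) -> str:
        self.forwarded += 1  # a real adapter would publish and await a reply
        return self._provider.run(name, message)


class AuthenticatedAdapter:
    """Stand-in for a public front end that checks a token first."""

    def __init__(self, provider: Provider, token: str) -> None:
        self._provider = provider
        self._token = token

    def run(self, name: str, message: str, token: str = "") -> str:
        if token != self._token:
            raise PermissionError("invalid token")
        return self._provider.run(name, message)


# Chain: public front end -> broker hop -> local workflows.
broker = BrokerAdapter(LocalWorkflows())
public = AuthenticatedAdapter(broker, token="s3cret")
reply = public.run("simple_learning", "hello", token="s3cret")
```

The design point is that the workflow code at the innermost layer does not change when a hop is added or removed, which matches the "few lines of code changed" claim made earlier.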

We strongly recommend using Cookiecutter for setting up a FastAgency project. It creates the project folder structure, default workflow, automatically installs all the necessary requirements, and creates a devcontainer that can be used with Visual Studio Code for development.

- Install Cookiecutter with the following command:

      pip install cookiecutter

- Run the `cookiecutter` command:

      cookiecutter https://github.com/airtai/cookiecutter-fastagency.git

- Assuming that you used the default values, you should get the following output:

      [1/4] project_name (My FastAgency App):
      [2/4] project_slug (my_fastagency_app):
      [3/4] Select app_type
        1 - fastapi+mesop
        2 - mesop
        3 - nats+fastapi+mesop
        Choose from [1/2/3] (1): 1
      [4/4] Select python_version
        1 - 3.12
        2 - 3.11
        3 - 3.10
        Choose from [1/2/3] (1):
      [5/5] Select authentication
        1 - none
        2 - google
        Choose from [1/2] (1):

- To run LLM-based applications, you need an API key for the LLM used. The most commonly used LLM provider is OpenAI. To use it, create an OpenAI API key and set it as an environment variable in the terminal using the following command:

      export OPENAI_API_KEY=openai_api_key_here

Open the generated project in Visual Studio Code with the following command:

code my_fastagency_app -

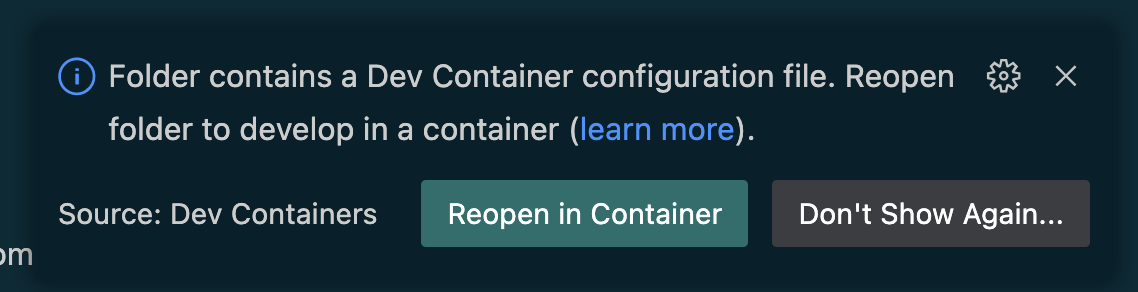

Once the project is opened, you will get the following option to reopen it in a devcontainer:

- After reopening the project in the devcontainer, you can verify that the setup is correct by running the provided tests with the following command:

      pytest -s

  You should get the following output if everything is set up correctly:

      =================================== test session starts ===================================
      platform linux -- Python 3.12.7, pytest-8.3.3, pluggy-1.5.0
      rootdir: /workspaces/my_fastagency_app
      configfile: pyproject.toml
      plugins: asyncio-0.24.0, anyio-4.6.2.post1
      asyncio: mode=Mode.STRICT, default_loop_scope=None
      collected 1 item

      tests/test_workflow.py . [100%]

      ==================================== 1 passed in 1.02s ====================================

You need to define the workflow that your application will use. This is where you specify how the agents interact and what they do. Here's a simple example of a workflow definition as generated by Cookiecutter under my_fastagency_app/workflow.py:

    import os
    from typing import Any

    from autogen.agentchat import ConversableAgent
    from fastagency import UI
    from fastagency.runtimes.ag2 import Workflow

    # LLM settings shared by both agents; the key is read from the environment.
    llm_config = {
        "config_list": [
            {
                "model": "gpt-4o-mini",
                "api_key": os.getenv("OPENAI_API_KEY"),
            }
        ],
        "temperature": 0.8,
    }

    wf = Workflow()


    @wf.register(name="simple_learning", description="Student and teacher learning chat")  # type: ignore[misc]
    def simple_workflow(ui: UI, params: dict[str, Any]) -> str:
        # Ask the user for the opening message through whichever UI is in use.
        initial_message = ui.text_input(
            sender="Workflow",
            recipient="User",
            prompt="I can help you learn about mathematics. What subject you would like to explore?",
        )

        student_agent = ConversableAgent(
            name="Student_Agent",
            system_message="You are a student willing to learn.",
            llm_config=llm_config,
        )
        teacher_agent = ConversableAgent(
            name="Teacher_Agent",
            system_message="You are a math teacher.",
            llm_config=llm_config,
        )

        # The student opens the chat; the conversation is summarized by the LLM.
        chat_result = student_agent.initiate_chat(
            teacher_agent,
            message=initial_message,
            summary_method="reflection_with_llm",
            max_turns=3,
        )

        return str(chat_result.summary)

This code snippet sets up a simple learning chat between a student and a teacher. It defines the agents, how they should interact, and how the conversation should be summarized.
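The `@wf.register(name=..., description=...)` line follows the common decorator-registry pattern. The sketch below shows that pattern in isolation using only the standard library; `MiniRegistry` and `echo_lesson` are hypothetical, and this is not how FastAgency's `Workflow` class is implemented, only an illustration of the idea of registering a function under a name so it can be looked up and run later.

```python
from typing import Callable

WorkflowFn = Callable[[str], str]


class MiniRegistry:
    """Hypothetical stand-in for a workflow registry."""

    def __init__(self) -> None:
        self._workflows: dict[str, tuple[str, WorkflowFn]] = {}

    def register(
        self, name: str, description: str
    ) -> Callable[[WorkflowFn], WorkflowFn]:
        """Return a decorator that stores the function under `name`."""

        def decorator(fn: WorkflowFn) -> WorkflowFn:
            if name in self._workflows:
                raise ValueError(f"workflow {name!r} is already registered")
            self._workflows[name] = (description, fn)
            return fn  # the function itself is left unchanged

        return decorator

    def names(self) -> list[str]:
        """List registered workflow names in registration order."""
        return list(self._workflows)

    def run(self, name: str, message: str) -> str:
        """Look up a workflow by name and call it."""
        _description, fn = self._workflows[name]
        return fn(message)


registry = MiniRegistry()


@registry.register(name="echo_lesson", description="Repeats the requested topic")
def echo_lesson(message: str) -> str:
    return f"Today we study {message}."


output = registry.run("echo_lesson", "prime numbers")
```

Keeping the name and description next to the function is what lets a UI list the available workflows and start one by name.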

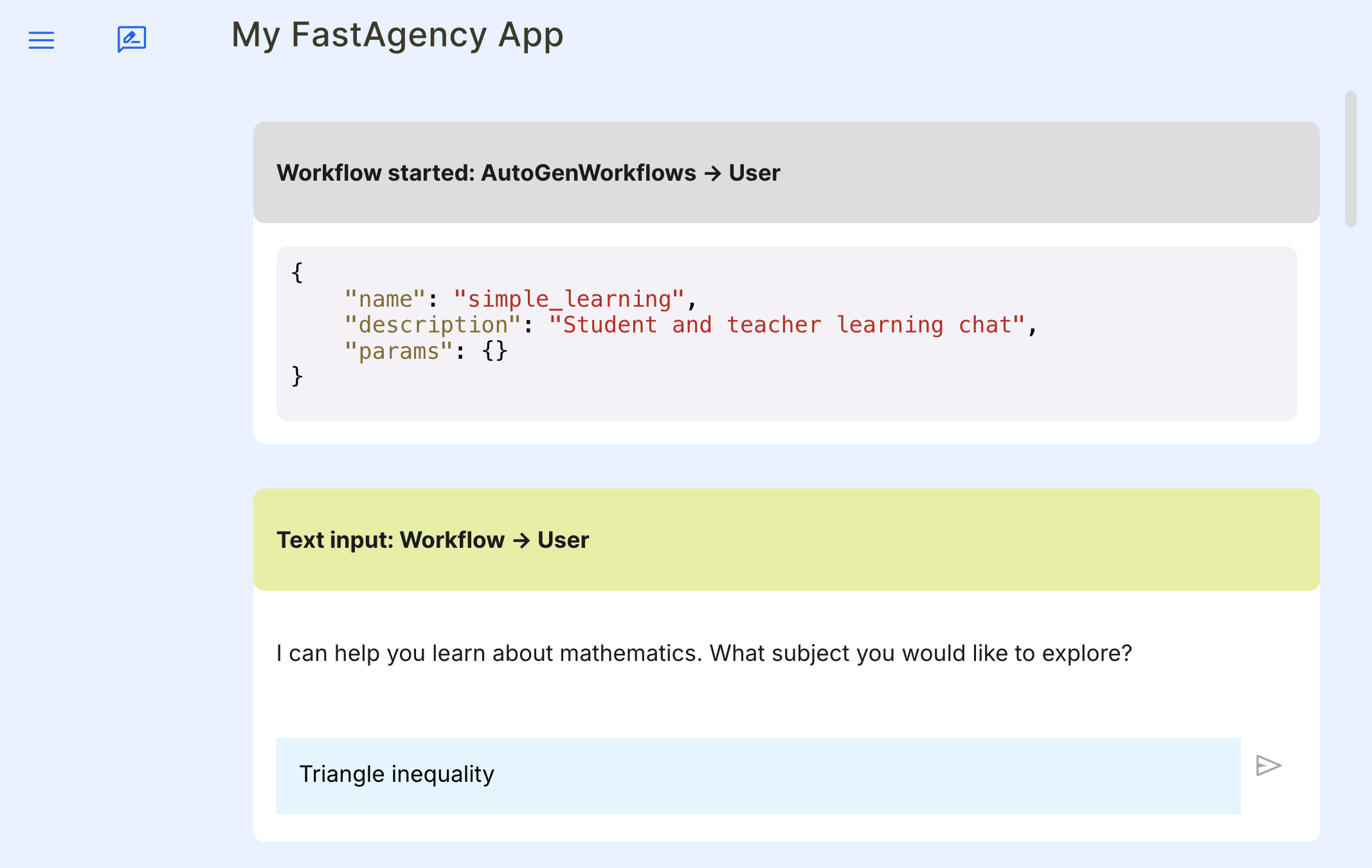

To ensure that the workflow we have defined is working properly, we can test it locally using MesopUI. The code for this, found under my_fastagency_app/local/main_mesop.py, imports the defined workflow and sets up MesopUI.

You can run the Mesop application locally with the following command on Linux and macOS:

    gunicorn my_fastagency_app.local.main_mesop:app

On Windows, please use the following command:

    waitress-serve --listen=0.0.0.0:8000 my_fastagency_app.local.main_mesop:app

Open the MesopUI URL http://localhost:8000 in your browser. You can now use the graphical user interface to start, run, test and debug the AutoGen workflow manually.

If you created the project using Cookiecutter, then building the Docker image is as simple as running the provided script, as shown below:

    ./scripts/build_docker.sh

Similarly, running the Docker container is as simple as running the provided script, as shown below:

    ./scripts/run_docker.sh

If you created the project using Cookiecutter, there are built-in scripts to deploy your workflow to Fly.io. In Fly.io, the application namespace is global, so the application name you chose might already be taken. To check your application's name availability and to reserve it, you can run the following script:

    ./scripts/register_to_fly_io.sh

Once you have reserved your application name, you can test whether you can deploy your application to Fly.io using the following script:

    ./scripts/deploy_to_fly_io.sh

This is only for testing purposes. You should deploy using GitHub Actions as explained below.

Cookiecutter generated all the necessary files to deploy your application to Fly.io using GitHub Actions. Simply push your code to your GitHub repository's main branch and GitHub Actions will automatically deploy your application to Fly.io. For this, you need to set the following secrets in your GitHub repository:

- `FLY_API_TOKEN`
- `OPENAI_API_KEY`

To learn how to create keys and add them as secrets, use the following links:

We are actively working on expanding FastAgency’s capabilities. In addition to supporting AG2 (formerly AutoGen), we plan to integrate support for other frameworks, other network providers and other UI frameworks.

Stay up to date with new features and integrations by following our documentation and community updates on our Discord server. FastAgency is continually evolving to support new frameworks, APIs, and deployment strategies, ensuring you remain at the forefront of AI-driven development.

Last but not least, show us your support by giving a star to our GitHub repository.

Your support helps us to stay in touch with you and encourages us to continue developing and improving the framework. Thank you for your support!

Thanks to all of these amazing people who made the project better!

