mangaba_ai

A minimalist repository for building intelligent, versatile AI agents with the A2A (Agent-to-Agent) and MCP (Model Context Protocol) protocols.

Stars: 166

Mangaba AI is a minimalist repository for creating intelligent, versatile AI agents with the A2A (Agent-to-Agent) and MCP (Model Context Protocol) protocols. It supports any AI provider, facilitates communication between agents, manages context, and offers built-in features such as chat, text analysis, and translation. Getting started takes only two steps. The repository includes scripts for automated configuration, manual setup, and environment validation. Users can chat with context, analyze text, translate, and coordinate multiple agents over the A2A protocol. The MCP protocol manages context automatically, categorizing it by type and priority. The repository also provides examples, documentation, and a comprehensive wiki in Brazilian Portuguese for beginners and developers.

README:


📚 ADVANCED WIKI - Complete documentation in Brazilian Portuguese

📋 FULL INDEX - Quick navigation across the entire repository

- 🤖 Versatile AI Agent: Support for any AI provider
- 🔗 A2A Protocol: Communication between agents
- 🧠 MCP Protocol: Advanced context management
- 📝 Built-in Features: Chat, analysis, translation, and more
- ⚡ Simple Setup: Just 2 steps to get started

```bash
# Full setup in a single command
python quick_setup.py
```

Or step by step:

```bash
# 1. Install dependencies
pip install -r requirements.txt

# 2. Configure the environment (Windows; on Linux/macOS use: cp .env.template .env)
copy .env.template .env
# Edit the .env file with your settings

# 3. Validate the installation
python validate_env.py
```

The quick_setup.py script automates the whole process:

- ✅ Creates a virtual environment
- ✅ Installs dependencies
- ✅ Configures the .env file
- ✅ Validates the installation
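The `.env` step can also be done portably in Python, avoiding the Windows-only `copy` command. This is a minimal sketch, assuming the project root contains `.env.template`; `ensure_env_file` is a hypothetical helper and not necessarily what `quick_setup.py` does internally:

```python
import shutil
from pathlib import Path


def ensure_env_file(project_dir: Path) -> bool:
    """Create .env from .env.template if .env does not exist yet (hypothetical helper).

    Returns True if a new .env was created, False if one already existed.
    """
    template = project_dir / ".env.template"
    target = project_dir / ".env"
    if target.exists():
        return False  # never overwrite an existing configuration
    if not template.exists():
        raise FileNotFoundError(f"Template not found: {template}")
    shutil.copyfile(template, target)  # same effect as `copy` / `cp`
    return True
```

Refusing to overwrite an existing `.env` is a deliberate choice in this sketch, so rerunning setup never discards a key you have already pasted in.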

Configure the .env file (copy it from .env.template):

```bash
# Required
GOOGLE_API_KEY=your_google_api_key_here

# Optional (with default values)
MODEL_NAME=gemini-2.5-flash
AGENT_NAME=MangabaAgent
LOG_LEVEL=INFO
```

Get your Google API Key:

- Go to: https://makersuite.google.com/app/apikey
- Create a new key
- Paste it into the .env file
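What an environment check like `validate_env.py` might verify can be sketched as follows. This is a simplified, hypothetical parser and checker, assuming only `GOOGLE_API_KEY` is required and the optional keys fall back to the defaults shown in the `.env` sample; it is not the script's actual code. For real projects, `dotenv_values` from the `python-dotenv` package parses `.env` files more robustly.

```python
from __future__ import annotations

# Hypothetical rules: which keys must be set, and defaults for the optional ones
REQUIRED = ("GOOGLE_API_KEY",)
DEFAULTS = {
    "MODEL_NAME": "gemini-2.5-flash",
    "AGENT_NAME": "MangabaAgent",
    "LOG_LEVEL": "INFO",
}


def parse_env(text: str) -> dict[str, str]:
    """Parse simple KEY=VALUE lines (simplified: no quoting or multi-line values)."""
    values: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comment lines
        key, sep, value = line.partition("=")
        if not sep:
            continue  # ignore lines without '='
        value = value.split(" #", 1)[0].strip()  # drop an inline " # comment"
        values[key.strip()] = value
    return values


def check_config(values: dict[str, str]) -> tuple[dict[str, str], list[str]]:
    """Merge defaults with parsed values and list required keys that are empty."""
    config = {**DEFAULTS, **values}
    missing = [key for key in REQUIRED if not config.get(key)]
    return config, missing
```

In practice you would pass it the contents of `.env`, e.g. `parse_env(Path(".env").read_text())`, and stop with an error message if `missing` is not empty.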

```bash
# Check that everything is configured correctly
python validate_env.py

# Save a detailed report
python validate_env.py --save-report
```

```python
from mangaba_ai import MangabaAgent

# Initialize with the A2A and MCP protocols enabled
agent = MangabaAgent()

# Chat with automatic context
resposta = agent.chat("Hello! How can you help me?")
print(resposta)
```

```python
from mangaba_ai import MangabaAgent

agent = MangabaAgent()

# Context is kept automatically
print(agent.chat("My name is João"))
print(agent.chat("What is my name?"))  # Remembers the earlier context
```

```python
from mangaba_ai import MangabaAgent

agent = MangabaAgent()

text = "Artificial intelligence is transforming the world."
analysis = agent.analyze_text(text, "Provide a detailed analysis")
print(analysis)
```

```python
from mangaba_ai import MangabaAgent

agent = MangabaAgent()

translation = agent.translate("Hello, how are you?", "português")
print(translation)
```

```python
from mangaba_ai import MangabaAgent

agent = MangabaAgent()

# After a few interactions...
summary = agent.get_context_summary()
print(summary)
```

The A2A protocol enables communication between multiple agents:

```python
from mangaba_ai import MangabaAgent

# Create two agents
agent1 = MangabaAgent()
agent2 = MangabaAgent()

# Send a request from one agent to the other
result = agent1.send_agent_request(
    target_agent_id=agent2.agent_id,
    action="chat",
    params={"message": "Hello from Agent 1!"}
)
print(result)
```

```python
from mangaba_ai import MangabaAgent

agent = MangabaAgent()

# Send a message to all connected agents
result = agent.broadcast_message(
    message="Hello, everyone!",
    tags=["general", "announcement"]
)
```

Message types:

- REQUEST: Requests between agents
- RESPONSE: Responses to requests
- BROADCAST: Messages to multiple agents
- NOTIFICATION: Asynchronous notifications
- ERROR: Error messages
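To make the message types concrete, here is a sketch of one possible A2A message envelope that serializes to JSON. The field names (`sender_id`, `target_id`, `message_id`, ...) and the helper `make_response` are assumptions for illustration, not the schema used in `protocols/a2a_protocol.py`:

```python
from __future__ import annotations

import json
import uuid
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from enum import Enum


class MessageType(str, Enum):
    REQUEST = "request"
    RESPONSE = "response"
    BROADCAST = "broadcast"
    NOTIFICATION = "notification"
    ERROR = "error"


@dataclass
class A2AMessage:
    """Hypothetical envelope; field names are assumptions, not the library's schema."""
    sender_id: str
    message_type: MessageType
    content: dict
    target_id: str | None = None  # None for broadcasts
    tags: list[str] = field(default_factory=list)
    message_id: str = field(default_factory=lambda: str(uuid.uuid4()))
    timestamp: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())

    def to_json(self) -> str:
        data = asdict(self)
        data["message_type"] = self.message_type.value  # store the plain string
        return json.dumps(data)

    @classmethod
    def from_json(cls, raw: str) -> A2AMessage:
        data = json.loads(raw)
        data["message_type"] = MessageType(data["message_type"])  # back to the enum
        return cls(**data)


def make_response(request: A2AMessage, sender_id: str, result: dict) -> A2AMessage:
    """Build a RESPONSE addressed to the original sender, linked by message_id."""
    return A2AMessage(
        sender_id=sender_id,
        message_type=MessageType.RESPONSE,
        content={"in_reply_to": request.message_id, **result},
        target_id=request.sender_id,
    )
```

A response carries the original `message_id` in `in_reply_to`, which is one simple way to let the requesting agent match replies to requests.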

The MCP protocol manages advanced context automatically.

Context types:

- CONVERSATION: Conversations and dialogues
- TASK: Specific tasks and operations
- MEMORY: Long-term memories
- SYSTEM: System information

Priorities:

- HIGH: Critical context (always preserved)
- MEDIUM: Important context
- LOW: Optional context
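The priority rules (HIGH is always preserved) suggest a pruning policy along these lines. This is a sketch assuming a fixed budget counted in entries; `ContextEntry` and `prune` are hypothetical names, and the real MCP implementation may count tokens or rank entries differently:

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum, IntEnum


class ContextType(Enum):
    CONVERSATION = "conversation"
    TASK = "task"
    MEMORY = "memory"
    SYSTEM = "system"


class Priority(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass
class ContextEntry:
    text: str
    context_type: ContextType
    priority: Priority
    seq: int  # insertion order, used to tell older from newer entries


def prune(entries: list[ContextEntry], max_entries: int) -> list[ContextEntry]:
    """Keep at most max_entries, but never drop HIGH entries.

    Lower priority goes first; within the same priority, the oldest goes first.
    """
    high = [e for e in entries if e.priority is Priority.HIGH]
    others = [e for e in entries if e.priority is not Priority.HIGH]
    budget = max(max_entries - len(high), 0)
    # Best candidates first: higher priority, then newer
    others.sort(key=lambda e: (e.priority, e.seq), reverse=True)
    kept = high + others[:budget]
    return sorted(kept, key=lambda e: e.seq)  # restore chronological order
```

Note that HIGH entries can exceed the budget on their own; the sketch accepts that rather than break the "always preserved" rule.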

```python
from mangaba_ai import MangabaAgent

agent = MangabaAgent()

# Chat with automatic context
response = agent.chat("Message", use_context=True)

# Chat without context
response = agent.chat("Message", use_context=False)

# Get a summary of the current context
summary = agent.get_context_summary()
```

```python
from mangaba_ai import MangabaAgent


def demo_completa():
    # Create an agent with the protocols enabled
    agent = MangabaAgent()
    print(f"Agent ID: {agent.agent_id}")
    print(f"MCP enabled: {agent.mcp_enabled}")

    # Sequence of interactions with context
    agent.chat("Hi, my name is Maria")
    agent.chat("I work in programming")

    # Analysis with the context preserved
    analysis = agent.analyze_text(
        "Python is a versatile language",
        "Analyze it in light of my professional profile"
    )
    print(analysis)

    # Translation
    translation = agent.translate("Good morning", "português")
    print(translation)

    # Summary of the accumulated context
    context = agent.get_context_summary()
    print("Current context:", context)

    # A2A communication
    agent.broadcast_message("Demo finished!")


if __name__ == "__main__":
    demo_completa()
```

Run the interactive example:

```bash
python examples/basic_example.py
```

Available commands:

- `/analyze <text>` - Analyzes text
- `/translate <text>` - Translates text
- `/context` - Shows the current context
- `/broadcast <message>` - Sends a broadcast
- `/request <agent_id> <action>` - Sends a request to another agent
- `/help` - Help
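A hypothetical parser for the slash commands listed above, assuming arguments are separated by spaces and that `/analyze`, `/translate`, and `/broadcast` take the rest of the line as a single argument. It is a sketch, not the code in `examples/basic_example.py`:

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical arity table: None means "the rest of the line is one free-text argument"
COMMANDS: dict[str, int | None] = {
    "/analyze": None,
    "/translate": None,
    "/context": 0,
    "/broadcast": None,
    "/request": 2,  # <agent_id> <action>
    "/help": 0,
}


@dataclass(frozen=True)
class Command:
    name: str
    args: tuple[str, ...] = ()


def parse_command(line: str) -> Command | None:
    """Return a Command for slash input, or None for a plain chat message."""
    line = line.strip()
    if not line.startswith("/"):
        return None  # ordinary chat text
    name, _, rest = line.partition(" ")
    if name not in COMMANDS:
        raise ValueError(f"Unknown command: {name} (try /help)")
    arity = COMMANDS[name]
    rest = rest.strip()
    if arity is None:
        if not rest:
            raise ValueError(f"{name} needs an argument")
        return Command(name, (rest,))
    args = tuple(rest.split())
    if len(args) != arity:
        raise ValueError(f"{name} expects {arity} argument(s), got {len(args)}")
    return Command(name, args)
```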

To see a full demonstration of the A2A and MCP protocols:

```bash
python examples/basic_example.py --demo
```

Main `MangabaAgent` methods:

- `chat(message, use_context=True)` - Chat with or without context
- `analyze_text(text, instruction)` - Text analysis
- `translate(text, target_language)` - Translation
- `get_context_summary()` - Context summary
- `send_agent_request(agent_id, action, params)` - A2A request
- `broadcast_message(message, tags)` - A2A broadcast
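The `use_context` flag of `chat` can be pictured as choosing whether to prepend recent turns to the prompt. This is a minimal sketch with an injected `generate` function standing in for the model call; `ContextChat`, `max_turns`, and the `User:`/`Assistant:` prompt layout are assumptions, not Mangaba AI internals:

```python
from __future__ import annotations

from typing import Callable


class ContextChat:
    """Hypothetical stand-in for chat(message, use_context=True)."""

    def __init__(self, generate: Callable[[str], str], max_turns: int = 10) -> None:
        self.generate = generate  # any function prompt -> reply, e.g. an LLM client wrapper
        self.max_turns = max_turns
        self.history: list[tuple[str, str]] = []  # (user, assistant) pairs

    def build_prompt(self, message: str, use_context: bool) -> str:
        if not use_context or not self.history:
            return message  # no context: send the message alone
        recent = self.history[-self.max_turns:]  # only the last max_turns turns
        lines = [f"User: {u}\nAssistant: {a}" for u, a in recent]
        return "\n".join(lines) + f"\nUser: {message}\nAssistant:"

    def chat(self, message: str, use_context: bool = True) -> str:
        reply = self.generate(self.build_prompt(message, use_context))
        self.history.append((message, reply))  # record the turn either way
        return reply
```

Recording the turn even when `use_context=False` is a design choice of this sketch; the library may behave differently.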

- A2A Protocol: Communication between agents
- MCP Protocol: Context management
- Custom Handlers: For specific requests
- MCP Sessions: Context isolated per session
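Custom handlers and per-session context can be sketched together: handlers map an A2A `action` name to a function, and each session ID gets its own context list. `AgentRuntime`, `register_handler`, and the `{"status": ...}` reply shape are hypothetical, chosen only to illustrate the idea:

```python
from __future__ import annotations

from collections import defaultdict
from typing import Any, Callable

Handler = Callable[[dict], Any]


class AgentRuntime:
    """Hypothetical sketch: custom A2A handlers plus MCP-style isolated sessions."""

    def __init__(self) -> None:
        self.handlers: dict[str, Handler] = {}
        self.sessions: defaultdict[str, list[str]] = defaultdict(list)

    def register_handler(self, action: str, handler: Handler) -> None:
        self.handlers[action] = handler  # later registrations replace earlier ones

    def handle_request(self, action: str, params: dict) -> dict:
        handler = self.handlers.get(action)
        if handler is None:
            return {"status": "error", "error": f"no handler for action '{action}'"}
        return {"status": "ok", "result": handler(params)}

    def add_context(self, session_id: str, text: str) -> None:
        self.sessions[session_id].append(text)

    def get_context(self, session_id: str) -> list[str]:
        # .get avoids creating an empty session just by reading it
        return list(self.sessions.get(session_id, []))
```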

```bash
API_KEY=your_api_key_here  # Required
MODEL=desired_model  # Optional
LOG_LEVEL=INFO  # Optional (DEBUG, INFO, WARNING, ERROR)
```

```python
from mangaba_ai import MangabaAgent

# Agent with custom settings
agent = MangabaAgent()

# Access the protocols directly
a2a = agent.a2a_protocol
mcp = agent.mcp

# Unique agent ID
print(f"Agent ID: {agent.agent_id}")

# Current MCP session
print(f"Session ID: {agent.current_session_id}")
```

```python
from mangaba_ai import MangabaAgent

agent = MangabaAgent()
resposta = agent.chat_with_context(
    context="You are a programming tutor",
    message="How do I create a list in Python?"
)
print(resposta)
```

### Text Analysis

```python
from mangaba_ai import MangabaAgent

agent = MangabaAgent()
texto = "This is a text to analyze..."
analise = agent.analyze_text(texto, "Summarize the main points")
print(analise)
```

To use a different model, just change it in .env:

```bash
MODEL=advanced-model  # A more advanced model
MODEL=multimodal-model  # For different input types
```

🔧 All scripts are organized in the scripts/ folder:

- `validate_env.py` - Validates the environment configuration
- `quick_setup.py` - Fast automated setup
- `example_env_usage.py` - Example of using the configuration
- `exemplo_curso_basico.py` - Hands-on examples from the basic course
- `setup_env.py` - Detailed manual setup

```
mangaba_ai/
├── 📁 docs/                      # 📚 Documentation
│   ├── CURSO_BASICO.md           # Complete basic course
│   ├── SETUP.md                  # Setup guide
│   ├── PROTOCOLS.md              # Protocol documentation
│   ├── CHANGELOG.md              # Change history
│   ├── SCRIPTS.md                # Script documentation
│   └── README.md                 # Documentation index
├── 📁 scripts/                   # 🔧 Configuration scripts
│   ├── validate_env.py           # Environment validation
│   ├── quick_setup.py            # Fast automated setup
│   ├── example_env_usage.py      # Usage example
│   ├── exemplo_curso_basico.py   # Course examples
│   ├── setup_env.py              # Detailed manual setup
│   └── README.md                 # Script documentation
├── 📁 protocols/                 # 🌐 Communication protocols
│   ├── mcp_protocol.py           # Model Context Protocol
│   └── a2a_protocol.py           # Agent-to-Agent Protocol
├── 📁 examples/                  # 📖 Usage examples
│   └── basic_example.py          # Complete basic example
├── 📁 utils/                     # 🛠️ Utilities
│   ├── __init__.py
│   └── logger.py                 # Logging system
├── mangaba_agent.py              # 🤖 Main agent
├── config.py                     # ⚙️ System settings
├── ESTRUTURA.md                  # 📁 Repository organization
├── .env.example                  # 🔐 Example configuration
├── requirements.txt              # 📦 Python dependencies
└── README.md                     # 📖 This file
```

📋 For full details of the structure, see ESTRUTURA.md

```bash
# 1. Quick setup
python scripts/quick_setup.py

# 2. Validate the environment
python scripts/validate_env.py

# 3. Try the example
python scripts/example_env_usage.py

# 4. Basic course examples
python scripts/exemplo_curso_basico.py

# 5. Interactive example
python examples/basic_example.py
```

🌟 📖 FULL WIKI - Main Documentation Portal

The Mangaba AI Advanced Wiki offers comprehensive documentation in Brazilian Portuguese for every level:

- 🚀 Project Overview - What it is and what it is for
- 🎓 Complete Basic Course - Step-by-step tutorial
- ⚙️ Installation and Configuration - Detailed setup guide
- ❓ FAQ - Frequently Asked Questions - Common questions and solutions
- 🌐 A2A and MCP Protocols - Complete technical documentation
- ⭐ Best Practices - Guide to good practices
- 🤝 How to Contribute - Contribution guidelines
- 📝 Glossary of Terms - Technical definitions
- 🔧 Scripts and Automation - Script documentation
- 📊 Change History - Complete changelog
- 📁 Project Structure - Repository organization

🎯 Start with the Main Wiki - It is your entry point to all the documentation!

Thank you for your interest in contributing! See our Complete Contribution Guide for detailed information.

- 📚 Read the Contribution Guidelines
- 🍴 Fork the project
- 🔧 Set up the development environment
- ⭐ Follow the Best Practices
- 🧪 Run the tests
- 📤 Open a Pull Request

Types of contributions:

- 🐛 Bug fixes
- ✨ New features
- 📚 Documentation improvements
- 🧪 New tests
- 🌐 Translation into other languages

📖 First contribution? Look for issues labeled good first issue!

MIT License

Mangaba AI - Simple, effective AI agents! 🤖✨


Alternative AI tools for mangaba_ai

Similar Open Source Tools


aios-core

Synkra AIOS is a Framework for Universal AI Agents powered by AI. It is founded on Agent-Driven Agile Development, offering revolutionary capabilities for AI-driven development and more. Transform any domain with specialized AI expertise: software development, entertainment, creative writing, business strategy, personal well-being, and more. The framework follows a clear hierarchy of priorities: CLI First, Observability Second, UI Third. The CLI is where intelligence resides, all execution, decisions, and automation happen there. Observability is for observing and monitoring what happens in the CLI in real-time. The UI is for specific management and visualizations when necessary. The two key innovations of Synkra AIOS are Planejamento Agêntico and Desenvolvimento Contextualizado por Engenharia, which eliminate inconsistency in planning and loss of context in AI-assisted development.

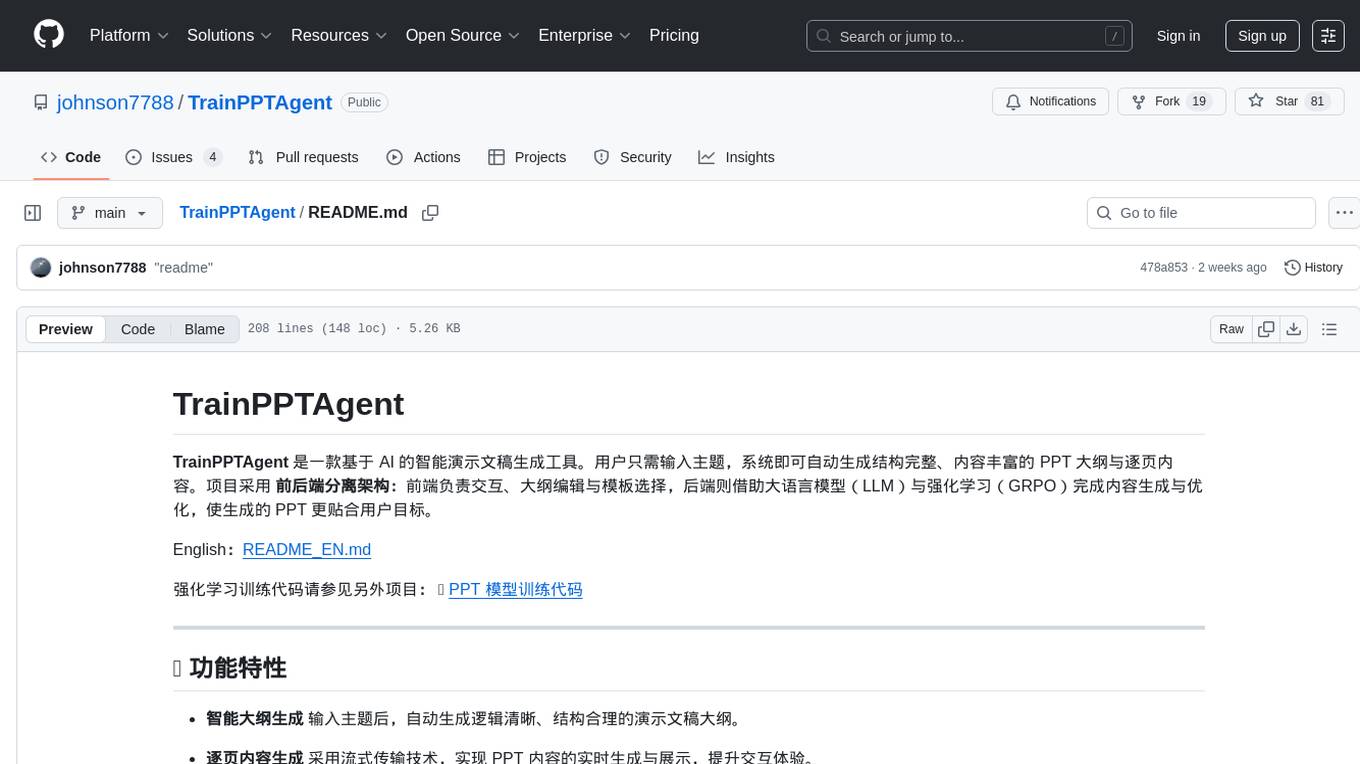

TrainPPTAgent

TrainPPTAgent is an AI-based intelligent presentation generation tool. Users can input a topic and the system will automatically generate a well-structured and content-rich PPT outline and page-by-page content. The project adopts a front-end and back-end separation architecture: the front-end is responsible for interaction, outline editing, and template selection, while the back-end leverages large language models (LLM) and reinforcement learning (GRPO) to complete content generation and optimization, making the generated PPT more tailored to user goals.

chatgpt-web-sea

ChatGPT Web Sea is an open-source project based on ChatGPT-web for secondary development. It supports all models that comply with the OpenAI interface standard, allows for model selection, configuration, and extension, and is compatible with OneAPI. The tool includes a Chinese ChatGPT tuning guide, supports file uploads, and provides model configuration options. Users can interact with the tool through a web interface, configure models, and perform tasks such as model selection, API key management, and chat interface setup. The project also offers Docker deployment options and instructions for manual packaging.

Con-Nav-Item

Con-Nav-Item is a modern personal navigation system designed for digital workers. It is not just a link bookmark but also an all-in-one workspace integrated with AI smart generation, multi-device synchronization, card-based management, and deep browser integration.

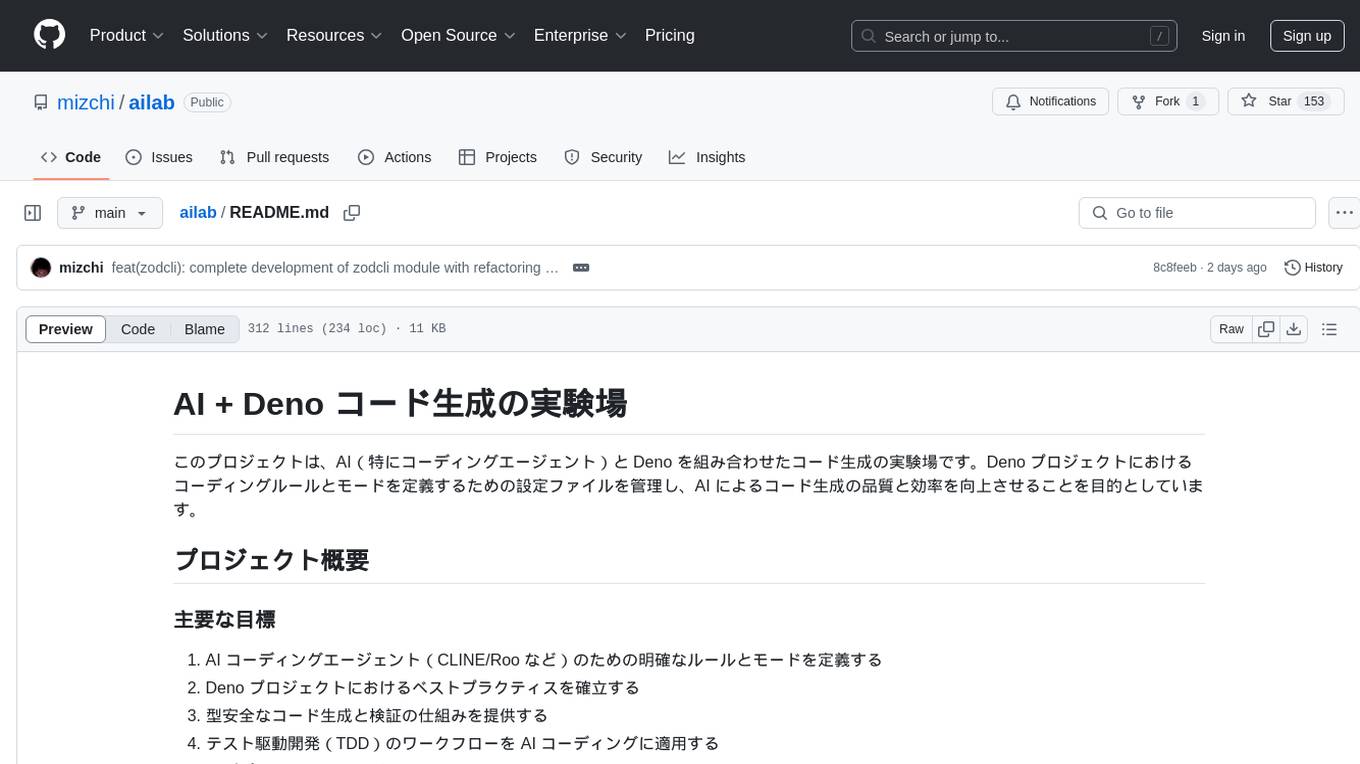

ailab

The 'ailab' project is an experimental ground for code generation combining AI (especially coding agents) and Deno. It aims to manage configuration files defining coding rules and modes in Deno projects, enhancing the quality and efficiency of code generation by AI. The project focuses on defining clear rules and modes for AI coding agents, establishing best practices in Deno projects, providing mechanisms for type-safe code generation and validation, applying test-driven development (TDD) workflow to AI coding, and offering implementation examples utilizing design patterns like adapter pattern.

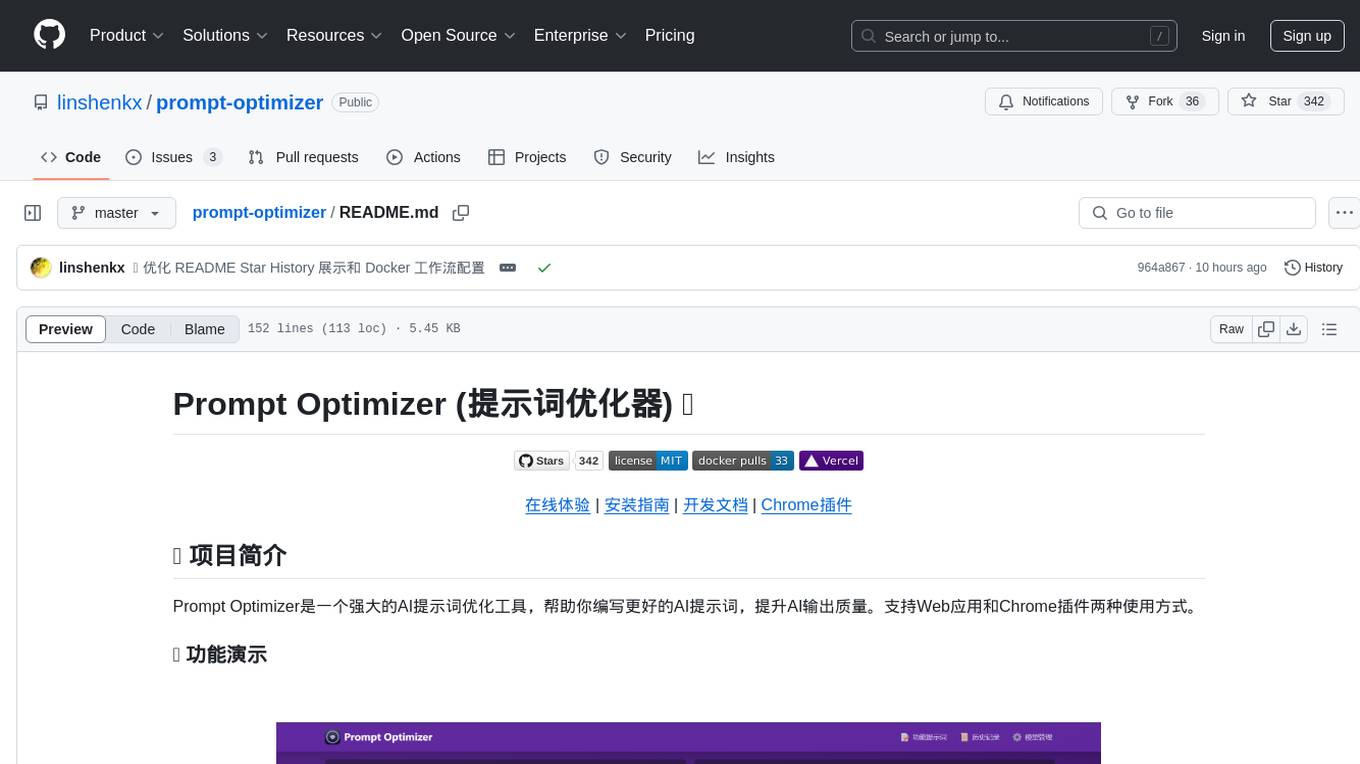

prompt-optimizer

Prompt Optimizer is a powerful AI prompt optimization tool that helps you write better AI prompts, improving AI output quality. It supports both web application and Chrome extension usage. The tool features intelligent optimization for prompt words, real-time testing to compare before and after optimization, integration with multiple mainstream AI models, client-side processing for security, encrypted local storage for data privacy, responsive design for user experience, and more.

AivisSpeech-Engine

AivisSpeech-Engine is a powerful open-source tool for speech recognition and synthesis. It provides state-of-the-art algorithms for converting speech to text and text to speech. The tool is designed to be user-friendly and customizable, allowing developers to easily integrate speech capabilities into their applications. With AivisSpeech-Engine, users can transcribe audio recordings, create voice-controlled interfaces, and generate natural-sounding speech output. Whether you are building a virtual assistant, developing a speech-to-text application, or experimenting with voice technology, AivisSpeech-Engine offers a comprehensive solution for all your speech processing needs.

PersonalExam

PersonalExam is a personalized question generation system based on LLM and knowledge graph collaboration. It utilizes the BKT algorithm, RAG engine, and OpenPangu model to achieve personalized intelligent question generation and recommendation. The system features adaptive question recommendation, fine-grained knowledge tracking, AI answer evaluation, student profiling, visual reports, interactive knowledge graph, user management, and system monitoring.

LLMAI-writer

LLMAI-writer is a powerful AI tool for assisting in novel writing, utilizing state-of-the-art large language models to help writers brainstorm, plan, and create novels. Whether you are an experienced writer or a beginner, LLMAI-writer can help you efficiently complete the writing process.

DocTranslator

DocTranslator is a document translation tool that supports various file formats, compatible with OpenAI format API, and offers batch operations and multi-threading support. Whether for individual users or enterprise teams, DocTranslator helps efficiently complete document translation tasks. It supports formats like txt, markdown, word, csv, excel, pdf (non-scanned), and ppt for AI translation. The tool is deployed using Docker for easy setup and usage.

goclaw

goclaw is a powerful AI Agent framework written in Go language. It provides a complete tool system for FileSystem, Shell, Web, and Browser with Docker sandbox support and permission control. The framework includes a skill system compatible with OpenClaw and AgentSkills specifications, supporting automatic discovery and environment gating. It also offers persistent session storage, multi-channel support for Telegram, WhatsApp, Feishu, QQ, and WeWork, flexible configuration with YAML/JSON support, multiple LLM providers like OpenAI, Anthropic, and OpenRouter, WebSocket Gateway, Cron scheduling, and Browser automation based on Chrome DevTools Protocol.

AivisSpeech

AivisSpeech is a Japanese text-to-speech software based on the VOICEVOX editor UI. It incorporates the AivisSpeech Engine for generating emotionally rich voices easily. It supports AIVMX format voice synthesis model files and specific model architectures like Style-Bert-VITS2. Users can download AivisSpeech and AivisSpeech Engine for Windows and macOS PCs, with minimum memory requirements specified. The development follows the latest version of VOICEVOX, focusing on minimal modifications, rebranding only where necessary, and avoiding refactoring. The project does not update documentation, maintain test code, or refactor unused features to prevent conflicts with VOICEVOX.

Code-Interpreter-Api

Code Interpreter API is a project that combines a scheduling center with a sandbox environment, dedicated to creating the world's best code interpreter. It aims to provide a secure, reliable API interface for remotely running code and obtaining execution results, accelerating the development of various AI agents, and being a boon to many AI enthusiasts. The project innovatively combines Docker container technology to achieve secure isolation and execution of Python code. Additionally, the project supports storing generated image data in a PostgreSQL database and accessing it through API endpoints, providing rich data processing and storage capabilities.

Lim-Code

LimCode is a powerful VS Code AI programming assistant that supports multiple AI models, intelligent tool invocation, and modular architecture. It features support for various AI channels, a smart tool system for code manipulation, MCP protocol support for external tool extension, intelligent context management, session management, and more. Users can install LimCode from the plugin store or via VSIX, or build it from the source code. The tool offers a rich set of features for AI programming and code manipulation within the VS Code environment.

For similar tasks

phospho

Phospho is a text analytics platform for LLM apps. It helps you detect issues and extract insights from text messages of your users or your app. You can gather user feedback, measure success, and iterate on your app to create the best conversational experience for your users.

OpenFactVerification

Loki is an open-source tool designed to automate the process of verifying the factuality of information. It provides a comprehensive pipeline for dissecting long texts into individual claims, assessing their worthiness for verification, generating queries for evidence search, crawling for evidence, and ultimately verifying the claims. This tool is especially useful for journalists, researchers, and anyone interested in the factuality of information.

open-parse

Open Parse is a Python library for visually discerning document layouts and chunking them effectively. It is designed to fill the gap in open-source libraries for handling complex documents. Unlike text splitting, which converts a file to raw text and slices it up, Open Parse visually analyzes documents for superior LLM input. It also supports basic markdown for parsing headings, bold, and italics, and has high-precision table support, extracting tables into clean Markdown formats with accuracy that surpasses traditional tools. Open Parse is extensible, allowing users to easily implement their own post-processing steps. It is also intuitive, with great editor support and completion everywhere, making it easy to use and learn.

spaCy

spaCy is an industrial-strength Natural Language Processing (NLP) library in Python and Cython. It incorporates the latest research and is designed for real-world applications. The library offers pretrained pipelines supporting 70+ languages, with advanced neural network models for tasks such as tagging, parsing, named entity recognition, and text classification. It also facilitates multi-task learning with pretrained transformers like BERT, along with a production-ready training system and streamlined model packaging, deployment, and workflow management. spaCy is commercial open-source software released under the MIT license.

NanoLLM

NanoLLM is a tool designed for optimized local inference for Large Language Models (LLMs) using HuggingFace-like APIs. It supports quantization, vision/language models, multimodal agents, speech, vector DB, and RAG. The tool aims to provide efficient and effective processing for LLMs on local devices, enhancing performance and usability for various AI applications.

ontogpt

OntoGPT is a Python package for extracting structured information from text using large language models, instruction prompts, and ontology-based grounding. It provides a command line interface and a minimal web app for easy usage. The tool has been evaluated on test data and is used in related projects like TALISMAN for gene set analysis. OntoGPT enables users to extract information from text by specifying relevant terms and provides the extracted objects as output.

lima

LIMA is a multilingual linguistic analyzer developed by the CEA LIST, LASTI laboratory. It is Free Software available under the MIT license. LIMA has state-of-the-art performance for more than 60 languages using deep learning modules. It also includes a powerful rules-based mechanism called ModEx for extracting information in new domains without annotated data.

liboai

liboai is a simple C++17 library for the OpenAI API, providing developers with access to OpenAI endpoints through a collection of methods and classes. It serves as a spiritual port of OpenAI's Python library, 'openai', with similar structure and features. The library supports various functionalities such as ChatGPT, Audio, Azure, Functions, Image DALL·E, Models, Completions, Edit, Embeddings, Files, Fine-tunes, Moderation, and Asynchronous Support. Users can easily integrate the library into their C++ projects to interact with OpenAI services.

For similar jobs

promptflow

**Prompt flow** is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

deepeval

DeepEval is a simple-to-use, open-source LLM evaluation framework specialized for unit testing LLM outputs. It incorporates various metrics such as G-Eval, hallucination, answer relevancy, RAGAS, etc., and runs locally on your machine for evaluation. It provides a wide range of ready-to-use evaluation metrics, allows for creating custom metrics, integrates with any CI/CD environment, and enables benchmarking LLMs on popular benchmarks. DeepEval is designed for evaluating RAG and fine-tuning applications, helping users optimize hyperparameters, prevent prompt drifting, and transition from OpenAI to hosting their own Llama2 with confidence.

MegaDetector

MegaDetector is an AI model that identifies animals, people, and vehicles in camera trap images (which also makes it useful for eliminating blank images). This model is trained on several million images from a variety of ecosystems. MegaDetector is just one of many tools that aims to make conservation biologists more efficient with AI. If you want to learn about other ways to use AI to accelerate camera trap workflows, check out our of the field, affectionately titled "Everything I know about machine learning and camera traps".

leapfrogai

LeapfrogAI is a self-hosted AI platform designed to be deployed in air-gapped resource-constrained environments. It brings sophisticated AI solutions to these environments by hosting all the necessary components of an AI stack, including vector databases, model backends, API, and UI. LeapfrogAI's API closely matches that of OpenAI, allowing tools built for OpenAI/ChatGPT to function seamlessly with a LeapfrogAI backend. It provides several backends for various use cases, including llama-cpp-python, whisper, text-embeddings, and vllm. LeapfrogAI leverages Chainguard's apko to harden base python images, ensuring the latest supported Python versions are used by the other components of the stack. The LeapfrogAI SDK provides a standard set of protobuffs and python utilities for implementing backends and gRPC. LeapfrogAI offers UI options for common use-cases like chat, summarization, and transcription. It can be deployed and run locally via UDS and Kubernetes, built out using Zarf packages. LeapfrogAI is supported by a community of users and contributors, including Defense Unicorns, Beast Code, Chainguard, Exovera, Hypergiant, Pulze, SOSi, United States Navy, United States Air Force, and United States Space Force.

llava-docker

This Docker image for LLaVA (Large Language and Vision Assistant) provides a convenient way to run LLaVA locally or on RunPod. LLaVA is a powerful AI tool that combines natural language processing and computer vision capabilities. With this Docker image, you can easily access LLaVA's functionalities for various tasks, including image captioning, visual question answering, text summarization, and more. The image comes pre-installed with LLaVA v1.2.0, Torch 2.1.2, xformers 0.0.23.post1, and other necessary dependencies. You can customize the model used by setting the MODEL environment variable. The image also includes a Jupyter Lab environment for interactive development and exploration. Overall, this Docker image offers a comprehensive and user-friendly platform for leveraging LLaVA's capabilities.

carrot

The 'carrot' repository on GitHub provides a list of free and user-friendly ChatGPT mirror sites for easy access. The repository includes sponsored sites offering various GPT models and services. Users can find and share sites, report errors, and access stable and recommended sites for ChatGPT usage. The repository also includes a detailed list of ChatGPT sites, their features, and accessibility options, making it a valuable resource for ChatGPT users seeking free and unlimited GPT services.

TrustLLM

TrustLLM is a comprehensive study of trustworthiness in LLMs, including principles for different dimensions of trustworthiness, established benchmark, evaluation, and analysis of trustworthiness for mainstream LLMs, and discussion of open challenges and future directions. Specifically, we first propose a set of principles for trustworthy LLMs that span eight different dimensions. Based on these principles, we further establish a benchmark across six dimensions including truthfulness, safety, fairness, robustness, privacy, and machine ethics. We then present a study evaluating 16 mainstream LLMs in TrustLLM, consisting of over 30 datasets. The document explains how to use the trustllm python package to help you assess the performance of your LLM in trustworthiness more quickly. For more details about TrustLLM, please refer to project website.

AI-YinMei

AI-YinMei is an AI virtual anchor Vtuber development tool (N card version). It supports fastgpt knowledge base chat dialogue, a complete set of solutions for LLM large language models: [fastgpt] + [one-api] + [Xinference], supports docking bilibili live broadcast barrage reply and entering live broadcast welcome speech, supports Microsoft edge-tts speech synthesis, supports Bert-VITS2 speech synthesis, supports GPT-SoVITS speech synthesis, supports expression control Vtuber Studio, supports painting stable-diffusion-webui output OBS live broadcast room, supports painting picture pornography public-NSFW-y-distinguish, supports search and image search service duckduckgo (requires magic Internet access), supports image search service Baidu image search (no magic Internet access), supports AI reply chat box [html plug-in], supports AI singing Auto-Convert-Music, supports playlist [html plug-in], supports dancing function, supports expression video playback, supports head touching action, supports gift smashing action, supports singing automatic start dancing function, chat and singing automatic cycle swing action, supports multi scene switching, background music switching, day and night automatic switching scene, supports open singing and painting, let AI automatically judge the content.