AIstudioProxyAPI

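A middle-layer proxy server built with Python, FastAPI, Playwright, and Camoufox. It is compatible with the OpenAI API and supports a subset of parameter settings. The project uses web automation to simulate a human user, forwards requests to the Google AI Studio web page, and returns the output in the standard OpenAI format. Spare time is limited, so updates are irregular.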

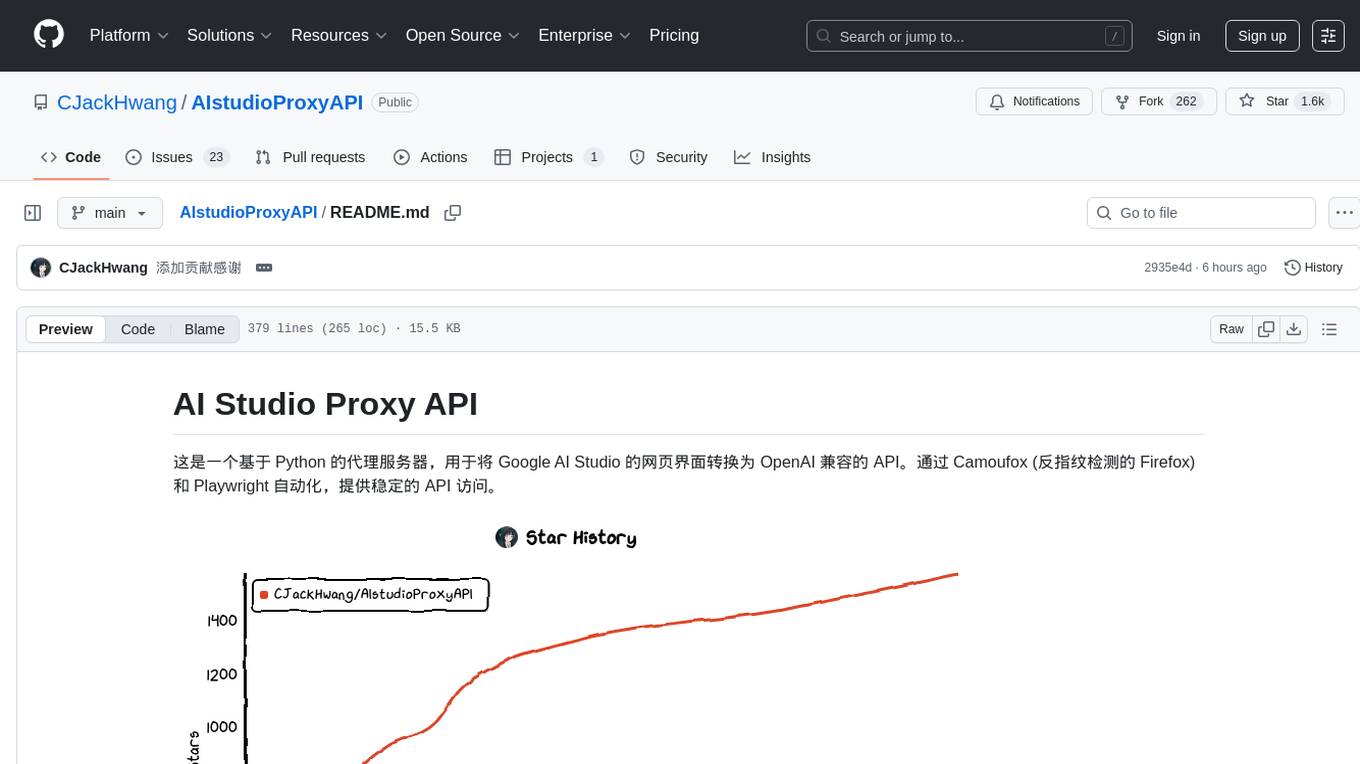

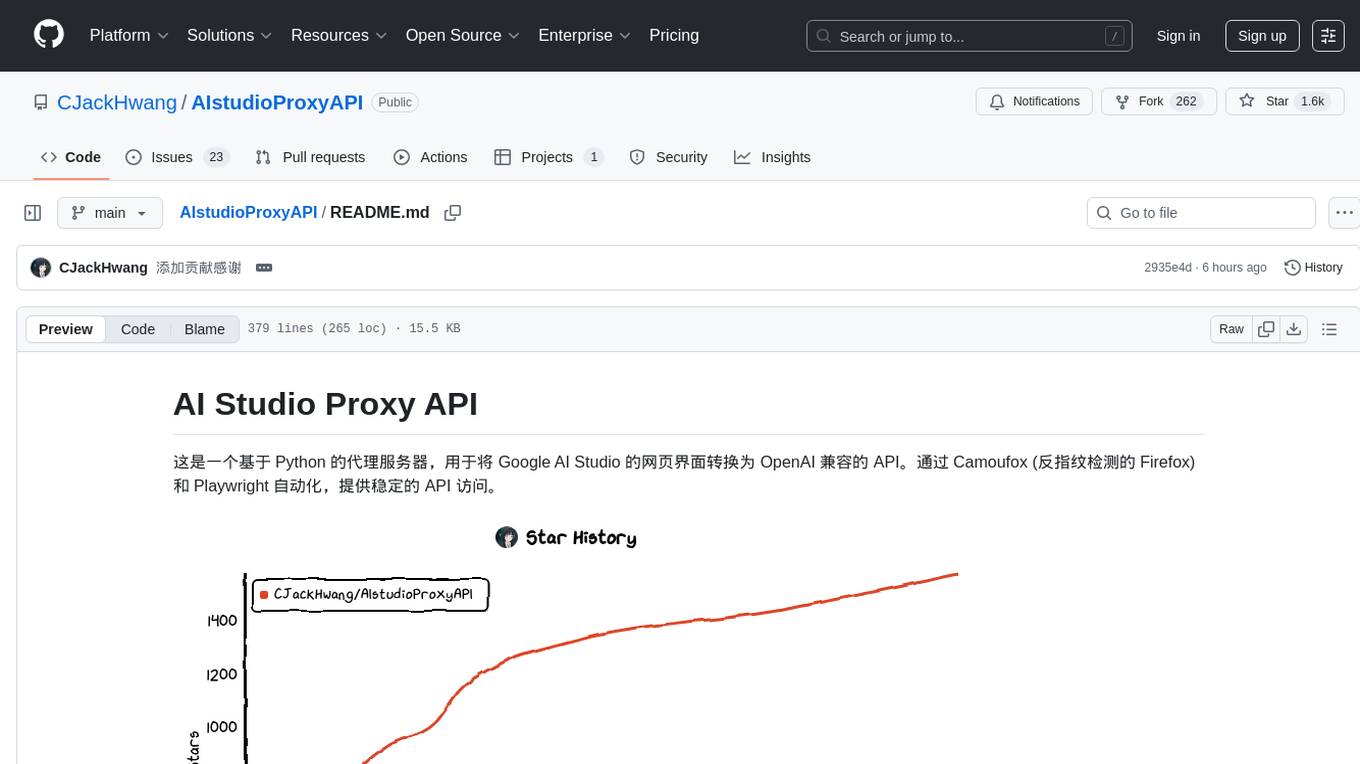

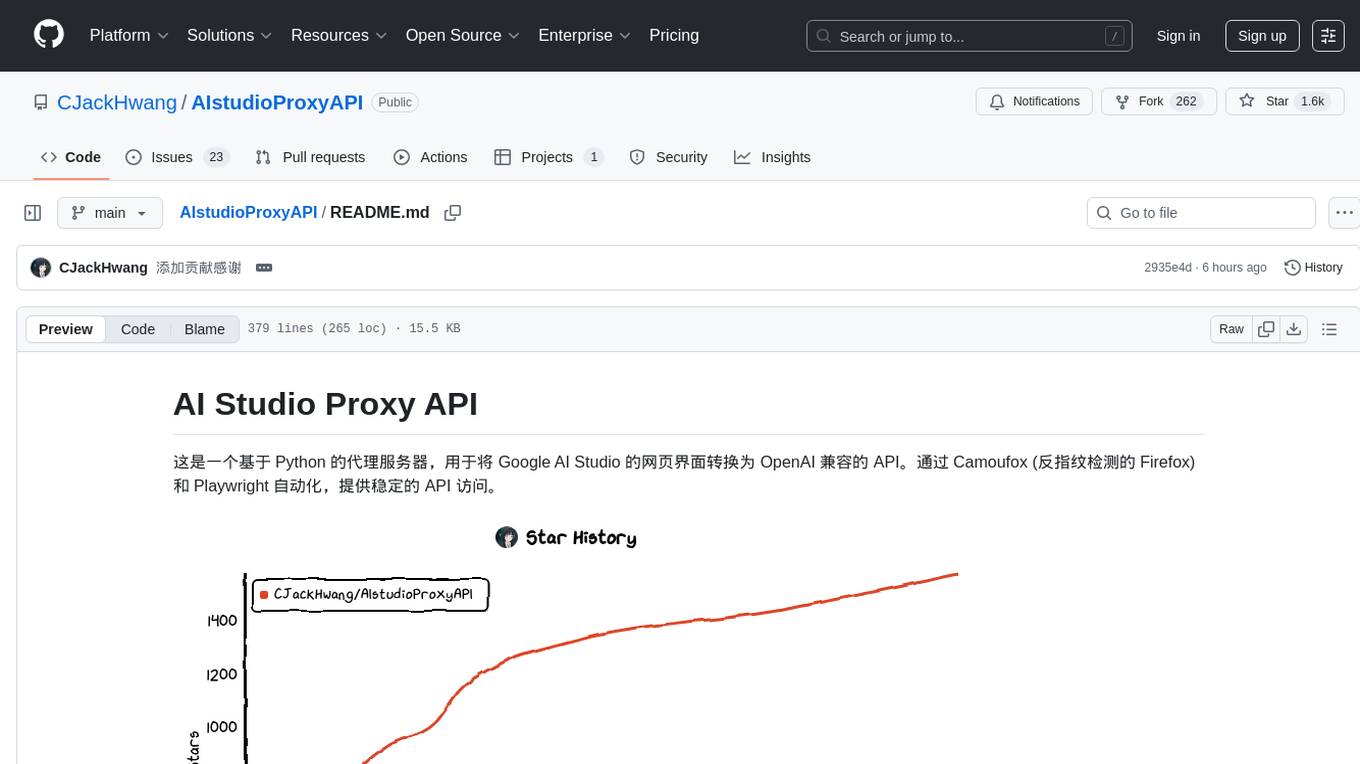

Stars: 1576

AI Studio Proxy API is a Python-based proxy server that converts the Google AI Studio web interface into an OpenAI-compatible API. It provides stable API access through Camoufox (anti-fingerprint detection Firefox) and Playwright automation. The project offers an OpenAI-compatible API endpoint, a three-layer streaming response mechanism, dynamic model switching, complete parameter control, anti-fingerprint detection, script injection functionality, modern web UI, graphical interface launcher, flexible authentication system, modular architecture, unified configuration management, and modern development tools.

README:

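This is a Python-based proxy server that converts the Google AI Studio web interface into an OpenAI-compatible API. It provides stable API access through Camoufox (an anti-fingerprinting build of Firefox) and Playwright automation.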

This project is generously sponsored by ZMTO, which provides server support. Visit their website: https://zmto.com/

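This project owes its creation and growth to the generous support and contributions of the following individuals, organizations, and communities:

- Project initiator and lead developer: @CJackHwang (https://github.com/CJackHwang)

- Feature improvements and ideas for optimizing page operations: @ayuayue (https://github.com/ayuayue)

- Optimization and refinement of real-time streaming: @luispater (https://github.com/luispater)

- Major refactoring of the 3,400+ line main file: @yattin (Holt) (https://github.com/yattin)

- High-quality maintenance in the project's later stages: @Louie (https://github.com/NikkeTryHard)

- Community support and inspiration: special thanks to the members of the Linux.do community for their lively discussions, valuable suggestions, and issue reports. Your participation is an important driving force for the project.

We also sincerely thank everyone who has quietly contributed to this project by submitting issues, offering suggestions, sharing their experience, or contributing code fixes. Your combined efforts make this project better!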

This is the currently maintained Python version. For the JavaScript version, which is no longer maintained, see deprecated_javascript_version/README.md.

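- Python: >=3.9, <4.0 (3.10+ recommended for best performance; the Docker environment uses 3.10)

- Dependency management: Poetry (a modern Python dependency management tool that replaces the traditional requirements.txt)

- Type checking: Pyright (optional; used for type checking during development and for IDE support)

- Operating system: Windows, macOS, Linux (fully cross-platform; Docker deployment supports x86_64 and ARM64)

- Memory: 2GB+ of available memory recommended (required for browser automation)

- Network: a stable internet connection with access to Google AI Studio (proxy configuration is supported)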

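- OpenAI-compatible API: supports the `/v1/chat/completions` endpoint and is fully compatible with OpenAI clients and third-party tools

- Three-layer streaming response mechanism: integrated stream proxy → external Helper service → Playwright page interaction, providing multiple layers of fallback

- Smart model switching: dynamically switches the model in AI Studio based on the `model` field of the API request

- Full parameter control: supports all major parameters, including `temperature`, `max_output_tokens`, `top_p`, `stop`, and `reasoning_effort`

- Anti-fingerprinting: uses the Camoufox browser to reduce the risk of being detected as an automation script

- Script injection v3.0: uses Playwright's native network interception and supports dynamic mounting of Tampermonkey userscripts, 100% reliable 🆕

- Modern Web UI: built-in test interface with real-time chat, status monitoring, and tiered API key management

- GUI launcher: a feature-rich graphical launcher that simplifies configuration and process management

- Flexible authentication: supports optional API key authentication and is fully compatible with the OpenAI-standard Bearer token format

- Modular architecture: clearly separated, independent modules such as api_utils/, browser_utils/, and config/

- Unified configuration management: a single `.env` file holds the configuration, with support for environment variable overrides and Docker compatibility

- Modern development tools: Poetry dependency management plus Pyright type checking for a good development experience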
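As a rough illustration of the endpoint and parameters listed above, the following sketch builds (but does not send) an OpenAI-style chat completion request using only the Python standard library. The base URL is the one shown in this README's client configuration example; the model name `your-model-id` and the helper `build_chat_request` are hypothetical placeholders, not part of the project.

```python
import json
import urllib.request

# Base URL from the client configuration example in this README.
BASE_URL = "http://127.0.0.1:2048/v1"


def build_chat_request(prompt, model="your-model-id", api_key=None, **params):
    """Build (but do not send) an OpenAI-style chat completion request.

    `model` is a placeholder; use a model name that AI Studio actually offers.
    """
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    # Optional parameters such as temperature, top_p, stop.
    body.update({k: v for k, v in params.items() if v is not None})
    headers = {"Content-Type": "application/json"}
    if api_key:
        # OpenAI-standard Bearer token format.
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers=headers,
        method="POST",
    )


request = build_chat_request("Hello", temperature=0.7, top_p=0.95, stop=["\n\n"])
# To actually send it (requires the proxy to be running):
# with urllib.request.urlopen(request) as resp:
#     print(json.load(resp)["choices"][0]["message"]["content"])
```

Keeping request construction separate from sending makes it easy to inspect the payload before pointing a real client at the proxy.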

graph TD
subgraph "User End"
User["User"]
WebUI["Web UI (Browser)"]
API_Client["API Client"]
end

subgraph "Launch & Config"
GUI_Launch["gui_launcher.py (GUI launcher)"]
CLI_Launch["launch_camoufox.py (CLI launcher)"]
EnvConfig[".env (unified configuration)"]
KeyFile["auth_profiles/key.txt (API Keys)"]
ConfigDir["config/ (configuration module)"]
end

subgraph "Core Application"
FastAPI_App["api_utils/app.py (FastAPI application)"]
Routes["api_utils/routes.py (route handling)"]
RequestProcessor["api_utils/request_processor.py (request processing)"]
AuthUtils["api_utils/auth_utils.py (authentication management)"]
PageController["browser_utils/page_controller.py (page control)"]
ScriptManager["browser_utils/script_manager.py (script injection)"]
ModelManager["browser_utils/model_management.py (model management)"]
StreamProxy["stream/ (stream proxy server)"]
end

subgraph "External Dependencies"
CamoufoxInstance["Camoufox browser (anti-fingerprinting)"]
AI_Studio["Google AI Studio"]
UserScript["Tampermonkey userscript (optional)"]
end

User -- "Run" --> GUI_Launch
User -- "Run" --> CLI_Launch
User -- "Access" --> WebUI
GUI_Launch -- "Starts" --> CLI_Launch
CLI_Launch -- "Starts" --> FastAPI_App
CLI_Launch -- "Configures" --> StreamProxy
API_Client -- "API Request" --> FastAPI_App
WebUI -- "Chat Request" --> FastAPI_App
FastAPI_App -- "Reads Config" --> EnvConfig
FastAPI_App -- "Uses Routes" --> Routes
AuthUtils -- "Validates Key" --> KeyFile
ConfigDir -- "Provides Settings" --> EnvConfig
Routes -- "Processes Request" --> RequestProcessor
Routes -- "Auth Management" --> AuthUtils
RequestProcessor -- "Controls Browser" --> PageController
RequestProcessor -- "Uses Proxy" --> StreamProxy
PageController -- "Model Management" --> ModelManager
PageController -- "Script Injection" --> ScriptManager
ScriptManager -- "Loads Script" --> UserScript
ScriptManager -- "Enhances" --> CamoufoxInstance
PageController -- "Automates" --> CamoufoxInstance
CamoufoxInstance -- "Accesses" --> AI_Studio
StreamProxy -- "Forwards Request" --> AI_Studio
AI_Studio -- "Response" --> CamoufoxInstance
AI_Studio -- "Response" --> StreamProxy
CamoufoxInstance -- "Returns Data" --> PageController
StreamProxy -- "Returns Data" --> RequestProcessor
FastAPI_App -- "API Response" --> API_Client
FastAPI_App -- "UI Response" --> WebUI

New: the project now supports configuration management through a .env file, so parameters no longer need to be hard-coded!

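# 1. Copy the configuration template

cp .env.example .env

# 2. Edit the configuration file

nano .env # or use another editor

# 3. Start the service (the configuration is read automatically)

python gui_launcher.py

# Or start directly from the command line

python launch_camoufox.py --headless

- ✅ Worry-free updates: a single `git pull` completes the update, with no reconfiguration needed

- ✅ Centralized configuration: all settings live in the `.env` file

- ✅ Simpler startup: no complex command-line arguments, one step to launch

- ✅ Security: the `.env` file is ignored via `.gitignore`, so configuration is not leaked

- ✅ Flexibility: supports configuration management for different environments

- ✅ Docker compatible: Docker and local environments are configured the same way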

For detailed configuration instructions, see the Environment Variable Configuration Guide.
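To make the idea of ".env values with environment variable overrides" concrete, here is a minimal sketch of how such a merge can work. It is not the project's own loader, and the keys `EXAMPLE_PORT` and `EXAMPLE_MODE` are hypothetical; consult .env.example for the real setting names.

```python
import os


def parse_env_text(text):
    """Parse simple KEY=VALUE lines, skipping blank lines and # comments."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def load_settings(env_text, environ=None):
    """Merge .env values with the process environment; the environment wins."""
    environ = os.environ if environ is None else environ
    settings = parse_env_text(env_text)
    for key in settings:
        if key in environ:
            settings[key] = environ[key]
    return settings


# EXAMPLE_PORT and EXAMPLE_MODE are hypothetical keys for illustration only.
sample = "# demo\nEXAMPLE_PORT=2048\nEXAMPLE_MODE='headless'\n"
settings = load_settings(sample, environ={"EXAMPLE_PORT": "8080"})
print(settings)  # {'EXAMPLE_PORT': '8080', 'EXAMPLE_MODE': 'headless'}
```

Letting the process environment take precedence is what allows the same file to be reused across local and Docker deployments while still permitting per-run overrides.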

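For day-to-day use, run gui_launcher.py (graphical interface) or launch_camoufox.py directly (command line). Debug mode is needed only for first-time setup or when authentication has expired.

This project uses a modern Python development toolchain: Poetry for dependency management and Pyright for type checking.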

# macOS/Linux users

curl -sSL https://raw.githubusercontent.com/CJackHwang/AIstudioProxyAPI/main/scripts/install.sh | bash

# Windows users (PowerShell)

iwr -useb https://raw.githubusercontent.com/CJackHwang/AIstudioProxyAPI/main/scripts/install.ps1 | iex

- Install Poetry (if not already installed):

# macOS/Linux

curl -sSL https://install.python-poetry.org | python3 -

# Windows (PowerShell)

(Invoke-WebRequest -Uri https://install.python-poetry.org -UseBasicParsing).Content | py -

# Or use a package manager

# macOS: brew install poetry

# Ubuntu/Debian: apt install python3-poetry

- Clone the project:

git clone https://github.com/CJackHwang/AIstudioProxyAPI.git

cd AIstudioProxyAPI

- Install dependencies: Poetry automatically creates a virtual environment and installs all dependencies:

poetry install

- Activate the virtual environment:

# Option 1: activate the shell (recommended for day-to-day development)

poetry env activate

# Option 2: run commands directly (recommended for automation scripts)

poetry run python gui_launcher.py

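- Environment configuration: see the Environment Variable Configuration Guide (recommended as the first step)

- First-time authentication: see the Authentication Setup Guide

- Daily operation: see the Daily Operation Guide

- API usage: see the API Usage Guide

- Web interface: see the Web UI Usage Guide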

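If you are a developer, you can also:

# Install development dependencies (type checking, test tools, and so on)

poetry install --with dev

# Enable type checking (requires pyright to be installed)

npm install -g pyright

pyright

# Show the project's dependency tree

poetry show --tree

# Update dependencies

poetry update

- API Usage Guide - API endpoints and client configuration

- Web UI Usage Guide - description of the web interface features

- Script Injection Guide - how to use dynamic mounting of Tampermonkey userscripts (v3.0) 🆕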

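Using Open WebUI as an example:

- Open Open WebUI

- Go to "Settings" -> "Connections"

- In the "Models" section, click "Add Model"

- Model name: enter any name you like, for example `aistudio-gemini-py`

- API base URL: enter `http://127.0.0.1:2048/v1`

- API key: leave it empty or enter any characters

- Save the settings and start chatting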
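Clients configured this way typically consume streamed replies as server-sent events. The sketch below parses lines in the format used by OpenAI's streaming chat completions (`data: {...}` chunks ending with `data: [DONE]`); that this proxy emits exactly this shape is an assumption based on its stated OpenAI compatibility, and the sample lines are fabricated for illustration.

```python
import json


def iter_stream_text(lines):
    """Yield text deltas from OpenAI-style server-sent event lines."""
    for line in lines:
        line = line.strip()
        if not line.startswith("data:"):
            continue  # skip blank separator lines and other SSE fields
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            break  # end-of-stream marker
        chunk = json.loads(payload)
        for choice in chunk.get("choices", []):
            text = choice.get("delta", {}).get("content")
            if text:
                yield text


# Fabricated sample stream, shaped like OpenAI chat completion chunks.
sample_stream = [
    'data: {"choices": [{"delta": {"role": "assistant"}}]}',
    "",
    'data: {"choices": [{"delta": {"content": "Hel"}}]}',
    'data: {"choices": [{"delta": {"content": "lo"}}]}',
    "data: [DONE]",
]
reply = "".join(iter_stream_text(sample_stream))
print(reply)  # prints: Hello
```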

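This project can be deployed with Docker, uses Poetry for dependency management, and fully supports the .env configuration file!

📁 Note: all Docker-related files have been moved to the `docker/` directory to keep the project root tidy.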

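# 1. Prepare the configuration file

cd docker

cp .env.docker .env

nano .env # edit the configuration

# 2. Start with Docker Compose

docker compose up -d

# 3. View the logs

docker compose logs -f

# 4. Update to a new version (from inside the docker directory)

bash update.sh

- Docker Deployment Guide (docker/README-Docker.md) - includes complete Poetry + `.env` configuration instructions

- Docker Quick Guide (docker/README.md) - quick start guide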

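- ✅ Poetry dependency management: uses a modern Python dependency management tool

- ✅ Multi-stage build: optimizes image size and build speed

- ✅ Unified configuration: all settings are managed through the `.env` file

- ✅ Version updates: `bash update.sh` completes the update

- ✅ Tidy layout: Docker files have been moved to the `docker/` directory

- ✅ Cross-platform support: supports the x86_64 and ARM64 architectures

⚠️ Authentication files: on first run, you need to obtain the authentication files on the host and then mount them into the container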

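This project uses Camoufox to provide browser instances with enhanced anti-fingerprinting capabilities.

- Core goal: simulate real user traffic so that websites do not identify it as an automation script or bot

- How it works: Camoufox is based on Firefox and disguises the device fingerprint (screen, operating system, WebGL, fonts, and so on) by modifying the browser's underlying C++ implementation rather than through JavaScript injection, which is easy to detect

- Playwright compatibility: Camoufox provides a Playwright-compatible interface

- Python interface: Camoufox provides a Python package; its service can be started with `camoufox.server.launch_server()` and controlled over a WebSocket connection

The main purpose of using Camoufox is to make interaction with the AI Studio web page less conspicuous and reduce the likelihood of detection or restriction. Note, however, that no anti-fingerprinting technique is perfect.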

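- Response retrieval priority: the project uses a three-layer response retrieval mechanism to ensure high availability:

  - Integrated stream proxy service (Stream Proxy): enabled by default on port 3120; offers the best performance and stability

  - External Helper service: optional; requires a valid authentication file and serves as a backup

  - Playwright page interaction: the final fallback; retrieves the response through browser automation

- Parameter control mechanism:

  - Stream proxy mode: supports basic parameter passing, with the best performance

  - Helper service mode: parameter support depends on the external service's implementation

  - Playwright mode: full support for all parameters (`temperature`, `max_output_tokens`, `top_p`, `stop`, `reasoning_effort`, and so on)

- Script injection enhancement: v3.0 uses Playwright's native network interception so that injected models are 100% consistent with native models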
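The three-layer priority described above is essentially a fallback chain: try the preferred source first and move to the next one on failure. The following is a minimal sketch of that pattern only; the three handler functions are hypothetical stand-ins and do not reflect how the project's stream proxy, Helper service, or Playwright layer are actually invoked.

```python
def first_successful(sources, request):
    """Try each (name, handler) pair in priority order; return the first result."""
    errors = []
    for name, handler in sources:
        try:
            return name, handler(request)
        except Exception as exc:  # a real service would catch narrower errors
            errors.append(f"{name}: {exc}")
    raise RuntimeError("all response sources failed: " + "; ".join(errors))


# Hypothetical stand-ins for the three layers.
def stream_proxy(request):
    raise ConnectionError("stream proxy unavailable")


def helper_service(request):
    raise ConnectionError("helper not configured")


def playwright_page(request):
    return f"echo: {request}"


layers = [
    ("stream_proxy", stream_proxy),
    ("helper", helper_service),
    ("playwright", playwright_page),
]
used, answer = first_successful(layers, "ping")
print(used, answer)  # prints: playwright echo: ping
```

Collecting the per-layer errors before raising keeps the failure report useful when every layer is down.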

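History is managed by the client, and in-UI editing is not supported: the client is responsible for maintaining the complete chat history and sending it to the proxy. The proxy server itself does not support editing or forking earlier messages in the AI Studio interface.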
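In practice, client-managed history means the client keeps the full message list and resends it with every request. A minimal sketch, assuming OpenAI-style `role`/`content` messages; the `Conversation` class is a hypothetical helper, not something the project ships.

```python
class Conversation:
    """Keeps the full chat history on the client side."""

    def __init__(self, system_prompt=None):
        self.messages = []
        if system_prompt:
            self.messages.append({"role": "system", "content": system_prompt})

    def user_turn(self, text):
        """Record a user message and return the complete list to send."""
        self.messages.append({"role": "user", "content": text})
        return list(self.messages)  # copy, so callers cannot mutate history

    def record_reply(self, text):
        """Store the assistant's reply so the next request includes it."""
        self.messages.append({"role": "assistant", "content": text})


chat = Conversation(system_prompt="You are concise.")
first_payload = chat.user_turn("Hi")
chat.record_reply("Hello!")
second_payload = chat.user_turn("What did I just say?")
print(len(first_payload), len(second_payload))  # prints: 2 4
```

Editing or branching a conversation is then done by changing this list on the client before the next request, since the proxy does not do it in the AI Studio page.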

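Planned improvements include:

- Cloud server deployment guide: more detailed guidance on deploying and managing the service on mainstream cloud platforms

- Smoother authentication updates: exploring more convenient ways to refresh authentication files and reduce manual steps

- Robustness improvements: lowering the error rate and getting closer to the native experience

Issues and Pull Requests are welcome!

If you find this project helpful and would like to support the author's continued development, donations are welcome through the following channels. Your support is our greatest encouragement!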


Alternative AI tools for AIstudioProxyAPI

Similar Open Source Tools


PersonalExam

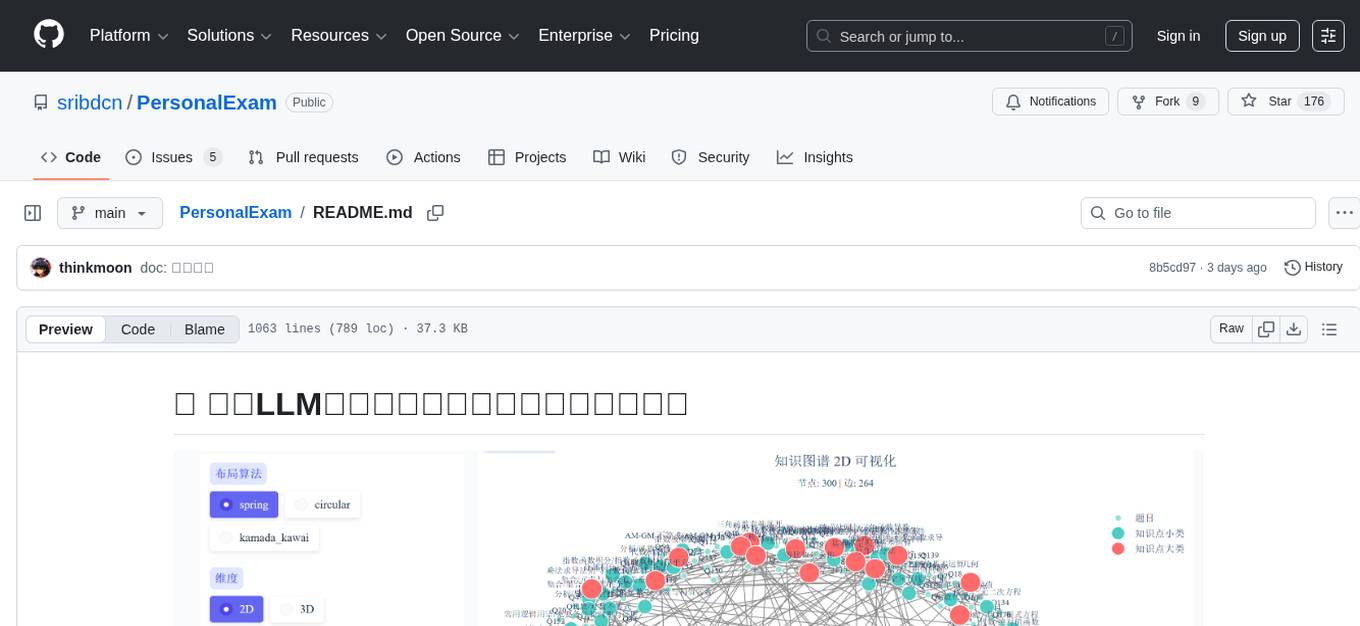

PersonalExam is a personalized question generation system based on LLM and knowledge graph collaboration. It utilizes the BKT algorithm, RAG engine, and OpenPangu model to achieve personalized intelligent question generation and recommendation. The system features adaptive question recommendation, fine-grained knowledge tracking, AI answer evaluation, student profiling, visual reports, interactive knowledge graph, user management, and system monitoring.

BigBanana-AI-Director

BigBanana AI Director is an industrial AI motion comic and video workbench platform that provides a one-stop solution for creating short dramas and comics. It utilizes a 'Script-to-Asset-to-Keyframe' workflow with advanced AI models to automate the process from script to final production, ensuring precise control over character consistency, scene continuity, and camera movements. The tool is designed to streamline the production process for creators, enabling efficient production from idea to finished product.

Con-Nav-Item

Con-Nav-Item is a modern personal navigation system designed for digital workers. It is not just a link bookmark but also an all-in-one workspace integrated with AI smart generation, multi-device synchronization, card-based management, and deep browser integration.

AirPower4T

AirPower4T is a development base library based on Vue3 TypeScript Element Plus Vite, using decorators, object-oriented, Hook and other front-end development methods. It provides many common components and some feedback components commonly used in background management systems, and provides a lot of enums and decorators.

prompt-optimizer

Prompt Optimizer is a powerful AI prompt optimization tool that helps you write better AI prompts, improving AI output quality. It supports both web application and Chrome extension usage. The tool features intelligent optimization for prompt words, real-time testing to compare before and after optimization, integration with multiple mainstream AI models, client-side processing for security, encrypted local storage for data privacy, responsive design for user experience, and more.

hugging-llm

HuggingLLM is a project that aims to introduce ChatGPT to a wider audience, particularly those interested in using the technology to create new products or applications. The project focuses on providing practical guidance on how to use ChatGPT-related APIs to create new features and applications. It also includes detailed background information and system design introductions for relevant tasks, as well as example code and implementation processes. The project is designed for individuals with some programming experience who are interested in using ChatGPT for practical applications, and it encourages users to experiment and create their own applications and demos.

aio-hub

AIO Hub is a cross-platform AI hub built on Tauri + Vue 3 + TypeScript, aiming to provide developers and creators with precise LLM control experience and efficient toolchain. It features a chat function designed for complex tasks and deep exploration, a unified context pipeline for controlling every token sent to the model, interactive AI buttons, dual-view management for non-linear conversation mapping, open ecosystem compatibility with various AI models, and a rich text renderer for LLM output. The tool also includes features for media workstation, developer productivity, system and asset management, regex applier, collaboration enhancement between developers and AI, and more.

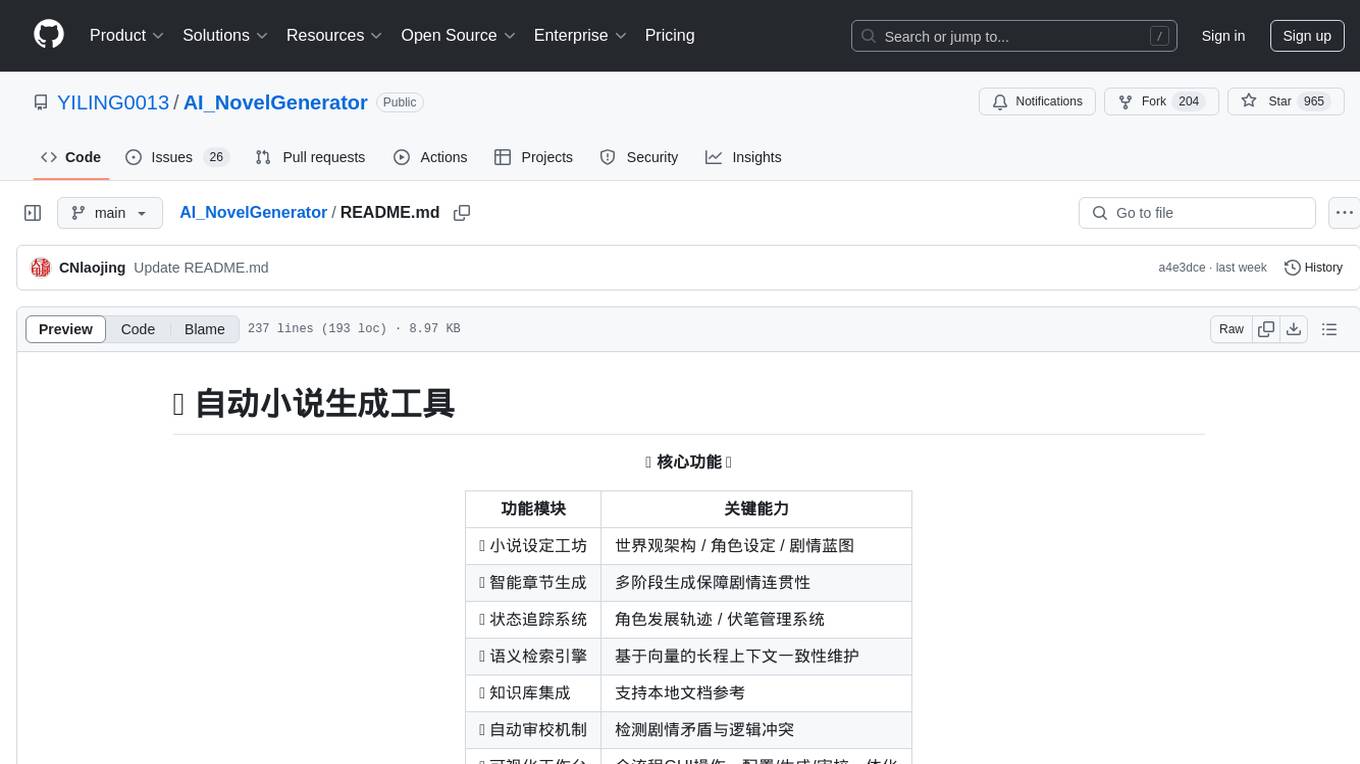

AI_NovelGenerator

AI_NovelGenerator is a versatile novel generation tool based on large language models. It features a novel setting workshop for world-building, character development, and plot blueprinting, intelligent chapter generation for coherent storytelling, a status tracking system for character arcs and foreshadowing management, a semantic retrieval engine for maintaining long-range context consistency, integration with knowledge bases for local document references, an automatic proofreading mechanism for detecting plot contradictions and logic conflicts, and a visual workspace for GUI operations encompassing configuration, generation, and proofreading. The tool aims to assist users in efficiently creating logically rigorous and thematically consistent long-form stories.

langchain4j-aideepin

LangChain4j-AIDeepin is an open-source, offline-deployable retrieval-augmented generation (RAG) project based on large language models such as ChatGPT and the Langchain4j application framework. It offers features like registration & login, multi-session support, image generation, prompt words, quota control, knowledge base, model-based search, model switching, and search engine switching. The project integrates models like ChatGPT 3.5, Tongyi Qianwen, Wenxin Yiyan, Ollama, and DALL-E 2. The backend uses technologies like JDK 17, Spring Boot 3.0.5, Langchain4j, and PostgreSQL with the pgvector extension, while the frontend is built with Vue3, TypeScript, and PNPM.

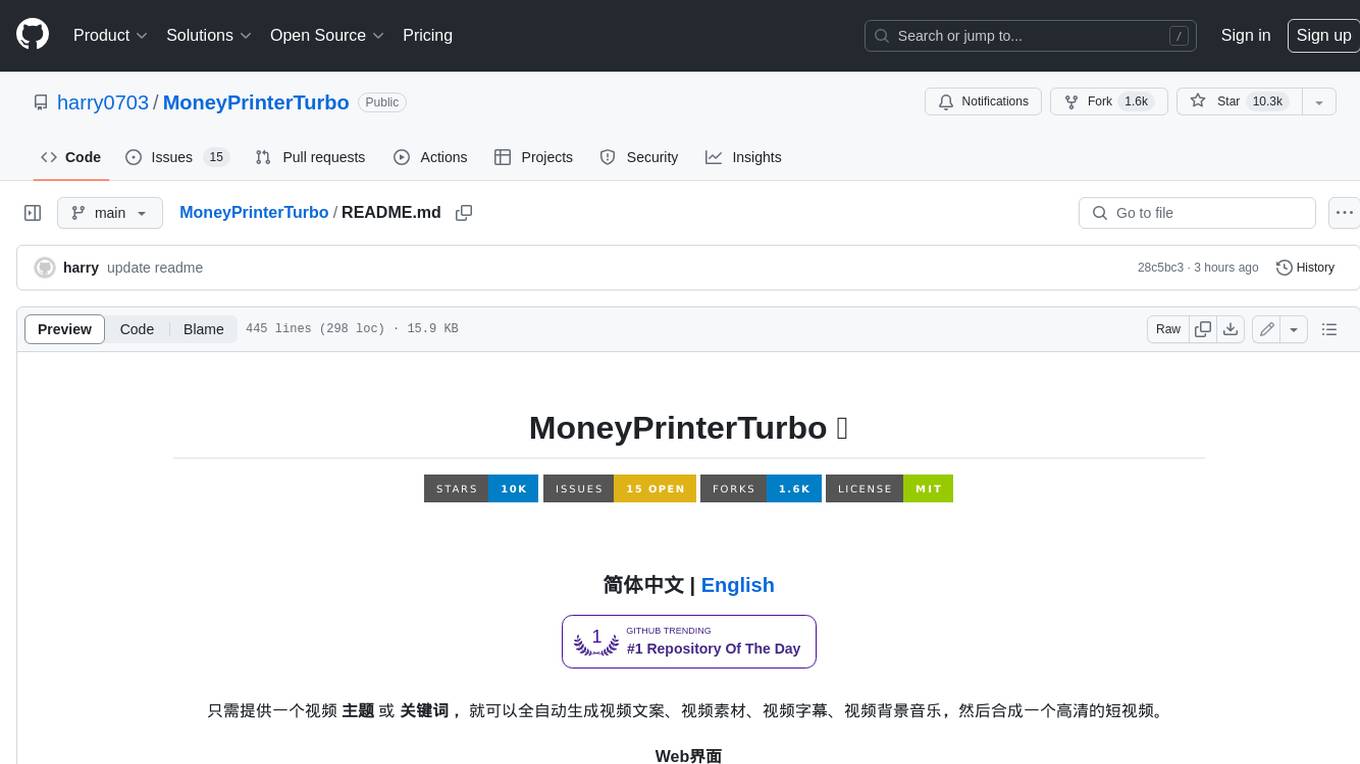

MoneyPrinterTurbo

MoneyPrinterTurbo is a tool that can automatically generate video content based on a provided theme or keyword. It can create video scripts, materials, subtitles, and background music, and then compile them into a high-definition short video. The tool features a web interface and an API interface, supporting AI-generated video scripts, customizable scripts, multiple HD video sizes, batch video generation, customizable video segment duration, multilingual video scripts, multiple voice synthesis options, subtitle generation with font customization, background music selection, access to high-definition and copyright-free video materials, and integration with various AI models like OpenAI, moonshot, Azure, and more. The tool aims to simplify the video creation process and offers future plans to enhance voice synthesis, add video transition effects, provide more video material sources, offer video length options, include free network proxies, enable real-time voice and music previews, support additional voice synthesis services, and facilitate automatic uploads to YouTube platform.

BiBi-Keyboard

BiBi-Keyboard is an AI-based intelligent voice input method that aims to make voice input more natural and efficient. It provides features such as voice recognition with simple and intuitive operations, multiple ASR engine support, AI text post-processing, floating ball input for cross-input method usage, AI editing panel with rich editing tools, Material3 design for modern interface style, and support for multiple languages. Users can adjust keyboard height, test input directly in the settings page, view recognition word count statistics, receive vibration feedback, and check for updates automatically. The tool requires Android 10.0 or higher, microphone permission for voice recognition, optional overlay permission for the floating ball feature, and optional accessibility permission for automatic text insertion.

AutoGLM-GUI

AutoGLM-GUI is an AI-driven Android automation productivity tool that supports scheduled tasks, remote deployment, and 24/7 AI assistance. It features core functionalities such as deploying to servers, scheduling tasks, and creating an AI automation assistant. The tool enhances productivity by automating repetitive tasks, managing multiple devices, and providing a layered agent mode for complex task planning and execution. It also supports real-time screen preview, direct device control, and zero-configuration deployment. Users can easily download the tool for Windows, macOS, and Linux systems, and can also install it via Python package. The tool is suitable for various use cases such as server automation, batch device management, development testing, and personal productivity enhancement.

chatless

Chatless is a modern AI chat desktop application built on Tauri and Next.js. It supports multiple AI providers, can connect to local Ollama models, supports document parsing and knowledge base functions. All data is stored locally to protect user privacy. The application is lightweight, simple, starts quickly, and consumes minimal resources.

CodeAsk

CodeAsk is a code analysis tool designed to tackle complex issues such as code that seems to self-replicate, cryptic comments left by predecessors, messy and unclear code, and long-lasting temporary solutions. It offers intelligent code organization and analysis, security vulnerability detection, code quality assessment, and other interesting prompts to help users understand and work with legacy code more efficiently. The tool aims to translate 'legacy code mountains' into understandable language, creating an illusion of comprehension and facilitating knowledge transfer to new team members.

Code-Interpreter-Api

Code Interpreter API is a project that combines a scheduling center with a sandbox environment, dedicated to creating the world's best code interpreter. It aims to provide a secure, reliable API interface for remotely running code and obtaining execution results, accelerating the development of various AI agents, and being a boon to many AI enthusiasts. The project innovatively combines Docker container technology to achieve secure isolation and execution of Python code. Additionally, the project supports storing generated image data in a PostgreSQL database and accessing it through API endpoints, providing rich data processing and storage capabilities.

For similar tasks


chatgpt-web-sea

ChatGPT Web Sea is an open-source project based on ChatGPT-web for secondary development. It supports all models that comply with the OpenAI interface standard, allows for model selection, configuration, and extension, and is compatible with OneAPI. The tool includes a Chinese ChatGPT tuning guide, supports file uploads, and provides model configuration options. Users can interact with the tool through a web interface, configure models, and perform tasks such as model selection, API key management, and chat interface setup. The project also offers Docker deployment options and instructions for manual packaging.

farfalle

Farfalle is an open-source AI-powered search engine that allows users to run their own local LLM or utilize the cloud. It provides a tech stack including Next.js for frontend, FastAPI for backend, Tavily for search API, Logfire for logging, and Redis for rate limiting. Users can get started by setting up prerequisites like Docker and Ollama, and obtaining API keys for Tavily, OpenAI, and Groq. The tool supports models like llama3, mistral, and gemma. Users can clone the repository, set environment variables, run containers using Docker Compose, and deploy the backend and frontend using services like Render and Vercel.

ComfyUI-Tara-LLM-Integration

Tara is a powerful node for ComfyUI that integrates Large Language Models (LLMs) to enhance and automate workflow processes. With Tara, you can create complex, intelligent workflows that refine and generate content, manage API keys, and seamlessly integrate various LLMs into your projects. It comprises nodes for handling OpenAI-compatible APIs, saving and loading API keys, composing multiple texts, and using predefined templates for OpenAI and Groq. Tara supports OpenAI and Grok models with plans to expand support to together.ai and Replicate. Users can install Tara via Git URL or ComfyUI Manager and utilize it for tasks like input guidance, saving and loading API keys, and generating text suitable for chaining in workflows.

conversational-agent-langchain

This repository contains a Rest-Backend for a Conversational Agent that allows embedding documents, semantic search, QA based on documents, and document processing with Large Language Models. It uses Aleph Alpha and OpenAI Large Language Models to generate responses to user queries, includes a vector database, and provides a REST API built with FastAPI. The project also features semantic search, secret management for API keys, installation instructions, and development guidelines for both backend and frontend components.

ChatGPT-Next-Web-Pro

ChatGPT-Next-Web-Pro is a tool that provides an enhanced version of ChatGPT-Next-Web with additional features and functionalities. It offers complete ChatGPT-Next-Web functionality, file uploading and storage capabilities, drawing and video support, multi-modal support, reverse model support, knowledge base integration, translation, customizations, and more. The tool can be deployed with or without a backend, allowing users to interact with AI models, manage accounts, create models, manage API keys, handle orders, manage memberships, and more. It supports various cloud services like Aliyun OSS, Tencent COS, and Minio for file storage, and integrates with external APIs like Azure, Google Gemini Pro, and Luma. The tool also provides options for customizing website titles, subtitles, icons, and plugin buttons, and offers features like voice input, file uploading, real-time token count display, and more.

APIMyLlama

APIMyLlama is a server application that provides an interface to interact with the Ollama API, a powerful AI tool to run LLMs. It allows users to easily distribute API keys to create amazing things. The tool offers commands to generate, list, remove, add, change, activate, deactivate, and manage API keys, as well as functionalities to work with webhooks, set rate limits, and get detailed information about API keys. Users can install APIMyLlama packages with NPM, PIP, Jitpack Repo+Gradle or Maven, or from the Crates Repository. The tool supports Node.JS, Python, Java, and Rust for generating responses from the API. Additionally, it provides built-in health checking commands for monitoring API health status.

IntelliChat

IntelliChat is an open-source AI chatbot tool designed to accelerate the integration of multiple language models into chatbot apps. Users can select their preferred AI provider and model from the UI, manage API keys, and access data using Intellinode. The tool is built with Intellinode and Next.js, and supports various AI providers such as OpenAI ChatGPT, Google Gemini, Azure Openai, Cohere Coral, Replicate, Mistral AI, Anthropic, and vLLM. It offers a user-friendly interface for developers to easily incorporate AI capabilities into their chatbot applications.

For similar jobs

sweep

Sweep is an AI junior developer that turns bugs and feature requests into code changes. It automatically handles developer experience improvements like adding type hints and improving test coverage.

teams-ai

The Teams AI Library is a software development kit (SDK) that helps developers create bots that can interact with Teams and Microsoft 365 applications. It is built on top of the Bot Framework SDK and simplifies the process of developing bots that interact with Teams' artificial intelligence capabilities. The SDK is available for JavaScript/TypeScript, .NET, and Python.

ai-guide

This guide is dedicated to Large Language Models (LLMs) that you can run on your home computer. It assumes your PC is a lower-end, non-gaming setup.

classifai

Supercharge WordPress Content Workflows and Engagement with Artificial Intelligence. Tap into leading cloud-based services like OpenAI, Microsoft Azure AI, Google Gemini and IBM Watson to augment your WordPress-powered websites. Publish content faster while improving SEO performance and increasing audience engagement. ClassifAI integrates Artificial Intelligence and Machine Learning technologies to lighten your workload and eliminate tedious tasks, giving you more time to create original content that matters.

chatbot-ui

Chatbot UI is an open-source AI chat app that allows users to create and deploy their own AI chatbots. It is easy to use and can be customized to fit any need. Chatbot UI is perfect for businesses, developers, and anyone who wants to create a chatbot.

BricksLLM

BricksLLM is a cloud native AI gateway written in Go. Currently, it provides native support for OpenAI, Anthropic, Azure OpenAI and vLLM. BricksLLM aims to provide enterprise level infrastructure that can power any LLM production use cases. Here are some use cases for BricksLLM:

- Set LLM usage limits for users on different pricing tiers

- Track LLM usage on a per user and per organization basis

- Block or redact requests containing PIIs

- Improve LLM reliability with failovers, retries and caching

- Distribute API keys with rate limits and cost limits for internal development/production use cases

- Distribute API keys with rate limits and cost limits for students

uAgents

uAgents is a Python library developed by Fetch.ai that allows for the creation of autonomous AI agents. These agents can perform various tasks on a schedule or take action on various events. uAgents are easy to create and manage, and they are connected to a fast-growing network of other uAgents. They are also secure, with cryptographically secured messages and wallets.

griptape

Griptape is a modular Python framework for building AI-powered applications that securely connect to your enterprise data and APIs. It offers developers the ability to maintain control and flexibility at every step. Griptape's core components include Structures (Agents, Pipelines, and Workflows), Tasks, Tools, Memory (Conversation Memory, Task Memory, and Meta Memory), Drivers (Prompt and Embedding Drivers, Vector Store Drivers, Image Generation Drivers, Image Query Drivers, SQL Drivers, Web Scraper Drivers, and Conversation Memory Drivers), Engines (Query Engines, Extraction Engines, Summary Engines, Image Generation Engines, and Image Query Engines), and additional components (Rulesets, Loaders, Artifacts, Chunkers, and Tokenizers). Griptape enables developers to create AI-powered applications with ease and efficiency.